Camera Ready Paper Iccmc 2022


Robust Face Detection and Recognition Using Image Processing and OpenCV

Aruna Bhat, Department of Computer Engineering, Delhi Technological University, Delhi, India (aruna.bhat@dtu.ac.in)

Rahul Kumar Jha, Department of Computer Engineering, Delhi Technological University, Delhi, India (heavenboyrj.11@gmail.com)

Vansh Kedia, Department of Computer Engineering, Delhi Technological University, Delhi, India (vanshkedia1999@gmail.com)

Abstract— We have witnessed enormous progress in the fields of Artificial Intelligence, Machine Learning, and Computer Vision over the last decade. This progress is owed to a doubling in processing capacity per year and to a massive worldwide rise in data. Facial identity, biometrics, and face identification have gone from a rarefied issue to a prominent topic for computer science researchers. It is commonly believed that, because of the fundamental nature of the topic, breakthroughs in computer image processing and image understanding will also give insights into how the human brain operates. Face detection and recognition are often employed in national defense, CCTV surveillance, criminal investigation, personal protection, law enforcement, biometrics, and a variety of other applications. For our project, we used the OpenCV, NumPy, and Matplotlib Python packages. Haar cascades are used for face detection. Three face recognizers built into OpenCV are used for face recognition: Eigenfaces, Fisherfaces, and Local Binary Pattern Histograms (LBPH). The approach employed in this research is a literature review. This entails reading and examining books and literature on the mathematical ideas that underpin algorithms for detecting a human face. These algorithms are then implemented in code, particularly Python code.

Keywords— Face Recognition, Deep Neural Network, Histograms, Matplotlib, OpenCV, Image Processing

I. INTRODUCTION

Face detection is a computer vision (CV) technology that helps locate individuals' faces. More generally, it is concerned with finding instances of objects of a certain class in digital photos and real-time images [11]. Its purpose is to detect faces in an image or video frame and extract them so that face recognition algorithms can use them. Face recognition has become more important as processing power, data storage, and technology have improved. This is particularly true in fields such as security, CCTV surveillance, biometrics, and social media. Face recognition is a method for determining a person's identity based on their unique facial features [12]. It works by extracting the distinctive characteristics of the face from a grayscale image that has been retrieved, scaled, cropped, and transformed. Such systems can be applied to photos, videos, and real-time applications [13]. Face recognition is a non-invasive identification technology that can analyze many faces at the same time, making it quicker than previous methods. “An individual's face may be detected with relative ease using such technology, which makes use of an existing dataset that has similar look to that of the individual. With the aid of Python and OpenCV, the technology is the most efficient method of detecting the face of a person. This technology has applications across a wide range of domains, including the military, security, schools, colleges, and universities, airplanes, banking, online web applications, gaming, and other similar areas” [10].

Face detection is not the same as face recognition. Face detection is the process of finding a person's face in a photograph. Face recognition is the process of identifying that face against a database of photos. Thanks to the media, the use of photographs to identify people has become commonplace. Face recognition is, however, more sensitive than other biometrics such as fingerprint and retina scanning [9]. “Among the features of the face recognition system are faster processing, identification automation, invasion of privacy, large data storage, best results, increased security, real-time face recognition of students and employees in schools and colleges, employees in corporate offices, smartphone unlock, and a host of others” [8]. Face detection is one of the most complex problems in real-world scenarios. Depending on expression, lighting, and pose, detection can fail, or the same person can be identified as a different person. As part of biometric technology, face detection has become one of the most important topics in the machine learning field. Facial recognition software is used in a variety of areas, including entertainment, security, law enforcement, and biometrics. It has evolved over time from clumsy computer vision approaches into powerful artificial neural networks. Face recognition is essential for face analysis, tracking, and identification [7].

II. PROBLEM STATEMENT

Through this research project, we aim to gain a better understanding of OpenCV and of face detection and face recognition using the OpenCV libraries. We also intend to present a comparative study of various face detection and recognition frameworks. The objective of our project is to detect human faces in an input color image. The objectives in designing the face detection system [15] are:

● To create a real-time facial recognition system [16].

● To use a Haar classifier-based facial recognition method.

● To use OpenCV to create a face detection system.

Computer vision (CV) is a fascinating and challenging problem in the field of AI. It links software to the world around us and lets software comprehend and learn from its surroundings. Face detection and identification has risen from obscurity to become one of the most prominent fields of computer vision research in the last decade [14]. It is commonly believed that, because of the fundamental nature of the topic, breakthroughs in computer image processing and understanding will give insights into how the human brain operates. Face recognition is gaining a lot of traction around the globe because of its contactless biometric capabilities. Traditional fingerprint scanners are being phased out in favor of AI-based technology, which has enormous business implications. Its applications include security and surveillance, authentication, access control, digital healthcare, image retrieval, and more.

In recent years, face detection has attracted a lot of attention from researchers because of its applications in human-computer communication. Face detection is a part of image processing [2-4]. It uses techniques for compressing, enhancing, and extracting features from images to identify one or many faces in a picture while removing unwanted background noise. To do this, the algorithm sorts images into two groups based on whether or not a face is present. It goes through each image thoroughly to identify the presence of a face. Face recognition is one of the most relevant biometric technologies: it makes use of the most visible part of the human body in a non-intrusive way. According to worldwide data, most individuals are unaware that face recognition is being performed on them. This makes it one of the least invasive procedures, with the least delay. The face recognition algorithm compares the facial features of the input image with the features of the training images. This biometric has been considered, and perhaps exaggeratedly lauded, as a great system for identifying potential threats such as terrorists and fraudsters. However, it has yet to gain wide acceptance in high-profile use. Biometric face recognition technology is expected to soon surpass fingerprint biometrics as the most common system for user identification and authentication.

III. LITERATURE REVIEW

This section gives an overview of the most common human face recognition methods, which are usually applied to frontal faces. It also covers the advantages and disadvantages of each approach. The approaches examined include eigenfaces, CNNs, deep learning, dynamic link architecture, hidden Markov models, geometrical feature matching, and template matching. The techniques are compared based on the face representations they use.

Skin color-based face detection method: “Face detection in color photographs is a widespread and successful method. Skin color is vital. Using skin color to trace a face has several advantages. Color processing requires far less time than other facial processing. Each pixel was classified as skin or non-skin using the skin color detection technique” [11].

Viola–Jones face detection system: “Paul Viola and Michael Jones introduced the Viola–Jones object identification framework in 2001. Although it may be taught to recognize a range of objects, it was primarily driven to detect faces. This framework can analyze pictures quickly and achieve excellent detection rates” [9].

Face detection system based on retinal connected neural network (RCNN): Different ANN architectures and models have been employed for face identification in recent years. “According to Rowley, Baluja, and Kanade, a retinal connected neural network (RCNN) analyzes small windows of an image to evaluate whether each window contains a face” [12].

IV. METHODOLOGY

We used the OpenCV package to implement these methods in Python. Haar cascades will be used for the face detector. Eigenfaces, Fisherfaces, LBP, and LBPH will be used for the face recognizer. First, we create a face detector using Haar cascades. Then, for face recognition, we implement three algorithms: Eigenfaces, Fisherfaces, and Local Binary Pattern Histograms. Additionally, we will use Python deep learning algorithms and others, choosing whichever give faster, more accurate, and more efficient results [5-6].

A. Tools and Technologies

1) Haar Cascade

Viola–Jones face detection, also known as Haar cascades, is an ML approach in which a cascade function is trained on many positive and negative instances [1-3]. Positive instances are images such as photographs of people's faces, and negative instances are images without faces. The Haar cascade is a common face detection algorithm for photos and videos. Image characteristics are treated as numeric data collected from the training and testing photos. These data are used to discriminate between the images in order to recognize faces.

Fig.1 Haar cascade flowchart

We applied every feature of the algorithm to all training images, giving each image an equal weight at the start. For each feature, the algorithm determines the best threshold for separating positive and negative pictures. Every feature produces some errors and mispredictions, so we selected the features with the minimum error rate and the best performance. These are the features that are best for image classification.
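The feature evaluation and threshold selection described above can be illustrated with a small plain-Python sketch. It is not OpenCV's implementation, nor necessarily the exact procedure used in this work. It assumes toy grayscale windows with pixel values in [0, 1] and a single two-rectangle Haar-like feature. The helper names (`integral_image`, `rect_sum`, `two_rect_feature`, `best_stump`, `make_window`) are hypothetical. Each feature value is computed from an integral image (summed-area table). Starting from equal sample weights, the sketch chooses the threshold and polarity with the minimum weighted error. This is the weak-classifier selection step used in Viola–Jones-style boosting.

```python
from typing import List, Sequence, Tuple

Image = List[List[float]]


def integral_image(img: Image) -> Image:
    """Summed-area table padded with a leading zero row and column.

    ii[y][x] holds the sum of img[0..y-1][0..x-1], so the sum of any
    rectangle needs only four lookups, whatever its size.
    """
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, x: int, y: int, w: int, h: int) -> float:
    """Sum of the w x h rectangle whose top-left pixel is (x, y)."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii: Image, x: int, y: int, w: int, h: int) -> float:
    """Hypothetical two-rectangle (vertical edge) feature.

    Mean intensity of the left half minus mean intensity of the right half;
    with pixels in [0, 1], a value near 1 indicates a strong edge.
    """
    half = w // 2
    area = half * h
    left = rect_sum(ii, x, y, half, h) / area
    right = rect_sum(ii, x + half, y, half, h) / area
    return left - right


def best_stump(values: Sequence[float], labels: Sequence[int]) -> Tuple[float, int, float]:
    """Choose the threshold and polarity with the minimum weighted error.

    labels are 1 (face) or 0 (non-face); every sample starts with the same
    weight. A sample is predicted to be a face when
    polarity * value < polarity * threshold.
    """
    n = len(values)
    weights = [1.0 / n] * n
    best: Tuple[float, int, float] = (0.0, 1, float("inf"))
    for threshold in sorted(set(values)):
        for polarity in (1, -1):
            err = sum(
                wt
                for v, lab, wt in zip(values, labels, weights)
                if (1 if polarity * v < polarity * threshold else 0) != lab
            )
            if err < best[2]:
                best = (threshold, polarity, err)
    return best


def make_window(left: float, right: float, size: int = 4) -> Image:
    """Toy size x size window whose left and right halves are uniform."""
    half = size // 2
    return [[left] * half + [right] * (size - half) for _ in range(size)]


if __name__ == "__main__":
    windows = [make_window(0.9, 0.1), make_window(0.8, 0.2),  # edge-like: label 1
               make_window(0.5, 0.5), make_window(0.3, 0.4)]  # flat: label 0
    labels = [1, 1, 0, 0]
    feats = [two_rect_feature(integral_image(win), 0, 0, 4, 4) for win in windows]
    print(best_stump(feats, labels))
```

In a full cascade, this selection is repeated over a very large pool of features and sizes. Sample weights are updated after each round so that later features concentrate on the windows misclassified so far.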

Fig.2 Haar kernel

The image's sample representation is shown on the left, with

Fig.3 Flowchart of the Eigenfaces Method

pixel values ranging from 0 to 1. The picture in the middle

displays a haar kernel with all bright pixels set to 0 and all

dark pixels set to 1.” An image's haar is computed by Eigenfaces method is widely used as it is independent upon

comparing the average of pixels in darker and brighter facial geometry, simplicity of realization, fast recognition

areas, the sum of all picture pixels in the darker half of haar compared to other approaches, high success rate etc.

feature, as well as all image pixels in the lighter half of haar

feature” [2]. The next step is to look for differences, and if 3) FisherFaces

the difference is near to one, the edge of the difference is Being one of the most popular and superior algorithms,

found. The picture characteristics are calculated using all of Fisher Faces has been in working on facial recognition for a

each kernel's available sizes and pixel positions. There are long time. Fisher Face algorithm method for face

two primary states of the Haar cascade image classifier one recognition is a merger of PCA and LDA [20-21]. The

is training and another one is the detection, and both are Principal Component Analysis (PCA) is used for the

provided by OpenCV [17]. reduction of face space, size, pixel, and the dimension of the

The structure of the face recognition system is end to end image. Dimension reduction during PCA reduces

recognized. The different convolutional layers are used in discriminant information relevant for LDA. Then, Fisher’s

the system of face recognition and detection. The layer with Linear Discriminant (FDL), commonly known as Linear

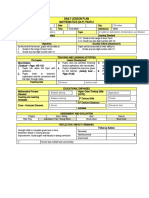

the size is tabulated as shown in table.1 Discriminant Analysis (LDA), is used to extract picture

properties. Face picture matching using minimal Euclidean

[18].

layer Size in Size out kernel

4) Local Binary Patterns Histogram(LBPH)

L2 1 1 128 1 1 128 Local Binary Patterns Histograms is one of the powerful

Lc3 1×32×128 1×1×128 algorithms for texture classification. LBPH detects pixels in

Lc2 1×32×128 1×32×128 maxout p=2 a picture by thresholding their neighbors. In other words,

LBPH compares each pixel to its neighbors to summarize an

Lc1 512×7×7 1×32×128 maxout p=2

image's local structure and converts the result to a binary

Table.1 structure of network layers involved

integer [6].

2) EigenFaces

Principal Component Analysis underpins the Eigenfaces

approach (PCA). For the efficient representation of facial V. RESULT ANALYSIS

pictures, Sirovich and Kirby employed Eigenfaces and PCA.

Fisher Faces algorithm considers faces from all the training

Turk and Pentland went on to apply it for facial recognition

images at once and finds principal components from all of

difficulties. PCA is frequently used in pattern recognition

them at once. Focusing on characteristics that represent all

because it is a technique of subspace projection with low

the faces in the training data rather than distinguishing one

noise sensitivity. The Eigenfaces method's fundamental

from another. As it finds illumination as a relevant feature of

approach is to extract the face's distinguishing

image and takes variation in illumination as principal

characteristics and represents linear combination of

component of the input images and discards other relevant

"eigenfaces" that are acquired during extracting features

features that are useful for recognition, we have used

phase, followed by the computation of facial elements in the

Eigenfaces to extract such features and the issue is fixed.

training set. Because not all elements of the face are equally

Fisher Faces just prevents features of one's image/face

significant for facial identification, Eigenfaces algorithms

becoming dominant on other images.

take that into account. The major component evaluates all of

the training photos and extracts key and useful information

from them. Following that, the input picture characteristics

are compared to the main components (relevant and useful

features taken from training photos) that were saved

throughout the training phase [19]. The face is recognized

by projecting it into the space generated by the eigenfaces.

Then comparing the Euclidean distance between the

eigenface’s eigenvectors and the eigenfaces to the picture.

The recognition is completed if the distance is near to zero.
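The Local Binary Patterns operator used by LBPH can be sketched in plain Python. Each neighbor is thresholded against the center pixel, the bits are read as a binary number, and a 256-bin histogram is built per grid cell. This is a minimal illustration under stated assumptions, not OpenCV's `LBPHFaceRecognizer` code. It uses radius 1 with 8 neighbors and an anticlockwise bit order starting at the right-hand neighbor. Integer grayscale images are stored as lists of lists, and the function names are hypothetical. The sketch also hints at how LBP relates to the illumination problem discussed above. Adding the same brightness offset to every pixel leaves every code unchanged.

```python
from typing import List

Image = List[List[int]]

# Neighbor offsets (dy, dx) for radius 1 and 8 neighbors, listed
# anticlockwise starting from the right-hand neighbor (rows grow downward).
# This bit order is an assumption; any fixed order works if used consistently.
OFFSETS = [(0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (1, 0), (1, 1)]


def lbp_code(img: Image, y: int, x: int) -> int:
    """8-bit LBP code of interior pixel (y, x).

    A neighbor contributes bit 1 if its intensity is >= the center
    intensity, else 0; the first neighbor in OFFSETS becomes the most
    significant bit.
    """
    center = img[y][x]
    code = 0
    for dy, dx in OFFSETS:
        code = (code << 1) | (1 if img[y + dy][x + dx] >= center else 0)
    return code


def lbp_image(img: Image) -> Image:
    """LBP codes of all interior pixels (the one-pixel border is skipped)."""
    h, w = len(img), len(img[0])
    return [[lbp_code(img, y, x) for x in range(1, w - 1)] for y in range(1, h - 1)]


def grid_histogram(codes: Image, grid_rows: int, grid_cols: int) -> List[int]:
    """Concatenate one 256-bin histogram per cell of a grid_rows x grid_cols grid."""
    h, w = len(codes), len(codes[0])
    feature: List[int] = []
    for gy in range(grid_rows):
        for gx in range(grid_cols):
            hist = [0] * 256
            for row in codes[gy * h // grid_rows:(gy + 1) * h // grid_rows]:
                for c in row[gx * w // grid_cols:(gx + 1) * w // grid_cols]:
                    hist[c] += 1
            feature.extend(hist)
    return feature


if __name__ == "__main__":
    patch = [[90, 80, 70],
             [60, 50, 40],
             [30, 20, 10]]
    brighter = [[v + 25 for v in row] for row in patch]  # uniform lighting change
    print(lbp_code(patch, 1, 1))     # 120, i.e. 0b01111000
    print(lbp_code(brighter, 1, 1))  # 120 again: the offset changes no comparison
```

The invariance holds only for a uniform offset. In practice, saturation at 255 and uneven shadows can still change individual codes.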

Fig.4 Face Image with variance in illumination

One of the major problems of the Fisherfaces method is its computational cost, as it involves a sophisticated computing process [22-23]. In addition, the face images are affected by variations in lighting. As shown in the figure above, the algorithm then extracts the facial features of just one individual. The images are also affected by different expressions and positions of the face. As shown in the figure below, the algorithm has extracted different features of the same person only.

Fig.5 Grayscale Images

The combination of LBP and HOG descriptors improves face detection performance [4]. LBPH aims to extract local characteristics from photos. Its main idea is to focus on local features rather than to treat the whole image as a high-dimensional vector. The face image is then represented as a data vector of these local features.

Fig.6 LBPH algorithm working

In the figure above, the value of the center pixel (the intensity at a given point) of the image is taken as the threshold. Each neighboring pixel is set to 1 if its intensity is greater than or equal to the center value, and to 0 otherwise. Reading these 0s and 1s in an anticlockwise direction forms a binary number. This number is converted to decimal and becomes the new value of the center pixel. Circular LBP can be used to change the radius and the number of neighbors, using bilinear interpolation [8]. The image is then divided into multiple grids, and a histogram is extracted from each grid. The LBP values, like grayscale intensities, range from 0 to 255, so each histogram has 256 bins.

Fig.7 Local Binary Pattern Histogram

The grid histograms are concatenated into a final histogram that represents the features of the original image. To recognize a face, its histogram is compared with the histograms of the training images. The image with the closest histogram is recognized.

When it comes to picture quality, several variables affect the system's accuracy. It is critical to standardize the training photos fed into the face recognition system using various image preprocessing methods.

Face recognition algorithms are often sensitive to lighting. If the algorithm has been trained to detect a person in low light, it will have difficulty recognizing them in bright light. This difficulty is called "illumination dependence". Related issues include facial alignment, consistency among pictures, consistency in size, rotation angle, hair, expression, and lighting. That is why it is critical to use good image preprocessing filters before training the system and implementing face recognition. An elliptical mask should be applied to expose only the inner facial region. The pixels outside it, such as the hair and background, should be removed. This paper uses a grayscale image-based face identification system. It also explains how to convert a color face picture to grayscale. It then shows how to use histogram equalization to automatically equalize the brightness and contrast of the face photos. To improve accuracy, we could use HSV or another color space instead of RGB. We could also add image processing steps such as edge enhancement, contour detection, and motion detection [2].

We also scale photos to a standard size, which may alter the face's aspect ratio. Instead, the picture can be resized via interpolation without losing its aspect ratio. Interpolation uses a neighborhood of pixels to enlarge or reduce the image. For face detection, OpenCV's Haar cascade classifier was employed [25]. The input image may come from a file, a camera, or live video. The face detection algorithm evaluates each image position and labels it as "Face" or "Not Face". A picture resolution of 100 × 100 pixels is used to classify the face. Faces in the picture may be smaller or larger than this, so the classifier scans the picture many times, looking for faces of varied sizes. This may seem a complex and time-consuming operation. Thanks to efficient computational methods, however, the classification is completed quickly, even when done at many scales. To classify image locations, the classifier consults data saved in an XML file. Before writing any code, locate the XML file that will be used and double-check that it is the correct one [26].

Fig.8 training loss and loss history

Fig.8 shows the training and loss history of the system.

VI. CONCLUSION AND FUTURE WORK

Many improvements can be made to increase recognition performance, such as adding color processing, adding edge detection, and increasing the number of input images [24]. To increase face recognition accuracy, more input images are needed: at least 40 images of each person's face. These should be taken from various viewing angles and under varied lighting exposures. If taking more photos is not possible, additional input photographs can be produced from existing ones in several ways:

● By creating mirrored copies of facial images, we can obtain more training images, improve accuracy, and decrease the chance of biased recognition.

● We may resize and rotate existing face photos to provide more alternative training data, reducing sensitivity to particular conditions.

● We may add image noise to create additional photos and increase noise tolerance.

Each individual's training images must cover a wide range of conditions, which helps face recognition in various positions and lighting conditions. Otherwise, the classifier will look only for those specific conditions and will be unable to recognize the person in others. It is also very important that the training images are not too varied. Rotations such as 90 degrees are not preferred, because they could make the classifier too generic and reduce its accuracy.
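The preprocessing and augmentation steps recommended above can be sketched in plain Python: histogram equalization of grayscale faces, mirrored copies, and added noise. This is a minimal illustration under our own assumptions, not the exact pipeline used in this work. The 8-bit grayscale images are lists of lists of integers. Equalization uses the standard cumulative-histogram mapping, for which OpenCV provides `cv2.equalizeHist`. The noise is Gaussian with a fixed seed, and the function names are hypothetical. Resizing and rotation are left out for brevity.

```python
import random
from typing import List

Image = List[List[int]]


def equalize_hist(img: Image) -> Image:
    """Histogram equalization of an 8-bit grayscale image.

    Each level v is mapped to round((cdf(v) - cdf_min) / (N - cdf_min) * 255),
    which stretches the occupied levels over the full 0..255 range.
    """
    hist = [0] * 256
    for row in img:
        for v in row:
            hist[v] += 1
    n = sum(hist)
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)
    if n == cdf_min:  # a single grey level: nothing to stretch
        return [row[:] for row in img]
    lut = [round(max(0, c - cdf_min) / (n - cdf_min) * 255) for c in cdf]
    return [[lut[v] for v in row] for row in img]


def mirror(img: Image) -> Image:
    """Horizontally mirrored copy (left and right sides of the face swapped)."""
    return [row[::-1] for row in img]


def add_noise(img: Image, sigma: float = 5.0, seed: int = 0) -> Image:
    """Copy with Gaussian noise (std. dev. sigma), rounded and clipped to 0..255."""
    rng = random.Random(seed)
    return [[min(255, max(0, round(v + rng.gauss(0.0, sigma)))) for v in row] for row in img]


def augment(img: Image) -> List[Image]:
    """Equalized face plus mirrored and noisy variants for the training set."""
    eq = equalize_hist(img)
    return [eq, mirror(eq), add_noise(eq, seed=0), add_noise(mirror(eq), seed=1)]


if __name__ == "__main__":
    face = [[50, 51, 52],
            [53, 54, 55],
            [56, 57, 58]]  # toy dark, low-contrast "face"
    for sample in augment(face):
        print(sample)
```

Equalizing before creating the variants gives every copy the same brightness and contrast normalization. Equalization can sometimes make matching worse, though, so its effect should be checked on held-out images.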

Rotations of more than 20 degrees should instead be handled by creating a separate set of training images for each person, with left, forward, and right views. For better results, good preprocessing of the training images is therefore required. Histogram equalization, as previously stated, is a very simple preprocessing approach, so we will need to combine a few different approaches until we obtain good results. The algorithm's main goal is to recognize faces by subtracting the test image from the training image and looking for similarities. When a person compares faces, this works well, but a machine is concerned only with pixels and numbers. It subtracts the grayscale value of each pixel in the test picture from the value of the pixel at the same coordinate in each training image. The smaller the difference, the better the match. The algorithm does not know which part of the image is background and which is foreground (the face), so a different background disturbs the match. It is therefore crucial to remove as much of the background as possible. This is done by using small regions within the individual's facial area that contain no background information. Difficult elements such as the backdrop, eyeglasses, beards, and mustaches are also addressed. Because pictures must be correctly aligned, small low-resolution images often give better recognition than larger, higher-resolution ones. Even if the training picture is perfectly matched, the input picture may be a shade darker than the training picture. In that case, the algorithm may conclude that the two images are not a good match. In some circumstances histogram equalization may help, but in others it may make matters worse, so brightness discrepancies are a challenging and prevalent issue. A shadow on the right side of the eye in the training picture and on the left side in the test image can also cause a mismatch. This is why face recognition works best in real time. Suppose you train the algorithm with an individual's pictures and then immediately try to identify that person. You will then be using the same camera, environment, lighting condition, expression, viewing direction, and location, all of which result in high identification accuracy. When the circumstances are different, however, results are often poor. Examples include other orientations, other rooms, outdoor settings, or different times of day. Consequently, it is critical to do extensive testing to gain a deeper knowledge of the algorithms and obtain the best possible results. Future research will focus on improving the success rate on extremely large datasets.

ACKNOWLEDGMENT

The authors are indebted to the central library of Delhi Technological University for providing all the research materials and lab access needed to complete the research work at the university. We are thankful to our supervisor, Aruna Bhat, for guiding us at each step of the research work.

REFERENCES

[1] B. Ahn, J. Park, and I. S. K. B, “Real-Time Head Orientation from a Monocular,” Springer, vol. 1, pp. 82–96, 2015, [Online]. Available: https://link.springer.com/chapter/10.1007/978-3-319

D. Jayant and A. Bhat, “Study of robust facial recognition under occlusion using different techniques,” 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), 2021, pp. 1230–1235, doi: 10.1109/ICICCS51141.2021.9432300.

[2] A. Bhat, “Possibility Fuzzy C-Means Clustering For Expression Invariant Face Recognition,” International Journal on Cybernetics & Informatics (UCI), vol. 3, no. 2, pp. 35–45, 2014.

[3] A. Bhat, S. Rustagi, S. R. Purwaha, and S. Singhal, “Deep-learning based group-photo Attendance System using One Shot Learning,” 2020 International Conference on Electronics and Sustainable Communication Systems (ICESC), 2020, pp. 546–551, doi: 10.1109/ICESC48915.2020.9155755.

[4] X. Sun, P. Wu, and S. C. H. Hoi, “Face detection using deep learning: An improved faster RCNN approach,” Neurocomputing, vol. 299, pp. 42–50, 2018, doi: 10.1016/j.neucom.2018.03.030.

[5] S. S. Farfade, M. Saberian, and L.-J. Li, “Multi-view Face Detection Using Deep Convolutional Neural Networks Categories and Subject Descriptors,” 5th ACM Int. Conf. Multimed. Retr., pp. 643–650, 2015.

[6] J. Jeon et al., “A real-time facial expression recognizer using deep neural network,” ACM IMCOM 2016 Proc. 10th Int. Conf. Ubiquitous Inf. Manag. Commun., 2016, doi: 10.1145/2857546.2857642.

[7] W. T. Chu and W. W. Li, “Manga FaceNet: Face detection in manga based on deep neural network,” ICMR 2017 - Proc. 2017 ACM Int. Conf. Multimed. Retr., pp. 412–415, 2017, doi: 10.1145/3078971.3079031.

[8] W. Jiang and W. Wang, “Face detection and recognition for home service robots with end-to-end deep neural networks,” ICASSP, IEEE Int. Conf. Acoust. Speech Signal Process. - Proc., pp. 2232–2236, 2017, doi: 10.1109/ICASSP.2017.7952553.

[9] S. Jafri, S. Chawan, and A. Khan, “Face Recognition using Deep Neural Network with LivenessNet,” Proc. 5th Int. Conf. Inven. Comput. Technol. ICICT 2020, pp. 145–148, 2020, doi: 10.1109/ICICT48043.2020.9112543.

[10] J. Li and E. Y. Lam, “Facial expression recognition using deep neural networks,” IST 2015 - 2015 IEEE Int. Conf. Imaging Syst. Tech. Proc., 2015, doi: 10.1109/IST.2015.7294547.

[11] B. Yang, J. Cao, R. Ni, and Y. Zhang, “Facial Expression Recognition Using Weighted Mixture Deep Neural Network Based on Double-Channel Facial Images,” IEEE Access, vol. 6, pp. 4630–4640, 2017, doi: 10.1109/ACCESS.2017.2784096.

[12] A. Mollahosseini, D. Chan, and M. H. Mahoor, “Going deeper in facial expression recognition using deep neural networks,” 2016 IEEE Winter Conf. Appl. Comput. Vision, WACV 2016, 2016, doi: 10.1109/WACV.2016.7477450.

[13] J. Kim, N. Shin, S. Y. Jo, and S. H. Kim, “Method of intrusion detection using deep neural network,” 2017 IEEE Int. Conf. Big Data Smart Comput. BigComp 2017, pp. 313–316, 2017, doi: 10.1109/BIGCOMP.2017.7881684.

[14] M. Shah and R. Kapdi, “Object detection using deep neural networks,” Proc. 2017 Int. Conf. Intell. Comput. Control Syst. ICICCS 2017, vol. 2018-January, pp. 787–790, 2017, doi: 10.1109/ICCONS.2017.8250570.

[15] H. Kim, Y. Lee, B. Yim, E. Park, and H. Kim, “On-road object detection using deep neural network,” 2016 IEEE Int. Conf. Consum. Electron. ICCE-Asia 2016, pp. 31–34, 2017, doi: 10.1109/ICCE-Asia.2016.7804765.

[16] B. K. Shah, V. Kedia, and R. K. Jha, “Integrated Vendor-Managed Time Efficient Application to Production of Inventory Systems,” Proc. 6th Int. Conf. Inven. Comput. Technol. ICICT 2021, pp. 275–280, 2021, doi: 10.1109/ICICT50816.2021.9358504.

[17] A. Bhat, “Makeup invariant face recognition using features from accelerated segment test and eigen vectors,” International Journal of Image and Graphics, vol. 17, no. 01, p. 1750005, 2017.

[18] A. Bhat, “Robust Face Recognition by Applying Partitioning Around Medoids Over Eigen Faces and Fisher Faces,” International Journal on Computational Sciences & Applications, vol. 4, no. 3, 2014.

[19] A. Bhat, “Medoid based Model for Face Recognition using Eigen and Fisher Faces,” Available at SSRN 3584107, 2013.

[20] A. Shroff, B. K. Shah, A. Jha, A. K. Jaiswal, P. Sapra, and M. Kumar, “Multiplex Regulation System with Personalised Recommendation Using ML,” Proc. 5th Int. Conf. Trends Electron. Informatics, ICOEI 2021, pp. 1567–1573, 2021, doi: 10.1109/ICOEI51242.2021.9453005.

[21] X. Li, S. Lai, and X. Qian, “DBCFace: Towards Pure Convolutional Neural Network Face Detection,” IEEE Trans. Circuits Syst. Video Technol., vol. 8215, no. c, pp. 1–13, 2021, doi: 10.1109/TCSVT.2021.3082635.

[22] B. K. Shah, A. Kumar, and A. Kumar, “Dog breed classifier for facial recognition using convolutional neural networks,” Proc. 3rd Int. Conf. Intell. Sustain. Syst. ICISS 2020, pp. 508–513, 2020, doi: 10.1109/ICISS49785.2020.9315871.

[23] H. Li, S. Alghowinem, S. Caldwell, and T. Gedeon, “Interpretation of Occluded Face Detection Using Convolutional Neural Network,” INES 2019 - IEEE 23rd Int. Conf. Intell. Eng. Syst. Proc., pp. 165–170, 2019, doi: 10.1109/INES46365.2019.9109524.

[24] S. Zhang, C. Chi, Z. Lei, and S. Z. Li, “RefineFace: Refinement Neural Network for High Performance Face Detection,” IEEE Trans. Pattern Anal. Mach. Intell., vol. 43, no. 11, pp. 4008–4020, 2021, doi: 10.1109/TPAMI.2020.2997456.

[25] N. Do, I. Na, and S. Kim, “Forensics Face Detection From GANs Using Convolutional Neural Network,” no. August, 2018.

[26] A. Bhatia, V. Kedia, A. Shroff, M. Kumar, B. K. Shah, and Aryan, “Fake currency detection with machine learning algorithm and image processing,” Proc. - 5th Int. Conf. Intell. Comput. Control Syst. ICICCS 2021, no. Iciccs, pp. 755–760, 2021, doi: 10.1109/ICICCS51141.2021.9432274.

[27] B. K. Shah, A. K. Jaiswal, A. Shroff, A. K. Dixit, O. N. Kushwaha, and N. K. Shah, “Sentiments Detection for Amazon Product Review,” 2021 Int. Conf. Comput. Commun. Informatics, ICCCI 2021, 2021, doi: 10.1109/ICCCI50826.2021.9402414.

[24] M. Kumar, A. Bhatia, P. Gupta, K. Jha, R. K. Jha, and B. K. Shah, “Sign Language Alphabet Recognition Using,” no. Iciccs, pp. 1859–1865, 2021.

[25] V. Kedia, A. Bhatia, S. R. Regmi, S. Dugar, K. Jha, and B. K. Shah, “Time Efficient IOS Application For CardioVascular Disease Prediction Using Machine Learning,” no. Iccmc, pp. 869–874, 2021.

[30] B. Ahn, J. Park, and I. S. K. B, “Real-Time Head Orientation from a Monocular,” Springer, vol. 1, pp. 82–96, 2015, [Online]. Available: https://link.springer.com/chapter/10.1007/978-3-319-16811-1_6.


- Synopsis 2Document9 pagesSynopsis 2HARSHIT KHATTERNo ratings yet

- AI in Face DetectionDocument2 pagesAI in Face DetectionAayush ShahiNo ratings yet

- Literature Review Concerning Face RecognitionDocument6 pagesLiterature Review Concerning Face Recognitionaixgaoqif100% (1)

- Recognition of Facial Expression With The Help of IoT, AI and RoboticsDocument7 pagesRecognition of Facial Expression With The Help of IoT, AI and RoboticsInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Face Recognition Using Opencv in PythonDocument6 pagesFace Recognition Using Opencv in PythonAbc defNo ratings yet

- Intelligent Facial Recognition and Analytics System - User Alert Functionality With Personalized Notifications-1Document2 pagesIntelligent Facial Recognition and Analytics System - User Alert Functionality With Personalized Notifications-1ghariganesh54No ratings yet

- Vault Box Using Biometric: - Naman Jain (04614803115) - Mohammed Tabish (04514803115)Document17 pagesVault Box Using Biometric: - Naman Jain (04614803115) - Mohammed Tabish (04514803115)Naman JainNo ratings yet

- Face Recognition Enhancement Systembyusing Parallel ProcessingDocument9 pagesFace Recognition Enhancement Systembyusing Parallel Processingnastaran.jahanbin27No ratings yet

- A Real-Time Framework For Human Face Detection andDocument12 pagesA Real-Time Framework For Human Face Detection andDesti AyuNo ratings yet

- Siddharth Institute of Engineering and TechnologyDocument27 pagesSiddharth Institute of Engineering and TechnologyBinny DaraNo ratings yet

- Food Management Based On Face RecognitionDocument4 pagesFood Management Based On Face RecognitionInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Wa000Document7 pagesWa000SourabhNo ratings yet

- Comparative Analysis of PCA and 2DPCA in Face RecognitionDocument7 pagesComparative Analysis of PCA and 2DPCA in Face RecognitionDinh Khai LaiNo ratings yet

- Face Recognition Report 1Document26 pagesFace Recognition Report 1Binny DaraNo ratings yet

- Paper 1595Document3 pagesPaper 1595Brototi MukherjeeNo ratings yet

- Face Detection Tracking OpencvDocument6 pagesFace Detection Tracking OpencvMohamed ShameerNo ratings yet

- Face Recognition PaperDocument7 pagesFace Recognition PaperPuja RoyNo ratings yet

- Highly Secured Online Voting System (OVS) Over NetworkDocument6 pagesHighly Secured Online Voting System (OVS) Over Networkijbui iirNo ratings yet

- Face Recognition ReportDocument3 pagesFace Recognition ReportTeja ReddyNo ratings yet

- Facial Recognition in Web Camera Using Deep Learning Under Google COLABDocument5 pagesFacial Recognition in Web Camera Using Deep Learning Under Google COLABGRD JournalsNo ratings yet

- Fin Research PPR PDFDocument10 pagesFin Research PPR PDFKhushi UdasiNo ratings yet

- Criminal Face Recognition Using Raspberry PiDocument3 pagesCriminal Face Recognition Using Raspberry Pirock starNo ratings yet

- Research - Paper Capstone ProjectDocument4 pagesResearch - Paper Capstone ProjectParikshit sharmaNo ratings yet

- Face Recognition Systems A SurveyDocument34 pagesFace Recognition Systems A SurveysNo ratings yet

- 3 Criminal Detection Project ProposalDocument15 pages3 Criminal Detection Project ProposalAbhishek KashidNo ratings yet

- Stranger Detection: Yada Arun KumarDocument9 pagesStranger Detection: Yada Arun KumarMahesh KumarNo ratings yet

- Face Recognition Security SystemDocument6 pagesFace Recognition Security SystemMahmoud OmarNo ratings yet

- Face Detection Based Attendance System Using Open CV ApproachDocument5 pagesFace Detection Based Attendance System Using Open CV ApproachInternational Journal of Innovative Science and Research TechnologyNo ratings yet

- Facial Recognition Project FileDocument6 pagesFacial Recognition Project FileabcNo ratings yet

- Teoh 2021 J. Phys. Conf. Ser. 1755 012006Document10 pagesTeoh 2021 J. Phys. Conf. Ser. 1755 012006Ashish Prasad RNo ratings yet

- A Review of Image Recognition TechnologyDocument5 pagesA Review of Image Recognition TechnologyTyuNo ratings yet

- People Monitoring and Mask Detection Using Real-Time Video AnalyzingDocument4 pagesPeople Monitoring and Mask Detection Using Real-Time Video AnalyzingVIVA-TECH IJRINo ratings yet

- Python Based Multiple Face Detection System: June 2020Document11 pagesPython Based Multiple Face Detection System: June 2020orsangobirukNo ratings yet

- ReferencesDocument17 pagesReferencesmaleksd111No ratings yet

- Developing A Face Recognition System Using Convolutional Neural Network and Raspberry Pi Including Facial ExpressionDocument13 pagesDeveloping A Face Recognition System Using Convolutional Neural Network and Raspberry Pi Including Facial ExpressionHuzaifa TahirNo ratings yet

- Facial Recognition System: Unlocking the Power of Visual IntelligenceFrom EverandFacial Recognition System: Unlocking the Power of Visual IntelligenceNo ratings yet

- Materi Ob Chapter 1 TranslateDocument31 pagesMateri Ob Chapter 1 TranslatenurjannahNo ratings yet

- 222024-Текст статті-502556-1-10-20201229Document9 pages222024-Текст статті-502556-1-10-20201229Hồng KimNo ratings yet

- Unit - 2 Seating Arrangements, Coding & Decoding, Venn DiagramsDocument7 pagesUnit - 2 Seating Arrangements, Coding & Decoding, Venn DiagramsVigneshwaraNo ratings yet

- Social StratificationDocument15 pagesSocial StratificationNomy YasinthaNo ratings yet

- Ajinkya Randive: Work Experience EducationDocument1 pageAjinkya Randive: Work Experience EducationAjinkya A RandiveNo ratings yet

- Features of Effective: CommunicationDocument8 pagesFeatures of Effective: CommunicationCherryl IbarrientosNo ratings yet

- Meg Turner Level III ReflectionDocument5 pagesMeg Turner Level III Reflectionapi-594762709No ratings yet

- CT 001 Computational Thinking, Between Papert and WingDocument26 pagesCT 001 Computational Thinking, Between Papert and WingalbertamakurNo ratings yet

- Relatedness Need Satisfaction, Intrinsic Motivation, and Engagement in Secondary School Physical EducationDocument14 pagesRelatedness Need Satisfaction, Intrinsic Motivation, and Engagement in Secondary School Physical EducationsuckamaNo ratings yet

- 10.4324 9780203059036 PreviewpdfDocument47 pages10.4324 9780203059036 PreviewpdfWafa Al-fazaNo ratings yet

- Date SheetDocument3 pagesDate Sheetvishalthenoob2525No ratings yet

- Attitude Towards StatisticsDocument10 pagesAttitude Towards StatisticsMazarn S SwarzeneggerNo ratings yet

- Introduction To Statistics - 19!3!21Document47 pagesIntroduction To Statistics - 19!3!21balaa aiswaryaNo ratings yet

- Patient'S Characteristics: Passage 1Document6 pagesPatient'S Characteristics: Passage 1Saidah FitriNo ratings yet

- Daily Lesson Plan Mathematics (DLP) Year 2Document6 pagesDaily Lesson Plan Mathematics (DLP) Year 2CHONG HUI WEN MoeNo ratings yet

- The Effects of E-Learning Approach On Student'S Academic Performance in English Subject of The Grade 10 Students of Rizal College of TaalDocument39 pagesThe Effects of E-Learning Approach On Student'S Academic Performance in English Subject of The Grade 10 Students of Rizal College of TaalKhristel Alcayde100% (1)

- The Five Love Languages: How An Individual's Upbringing Manifests in LoveDocument31 pagesThe Five Love Languages: How An Individual's Upbringing Manifests in Lovenaomiayenbernal13No ratings yet

- Gujarat Technological UniversityDocument1 pageGujarat Technological Universityfeyayel990No ratings yet

- UnitAllocationB - ed.AndM - Ed.sem22018 2019Document9 pagesUnitAllocationB - ed.AndM - Ed.sem22018 2019Hillary LangatNo ratings yet

- Lisa Benton Case Analysis - Leadership AnalysisDocument2 pagesLisa Benton Case Analysis - Leadership AnalysisIsaac CorreaNo ratings yet

- Traditionsin Political Theory PostmodernismDocument20 pagesTraditionsin Political Theory PostmodernismRitwik SharmaNo ratings yet

- An Investigation On Tadao Ando's Phenomenological ReflectionsDocument12 pagesAn Investigation On Tadao Ando's Phenomenological ReflectionsNicholas HasbaniNo ratings yet

- Session 3, English Teaching MethodsDocument46 pagesSession 3, English Teaching MethodsĐỗ Quỳnh TrangNo ratings yet

- Elln Digital Plan-Do-Study-Act (Pdsa) Form: Action Steps Per Change Idea WHO WhenDocument2 pagesElln Digital Plan-Do-Study-Act (Pdsa) Form: Action Steps Per Change Idea WHO WhenLENARD GRIJALDONo ratings yet

- Act 10 CSEFDocument5 pagesAct 10 CSEFCheck OndesNo ratings yet

- Research Methodology (R20DHS53) Lecture Notes Mtech 1 Year - I Sem (R20) (2021-22)Document54 pagesResearch Methodology (R20DHS53) Lecture Notes Mtech 1 Year - I Sem (R20) (2021-22)atikaNo ratings yet

- Ownershipaccountability 140130011756 Phpapp01Document24 pagesOwnershipaccountability 140130011756 Phpapp01Rajinder BakshiNo ratings yet

- Lesson Plan Template: Ccss - Ela-Literacy - Ccra.Sl.5Document16 pagesLesson Plan Template: Ccss - Ela-Literacy - Ccra.Sl.5api-584001941No ratings yet