ELK Install

Uploaded by uniro

https://computingforgeeks.com/how-to-install-the-elk-stack-on-rhel-8/

https://www.itzgeek.com/how-tos/linux/centos-how-tos/how-to-install-elk-stack-on-rhel-8.html

https://tel4vn.edu.vn/cai-dat-elk-stack-tren-centos-7/

https://www.digitalocean.com/community/tutorials/how-to-install-elasticsearch-logstash-and-kibana-elk-stack-on-ubuntu-14-04

#### Install OpenJDK 11

sudo yum -y install java-11-openjdk java-11-openjdk-devel

# Add ELK repository

cat <<EOF | sudo tee /etc/yum.repos.d/elasticsearch.repo

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

# Import GPG key:

sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

# Clear and update your YUM package index.

sudo yum clean all

sudo yum makecache

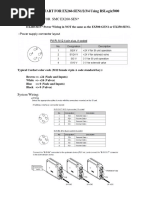

#### Install and Configure Elasticsearch

sudo yum -y install elasticsearch

# Verify Elasticsearch package installation

[integral@vnpasuatapv61 yum.repos.d]$ rpm -qi elasticsearch

Name : elasticsearch

Epoch :0

Version : 7.17.4

Release : 1

Architecture: x86_64

# Set JVM options (keep -Xms and -Xmx equal, otherwise the heap size bootstrap check fails)

sudo vi /etc/elasticsearch/jvm.options

-Xms1g

-Xmx1g

# Start and enable elasticsearch service on boot

sudo systemctl enable --now elasticsearch.service

Created symlink /etc/systemd/system/multi-user.target.wants/elasticsearch.service → /usr/lib/systemd/system/elasticsearch.service.

# Allow remote access to Elasticsearch from other hosts

sudo vi /etc/elasticsearch/elasticsearch.yml

file:///D/_ThaoLe/shlv_user_guide/ELK_install.txt[4/14/2023 10:09:00 PM]

# ---------------------------------- Network -----------------------------------

#

# By default Elasticsearch is only accessible on localhost. Set a different

# address here to expose this node on the network:

#

#network.host: 192.168.0.1

network.host: 10.191.224.34

transport.host: localhost

transport.port: 9300

http.port: 9200

# --------------------------------- Discovery ----------------------------------

#

# Pass an initial list of hosts to perform discovery when this node is started:

# The default list of hosts is ["127.0.0.1", "[::1]"]

#

#discovery.seed_hosts: ["host1", "host2"]

#discovery.seed_hosts: ["10.191.224.34", "10.191.96.176", "10.191.66.3"]

discovery.seed_hosts: ["10.191.224.34"]

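# Check the current limits (ulimit -n returned 1024 on this host)

ulimit -n

ulimit -u 2048

# Raise the open-file limit

sudo vi /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

root soft nofile 65536

root hard nofile 65536

# Set vm.max_map_count for the running kernel

sudo sysctl -w vm.max_map_count=262144

# Persist it across reboots: add this line to /etc/sysctl.conf, then reload the file

sudo vi /etc/sysctl.conf

vm.max_map_count=262144

sudo sysctl -p

# Make sure pam_limits is loaded so that limits.conf is applied.
# The common-session files are the Debian/Ubuntu layout; on RHEL/CentOS
# pam_limits.so is normally already referenced from /etc/pam.d/system-auth.

sudo vi /etc/pam.d/common-session

add: session required pam_limits.so

sudo vi /etc/pam.d/common-session-noninteractive

add: session required pam_limits.so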

[2022-06-01T09:22:22,734][INFO ][o.e.n.Node ] [vnpasuatapv61] initialized

[2022-06-01T09:22:22,735][INFO ][o.e.n.Node ] [vnpasuatapv61] starting ...

[2022-06-01T09:22:22,743][INFO ][o.e.x.s.c.f.PersistentCache] [vnpasuatapv61] persistent cache index loaded

[2022-06-01T09:22:22,744][INFO ][o.e.x.d.l.DeprecationIndexingComponent] [vnpasuatapv61] deprecation component started

[2022-06-01T09:22:22,841][INFO ][o.e.t.TransportService ] [vnpasuatapv61] publish_address {10.191.224.34:9300}, bound_addresses {[::]:9300}

[2022-06-01T09:22:23,097][INFO ][o.e.b.BootstrapChecks ] [vnpasuatapv61] bound or publishing to a non-loopback address, enforcing bootstrap checks

[2022-06-01T09:22:23,138][ERROR][o.e.b.Bootstrap ] [vnpasuatapv61] node validation exception

[2] bootstrap checks failed. You must address the points described in the following [2] lines before starting Elasticsearch.

bootstrap check failure [1] of [2]: initial heap size [1073741824] not equal to maximum heap size [2147483648]; this can cause resize pauses

bootstrap check failure [2] of [2]: the default discovery settings are unsuitable for production use; at least one of [discovery.seed_hosts, discovery.seed_providers, cluster.initial_master_nodes] must be configured
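The two bootstrap failures in the log above come from settings that can be inspected before the service is started. The following is a minimal sketch, assuming the default RPM paths used in this guide; `heap_sizes_match` and `discovery_configured` are hypothetical helper names, not part of Elasticsearch, and they only look at the single file they are given (not at `jvm.options.d`).

```shell
# Hypothetical pre-flight helpers (assumption: not shipped with Elasticsearch).

# Succeed when the last -Xms and -Xmx values in a jvm.options style file are equal.
# If neither option is present there is nothing to compare and the check passes.
heap_sizes_match() {
  local file="$1" xms xmx
  xms=$(grep -E '^-Xms' "$file" | tail -n 1 | sed 's/^-Xms//')
  xmx=$(grep -E '^-Xmx' "$file" | tail -n 1 | sed 's/^-Xmx//')
  [ "$xms" = "$xmx" ]
}

# Succeed when the file sets (uncommented) at least one of the three
# discovery settings named in the bootstrap check message.
discovery_configured() {
  grep -Eq '^[[:space:]]*(discovery\.seed_hosts|discovery\.seed_providers|cluster\.initial_master_nodes):' "$1"
}

# Example usage on the Elasticsearch host:
#   heap_sizes_match /etc/elasticsearch/jvm.options || echo "set -Xms and -Xmx to the same value"
#   discovery_configured /etc/elasticsearch/elasticsearch.yml || echo "configure discovery.seed_hosts"
#   sysctl -n vm.max_map_count    # expected to print 262144 after the change above
```

Running the checks first avoids a start, fail, read-the-log cycle each time a setting is changed.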

###################### Kibana ######################

#### Install and Configure Kibana

sudo yum -y install kibana

sudo systemctl enable kibana

sudo systemctl start kibana

# Configure Kibana

sudo vi /etc/kibana/kibana.yml

server.host: "0.0.0.0"

server.name: "vnpasuatapv61"

elasticsearch.hosts: ["http://10.191.224.34:9200"]

# Access Kibana (the administrative Kibana user and password must first be created and stored in the htpasswd.users file)

http://10.191.224.34:5601/app/home

#### Install and Configure Logstash

# Logstash acts as a centralized log server for client systems that run an agent such as Filebeat

sudo yum -y install logstash

# Configure Logstash

sudo cp /etc/logstash/logstash-sample.conf /etc/logstash/conf.d/logstash.conf

sudo vi /etc/logstash/conf.d/logstash.conf

input {
  beats {
    port => 5044
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    #user => "elastic"
    #password => "changeme"
  }
}

Test your Logstash configuration with this command:

sudo -u logstash /usr/share/logstash/bin/logstash --path.settings /etc/logstash -t

sudo systemctl enable logstash

sudo systemctl start logstash

###################### Filebeat ######################

#### Client - Install Agent filebeat

# Add ELK repository

cat <<EOF | sudo tee /etc/yum.repos.d/elasticsearch.repo

[elasticsearch-7.x]

name=Elasticsearch repository for 7.x packages

baseurl=https://artifacts.elastic.co/packages/7.x/yum

gpgcheck=1

gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch

enabled=1

autorefresh=1

type=rpm-md

EOF

# Import GPG key:

sudo rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

# Install Filebeat and configure it to send logs to Logstash instead of Elasticsearch

sudo yum -y install filebeat

sudo vi /etc/filebeat/filebeat.yml

+ filebeat.inputs
  - type: log
    enabled: true
    paths:
      - /swlog/integral/app/admin/*.log
      - /swlog/integral/app/ITS/*.log
      - /swlog/integral/app/RS/*.log

+ output.logstash

# ============================== Filebeat inputs ===============================

filebeat.inputs:

# Each - is an input. Most options can be set at the input level, so
# you can use different inputs for various configurations.
# Below are the input specific configurations.

# filestream is an input for collecting log messages from files.
- type: filestream

  # Unique ID among all inputs, an ID is required.
  id: admin-IL

  # Change to true to enable this input configuration.
  enabled: true

  # Paths that should be crawled and fetched. Glob based paths.
  paths:
    - /swlog/integral/app/admin/*.log
    - /swlog/integral/app/ITS/*.log
    - /swlog/integral/app/RS/*.log

#### Comment out the section elasticsearch template

# ======================= Elasticsearch template setting =======================

#setup.template.settings:

# index.number_of_shards: 1

#index.codec: best_compression

#_source.enabled: false

#### Comment out the section output.elasticsearch: as we are not going to store logs directly to Elasticsearch

# ---------------------------- Elasticsearch Output ----------------------------

#output.elasticsearch:

# Array of hosts to connect to.

#hosts: ["localhost:9200"]

# Protocol - either `http` (default) or `https`.

#protocol: "https"

# Authentication credentials - either API key or username/password.

#api_key: "id:api_key"

#username: "elastic"

#password: "changeme"

#### Uncomment output.logstash

# ------------------------------ Logstash Output -------------------------------

output.logstash:
  # The Logstash hosts
  hosts: ["10.191.224.34:5044"]

# Start and enable filebeat

sudo systemctl start filebeat

sudo systemctl enable filebeat

# (Optional) List the available Filebeat modules and enable or disable them

sudo filebeat modules list

sudo filebeat modules enable system

sudo filebeat modules enable nginx

sudo filebeat modules enable logstash

By default, Filebeat is configured to use default paths for the syslog and authorization logs, so for this guide nothing in the module configuration needs to change. The module parameters are in the /etc/filebeat/modules.d/system.yml configuration file.

sudo vi /etc/filebeat/filebeat.yml

# ================= Configure index lifecycle management (ILM) =================

setup.ilm.overwrite: true

setup.ilm.enabled: false

ilm.enabled: false

# Load the index template. The -e flag writes logs to stderr instead of the log files.

sudo filebeat setup -e

sudo filebeat setup -e -E output.logstash.enabled=false \
  -E output.elasticsearch.hosts=['10.191.224.34:9200'] \
  -E setup.kibana.host=10.191.224.34:5601

sudo filebeat setup -e -E output.logstash.enabled=true \
  -E output.elasticsearch.hosts=['10.191.224.34:9200'] \
  -E setup.kibana.host=10.191.224.34:5601

Exiting: Index management requested but the Elasticsearch output is not configured/enabled

# Validate Filebeat configuration - Start filebeat

cd /usr/share/filebeat/bin

sudo ./filebeat -e -c /etc/filebeat/filebeat.yml

sudo ./filebeat -e -d "*" -c /etc/filebeat/filebeat.yml

sudo systemctl start filebeat

#### ================= Enable Console Output Filebeat =================

output.console:
  pretty: true

# ===============================================

# Configure security for the Elastic Stack #

# ===============================================

sudo systemctl stop logstash

sudo systemctl stop kibana

sudo systemctl stop elasticsearch

sudo vi /etc/elasticsearch/elasticsearch.yml

xpack.security.enabled: true

discovery.type: single-node

sed -i.bak '$ a xpack.security.enabled: true' /etc/elasticsearch/elasticsearch.yml

cd /usr/share/elasticsearch/bin

[root@vnpasuatapv61 bin]# ./elasticsearch-setup-passwords interactive

Initiating the setup of passwords for reserved users elastic,apm_system,kibana,kibana_system,logstash_system,beats_system,remote_monitoring_user.

You will be prompted to enter passwords as the process progresses.

Please confirm that you would like to continue [y/N]y

Enter password for [elastic]: P@ssword123

Reenter password for [elastic]:

Enter password for [apm_system]: P@ssword123

Reenter password for [apm_system]:

Enter password for [kibana_system]: P@ssword123

Reenter password for [kibana_system]:

Enter password for [logstash_system]: P@ssword123

Reenter password for [logstash_system]:

Enter password for [beats_system]: P@ssword123

Reenter password for [beats_system]:

Enter password for [remote_monitoring_user]: P@ssword123

Reenter password for [remote_monitoring_user]:

Changed password for user [apm_system]

Changed password for user [kibana_system]

Changed password for user [kibana]

Changed password for user [logstash_system]

Changed password for user [beats_system]

Changed password for user [remote_monitoring_user]

Changed password for user [elastic]

# Generate passwords automatically

echo "y" | /usr/share/elasticsearch/bin/elasticsearch-setup-passwords auto

# ===============================================================

# Configure Kibana connect to Elasticsearch with a password #

# ===============================================================

sudo vi /etc/kibana/kibana.yml

elasticsearch.username: "kibana_system"

elasticsearch.password: "P@ssword123"

sudo systemctl restart kibana

# ===================================================================

# Configure Logstash connect to Elasticsearch with a password #

# ===================================================================

sudo vi /etc/logstash/conf.d/logstash.conf

# Sample Logstash configuration for creating a simple

# Beats -> Logstash -> Elasticsearch pipeline.

input {
  beats {
    port => 5044
  }
}

output {
  elasticsearch {
    hosts => ["http://10.191.224.34:9200"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    user => "logstash_system"
    password => "P@ssword123"
  }
}

sudo vi /etc/logstash/logstash.yml

xpack.monitoring.enabled: false

# ==========================================================================================

# FIX ERROR: #

# error=>"Got response code '401' contacting Elasticsearch at URL 'http://***:9200/'"} #

# ==========================================================================================

https://blog.karatos.in/a?ID=01350-fb4f65d8-8189-4c36-82e8-49c14fdefa5e

I. Introduction:

In the previous article, login and permissions were added to Kibana 7.2. After access control was enabled on the ELK stack, Logstash could no longer write to Elasticsearch. The error message below shows response code 401, which points to a permission problem. Searching online gave no clear answer; the official documentation led to the following solution.

[2019-07-29T17:52:43,230][WARN ][logstash.outputs.elasticsearch] Attempted to resurrect connection to dead ES instance, but got an error. {:url=>"http://*****:9200/", :error_type=>LogStash::Outputs::ElasticSearch::HttpClient::Pool::BadResponseCodeError, :error=>"Got response code '401' contacting Elasticsearch at URL 'http://***:9200/'"}

Configuration in Kibana:

Since this is a permission problem, the fix is to configure permissions: the user that Logstash connects as does not hold the privileges it needs to write to the indices.

Login to Kibana

-> Management: Stack Management

-> Roles:

+ Create role: logstash_writer

+ Cluster privileges: manage_index_templates, monitor, manage_ilm

+ Index privileges:

- Indices: filebeat-*

- Privileges: write, delete, create_index

-> Users:

+ Create user: logstash_internal

+ Password: P@ssword123

+ Privileges: logstash_writer
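The role and user from the Kibana steps above can probably also be created through the Elasticsearch security API, which is convenient when the setup has to be repeated. This is a sketch, not the method used in the original notes: `ES_URL`, `ES_AUTH`, and the two function names are assumptions introduced here, and the privileges are copied from the list above.

```shell
# Assumptions for this sketch: ES_URL points at the node from this guide and
# ES_AUTH holds "elastic:<password>" for a user allowed to manage security.
ES_URL="${ES_URL:-http://10.191.224.34:9200}"

# Print the role definition matching the Kibana settings listed above.
logstash_writer_role_json() {
  cat <<'EOF'
{
  "cluster": ["manage_index_templates", "monitor", "manage_ilm"],
  "indices": [
    {
      "names": ["filebeat-*"],
      "privileges": ["write", "delete", "create_index"]
    }
  ]
}
EOF
}

# Create the role, then a user holding it. The new user's password is the
# first argument; it is inserted into JSON as-is, so it must not contain
# double quotes or backslashes.
create_logstash_writer() {
  local new_password="$1"
  logstash_writer_role_json |
    curl -sS -u "$ES_AUTH" -X PUT "$ES_URL/_security/role/logstash_writer" \
      -H 'Content-Type: application/json' -d @-
  curl -sS -u "$ES_AUTH" -X PUT "$ES_URL/_security/user/logstash_internal" \
    -H 'Content-Type: application/json' \
    -d "{\"password\": \"$new_password\", \"roles\": [\"logstash_writer\"]}"
}

# Example usage (nothing is sent until the function is called):
#   ES_AUTH='elastic:<password>' create_logstash_writer '<new password>'
#   curl -u 'logstash_internal:<new password>' "$ES_URL/_security/_authenticate"
```

The final `_authenticate` call is a quick way to confirm that the new credentials are accepted before putting them into the Logstash output.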

sudo vi /etc/logstash/conf.d/logstash.conf

output {
  elasticsearch {
    hosts => ["http://10.191.224.34:9200"]
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
    user => "logstash_internal"
    password => "P@ssword123"
  }
}

# ===================================================================

# Configure Kibana to connect to Elasticsearch with a password #

# ===================================================================

sudo vi /etc/kibana/kibana.yml

elasticsearch.username: "kibana_system"

elasticsearch.password: "P@ssword123"

sed -i.bak '/\.username/s/^#//' /etc/kibana/kibana.yml

Set the username and password, using the password generated above.

Instead of setting the password in plain text in the kibana.yml configuration file, you can store it securely in the Kibana keystore with these commands:

/usr/share/kibana/bin/kibana-keystore create

/usr/share/kibana/bin/kibana-keystore add elasticsearch.password

When prompted, enter the password for kibana_system user, which is P@ssword123

Enter value for elasticsearch.password: ********************

# ===============================================================

# Add other user with elasticsearch-users login to Kibana #

# ===============================================================

/usr/share/elasticsearch/bin/elasticsearch-users useradd kifarunix -r superuser

Some of the known roles include;

Read more about the elasticsearch-users command in the Elastic documentation:

https://www.elastic.co/guide/en/elasticsearch/reference/current/users-command.html

cd /usr/share/elasticsearch/bin

[root@vnpasuatapv61 bin]# ./elasticsearch-users useradd shlv -r superuser

Enter new password:

ERROR: Invalid password...passwords must be at least [6] characters long

[root@vnpasuatapv61 bin]# ./elasticsearch-users useradd shlv -r superuser

Enter new password: 123456

Retype new password: 123456

[root@vnpasuatapv61 bin]#

[root@vnpasuatapv61 bin]# ./elasticsearch-users list

shlv : superuser

# =======================================

# Changing elasticsearch-users password #

# =======================================

cd /usr/share/elasticsearch/bin

[root@vnpasuatapv61 bin]# ./elasticsearch-users passwd shlv -p P@ssword123

https://www.elastic.co/guide/en/elasticsearch/reference/current/users-command.html

If you use file-based user authentication, the elasticsearch-users command enables you to add and remove users, assign user roles, and manage passwords per node.

Synopsis

/usr/share/elasticsearch/bin/elasticsearch-users

([useradd <username>] [-p <password>] [-r <roles>]) |

([list] <username>) |

([passwd <username>] [-p <password>]) |

([roles <username>] [-a <roles>] [-r <roles>]) |

([userdel <username>])

[root@vnpasuatapv61 ~]# /usr/share/elasticsearch/bin/elasticsearch-users useradd shlv -p P@ssword123 -r superuser

[root@vnpasuatapv61 ~]# /usr/share/elasticsearch/bin/elasticsearch-users list

# ===============

# FIX ERROR #

# ===============

Issue: Your data is not secure

Don’t lose one bit. Enable our free security features.

sudo vi /etc/kibana/kibana.yml

security.showInsecureClusterWarning: false

Issue: server.publicBaseUrl is missing and should be configured when running in a production environment. Some features may not behave correctly. See the documentation.

sudo vi /etc/kibana/kibana.yml

server.publicBaseUrl: "http://10.191.224.34:5601"

Error fetching data

Unable to create actions client because the Encrypted Saved Objects plugin is missing encryption key. Please set xpack.encryptedSavedObjects.encryptionKey in the kibana.yml or use the bin/kibana-encryption-keys command.

Error: Unable to create actions client because the Encrypted Saved Objects plugin is missing encryption key. Please set xpack.encryptedSavedObjects.encryptionKey in the kibana.yml or use the bin/kibana-encryption-keys command.

at http://10.191.224.34:5601/46909/bundles/plugin/cases/8.0.0/cases.chunk.6.js:3:17545
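The error names the missing setting, xpack.encryptedSavedObjects.encryptionKey, which Kibana expects to be a string of at least 32 characters. Besides the bin/kibana-encryption-keys command mentioned in the message, a key can be generated by hand. The sketch below assumes `head`, `od`, and `tr` are available; the function names are hypothetical.

```shell
# Hypothetical helper: print a random 32-character hexadecimal string
# (16 random bytes, two hex digits each).
generate_encryption_key() {
  head -c 16 /dev/urandom | od -An -tx1 | tr -d ' \n'
}

# Print the line to add to /etc/kibana/kibana.yml.
encryption_key_line() {
  printf 'xpack.encryptedSavedObjects.encryptionKey: "%s"\n' "$(generate_encryption_key)"
}

# Example usage on the Kibana host:
#   encryption_key_line | sudo tee -a /etc/kibana/kibana.yml
#   sudo systemctl restart kibana
```

The key should stay the same across restarts, since objects encrypted with one key cannot be read back with another.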

# ===============================================================

# How To Rotate and Delete Old Elasticsearch Records #

# ===============================================================

https://www.elastic.co/guide/en/elasticsearch/reference/current/set-up-lifecycle-policy.html

index: "indexname-%{+yyyy.MM.dd}"

Ex: filebeat-7.17.4-2022.06.13

Go to the Kibana dashboard -> Stack Management

Data -> Index Lifecycle Policies -> Create policy

+ Policy name: 3-days-delete

+ Policy summary:

- Hot phase:

disable rollover

Delete data after this phase

* Rollover:

*
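A policy like the one outlined above could also be created through the ILM API rather than the Kibana form. This is a sketch under stated assumptions: the 3-day delete age is inferred from the policy name `3-days-delete`, and `ES_URL`, `ES_AUTH`, and the function names are introduced here for illustration.

```shell
# Assumptions for this sketch: ES_URL is the node from this guide and
# ES_AUTH holds "elastic:<password>".
ES_URL="${ES_URL:-http://10.191.224.34:9200}"

# Print a policy with a hot phase that has no rollover action and a delete
# phase. The "3d" age is an assumption taken from the policy name.
ilm_policy_json() {
  cat <<'EOF'
{
  "policy": {
    "phases": {
      "hot": {
        "actions": {}
      },
      "delete": {
        "min_age": "3d",
        "actions": {
          "delete": {}
        }
      }
    }
  }
}
EOF
}

# Send the policy to Elasticsearch under the name used in this guide.
put_ilm_policy() {
  ilm_policy_json |
    curl -sS -u "$ES_AUTH" -X PUT "$ES_URL/_ilm/policy/3-days-delete" \
      -H 'Content-Type: application/json' -d @-
}

# Example usage:
#   ES_AUTH='elastic:<password>' put_ilm_policy
```

A policy only acts on indices whose settings reference it through `index.lifecycle.name`, for example via an index template that matches `filebeat-*`.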

/var/lib/elasticsearch/nodes/0/indices

curl -X GET 'http://10.191.224.34:9200/_cat/indices?v' -u elastic:P@ssword123

curl -X DELETE 'http://10.191.224.34:9200/filebeat-*' -u elastic:P@ssword123

[root@vnpasuatapv61 indices]# curl -X GET 'http://10.191.224.34:9200/_cat/indices?v' -u elastic:P@ssword123

health status index                           uuid                   pri rep docs.count docs.deleted store.size pri.store.size
green  open   .geoip_databases                ErtxAyMhTcewWwP5_RSFEA   1   0         41           41     38.8mb         38.8mb
green  open   .security-7                     FlyCih6uSh-KreiJF9c7VQ   1   0         62            0    250.5kb        250.5kb
green  open   .apm-custom-link                d0CF_F1SRlGgyy9VKutYCQ   1   0          0            0       226b           226b
green  open   .transform-internal-007         MYgT3GLNSFq6_NgvKe2g3Q   1   0          0            0       226b           226b
yellow open   filebeat-7.17.4-2022.06.10      X1kbYlBYSsiPR1hAWg8WJQ   1   1       4121            0        1mb            1mb
green  open   .apm-agent-configuration        Rl4rY4fSQkmZP6_HcwZOlA   1   0          0            0       226b           226b
yellow open   filebeat-7.17.4-2022.06.13      hBKCbBH3QrOmWf97gNDovQ   1   1   17277878            0      3.4gb          3.4gb
green  open   .kibana_task_manager_7.17.4_001 d0iwCjesQRmRzaabTEuCQQ   1   0         18         6361     21.3mb         21.3mb
green  open   .async-search                   9E9n97SwRs6oCaI-GkGdWw   1   0          0            0       249b           249b
green  open   .kibana_7.17.4_001              PSLk9DvLQSuGMe_ierDVcQ   1   0        293           33      2.4mb          2.4mb
green  open   .tasks                          pbiKcBhrT_aduCRLEwt7rA   1   0          4            0     27.4kb         27.4kb

[root@vnpasuatapv61 indices]#
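The `curl -X DELETE` on `filebeat-*` shown earlier removes every Filebeat index at once. Because the daily index names end in a zero-padded yyyy.MM.dd date, they can be compared as plain strings to select only the older ones. The following is a minimal sketch, assuming index names of the form filebeat-<version>-<yyyy.MM.dd>; the helper names are hypothetical.

```shell
# Print the yyyy.MM.dd suffix of an index name such as filebeat-7.17.4-2022.06.13.
index_date() {
  printf '%s\n' "${1##*-}"
}

# Succeed when the index date is strictly earlier than the cutoff (yyyy.MM.dd).
# Zero-padded dates in this layout sort the same way as strings and as dates.
is_older_than() {
  local d
  d=$(index_date "$1")
  [ "$d" != "$2" ] && [ "$(printf '%s\n%s\n' "$d" "$2" | LC_ALL=C sort | head -n 1)" = "$d" ]
}

# Read index names on stdin and print those older than the cutoff.
filter_old_indices() {
  local cutoff="$1" name
  while IFS= read -r name; do
    if is_older_than "$name" "$cutoff"; then
      printf '%s\n' "$name"
    fi
  done
}

# Example usage (GNU date assumed for "3 days ago"):
#   cutoff=$(date -d '3 days ago' +%Y.%m.%d)
#   curl -s -u elastic:<password> 'http://10.191.224.34:9200/_cat/indices/filebeat-*?h=index' |
#     filter_old_indices "$cutoff"
```

Printing the candidate names first, and deleting them only after a look at the list, keeps a typo in the cutoff from removing current data.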

============================================= BEGIN TMP =============================================

sudo vi /etc/logstash/conf.d/02-beats-input.conf

input {
  beats {
    port => 5044
  }
}

sudo vi /etc/logstash/conf.d/10-syslog-filter.conf

filter {
  if [fileset][module] == "system" {
    if [fileset][name] == "auth" {
      grok {
        match => { "message" => ["%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: %{DATA:[system][auth][ssh][event]} %{DATA:[system][auth][ssh][method]} for (invalid user )?%{DATA:[system][auth][user]} from %{IPORHOST:[system][auth][ssh][ip]} port %{NUMBER:[system][auth][ssh][port]} ssh2(: %{GREEDYDATA:[system][auth][ssh][signature]})?",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: %{DATA:[system][auth][ssh][event]} user %{DATA:[system][auth][user]} from %{IPORHOST:[system][auth][ssh][ip]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: Did not receive identification string from %{IPORHOST:[system][auth][ssh][dropped_ip]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sudo(?:\[%{POSINT:[system][auth][pid]}\])?: \s*%{DATA:[system][auth][user]} :( %{DATA:[system][auth][sudo][error]} ;)? TTY=%{DATA:[system][auth][sudo][tty]} ; PWD=%{DATA:[system][auth][sudo][pwd]} ; USER=%{DATA:[system][auth][sudo][user]} ; COMMAND=%{GREEDYDATA:[system][auth][sudo][command]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} groupadd(?:\[%{POSINT:[system][auth][pid]}\])?: new group: name=%{DATA:system.auth.groupadd.name}, GID=%{NUMBER:system.auth.groupadd.gid}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} useradd(?:\[%{POSINT:[system][auth][pid]}\])?: new user: name=%{DATA:[system][auth][user][add][name]}, UID=%{NUMBER:[system][auth][user][add][uid]}, GID=%{NUMBER:[system][auth][user][add][gid]}, home=%{DATA:[system][auth][user][add][home]}, shell=%{DATA:[system][auth][user][add][shell]}$",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} %{DATA:[system][auth][program]}(?:\[%{POSINT:[system][auth][pid]}\])?: %{GREEDYMULTILINE:[system][auth][message]}"] }
        pattern_definitions => {
          "GREEDYMULTILINE"=> "(.|\n)*"
        }
        remove_field => "message"
      }
      date {
        match => [ "[system][auth][timestamp]", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
      }
      geoip {
        source => "[system][auth][ssh][ip]"
        target => "[system][auth][ssh][geoip]"
      }
    }
    else if [fileset][name] == "syslog" {
      grok {
        match => { "message" => ["%{SYSLOGTIMESTAMP:[system][syslog][timestamp]} %{SYSLOGHOST:[system][syslog][hostname]} %{DATA:[system][syslog][program]}(?:\[%{POSINT:[system][syslog][pid]}\])?: %{GREEDYMULTILINE:[system][syslog][message]}"] }
        pattern_definitions => { "GREEDYMULTILINE" => "(.|\n)*" }
        remove_field => "message"
      }
      date {
        match => [ "[system][syslog][timestamp]", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]
      }
    }
  }
}

sudo vi /etc/logstash/conf.d/30-elasticsearch-output.conf

output {
  elasticsearch {
    hosts => ["10.191.224.34:9200"]
    manage_template => false
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
  }
}

============================================= END TMP =============================================
