
The document provides an overview of econometrics concepts including ordinary least squares (OLS) regression. It lists the assumptions of OLS including linearity, random sampling, no perfect collinearity, conditional mean zero, homoscedasticity, no autocorrelation, and normality. It also outlines the asymptotic properties of OLS under these assumptions, such as unbiasedness, consistency, asymptotic normality, and best linear unbiased estimation. Finally, it defines key regression metrics like the sum of squared residuals, explained sum of squares, and standard error.



Econometrics Cheat Sheet Assumptions and properties Ordinary Least Squares

By Marcelo Moreno - King Juan Carlos University

Econometric model assumptions Objective - minimizePn the2 Sum of Squared Residuals (SSR):

The Econometrics Cheat Sheet Project

Under this assumptions, the OLS estimator will present min i=1 ûi , where ûi = yi − ŷi

good properties. Gauss-Markov assumptions:

Basic concepts 1. Parameters linearity (and weak dependence in time y

Simple regression model

Equation:

Definitions series). y must be a linear function of the β’s.

yi = β0 + β1 xi + ui

Econometrics - is a social science discipline with the 2. Random sampling. The sample from the population

Estimation:

objective of quantify the relationships between economic has been randomly taken. (Only when cross section)

ŷi = β̂0 + β̂1 xi

agents, test economic theories and evaluate and implement 3. No perfect collinearity.

β1 where:

government and business policies. There are no independent variables that are constant:

β̂0 = y − β̂1 x

Econometric model - is a simplified representation of the Var(xj ) ̸= 0, ∀j = 1, . . . , k. Cov(y,x)

There isn’t an exact linear relation between indepen- β0 β̂ 1 = Var(x)

reality to explain economic phenomena.

Ceteris paribus - if all the other relevant factors remain dent variables.

x

constant. 4. Conditional mean zero and correlation zero.

a. There aren’t systematic errors: E(u | x1 , . . . , xk ) = Multiple regression model

Data types E(u) = 0 → strong exogeneity (a implies b).

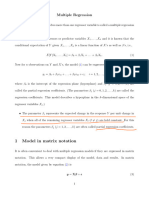

Cross section - data taken at a given moment in time, an y Equation:

b. There are no relevant variables left out of the model:

static photo. Order doesn’t matter. yi = β0 + β1 x1i + · · · + βk xki + ui

Cov(xj , u) = 0, ∀j = 1, . . . , k → weak exogeneity.

Time series - observation of variables across time. Order Estimation:

5. Homoscedasticity. The variability of the residuals is

does matter. ŷi = β̂0 + β̂1 x1i + · · · + β̂k xki

the same for all levels of x:

Panel data - consist of a time series for each observation where:

Var(u | x1 , . . . , xk ) = σu2

of a cross section. β̂0 = y − β̂1 x1 − · · · − β̂k xk

6. No auto-correlation. Residuals don’t contain infor- Cov(y,resid x )

Pooled cross sections - combines cross section from dif- β0 x 2 β̂j = Var(resid xj )j

mation about any other residuals:

ferent time periods. Corr(ut , us | x1 , . . . , xk ) = 0, ∀t ̸= s. Matrix: β̂ = (X T X)−1 (X T y)

7. Normality. Residuals are independent and identically x 1

Phases of an econometric model

1. Specification. 3. Validation. distributed: u ∼ N (0, σu2 )

8. Data size. The number of observations available must

Interpretation of coefficients

2. Estimation. 4. Utilization. Model Dependent Independent β1 interpretation

be greater than (k + 1) parameters to estimate. (It is

Regression analysis Level-level y x ∆y = β1 ∆x

already satisfied under asymptotic situations) Level-log y log(x) ∆y ≈ (β1 /100)(%∆x)

Study and predict the mean value of a variable (dependent Log-level log(y) x %∆y ≈ (100β1 )∆x

variable, y) regarding the base of fixed values of other vari- Asymptotic properties of OLS Log-log log(y) log(x) %∆y ≈ β1 (%∆x)

ables (independent variables, x’s). In econometrics it is Under the econometric model assumptions and the Central Quadratic y x + x2 ∆y = (β1 + 2β2 x)∆x

common to use Ordinary Least Squares (OLS) for regres- Limit Theorem (CLT):

sion analysis. Hold 1 to 4a: OLS is unbiased. E(β̂j ) = βj Error measurements Pn Pn

Hold 1 to 4: OLS is consistent. plim(β̂j ) = βj (to 4b Sum of Sq. Residuals: SSR = i=1 û2i = Pi=1 (yi − ŷi )2

Correlation analysis left out 4a, weak exogeneity, biased but consistent) Explained Sum of Squares:

n

SSE = Pi=1 (ŷi − y)2

Correlation analysis don’t distinguish between dependent n

Hold 1 to 5: asymptotic normality of OLS (then, 7 is Total Sum of Sq.: SST = SSE + SSR = i=1q (yi − y)2

and independent variables.

necessarily satisfied): u ∼ N (0, σu2 ) Standard Error of the Regression: σ̂u = n−k−1 SSR

Simple correlation measures the grade of linear associa- a

Hold 1 to 6: unbiased estimate of σu2 . E(σ̂u2 ) = σu2

p

tion between two variables.Pn Standard Error of the β̂’s: se(β̂) = σ̂u2P· (X T X)−1

r = Cov(x,y) √Pn i=1 ((xi −x)·(y i −y)) Hold 1 to 6: OLS is BLUE (Best Linear Unbiased Esti- n

i=1 (yi −ŷi )

2

σx ·σy = 2

Pn 2 Mean Squared Error: MSE =

i=1 (xi −x) · i=1 (yi −y) mator) or efficient. Pn n

Partial correlation measures the grade of linear associa- Absolute Mean Error: AME

|y −ŷ |

= i=1 n i i

Hold 1 to 7: hypothesis testing and confidence intervals Pn

tion between two variables controlling a third. Mean Percentage Error:

|û /y |

MPE = i=1n i i · 100

can be done reliably.
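The simple regression formulas and the sums of squares can be checked numerically. The following is a minimal Python sketch using only the standard library; the sample data and the helper name `ols_simple` are invented for illustration and are not part of the cheat sheet.

```python
from math import sqrt

def ols_simple(x, y):
    """Fit y = b0 + b1*x by OLS; return the estimates and error measures."""
    n = len(x)
    k = 1  # one regressor
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    # Slope = Cov(y, x) / Var(x); the 1/n factors cancel out.
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = s_xy / s_xx
    b0 = y_bar - b1 * x_bar
    y_hat = [b0 + b1 * xi for xi in x]
    ssr = sum((yi - fi) ** 2 for yi, fi in zip(y, y_hat))  # residual
    sse = sum((fi - y_bar) ** 2 for fi in y_hat)           # explained
    sst = sum((yi - y_bar) ** 2 for yi in y)               # total
    sigma_hat = sqrt(ssr / (n - k - 1))  # standard error of the regression
    return {"b0": b0, "b1": b1, "ssr": ssr, "sse": sse,
            "sst": sst, "sigma_hat": sigma_hat, "mse": ssr / n}

# Hypothetical sample data
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
fit = ols_simple(x, y)
print(fit)
```

With an intercept in the model, `fit["sst"]` equals `fit["sse"] + fit["ssr"]` up to floating-point rounding, which is the decomposition SST = SSE + SSR.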

3.2-en - github.com/marcelomijas/econometrics-cheatsheet - CC-BY-4.0 license
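As a small illustration of the log-log case in the interpretation of coefficients: if the data follow y = A·x^β exactly, regressing log(y) on log(x) recovers β, the elasticity of y with respect to x. This is a sketch under assumed values (A = 3.0, β = 0.5); the function name `slope` is hypothetical.

```python
from math import log

def slope(x, y):
    """OLS slope of y on x: Cov(y, x) / Var(x)."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    return s_xy / s_xx

# Assumed data-generating process: y = 3.0 * x ** 0.5, with no error term
x = [1.0, 2.0, 4.0, 8.0, 16.0]
y = [3.0 * xi ** 0.5 for xi in x]

# Log-log regression: the slope is the elasticity
elasticity = slope([log(v) for v in x], [log(v) for v in y])
print(elasticity)
```

In this model a 1% increase in x is associated with roughly a 0.5% increase in y, matching the reading %Δy ≈ β1(%Δx).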

R-squared

A measure of the goodness of fit, that is, how well the regression fits the data:

$R^2 = \dfrac{SSE}{SST} = 1 - \dfrac{SSR}{SST}$

- Measures the percentage of the variation of y that is linearly explained by the variations of the x's.
- Takes values between 0 (no linear explanation of the variations of y) and 1 (total explanation of the variations of y).

When the number of regressors increases, the value of the R-squared increases as well, whether or not the new variables are relevant. To solve this problem, there is an R-squared adjusted by degrees of freedom (or corrected R-squared):

$\bar{R}^2 = 1 - \dfrac{n - 1}{n - k - 1} \cdot \dfrac{SSR}{SST} = 1 - \dfrac{n - 1}{n - k - 1} \cdot (1 - R^2)$

For big sample sizes: $\bar{R}^2 \approx R^2$

Hypothesis testing

Definitions

A hypothesis test is a rule designed to determine, from a sample, whether or not there is evidence to reject a hypothesis made about one or more population parameters.

Elements of a hypothesis test:

- Null hypothesis (H0) - the hypothesis to be tested.
- Alternative hypothesis (H1) - the hypothesis that cannot be rejected when the null hypothesis is rejected.
- Test statistic - a random variable whose probability distribution is known under the null hypothesis.
- Critical value - the value against which the test statistic is compared to determine whether the null hypothesis is rejected or not. It marks the frontier between the regions of acceptance and rejection of the null hypothesis.
- Significance level (α) - the probability of rejecting the null hypothesis when it is true (Type I Error). It is chosen by whoever conducts the test. Commonly 0.10, 0.05 or 0.01.
- p-value - the highest significance level at which the null hypothesis (H0) cannot be rejected.

The rule is: if the p-value is less than α, there is evidence to reject the null hypothesis at that given α (there is evidence to accept the alternative hypothesis).

Individual tests

Tests whether a parameter is significantly different from a given value, ϑ.

$H_0: \beta_j = \vartheta$
$H_1: \beta_j \neq \vartheta$

Under H0: $t = \dfrac{\hat{\beta}_j - \vartheta}{se(\hat{\beta}_j)} \sim t_{n-k-1}$

If $|t| > |t_{n-k-1,\alpha/2}|$, there is evidence to reject H0.

Individual significance test - tests whether a parameter is significantly different from zero.

$H_0: \beta_j = 0$
$H_1: \beta_j \neq 0$

Under H0: $t = \dfrac{\hat{\beta}_j}{se(\hat{\beta}_j)} \sim t_{n-k-1}$

If $|t| > |t_{n-k-1,\alpha/2}|$, there is evidence to reject H0.

The F test

Simultaneously tests multiple (linear) hypotheses about the parameters. It makes use of an unrestricted model and a restricted model:

- Unrestricted model - the model on which we want to test the hypothesis.
- Restricted model - the model on which the hypothesis that we want to test has been imposed.

Then, looking at the errors, there are:

- $SSR_{UR}$ - the SSR of the unrestricted model.
- $SSR_R$ - the SSR of the restricted model.

Under H0: $F = \dfrac{SSR_R - SSR_{UR}}{SSR_{UR}} \cdot \dfrac{n - k - 1}{q} \sim F_{q,n-k-1}$

where k is the number of parameters of the unrestricted model and q is the number of linear hypotheses tested.

If F is greater than the critical value of $F_{q,n-k-1}$, there is evidence to reject H0.

Global significance test - tests whether all the parameters associated with the x's are simultaneously equal to zero.

$H_0: \beta_1 = \beta_2 = \cdots = \beta_k = 0$
$H_1: \beta_1 \neq 0$ and/or $\beta_2 \neq 0$ ... and/or $\beta_k \neq 0$

In this case, we can simplify the formula for the F statistic.

Under H0: $F = \dfrac{R^2}{1 - R^2} \cdot \dfrac{n - k - 1}{k} \sim F_{k,n-k-1}$

If F is greater than the critical value of $F_{k,n-k-1}$, there is evidence to reject H0.

Confidence intervals

The confidence intervals at the (1 − α) confidence level can be calculated as:

$\hat{\beta}_j \mp t_{n-k-1,\alpha/2} \cdot se(\hat{\beta}_j)$

Dummy variables

Dummy (or binary) variables are used for qualitative information like sex, marital status, country, etc.

- A dummy takes the value 1 in a given category and 0 in the rest.
- Dummies are used to analyze and model structural changes in the model parameters.

If a qualitative variable has m categories, we only have to include (m − 1) dummy variables.

Structural change

Structural change refers to changes in the values of the parameters of the econometric model produced by the effect of different sub-populations. Structural change can be included in the model through dummy variables.

The location of the dummy variables (D) matters:

- On the intercept (additive effect) - represents the mean difference between the values produced by the structural change.
  $y = \beta_0 + \delta_1 D + \beta_1 x_1 + u$
- On the slope (multiplicative effect) - represents the effect (slope) difference between the values produced by the structural change.
  $y = \beta_0 + \beta_1 x_1 + \delta_1 D \cdot x_1 + u$

Chow's structural test - used when we want to analyze the existence of structural changes in all the model parameters. It is a particular expression of the F test, where the null hypothesis is: H0: No structural change (all δ = 0).

Changes of scale

Changes in the measurement units of the variables:

- In the endogenous variable, $y^* = y \cdot \lambda$ - affects all model parameters, $\beta_j^* = \beta_j \cdot \lambda, \ \forall j = 0, 1, \ldots, k$
- In an exogenous variable, $x_j^* = x_j \cdot \lambda$ - only affects the parameter linked to that exogenous variable, $\beta_j^* = \beta_j / \lambda$
- Same scale change on endogenous and exogenous - only affects the intercept, $\beta_0^* = \beta_0 \cdot \lambda$

Changes of origin

Changes in the measurement origin of the variables (endogenous or exogenous), $y^* = y + \lambda$ - only affect the model's intercept, $\beta_0^* = \beta_0 + \lambda$
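The R-squared, t, F and confidence-interval formulas can be combined in one small calculation for a simple regression (k = 1). This is a minimal sketch, not a full inference routine: the data are invented, the function name `inference_simple` is hypothetical, and the critical value 3.182 (two-sided 5% level, 3 degrees of freedom) is supplied by hand because the standard library has no t distribution.

```python
from math import sqrt

def inference_simple(x, y, t_crit):
    """R-squared, t and F statistics, and a confidence interval for the slope."""
    n, k = len(x), 1
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / s_xx
    b0 = y_bar - b1 * x_bar
    ssr = sum((yi - b0 - b1 * xi) ** 2 for xi, yi in zip(x, y))
    sst = sum((yi - y_bar) ** 2 for yi in y)
    r2 = 1 - ssr / sst
    r2_adj = 1 - (n - 1) / (n - k - 1) * (1 - r2)
    sigma2_hat = ssr / (n - k - 1)
    # For one regressor, the slope's diagonal entry of (X'X)^-1 is 1 / s_xx.
    se_b1 = sqrt(sigma2_hat / s_xx)
    t_stat = b1 / se_b1                       # H0: beta_1 = 0
    f_stat = r2 / (1 - r2) * (n - k - 1) / k  # global significance
    ci = (b1 - t_crit * se_b1, b1 + t_crit * se_b1)
    return {"b1": b1, "r2": r2, "r2_adj": r2_adj,
            "t_stat": t_stat, "f_stat": f_stat, "ci": ci}

# Hypothetical sample data; n - k - 1 = 3 degrees of freedom
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
res = inference_simple(x, y, t_crit=3.182)
print(res)
print("reject H0: beta_1 = 0 ->", abs(res["t_stat"]) > 3.182)
```

With a single regressor the global F statistic equals the square of the slope's t statistic, which gives a quick consistency check on the two formulas.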

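The effect of changes of scale can be verified by refitting the same simple regression on rescaled data. A minimal sketch, assuming invented data and λ = 100; the helper `fit` is hypothetical. Note that rescaling the regressor by λ divides its coefficient by λ, since y = β0 + (β1/λ)(λx) + u.

```python
def fit(x, y):
    """Return (b0, b1) from a simple OLS regression of y on x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    b1 = s_xy / s_xx
    return y_bar - b1 * x_bar, b1

lam = 100.0  # assumed scale factor, e.g. a change of measurement units
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
x_scaled = [lam * v for v in x]
y_scaled = [lam * v for v in y]

b0, b1 = fit(x, y)                      # original units
b0_y, b1_y = fit(x, y_scaled)           # y rescaled: both multiplied by lam
b0_x, b1_x = fit(x_scaled, y)           # x rescaled: slope divided by lam
b0_xy, b1_xy = fit(x_scaled, y_scaled)  # both rescaled: only intercept changes

print("original  ", b0, b1)
print("y * lam   ", b0_y, b1_y)
print("x * lam   ", b0_x, b1_x)
print("both * lam", b0_xy, b1_xy)
```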

Multicollinearity

- Perfect multicollinearity - there are independent variables that are constant and/or there is an exact linear relation between independent variables. It breaks the third (3) econometric model assumption.
- Approximate multicollinearity - there are independent variables that are approximately constant and/or there is an approximately linear relation between independent variables. It does not break any econometric model assumption, but has an effect on OLS.

Consequences

- Perfect multicollinearity - the equation system of OLS cannot be solved due to infinite solutions.
- Approximate multicollinearity
  - Small sample variations can induce big variations in the OLS estimations.
  - The variance of the OLS estimators of the collinear x's increases, so inference on the parameters is affected. The estimation of the parameter is very imprecise (big confidence interval).

Detection

- Correlation analysis - look for high correlations between independent variables, |r| > 0.7.
- Variance Inflation Factor (VIF) - indicates the increase of $\mathrm{Var}(\hat{\beta}_j)$ because of the multicollinearity.
  $VIF(\hat{\beta}_j) = \dfrac{1}{1 - R_j^2}$
  where $R_j^2$ denotes the R-squared from a regression between $x_j$ and all the other x's.
  - Values between 4 and 10 suggest that it is advisable to analyze in more depth whether there might be multicollinearity problems.
  - Values bigger than 10 indicate that there are multicollinearity problems.

One typical characteristic of multicollinearity is that the regression coefficients of the model aren't individually different from zero (due to high variances), but jointly they are different from zero.

Correction

- Delete one of the collinear variables.
- Perform factor analysis (or any other dimension reduction technique) on the collinear variables.
- Interpret coefficients with multicollinearity jointly.

Heteroscedasticity

The errors $u_i$ of the population regression function do not have the same variance $\sigma_u^2$:

$\mathrm{Var}(u \mid x_1, \ldots, x_k) = \mathrm{Var}(u) \neq \sigma_u^2$

It breaks the fifth (5) econometric model assumption.

Consequences

- OLS estimators are still unbiased.
- OLS estimators are still consistent.
- OLS is not efficient anymore, but is still a LUE (Linear Unbiased Estimator).
- Variance estimations of the estimators are biased: the construction of confidence intervals and hypothesis testing are not reliable.

Detection

- Graphs - look for scatter patterns on x vs. u or x vs. y plots.
  [Figure: example scatter plots of u against x and of y against x.]
- Formal tests - White, Bartlett, Breusch-Pagan, etc. Commonly, the null hypothesis is H0: Homoscedasticity.

Correction

- Use OLS with a variance-covariance matrix estimator robust to heteroscedasticity (HC), for example, the one proposed by White.
- If the variance structure is known, make use of Weighted Least Squares (WLS) or Generalized Least Squares (GLS):
  - Supposing that $\mathrm{Var}(u) = \sigma_u^2 \cdot x_i$, divide the model variables by the square root of $x_i$ and apply OLS.
  - Supposing that $\mathrm{Var}(u) = \sigma_u^2 \cdot x_i^2$, divide the model variables by $x_i$ (the square root of $x_i^2$) and apply OLS.
- If the variance structure is not known, make use of Feasible Weighted Least Squares (FWLS), which estimates a possible variance, divides the model variables by its square root and then applies OLS.
- Make a new model specification, for example, a logarithmic transformation (lower variance).

Auto-correlation

The error of any observation, $u_t$, is correlated with the error of any other observation. The observations are not independent.

$\mathrm{Corr}(u_t, u_s \mid x_1, \ldots, x_k) = \mathrm{Corr}(u_t, u_s) \neq 0, \ \forall t \neq s$

The "natural" context of this phenomenon is time series. It breaks the sixth (6) econometric model assumption.

Consequences

- OLS estimators are still unbiased.
- OLS estimators are still consistent.
- OLS is not efficient anymore, but is still a LUE (Linear Unbiased Estimator).
- Variance estimations of the estimators are biased: the construction of confidence intervals and hypothesis testing are not reliable.

Detection

- Graphs - look for scatter patterns on $u_{t-1}$ vs. $u_t$ or make use of a correlogram.
  [Figure: three scatter plots of u_t against u_{t-1}, titled Ac., Ac.(+) and Ac.(-).]
- Formal tests - Durbin-Watson, Breusch-Godfrey, etc. Commonly, the null hypothesis is H0: No auto-correlation.

Correction

- Use OLS with a variance-covariance matrix estimator robust to heteroscedasticity and auto-correlation (HAC), for example, the one proposed by Newey-West.
- Use Generalized Least Squares. Supposing $y_t = \beta_0 + \beta_1 x_t + u_t$, with $u_t = \rho u_{t-1} + \varepsilon_t$, where $|\rho| < 1$ and $\varepsilon_t$ is white noise:
  - If ρ is known, create a quasi-differenced model whose error term is white noise and estimate it by OLS.
  - If ρ is not known, estimate it by, for example, the Cochrane-Orcutt method, create a quasi-differenced model whose error term is white noise and estimate it by OLS.
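The Variance Inflation Factor is easy to compute by hand in the two-regressor case, because the R-squared from regressing one regressor on the other equals their squared simple correlation. The sketch below relies on that simplification; with more than two regressors, R_j² would need a full auxiliary regression instead. The data and the function name `vif_two_regressors` are invented for illustration.

```python
def vif_two_regressors(x1, x2):
    """VIF = 1 / (1 - R_j^2), where R_j^2 is the squared correlation of x1 and x2."""
    n = len(x1)
    m1 = sum(x1) / n
    m2 = sum(x2) / n
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    r2_j = s12 ** 2 / (s11 * s22)
    return 1.0 / (1.0 - r2_j)

# Hypothetical, nearly collinear regressors
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
x2 = [1.1, 1.9, 3.2, 3.8, 5.1, 6.0]
vif_collinear = vif_two_regressors(x1, x2)

# Hypothetical, exactly uncorrelated regressors
z1 = [1.0, 2.0, 3.0, 4.0]
z2 = [1.0, -1.0, -1.0, 1.0]
vif_orthogonal = vif_two_regressors(z1, z2)

print(vif_collinear, vif_orthogonal)
```

The first value is far above the threshold of 10, while the second equals 1, the minimum possible VIF (no variance inflation).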

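For the AR(1) error structure u_t = ρu_{t−1} + ε_t, quasi-differencing means estimating y_t − ρy_{t−1} = β0(1 − ρ) + β1(x_t − ρx_{t−1}) + ε_t, whose error term is white noise. The sketch below applies that transformation to a constructed error series and also computes the Durbin-Watson statistic, Σ(û_t − û_{t−1})² / Σû_t², which is the usual form of that test statistic but is not spelled out in the cheat sheet. The values of ρ and ε are invented and the function names are hypothetical.

```python
def durbin_watson(resid):
    """Durbin-Watson statistic: near 2 suggests no first-order auto-correlation."""
    num = sum((resid[t] - resid[t - 1]) ** 2 for t in range(1, len(resid)))
    den = sum(e ** 2 for e in resid)
    return num / den

def quasi_difference(series, rho):
    """Return series[t] - rho * series[t-1] for t = 1, ..., T-1."""
    return [series[t] - rho * series[t - 1] for t in range(1, len(series))]

# Assumed AR(1) parameter and innovations (illustrative values only)
rho = 0.8
eps = [0.5, -0.3, 0.2, -0.4, 0.1, 0.3, -0.2, 0.4]

# Build u_t = rho * u_{t-1} + eps_t, starting from u_0 = eps_0
u = [eps[0]]
for e in eps[1:]:
    u.append(rho * u[-1] + e)

# Quasi-differencing the errors recovers the innovations
recovered = quasi_difference(u, rho)

print("DW of AR(1) errors:      ", durbin_watson(u))
print("DW of recovered eps:     ", durbin_watson(recovered))
```

In practice the same `quasi_difference` transformation would be applied to y_t and x_t (with ρ known or estimated) before running OLS on the transformed variables.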
