Time Series Forecasting Final Report


Time Series Forecasting

Tejas Srivatsav, Carnegie Mellon University, Pittsburgh, PA, tsrivats@andrew.cmu.edu
Rachana Narayanan, Carnegie Mellon University, Pittsburgh, PA, rmuralin@andrew.cmu.edu
Vamsi Ratnakaram, Carnegie Mellon University, Pittsburgh, PA, vratnaka@andrew.cmu.edu
Gore Kao, Carnegie Mellon University, Pittsburgh, PA, gorek@andrew.cmu.edu

1. Introduction

Time series analysis has long played a major role in the financial industry, in areas such as pricing, asset management, and risk management. Time series forecasting is a technique for predicting future events by analyzing historical time-stamped data, based on the assumption that future trends will resemble past trends. This involves fitting models on historical data to predict future values. The aim of forecasting time series data is therefore to understand how a sequence of observations will continue in the future. In this work, we specifically focus on predicting future stock prices given established historical data.

Predicting future stock prices involves analyzing a few different components of the time series data. These include, but are not limited to:

• Trend - The upward or downward movement of the data over the time span. This can be linear or non-linear, and we can look at both global and local trends.
• Seasonality - The repetitive fluctuation around the trend that occurs within a calendar year at fixed intervals or frequencies.
• Cycles - Cyclical fluctuations that occur due to macroeconomic factors such as recessions. Unlike the fixed period of seasonality, the periodicity of cyclical fluctuations is not fixed.
• Irregularity - Also called white noise, this is the uncorrelated random component of the time series data.

The ultimate goal in processing these components is to be able to make informed, strategic, and accurate decisions on stocks. Currently, time series problems are solved using conventional statistics (e.g. ARIMA) and machine learning models, including recurrent neural networks (RNNs) and support vector machines (SVMs), to gain insights into the trend of future stock prices.

2. Problem Statement

Forecasting stock prices is not always an exact prediction, and the reliability of forecasts can vary wildly. Time series data is also complex and may exhibit subtle trends. In addition, real-time stock prices can exhibit extreme volatility from day-to-day trades. Therefore, we are interested in fast computation and low error rates when making short-term predictions of the trend of a stock price. We thus want to explore quantum alternatives to classical methods.

3. Related Works

In [1], we see the use of parameterised quantum circuits (PQCs) in which forward propagation is fully quantum and back propagation is classical. This work found that PQCs perform similarly to or better than classical BiLSTM networks on financial time series forecasting, with significantly less training time [1]. It applies the implementation from Quantum Long Short-Term Memory [2], which replaces the classical networks within a typical LSTM cell with a PQC. This PQC architecture contains 3 components:

• Data Encoding Layer - The input vector is transformed into rotation angles corresponding to qubit rotations.
• Variational Layer - Parameterized gate operations are applied in the quantum domain.
• Quantum Measurement Layer - The measured expectation values are used to update the model and keep track of the desired cell state elements.

Emmanoulopoulos and Dimoska note that within the classical domain, transformer-based models have better predictive power for time series; however, there remains no robust implementation of quantum transformers.

Sipio proposes using a similar approach to devise a Quantum-Enhanced Transformer by performing the linear transformations for multi-headed attention in the quantum realm rather than the classical realm [3]. This mixed architecture is used to perform sentiment analysis on the IMDB dataset; however, we expect that it could work just as well for financial time series forecasting. This is what we aim to explore.
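The trend, seasonality, and irregular components listed in the introduction can be made concrete with a small synthetic example. The sketch below is illustrative only and is not part of our pipeline: it builds a hypothetical series from a linear trend, a fixed-period sine "seasonality", and Gaussian white noise, then recovers the trend with a centered moving average whose window equals the seasonal period (one classical decomposition step). Cycles are left out because their period is not fixed; all numbers are made up.

```python
import numpy as np

def synthetic_series(n=365, period=31, seed=0):
    """Hypothetical series: linear trend + fixed-period seasonality + white noise."""
    rng = np.random.default_rng(seed)
    t = np.arange(n)
    trend = 0.05 * t + 10.0
    seasonal = 2.0 * np.sin(2 * np.pi * t / period)
    noise = rng.normal(0.0, 0.3, size=n)
    return t, trend, seasonal, noise, trend + seasonal + noise

def centered_moving_average(x, window):
    """Centered moving average (odd window); NaN where the window does not fit."""
    out = np.full(len(x), np.nan)
    half = window // 2
    kernel = np.ones(window) / window
    valid = np.convolve(x, kernel, mode="valid")  # length len(x) - window + 1
    out[half:half + len(valid)] = valid
    return out

t, trend, seasonal, noise, y = synthetic_series()
# A window of exactly one seasonal period averages the sine term to zero,
# leaving the trend plus averaged-down noise.
trend_hat = centered_moving_average(y, window=31)
residual = y - trend_hat  # seasonality + irregular component
mask = ~np.isnan(trend_hat)
print("max trend error:", np.max(np.abs(trend_hat[mask] - trend[mask])))
```

Real price series rarely have such a clean fixed period, which is one reason the models below learn these components implicitly rather than decomposing the data first.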

4. Methods

4.1. Classical LSTM

Figure 1. LSTM cell.

Classical LSTM (Long Short-Term Memory) networks are a kind of recurrent neural network (RNN) for modelling sequences and temporally dependent data. Unlike plain RNNs, they can learn dependencies across longer ranges of a sequence. Their applications include machine translation and other NLP models. Compared to a regular RNN, an LSTM partially solves the vanishing gradient problem.

LSTMs are composed of memory cells connected through layers of recurrent connections. Memory cells are responsible for remembering information over long periods of time. Each memory cell in an LSTM contains an input gate, an output gate, and a forget gate. The input gate determines which information is allowed to enter the memory cell, the output gate determines which information is allowed to leave the memory cell, and the forget gate determines which information is forgotten.

Each LSTM cell at time step t also carries an additional cell state. This allows gradients to flow largely unchanged and can be seen as the memory of the LSTM cell. This makes the LSTM more stable to train and allows it to predict more accurately. These successes further inspired applications of RNNs to learning from quantum experimental data, which is also sequential.

We consider the classical LSTM as a baseline for comparison with the quantum LSTM, as this classical approach is widely used in the literature for time series forecasting tasks.

4.2. Classical Transformers

Transformers provide a unique advantage over traditional LSTMs in two main respects. First, transformers use multi-headed attention, allowing the model to build various semantics using different parameters and thereby create stronger models [4]. Second, transformers are innately parallel: since they do not have the sequential dependencies of LSTMs, we can run computations at a much greater rate.

Figure 2. Classical Transformer Architecture

Figure 2 represents a typical Transformer architecture. First, the input is embedded with a positional encoding in order to capture the sequential relationships in the data. This encoding allows the model to process all positions of the sequence at once rather than stepping through them in order.

Next, the input is processed by the encoder-decoder structure. The block on the left side represents the model's encoder layer, and the block on the right, up until the last linear layer, represents its decoder layer. The encoder consists of 3 layers, each of which has 2 sublayers. The first sublayer implements multi-head self-attention, and the other implements a fully connected feed-forward neural network with two dense layers and a ReLU activation in between. Multi-head self-attention linearly projects the input sequence in multiple ways so as to extract information about the elements' relationships. In our case we used 3 heads, so the input is projected in 3 different ways, each head using its own query, key, and value matrices W_Q, W_K, and W_V.

The corresponding decoder uses the positional embedding to reintroduce the sequential structure and outputs predictions one by one based on preceding outputs. Finally, the output of the decoder layer is fed into a linear layer and then a softmax activation to generate the final predictions.

Classically, GPUs or other highly parallel compute units are often used to take advantage of this architecture's lack of sequential dependence. Quantum computers are another potentially well-suited compute source.

4.3. Quantum LSTM

Following the papers above, we use an RNN built from variational quantum circuits (VQCs), a kind of quantum circuit whose tunable gate parameters are trained classically.
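The idea of a VQC whose gate parameters are trained classically can be illustrated with a one-qubit toy. This is a hedged sketch, not our training code: it simulates the circuit with plain NumPy matrices, encodes an input x as an RY rotation, applies one trainable RY(theta), and measures the expectation value of Z. Gradients come from the parameter-shift rule, which is one common way to differentiate such circuits; the works we build on may compute gradients differently (for example by backpropagating through a simulator). The training data and the target offset 0.7 are made up.

```python
import numpy as np

Z = np.diag([1.0, -1.0])

def ry(angle):
    """Single-qubit RY rotation matrix."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def circuit(x, theta):
    """Encoding rotation RY(x), trainable rotation RY(theta), then measure <Z>."""
    state = np.array([1.0, 0.0])           # start in |0>
    state = ry(theta) @ ry(x) @ state      # encoding layer, then variational layer
    return float(state @ Z @ state)        # amplitudes are real, so no conjugate needed

def param_shift_grad(x, theta):
    """Parameter-shift rule for a gate generated by a Pauli operator."""
    shift = np.pi / 2
    return 0.5 * (circuit(x, theta + shift) - circuit(x, theta - shift))

# Hypothetical training data: targets produced by an unknown offset of 0.7.
xs = np.linspace(-1.0, 1.0, 20)
ys = np.cos(xs + 0.7)

theta, lr = 0.0, 0.5
for step in range(200):
    # Classical outer loop: gradient of the MSE loss via the chain rule,
    # where d<Z>/dtheta is estimated from two shifted circuit evaluations.
    grad = 0.0
    for x, y in zip(xs, ys):
        pred = circuit(x, theta)
        grad += 2 * (pred - y) * param_shift_grad(x, theta) / len(xs)
    theta -= lr * grad
print(round(theta, 3))
```

The circuit itself only ever produces expectation values; everything that updates theta (loss, gradient averaging, the learning rate step) runs on the classical side, which is the division of labour the hybrid approach relies on.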

We use a hybrid quantum-classical approach, similar to previous works [5], optimizing with tuned repetitions. We aim to exploit quantum entanglement and quantum parallelism, which allows quantum computers to perform multiple calculations simultaneously. The LSTM's efficiency and trainability may be improved by replacing some of its layers with variational quantum layers, making it a hybrid quantum-classical model.

In traditional LSTMs, the input data is processed using a series of fully connected layers and gates, which can be computationally expensive. In contrast, Quantum LSTMs use quantum circuits to process the input data, which can potentially offer significant performance improvements compared to traditional LSTMs.

However, it is important to note that Quantum LSTMs are still a relatively new and experimental technology, and there is ongoing research into the best ways to implement and use them.

Figure 3. QLSTM Cell. Each W represents a variational quantum circuit (VQC) layer.

To implement QLSTM, we replace the key layers of the LSTM with variational quantum layers enabled by PennyLane.

4.4. Quantum Transformers

The design of our quantum transformer was inspired by the classical transformer architecture. We approached this problem by replacing certain classical dense (linear) layers with quantum layers. Specifically, we targeted the attention layers in the classical transformer.

Figure 4. Multi-Headed Attention Layers

Figure 4 shows the classical attention architecture. As previously discussed, the attention architecture is what makes the transformer unique. By encoding positional data within the series itself, we no longer need to process data sequentially and can instead process time series data in parallel. The Q, K, and V layers in Figure 4 are what build these positional associations within the data and are able to identify trends.

Recognizing that these layers are the core of the classical transformer, we made them the target of our quantum optimizations. To do this, we used PennyLane's library, which provides primitives for building quantum layers from defined quantum circuits [6]. In Figure 5 we show how we use these primitives to build a quantum layer.

Figure 5. Building and Implementing Quantum Layers

First, we define our quantum device (in this case PennyLane's local qubit simulator). Then we define our quantum circuit with three key components:

• AngleEmbedding - Converts inputs into quantum gate rotations
• BasicEntanglerLayers - Entangles the rotated qubits with CNOT gates
• qml.expval(PauliZ) - Returns quantum measurements (expectation values)

After this, we wrap the circuit in a PennyLane QNode and convert it into a TensorFlow Keras layer [6]. After this conversion, the quantum layer can be treated as any other TensorFlow layer and used as a building block for larger models. In this case, we gave each quantum layer 8 qubits, which limits our sequence length to 8 as well. Recall that in the transformer architecture we process data in parallel. In theory, this speeds up execution on highly parallel machines like GPUs, which can run operations across hundreds of cores. Unfortunately, this doesn't translate perfectly to quantum computers. While we still compute in parallel, size is a large factor when attempting to use modern quantum devices, which limits the amount of parallelism we can take advantage of. This makes the number of qubits the main limiting factor. Considering we have 3 quantum layers (Q, K, and V) per attention head, 8 qubits per layer, and 2 attention heads, this amounts to a total of 48 qubits. With this value we are already hitting the limits of many of today's quantum computers, but we still wanted to explore the performance of this novel architecture.
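To make the circuit of Figure 5 concrete without depending on a particular PennyLane or TensorFlow version, the sketch below re-implements the same three steps as a small NumPy state-vector simulator. It is an illustration, not our model code, and it assumes PennyLane's defaults as we understand them: AngleEmbedding applies one RX rotation per input feature, and BasicEntanglerLayers applies one RX per wire followed by a ring of CNOTs (a single CNOT for two wires). In PennyLane itself, turning such a QNode into a Keras layer is typically done with qml.qnn.KerasLayer. The input and weight values are hypothetical.

```python
import numpy as np

def rx(angle):
    """Single-qubit RX rotation."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -1j * s], [-1j * s, c]])

def apply_1q(state, gate, wire):
    """Apply a 2x2 gate to one wire of an n-qubit state tensor of shape (2,)*n."""
    state = np.tensordot(gate, state, axes=([1], [wire]))  # gate output becomes axis 0
    return np.moveaxis(state, 0, wire)

def apply_cnot(state, control, target):
    """Flip the target qubit on the slice where the control qubit is |1>."""
    state = state.copy()
    idx = [slice(None)] * state.ndim
    idx[control] = 1
    sub = state[tuple(idx)]
    t = target if target < control else target - 1  # control axis removed from sub
    state[tuple(idx)] = np.flip(sub, axis=t).copy()
    return state

def quantum_layer(inputs, weights):
    """AngleEmbedding(RX) -> BasicEntanglerLayers(RX + CNOT ring) -> <Z_i> per wire."""
    n = len(inputs)
    state = np.zeros((2,) * n, dtype=complex)
    state[(0,) * n] = 1.0                        # |00...0>
    for i, x in enumerate(inputs):               # data encoding
        state = apply_1q(state, rx(x), i)
    if n == 2:
        pairs = [(0, 1)]
    elif n > 2:
        pairs = [(i, (i + 1) % n) for i in range(n)]
    else:
        pairs = []
    for layer in weights:                        # variational layers
        for i, w in enumerate(layer):
            state = apply_1q(state, rx(w), i)
        for c, t in pairs:
            state = apply_cnot(state, c, t)
    probs = np.abs(state) ** 2
    expvals = []
    for i in range(n):                           # <Z_i> = P(qubit i = 0) - P(qubit i = 1)
        p = np.moveaxis(probs, i, 0).reshape(2, -1).sum(axis=1)
        expvals.append(p[0] - p[1])
    return np.array(expvals)

rng = np.random.default_rng(0)
x = rng.uniform(-np.pi, np.pi, size=8)       # 8 features -> 8 qubits (sequence length 8)
w = rng.uniform(0, 2 * np.pi, size=(1, 8))   # one variational layer, hypothetical values
out = quantum_layer(x, w)                    # 8 expectation values in [-1, 1]
print(out)

# Qubit budget from the text: 2 heads x 3 layers (Q, K, V) x 8 qubits per layer.
total_qubits = 2 * 3 * 8
print(total_qubits)
```

Because each quantum layer maps 8 inputs to 8 expectation values, it can stand in for an 8-unit dense projection; the state vector doubles in size with every added qubit, which is one reason simulation time grew so quickly in our experiments.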

5. Dataset choice We use Mean Squared Error (MSE) for the loss function

and the ADAM optimizer with a learning rate of 0.01. The

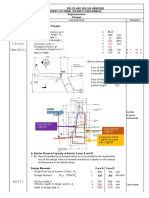

We used S&P 500 Index dataset, a multivariate dataset entire model is trained for 100 epochs. We use Root Mean

on stock market data. The dataset comprises data from 1927 Squared Error (RMSE) as the final evaluation metric for

to 2020. We filtered this dataset to look for values from 2010 both the train and test set in the classical LSTM model.

to 2020 because it took extensive time to run the quantum Figure 8 shows the training loss over the number of

methods on datasets larger than this. Figure 6 shows the epochs. The MSE loss starts around 0.42 and quickly con-

raw data plotted. We split our dataset to a 70% train, and verges to about 0.000365. The training RMSE reaches a

30% test ratio. The input parameters to all our models were value of 24.06. Total training time for 100 epochs is about

previous adjusted closing values, and the output parameters 2 minutes.

are new adjusted closing values.

Figure 6. Time series plot of raw data used.

The range of our data from 2010 to 2020 consists of Figure 8. Training MSE loss plot over 100 epochs.

2828 total data points. Prior to input to the models, all data

points are normalized between -1 and 1. The final output prediction results on the test set are

shown in Figure 9 with testing set RMSE of 137.24.

6. Experiments and Results

6.1. Classical LSTM

A small, simple model is used for the classical LSTM

to model the time series data. The architecture consists of 2

layers: the LSTM layer followed by the output Linear layer.

A hidden dimension size of 32 is used for the LSTM layer.

The overall model consists of 12928 trainable parameters in

the LSTM layer and 33 in the Linear layer, totaling 12961

total trainable parameters (shown in Figure 7).

Figure 9. Output prediction (blue) and groundtruth (red) of the S&P 500

adjusted close price test set using classical LSTM model.

6.2. Classical Transformer

Through experimentation we found that a small trans-

former model with a short sequence length had the most

predictive power on the test data. Specifically, our architec-

ture consists of 1 time embedding layer, 3 encoder layers, 3

decoder layers, and a linear output layer. The time embed-

ding layer was used to encode the dates as a combination of

Figure 7. Classical LSTM model parameters. a periodic (based on sine function) and linear component.

Each encoder layer has a multi-attention layer with 3 heads

A sequence length of 100 data points is used as input to and a feed-forward dimension with 2 dense layers and a

the model at each iteration. For a sequence length of 100, ReLU activation. A sequence length of 4 worked best, and

this includes 99 steps to unroll the entire LSTM and 1 last a dropout of 0.1 was used to prevent overparameterization.

step for the output prediction. This process models a many- The entire model consists of 345 trainable parameters in the

to-one problem for time series forecasting as the input is a encoder layers and 746 trainable parameters in total.

past sequence and the output is a single numerical prediction We use Mean Squared Error (MSE) for the loss function

of the adjusted close price. and the ADAM optimizer with a learning rate of 0.001. The
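The time embedding described above for the classical transformer combines a linear term with sine-based periodic terms. The report does not spell out its exact formula, so the sketch below assumes a Time2Vec-style layer: one linear feature w0 * tau + b0 plus k features sin(w_j * tau + b_j). The parameter values are hypothetical; in a trained model they would be learned weights.

```python
import numpy as np

def time_embedding(tau, w0, b0, w, b):
    """Time2Vec-style embedding (assumed formulation, not confirmed by the report).

    tau: array of shape (seq_len,) holding time indices or normalized dates.
    Returns an array of shape (seq_len, 1 + k): one linear feature, then k sine features.
    """
    tau = np.asarray(tau, dtype=float)[:, None]         # (seq_len, 1)
    linear = w0 * tau + b0                              # non-periodic (trend-like) term
    periodic = np.sin(tau * w[None, :] + b[None, :])    # k periodic terms
    return np.concatenate([linear, periodic], axis=1)

# Hypothetical parameters; a real layer would learn w0, b0, w, and b.
rng = np.random.default_rng(0)
k = 3
w0, b0 = 0.5, 0.1
w, b = rng.normal(size=k), rng.normal(size=k)

tau = np.arange(4)  # a sequence length of 4, as used for the classical transformer
emb = time_embedding(tau, w0, b0, w, b)
print(emb.shape)
```

Concatenating such an embedding with the normalized price inputs is one way to let the attention layers see both where a value sits in time and where it sits within a repeating cycle.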

The entire model is trained for 100 epochs so as to compare with the LSTM results; however, it performed best on the validation set after 27 epochs, so that checkpoint is what was ultimately used. As with the LSTM counterpart, we use Root Mean Squared Error (RMSE) as the evaluation metric for the train and test sets in the classical transformer model.

Figure 10 below shows the training and validation loss over the number of epochs. The MSE loss starts around 0.65 and quickly converges to about 0.0010. The training RMSE reaches a value of 39.72. Total training time for 100 epochs is about 6.5 minutes; however, the model converges after only 1.5 minutes.

Figure 10. Training and Validation MSE loss plot over 100 epochs.

The final output prediction results on the test set are shown in Figure 11, with a test set RMSE of 186.64.

Figure 11. Output prediction (blue) and ground truth (red) of the S&P 500 adjusted close price test set using the classical transformer model.

As can be observed, the transformer predicts the test set less accurately than the classical LSTM, contrary to our initial expectations. Even more surprisingly, the transformer takes longer to train for the same number of epochs as the LSTM despite having about 17 times fewer trainable parameters (746 vs. 12961). The architecture is admittedly more complicated than its number of trainable parameters might suggest, relative to the LSTM; however, we thought that parallelization might lead to a speedup in computation. Clearly this was not the case, at least for the chosen hyperparameters. The model tended to overfit the training set when we experimented with a larger network, and it overemphasized earlier inputs when we increased the sequence length. Part of these results might be explained by the size of the dataset and the number of features used, or they may simply be a consequence of the task that we chose.

6.3. Quantum LSTM

With fewer network parameters, the QLSTM can learn faster than the classical LSTM. Our QLSTM setup:

• 2 layers: LSTM and variational layer
• 293 trainable parameters, depending on the number of qubits
• Number of qubits and epochs varied
• Modified from the classical shallow regression LSTM model

We kept a batch size of 4 and a sequence length of 8. We ran up to 100 epochs, varying the number of epochs among 1, 20, 40, and 100. We use a single layer (shallow model) in the interest of time, as training for many epochs took a lot of time. We also kept parameters small for the same reason. Training time was heavily dependent on the number of qubits and epochs.

We also use Mean Squared Error (MSE) for the loss function and the Adam optimizer with a learning rate of 0.01. We use Root Mean Squared Error (RMSE) as the final evaluation metric for both the train and test set in this model as well.

Figure 12. Trained with 3 qubits and 20 epochs.

Figure 13. 4 qubits, 20 epochs.
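The preprocessing from Section 5 and the RMSE metric used throughout Section 6 can be sketched as follows. This is a hedged illustration under stated assumptions: the prices are a synthetic random walk standing in for the 2828 S&P 500 points, the scaler is fit on the training split only (the report does not say which split it was fit on), and the "model" is a naive last-value predictor used only to exercise the metric. The names fit_minmax, make_windows, and rmse are hypothetical helpers, not functions from our code.

```python
import numpy as np

def fit_minmax(train, lo=-1.0, hi=1.0):
    """Return scale/inverse functions mapping the training range onto [lo, hi]."""
    tmin, tmax = float(np.min(train)), float(np.max(train))
    span = tmax - tmin

    def scale(x):
        return lo + (np.asarray(x, dtype=float) - tmin) * (hi - lo) / span

    def inverse(z):
        return tmin + (np.asarray(z, dtype=float) - lo) * span / (hi - lo)

    return scale, inverse

def make_windows(series, seq_len):
    """Many-to-one samples: seq_len past values -> the next value."""
    X = np.stack([series[i:i + seq_len] for i in range(len(series) - seq_len)])
    y = series[seq_len:]
    return X, y

def rmse(y_true, y_pred):
    return float(np.sqrt(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2)))

# Hypothetical stand-in for 2828 daily adjusted closes (not the real S&P 500 data).
rng = np.random.default_rng(0)
prices = 1100 + np.cumsum(rng.normal(0.6, 12.0, size=2828))

split = int(0.7 * len(prices))        # chronological 70/30 split, no shuffling
train, test = prices[:split], prices[split:]
scale, inverse = fit_minmax(train)    # test values may fall outside [-1, 1]

X_test, y_test = make_windows(scale(test), seq_len=8)
naive_pred = X_test[:, -1]            # placeholder model: repeat the last value

err_scaled = rmse(y_test, naive_pred)                   # normalized units
err_price = rmse(inverse(y_test), inverse(naive_pred))  # index points
print(err_scaled, err_price)
```

Because the inverse transform is affine, an RMSE in index points equals the normalized RMSE multiplied by half the training range. This is why very small normalized MSE losses, such as those reported during training, can coexist with RMSE values in the tens or hundreds of index points.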

Figure 14. 4 qubits, 40 epochs.

Figure 15. 4 qubits, 100 epochs.

Figure 16. QLSTM vs LSTM train loss.

We see that 3 qubits generally give negligible results. Increasing the number of epochs improves performance for both the classical LSTM and the quantum LSTMs. We also see that increasing the number of qubits reduces the deviation. Experimenting with 5 qubits took approximately 1 hour per epoch, which made generating graphs for 100 epochs challenging. Despite their divergence, the QLSTMs manage to capture parts of the variation. Under the constraint of fewer parameters, we see that the QLSTM learns significantly more information in less time. It also learns local features well. Large-scale, time-dependent modelling of time series data has been difficult given the performance limitations of quantum simulation software within the current ML framework. But this is a proof of concept that lends itself to more experimentation given more compute power.

6.4. Quantum Transformer

The final model structure is summarized in Figure 17. For the sake of training time, we decided to use only one transformer layer in the quantum implementation. According to the model summary, this model had a total of 243 trainable parameters.

Figure 17. Quantum Transformer Model Structure

When training this model we used a batch size of 4, a sequence length of 8, and 2 attention heads. These were the only parameters we attempted to tune. On average, each training epoch took about 3-4 hours. This was a limiting factor in how much we could tune and optimize our parameters (discussed more in the next section), so we decided to stick with 5 training epochs.

Loss Metrics:

• Training Data:
- Loss: 0.0010
- MAE: 0.0250
- Loss: 0.0010
• Validation Data:
- Loss: 0.0105
- MAE: 0.0856
- Loss: 23.6646
• Test Data:
- Loss: 0.1162
- MAE: 0.3089
- Loss: 88.1149

Figure 18. Quantum Transformer Results

Figure 18 shows promising results on the train and validation data, but the model falls apart when trying to model the test set. Overall, when we compare this model with the classical implementation, we see that we actually suffer a lot in terms of performance. There are a few reasons why we believe this is the case. First, due to the size of each transformer layer, we were only able to use 1 transformer layer as opposed to 3 in the classical model. Second, we were faced with the problem of time. As we were limited to feasibly training only around 5 epochs, we believe that the model wasn't able to learn enough. We have reason to believe that with more epochs, the quantum model may be able to catch up to the classical model: when tuning our classical model, we found that at a low number of epochs we would see flat lines in the test data, similar to the quantum transformer's output. Unfortunately, due to the time constraint and the challenges associated with getting the quantum model to even build and train, we were unable to try more epochs.

7. Limitations and Future Works

With this project we faced a few main challenges. One of our primary constraints was time. Initially, we expected training to take a trivial amount of time; however, we realized this was far from the case. Epochs took a long time to train, especially in simulation. Our Quantum LSTM, even with its relatively small number of qubits, took on the order of minutes per epoch. This only got worse as the number of qubits increased. With our transformer architecture, epochs were on the order of hours.

We initially thought that switching from local simulators to AWS simulators or actual QPUs would help our training times, but we were met by a different challenge here: our code wouldn't compile on AWS! For this project we intended to use PennyLane's AWS plug-in, which promised compatibility with any of AWS's offered devices and PennyLane's library primitives. When we changed our quantum device to any of the AWS offerings, our quantum layers began outputting empty-shaped tensors (i.e. nothing). We also attempted to compile PennyLane's quantum layer example on AWS and found similar issues. We had to concede that the AWS plug-in might not support some of the primitives that we were trying to use. As a result, we were stuck optimizing our models in simulation, which took a very long time (although we anticipate that, due to polling delays, time would have been a constraint with AWS's quantum devices as well).

Some of our peers also faced issues with PennyLane's library and instead switched to IBM Qiskit to build their models. We would also suggest that any future attempts at this project try IBM Qiskit to build and train these models.

The previously mentioned quantum constraints also limited our choice of training data. From our survey of other attempts to use transformer architectures for financial time series modeling, it is quite clear that their predictive power is very poor unless many different time series are incorporated. Under ideal conditions we would have used the entire S&P 500 dataset, which dates back to 1927. We also could have used other features from the dataset, such as the open price, the daily high, the daily low, and volume, to build a more informed model. We speculate that incorporating additional stocks or indices might further improve our model and create the right environment for the transformer architecture to succeed.

References

[1] D. Emmanoulopoulos and S. Dimoska, “Quantum machine learning in finance: Time series forecasting,” arXiv preprint arXiv:2202.00599, 2022.
[2] S. Y.-C. Chen, S. Yoo, and Y.-L. L. Fang, “Quantum long short-term memory,” in ICASSP 2022 - 2022 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). IEEE, 2022, pp. 8622–8626.
[3] R. D. Sipio, “Toward a quantum transformer,” Jan 2021. [Online]. Available: https://towardsdatascience.com/toward-a-quantum-transformer-a51566ed42c2
[4] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in neural information processing systems, vol. 30, 2017.
[5] A. Ceschini, A. Rosato, and M. Panella, “Hybrid quantum-classical recurrent neural networks for time series prediction,” in 2022 International Joint Conference on Neural Networks (IJCNN). IEEE, 2022, pp. 1–8.
[6] M. Henderson, S. Shakya, S. Pradhan, and T. Cook, “Quanvolutional neural networks: Powering image recognition with quantum circuits,” 2019. [Online]. Available: https://arxiv.org/abs/1904.04767


- TETPOR LIQUID-Rev 3ADocument4 pagesTETPOR LIQUID-Rev 3AbuattugasmetlitNo ratings yet

- Data Warehosing and Data MiningDocument15 pagesData Warehosing and Data Miningsourabhsaini121214No ratings yet

- H4091-Mm39-Expansion JointDocument15 pagesH4091-Mm39-Expansion JointАндрей РознатовскийNo ratings yet

- Mordaunt Short ms907w User ManualDocument12 pagesMordaunt Short ms907w User ManualiksspotNo ratings yet

- MEA BM SAP Basis Navigation Training MaterialDocument39 pagesMEA BM SAP Basis Navigation Training MaterialabdouNo ratings yet

- EtmpDocument2 pagesEtmpAliNo ratings yet

- OVERHANG Precast-Parapet orDocument63 pagesOVERHANG Precast-Parapet orJem YumenaNo ratings yet

- ReadmeDocument6 pagesReadmenicole valentina malagon cardonaNo ratings yet

- 2022 Full Time Fee Schedule FinalDocument3 pages2022 Full Time Fee Schedule Finalluswazinokukhanya96No ratings yet

- Gala Valve Fire Fighting Catalogue 2018 V#5 - A4 - BDocument7 pagesGala Valve Fire Fighting Catalogue 2018 V#5 - A4 - BChhomNo ratings yet

- Review Paper On Hybrid Solar Wind PowerDocument3 pagesReview Paper On Hybrid Solar Wind PowerIjaz AhmedNo ratings yet

- Linux QuestionnaireDocument31 pagesLinux QuestionnairevinodhNo ratings yet

- Unit Iii Data Analysis and ReportingDocument14 pagesUnit Iii Data Analysis and Reportingjan100% (1)

- MinewolfDocument2 pagesMinewolfDavid Concha AstorgaNo ratings yet

- Install Cm12 Android 5 0 Lollipop On Any Android DeviceDocument21 pagesInstall Cm12 Android 5 0 Lollipop On Any Android Deviceshyamasunder0% (1)

- Senjak's Neon Red CatalogDocument17 pagesSenjak's Neon Red CatalogLazarielNo ratings yet

- Cyclomatic ComplexityDocument19 pagesCyclomatic ComplexityKunal AhireNo ratings yet

- Moot Problem National Moot CourtDocument14 pagesMoot Problem National Moot CourtAditya ShukdevkarNo ratings yet

- Kia Sportage Wheel Size and SpecsDocument1 pageKia Sportage Wheel Size and SpecsEs JunNo ratings yet

- Hot 5Document2 pagesHot 5Robert WilliamsNo ratings yet

- Theory of Automata NotesDocument29 pagesTheory of Automata NotesMuhammad Arslan RasoolNo ratings yet

- Webservices CBS DATA Access Using Jbase SocketDocument11 pagesWebservices CBS DATA Access Using Jbase SocketFin TechnetNo ratings yet

- An EXCEL Tool For Teaching Theis MethodDocument7 pagesAn EXCEL Tool For Teaching Theis MethodRicardo FigueiredoNo ratings yet