BCA III SEM OPERATING SYSTEM CHAPTER-3

UNIT 2 – PROCESS MANAGEMENT

SYLLABUS :

Process Concepts: What is a process? – Process and Program – Process States – Process Control Block – Operations on a process – Co-operating processes – Inter-process communication

Process scheduling: Scheduler – Types of schedulers – Scheduling criteria – Pre-emptive and non-pre-emptive scheduling – Scheduling algorithms (FCFS, SJF, Round-robin, Priority)

Threads: Overview of Threads – Multi-threading model

PROCESS CONCEPTS

WHAT IS A PROCESS?

A process is a program in execution: it performs the actions specified in that program. It can be viewed as the unit of execution in which a program runs. The OS creates, schedules, and terminates processes and allocates the CPU to them. A process created by another process (its parent) is called a child process.

Process operations are controlled with the help of the PCB (Process Control Block). You can think of it as the brain of the process: it contains all the crucial information about the process, such as its process ID, priority, state, and CPU registers.

WHAT IS PROCESS MANAGEMENT?

Process management involves tasks such as creating, scheduling, and terminating processes, and handling deadlocks. A process is a program under execution, and processes are a central part of modern operating systems. The OS must allocate resources that enable processes to share and exchange information. It also protects the resources of each process from other processes and allows synchronization among processes.

It is the job of the OS to manage all the running processes of the system. It does so by performing tasks such as process scheduling and resource allocation.

SURANA COLLEGE COMPUTER SCEINCE DEPT ASHWINI S DIWAKAR

BCA III SEM OPERATING SYSTEM CHAPTER-3

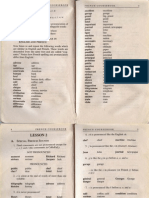

PROCESS CONCEPT [PROCESS ARCHITECTURE]

Process memory is divided into four sections for efficient working. The architecture of a process in memory is shown in the diagram.

Process in memory

➢ Stack: The stack stores temporary data such as function parameters, return addresses, and local variables.

➢ Heap: The heap contains memory that is dynamically allocated during run time.

➢ Data: The data section contains global (and static) variables.

➢ Text: The text section contains the program code. The current activity of the process is represented by the value of the Program Counter and the contents of the CPU registers.

STATES OF A PROCESS

During its execution, a process passes through a number of states. This section describes the states of a process during its lifecycle.


➢ New (Create) – The process is about to be created but has not been created yet. It is the program present in secondary memory that the OS will pick up to create the process.

➢ Ready – After creation (New → Ready), the process enters the ready state, i.e. it is loaded into main memory. The process is ready to run and is waiting to get CPU time for its execution. Processes that are ready for execution are kept in a queue called the ready queue.

➢ Run – The process has been chosen by the CPU scheduler, and its instructions are being executed by one of the available CPU cores.

➢ Blocked or wait – Whenever the process requests I/O, needs input from the user, or needs access to a critical region whose lock is already held, it enters the blocked (wait) state. The process keeps waiting in main memory and does not use the CPU. Once the awaited event (for example, completion of the I/O operation) occurs, the process moves to the ready state.

➢ Terminated or completed – The process has finished execution (or has been killed), and its PCB is deleted.

➢ Suspend ready – A process that was in the ready state but was swapped out of main memory (refer to the Virtual Memory topic) and placed onto external storage by the scheduler is in the suspend ready state. It moves back to the ready state when it is brought into main memory again.

➢ Suspend wait or suspend blocked – Similar to suspend ready, but applies to a process that was blocked (for example, performing an I/O operation) and was moved to secondary memory because of a lack of main memory. When the awaited operation finishes, it may move to the suspend ready state.
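The states above can be sketched as a small transition table. This is a minimal illustrative sketch in Python, not how any particular OS stores process states; the names `State`, `TRANSITIONS`, `move`, and `run_lifecycle` are hypothetical. The Running → Ready transition (preemption, discussed under scheduling) is an assumption added to the transitions listed above.

```python
from enum import Enum


class State(Enum):
    NEW = "new"
    READY = "ready"
    RUNNING = "running"
    WAITING = "waiting"              # blocked
    TERMINATED = "terminated"
    SUSPEND_READY = "suspend ready"
    SUSPEND_WAIT = "suspend wait"


# Legal transitions between the states described above.
TRANSITIONS = {
    State.NEW: {State.READY},
    State.READY: {State.RUNNING, State.SUSPEND_READY},
    # RUNNING -> READY models preemption (an assumption, see the lead-in).
    State.RUNNING: {State.READY, State.WAITING, State.TERMINATED},
    State.WAITING: {State.READY, State.SUSPEND_WAIT},
    State.SUSPEND_READY: {State.READY},
    State.SUSPEND_WAIT: {State.SUSPEND_READY},
    State.TERMINATED: set(),
}


def move(current: State, target: State) -> State:
    """Return the new state, or raise ValueError for an illegal transition."""
    if target not in TRANSITIONS[current]:
        raise ValueError(f"illegal transition: {current.value} -> {target.value}")
    return target


def run_lifecycle(steps):
    """Apply a sequence of transitions starting from NEW; return the final state."""
    state = State.NEW
    for target in steps:
        state = move(state, target)
    return state
```

For example, `run_lifecycle([State.READY, State.RUNNING, State.WAITING, State.READY, State.RUNNING, State.TERMINATED])` ends in `State.TERMINATED`, while `move(State.NEW, State.RUNNING)` raises `ValueError` because a new process must first become ready.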

PROGRAM AND PROCESS

| PROGRAM | PROCESS |
|---|---|
| A program is a set of instructions. | A process is a program in execution. |
| A program is a passive/static entity. | A process is an active/dynamic entity. |
| A program has a longer life span. It is stored on disk until it is deleted. | A process has a limited life span. It is created when execution starts and terminated when execution finishes. |
| A program is stored on a disk in some file. It does not contain any other resource. | A process holds various resources, such as memory, disk, and printer, as per its requirements. |
| A program requires space on a disk to store all its instructions. | A process has a set of memory addresses, called its address space. |
| Longer lifespan | Limited lifespan |


PROCESS CONTROL BLOCKS

PCB stands for Process Control Block. It is a data structure maintained by the operating system for every process. Each PCB is identified by an integer process ID (PID). The PCB stores all the information the OS needs to keep track of the process.

The PCB also stores the contents of the processor registers. These are saved when the process leaves the running state, so that they can be restored when it returns to the running state. The OS updates the information in the PCB as soon as the process makes a state transition.

The PCB keeps track of the following:

1. Process ID or PID – A unique integer ID for each process in any stage of execution.

2. Process State – The state the process is currently in, such as ready, waiting, or terminated.

3. Process Privileges – The special access rights the process has to memory, devices, and other resources.

4. Pointer – A pointer to the parent process.


5. Program Counter – The address of the next instruction to be executed for this process.

6. CPU Registers – The contents of the CPU registers, which are saved when the process leaves the running state so that it can later resume exactly where it stopped.

7. Scheduling Information – Information used by the scheduling algorithm to select processes, such as the process priority and pointers to scheduling queues.

8. Memory Management Information – Information such as the page table, memory limits, and segment table, which the OS needs in order to manage the memory used by the process.

9. Accounting Information – As the name suggests, it contains accounting information such as the CPU time used by the process, execution ID, and time limits.

10. I/O Status Information – The list of I/O devices allocated to the process, its open files, and so on.

NOTE: The PCB structure differs from one OS to another. The above is the most generalised structure.
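To make the PCB fields and the register save/restore described above concrete, here is a simplified Python sketch. The `PCB` and `CPU` classes and the `context_switch` function are hypothetical and keep only a few of the listed fields; a real kernel keeps this information in its own data structures (Linux, for example, uses `task_struct`).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PCB:
    """A simplified Process Control Block (only some of the fields above)."""
    pid: int                                   # 1. process ID
    state: str = "new"                         # 2. process state
    parent_pid: Optional[int] = None           # 4. pointer to the parent process
    program_counter: int = 0                   # 5. next instruction address
    registers: Dict[str, int] = field(default_factory=dict)  # 6. saved CPU registers
    priority: int = 0                          # 7. scheduling information
    cpu_time_used: int = 0                     # 9. accounting information
    open_files: List[str] = field(default_factory=list)      # 10. I/O status


@dataclass
class CPU:
    """The hardware state that belongs to whichever process is running."""
    program_counter: int = 0
    registers: Dict[str, int] = field(default_factory=dict)


def context_switch(cpu: CPU, old: PCB, new: PCB) -> None:
    """Save the running process's state into its PCB and load the next one's."""
    old.program_counter = cpu.program_counter
    old.registers = dict(cpu.registers)
    old.state = "ready"                        # assumes old was preempted, not blocked
    cpu.program_counter = new.program_counter
    cpu.registers = dict(new.registers)
    new.state = "running"
```

After `context_switch(cpu, p1, p2)`, `p1` holds the program counter and registers it had on the CPU, and the CPU now holds the values previously saved in `p2`, so `p2` resumes where it stopped.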

OPERATIONS ON A PROCESS

Process Creation

➢ A process may create several new processes during the course of its execution, via a create-process system call (for example, fork() on UNIX).

➢ The creating process is called the parent process, and the new processes are called the children of that process.

Process Deletion

➢ A process terminates when it finishes executing its final statement and asks the operating system to delete it using the exit() system call.

➢ At that point, the process may return a status value (an integer) to its parent process, which collects it via the wait() system call. All the resources of the process are then de-allocated by the operating system.
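On UNIX-like systems, process creation and termination map onto the fork(), exit(), and wait() system calls. The sketch below uses Python's thin wrappers `os.fork`, `os._exit`, and `os.waitpid`; it runs only on POSIX systems, and the function name `spawn_child` is hypothetical.

```python
import os


def spawn_child(exit_status: int) -> int:
    """Create a child process that exits with exit_status (0-255).

    The parent waits for the child and returns the status it reported.
    POSIX only: os.fork is not available on Windows.
    """
    pid = os.fork()                     # create process: returns 0 in the child
    if pid == 0:
        # Child process: do its work here, then terminate with a status value.
        os._exit(exit_status)           # exit() system call, skipping Python cleanup
    # Parent process: block until this child terminates and collect its status.
    _, status = os.waitpid(pid, 0)
    if os.WIFEXITED(status):
        return os.WEXITSTATUS(status)   # the integer the child passed to exit
    raise RuntimeError("child did not exit normally")
```

Calling `spawn_child(7)` in the parent returns `7`. Using `os._exit` in the child stops it from also running the parent's remaining code and cleanup handlers.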


CO-OPERATING PROCESSES

A computer system runs many processes, which may be either independent processes or cooperating processes.

➢ A process is independent if it cannot affect or be affected by any other process running in the system. Any process that does not share data (temporary or persistent) with any other process is independent.

➢ A cooperating process, on the other hand, can affect or be affected by other processes running in the system. Any process that shares data with another process is a cooperating process.

There are several reasons for providing an environment that allows process cooperation:

➢ Information sharing

Several users may be interested in the same piece of information (for instance, a shared file), so we must provide an environment that allows concurrent access to such resources.

➢ Computation speedup

To make a task run faster, we can break it into subtasks, each of which executes in parallel with the others. Such a speedup can be achieved only if the computer has multiple processing elements (such as CPUs or I/O channels).

➢ Modularity

We may want to construct the system in a modular fashion, dividing its functions into separate processes.

➢ Convenience

An individual user may have many tasks to work on at the same time, such as editing, printing, and compiling in parallel.

A cooperating process is one that can affect or be affected by other processes executing in the system.

Example – producer-consumer problem

A producer process produces items and places them into a buffer, while a consumer process takes items from the buffer and consumes them. For example, a print program produces characters that are consumed by the printer driver. A compiler may produce assembly code, which is consumed by an assembler. The assembler, in turn, may produce object modules, which are consumed by the loader.
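The producer-consumer problem is commonly solved with a bounded buffer guarded by two counting semaphores and a lock. To keep the sketch short and portable, this Python version uses two threads to stand in for the producer and consumer processes; the class name `BoundedBuffer`, the function `run_demo`, and the buffer size of 3 are assumptions for illustration.

```python
import threading
from collections import deque

BUFFER_SIZE = 3  # assumed capacity of the shared buffer


class BoundedBuffer:
    """A fixed-size buffer shared by a producer and a consumer."""

    def __init__(self, size: int) -> None:
        self.items = deque()
        self.mutex = threading.Lock()           # protects self.items
        self.empty = threading.Semaphore(size)  # counts free slots
        self.full = threading.Semaphore(0)      # counts filled slots

    def put(self, item) -> None:
        self.empty.acquire()        # block while the buffer is full
        with self.mutex:
            self.items.append(item)
        self.full.release()         # announce one more item

    def get(self):
        self.full.acquire()         # block while the buffer is empty
        with self.mutex:
            item = self.items.popleft()
        self.empty.release()        # announce one more free slot
        return item


def run_demo(n_items: int = 10) -> list:
    """Produce n_items integers and return them in the order they were consumed."""
    buffer = BoundedBuffer(BUFFER_SIZE)
    consumed = []

    def producer() -> None:
        for i in range(n_items):
            buffer.put(i)

    def consumer() -> None:
        for _ in range(n_items):
            consumed.append(buffer.get())

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed
```

The producer blocks when all three slots are full and the consumer blocks when the buffer is empty, so neither overruns the other; with one producer and one consumer, items are consumed in the order produced.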


INTER-PROCESS COMMUNICATION

Processes in an operating system often need to communicate with each other. This is called inter-process communication (IPC). IPC is a mechanism, usually provided by the operating system, whose main aim is to allow several processes to communicate with one another.

A diagram that illustrates interprocess communication is as follows −

Inter process communication (IPC) is used for exchanging data between multiple

threads in one or more processes or programs.

There are two fundamental models of Inter-process communication

1. Message passing

2. Shared Memory Area

1. Message passing

In the message-passing model, processes communicate by exchanging messages through the kernel. When two or more processes take part in inter-process communication, each process sends messages to the others via the kernel. For example, process A sends a message M to the OS kernel, and process B then reads that message from the kernel. A communication link is required between the two processes for successful message exchange, and there are several ways to create such links.
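A UNIX pipe is one common kernel-provided link for message passing. In this sketch (POSIX only; `send_via_pipe` is a hypothetical name), a child process plays process A and writes message M into the pipe, and the parent plays process B and reads it back; the kernel holds the data in between.

```python
import os


def send_via_pipe(message: bytes) -> bytes:
    """Process A (child) sends a small message through a pipe; process B (parent) receives it."""
    read_end, write_end = os.pipe()      # kernel-managed communication link
    pid = os.fork()
    if pid == 0:
        # Process A: keep only the write end and send the message.
        os.close(read_end)
        os.write(write_end, message)     # the kernel buffers the data
        os.close(write_end)
        os._exit(0)
    # Process B: keep only the read end and receive until A closes its end.
    os.close(write_end)
    chunks = []
    while True:
        data = os.read(read_end, 1024)
        if not data:                     # empty read means end of file
            break
        chunks.append(data)
    os.close(read_end)
    os.waitpid(pid, 0)                   # reap the child
    return b"".join(chunks)
```

Neither process touches the other's memory; every byte passes through the kernel via the write and read system calls.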


2. Shared memory

Shared memory is a region of memory that two or more processes attach to their address spaces so that they can all access it. Because the processes access this region directly, they must protect each other by synchronizing their access to it. For example, processes A and B can set up a shared memory segment and exchange data through this shared memory area. Shared memory is important for these reasons:

➢ It is a way of passing data between processes.

➢ Shared memory is faster than message passing, because once the segment is set up, data can be exchanged without involving the kernel in every transfer.

➢ Shared memory allows two or more processes to share the same copy of the data.

Suppose process A wants to communicate with process B. Both processes attach the shared memory segment to their address spaces. Process A writes a message to the shared memory, and process B reads that message from the shared memory. The processes themselves are responsible for synchronization, so that both processes do not write to the same location at the same time.
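The sketch below shows two related processes exchanging data through shared memory, using an anonymous shared mapping from Python's `mmap` module (on UNIX, `mmap.mmap(-1, size)` creates a region that is shared with children created by `fork`). It is POSIX only; the function name, the segment size, and the layout (a length byte followed by the message) are assumptions. Synchronization here is deliberately crude: the reader simply waits for the writer process to exit.

```python
import mmap
import os

SEGMENT_SIZE = 64  # assumed segment size in bytes


def exchange_via_shared_memory(message: bytes) -> bytes:
    """Process A writes message into shared memory; process B reads it back."""
    if len(message) >= SEGMENT_SIZE:
        raise ValueError("message too large for the segment")
    shm = mmap.mmap(-1, SEGMENT_SIZE)     # anonymous, MAP_SHARED by default on UNIX
    pid = os.fork()                       # the child inherits the same shared region
    if pid == 0:
        # Process A (child): write a length byte, then the message bytes.
        shm[0] = len(message)
        shm[1:1 + len(message)] = message
        os._exit(0)
    # Process B (parent): wait until A has finished writing, then read.
    os.waitpid(pid, 0)
    length = shm[0]
    data = shm[1:1 + length]
    shm.close()
    return data
```

Unlike the pipe, the data never passes through a kernel buffer: both processes read and write the same physical pages, which is why they must coordinate who writes when.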


WHAT IS PROCESS SCHEDULING?

Process scheduling is the OS activity that decides which process runs on the CPU next, managing processes in states such as ready, waiting, and running.

Process scheduling allows the OS to allocate an interval of CPU execution time to each process. Another important reason for using a process scheduling system is that it keeps the CPU busy at all times. This helps achieve minimum response time for programs.

OBJECTIVES OF SCHEDULING:

The fundamental objective of scheduling is to arrange the execution of processes so that system resources, especially the CPU, are used efficiently and processes complete within their time requirements.

In general, scheduling meets the following objectives:

1. To meet deadlines, the order in which processes are executed is properly planned.

2. To minimize the total time taken to complete processes through better resource utilisation.

3. To maximize CPU utilization by minimizing the idle time of the CPU and other resources.

4. To avoid unbalanced allocation of work among processes and resources (fairness).

TYPES OF PROCESS SCHEDULERS

A scheduler is a type of system software that handles process scheduling.


There are mainly three types of Process Schedulers:

1. Long Term Scheduler

2. Short Term Scheduler

3. Medium Term Scheduler

1. Long-term or job scheduler:

The long-term scheduler is also known as the job scheduler. It chooses processes from the job pool (in secondary memory), loads them into main memory, and places them in the ready queue.

The long-term scheduler mainly controls the degree of multiprogramming. Its purpose is to choose a good mix of I/O-bound and CPU-bound processes from the jobs present in the pool.

If the job scheduler chooses too many I/O-bound processes, most of the jobs may be in the blocked state most of the time and the CPU will remain idle, which defeats the purpose of multiprogramming. Therefore, the job of the long-term scheduler is critical, and its decisions may affect the system for a long time.


2. Short-term or CPU scheduler:

It is responsible for selecting one process from the ready state to run on the CPU. Note: the short-term scheduler only selects the process; it does not load the process onto the CPU. This is where the scheduling algorithms are used. The CPU scheduler is also responsible for ensuring that processes do not starve behind processes with long burst times.

The dispatcher is responsible for loading the process selected by the short-term scheduler onto the CPU (Ready to Running state). Context switching is done by the dispatcher. A dispatcher does the following:

1. Switching context.

2. Switching to user mode.

3. Jumping to the proper location in the newly loaded program.

3. Medium-term scheduler:

It is responsible for suspending and resuming processes. It mainly performs swapping (moving processes from main memory to disk and vice versa). Swapping may be necessary to improve the process mix, or because a change in memory requirements has overcommitted available memory, requiring memory to be freed up. It helps maintain a good balance between I/O-bound and CPU-bound processes. It reduces the degree of multiprogramming.

SCHEDULING CRITERIA IN OS

The aim of a scheduling algorithm is to maximize some criteria and minimize others:

Maximize:


• CPU utilization – Keep the CPU as busy as possible.

• Throughput – The number of processes that complete their execution per unit of time.

Minimize:

• Waiting time – The total time a process spends waiting in the ready queue.

• Response time – The time from submission of a request until the first response is produced.

• Turnaround time – The total time from submission of a process to its completion.

Scheduling Criteria

➢ CPU Utilization

The scheduling algorithm should be designed so that the CPU is used as efficiently as possible.

➢ Throughput

Throughput is the number of processes executed by the CPU in a given amount of time. It is used to measure the efficiency of a CPU.

➢ Response Time

Response time is the time from when a job enters the ready queue until it gets the CPU for the first time. The scheduler should try to minimize it.

Response time = Time at which the process gets the CPU for the first time - Arrival time

➢ Turnaround time

Turnaround time is the total amount of time spent by the process from coming in

the ready state for the first time to its completion.

Turnaround time = Burst time + Waiting time

or

Turnaround time = Exit time - Arrival time

➢ Waiting time

Waiting time is the total time a process spends in the ready queue waiting for the CPU. When many jobs compete for the CPU, waiting times grow, so the scheduler should minimize waiting time.

Waiting time = Turnaround time - Burst time
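The three formulas above can be checked with a small helper. This Python sketch (the function name `metrics` is hypothetical) assumes the process does no I/O, so all of its waiting happens in the ready queue, and it takes the time the process first got the CPU and its exit time as inputs.

```python
def metrics(arrival: int, burst: int, first_run: int, exit_time: int) -> dict:
    """Compute scheduling criteria for one process that does no I/O."""
    turnaround = exit_time - arrival      # Turnaround time = Exit time - Arrival time
    waiting = turnaround - burst          # Waiting time = Turnaround time - Burst time
    response = first_run - arrival        # Response time = first CPU time - Arrival time
    return {"turnaround": turnaround, "waiting": waiting, "response": response}
```

For a process that arrives at time 0, needs 5 units of CPU, first runs at time 2, and exits at time 10: turnaround = 10 - 0 = 10, waiting = 10 - 5 = 5, and response = 2 - 0 = 2.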

Types of CPU Scheduling

There are two kinds of scheduling methods:


Preemptive Scheduling

In preemptive scheduling, the OS can take the CPU away from a running process. Tasks are usually assigned priorities, and sometimes a task with a higher priority must run before a lower-priority task, even if the lower-priority task is still running. The lower-priority task is paused and resumes when the higher-priority task finishes its execution.

Non-Preemptive Scheduling

In this type of scheduling, once the CPU has been allocated to a process, the process keeps the CPU until it releases it, either by terminating or by switching to the waiting state. This method can be used on any hardware platform, because it does not need special hardware (for example, a timer) as preemptive scheduling does.

Scheduling Algorithms in Operating System

Scheduling algorithms schedule processes on the processor in an efficient and effective manner. This scheduling is done by the process scheduler. The goal is to maximize CPU utilization and throughput.

Following are the popular process scheduling algorithms

1. First-Come, First-Served (FCFS) Scheduling

2. Shortest-Job-Next (SJN/SJF) Scheduling

3. Priority Scheduling

4. Round Robin(RR) Scheduling

1. FIRST COME FIRST SERVE:

FCFS is considered the simplest of all operating system scheduling algorithms. It states that the process that requests the CPU first is allocated the CPU first. It is implemented using a FIFO queue.

Characteristics of FCFS:

➢ FCFS is a non-preemptive CPU scheduling algorithm.

➢ Tasks are always executed on a first-come, first-served basis.

➢ FCFS is easy to implement and use.

➢ This algorithm is not very efficient, and the average waiting time is often quite high.

Advantages of FCFS:


➢ Easy to implement

➢ First come, first serve method

Disadvantages of FCFS:

➢ FCFS suffers from the convoy effect.

➢ The average waiting time is often much higher than with other algorithms.

➢ Although FCFS is simple and easy to implement, it is not very efficient.
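A minimal FCFS simulation in Python; the function name `fcfs` and the (name, arrival, burst) tuple format are assumptions. Processes are served strictly in arrival order, each runs to completion, and the CPU idles if the next process has not yet arrived.

```python
def fcfs(processes):
    """First-Come, First-Served scheduling.

    processes: list of (name, arrival_time, burst_time) tuples.
    Returns {name: (waiting_time, turnaround_time)}.
    """
    time = 0
    result = {}
    # sorted() is stable, so processes with equal arrival keep their queue order.
    for name, arrival, burst in sorted(processes, key=lambda p: p[1]):
        start = max(time, arrival)        # CPU idles until the process arrives
        finish = start + burst            # non-preemptive: runs to completion
        turnaround = finish - arrival
        result[name] = (turnaround - burst, turnaround)
        time = finish
    return result
```

With three processes arriving at time 0 with bursts 24, 3, and 3 (in that order), the waiting times are 0, 24, and 27, an average of 17. If the two short jobs are queued first, the waiting times become 0, 3, and 6 (average 3). This gap is the convoy effect.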

2. SHORTEST JOB FIRST (SJF):

Shortest Job First (SJF) is a scheduling algorithm that selects the waiting process with the smallest execution time (next CPU burst) to run next. It may be non-preemptive or preemptive; the preemptive version is also called Shortest Remaining Time First (SRTF). SJF significantly reduces the average waiting time of processes.

Characteristics of SJF:

➢ For a given set of processes, SJF gives the minimum average waiting time.

➢ Each task is associated with the length of time it needs to complete (its CPU burst).

➢ It may cause starvation of long processes if shorter processes keep arriving. This problem can be solved using the concept of aging.

Advantages of Shortest Job First:

➢ Because SJF reduces the average waiting time, it is better than the first come first serve scheduling algorithm.

➢ SJF is generally used for long-term scheduling.

Disadvantages of SJF:

➢ SJF can cause starvation.

➢ It is often difficult to predict the length of the next CPU request.
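A sketch of non-preemptive SJF in Python, assuming burst times are known in advance (which, as noted above, is usually not the case in practice); `sjf` is a hypothetical name, and ties are broken by arrival time.

```python
def sjf(processes):
    """Non-preemptive Shortest Job First.

    processes: list of (name, arrival_time, burst_time) tuples.
    Returns {name: waiting_time}.
    """
    pending = sorted(processes, key=lambda p: p[1])   # ordered by arrival
    time = 0
    waiting = {}
    while pending:
        ready = [p for p in pending if p[1] <= time]
        if not ready:                     # nothing has arrived yet: CPU idles
            time = pending[0][1]
            continue
        # Pick the shortest burst; break ties by earlier arrival (FCFS).
        job = min(ready, key=lambda p: (p[2], p[1]))
        name, arrival, burst = job
        waiting[name] = time - arrival
        time += burst                     # runs to completion once started
        pending.remove(job)
    return waiting
```

For bursts P1 = 6, P2 = 8, P3 = 7, P4 = 3, all arriving at time 0, SJF runs P4, P1, P3, P2 with waiting times 0, 3, 9, and 16, an average of 7; FCFS in the order P1 to P4 would give (0 + 6 + 14 + 21) / 4 = 10.25.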

3. PRIORITY SCHEDULING:

Preemptive priority CPU scheduling is a preemptive method of CPU scheduling that works based on the priority of each process. Each process is assigned a priority, and the most important (highest-priority) process is run first. If two or more processes have equal priority, they are scheduled on a First Come First Serve (FCFS) basis.

Characteristics of Priority Scheduling:

➢ Schedules tasks based on priority.

➢ When a higher-priority task arrives while a lower-priority task is executing, the higher-priority task takes the CPU, and the lower-priority task is suspended until the higher-priority task completes.

➢ The lower the number assigned, the higher the priority level of a process.

Advantages of Priority Scheduling:

➢ The average waiting time is often less than with FCFS.

➢ Less complex.

Disadvantages of Priority Scheduling:

➢ One of the most common drawbacks of the preemptive priority CPU scheduling algorithm is the starvation problem, in which a low-priority process has to wait for a very long time (possibly indefinitely) to get scheduled onto the CPU.
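A unit-time simulation of preemptive priority scheduling in Python, using the convention above that a lower number means a higher priority and breaking ties in FCFS order; `preemptive_priority` and the tuple format are hypothetical, and burst times are assumed to be positive integers.

```python
def preemptive_priority(processes):
    """Preemptive priority scheduling, simulated one time unit at a time.

    processes: list of (name, arrival_time, burst_time, priority) tuples,
    where a lower priority number means a higher priority.
    Returns {name: completion_time}.
    """
    remaining = {name: burst for name, _, burst, _ in processes}
    time = 0
    done = {}
    while len(done) < len(processes):
        ready = [p for p in processes if p[1] <= time and p[0] not in done]
        if not ready:
            time += 1                     # CPU idle for this time unit
            continue
        # Re-evaluated every unit, so a newly arrived higher-priority
        # process preempts the running one. Ties go to earlier arrival.
        name = min(ready, key=lambda p: (p[3], p[1]))[0]
        remaining[name] -= 1
        time += 1
        if remaining[name] == 0:
            done[name] = time
    return done
```

With P1 (arrival 0, burst 4, priority 3), P2 (arrival 1, burst 2, priority 1), and P3 (arrival 2, burst 1, priority 2), P1 runs for one unit, is preempted by P2 at time 1, and resumes only after P2 and P3 finish: completion times are P2 = 3, P3 = 4, P1 = 7.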

4. ROUND ROBIN:

Round Robin is a CPU scheduling algorithm in which each process is cyclically assigned a fixed time slot (time quantum). It is the preemptive version of the First Come First Serve scheduling algorithm, and it is designed for time-sharing systems.

Characteristics of Round robin:

• It is simple, easy to use, and starvation-free, since all processes get a balanced share of the CPU.

• It is one of the most widely used CPU scheduling methods.

• It is preemptive, because each process is given the CPU for only a limited time (one quantum) at a time.

Advantages of Round robin:

• Round robin seems to be fair as every process gets an equal share of CPU.

• The newly created process is added to the end of the ready queue.
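A Round Robin sketch in Python, assuming all processes arrive at time 0 and are queued in the order given; the function name `round_robin` and the quantum values used below are illustrative.

```python
from collections import deque


def round_robin(bursts: dict, quantum: int) -> dict:
    """Round Robin scheduling for processes that all arrive at time 0.

    bursts: {name: burst_time}, queued in insertion order.
    Returns {name: waiting_time}.
    """
    queue = deque(bursts.items())
    time = 0
    finish = {}
    while queue:
        name, remaining = queue.popleft()
        run = min(quantum, remaining)     # run for at most one quantum
        time += run
        if remaining > run:
            queue.append((name, remaining - run))   # preempted: back to the tail
        else:
            finish[name] = time
    # With arrival time 0, waiting time = completion time - burst time.
    return {name: finish[name] - bursts[name] for name in bursts}
```

With bursts P1 = 24, P2 = 3, P3 = 3 and a quantum of 4, the waiting times are 6, 4, and 7 (average about 5.67), compared with an average of 17 for FCFS in the same order. With a very large quantum, Round Robin behaves exactly like FCFS.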

What is Dispatcher?

The dispatcher is the module that gives control of the CPU to the process selected by the short-term scheduler. It should be as fast as possible, since it is invoked during every context switch. Dispatch latency is the time it takes for the dispatcher to stop one process and start another.

Functions performed by the dispatcher:

• Context Switching

• Switching to user mode

• Moving to the correct location in the newly loaded program.

Convoy Effect in FCFS

FCFS may suffer from the convoy effect if the burst time of the first job is the highest among all. In real life, when a convoy is passing along a road, other vehicles may be blocked until it passes completely. The same situation can arise in an operating system.

If processes with high burst times are at the front of the ready queue, the processes with lower burst times behind them are blocked and must wait a long time for the CPU while the long job executes. This is called the convoy effect.


Starvation and Aging

Starvation is a resource-management problem in which a process cannot get the resources it needs because they are continually being used by other processes. It occurs mainly in priority-based scheduling algorithms, where high-priority requests are processed first and low-priority processes may wait for a long time.

In CPU scheduling, starvation occurs when a low-priority process in the ready state keeps waiting for the CPU because processes with higher priority keep arriving.

Aging

To avoid starvation, we use the concept of aging. In aging, at fixed time intervals, we increase the priority of low-priority processes that have been waiting. As time passes, a waiting low-priority process eventually becomes a high-priority process.
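The effect of aging can be illustrated with a toy scenario in Python: one low-priority process waits while, at every tick, a higher-priority process is ready to compete with it. The function names, the priority values, and the rule of improving the priority number by 1 every `interval` ticks are assumptions chosen for illustration.

```python
from typing import Optional


def effective_priority(base: int, waited: int, interval: int) -> int:
    """Aging rule (assumed): every `interval` ticks of waiting lowers the
    priority number by 1 (lower number = higher priority), floor at 0."""
    return max(0, base - waited // interval)


def ticks_until_scheduled(low_base: int = 10, competitor: int = 2,
                          interval: Optional[int] = 5,
                          max_ticks: int = 1000) -> Optional[int]:
    """Toy scenario: a low-priority process has waited since tick 0 and, at
    every tick, a fresh process with priority `competitor` is also ready.
    Returns the first tick at which the waiting process is chosen, or None if
    it is never chosen within max_ticks. interval=None disables aging."""
    for t in range(max_ticks):
        if interval is None:
            current = low_base                          # no aging: priority never changes
        else:
            current = effective_priority(low_base, t, interval)
        # The waiting process arrived first, so it wins ties (FCFS).
        if current <= competitor:
            return t
    return None
```

With a base priority of 10, competitors of priority 2, and aging every 5 ticks, the waiting process is chosen at tick 40 (10 - 40 // 5 = 2, and it wins the tie because it arrived first); with `interval=None` it is never chosen, which is starvation.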

REVIEW QUESTIONS

TWO-MARKS QUESTIONS

1. Differentiate Process and Program

2. List the objectives of scheduling

3. What is context switching?

4. What is starvation and aging?

5. Define convoy effect

6. What is a dispatcher?

7. What are co-operating and independent processes?

8. Explain the operations on a process


FOUR-MARKS QUESTIONS

1. What is process? Explain process state transition with neat diagram

2. Explain PCB and its contents

3. What is process scheduling? Explain process scheduling criteria

4. Differentiate between pre-emptive and non-pre-emptive scheduling algorithms

EIGHT-MARKS QUESTIONS

1. What is scheduler? Explain different types of schedulers

2. Describe SJF and RR scheduling algorithm with advantages and disadvantages

3. Explain FCFS and Priority scheduling algorithm with advantages and disadvantages

4. Write a note on

a) Co-operating Process

b) Inter-Process Communication
