python_code

Uploaded by

Suchandana Saha Joyguru

import numpy as np

import lif

import matplotlib.pyplot as plt

# 2 input neurons

# 1 output neuron

# initialize random weights

# Adjust weights using Hebbian learning

# https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1007692#pcbi-1007692-g002

# At the end of learning, the neuron's tuning curves are uniformly distributed
# (Fig 2Giii), and the quality of the representation becomes optimal for all input
# signals (Fig 2Aiii and 2Ciii).

# What are decoding weights?

# What are tuning curves?

# Are our inputs correlated? (for AND, OR gate)

# When does learning converge? Mainly, what does this mean: "Learning converges when
# all tuning curve maxima are aligned with the respective feedforward weights (Fig
# 3Bii; dashed lines and arrows)."
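# A small check for the question about correlated inputs, as a sketch only. It assumes
# the four input combinations of a two-input gate are presented equally often (the
# notes do not say how inputs are sampled). All variable names are illustrative.

```python
import numpy as np

# All four input combinations for a two-input gate, assumed equally likely.
x = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
x1, x2 = x[:, 0], x[:, 1]
and_out = x1 & x2  # AND gate output for each combination
or_out = x1 | x2   # OR gate output for each combination

# Pearson correlation coefficients (off-diagonal entry of the 2x2 matrix).
corr_inputs = np.corrcoef(x1, x2)[0, 1]    # the two inputs with each other
corr_and = np.corrcoef(x1, and_out)[0, 1]  # first input with the AND output
corr_or = np.corrcoef(x1, or_out)[0, 1]    # first input with the OR output
print(corr_inputs, corr_and, corr_or)
```

# Under that assumption the two inputs are uncorrelated with each other, while each
# input is positively correlated with both the AND and the OR output.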

# ---------------------------------------------------------------------------

# https://www.geeksforgeeks.org/single-neuron-neural-network-python/

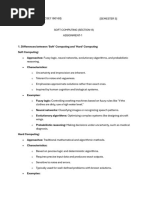

class SNN():
    def __init__(self):
        np.random.seed(1)  # Generate same random weights for every trial
        # Matrix containing the weights between each neuron (2 inputs -> 1 output)
        self.weights = np.random.rand(2, 1)
        # Target values used by the error term in forward_propagation
        self.z = np.array([1, 0, 0, 0, 1, 1, 0, 1, 0, 1])
        # Coefficients for the [pre * post, pre, post] terms of each weight update
        self.alpha = np.array([1, 1, 1])  # first input weight
        self.beta = np.array([2, 2, 2])   # second input weight

    @staticmethod
    def sigmoid(x):
        return 1 / (1 + np.exp(-x))

    def forward_propagation(self, inputs):
        out = np.dot(inputs, self.weights)
        zhat = SNN.sigmoid(out)
        # error = self.z - zhat  # unused; self.z and zhat need matching shapes first
        return zhat

    # pass spikes from input to output
    # pass the out of the input to the in of the output (the weights affect this process)
    # how do the weights affect this connection?

    def train(self, inputs, outputs, epochs):
        # inputs: the two input-neuron values; outputs is not used by this update yet
        # perform encoding into input neurons (input_nns)
        for i in range(epochs):
            # single output neuron, so reduce the size-1 result to a scalar
            output_nns = self.forward_propagation(inputs).item()
            delta_w1 = np.dot([inputs[0] * output_nns, inputs[0], output_nns],
                              self.alpha)  # dot product? multiply the avg rates?
            delta_w2 = np.dot([inputs[1] * output_nns, inputs[1], output_nns],
                              self.beta)
            self.weights[0] += delta_w1
            self.weights[1] += delta_w2
        # loop through each combo of x and y ?
        # use hebbian rule to determine weight adjustment
        # apply weight adjustment
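# On the "dot product?" question in train: a minimal sketch of what the dot product
# with the coefficient vector computes, namely c0*pre*post + c1*pre + c2*post. The
# helper name hebbian_delta and the learning rate lr are hypothetical and are not
# part of the SNN class; this is not taken from the referenced paper.

```python
import numpy as np


def hebbian_delta(pre, post, coeffs, lr=1.0):
    """Weight change lr * (c0*pre*post + c1*pre + c2*post). Hypothetical helper."""
    terms = np.array([pre * post, pre, post], dtype=float)
    return lr * float(np.dot(terms, coeffs))


# With all coefficients equal to 1, the three terms are simply summed.
full = hebbian_delta(pre=1.0, post=0.5, coeffs=[1, 1, 1])

# Plain Hebbian learning keeps only the correlation term pre * post,
# so the weight changes only when both neurons are active.
plain_active = hebbian_delta(pre=1.0, post=0.5, coeffs=[1, 0, 0])
plain_silent = hebbian_delta(pre=0.0, post=0.5, coeffs=[1, 0, 0])

print(full, plain_active, plain_silent)
```

# With non-negative activities and non-negative coefficients, an update like this can
# only increase the weight, so some form of decay or normalisation is usually needed
# to keep the weights bounded.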

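# https://praneethnamburi.com/2015/02/05/simulating-neural-spike-trains/
# fr: firing rate estimate (in Hz)
# nbins: number of time bins in each spike train (one bin lasts dt = 1 ms)
# num_trials: number of independent spike trains to generate
def poissonSpike(fr, nbins, num_trials):
    dt = 1 / 1000  # bin width in seconds
    # a bin contains a spike with probability fr * dt
    spikeMatrix = np.random.rand(num_trials, nbins) < fr * dt
    t = np.arange(nbins) * dt  # start time of each bin, in seconds
    return (spikeMatrix, t)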
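# A sanity check for this kind of spike generator, as a sketch: generate many trials
# and compare the empirical firing rate with the requested one. The function name
# poisson_spike_trains, the dt and seed parameters, and the chosen rate of 50 Hz are
# assumptions made for this example. Drawing one Bernoulli sample per bin approximates
# a Poisson process only when fr * dt is small.

```python
import numpy as np


def poisson_spike_trains(fr, nbins, num_trials, dt=1e-3, seed=0):
    """Bernoulli approximation: each bin spikes with probability fr * dt."""
    rng = np.random.default_rng(seed)
    spikes = rng.random((num_trials, nbins)) < fr * dt
    t = np.arange(nbins) * dt  # start time of each bin, in seconds
    return spikes, t


spikes, t = poisson_spike_trains(fr=50, nbins=2000, num_trials=100)
bin_width = t[1] - t[0]                  # seconds per bin
duration = spikes.shape[1] * bin_width   # seconds per trial
rates = spikes.sum(axis=1) / duration    # empirical rate of each trial, in Hz
print(f"mean rate: {rates.mean():.1f} Hz over {len(rates)} trials")
```

# The mean empirical rate should land close to the requested 50 Hz; individual trials
# scatter around it because each trial is only two seconds long.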

def rasterPlot(spikeMatrix):
    # row index = trial, column index = time step of each spike
    spikes_y, spikes_x = np.nonzero(spikeMatrix)
    plt.scatter(spikes_x, spikes_y, marker="|")
    # one tick per trial, including trials that contain no spikes
    plt.yticks(np.arange(spikeMatrix.shape[0]))
    # plt.xticks(np.arange(np.amax(spikes_x)))
    plt.xlabel("Time Step")
    plt.ylabel("Trial")
    plt.show()


sm, t = poissonSpike(300, 30, 20)
rasterPlot(sm)
