
03 - Decision Tree - Hunt Algorithm

Hunt's algorithm is a decision tree induction algorithm developed in 1966. It builds a decision tree recursively by splitting the training records at each node into purer subsets based on an attribute test condition. If all records at a node belong to the same class, it becomes a leaf node labeled with that class. Otherwise, the records are partitioned into smaller subsets using an attribute test, creating a child node for each outcome. This process is repeated recursively on each child node until stopping criteria are met. The algorithm aims to construct a tree that accurately predicts class labels for new data instances.

Uploaded by Avin Unggul Wijaya XI-MIPA-2


Hunt’s Algorithm

Sekolah Tinggi Ilmu Statistik Jakarta 1

Decision Tree Induction Algorithms

A number of algorithms exist:

• Hunt's

– Hunt's Algorithm (1966)

• Quinlan's

– Iterative Dichotomiser 3, ID3 (1975) uses Entropy

– C4.5 / 4.8 / 5.0 (1993) uses Entropy

• Breiman's

– Classification And Regression Trees, CART (1984) uses Gini

• Kass's

– CHi-squared Automatic Interaction Detector, CHAID (1980) uses ____

• IBM (Mehta)

– Supervised Learning In Quest, SLIQ (1996) uses Gini

• IBM (Shafer)

– Scalable PaRallelizable INduction of decision Trees, SPRINT (1996) uses Gini

Hunt’s Algorithm

• In Hunt’s algorithm, a decision tree is grown recursively by partitioning the training records into successively purer subsets.

Hunt’s Algorithm

• Let Dt be the set of training records that are associated with node t and y = {y1, y2, ..., yc} be the class labels. The following is a recursive definition of Hunt’s algorithm.

• Step 1: If all the records in Dt belong to the same class yt, then t is a leaf node labeled as yt.

Hunt’s Algorithm

• Step 2: If Dt contains records that belong to more than one class, an attribute test condition is used to partition the records into smaller subsets. A child node is then created for each outcome of the test condition. The records in Dt are distributed to the children based upon their outcomes. This procedure is repeated for each child node.

Hunt’s Algorithm

Dt = {training records at node t}

• If Dt = {records from different classes}

– Split Dt into smaller subsets via an attribute test

– Apply the same rules to each subset

• If Dt = {records from a single class yt}

– Set node t = leaf node with class label yt

• If Dt = {} (empty)

– Set node t = leaf node with the default class label yd

• Recursively apply the above criteria until ...

– No more training records are left
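The three cases above can be sketched in Python. This is only an illustrative sketch, not the original 1966 formulation: the function names `hunt` and `majority_class`, the nested-dict tree representation, and the toy records are all hypothetical. The slides have not yet said how to pick the attribute test, so the sketch simply assumes the first remaining attribute is used.

```python
from collections import Counter


def majority_class(labels, default=None):
    """Return the most common label, or `default` when there are no labels."""
    return Counter(labels).most_common(1)[0][0] if labels else default


def hunt(records, labels, attributes, default=None):
    """Grow a decision tree recursively in the style of Hunt's algorithm.

    records:    list of dicts mapping attribute name -> value (Dt)
    labels:     class label of each record
    attributes: attribute names still available for testing
    default:    default class label yd used for an empty Dt
    """
    # Dt is empty: leaf labeled with the default class yd.
    if not records:
        return {"leaf": default}
    # All records in Dt share one class yt: leaf labeled yt.
    if len(set(labels)) == 1:
        return {"leaf": labels[0]}
    # No attribute left to test: fall back to the majority class.
    if not attributes:
        return {"leaf": majority_class(labels)}
    # Assumption: use the first remaining attribute as the test condition.
    attr, rest = attributes[0], attributes[1:]
    majority = majority_class(labels)
    children = {}
    # One child per observed outcome of the test; recurse on each subset.
    for value in sorted({r[attr] for r in records}):
        pairs = [(r, y) for r, y in zip(records, labels) if r[attr] == value]
        sub_records = [r for r, _ in pairs]
        sub_labels = [y for _, y in pairs]
        children[value] = hunt(sub_records, sub_labels, rest, majority)
    return {"attribute": attr, "children": children}


# Hypothetical toy data, not the training set from the slides.
records = [
    {"home_owner": "yes", "married": "no"},
    {"home_owner": "no", "married": "yes"},
    {"home_owner": "no", "married": "no"},
]
labels = ["no", "no", "yes"]
tree = hunt(records, labels, ["home_owner", "married"])
```

Because children are created only for attribute values that actually occur in Dt, the empty case is reached here only when the caller passes an empty set of records. An implementation that enumerates every possible value of an attribute would hit it during recursion as well.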

Example

• Consider the problem of predicting whether a loan applicant will succeed in repaying her loan obligations or become delinquent, and subsequently, default on her loan.

• The training set used for predicting borrowers who will default on their loan payments will be as follows.

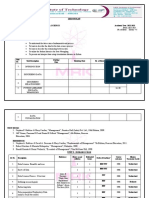

Example. Figure 1

Example

• A training set for this problem can be constructed by examining the historical records of previous loan borrowers.

• In the training set shown in Figure 1, each record contains the personal information of a borrower along with a class label indicating whether the borrower has defaulted on her loan payments.

Example

• The initial tree for the classification problem contains a single node with class label Defaulted = No, as illustrated below:

Figure 1a: Step 1

• This means that most of the borrowers had successfully repaid their loans.

• However, the tree needs to be refined since the root node contains records from both classes.

Example

• The records are subsequently divided into smaller subsets based on the outcomes of the Home Owner test condition, as shown in the figure below:

Figure 1b: Step 2

• The reason for choosing this attribute test condition instead of others is an implementation issue that will be discussed later.

Example

• For now we can assume that this is the best criterion for splitting the data at this point.

• Hunt’s algorithm is then applied recursively to each child of the root node.

• From the training set given in Figure 1, notice that all borrowers who are home owners had successfully repaid their loans.

Example

• As a result, the left child of the root is a leaf node labeled as Defaulted = No, as shown in Figure 1b.

• For the right child of the root node, we need to continue applying the recursive step of Hunt’s algorithm until all the records belong to the same class.

Example

• This recursive step is shown in Figures 1c and 1d below:

Figure 1c: Step 3

Figure 1d: Step 4

Example

• Overall, the whole diagram is as follows:

Design Issues of Decision Tree Induction

• How to split the training records? - Each recursive step of the tree growing process requires an attribute test condition to divide the records into smaller subsets.

• To implement this step, the algorithm must provide a method for specifying the test condition for different attribute types as well as an objective measure for evaluating the goodness of each test condition.

Design Issues of Decision Tree Induction

• When to stop splitting? - A stopping condition is needed to terminate the tree growing process.

• A possible strategy is to continue expanding a node until either all the records belong to the same class or all the records have identical attribute values.
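The stopping strategy described above can be written as a small check. This is a minimal sketch: the function name `should_stop` and the representation of records as dicts are assumptions made for illustration.

```python
def should_stop(records, labels):
    """Return True when a node should not be split any further.

    records: list of dicts mapping attribute name -> value
    labels:  class label of each record
    """
    # Condition 1: every record belongs to the same class (or there are none).
    if len(set(labels)) <= 1:
        return True
    # Condition 2: all records have identical attribute values, so no
    # attribute test can separate them.
    return all(r == records[0] for r in records)
```

When the second condition fires with mixed classes, the node cannot be made pure, and a common choice is to label the leaf with the majority class.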

How to Split an Attribute

• Before automatically creating a decision tree, you can choose from several splitting functions that are used to determine which attribute to split on. The following splitting functions are available:

– Random - The attribute to split on is chosen randomly.

– Information Gain - The attribute to split on is the one that has the maximum information gain.

How to Split an Attribute

– Gain Ratio - Selects the attribute with the highest ratio of information gain to number of input values. The number of input values is the number of distinct values of an attribute occurring in the training set.

– GINI - The GINI index is a measure of the impurity of the examples. The attribute whose split gives the lowest weighted GINI index, that is, the largest reduction in impurity, is chosen.
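As a rough illustration of the GINI measure, the sketch below assumes the common definition 1 − Σ pᵢ², where pᵢ is the fraction of examples in class i. Under that definition a value of 0 means a pure node, and a lower size-weighted value after a split means purer subsets. The function names `gini` and `weighted_gini` are hypothetical.

```python
from collections import Counter


def gini(labels):
    """Gini index of a list of class labels: 1 - sum(p_i ** 2)."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def weighted_gini(partitions):
    """Size-weighted average Gini index over the subsets produced by a split."""
    total = sum(len(part) for part in partitions)
    return sum(len(part) / total * gini(part) for part in partitions)


# A pure node has index 0; an even two-class mix has index 0.5.
pure = gini(["yes", "yes"])
mixed = gini(["yes", "no"])
# A split that separates the classes perfectly drives the weighted index to 0.
after_split = weighted_gini([["yes", "yes"], ["no", "no"]])
```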

Training Dataset

| Age | Income | Student | CreditRating | BuysComputer |
|---|---|---|---|---|
| <=30 | high | no | fair | no |
| <=30 | high | no | excellent | no |
| 31 - 40 | high | no | fair | yes |
| >40 | medium | no | fair | yes |
| >40 | low | yes | fair | yes |
| >40 | low | yes | excellent | no |
| 31 - 40 | low | yes | excellent | yes |
| <=30 | medium | no | fair | no |
| <=30 | low | yes | fair | yes |
| >40 | medium | yes | fair | yes |
| <=30 | medium | yes | excellent | yes |
| 31 - 40 | medium | no | excellent | yes |
| 31 - 40 | high | yes | fair | yes |
| >40 | medium | no | excellent | no |

Resultant Decision Tree


Attribute Selection Measure: Information Gain (ID3/C4.5)

• The attribute selection mechanism used in ID3 is based on work on information theory by Claude Shannon.

• If our data is split into classes according to fractions {p1, p2, ..., pm}, then the entropy is measured as the information required to classify an arbitrary tuple, as follows:

$$E(p_1, p_2, \ldots, p_m) = -\sum_{i=1}^{m} p_i \log_2 p_i$$
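The entropy formula translates almost directly into code. The sketch below assumes the usual convention that a term with a zero fraction contributes nothing (0 · log2 0 is taken as 0); the function name `entropy` is chosen here for illustration.

```python
import math


def entropy(fractions):
    """E(p_1, ..., p_m) = -sum(p_i * log2(p_i)), taking 0 * log2(0) as 0."""
    return -sum(p * math.log2(p) for p in fractions if p > 0)


# Two equally likely classes need exactly one bit; a pure node needs none.
even_split = entropy([0.5, 0.5])
pure_node = entropy([1.0, 0.0])
```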

Attribute Selection Measure: Information Gain (ID3/C4.5) (cont…)

• The information measure is essentially the same as entropy

• At the root node the information is as follows:

$$\text{info}([9,5]) = E\left(\frac{9}{14}, \frac{5}{14}\right) = -\frac{9}{14}\log_2\frac{9}{14} - \frac{5}{14}\log_2\frac{5}{14} = 0.94$$

Attribute Selection Measure: Information Gain (ID3/C4.5) (cont…)

• To measure the information at a particular attribute, we measure info for the various splits of that attribute

• For instance, with the age attribute, look at the distribution of ‘Yes’ and ‘No’ samples for each value of age. Compute the expected information for each of these distributions.

• For age “<=30”

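Attribute Selection Measure: Information Gain (ID3/C4.5) (cont…)

• At the age attribute the information is as follows:

$$\begin{aligned}
\text{info}([2,3],[4,0],[3,2]) &= \frac{5}{14}\,\text{info}([2,3]) + \frac{4}{14}\,\text{info}([4,0]) + \frac{5}{14}\,\text{info}([3,2]) \\
&= \frac{5}{14}\left(-\frac{2}{5}\log_2\frac{2}{5} - \frac{3}{5}\log_2\frac{3}{5}\right) \\
&\quad + \frac{4}{14}\left(-\frac{4}{4}\log_2\frac{4}{4} - \frac{0}{4}\log_2\frac{0}{4}\right) \\
&\quad + \frac{5}{14}\left(-\frac{3}{5}\log_2\frac{3}{5} - \frac{2}{5}\log_2\frac{2}{5}\right) \\
&= 0.694
\end{aligned}$$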
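The arithmetic for the root node and for the age attribute can be checked with a short script. This is a sketch working directly from the class counts quoted in the slides; the helper names `info` and `expected_info` are hypothetical, and a zero count is treated as contributing 0 (the 0 · log2 0 term).

```python
import math


def info(counts):
    """Entropy in bits of a class distribution given as raw counts."""
    total = sum(counts)
    return -sum(c / total * math.log2(c / total) for c in counts if c > 0)


def expected_info(groups):
    """Size-weighted average of info() over the groups produced by a split."""
    n = sum(sum(group) for group in groups)
    return sum(sum(group) / n * info(group) for group in groups)


root = info([9, 5])                               # 9 yes, 5 no at the root
age = expected_info([[2, 3], [4, 0], [3, 2]])     # <=30, 31 - 40, >40
print(round(root, 3), round(age, 3))              # prints: 0.94 0.694
```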

Attribute Selection Measure: Information Gain (ID3/C4.5) (cont…)

• In order to determine which attribute we should use at each node, we measure the information gained in moving from one node to another and choose the one that gives us the most information

Attribute Selection By Information Gain Example

• Class P: BuysComputer = “yes”

• Class N: BuysComputer = “no”

– I(p, n) = I(9, 5) = 0.940

• Compute the entropy for age:

| Age | pi | ni | I(pi, ni) |
|---|---|---|---|
| <=30 | 2 | 3 | 0.971 |
| 31 - 40 | 4 | 0 | 0 |
| >40 | 3 | 2 | 0.971 |

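Attribute Selection By Information Gain Computation

$$E(\text{age}) = \frac{5}{14} I(2,3) + \frac{4}{14} I(4,0) + \frac{5}{14} I(3,2) = 0.694$$

• The term $\frac{5}{14} I(2,3)$ means “age <=30” has 5 out of 14 samples, with 2 yes and 3 no. Hence:

$$\text{Gain}(\text{age}) = I(p, n) - E(\text{age}) = 0.940 - 0.694 = 0.246$$

Similarly:

$$\text{Gain}(\text{income}) = 0.029$$

$$\text{Gain}(\text{student}) = 0.151$$

$$\text{Gain}(\text{credit\_rating}) = 0.048$$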
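The gains for all four attributes can be recomputed from the 14-record training dataset shown earlier. The sketch below is one possible way to do it; the names `ROWS`, `COLUMNS`, `info` and `gain` are hypothetical, and the class label is assumed to be the last column.

```python
import math
from collections import Counter, defaultdict

COLUMNS = ["Age", "Income", "Student", "CreditRating", "BuysComputer"]
ROWS = [
    ("<=30", "high", "no", "fair", "no"),
    ("<=30", "high", "no", "excellent", "no"),
    ("31 - 40", "high", "no", "fair", "yes"),
    (">40", "medium", "no", "fair", "yes"),
    (">40", "low", "yes", "fair", "yes"),
    (">40", "low", "yes", "excellent", "no"),
    ("31 - 40", "low", "yes", "excellent", "yes"),
    ("<=30", "medium", "no", "fair", "no"),
    ("<=30", "low", "yes", "fair", "yes"),
    (">40", "medium", "yes", "fair", "yes"),
    ("<=30", "medium", "yes", "excellent", "yes"),
    ("31 - 40", "medium", "no", "excellent", "yes"),
    ("31 - 40", "high", "yes", "fair", "yes"),
    (">40", "medium", "no", "excellent", "no"),
]


def info(labels):
    """Entropy in bits of a list of class labels."""
    n = len(labels)
    return -sum(c / n * math.log2(c / n) for c in Counter(labels).values())


def gain(rows, attr_index, class_index=-1):
    """Information gain obtained by splitting `rows` on one attribute."""
    labels = [row[class_index] for row in rows]
    groups = defaultdict(list)
    for row in rows:
        groups[row[attr_index]].append(row[class_index])
    expected = sum(len(g) / len(rows) * info(g) for g in groups.values())
    return info(labels) - expected


gains = {name: gain(ROWS, i) for i, name in enumerate(COLUMNS[:-1])}
best = max(gains, key=gains.get)  # attribute with the largest gain
for name, value in gains.items():
    print(f"Gain({name}) = {value:.4f}")
```

Computed without intermediate rounding, the values differ from the slide figures only in the third decimal place, and Age comes out as the attribute with the largest gain.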
