
Binary Logistic Regression

Binary logistic regression is a regression model used when the target variable is binary (it can take only two values, such as 0 or 1) and is predicted from one or more independent variables that can be continuous or categorical. It assumes that the dependent variable is dichotomous, that continuous independent variables are linearly related to the logit of the dependent variable, and that the predictors are free of multicollinearity. Unlike linear regression, logistic regression requires neither a linear relationship between the dependent and independent variables nor normally distributed residuals.

Uploaded by Osama Riaz

Copyright © All Rights Reserved


Binary Logistic Regression


Binary logistic regression (LR) is a regression model in which the target variable is binary, that is, it can take only two values, 0 or 1.

The dependent variable is predicted from one or more independent variables that can be either continuous or categorical.

If the dependent variable has more than two categories, we use multinomial logistic regression instead.
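The definition above can be sketched in plain Python: the model passes a linear combination of the predictors through the logistic (sigmoid) function to obtain P(y = 1), and here the coefficients are estimated by batch gradient descent on the log-loss. This is a minimal illustration under stated assumptions, not the method statistical packages use (they typically maximize the likelihood with Newton-type methods such as iteratively reweighted least squares); the function names, learning rate, epoch count and toy data are all made up for the example.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(z: float) -> float:
    """Logistic function 1 / (1 + e^(-z)), written to avoid overflow for large |z|."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """P(y = 1 | x) = sigmoid(b0 + b1*x1 + ... + bk*xk)."""
    z = bias + sum(w * xi for w, xi in zip(weights, x))
    return sigmoid(z)


def fit(X: Sequence[Sequence[float]], y: Sequence[int],
        lr: float = 0.1, epochs: int = 5000) -> Tuple[List[float], float]:
    """Estimate coefficients by batch gradient descent on the mean log-loss."""
    n, k = len(X), len(X[0])
    weights, bias = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for xi, yi in zip(X, y):
            # d(log-loss)/dz for one observation is (predicted p - observed y)
            err = predict_proba(weights, bias, xi) - yi
            for j in range(k):
                grad_w[j] += err * xi[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


# Hypothetical toy data: one continuous predictor, outcome coded 0/1
X = [[0.5], [1.0], [1.5], [2.0], [3.0], [3.5], [4.0], [4.5]]
y = [0, 0, 0, 1, 0, 1, 1, 1]
weights, bias = fit(X, y)
print("coefficient:", weights, "intercept:", bias)
print("P(y=1 | x=0):", predict_proba(weights, bias, [0.0]))
print("P(y=1 | x=5):", predict_proba(weights, bias, [5.0]))
```

A predicted probability above 0.5 is usually read as class 1, though the cut-off is a choice rather than part of the model.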

Binary Logistic Regression

For example:

Drug use can be predicted based on prior criminal convictions, drug use amongst friends, income, age and gender. Here the dependent variable is "drug use", measured on a dichotomous scale ("yes" or "no"), and there are five independent variables: "prior criminal convictions", "drug use amongst friends", "income", "age" and "gender".
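Before fitting, each respondent in the drug-use example has to be turned into numbers. The sketch below is one hypothetical coding: the slide does not say how each variable is measured, so the field types (a conviction count, a yes/no flag for friends, raw income and age, and a 0/1 dummy for gender with "male" as the reference group) and the names `Respondent`, `encode_predictors` and `encode_outcome` are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical coding choices; not specified by the original example.
GENDER_CODES = {"male": 0.0, "female": 1.0}  # "male" is the reference group
OUTCOME_CODES = {"no": 0, "yes": 1}          # dependent variable "drug use"


@dataclass
class Respondent:
    prior_convictions: int   # number of prior criminal convictions
    friends_use_drugs: bool  # drug use amongst friends (yes/no)
    income: float            # income, in whatever unit the study records
    age: float               # age in years
    gender: str              # "male" or "female"


def encode_predictors(r: Respondent) -> List[float]:
    """Return the five independent variables as a numeric vector x1..x5."""
    return [
        float(r.prior_convictions),
        1.0 if r.friends_use_drugs else 0.0,
        float(r.income),
        float(r.age),
        GENDER_CODES[r.gender.lower()],  # raises KeyError for unexpected labels
    ]


def encode_outcome(drug_use: str) -> int:
    """Code the dichotomous outcome: "yes" -> 1, "no" -> 0."""
    return OUTCOME_CODES[drug_use.lower()]


x = encode_predictors(Respondent(2, True, 30000.0, 25.0, "Female"))
print(x, encode_outcome("Yes"))
```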

Assumptions

1. Your dependent variable should be measured on a dichotomous scale. Examples of dichotomous variables include gender (two groups: male and female) and presence of heart disease (two groups: yes or no).

However, if your dependent variable was measured on a continuous scale instead, you will need to carry out multiple regression.
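Assumption 1 can be checked mechanically before any model is fitted. A minimal sketch, assuming the outcome arrives as a list of labels and the analyst names which label counts as "1"; the helper `to_binary` is hypothetical, not part of any statistics package.

```python
from typing import Hashable, List, Sequence


def to_binary(values: Sequence[Hashable], positive: Hashable) -> List[int]:
    """Code a dichotomous variable as 1 (the `positive` level) or 0 (the other level).

    Raises ValueError if the variable does not have exactly two observed levels,
    in which case binary logistic regression is not the right model.
    """
    levels = set(values)
    if len(levels) != 2:
        raise ValueError(f"expected exactly 2 distinct values, found {len(levels)}")
    if positive not in levels:
        raise ValueError(f"{positive!r} is not one of the observed levels")
    return [1 if v == positive else 0 for v in values]


print(to_binary(["yes", "no", "yes", "no"], positive="yes"))
```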

Assumptions

2. You have one or more independent variables, which can be either continuous (i.e., an interval or ratio variable) or categorical (i.e., an ordinal or nominal variable).

Examples of continuous variables include revision time (measured in hours), intelligence (measured using IQ score), exam performance (measured from 0 to 100), weight (measured in kg), and so forth.
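Continuous predictors can enter the model as they are, but a categorical predictor with k levels is usually entered as k - 1 indicator (dummy) variables, with one level held out as the reference. The sketch below treats the categories as nominal (an ordinal variable could alternatively be entered as numeric scores); `dummy_code` is a hypothetical helper name.

```python
from typing import Hashable, List, Sequence, Tuple


def dummy_code(values: Sequence[Hashable],
               reference: Hashable) -> Tuple[List[Hashable], List[List[int]]]:
    """Turn a categorical predictor with k levels into k - 1 indicator (0/1) columns.

    The reference level gets no column, so each indicator's coefficient is
    interpreted relative to that reference level.
    """
    if reference not in values:
        raise ValueError(f"reference level {reference!r} not found")
    levels = sorted(set(values) - {reference}, key=str)
    rows = [[1 if v == level else 0 for level in levels] for v in values]
    return levels, rows


columns, rows = dummy_code(["red", "blue", "green", "blue"], reference="red")
print(columns, rows)
```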


Assumptions

4. There needs to be a linear relationship between any continuous independent variables and the logit transformation of the dependent variable.
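The logit transformation is ln(p / (1 - p)). One informal way to examine assumption 4 is to sort the data by a continuous predictor, split it into bins, and compute each bin's empirical logit; if those values lie roughly on a straight line when plotted against the bin means, the linearity assumption is plausible. The sketch below assumes equal-count bins and adds 0.5 to each count so that a bin containing only 0s or only 1s still gives a finite value. A formal check that is commonly used, the Box-Tidwell test, is not shown.

```python
import math
from typing import List, Sequence, Tuple


def logit(p: float) -> float:
    """Log-odds ln(p / (1 - p)); defined only for 0 < p < 1."""
    return math.log(p / (1.0 - p))


def empirical_logits(x: Sequence[float], y: Sequence[int],
                     n_bins: int = 5) -> List[Tuple[float, float]]:
    """Return (mean of x, empirical logit of y) for roughly equal-count bins of x.

    Adding 0.5 to both counts keeps the value finite when a bin is all 0s or all 1s.
    """
    pairs = sorted(zip(x, y))
    if not pairs:
        return []
    size = math.ceil(len(pairs) / n_bins)
    points = []
    for start in range(0, len(pairs), size):
        chunk = pairs[start:start + size]
        n = len(chunk)
        k = sum(yi for _, yi in chunk)  # number of 1s in the bin
        mean_x = sum(xi for xi, _ in chunk) / n
        points.append((mean_x, math.log((k + 0.5) / (n - k + 0.5))))
    return points


print(empirical_logits(list(range(1, 11)), [0, 0, 0, 0, 1, 0, 1, 1, 1, 1], n_bins=2))
```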

There should be no multicollinearity. Multicollinearity occurs when the data contain highly correlated independent variables. This is a problem because it reduces the precision of the estimated coefficients, which weakens the statistical power of the logistic regression model.
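A common way to quantify multicollinearity is the variance inflation factor (VIF): for each predictor, VIF = 1 / (1 - R²), where R² comes from regressing that predictor on all the other predictors. With exactly two predictors that R² is simply the squared Pearson correlation, which keeps the sketch short. A frequently quoted rule of thumb treats VIF above 10 (some authors use 5) as a warning sign; these thresholds are conventions, not something the slides specify.

```python
import math
from typing import Sequence


def pearson_r(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equally long samples."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def vif_two_predictors(a: Sequence[float], b: Sequence[float]) -> float:
    """Variance inflation factor when the model has exactly two predictors.

    Regressing one predictor on the other gives R^2 = r^2, so VIF = 1 / (1 - r^2)
    for both predictors. Perfectly correlated predictors give an infinite VIF.
    """
    r = pearson_r(a, b)
    if abs(r) >= 1.0 - 1e-12:
        return math.inf
    return 1.0 / (1.0 - r * r)


print(vif_two_predictors([1, 2, 3, 4], [1, -1, -1, 1]))  # uncorrelated predictors
print(vif_two_predictors([1, 2, 3, 4], [2, 4, 6, 8.5]))  # nearly collinear predictors
```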

There should be an adequate number of observations for each independent variable in the dataset to avoid creating an overfit model.
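"Adequate" is often made concrete with an events-per-variable (EPV) count: the number of observations in the rarer outcome class divided by the number of predictors. A widely cited rule of thumb asks for at least 10 events per predictor, so the five-predictor drug-use example would need at least 50 respondents in its smaller group. The threshold is a convention assumed here rather than taken from the slides, and the helper names are hypothetical.

```python
from typing import Sequence


def events_per_variable(y: Sequence[int], n_predictors: int) -> float:
    """Size of the rarer outcome class (0 or 1) divided by the number of predictors."""
    ones = sum(1 for v in y if v == 1)
    zeros = sum(1 for v in y if v == 0)
    return min(ones, zeros) / n_predictors


def enough_observations(y: Sequence[int], n_predictors: int,
                        min_epv: float = 10.0) -> bool:
    """Compare EPV against a rule-of-thumb threshold (10 by default)."""
    return events_per_variable(y, n_predictors) >= min_epv


outcome = [1] * 30 + [0] * 70  # 30 "events" in the rarer class
print(events_per_variable(outcome, 5), enough_observations(outcome, 5))
```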

Differences

Logistic regression does not require a linear relationship between the dependent and independent variables. However, it still needs the continuous independent variables to be linearly related to the log-odds of the outcome.

Homoscedasticity (constant variance) is required in linear regression but not in logistic regression.

The error terms (residuals) must be normally distributed in linear regression, but this is not required in logistic regression.
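Both differences follow from the outcome being coded 0/1. For a binary outcome with probability p, the variance is p(1 - p), so it changes with the predicted probability instead of staying constant; and for any fitted p the raw residual y - p can take only two values, -p or 1 - p, so it cannot be normally distributed. A small sketch with illustrative helper names:

```python
from typing import Tuple


def bernoulli_variance(p: float) -> float:
    """Variance of a 0/1 outcome with P(Y = 1) = p."""
    return p * (1.0 - p)


def possible_residuals(p: float) -> Tuple[float, float]:
    """Raw residuals y - p when y = 0 and when y = 1, for a fitted probability p."""
    return (0.0 - p, 1.0 - p)


for p in (0.1, 0.5, 0.9):
    print(p, bernoulli_variance(p), possible_residuals(p))
```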

Similarities

Absence of multicollinearity

Observations are independent of each other

You might also like

- Applied Longitudinal Analysis Lecture NotesNo ratings yetApplied Longitudinal Analysis Lecture Notes475 pages

- STATA EXAM (2017) : Evidence For Policy DesignNo ratings yetSTATA EXAM (2017) : Evidence For Policy Design5 pages

- Two-Way (Between-Groups) ANOVA: Statstutor Community ProjectNo ratings yetTwo-Way (Between-Groups) ANOVA: Statstutor Community Project4 pages

- Chapter-13: Analysis of Variance TechniquesNo ratings yetChapter-13: Analysis of Variance Techniques24 pages

- Handleiding Spss Multinomial Logit RegressionNo ratings yetHandleiding Spss Multinomial Logit Regression35 pages

- Statistical Computing Using Statistical Computing UsingNo ratings yetStatistical Computing Using Statistical Computing Using128 pages

- Parametric Statistical Analysis in SPSSNo ratings yetParametric Statistical Analysis in SPSS56 pages

- Regression: Knowledge For The Benefit of HumanityNo ratings yetRegression: Knowledge For The Benefit of Humanity46 pages

- Predictor Variables of Cyberloafing and Perceived Organizational Acceptance (UnpublishedNo ratings yetPredictor Variables of Cyberloafing and Perceived Organizational Acceptance (Unpublished5 pages

- Types of Data & The Scales of Measurement: Data at The Highest Level: Qualitative and QuantitativeNo ratings yetTypes of Data & The Scales of Measurement: Data at The Highest Level: Qualitative and Quantitative7 pages

- An Introduction To Factor Analysis: Philip HylandNo ratings yetAn Introduction To Factor Analysis: Philip Hyland34 pages

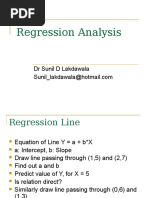

- Regression and Multiple Regression Analysis100% (1)Regression and Multiple Regression Analysis21 pages

- One-Way Repeated Measures Anova: Daniel BoduszekNo ratings yetOne-Way Repeated Measures Anova: Daniel Boduszek15 pages

- Assignment-Based Subjective Questions/AnswersNo ratings yetAssignment-Based Subjective Questions/Answers3 pages

- Sample Size for Analytical Surveys, Using a Pretest-Posttest-Comparison-Group DesignFrom EverandSample Size for Analytical Surveys, Using a Pretest-Posttest-Comparison-Group DesignNo ratings yet

- Get Statistics For Business & Economics 13th Edition (Ebook PDF) Free All Chapters100% (2)Get Statistics For Business & Economics 13th Edition (Ebook PDF) Free All Chapters41 pages

- Zlib - Pub - Swarm Intelligence Methods For Statistical RegressionNo ratings yetZlib - Pub - Swarm Intelligence Methods For Statistical Regression137 pages

- Buy Ebook (Ebook PDF) Statistics Unplugged 4th Edition by Sally Caldwell Cheap Price100% (8)Buy Ebook (Ebook PDF) Statistics Unplugged 4th Edition by Sally Caldwell Cheap Price41 pages

- Difference Between Literature Survey and Literature ReviewNo ratings yetDifference Between Literature Survey and Literature Review8 pages

- (Ebook) A Concise Handbook of the Indian Economy in the 21st Century by Ashima Goyal ISBN 9780199098163, 9780199496464, 0199098166, 0199496463 2024 scribd download100% (4)(Ebook) A Concise Handbook of the Indian Economy in the 21st Century by Ashima Goyal ISBN 9780199098163, 9780199496464, 0199098166, 0199496463 2024 scribd download65 pages

- Statistics and Probability: Quarter 4 - Module100% (2)Statistics and Probability: Quarter 4 - Module25 pages

- ED H14b Cumualtive Frequency, Box Plots, HistogramNo ratings yetED H14b Cumualtive Frequency, Box Plots, Histogram2 pages

- Bayesian Model Averaging For Linear Regression ModelsNo ratings yetBayesian Model Averaging For Linear Regression Models14 pages

- Statistics For Economics Module TeachingNo ratings yetStatistics For Economics Module Teaching175 pages

- This Study Resource Was: MC Qu. 9-54 The Construction Manager For ABC..No ratings yetThis Study Resource Was: MC Qu. 9-54 The Construction Manager For ABC..3 pages

- Pengaruh Karakteristik Pemerintah Daerah Dan OpiniNo ratings yetPengaruh Karakteristik Pemerintah Daerah Dan Opini20 pages

- Dummy Variable Regression and Oneway ANOVA Models Using SASNo ratings yetDummy Variable Regression and Oneway ANOVA Models Using SAS11 pages

- Demand Forecasting and Inventory Control A Simulation Study On Automotive Spare Parts PDFNo ratings yetDemand Forecasting and Inventory Control A Simulation Study On Automotive Spare Parts PDF16 pages

- Two-Way (Between-Groups) ANOVA: Statstutor Community ProjectTwo-Way (Between-Groups) ANOVA: Statstutor Community Project

- Introduction To Non Parametric Methods Through R SoftwareFrom EverandIntroduction To Non Parametric Methods Through R Software

- Statistical Computing Using Statistical Computing UsingStatistical Computing Using Statistical Computing Using

- Predictor Variables of Cyberloafing and Perceived Organizational Acceptance (UnpublishedPredictor Variables of Cyberloafing and Perceived Organizational Acceptance (Unpublished

- Types of Data & The Scales of Measurement: Data at The Highest Level: Qualitative and QuantitativeTypes of Data & The Scales of Measurement: Data at The Highest Level: Qualitative and Quantitative

- Sample Size for Analytical Surveys, Using a Pretest-Posttest-Comparison-Group DesignFrom EverandSample Size for Analytical Surveys, Using a Pretest-Posttest-Comparison-Group Design

- Get Statistics For Business & Economics 13th Edition (Ebook PDF) Free All ChaptersGet Statistics For Business & Economics 13th Edition (Ebook PDF) Free All Chapters

- Zlib - Pub - Swarm Intelligence Methods For Statistical RegressionZlib - Pub - Swarm Intelligence Methods For Statistical Regression

- Buy Ebook (Ebook PDF) Statistics Unplugged 4th Edition by Sally Caldwell Cheap PriceBuy Ebook (Ebook PDF) Statistics Unplugged 4th Edition by Sally Caldwell Cheap Price

- Difference Between Literature Survey and Literature ReviewDifference Between Literature Survey and Literature Review

- (Ebook) A Concise Handbook of the Indian Economy in the 21st Century by Ashima Goyal ISBN 9780199098163, 9780199496464, 0199098166, 0199496463 2024 scribd download(Ebook) A Concise Handbook of the Indian Economy in the 21st Century by Ashima Goyal ISBN 9780199098163, 9780199496464, 0199098166, 0199496463 2024 scribd download

- ED H14b Cumualtive Frequency, Box Plots, HistogramED H14b Cumualtive Frequency, Box Plots, Histogram

- Bayesian Model Averaging For Linear Regression ModelsBayesian Model Averaging For Linear Regression Models

- This Study Resource Was: MC Qu. 9-54 The Construction Manager For ABC..This Study Resource Was: MC Qu. 9-54 The Construction Manager For ABC..

- Pengaruh Karakteristik Pemerintah Daerah Dan OpiniPengaruh Karakteristik Pemerintah Daerah Dan Opini

- Dummy Variable Regression and Oneway ANOVA Models Using SASDummy Variable Regression and Oneway ANOVA Models Using SAS

- Demand Forecasting and Inventory Control A Simulation Study On Automotive Spare Parts PDFDemand Forecasting and Inventory Control A Simulation Study On Automotive Spare Parts PDF