Yen-Chen Lin

Research Scientist

NVIDIA

Email: yenchenl [at] nvidia (dot) com

Twitter / Google Scholar / GitHub

I am a research scientist at NVIDIA. I am interested in generative AI.

Previously, I was a Ph.D. student at MIT working with Phillip Isola and Alberto Rodriguez.

MIRA: Mental Imagery for Robotic Affordances

NeRF lets us synthesize novel orthographic views that work well with pixel-wise algorithms for robotic manipulation.

NeRF-Supervision: Learning Dense Object Descriptors from Neural Radiance Fields

Generating correspondences with Neural Radiance Fields (NeRF) enables robotic manipulation of objects (e.g., forks) that can't be reconstructed by RGB-D cameras or multi-view stereo.

iNeRF: Inverting Neural Radiance Fields for Pose Estimation

Performing differentiable rendering with Neural Radiance Fields (NeRF) enables object pose estimation and camera tracking.

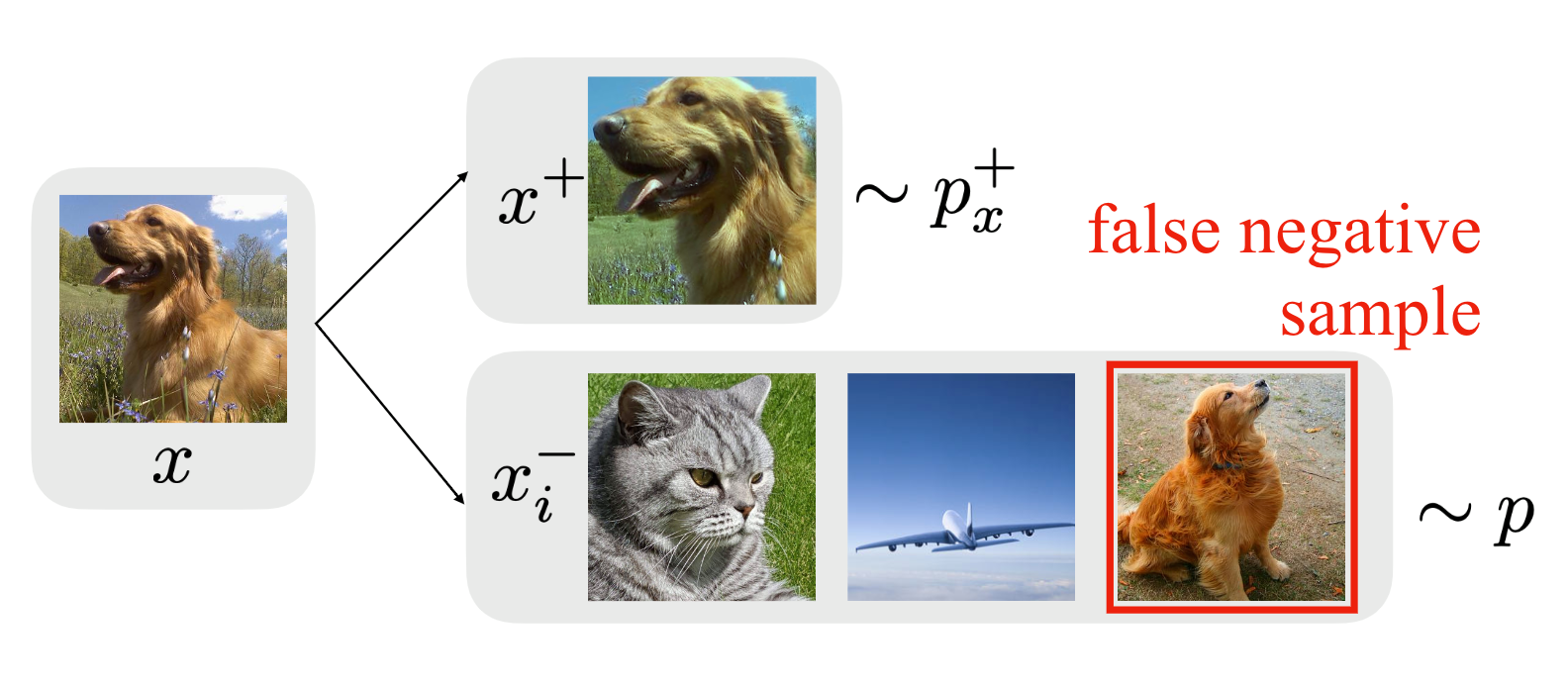

Learning to See before Learning to Act: Visual Pre-training for Manipulation

Transferring pre-trained vision models to grasping improves both sample efficiency and accuracy.

Experience-embedded Visual Foresight

Meta-learning video prediction models allows a robot to adapt to the visual dynamics of new objects.

Omnipush: accurate, diverse, real-world dataset of pushing dynamics with RGBD images

A dataset for meta-learning dynamics models.

It consists of 250 pushes for each of 250 objects, all recorded with an RGB-D camera and a high-precision tracking system.

Tactics for Adversarial Attack on Deep Reinforcement Learning Agents

Strategies for adversarial attacks on deep RL agents.

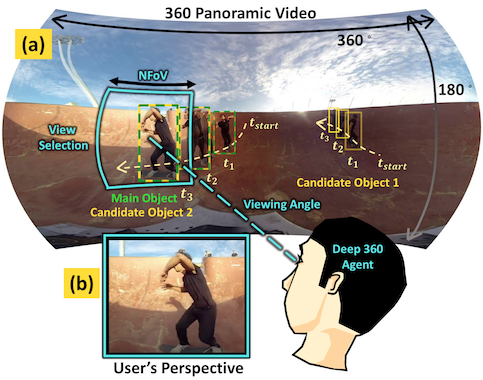

Deep 360 Pilot: Learning a Deep Agent for Piloting through 360° Sports Videos

CVPR 2017

Oral Presentation

An agent that learns to guide users where to look in 360° sports videos.

Tell Me Where to Look: Investigating Ways for Assisting Focus in 360° Video

CHI 2017

A study of how to assist users watching 360° videos.