AI development refuses to stand still. OpenAI now has two models in its latest model family: GPT-4o and GPT-4o mini. Both have already rolled out to a lot of ChatGPT users, so let's dig in and see what they can do. (And yes, the capitalization upsets me too.)


What is GPT-4o?

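GPT-4o is the latest family of AI models from OpenAI, the company behind ChatGPT, DALL·E, and the whole AI boom we're in the middle of. Both models are multimodal, meaning they're built to natively handle more than just text: GPT-4o works with text, audio, and images, while GPT-4o mini launched with text and image support, with audio promised later.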

GPT-4o offers GPT-4-level performance (or better) at much faster speeds and lower costs, while GPT-4o mini is a smaller language model that outperforms GPT-3.5 Turbo with even faster speeds and lower costs.

GPT-4o also marks the first time free ChatGPT users can use a GPT-4-class model (until now, they've been limited to GPT-3.5 models).

What is GPT-4o mini?

GPT-4o mini is a smaller language model from OpenAI. It's faster and cheaper than GPT-4o, while still being more powerful than previous models like GPT-3.5 Turbo.

GPT-4o vs. GPT-4: What can GPT-4o do?

The "o" in GPT-4o stands for "omni." That refers to the fact that, in addition to taking text inputs, it can also natively understand audio and image inputs—and it can reply with any combination of text, images, and audio. The key here is that this is all being done by the one model, rather than multiple separate models that are working together.

Take ChatGPT's previous version of voice mode. You could ask it questions, and it would reply with audio, but it took ages to respond because it chained three separate AI models together. First, a speech-to-text model converted what you said to text, then GPT-3.5 or GPT-4 processed that text, and finally a text-to-speech model turned ChatGPT's response back into audio. According to OpenAI, the average response time was 2.8 seconds with GPT-3.5 and 5.4 seconds with GPT-4. A neat demo, but not particularly practical.

But now, because GPT-4o is natively multimodal and is able to handle the audio input, natural language processing, and audio output itself, ChatGPT is able to reply in an average of 0.32 seconds—and you can really feel the speed. Even text and image queries are noticeably faster, especially with GPT-4o mini.

If this speed were coming at the cost of performance, that would be one thing. But OpenAI claims GPT-4o matches GPT-4 Turbo on English text and code benchmarks, while surpassing it on non-English language, vision, and audio benchmarks. Similarly, GPT-4o mini outperforms GPT-3.5 Turbo on textual intelligence and multimodal reasoning across major benchmarks. One upgrade in particular is the new tokenizer, which splits text into small chunks (tokens) that the model can work with mathematically. It's much more efficient for languages like Tamil, Hindi, Arabic, and Vietnamese: the same text takes fewer tokens, which leaves room for more complex prompts and allows for better translation between languages.
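To make "more efficient" concrete: a tokenizer whose vocabulary includes more pieces of a given language can cover the same text with fewer tokens. The sketch below is a toy illustration under stated assumptions, not OpenAI's tokenizer. It uses greedy longest-match splitting (real GPT tokenizers use byte-pair encoding), and both vocabularies, `old_vocab` and `new_vocab`, are hypothetical.

```python
# Toy tokenizer: greedy longest-match splitting. This is NOT OpenAI's tokenizer;
# real GPT tokenizers use byte-pair encoding. Both vocabularies are hypothetical.

def tokenize(text: str, vocab: set) -> list:
    """Split text into the longest vocabulary pieces available; any character
    not covered by the vocabulary becomes its own single-character token."""
    max_len = max((len(piece) for piece in vocab), default=1)
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest candidate first, shrinking until something matches.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens

text = "नमस्ते दुनिया"  # "Hello, world" in Hindi

# Hypothetical "old" vocabulary: only a couple of short Hindi fragments.
old_vocab = {"स्", "ते"}
# Hypothetical "new" vocabulary: whole common Hindi words.
new_vocab = {"नमस्ते", "दुनिया"}

old_tokens = tokenize(text, old_vocab)
new_tokens = tokenize(text, new_vocab)
print(len(old_tokens), len(new_tokens))
```

Fewer tokens for the same sentence means lower API costs and more room in the model's context window, which is the practical upside of a more efficient tokenizer.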

It's also possible to interrupt the model when it's speaking to you, though that feature is rolling out over the coming months (I still had to tap to interrupt when I was testing it). OpenAI also says that GPT-4o has a better capacity to speak with emotion, as well as understand your emotional state from your tone of voice.

The situation is similar with image input. GPT-4o responds noticeably faster to questions about images and is quicker at processing what's in them, like handwriting. Being able to switch between text, voice, and images this quickly makes ChatGPT feel like a much more useful real-world tool.

And this is all on top of GPT-4's existing features. You can still use it for brainstorming, summarizing, data analysis, market research, cold outreach—the list goes on.

How does GPT-4o work?

The GPT-4o models work similarly to the other GPT models, but their neural networks were trained on images and audio at the same time as text, so they've adapted to process them as both inputs and outputs. Unfortunately, we're now at the point of AI corporate competitiveness where the interesting details and advances are no longer being made public. Still, there are a couple of things we can infer from other multimodal models like Google Gemini, as well as OpenAI's previous GPT models.

The GPT in GPT-4o still stands for Generative Pre-trained Transformer, which means the models were developed and operate similarly to the other GPT models. Generative pre-training is the process where an AI model is given a few ground rules and heaps of unstructured data (for text, the core task is predicting what comes next) and allowed to draw its own connections. In addition to the text training data used for previous AI models, the GPT-4o models were presumably given billions of images and tens of thousands of hours of audio to parse at the same time. This would have allowed their neural networks to connect the word "cow" not only to related words, but also to what cows look like (four legs, udders, maybe horns) and what they sound like ("moo").
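For text, generative pre-training boils down to a simple self-supervised task: predict the next token, using the text itself as the answer key. The toy sketch below shows that idea with word-pair counts instead of a neural network, so treat it as an illustration of the principle, not of how GPT models are actually built. `pretrain_bigrams`, `generate`, and the tiny corpus are all made up for this example.

```python
from collections import Counter, defaultdict

def pretrain_bigrams(corpus: str) -> dict:
    """Count, for each word, which words follow it. No human labels are needed:
    the text itself supplies the 'next word' targets."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(model: dict, start: str, length: int) -> list:
    """Greedily extend `start` by always picking the most frequent next word."""
    out = [start]
    for _ in range(length):
        candidates = model.get(out[-1])
        if not candidates:
            break  # never saw any word after this one
        # Ties go to the follower seen first in the corpus.
        out.append(candidates.most_common(1)[0][0])
    return out

corpus = "the cow says moo . the cow has four legs . the dog says woof ."
model = pretrain_bigrams(corpus)
print(generate(model, "cow", 2))  # → ['cow', 'says', 'moo']
```

A real model replaces the counting with billions of learned parameters and looks at far more than one previous word, but the training signal is the same kind: no human labels, just text predicting text.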

Similarly, GPT-4o models use the transformer architecture that most modern large language models also use. While a bit too complex to dive into right here, I've explained it in more detail in this deep dive on how ChatGPT works. The main thing to understand is that the transformer's attention mechanism lets GPT-4o and GPT-4o mini weigh which parts of a long and complex prompt matter most, and keep track of information from earlier prompts in the same conversation.
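OpenAI hasn't published GPT-4o's architecture details, but the core operation of the standard transformer is scaled dot-product attention: each token scores every other token for relevance, and those scores become weights for an average. Here's a minimal pure-Python sketch for a single query. The vectors are hand-picked toy values (real models use much larger learned vectors, many attention heads, and run on GPUs), and the function names are just for illustration.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    largest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - largest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query over a sequence of tokens."""
    d = len(query)
    # Relevance of each token to the query: dot product, scaled by sqrt(d).
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    # Output: the value vectors averaged according to those weights.
    output = [
        sum(w * value[j] for w, value in zip(weights, values))
        for j in range(len(values[0]))
    ]
    return weights, output

# Three toy tokens with hand-picked 2-D vectors (real models learn these).
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
values = [[10.0, 0.0], [0.0, 10.0], [10.0, 0.0]]
query = [1.0, 0.0]  # "looking for" tokens that resemble the first one

weights, output = attention(query, keys, values)
print([round(w, 3) for w in weights], [round(x, 3) for x in output])
```

The first and third tokens match the query, so they get the largest weights and dominate the output. That "focus on what's relevant" behavior is what lets the model pick out the important parts of a long prompt.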

On top of all this, OpenAI has fine-tuned its models using human guidance to make them as safe and useful as possible. OpenAI doesn't want to accidentally create Skynet, so they go to a lot of effort before releasing a new model to make it hard for people to make it misbehave. The latest AIs are far less likely to start spouting bigoted nonsense unprompted.

How good is GPT-4o? Does it live up to the hype?

So, how good are GPT-4o and GPT-4o mini? Well, in my testing, GPT-4o's multimodal features were hit and miss, though very impressive when they worked.

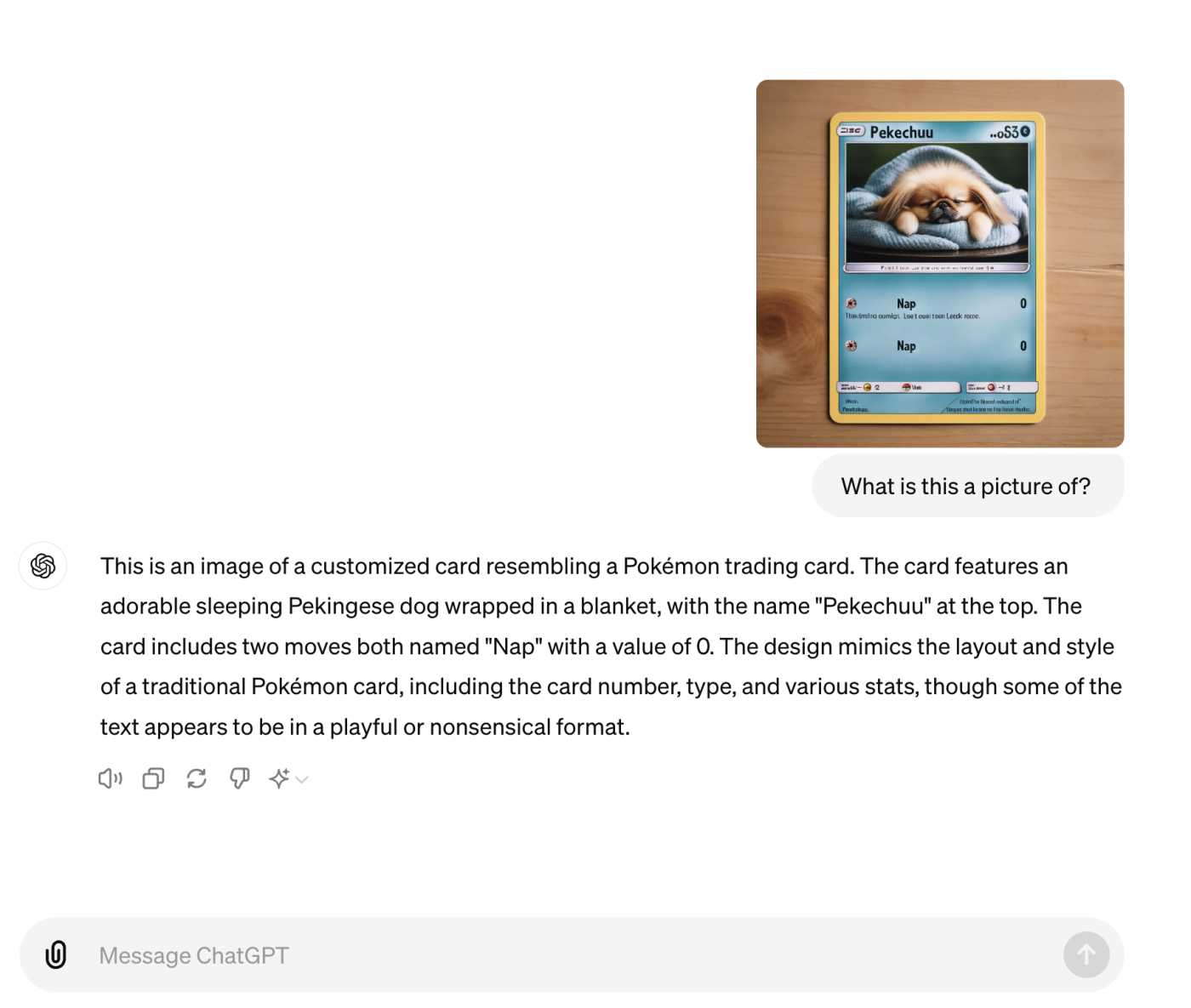

Vision and audio are now a significantly bigger part of the overall ChatGPT experience, especially if you use the mobile app. Of course, this introduces all sorts of new avenues for ChatGPT to hallucinate and otherwise get things wrong. But when things go well, it's awesome. On the iOS app, I was able to ask ChatGPT to look up Red Rum the horse and turn him into a Pokémon character—all without having to use any kind of text interface.

Things like this really do represent a new way for ChatGPT and AI assistants in general to be a more useful part of people's lives. (Not that a Pokémon Red Rum is particularly useful, but you get the idea.)

One consistent problem I found was confidence. In my testing, GPT-4o was confidently wrong on many occasions, in situations where it really shouldn't have been. Here's an example.

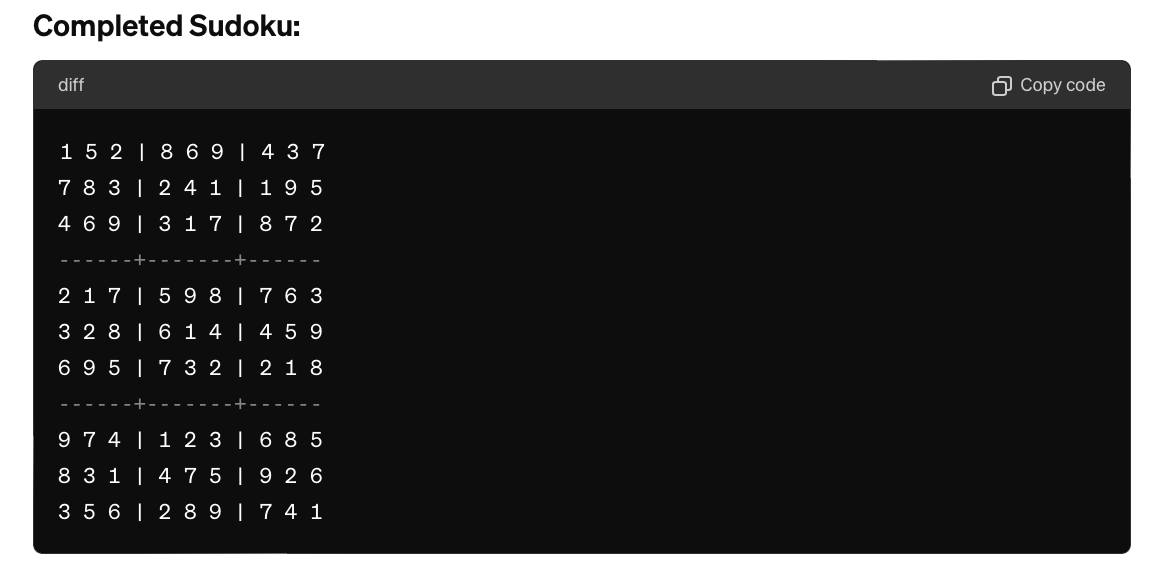

In the past, ChatGPT has always failed to solve Sudoku puzzles for me. It's either failed to analyze them, thrown an error, or flat-out refused. But with GPT-4o activated, ChatGPT was more than willing to take a chance.

Unfortunately, not only was it unable to "see" the grid correctly, but it was also happy to insert random numbers in random locations.

And then it attempted to solve the puzzle it misunderstood. It managed to get the first box and row right, but after that, it all fell apart—yet it was still prepared to present me with a supposedly completed puzzle.

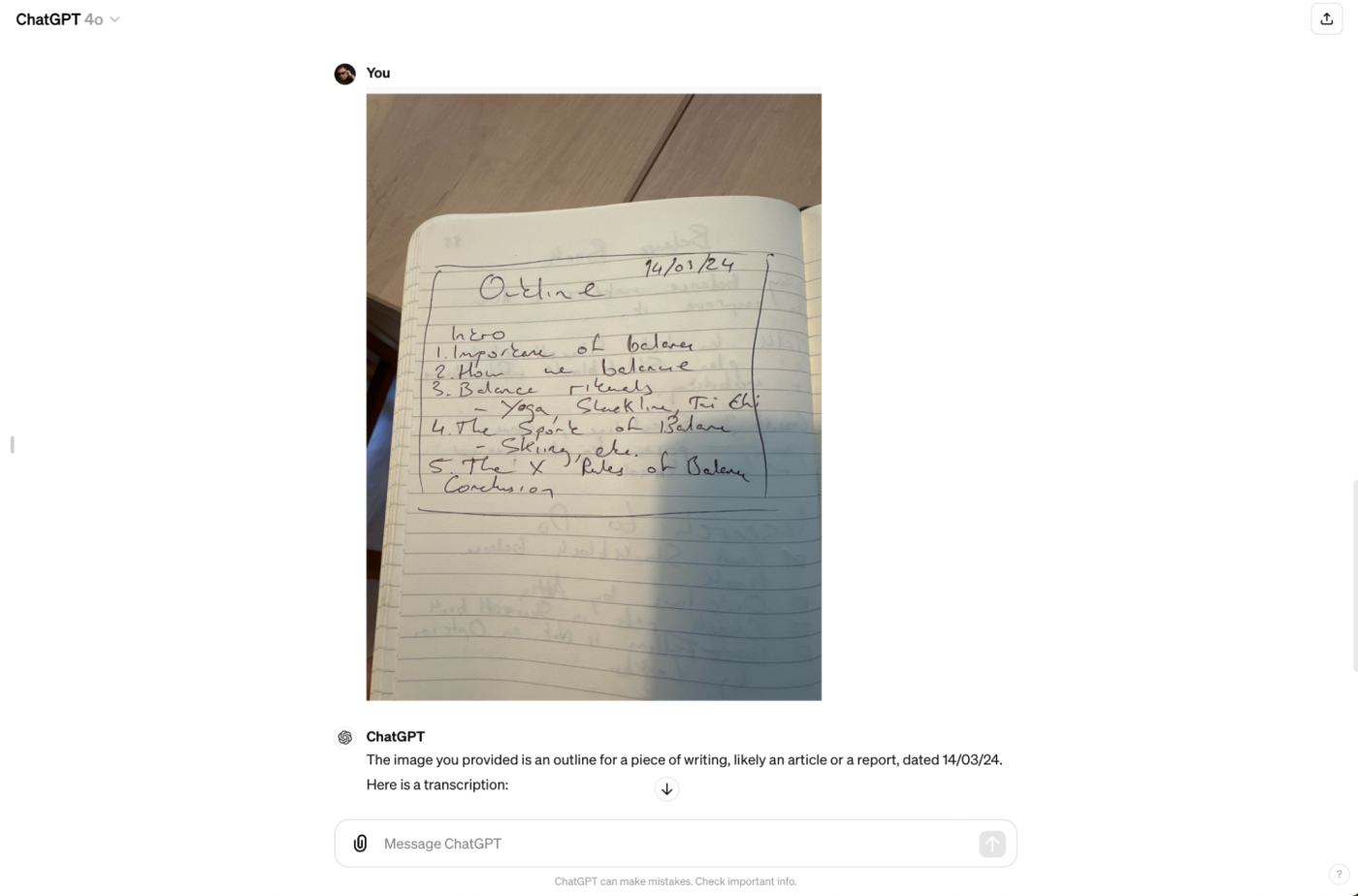

Similarly, when I asked it to parse my handwriting, if there was a section it couldn't read, it just inserted a plausible word rather than saying that it couldn't understand what was written there.

For example, in a handwritten outline for an article I'm researching, it changed the point "Balance Rituals" to "Balance In Fitness." The words are similarly relevant, but not at all the same in meaning or in how they look on the page.

These frequent near misses are almost harder to deal with than the model refusing to answer a question or being completely wrong all the time. Having said that, it really was shockingly good at understanding my ridiculous handwriting.

GPT-4o mini is theoretically less powerful, but it really depends on what you're asking it to do. For many text and image prompts, its responses will be just as useful as GPT-4o's. If you're consistently pushing the limits of what AI models are capable of, you might find more holes, but it's definitely a good tool for most people.

One last note: the headline audio features, like being able to interrupt the model without tapping or having it speak with more emotion, aren't coming until the fall, so I wasn't able to test them. But they seem unlikely to cause the same kinds of issues: ChatGPT's audio transcription was already pretty good at handling my Irish accent in the older GPT-4 voice mode.

How much does GPT-4o cost?

One of the biggest announcements for GPT-4o and GPT-4o mini is that they're free for all ChatGPT users, though there is an unspecified rate limit on the larger model. ChatGPT Plus subscribers (the plan costs $20/month) get up to five times the message limit, plus early access to new features like the forthcoming upgraded voice mode. GPT-4o mini also replaces GPT-3.5 Turbo as the default model for everyone, though that will take a few weeks to roll out.

Both models are also available through an API for developers. GPT-4o costs $5 per 1 million input tokens and $15 per 1 million output tokens, and GPT-4o mini costs $0.15 per million input tokens and $0.60 per million output tokens.
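Because prices are quoted per million tokens, estimating a bill is simple arithmetic: multiply each token count by its rate and divide by one million. Here's a minimal sketch using the launch prices above. `estimate_cost` and the price table are hypothetical helpers, and since prices change, check OpenAI's current pricing before relying on these numbers.

```python
# Launch API prices from this article, in US dollars per 1 million tokens.
# These change over time; verify against OpenAI's current pricing.
PRICES_PER_MILLION = {
    "gpt-4o": {"input": 5.00, "output": 15.00},
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
}

def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in US dollars for one workload."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000

# Example workload: 2 million input tokens and 500,000 output tokens.
for model in PRICES_PER_MILLION:
    print(f"{model}: ${estimate_cost(model, 2_000_000, 500_000):.2f}")
# gpt-4o: $17.50
# gpt-4o-mini: $0.60
```

At these rates, the same workload costs roughly 29 times less on GPT-4o mini, which is why OpenAI pitches it at cost-conscious developers.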

In particular, OpenAI is positioning GPT-4o mini as a powerful, cost-effective option for developers.

How to try GPT-4o

While GPT-4o's multimodal features might not be as accurate as I'd hoped (at least with how they're currently implemented in ChatGPT), there's still a lot to like. Here's how to access GPT-4o:

The text and vision features of GPT-4o have rolled out to ChatGPT users. GPT-4o mini is currently rolling out and will replace GPT-3.5 Turbo.

If you're a developer, GPT-4o and GPT-4o mini are available through the API right now.
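For developers, a multimodal request is just JSON. Here's a minimal sketch that builds (but doesn't send) a request body for OpenAI's Chat Completions endpoint, mixing a text question with an image. `build_chat_request` is a hypothetical helper and the image URL is a placeholder; actually sending the request requires an API key, and OpenAI's official SDKs can handle that part for you.

```python
import json
from typing import Optional

def build_chat_request(model: str, question: str, image_url: Optional[str] = None) -> dict:
    """Build a Chat Completions request body with a text question and an optional image."""
    content = [{"type": "text", "text": question}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {"model": model, "messages": [{"role": "user", "content": content}]}

body = build_chat_request(
    "gpt-4o-mini",
    "What does this handwritten note say?",
    "https://example.com/handwritten-note.jpg",  # placeholder image URL
)
# This body would be POSTed to https://api.openai.com/v1/chat/completions
# with an "Authorization: Bearer <your API key>" header.
print(json.dumps(body, indent=2))
```

Switching between GPT-4o and GPT-4o mini is just a matter of changing the `model` string, so it's easy to try the cheaper model first and upgrade only where you need to.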

You can use GPT-4o with Zapier via Zapier's ChatGPT integration.

GPT-4o is a key part of the ChatGPT desktop app that recently launched. The app puts ChatGPT a keyboard shortcut away, and is able to answer questions about what's happening on your screen using its vision capabilities.

Even if you do have access already, you might not have all the fancy features they demoed at the launch. But rest easy: ChatGPT will be singing to you in no time.

Automate GPT-4o

GPT-4o is fun to use in ChatGPT, but you can put it to actual work for you with Zapier. Zapier's ChatGPT integration lets you connect ChatGPT (including GPT-4o) to thousands of other apps, so you can add the latest AI to your business-critical workflows. Here are some examples to get you started, but you can trigger ChatGPT from basically any app. Learn more about how to automate ChatGPT.

Start a conversation with ChatGPT when a prompt is posted in a particular Slack channel

Create email copy with ChatGPT from new Gmail emails and save as drafts in Gmail

Generate conversations in ChatGPT with new emails in Gmail



This article was originally published in May 2024. The most recent update was in July 2024.