1 Introduction

ProcessJ [

10,

15] is a process-oriented language featuring synchronous, channel-based message-passing concurrency; a Java-like syntax; and a multi-threaded, JVM-based runtime with a cooperative scheduler. Inspired by Hoare’s

Communicating Sequential Processes (CSP) [

4,

5] and

occam [

14], ProcessJ aims to provide lightweight, scalable concurrency in a language-style familiar to programmers. The ProcessJ compiler produces Java code, which is further compiled to produce Java class files to run on the JVM.

1ProcessJ relies on concurrency primitives as first-class language components. Incorrect implementation of channel-based message-passing concurrency is well documented. Work on both

Communicating Sequential Processes for Java (JCSP) [

19] and

Communicating Scala Objects (CSO) [

7] has illustrated that subtle concurrency issues can go undetected until verification is undertaken. Unlike JCSP and CSO, ProcessJ uses a cooperative scheduler, which requires a different approach to implementing concurrent components. It is therefore necessary to ensure that these concurrency components function correctly when used by ProcessJ programmers. As such, the aims of this work are threefold:

(1)

We aim to show that the implementation of ProcessJ’s channel communication and choice meets the specification model for its behavior.

(2)

We also aim to show that this behavior is preserved when considering the execution environment. In other words, code executed by our scheduler behaves as expected and meets the requirements of the specification.

(3)

We further aim to verify that ProcessJ’s runtime will function correctly when switched from a single-threaded to multi-threaded scheduler.

For all three aims, we use a tool (FDR [

3]) that verifies the concurrent behavior of our system implementations. To meet the first aim, we use a model specification of channel communication and of choice, and use these to verify the behavior of our implementation of channels and choice under single-threaded control. The verified implementation and method of verification will allow other concurrency language implementers to reuse our approach to verify their own cooperative schedulers for message-passing concurrency.

Using the standard approach of not taking an execution environment into account, we verify the correctness of channel communication and alternation for ProcessJ. Unlike previous work in the area, we are not satisfied with the standard approach to verification. After verifying without restrictions, we introduce the concept of an execution environment—in this context, a cooperative scheduler—and discuss why the initial standard approach does not adequately represent what happens in a real program execution on real hardware. We present CSP for the scheduler and adjust the existing channel communication and alternation code to work in tandem with it. We then consider the results of the FDR verification of this new model with just a single scheduler. As we will see, including a scheduler will cause some traces from the formal model to become impossible, but we still have deadlock and livelock freedom. We finally consider the same system again but now with multiple schedulers and discuss the results and the future work that needs to be undertaken to also obtain suitable results for the multi-scheduler system.

1.1 Breakdown of the Article

Section

2 presents some background and related work. In Section

3, we introduce the ProcessJ language and explain how the translation to Java is accomplished. Section

4 gives a brief introduction to the process algebra CSP and the verification models used, as well as the FDR model checker. In Section

5, we perform the ‘standard’ verification of the correctness of the implementation of channel communication in ProcessJ’s runtime; in other words, we do not consider any execution environment. Similar, in Section

6, we perform the exercise but for choice in ProcessJ. In Section

7, we introduce the idea of an execution environment and examine the effects it may have on program execution as well as some of the issues it may introduce with respect to formal verification. In Section

8, we redo the channel communication verification for ProcessJ, but this time with the ProcessJ’s cooperative scheduler included. Similarly, in Section

9, we redo the choice verification, but this time with the execution environment. In Section

10, we conclude and present the future work list, partially derived from the results of the verifications undertaken.

All CSP

\(_{\rm M}\) specifications used in this work are available on GitHub.

22 Background and Related Work

To support multi-processing, a runtime typically takes one of two scheduling approaches:

•

Preemptive scheduling, where processes are allocated processor time slices managed centrally (e.g., via the operating system).

•

Cooperative scheduling, where processes decide when to give up (yield) the processor.

Assuming that all processes have the same priority, preemptive scheduling guarantees that if a process is in a ready state and the application is executing, the process will eventually be allocated processor time. Cooperative scheduling provides no such guarantees, and a process may use the processor indefinitely. With cooperative scheduling, when a process does give up the processor, a method to determine which process to schedule next is required. Typically, and in the case of ProcessJ, a queue of processes is maintained.

On a single processor system, a naive approach to cooperative scheduling can be used, in that the next ready process is popped from the queue ad infinitum. In a multi-processor system, a hybrid solution is required, in which each processor can take a ready process from the queue and execute it. The challenge comes when managing coordination via the different levels of locking, as cross-processor locking is typically achieved via preemptive scheduling constructs provided by the operating system (locks, conditions guards, etc.), whereas intra-processor scheduling can be managed with a cooperative-scheduled equivalent. The aim is to avoid stopping a processor doing work as synchronization occurs and to effectively manage tasks on the queue during synchronization activity. In this regard, only data shared between processes need to be protected.

Mutual-exclusion checking is a long-solved problem in concurrency (e.g., see [

6]). Model checking concurrent algorithms begins with this assumption, as multi-processor behavior is typically managed via a preemptive scheduler provided by the operating system. Atomic operations introduce new challenges for mutual exclusion, but again, the preemptive scheduler enables certain assumptions (e.g., see [

8]).

The assumption of preemptive scheduling has been successfully applied to model check synchronous channel communication. The work presented in this article is inspired by similar work by Welch and Martin [

19] on JCSP [

18]. Lowe [

7] undertook similar work on CSO [

16]. JCSP and CSO both rely on pre-emptive scheduling provided via Java threads, unlike ProcessJ’s cooperative scheduling approach. Our scheduler emulates the

occam-

\(\pi\) multi-processor cooperative scheduler defined by Ritson et al. [

11]. Unlike the work of Ritson et al. [

11], we further verify the behavior of our system being controlled by a scheduler.

The limitations we have discovered in previous works is a lack of consideration of an execution environment. For example, a known issue in Java concurrent code is spurious wakeups [

9]. JCSP now has code to mitigate this issue, but this has never been formally modeled. Therefore, the JCSP verification is limited in its scope. We include considerations of the execution environment in this work and consider it an important contribution.

2.1 Channel-Based Message-Passing Concurrency

ProcessJ uses processes to specify the behavior of its concurrently executing components. A process is a series of steps that are undertaken sequentially. These steps can include the execution of other processes, possibly concurrently. To communicate between processes, ProcessJ provides CSP-like channels: synchronous, blocking, and non-buffered. Both sender and receiver must be present for a message exchange to happen. If the writer arrives first, it must wait for the reader and vice versa.

This style of concurrency requires three fundamental functions to specify system behavior:

•

The ability to execute two or more processes concurrently (in parallel).

•

The ability to communicate between processes via a channel.

•

The ability to select between two or more channel communications to define behavior (choice).

The management of parallel execution is the role of the scheduler. When two or more processes are executing in parallel, it means that any one of them can make the next global program step. Effectively, multiple computations are running at once, although the observed behavior can be externally represented as a sequentially ordered series of steps. In a multi-processor environment, the aim is to manage that any process can make the next program step without causing incorrect behavior or a concurrency issue. These aims are reflected in the key aims of this work.

Coordination between processes is where concurrency issues (e.g., deadlock or livelock) arise. When communicating between processes, we need to ensure that the scheduler can correctly interleave operations of the coordinating processes to meet the specified behavior while avoiding such concurrency issues. Our task as runtime verifiers is to ensure that concurrency behavior (i.e., any process step occurring next) is modeled to sufficiently ensure correct behavior without concurrency issues.

2.2 Execution Environment

In all of the research we have encountered in the literature where the authors set out of prove correctness of a channel primitive, we find a complete lack of consideration of the

execution environment’s behaviors. In other words, no code runs itself, and it is always executed within an execution environment: threads, processes, schedulers, and so forth. However, this is never considered in previous work. We explore this problem further in Section

7.1 and furthermore extend our initial model to include the ProcessJ execution environment to better understand the issues introduced by doing so.

3 ProcessJ

ProcessJ [

15] is a process-oriented programming language being developed at the University of Nevada Las Vegas. The syntax of ProcessJ resembles Java, with new constructs for synchronization, and it has CSP semantics similar to

occam/

occam-

\(\pi\) [

13,

14]. The ProcessJ compiler generates Java source files that are further compiled to produce class files, which in turn are rewritten using the ASM [

2] bytecode rewriting tool to create cooperatively schedulable Java bytecode. Targeting the

Java Virtual Machine (JVM) ensures maximum portability, and tests have shown that a computer can handle upward of half a billion processes on a single core [

15].

ProcessJ supports four different channel types: one-to-one, one-to-many, many-to-one, and many-to-many. In this article, we consider only one-to-one channels and leave the remaining three channel types as future work.

In the following, we present a simple ProcessJ example. The main method starts two processes (reader and writer) concurrently; reader is passed the reading end of a channel, and writer is passed the writing end of the same channel. The writing process sends the value 42 to the reading process, which in turn prints out the value. Once both these processes have terminated, the main process prints “Done.”

3.1 Processes

The ProcessJ compiler outputs Java bytecode with dummy methods taking the place of de-scheduling and resumption points (i.e., for the points in the code where a process yields to the scheduler and where the code is resumed when rescheduled), and has a runLabel variable that keeps track of what the next resumption point is. Each process has the following code at the beginning of its run() method (which is the method invoked by the scheduler):

This Java source is compiled with the regular Java compiler producing Java bytecode. The resulting bytecode needs to be instrumented in such a way that the resume(x) method calls described previously are replaced by goto instructions; resume(x) is replaced by goto L \(_{\tt x}\) , where L \(_{\tt x}\) is the address of the label(x) method invocations. The label(x) instructions are then removed (once their addresses in the instruction stream has been recorded), and yield() method calls are replaced by code that jumps to the end of the code (a goto instruction).

With this instrumentation in place, we now have bytecode that supports cooperative scheduling: it can temporarily suspend anywhere and upon resumption continue execution from the last suspend/yield location. State is preserved by converting all ProcessJ parameters and locals to Java fields. The following code shows the runtime representation of a process:

3 \(setNotReady()\) and \(setReady()\) set a process not ready to run and ready to run, respectively.

As an example operation, consider a channel communication for which the writer arrives first. As the reader is not present, the writer can copy its data to the channel, register itself as the writer on the channel, and wait for the reader to appear. Waiting is performed by yielding, which sets a run label used for resumption when the process is rescheduled. The writer also sets itself not ready to run before returning to the scheduler. While the writer is not ready, the scheduler will not resume it—it simply gets put at the back of the run queue again. Only when a reader appears is the writer set to ready. At this time, the scheduler is free to resume the writer when it reaches the front of the run queue again. In the next section, we show a larger example that illustrates how the code generation and the bytecode rewriting work.

3.2 From ProcessJ to Java Bytecode

As an example of the code generation and rewriting phases, let us consider the following process:

The ProcessJ compiler translates each process into a corresponding Java class that looks as follows:

4isReadyToRead() will set the caller’s ready flag to false if the channel is not ready to be read. terminate() will set a special flag in the process such that the scheduler knows not to reschedule the process again, as it has terminated.

This code compiles as the PJProcess class defines \(label()\) , \(resume()\) , and \(yield()\) and as dummy methods. A \(label(c)\) (c is an integer constant) call in Java Bytecode looks like this:

where @x is the address of the instruction. aload_0 and the constant loading instruction are one-word instruction, and the invocation instruction is a three-word instruction.

The resume() invocation looks the same, but it invokes resume(I)V. yield() has no parameter, thus it looks like this:

For the example code, two of each of resume() and yield() are generated, and label() is generated three times. We need to locate the addresses in the bytecode where the label() invocation is located so that we can record it and use it in the replacement code for resume() and yield(). For example, if a label() invocation is at address x, we calculate address \(x-2\) (the location of the corresponding aload_0. We consult the constant that is loaded in the instruction after the aload_0 to determine which of the labels we have found. In the generated class file for this example, the address for label(1) is 80, and the address for label(2) is 115 and label(0) (the end of the code) is at address 120. The aload_0, the constant-loading instruction, and the invocation instruction can now be replaced by nop (no-operation) instructions.

With these addresses located, the resume() invocations along with their aload_0 and constant-loading instruction can be replaced by a goto instruction (plus a number of nop instructions). More specifically, resume(1) becomes the bytecode instruction goto 80 and resume(2) becomes goto 115. All yield() invocations are replaced with goto 120 (plus a number of nop instructions for padding), where 120 is the address of the end of the code. Naturally, the location of the resume() and yield() invocations are required to replace the instructions in the class files. The following code illustrates the resulting code in Java using gotos and labels (neither of which exist in Java at the source code level):

For the rest of the article, we use L \(_{\tt x}\) rather than label(x) simply for aesthetics.

As we can see, the yield() invocations all translate to goto L \(_{\tt 0}\) rather than a simple return; this is necessary to prevent the Java verifier from reporting unreachable code.

4 Communicating Sequential Processes

CSP [

4,

5] allows the specification of a concurrent system via

processes and

events. A process is an abstract component definition denoted by the event behavior in which the process wishes to engage. Events in CSP are

atomic,

synchronous, and

instantaneous. In other words, an event cannot be divided, it causes all coordinating processes to wait until the event occurs, and the event occurs immediately when executed by the system.

The simplest processes are stop, which engages in no events and will not terminate, and skip, which engages in no events but will terminate.

Events are added to a process using the prefix operator ( \(\rightarrow\) ). For example, the process \(P = a \rightarrow\) stop engages in event a, then stops. A process definition must end by executing another process definition, and processes can be recursive (e.g., \(P = a \rightarrow P\) ).

A process can select alternate behavior based on a choice operator. The three frequent choice types are external (or deterministic) choice, internal (or non-deterministic) choice, and prefix choice.

Given two processes P and Q, the definition \(P □ Q\) is a process that will behave as either P or Q, depending on the first event to be offered by the environment; it is called an external choice. For example, the process

is willing to accept a, then behave as Q, or accept b, then behave as R. If both a and b are available, then the system may choose either.

An internal choice is represented by \(⊓\) . A process \(P ⊓ Q\) can behave as either P or Q without considering the external environment. The choice is internal to the process.

Both external choice and internal choice can operate across a set of events. If E is a set of events, then we can write \(□ _{a \in E}\) and \(⊓ _{a \in E}\) , respectively.

Prefix choice allows us to define an event and a parameter. If we define a set of events as \(\lbrace c.v | v \in Values\rbrace\) , we can consider c as a channel willing to communicate a value v. We can then define input and output operations ( \(?\) and \(!\) , respectively) to allow communication and binding of variables. This is simply a shorthand due to the following identities:

where Values is a finite set.

Processes can be combined via parallel and sequential composition. A parallel composition is denoted as \(P \parallel Q\) . P and Q must now coordinate on their shared set of events. This can be specified via an alphabet. The alphabet of a process is the set of events on which it is willing to synchronize at some point during its lifecycle. For example, the process \((a \rightarrow b \rightarrow P) \parallel [\lbrace b \rbrace ] (b \rightarrow c \rightarrow Q)\) has two processes synchronizing on b. Thus, a must happen, before both processes execute b and then c is performed.

The \(parallel[A][B]\) operator uses explicit alphabets for synchronization. If P has alphabet \(\alpha {}P = A\) and \(\alpha {}Q = B\) , then \(P \parallel [A][B] Q\) is equivalent to \(P \parallel Q\) , which again is equivalent to \(P \parallel [A\cap B] Q\) .

Two processes can also interleave: \(P ||| Q\) . This means that P and Q execute in parallel but do not synchronize on shared events. Returning to our previous example, \((a \rightarrow b \rightarrow P) ||| (b \rightarrow c \rightarrow Q)\) may accept a or b first. The event b is only ever performed by one process at a time.

Sequential composition is denoted as \(P\ ;Q\) . This means that after P has terminated, Q is performed. Sequential composition operates on processes, not events; therefore, it allows the definition of behavior as a sequential composition of discrete process definitions.

4.1 Models

CSP defines three models of behavior to analyze systems. The traces model comes from the externally observed behavior of a system. For example, the process \(P = a \rightarrow b \rightarrow P\) has a traces set \(\lbrace ⟨ ⟩, ⟨{a}⟩, ⟨{a,b}⟩, ⟨{a,b,a}⟩, \dots \rbrace\) . If the set of traces of a system implementation P is a subset of the set of traces of a system specification Q, we state that \(Q ⊑_{T} P\) (P trace refines Q). This means that the trace behavior of P is contained within the behavior of Q.

Observable behavior of a process can be concealed via the hiding operator \(\backslash\) . Hidden events are replaced by \(\tau\) and are ignored when comparing observable traces. For example, \((a \rightarrow b \rightarrow a \rightarrow {\sf skip}) \backslash \lbrace a\rbrace\) has traces \(\lbrace ⟨ ⟩, ⟨{b}⟩ \rbrace\) as \(\tau\) s are not observable. Hiding events allows us to analyze the external behavior of a process, thus allowing assertions such as \(P ⊑_{T} Q \wedge Q ⊑_{T} P\) .

An implementation may contain events that are not part of the specification. These are events required to model the implementation of the system rather than just a specification of behavior. We therefore hide internal events of an implementation to enable refinement checking.

The failures model deals with events that a process may refuse to engage in after a sequence of events occur. This overcomes the limitation of comparing traces. For example, \((P = a \rightarrow P) ⊑_{T} ((P = a \rightarrow P) ⊓ {\sf stop}))\) , although the right-hand definition may non-deterministically refuse to accept any events. A failure is a pair \((s, X),\) where s is a trace of a process P and X is the set of events that can be refused after P has performed the trace s. Stating that \(P ⊑_{F} Q\) means that whenever Q refuses to perform an event, P does likewise.

The final model is the failures-divergences model. Divergences deal with potential livelock scenarios where a process can continually perform internal events (denoted by \(\tau\) ) and not progress in its externally observed behavior. For example, we consider the following two processes:

where \({\bf div}\) is a process that immediately diverges. Although \(P ⊑_{T} Q\) and \(P ⊑_{F} Q\) (and vice versa), Q can continuously not accept a and just perform \(\tau\) . The final refinement check \(⊑_{FD}\) allows processes to be compared in this circumstance.

4.2 FDR

FDR [

3] is a tool to define and analyze CSP specifications. Systems are defined in CSP

\(_{\scriptstyle \textrm {M}}\) —a machine-readable version of CSP. FDR allows processes to be refinement checked via the traces, failures, and failures-divergences models. FDR also supports further assertions such as deadlock freedom, divergence freedom, and whether a process definition is deterministic. FDR supports further operators that are used in CSP via convention such as an

if statement and binding variables via a

let statement. The

if follows the standard functional model with each branch having to end in another process definition. It is therefore common to write the following:

The semantics of \(c?v \rightarrow ({\sf if}\ (v == x)\ {\sf then}\ P\ {\sf else}\ Q)\) in CSP is equivalent to \((c.x \rightarrow P) □ (□ v \leftarrow X\backslash {}\lbrace x\rbrace \bullet c.v \rightarrow Q)\) . The expression \(x \leftarrow X \bullet P(x)\) is the process P executed with the a value x from X. \(x \leftarrow X\) means exactly \(x \in X\) .

FDR can also check a system for determinism and divergence freedom. FDR “considers a process

P to be deterministic providing no witness to non-determinism exists, where a witness consists of a trace

tr and an event

a such that

P can both accept and refuse

a after

tr” [

1].

4.3 Modeling State

All ProcessJ runtime classes have member state variables. It is therefore necessary to model such state in CSP. In this section, we present CSP that can be used to model a general state that can be set and read. A state variable can be modeled in CSP via a process. The process maintains the current value of the state variable, providing events to either get the current value of the variable or set a new value of the variable. We can define a generic process to model a state variable—VARIABLE—with alphabet \(\alpha {}VARIABLE\) as follows:

VARIABLE takes two parameters: var is the channel used to communicate the variable with the environment, and val is the current value of the variable. In the alphabet \(\alpha {}VARIABLE\) , val has type T, which is provided for the given variable by the system specifier.

We are now ready to embark on the first of three major steps in the full verification of our cooperatively scheduled runtime. We follow a similar path to many other verifications by not considering the execution environment at first.

5 UNRESTRICTED CHANNEL Verification

We start by giving a CSP specification of a generic channel, then proceed to verify the ProcessJ channel implementation. Note that for the rest of the article, we shall omit the many alphabets of the various processes simply to save space. They can be found in the CSP \(_{\rm M}\) code available on the GitHub site.

5.1 A Generic Channel Specification

We use the channel specification presented in the work of Welch and Martin [

19], which contains additional actions in both the sender and the receiver. We need to model read and write operations interleaving, and a simple transmit without read and write operations does not provide this behavior. For this purpose, we define channels to represent an object interface:

\({\sf channel}\ read, write: Channels.Processes.Values\\ {\sf channel}\ start\_read, ack: Channels.Processes\)

The writing process (called

LEFT) will accept a message on the

write channel, then transmit the message to the reader (called

RIGHT), and before recursing will communicate on the

ack channel to indicate write completion.

RIGHT will engage in an event on the

start_read channel before receiving a message from the sender (the

LEFT process). To complete the read operation, the transmitted message is passed to the environment on the

read channel. Finally, the reader also recurses. Here is the code from Welch and Martin [

19]:

We can now define a GENERIC_CHANNEL process by composing, in parallel, LEFT and RIGHT as follows:

We hide the events on the transmit channel, as they are internal to the process and not something to be exposed to the environment.

5.1.1 Algebraic Proof.

The generic channel model presented in the previous section is exactly the same as the one developed by Welch and Martin [

19]. They use this simplified model of how the JCSP channel should work as the specification in FDR to prove that their JCSP implementation is equivalent to a regular CSP channel. If we use the same specification and can prove that the ProcessJ channel implementation is equivalent to their simplified model, then we know that the ProcessJ channel does behave like a regular CSP channel.

To use the simplified specification, Welch and Martin [

19] present an algebraic proof of correctness. They do this by introducing simplified CSP processes that use only one-to-one channels and have no alts. They continue on to argue that their channel specification (exactly like this one in the previous section except we renamed it

JCSPCHANNEL to

GENERIC_CHANNEL) indeed does behave like regular one-to-one CSP channels. They state that the proof sought is of the following equation:

For us, rather than

JCSPCHANNEL, we would use

GENERIC_CHANNEL.

\(P^{\prime }_i\) is created based on

\(P_i\) by replacing the real CSP write (

\(a!x \rightarrow Process\) ) by the JCSP version (

\(write.a!x \rightarrow ack.a \rightarrow Process\) ) and

\(P^{\prime }_j\) from

\(P_j\) by replacing the real CSP read (

\(a?x \rightarrow Process(x)\) ) by

\(ready.a \rightarrow read.a?x \rightarrow Process(x)\) . Through a number of steps, they arrive at the following two equations:

for which they argue truth. This means that it is safe to reason about JCSP programs in their natural form, modeling calls to

read() and

write() as

atomic communication events. Since we use the same model (although renamed to

GENERIC_CHANNEL), we can draw the same conclusion: if we can prove correctness between the ProcessJ implementation and the

GENERIC_CHANNEL, then the preceding argument also holds for ProcessJ channels.

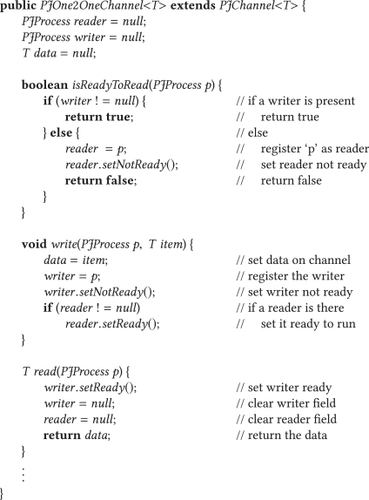

5.2 ProcessJ Channel

The relevant parts of the runtime representation of a one-to-one channel can be found in Figure

1. The three methods found here are related to reading from and writing to a channel. If a process attempts a read (by calling

isReadyToRead()) and receives

false, the process must set itself not ready to run and yield. When that process is executed again (by reaching the head of the run queue with its

ready flag being true), it can read from the channel. Since a

yield() is translated into a

return statement (along with some logic to set a run label), the start of any generated ProcessJ process must contain logic for jumping back to the code where the yield left off. Recall the jumping code from Section

3.1.

When a process reaches a

write statement, the channel is always ready to receive a message

5 (see Section

5.2.1 for more details on this). However, after a write, the process should yield and set itself not ready to maintain synchronous channel semantics.

We now consider two ProcessJ code snippets: the read expression and the write statement with their respective translations into Java.

5.2.1 Writing.

The ProcessJ expression for writing an expression to a channel is as follows:

\(c.write\) (message);

where

c is a ProcessJ variable of channel type and

message is a value of the type carried by the channel. The generated Java code is as follows:

6where ch is a Java object representing a ProcessJ channel and x is a label number. For a one-to-one channel, the first time the writer arrives, the channel can be written to; all subsequent attempts to write will be successful as well, as there is only one writer, and it would not have been rescheduled if the reader had not already received the message. After all, it must have been the reader that set the writer ready to run again after the first communication has happened.

5.2.2 Reading.

The ProcessJ expression to read from a channel is as follows:

It translates into the following Java code:

In generated code, all channel reads will be simple assignments of the following form:

\(var = ch.read\) (). This is necessary, as yielding in the middle of an expression containing channel reads would generate syntactically incorrect Java code. The ProcessJ compiler contains a code rewriter to ensure that this format is obeyed. The second

yield() is a courtesy yield and is technically not necessary. We make use of the fact that this is a one-to-one channel. If a reader is ever rescheduled to run after a

yield() call, it must be because the writer had delivered a message on the channel. This particular example is explained in greater detail in Section

3.2.

5.2.3 Modeling Java Monitors.

Java offers two built-in methods to ensure exclusive access to an object. The first approach is to declare a method

synchronized and the second is to enclose code in a

synchronized block. In the former approach, a lock is taken out on the entire object (or class, if the method is static); in the latter, an object on which a lock can be acquired must be specified. The

read and

write methods of the ProcessJ channel class are declared

synchronized. Thus, we need a method to model a

synchronized block in CSP. As ProcessJ provides its own scheduler, no waiting occurs and therefore we model a

claim and

release of a lock on an object.

7 MONITOR is a process that alternates between engaging in

claim and

release events:

The MONITOR will not accept NULL as pid, and we only allow claims to non-null process references. The MONITOR process will only allow a release event on the same process used for the claim event.

5.3 Modeling ProcessJ Channel State

In this section, we consider how to model the object state (i.e., fields of Figure

1) of the runtime object for a channel; we have already shown how to handle the

ready flag associated with a process, and the fields for a channel are done in the same way. Furthermore, we show the translation of the Java code generated for reading and writing (described in the previous section). If we examine the code in Figure

1, we see that a channel has state, namely

writer,

reader, and

data fields. We can use the

VARIABLE process from Section

4.3 for modeling these fields. To accomplish this, we need to define appropriate channels to pass the

VARIABLE process. For this section, we use just two processes, namely 0 and 1. Thus, we define the following:

Since the reader and writer fields of the channel object contain process identifiers, but also possibly the null value, we extend the possible process identifiers to include a –1 (representing the value null)—we call this set of values Nullable_Processes:

We can replicate the approach for the channel’s fields. First, we need a set representing channels (for now, we have only one):

and now channels for the reader and writer fields:

That leaves just the data field, which we define to take on values in the set Values:

and its corresponding channel definition is as follows:

The three VARIABLE processes representing the three fields (writer, reader, and data) do not interact with each other and also can be interleaved in a process that we will call CHANNEL:

We need a CHANNEL process for each channel in the system (i.e., for each channel defined in the Channel set); these channels are independent from each other and can therefore also be interleaved. Each CHANNEL process in the system must have a MONITOR process associated with it. Since the channel and the monitor do not communicate with each other, they can be also interleaved. We define CHANNELS as follows:

We define a new process called CHANNELS as follows:

Note that in this particular instance, because Channels = {0}, \(CHANNELS = CHANNEL(0) ||| MONITOR(0)\) . We now have a process (CHANNELS), which represents all states (object fields) in all channels of the system. In the next section, we turn to the CSP representation of the Java code that we generated for the \(read()\) and \(write()\) procedures in ProcessJ.

5.4 read() and write() in CSP

We can now translate the \(write()\) method of ProcessJ into CSP in the following way:

Since we are accessing the variable

reader, we need to ‘load’ it from the

reader channel into the variable

v. Similarly, for the

read method from Figure

1, we get the following:

The read method will never be invoked if the writer is null, which would signify that no data is present, and thus read would not have been invoked; therefore, the stop event never happens.

We now have the basics for reading and writing to a channel, and we can continue putting together a PROCESS_READER and a PROCESS_WRITER that fit with the interface of the generic channel model.

5.5 Unrestricted Channel Verification

We now transcribe the code generated for a

write statement and a

read expression in ProcessJ into CSP. We will incorporate the four channels from the generic channel in Section

5.1. In other words, the writer will read in a message to send, send it, and acknowledge before recursing. To incorporate the scheduler correctly, the process (which we call

PROCESS_WRITER) needs to be defined. First, we must specify yielding when no scheduler is present.

5.5.1 Yielding.

Each process needs a ready flag (that determines if the process is ready to be run by the scheduler) associated with it, so we can define the appropriate channel as follows:

where Operations is either get or set, and Bool is either true or false. This channel carries triples of process identifiers, operations, and Boolean values. Each process must have an associated VARIABLE for its ready field. We can create a process READY_FLAGS as follows:

ProcessJ inserts yield points after most synchronization points. A yield point translates to a return instruction in the bytecode. A yielded process will not be scheduled until its ready field is set to true. We can simulate this behavior by defining YIELD as waiting until the appropriate ready flag becomes true:

YIELD(pid) \(\rightarrow\) ready.pid.get.true \(\rightarrow\) skip

Note that YIELD is a procedure called from an active process. A yield is a blocking wait until the ready flag has been set to true. Also note that as we are not considering any scheduler or execution environment in this section, all that is required for a process to run is that it is ready.

5.5.2 Reading and Writing Processes.

We have to be careful about the resume points. If a write succeeds (which it always does), the writing process yields and resumes after the entire code generated for the write (label \(L_x\) ). This translates to a recursive call to the entire writing process—that is, back to accepting a new message from the environment on the write channel. This is modeled with a recursive call to UNRESTRICTED_PROCESS_WRITER. We could have added a yield after the acknowledgment as a courtesy, but it is technically not needed. The code for UNRESTRICTED_PROCESS_WRITER thus looks like this:

The implementation for the reader process (called UNRESTRICTED_PROCESS_READER) is similarly straightforward; however, there are a few points worth making:

•

Since the value of the

writer field of the channel has changed after the

yield in the ‘then’ branch of the

if statement, it must be read again. We know that it has changed since the process was rescheduled, which is something that can only happen because the writer set the reader ready to run again. This takes place in the

READ operation defined in Section

5.4.

•

The second yield is for fairness only; if removed, correctness is still retained. It simply yields while remaining ready to run but must go around the run queue once before being run again.

The CSP for UNRESTRICTED_PROCESS_READER thus looks likes this:

Since an

UNRESTRICTED_PROCESS_WRITER and

UNRESTRICTED_PROCESS_READER do not directly synchronize with each other (they do so via the channel

DATA), we can create an

UNRESTRICTED_READER_WRITER process in which they are interleaved and run in parallel with the

CHANNELS8 as follows:

Finally, we must now run the UNRESTRICTED_READER_WRITER process in parallel with the ready flags for the involved processes. Remember, we are not modeling any execution environment, so the ready flags are all we need. We get UNRESTRICTED_PJ_CHAN_SYSTEM as follows:

Figure

2 illustrates the

UNRESTRICTED_PJ_CHAN_SYSTEM process. We now check that the

UNRESTRICTED_PJ_CHAN_SYSTEM (the implementation) behaves like the

\(GENERIC\_CHANNEL\) (the specification). The results are shown in Table

1.

As we can see, the channel system is both deadlock and livelock free, as well as deterministic in the

failures-divergence semantic model. Furthermore, we get refinement in both directions between the specification and the implementation—also in the

failures-divergence model. At this point, we have achieved the same result for ProcessJ channels and channel communication that Welch and Martin [19] achieved for JCSP channels in their work. In that sense, we have done our work by verifying that the ProcessJ channel implementation meet the specification. However, as stated previously, this does not take into account the actual execution environment that is the ProcessJ runtime. In Section

8, we redo the channel analysis that we just performed, but now with an added execution environment (the ProcessJ scheduler), which will alter the results in a number of ways.

6 UNRESTRICTED Choice Verification

In the previous section, we formally proved that the implementation of ProcessJ channels in generated Java code behaved correctly according to a specification. In this section, we consider another important feature of process-oriented language, namely alternation or choice. ProcessJ has a choice statement. It allows an application to make a choice between reading from channels. An example of an alternation—alt for short—in ProcessJ is the following:

This code illustrates a relatively simple ProcessJ program with a main procedure that concurrently executes two writing and one reading process.

9 The reading process contains an

alt between the two writing processes. This

alt will arbitrarily chose a writing process (given that the writing process is ready to exchange a value with the reader), and the output will be either

Got 42 from writer 1 or Got 43 from writer 2. The

par block in

main will never terminate, as the writer that was not chosen in the

alt is stuck waiting for a reader. Again, we use synchronous, non-buffered, blocking communication. Thus, the string

Done is never output and the program never terminates. A

prioritized alt is a version of an

alt in which the first guard that is ready (in textual order in the program) is always chosen. ProcessJ only supports prioritized

alt.

6.1 A Generic Alternation Specification

To verify correctness of the ProcessJ

alt, a specification is needed. Again, we turn to Martin and Welch [

19]. This time we use a slightly optimized version of their

ALT_SPEC specification:

W0 and W1 represent the two writer processes, and altpid is the reading process. When ALT_SPEC is initially called, ready0, ready1, and waiting are false. This signifies that neither of the two writers are ready and the reader (the alt) is not waiting/ready either. Now, either one or both of the writers can become ready, and if they do, ALT_SPEC can recurse with the appropriate ready parameter flipped to true. We model this using an external choice between the two write events and a third if statement. This if statement requires more consideration: initially, waiting is false, which means that only the select event is available. Indeed, if we use the internal :probe command in FDR, we see that only the select and the write events are available. The select event will be offered by the alt usage process ( \(ALT\_USER\) in the following), and when it happens, ALT_SPEC recurses with waiting set to true. Once waiting is true, we have three scenarios to consider:

•

Writer 0 is ready and writer 1 is not, in which case we can return a 0 on the return channel.

•

Writer 0 is not ready but writer 1 is, in which case we can return a 1 on the return channel.

•

Both writer 0 and writer 1 are ready, in which case we perform an internal choice between returning a 0 or a 1 on the return channel.

The stop is never executed, as there is always an alternative choice to be made.

The two writer processes are built from

LEFT processes from Section

5.1.

ALT_USER replaces the

RIGHT process in regular channel communication:

ALT_USER starts with the select.pid event, which represents the user process engaging in an alt statement. It then receives from the ALT_SPEC the chosen index and proceeds as the RIGHT process in the channel communication read. Finally, it recurses.

We now can create the complete alt specification by running the ALT_USER, the ALT_SPEC, and the two LEFT processes in parallel:

We need to hide the transmit and return events, which we do by defining PRIVATE_ALT_SPEC_ SYSTEM as follows:

FDR verifies that the specification of the generic alt is deadlock free both in the failures and in the failures-divergence model.

6.2 The Semantics of alt in ProcessJ

The generalized alt in ProcessJ is as follows:

where the \(b_i\) ’s are Boolean pre-guard, which are expressions evaluated once per execution of the alt, and they can be used to efficiently turn on and off guards in an alt. The \(g_i\) is a guard (so far, we have seen channel read guards); a guard in a ProcessJ alt can be one of three things: a channel read, a timeout, or a skip. A skip guard is simply a guard that is always ready. A timeout guard is a guard that waits for a timer to time out. This is useful as a way out of an alt in case no channel guards ever become ready. We do not consider the latter two in this article. Finally, \(s_i\) is a statement that is executed if the guard is ready and chosen. Each time an alt is executed, only one ready guard and its associated statement is chosen. Therefore, a more specific example of the kind of alt we consider in this work is the following two-way alt:

The

pri denotes that the

alt is

prioritized as explained earlier. We limit ourselves to show that an

alt with two channel read guards works correctly when verified with the model given in Section

6.1.

We again follow the generalized specification of an

alt given by Welch and Martin [

19]. The two general phases of resolving an

alt (i.e., determining which guard to choose) are an

enable and a

disable phase. In the

enable phase, the guards are inspected from top to bottom and the index of the first

ready guard is returned. A channel read guard is ready if data is present on the channel. If an unready guard is encountered, the reading

alt process should set the

reader field such that the (

alt) process can be set ready by a writer in the case it ends up yielding because no guards were ready. In the

disable phase, the guards are inspected from the found index (or in case no guard was ready, the last index) and backward to 0. In addition to rechecking the guards backward, we disable them. This means resetting the

reader field to

null.

The Java code generated for a simplified alt without Boolean pre-guards and only channel guards is as follows:

We start by setting the reading (alt) process not ready. The enable method is then invoked, and an index will be returned (for our two-guard example, 0, 1, and 2 are the possible return values). Once the enable method has run, if the returned index is 2 the alt process will remain not ready to run (as no ready guard was found—had a ready guard been encountered, the alt process would have been set ready to run; see the code for enable in the following). When a process that is not ready to run yields, it will not be executed until it once again becomes ready to run. This will happen by one or more of the writers setting the alt process ready again when they perform their write to the channel. This is why we register the reader (which is the alt process) in the altGetWriter method shown in the following. If the alt process remains ready to run, a yield will simply place the process at the back of the run queue, and once it reappears at the head, it will be re-executed. We need to add a new method to the PJOne2OneChannel class as follows:

This method (altGetWriter) registers the alt process (the reader) with the channel if a writer is not already present and returns a reference to a writer (or null if none is present). This method will be called on each channel until the enable method finds a ready one:

The i variable is local and therefore returned; in the CSP, it will be global through a VARIABLE process.

For the disable phase, we work backward in the list of guards starting at the one returned from the enable phase. If no guard was chosen in the enable phase, we start the disable phase at the last guard. The disable phase de-registers the alting process as a potential reader for the channels. In addition, if the guard has become ready, it becomes the chosen guard. This is done to correctly implement a prioritized alt. Therefore, we use setReaderGetWriter to set the reader field back to null while returning the writer:

We always call this method with a null parameter value. We can now turn to the Java implementation of the disable process:

A disable can never return a value of –1 for selected. If at least one guard were ready during the enable phase, it will still be ready during the disable phase. If no guards were ready during the enable phase, the alting process would have been set not ready to run. However, since it is now again running, a writer must have set it ready to run, and thus the guard involving that writer will be ready and its index can be selected.

After the disable call, the reader fields of all channels in the guards will be null. Technically, the reader field of the selected channel should be set back to being the reference to the alting process, but since we have the index, this does not matter.

The code generated after the disable phase for the following alt is as follows:

and becomes

This particular part of the code is referred to as the usage part. In the verification part, code blocks can be ignored. The important part is the choice of the channel to read from, as well as performing the read.

In the

alt system we consider here, we need two instances of the

PROCESS_WRITER process described in Section

5.5.2. We cannot reuse the

PROCESS_READER but replace it by a

PROCESS_SELECTOR process, which we will describe in Section

6.4, but first we consider the CSP implementations of

enable() and

disable().

6.3 enable() and disable() in CSP

We need to translate both enable() and disable() methods to CSP. The translation is almost one-to-one except the loop is the Java code is translated into recursion. Before we show the code for ENABLE and DISABLE, we need to introduce two new variables used by these two procedures.

In the Java runtime implementation of the

alt, we utilize a further two variables, namely a counter variable aptly named

i, and a second variable that will eventually contain the index of the selected guard; we call this one

selected. This can easily be done with the

VARIABLE process defined in Section

4.3 by defining appropriate channels:

Two VARIABLE processes can be created in a new process called ALT_VARIABLES as follows:

We now can start with the ENABLE process that sets i equal to 0 and then enter the loop:

The last if statement of ENABLE comes from the start of the disable() procedure. In a similar way, we can translate the disable() method to CSP and arrive at this code:

With enable() and disable() now implemented in CSP, we can move on to the actual alt code in the next section.

6.4 The ProcessJ alt Implementation in CSP

In the previous section, we developed the CSP for the \(enable()\) and the \(disable()\) methods. Before we can proceed with the actual verification, we still need the usage code—that is, the code that calls enable and disable and reads the message on the selected channel. Here, we call this process UNRESTRICTED_PROCESS_SELECTOR:

The bottom part of UNRESTRICTED_PROCESS_SELECTOR after the disable call is the part of the usage that reads the message from the selected channel. The actual block of code that gets executed after the guard was evaluated has been abstracted away here; thus, this process finished by delivering the message to the environment. In addition, selected must be re-read after the READ, as it went out of scope because of the semicolon operator.

We now can create the UNRESTRICTED_SELECTOR_WRITERS process by running the two writers and the alt (the reader) in parallel with the variables in the following way:

Finally, we can run the UNRESTRICTED_SELECTOR_WRITERS in parallel with the required ready flags. The result is the following UNRESTRICTED_PJ_ALT_SYSTEM:

Figure

3 illustrates the

UNRESTRICTED_PJ_ALT_SYSTEM process composition.

We now can perform the verification of the

UNRESTRICTED_PJ_ALT_SYSTEM (the implementation) with respect to the specification

ALT_SPEC_SYSTEM (the specification, as defined in Section

6.1.) Table

2 shows the results of the FDR verification.

The only test that fails is the determinism in the alt. On investigation by probing the system, we can determine that non-determinism is occurring when both writing processes get to a point where either one can notify the selecting process that they are ready. In choice, we have the following situation:

•

The selecting process can set that it is waiting for either writer to be ready. It is therefore not ready.

•

Both writers can start writing to their respective channels, both getting to the point where they need to tell the selecting process they are ready.

•

The writers do not synchronize—they are interleaving.

•

The system must decide which process gets to tell the selector to become ready. This is the same event for both processes—ready.altpid.set.true.

•

Depending on which goes first (non-deterministic decision by system), the next event can be releasing a claim on a monitor that is different for each writer, or the reader continuing, and so forth.

As the environment must choose which one will go first, and both can continue yet they do not synchronize on this communication, non-determinism is introduced. However, after this choice, the system will continue down predictable paths. Therefore, non-determinism is not an issue and would be expected given the implementation of choice.

7 UNRESTRICTED, Unscheduled, Perhaps Unsafe?

In Sections

5 and

6, we saw that all except the determinism check for the

alt succeeded in the strictest semantic model, namely in the

failures-divergence model.

These results are similar in nature to many other comparable proofs of correctness for various algorithms and systems. They all rely on a common assumption, namely that if an event can happen in CSP, then it can happen at any time. Translated to code execution, this is equivalent to the following statement: “any process that is ready to run can run at any time.” Clearly, this is equivalent to having “enough processors” ready for any program, or alternatively, a system with an infinite number of processors. This is obviously not compatible with how things are in the real world—here we are limited by a finite number of resources.

This assumption of limitless execution resources is implicitly made in much of the similar research—or alternatively stated: there is no consideration of an actual execution environment. In the real world, processes are handled by schedulers and they execute in a operating system where various events can happen. In the following two sections, we look at these two issues in turn. First, we consider a single-threaded (single scheduler) execution environment and then a multi-threaded (multiple schedulers) execution environment. However, before we consider these two situations, the next sections explore the issues that may arise when verification fails to take into account the execution environment.

7.1 The Absent Environment

Not considering the execution environment can lead to a false sense of security that we can illustrate using the verification results of the JCSP channels [

19]. Welch and Martin [19] verified the correctness of the JCSP one-to-one channel—indeed, we use much of their channel specification (not implementation) in this work. One may think that the conclusion of their paper is that the implementation of the JCSP channel is correct and will work in any execution environment. However, this is unfortunately not entirely correct (however, at the time they performed the original verification it was indeed correct, but with later versions of Java when POSIX threads were adopted by the JVM it became incorrect). JCSP is a Java library that runs on the JVM. The granularity of concurrency in JCSP is one JVM thread per JCSP process. Java threads are POSIX threads, and they use a

wait and

notify (invoking

wait() on an object will suspend the invoking thread until such time another thread invokes

notify() on the same object

10). This means that a reader of a channel can invoke

wait() on the channel if no writer is present, and when the writer arrives it can invoke

notify() to wake the reader:

synchronized (c) {

if (c.data == null)

c.wait();

// continue here when notified

// now c.data is not null

// ...

}

where

c is a channel object (see page 286 in the work of Welch and Martin [

19]).

Let us now consider the execution environment of POSIX threads. Whether by design, mistake, or simply just unavoidable, POSIX threads suffer from

spurious wakeups. In Java, a spurious wakeup happens when a thread is awoken from a

wait call only to discover that the condition that originally sent it to sleep still has not been satisfied. In the example of the channel read presented previously, this means that when the thread is awoken,

c.data is still

null. Naturally, this would never happen if the reason that the thread awoke was because of the writer depositing a value in

c.data. The reason this can happen is because the operating system may choose to wake everyone waiting on a condition variable. Knowing that such spurious wakeups can happen is crucial to implement the channel read correctly. Indeed, if an execution environment with spurious wakeups had been modeled, the verification of the original JCSP channel implementation would have failed.

11 The standard way to deal with spurious wakeups is to use a loop with the original condition as follows:

synchronized (c) {

while (c.data == null) {

c.wait();

}

// continue here when notified

// now c.data is not null

// ...

}

If the thread is awoken but no data is present, it simply goes back to sleep. Now, it is virtually impossible that this will happen over and over again, thus preventing progress in the execution. However, adding this while statement suddenly makes this code not livelock free any more. In the associated CSP model, it is possible that the while statement never terminates. In reality, this never happens, so the reported livelock can safely be ignored.

Naturally, one has to be careful when making the argument that a livelock can be ignored; removing the while statement (and reverting to the original code) removes the livelock, but adding it back in brings it back. It should be clear that the livelock is only caused by the while statement, but arguing for why the while loop will never loop in perpetuity (i.e., why spurious wakeups do not happen over and over and over again) is not hard. This becomes an example of where automated verification becomes machine-assisted reasoning/verification.

This example illustrates the importance of considering the behaviors of the execution environment in concert with the implementation being verified. Not doing so can have unintended consequences, possibly rendering the implementation, which was presumed correct (because it was verified), incorrect.

It is worth noting that the ProcessJ runtime and scheduler does not make use of any wait() and notify() calls at all. All execution control is done through the ready flags of the ProcessJ processes. Before we embark on the verification of the channel communication and alternation with the single-scheduler execution environment, let us first examine what scheduling means for parallel execution of the CSP processes with a single scheduler.

7.2 Not All Environments Are Equal

To avoid confusion in the following sections, it is worth spending a little time on the difference between the

external environment of a CSP process and the

execution environment of the ProcessJ runtime. Let us define two processes

P and

Q as follows:

If we define

PQ as the parallel composition of

A and

B (synchronizing on events

a an

b) as follows,

it should be clear that the

PQ process is deadlock free

12 because both

P and

Q produce traces of alternating

as and

bs and these are the events that both synchronize on. Probing

PQ does show that the only event available is an alternating sequence of

as and

bs, repeating forever. If, however, we were to slightly alter

P and

Q (here called

\(P^{\prime }\) and

\(Q^{\prime }\) ) and build

\(PQ^{\prime }\) in the following way (note the insertion of the event

c in

\(P^{\prime }\) and

\(Q^{\prime }\) ):

If we retain the sync set as

\(\lbrace a, b\rbrace\) and do not synchronize on event

c, now, the traces of

\(PQ^{\prime }\) will look like

a,b,c,c,a,b,c,c \(,\ldots\) —that is, each of the

c events happen independently (thus, we see two

c events and not just one each time following the

a and

b events—this is because

c is not in the sync set). Formally, the set of traces of

PQ is as follows:

and the events exposed to the environment are the events

a and

b. Exposing these events means that the environment can

drive the process

PQ by engaging on the same events. We can illustrate this point by defining a few new processes as follows:

For the process

R, we know that all but the empty trace of

PQ start with

a. But since the traces of

X start with a

b, and since the synchronization set is {

a,

b}, the process

R will deadlock. This is an example in which, depending on the behavior of the environment, an otherwise deadlock-free process can be used in a parallel composition with another deadlock-free process, but the result will be a process that does deadlock. For the process

\(R^{\prime }\) , since neither

PQ nor

X have event

c, the process is deadlock free. Naturally, now the events

a and

b are not in the synchronization set, hence both processes are free to perform those events independently of each other. Finally,

\(R^{\prime \prime }\) again will deadlock because both

a and

b are in the synchronization set. This small example illustrates how it is possible to provoke deadlocks if events are exposed to the external environment.

If we were to define

and use

PQH in the three definitions of

R,

R, and

\(R^{\prime \prime }\) instead of

PQ, then all three would indeed be deadlock free as the events

a and

b are now wholly internal and not exposed.

Now consider the execution environment of the ProcessJ runtime. This consists of runtime (implementation of the inner workings of the channel, the alt, etc.) as well as the scheduler and the associated run queue. Much like the specification of the channel (GENERIC_CHANNEL) is a parallel composition of a LEFT and a RIGHT process, which communicate using a transmit channel, which is subsequently hidden, the inner workings of the channel in the ProcessJ runtime is also hidden. The only events that are exposed from the runtime (for a channel) are the two start_read and read events for the reader and the write and ack events for the writer. All events that happen between the scheduler and the channel runtime are hidden. This means that the external environment, which for a ProcessJ process is the application program that uses the specific channel, cannot interact with or synchronize on, or even observe, the inner workings of the channel. The equivalent events are hidden. Thus, showing that the inner workings of a channel (i.e., its implementation) is deadlock and live lock free will indeed show that using one or more channels will never deadlock an application program (or live lock it, for that matter) because of an incorrect implementation of the runtime or the scheduler. The only way for deadlocks to occur is to write bad application code—much like the often used example of the Dining Philosophers who all start by picking up their right-hand fork. In the CSP model for the Dining Philosophers, we observe a deadlock, and were we to implement that algorithm in ProcessJ, the system would likewise deadlock—not because of a bad implementation of the channels, but simply because no processes are ready to progress and thus the scheduler cannot find any processes to run. It may well be that the scheduler spins forever looking for a process to run, but that is a different story altogether—one that we return to in later sections.

In Figure

4, on the left, we illustrate the

CSP external environment as the “place” that has access to the non-hidden events/channels of a process. It is the scope of the code where the process can be seen and used in parallel compositions. On the right in Figure

4, we depict the

execution environment as the combination of the scheduler and the runtime code that the compiler links to the generated ProcessJ application (depicted below the execution environment). The dotted lines in the figure represent the channels into the execution environment, which are limited to, for example,

write,

ack,

start_read, and

read for a channel communication.

If these are channels are used “correctly” (i.e., in an application that does not deadlock because of poor design), then we will show in the following sections that the entire application (scheduler and runtime included) will also not deadlock. In other words, any deadlocked execution is 100% because of bad design and not internal issues in the runtime and the scheduler. If the implemented algorithm is transcribed to CSP (which is now possible as we have shown that ProcessJ channels behave just like CSP channels), then FDR should be able to determine why and where a deadlock occurs.

7.3 Not Everything Can Happen at Any Time

Let us examine a single-threaded execution environment in more detail. Consider the following simple CSP:

\(A = a \rightarrow {\sf skip}\\ B = b \rightarrow {\sf skip}\\ AB = A ||| B\)

We clearly have the following set of traces:

After the trace

\(⟨ ⟩\) , FDR computes the set of events the process is willing to engage in as the set

\(\lbrace a,b\rbrace\) . In other words, either of

A and

B can progress (as

A and

B are interleaved). Now consider an execution context with a single scheduler and a single run queue. This run queue will contain the equivalent processes of

A and

B, say

\(P_A\) and

\(P_B\) . There are two different ways such a run queue can look (denoted

\(R_1\) and

\(R_2\) ):

It should be apparent that if the run is

\(R_1\) , then

AB becomes effectively

\(A ; B\) , in CSP terms. If

\(R_2\) is the run queue, then

AB is

\(B ; A\) . We see that the set of possible traces when starting with the

\(R_1\) run queue is as follows:

13In other words, it is the set of traces that can be produced by running the single scheduler with the particular run queue configuration found in

\(R_1\) . Similarly, if we use the

\(R_2\) run queue configuration, we get the following:

It should be clear that for any run queue schedule

\(R_i\) , the following equation always holds:

However, at the same time, we have the following:

In other words, if

AB represents a

specification and

\((P_A ||| P_B)_{R_i}\) an

implementation, then the implementation (when executed by the scheduler according to cooperative scheduling policies) will always

behave and never produce a trace disallowed by the specification. However, there will be traces of the specification that a particular run queue configuration cannot produce (e.g.,

\(⟨{b,a}⟩\) cannot be produced by the

\(R_1\) configuration). This will be the reason for the trace refinement failing in this direction when running with just a single scheduler.

7.4 Not All Deadlocks Are Equal

An important point that should be made is about deadlocks. Using the unrestricted model for channels and

alts that we presented in Sections

5 and

6 where we know that channel communication and alternation is deadlock free (we actually know more—we know that the ProcessJ channel implementation behaves like a CSP channel), what happens if we try to implement, say, the Dining Philosophers? If we use the standard approach of picking up the left fork first and then the right, an implementation using CSP semantics will deadlock; this is easily verified in FDR (for a college of five philosophers, after a trace like

\(⟨{forks.4.left, forks.2.left, forks.left.1, forks.left.3, forks.left.0}⟩\) , or any other permutation of picking-up-fork events, the system will be deadlocked with an acceptance set of { }.) It is important to remember that when FDR reports a system as not deadlock free, it will provide the

shortest trace to a deadlock. However, this does not mean that the system does not have traces (even infinite ones) for which the system does not deadlock. This means that an implementation using that particular specification may or may not deadlock when run.

Having proven that the ProcessJ channels behave like CSP channels, this algorithm, if implemented in ProcessJ, will also risk deadlock. When CSP reports that the Dining Philosophers is not deadlock free, it means that there exists at least one trace that leads to a configuration where the accept set is empty. In ProcessJ, this is equivalent to reaching a situation where no process in the run queue is ready to run—all processes’ ready flags are false. Now, will running the ProcessJ implementation always lead to a deadlock? The answer is no. Similar to traces existing in the CSP model that do not deadlock (even though deadlocking traces do exist), it is possible that the implemented program does not deadlock because of an advantageous schedule; similarly, it may deadlock given a less-advantageous schedule.

To summarize, if FDR determines a process to not be deadlock free, then a ProcessJ implementation may deadlock when executing the implemented program, but it does so not because of the implementation of the underlying runtime system of channel communication and alternation deadlocks but simply because every process in the run queue has its ready flag set to false. In other words, if the corresponding CSP model can deadlock, so can the ProcessJ implementation, but such a deadlock will be caused by bad model design, not a bad runtime system implementation.

As we have seen, when introducing schedulers into the runtime system, not all traces of the equivalent CSP model will be possible. Therefore, the next logical step in this investigation is to add a single scheduler to our runtime system and then verify again that the runtime system’s channel communication and alternation is deadlock free—that is, it is just as safe to execute code in a single-threaded scheduled system as it is in the unrestricted system we just considered. We already know that when imposing a scheduling order, not all traces of the CSP specification will be possible in the ProcessJ implementation, but that is okay as long as we still have deadlock freedom.

7.5 Everything Is Under Control

We are now ready to take the next step in this research, the question that we set out to answer: what happens when events are controlled and ordered by a scheduler? Will the otherwise well-behaved ProcessJ implementation of the channels and alternations still work correctly? We know they are well behaved in the “general” sense as shown in previous sections, but what will happen when some traces become impossible? Is it possible that channel communication or alternation will deadlock? This is what we will attempt to answer in the following two sections. First, we consider a single scheduler that we know will make a number of traces, which were otherwise possible, impossible. After this, we consider the same questions but with two and four schedulers (four is the maximum needed, as we never have more than three readers and writers in our system). What will happen when we have exactly the same number of schedulers as we have processes, and what will happen when we have more schedulers than processes? For this last question, we predict that, because of the naive implementation of the scheduler, we will see livelocks caused by idle schedulers constantly querying the run queue, but all shall be revealed in the following sections.

In the next section, we consider a single-threaded cooperative scheduler executing the ProcessJ processes. Here, the scheduler acts as the environment discussed in Section

8.

8 Single-threaded Cooperative Scheduling

We are now ready to repeat the entire verification exercise again, but this time while considering the execution environment that is a cooperative scheduler with a single execution thread. As discussed previously, ProcessJ utilizes a user-level cooperative scheduler, and we now want to verify that the runtime implementation of channel communication and choice still work as anticipated. We start by discussing the implementation of the cooperative scheduler that is found in the ProcessJ runtime, and for now we will limit our investigation to a scheduler running on a single core/thread.

8.1 Creating a Cooperatively Scheduled Execution Environment

In this section, we describe how we have modeled ProcessJ’s cooperative scheduler execution environment. We finish the section illustrating how the scheduler system can be extended to a two-threaded system and a four-threaded system, and in general.

Modeling the execution environment is an integral part of this verification exercise as illustrated in Section

7.1; if we do not do so, the subsequent analyses may be invalid. We explain the constraints that our execution environment can create in Section

7.3.

8.2 ProcessJ Scheduler

Whether ProcessJ processes are executed by a single- or multi-threaded scheduler, the general execution pattern of such a scheduler is defined as follows:

\(Queue\!\!\lt \!\!Process\!\!\gt \ processQueue\) ;

\(\ldots\)

// enqueue 1 or more processes to run ...

while (! \(processQueue.isEmpty\) ()) {

\(Process\: p = processQueue.dequeue()\) ;

if ( \(p.ready()\) )

\(p.run\) ();

if ( \(!p.terminated\) ())

\(processQueue.enqueue(p)\) ;

}

It should be clear that due to the \(p.run\) () method invocation, the Java thread executing the process is that of the scheduler; therefore, it is vital for correct executions that the processes eventually, voluntarily, give up control and return to the scheduler’s code.

ProcessJ uses a queue of created processes, each with a ready flag to determine if the said process can be scheduled. To explore a queue-based cooperative scheduler, we define the following requirements:

(1)

A process cannot be scheduled unless its ready flag is true.

(2)

For a process to be scheduled, the scheduler must invoke run on it.

(3)

To be descheduled, a process must (invoke) yield (cooperative scheduling).

(4)

A process may set the ready flag of another process to true while scheduled (coordination between processes).

(5)

On descheduling, a process may set its own ready flag to false.

Requirement 1 states that the scheduler must check the ready flag of the next process in the queue to determine if it should be scheduled by calling run (requirement 2). Requirement 3 defines cooperative scheduling in that a process must invoke yield to become descheduled.

Requirements 4 and 5 are added to simulate processes coordinating with each other and determining that they are not in a ready-to-run state. Requirement 5 allows a process to perform a courtesy yield to allow another process execution time while the yielding process remains ready.

To model the execution environment, we also require the queue of processes.

8.2.1 Modeling Queues.

FDR already provides a sequence type; therefore, we only require a process to manage a sequence, much like a state variable. We later share this queue between scheduler processes. The Java queue used in the ProcessJ runtime is protected and therefore safe for use with multiple schedulers. Using events to communicate with the queue (push and pop) ensures that the underlying sequence is protected from multiple access (i.e., race conditions). The definitions of QUEUE are as follows:

When the sequence is empty, QUEUE will only accept push events. When the sequence is not empty, the queue can accept push or pop events. We also add a safety check (length(q) \(\ge\) card(Processes)) to ensure that the system does not try and add multiple entries for the same process into the sequence.

8.2.2 Modeling the Cooperatively Scheduled Execution Environment.

With state variables and a queue defined, we can implement the execution environment in CSP based on the code provided for the ProcessJ scheduler at the beginning of this section: