Abstract

We construct a practical lattice-based zero-knowledge argument for proving multiplicative relations between committed values. The underlying commitment scheme that we use is the currently most efficient one of Baum et al. (SCN 2018), and the size of our multiplicative proof (9 KB) is only slightly larger than the 7 KB required for just proving knowledge of the committed values. We additionally expand on the work of Lyubashevsky and Seiler (Eurocrypt 2018) by showing that the above-mentioned result can also apply when working over rings \(\mathbb {Z}_q[X]/(X^d+1)\) where \(X^d+1\) splits into low-degree factors, which is a desirable property for many applications (e.g. range proofs, multiplications over \(\mathbb {Z}_q\)) that take advantage of packing multiple integers into the NTT coefficients of the committed polynomial.

This research was supported by the SNSF ERC starting transfer grant FELICITY and the EU H2020 project No 780701 (PROMETHEUS).

1 Introduction

Commitment schemes, and their associated zero-knowledge proofs of knowledge (ZKPoK) of committed messages, form an important ingredient in the construction of generalized zero-knowledge proofs and advanced cryptographic primitives. An additional feature that’s often desirable is being able to prove algebraic relationships among committed values. Very efficient constructions of such primitives exist based on the discrete logarithm problem (e.g. [8]), but the state of affairs is rather different when it comes to quantum-safe assumptions, with the main difficulty being proving multiplicative relations.

There exist generic PCP-type proof techniques [3, 4, 20, 28], which even have asymptotically logarithmic-size proofs, but these proofs have a fixed cost of outputting paths to a Merkle tree in the range of 100–200 KB. One could also think about using fully-homomorphic encryption, which would allow the verifier himself to create additive and multiplicative relations of his choice, thus foregoing the need for a zero-knowledge proof. The main issue with this approach is that one would need to prove that the initial ciphertexts are well-formed, and these proofs are also currently on the order of a few hundred kilobytes (either using generic techniques or lattice-based proofs [6, 31]). There have also been some early lattice-based approaches proposed for this type of problem (e.g. [5, 22]), but they result in proofs that are orders of magnitude longer.

1.1 Results Overview and Related Work

The starting point of recent lattice-based constructions that implicitly construct a multiplicative proof system (c.f. [6, 14,15,16, 31]) is the commitment scheme from [2], which has a ZK proof that is fairly efficient for proving linear relations among committed polynomials over the ring \(\mathcal {R}_q=\mathbb {Z}_q[X]/(X^d+1)\), where q is prime. All of the aforementioned schemes require that the challenge set in the zero-knowledge proof is such that all pairwise differences of elements are invertible. This restriction imposes a constraint on the underlying \(\mathcal {R}_q\) (via e.g. [27]) that the polynomial \(X^d+1\) does not split into many factors. One of the improvements in the current work is the removal of this restriction (we will explain the significance of this below).

Another important improvement in our proofs is of a more technical nature. The prior aforementioned multiplicative proofs create a polynomial function of degree \(\delta \) whose coefficients include the relation we want to be 0 in the \(\delta \)-degree term. The goal of the proof is to show by the Schwartz-Zippel lemma that the polynomial is actually of degree \(\delta -1\) and so the highest-order coefficient is indeed 0. Prior works performed this proof by sending masked openings of the committed polynomials and committing to the lower-degree terms of the \(\delta \)-degree polynomial function (c.f. [6, 14,15,16, 31]). In our work we show additional properties of the ZK proof in [2] that imply that it is not necessary to send the masked message openings.

Our construction is very efficient, with the communication complexity of our multiplicative proof being essentially the same as that in [2] for just proving knowledge of the message. Furthermore, removing the restriction that \(X^d+1\) splits into a few high-degree factors is additionally useful because having \(X^d+1\) split into distinct linear (or very low-degree) factors allows one to commit to (and independently operate on) many elements in \(\mathbb {Z}_q\) by packing them into the NTT coefficients of the committed message. One particular example where this is handy is range proofs where we commit to a number written in binary and want to prove that it is in the range \([0,2^j)\). We sketch the (folklore) idea below:

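Proving that a vector \(\vec {v}=v_0v_1\ldots v_{d-1}\in \{0,1\}^d\) is binary and the integer represented by it is less than \(2^j\) is equivalent to the statement

\[\vec {v}\circ \left( 1-v_0,\; 1-v_1,\; \ldots ,\; 1-v_{j-1},\; 1,\; \ldots ,\; 1\right) = \vec {0}, \qquad (1)\]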

where \(\circ \) is the component-wise product. Thus if we create a commitment to \(\vec {v}\) by putting the coefficients of \(\vec {v}\) into the NTT coefficients of some polynomial \(\textit{\textbf{m}}\) and can create the polynomial \(\textit{\textbf{m}}'\) corresponding to the right multiplicand in (1), then the proof that \(\textit{\textbf{m}}\textit{\textbf{m}}'=0\) would be exactly the range proof we would like since multiplication of NTT slots is component-wise.
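The folklore check can be tried out on plain integers. The following is a minimal sketch, assuming the bits are stored least-significant first and that the right multiplicand has entries \(1-v_i\) for \(i<j\) and 1 otherwise; the function names and the toy modulus are hypothetical, and no commitment or NTT packing is involved.

```python
def to_bits(n, d):
    """Little-endian bit vector of n with d entries (v_0 is the lowest bit)."""
    return [(n >> i) & 1 for i in range(d)]

def range_multiplicand(v, j):
    """Assumed right multiplicand: 1 - v_i for i < j, and 1 for i >= j."""
    return [1 - v_i if i < j else 1 for i, v_i in enumerate(v)]

def satisfies_range_relation(v, j, q):
    """True iff the component-wise product of v and the multiplicand is 0 over Z_q."""
    m_prime = range_multiplicand(v, j)
    return all((a * b) % q == 0 for a, b in zip(v, m_prime))

if __name__ == "__main__":
    q, d, j = 97, 8, 5                                   # toy parameters
    print(satisfies_range_relation(to_bits(19, d), j, q))  # 19 < 2^5
    print(satisfies_range_relation(to_bits(40, d), j, q))  # 40 >= 2^5
```

For a prime q, an entry \(v_i\) with \(i<j\) passes only if \(v_i(1-v_i)=0\), that is \(v_i\in \{0,1\}\), and an entry with \(i\ge j\) passes only if it is 0.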

Note that the number of NTT slots is the logarithm of the largest integer that can be committed to. As an example, using our multiplicative proofs, range proofs for 32-bit numbers are approximately 5.9 KB in size (see Sect. 5.3). This is about an order of magnitude longer than the discrete logarithm based proofs (c.f. [8, Table 2]), but is shorter than any quantum-safe proof system (e.g. [4, 14, 16, 19]). In particular, the proofs implicit in [14, Protocol 2] and [16, Section 1.3] used a similar approach of putting elements into NTT coefficients, and had 32-bit proof sizes of around 9 KB [30]. For such range proofs, one only needs to commit to a few polynomials, and so the advantage of our proof system which saves on not sending masked polynomials doesn’t manifest itself too much. On the other hand, when applied in the context of proofs of knowledge of a polynomial vector \(\vec {\textit{\textbf{s}}}\) with 2048 small (integer) coefficients satisfying \(\textit{\textbf{A}}\vec {\textit{\textbf{s}}}=\vec {\textit{\textbf{t}}}\), our proof technique combined with the additional techniques in [13] results in an order of magnitude reduction in proof size over [6, 31].

It should be pointed out that the proofs in [4, 8] grow logarithmically in the number of instances, while our proof grows linearly. The results of the current work are thus best suited for non-batched use cases where one wishes to prove knowledge about single instances over \(\mathcal {R}_q\) (which actually could be up to d instances over \(\mathbb {Z}_q\) when taking advantage of NTT packing).

1.2 Techniques

We will now provide a somewhat technical overview of the main results of the paper. Prior to getting into them, we recall the commitment scheme of [2] and its zero-knowledge proof.

Overview of [2]. The scheme of [2] commits to a message vector \(\vec {\textit{\textbf{m}}}\in \mathcal {R}_q^k\) by choosing a vector \(\vec {\textit{\textbf{r}}}\) with small coefficients and then outputting the commitment

The intuition is that if the opening proof can show that \(\vec {\textit{\textbf{r}}}\) is short, then (2) binds the committer to the short \(\vec {\textit{\textbf{r}}}\) (based on the hardness of the SIS problem), and then the message is uniquely determined from (3). Unfortunately, there do not exist very efficient proofs allowing a prover to prove knowledge of such a short \(\vec {\textit{\textbf{r}}}\) satisfying (2), but one can instead give a rather efficient ZKPoK of a vector \(\bar{\vec {\textit{\textbf{z}}}}\) with coefficients somewhat larger than those of \(\vec {\textit{\textbf{r}}}\), and a polynomial \(\bar{\textit{\textbf{c}}}\) with very small coefficients satisfying

\[\textit{\textbf{B}}_0\bar{\vec {\textit{\textbf{z}}}} = \bar{\textit{\textbf{c}}}\,\vec {\textit{\textbf{t}}}_0. \qquad (4)\]

The proof is a \(\varSigma \)-protocol where the prover picks a small-coefficient masking vector \(\vec {\textit{\textbf{y}}}\) and sends \(\vec {\textit{\textbf{w}}}=\textit{\textbf{B}}_0\vec {\textit{\textbf{y}}}\) to the verifier in the first step. The verifier then selects a challenge polynomial \(\textit{\textbf{c}}\) from the challenge set (which should consist of polynomials with very small coefficients), and the prover responds with \(\vec {\textit{\textbf{z}}}=\vec {\textit{\textbf{y}}} + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}\). Using standard rejection sampling techniques [23, 24], the prover can make the vector \(\vec {\textit{\textbf{z}}}\) independent of \(\vec {\textit{\textbf{r}}}\) to preserve zero-knowledge. The verifier checks that \(\textit{\textbf{B}}_0\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{w}}}+\textit{\textbf{c}}\vec {\textit{\textbf{t}}}_0\) and that \(\vec {\textit{\textbf{z}}}\) has small coefficients. If both of these are satisfied (and \(\textit{\textbf{c}}\) comes from a large-enough domain), then a standard rewinding (where the extractor sends a fresh \(\textit{\textbf{c}}'\) and receives another valid \(\vec {\textit{\textbf{z}}}'\)) allows the extractor to obtain \(\bar{\vec {\textit{\textbf{z}}}}=\vec {\textit{\textbf{z}}}-\vec {\textit{\textbf{z}}}'\) and \(\bar{\textit{\textbf{c}}}=\textit{\textbf{c}}-\textit{\textbf{c}}'\) satisfying (4).
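The three moves of this \(\varSigma \)-protocol can be traced on a toy instance. The sketch below assumes tiny, insecure parameters, a non-cryptographic random generator, and a uniformly sampled masking vector; it omits rejection sampling entirely, and all names (`Q`, `D`, `run_opening_proof`) are hypothetical. It only illustrates why the verification equation \(\textit{\textbf{B}}_0\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{w}}}+\textit{\textbf{c}}\vec {\textit{\textbf{t}}}_0\) holds for an honest prover with \(\vec {\textit{\textbf{t}}}_0=\textit{\textbf{B}}_0\vec {\textit{\textbf{r}}}\).

```python
import random

Q, D = 3329, 8  # toy modulus and ring degree (hypothetical; far too small to be secure)

def poly_mul(a, b):
    """Multiply two elements of Z_Q[X]/(X^D + 1) (negacyclic convolution)."""
    res = [0] * D
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            if i + j < D:
                res[i + j] = (res[i + j] + ai * bj) % Q
            else:  # X^D = -1 in the ring
                res[i + j - D] = (res[i + j - D] - ai * bj) % Q
    return res

def poly_add(a, b):
    return [(x + y) % Q for x, y in zip(a, b)]

def mat_vec(B, v):
    """Matrix-vector product with polynomial entries."""
    out = []
    for row in B:
        acc = [0] * D
        for b, x in zip(row, v):
            acc = poly_add(acc, poly_mul(b, x))
        out.append(acc)
    return out

def small_poly(rng, bound):
    return [rng.randint(-bound, bound) for _ in range(D)]

def centered(x):
    """Representative of x mod Q in the interval around zero."""
    return x - Q if x > Q // 2 else x

def run_opening_proof(seed=0, rows=2, cols=5, y_bound=50):
    rng = random.Random(seed)  # NOT a cryptographic RNG
    B0 = [[[rng.randrange(Q) for _ in range(D)] for _ in range(cols)]
          for _ in range(rows)]
    r = [small_poly(rng, 1) for _ in range(cols)]        # short randomness
    t0 = mat_vec(B0, r)                                  # t_0 = B_0 r
    y = [small_poly(rng, y_bound) for _ in range(cols)]  # masking vector
    w = mat_vec(B0, y)                                   # prover's first message
    c = small_poly(rng, 1)                               # verifier's challenge
    z = [poly_add(yk, poly_mul(c, rk)) for yk, rk in zip(y, r)]  # response
    lhs = mat_vec(B0, z)
    rhs = [poly_add(wi, poly_mul(c, ti)) for wi, ti in zip(w, t0)]
    max_coeff = max(abs(centered(x)) for zk in z for x in zk)
    return lhs == rhs, max_coeff

if __name__ == "__main__":
    print(run_opening_proof())
```

With these bounds every coefficient of \(\vec {\textit{\textbf{z}}}\) has absolute value at most `y_bound + D`, which is the kind of smallness check the verifier performs.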

Combining this with the proof that, unless SIS is easy, there can only be a unique opening \((\bar{\vec {\textit{\textbf{z}}}},\vec {\textit{\textbf{m}}},\bar{\textit{\textbf{c}}})\) where \(\bar{\textit{\textbf{c}}}\) is invertible in \(\mathcal {R}_q\) satisfying (4) and

it implies that the ZKPoK of (4) uniquely determines \(\vec {\textit{\textbf{m}}}\). It is furthermore shown in [2] (also see [11]) that one can prove that a commitment is to some \(\vec {\textit{\textbf{m}}}\) satisfying \(\textit{\textbf{U}}\vec {\textit{\textbf{m}}} = \vec {\textit{\textbf{v}}}\), where \(\textit{\textbf{U}}\) and \(\vec {\textit{\textbf{v}}}\) are an arbitrary public matrix and vector over \(\mathcal {R}_q\). Interestingly, this latter proof does not require any extra communication over the basic opening proof, and both the proof and commitment are comfortably under 10 KB for some simple lattice relations (see Table 2 of [2]).

Distribution of the NTT Coefficients. To show that \(\bar{\textit{\textbf{c}}}\) is invertible, it was proposed in [2] to set the modulus q to a prime such that the polynomial \(X^d+1\) does not split too much modulo q – then by the result in [27], it would imply that all elements in the ring with small coefficients are invertible.

In the current paper we show that one no longer needs such a restriction on q. In particular, the prime q can be chosen to allow \(X^d+1\) to fully split into d linear factors. The observation is that we do not need \(\bar{\textit{\textbf{c}}}\) to always be invertible – it suffices to be able to compute the min-entropy of \(\textit{\textbf{c}}\) modulo each NTT coefficient.

An element in \(\mathcal {R}_q\) is invertible if and only if all of its NTT coefficients are non-zero. To show that \(\bar{\textit{\textbf{c}}}=\textit{\textbf{c}}-\textit{\textbf{c}}'\) is invertible, it would therefore suffice to show that the probability that a random \(\textit{\textbf{c}}\) from the challenge set hits a particular NTT coefficient is smaller than the targeted soundness error. If \(\textit{\textbf{c}}\) were uniformly random in \(\mathcal {R}_q\), then this probability would be easy to calculate as each of its NTT coefficients has a 1/q probability of being any element in \(\mathbb {Z}_q\). But \(\textit{\textbf{c}}\) is chosen from a challenge set that has small coefficients and so the distribution of its NTT coefficients requires different techniques to compute.
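The invertibility criterion is easy to test when \(X^d+1\) splits completely. The following minimal sketch assumes a small prime q for which all d roots exist, finds the roots by brute force, and treats the NTT coefficients as evaluations at those roots; the helper names are hypothetical and the approach does not scale to realistic parameters.

```python
def roots_of_x_d_plus_1(d, q):
    """All r in Z_q with r^d = -1 (brute force; only sensible for tiny q)."""
    return [r for r in range(q) if pow(r, d, q) == q - 1]

def ntt_coefficients(c, q):
    """Evaluate c (coefficient list of length d) at every root of X^d + 1 mod q."""
    d = len(c)
    return [sum(ci * pow(r, i, q) for i, ci in enumerate(c)) % q
            for r in roots_of_x_d_plus_1(d, q)]

def is_invertible(c, q):
    """Invertible iff no NTT coefficient vanishes (requires full splitting)."""
    coeffs = ntt_coefficients(c, q)
    assert len(coeffs) == len(c), "X^d + 1 must split into d linear factors mod q"
    return all(x != 0 for x in coeffs)

if __name__ == "__main__":
    q = 17                               # X^4 + 1 splits completely modulo 17
    print(roots_of_x_d_plus_1(4, q))
    print(is_invertible([1, 1, 0, 0], q))    # 1 + X
    print(is_invertible([-2, 1, 0, 0], q))   # X - 2 vanishes at the root 2
```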

As an example, suppose that \(X^d+1=\prod \limits _{i=1}^d(X-r_i)\bmod \,q\) and that we choose an element \(\textit{\textbf{c}}=\sum \limits _{i=0}^{d-1}c_iX^i\) from \(\mathbb {Z}_q[X]/(X^d+1)\) where \(c_i\leftarrow \{-1,0,1\}\) with equal probability. Then

\[\textit{\textbf{c}}\bmod (X-r_i) = \textit{\textbf{c}}(r_i), \qquad i=1,\ldots ,d.\]

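Observe that for any r, \(\textit{\textbf{c}}(r)\) can be written as

\[\textit{\textbf{c}}(r) = c_0 + r\left( c_1 + r\left( c_2 + \cdots + r\left( c_{d-2} + r\,c_{d-1}\right) \cdots \right) \right) \]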

and so the distribution of \(\textit{\textbf{c}}(r)\) is equivalent to the distribution of the random variable \(Y_0\) in the stochastic process \((Y_d,Y_{d-1},Y_{d-2},\ldots ,Y_0)\) where \(Y_d=0\) and \(Y_{i}=c_{i}+rY_{i+1}\) for \(i<d\). Fourier analysis is often a useful technique for analyzing certain properties (e.g. min entropy, mixing time, etc.) of stochastic processes, and we show how to efficiently calculate \(\max _{y\in \mathbb {Z}_q}\Pr [Y_0=y]\). Calculating the exact probability (or putting a very good bound on it) would require computing sums consisting of q terms, which may be prohibitive when q is on the order of billions, so we furthermore show how certain algebraic symmetries allow us to significantly speed up the computation.
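For small q the process \(Y_d=0\), \(Y_i=c_i+rY_{i+1}\) can be followed exactly by propagating the probability vector one step at a time. The sketch below is a direct dynamic-programming substitute, not the Fourier-analytic method of the paper; it costs on the order of \(d\cdot q\) operations, so it is only practical for toy moduli. The function names are hypothetical, and p denotes the probability that a coefficient is 0.

```python
from fractions import Fraction

def eval_distribution(q, r, d, p=Fraction(1, 3)):
    """Exact distribution of c(r) mod q for i.i.d. coefficients in {-1, 0, 1}."""
    coeff_prob = {0: p, 1: (1 - p) / 2, -1: (1 - p) / 2}
    dist = [Fraction(0)] * q
    dist[0] = Fraction(1)                 # Y_d = 0 with probability 1
    for _ in range(d):                    # steps i = d-1, ..., 0
        new = [Fraction(0)] * q
        for y, prob_y in enumerate(dist):
            if prob_y:
                for c, prob_c in coeff_prob.items():
                    new[(c + r * y) % q] += prob_y * prob_c   # Y_i = c_i + r * Y_{i+1}
        dist = new
    return dist

def max_probability(q, r, d, p=Fraction(1, 3)):
    """max over y of Pr[Y_0 = y], the quantity bounding the soundness error."""
    return max(eval_distribution(q, r, d, p))

if __name__ == "__main__":
    # r = 2 is a root of X^4 + 1 modulo 17.
    print(max_probability(17, 2, 4))
```

Using `Fraction` keeps the probabilities exact, which makes it easy to compare the result against a brute-force enumeration of all \(3^d\) challenges for small d.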

In our applications, we will actually be more interested in a more general case of proving that for a factorization

\[X^d+1=\prod \limits _{i=1}^{d/k}(X^k-r_i)\bmod \,q, \qquad (6)\]

the value \(\textit{\textbf{c}} \bmod (X^k-r_i)\) is not concentrated on any particular polynomial \(c_0'+c_1'X+\ldots +c_{k-1}'X^{k-1}\). But proving this is a simple extension of the above case where we were computing \(\textit{\textbf{c}}(r)=\textit{\textbf{c}}\bmod (X-r)\) because each of the k coefficients \(c_i'X^i\) of \(\textit{\textbf{c}}\bmod (X^k-r_i)\) is only dependent on the coefficients \(c_{jk+i}\) for \(0\le j<d/k\) (i.e. the k coefficients are mutually independent). So the distribution of \(c_i'\) has the distribution of the same stochastic process as above, except it consists of d/k steps rather than d.
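The independence of the k output coefficients can be seen directly from the reduction rule \(X^k\equiv r\): the coefficient of \(X^i\) in the remainder is \(\sum _j c_{jk+i}\,r^j\). A minimal sketch with a hypothetical helper name and toy parameters:

```python
def reduce_mod_binomial(c, k, r, q):
    """Reduce c (coefficient list whose length is a multiple of k) modulo X^k - r over Z_q."""
    out = [0] * k
    for idx, coeff in enumerate(c):
        j, i = divmod(idx, k)             # idx = j*k + i, and X^(j*k + i) = r^j * X^i
        out[i] = (out[i] + coeff * pow(r, j, q)) % q
    return out

if __name__ == "__main__":
    c = [1, -1, 0, 1, 1, 0, -1, 1]
    print(reduce_mod_binomial(c, 2, 4, 17))
    # Changing an odd-indexed coefficient leaves the X^0 slot untouched.
    c_changed = [1, 0, 0, 1, 1, 0, -1, 1]
    print(reduce_mod_binomial(c_changed, 2, 4, 17))
```

Each output slot is therefore the evaluation at r of a polynomial with d/k coefficients, which is the shorter stochastic process mentioned above.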

Proofs of Multiplicative Relations. We now sketch some of the new ingredients of our main result – being able to prove multiplicative relations among committed messages in the commitment scheme defined by (2) and (3). In its most basic form, this involves proving that \(\textit{\textbf{m}}_1\textit{\textbf{m}}_2=\textit{\textbf{m}}_3\), where \(\vec {\textit{\textbf{m}}}=\begin{bmatrix}\textit{\textbf{m}}_1\;\textit{\textbf{m}}_2\;\textit{\textbf{m}}_3\end{bmatrix}^T\).

We first make a series of observations that show that one can extract more than just (4) from the prover that produces valid transcripts \((\vec {\textit{\textbf{w}}},\textit{\textbf{c}},\vec {\textit{\textbf{z}}})\) following the protocol of [2]. If we assume, for the moment, that \(\bar{\textit{\textbf{c}}}\) is invertible, then the extractor can extract a unique \(\vec {\textit{\textbf{r}}}= \bar{\vec {\textit{\textbf{z}}}} / \bar{\textit{\textbf{c}}}\), not necessarily with small coefficients, satisfying

\[\textit{\textbf{B}}_0\vec {\textit{\textbf{r}}} = \vec {\textit{\textbf{t}}}_0. \qquad (7)\]

The reason for the uniqueness is that for any small-norm \(\left( \bar{\vec {\textit{\textbf{z}}}}_1,\bar{\textit{\textbf{c}}}_1\right) , \left( \bar{\vec {\textit{\textbf{z}}}}_2,\bar{\textit{\textbf{c}}}_2\right) \) satisfying

if \(\bar{\vec {\textit{\textbf{z}}}}_1 / \bar{\textit{\textbf{c}}}_1 \ne \bar{\vec {\textit{\textbf{z}}}}_2 / \bar{\textit{\textbf{c}}}_2\), then (4) implies that

where the vector being multiplied by \(\textit{\textbf{B}}_0\) has small coefficients. By the assumption, this vector is additionally non-zero, and so it’s a solution to SIS. The next observation (see Sect. 4), crucial for keeping our product proof short, is that as soon as the (successful) Prover sends \(\vec {\textit{\textbf{w}}}\), he has also committed to a \(\vec {\textit{\textbf{y}}}\) satisfying \(\textit{\textbf{B}}_0\vec {\textit{\textbf{y}}}=\vec {\textit{\textbf{w}}}\). Furthermore, for a challenge \(\textit{\textbf{c}}\), his response \(\vec {\textit{\textbf{z}}}\) will always be

\[\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}} + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}. \qquad (10)\]

This is important because of how the product proof works. In previous protocols the prover sends masked openings

\[\textit{\textbf{f}}_i = \textit{\textbf{a}}_i + \textit{\textbf{x}}\textit{\textbf{m}}_i\]

of the messages with challenge \(\textit{\textbf{x}}\), sometimes equal to \(\textit{\textbf{c}}\), and independently uniformly random maskings \(\textit{\textbf{a}}_i\). Our core approach entails that the message maskings \(\textit{\textbf{a}}_i\) are derived from the randomness masking \(\vec {\textit{\textbf{y}}}\). Hence, since the prover is committed to \(\vec {\textit{\textbf{y}}}\), they are also committed to the \(\textit{\textbf{a}}_i\). Furthermore, the prover doesn’t send the \(\textit{\textbf{f}}_i\) but instead they can be computed by the verifier. We relegate the details to Sect. 5.

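After we have established masked openings \(\textit{\textbf{f}}_i\) with fixed maskings \(\textit{\textbf{a}}_i\), we proceed as in previous works. One makes the observation that one can write

\[\textit{\textbf{f}}_1\textit{\textbf{f}}_2 - \textit{\textbf{x}}\textit{\textbf{f}}_3 = (\textit{\textbf{m}}_1\textit{\textbf{m}}_2 - \textit{\textbf{m}}_3)\textit{\textbf{x}}^2 + (\textit{\textbf{a}}_1\textit{\textbf{m}}_2 + \textit{\textbf{a}}_2\textit{\textbf{m}}_1 - \textit{\textbf{a}}_3)\textit{\textbf{x}} + \textit{\textbf{a}}_1\textit{\textbf{a}}_2.\]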

After additionally committing to the “garbage terms” \(\textit{\textbf{a}}_1\textit{\textbf{m}}_2 + \textit{\textbf{a}}_2\textit{\textbf{m}}_1 - \textit{\textbf{a}}_3\) and \(\textit{\textbf{a}}_1\textit{\textbf{a}}_2\), the prover proceeds to show that the above equation is linear in \(\textit{\textbf{x}}\), which means that the \(\textit{\textbf{m}}_1\textit{\textbf{m}}_2 - \textit{\textbf{m}}_3\) term is 0.
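The degree argument can be checked on plain field elements. The sketch below assumes masked openings of the form \(f_i=a_i+xm_i\) and works with scalars in \(\mathbb {Z}_q\) instead of committed ring elements, so it shows only the algebra behind the check, not the protocol; the function names and the modulus are hypothetical choices.

```python
def masked_openings(a, m, x, q):
    """f_i = a_i + x * m_i for the three messages (assumed form of the openings)."""
    return tuple((ai + x * mi) % q for ai, mi in zip(a, m))

def garbage_terms(a, m, q):
    """Coefficients of x^1 and x^0 in f_1*f_2 - x*f_3."""
    a1, a2, a3 = a
    m1, m2, _ = m
    return (a1 * m2 + a2 * m1 - a3) % q, (a1 * a2) % q

def is_linear_in_x(f, x, g1, g0, q):
    """Check f_1*f_2 - x*f_3 == g1*x + g0, i.e. that the x^2 coefficient vanished."""
    f1, f2, f3 = f
    return (f1 * f2 - x * f3 - g1 * x - g0) % q == 0

if __name__ == "__main__":
    q = 8380417                       # a prime modulus, chosen only for illustration
    a = (7, 11, 13)
    for m in [(3, 5, 15), (3, 5, 16)]:   # second triple violates m1*m2 = m3
        g1, g0 = garbage_terms(a, m, q)
        results = [is_linear_in_x(masked_openings(a, m, x, q), x, g1, g0, q)
                   for x in range(1, 6)]
        print(m, results)
```

When \(m_1m_2\ne m_3\) the difference is \((m_1m_2-m_3)x^2\), which is non-zero for every non-zero x in a field, so a random challenge exposes the false relation.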

An almost immediate consequence of our work would therefore result in a significant reduction of the proofs of [6, 31]. We do not discuss this direction further, because with additional techniques, it is shown in [13] how one can use the full product proof of commitments from the current paper to produce an even shorter proof. For this application (and others) we would need to consider the case where \(X^d+1\) fully splits into linear terms in \(\mathcal {R}_q\), and therefore we can no longer assume that \(\bar{\textit{\textbf{c}}}\) is invertible. So we continue to describe the ingredients needed here.

If \(\bar{\textit{\textbf{c}}}\) is not invertible, then some NTT coefficient of \(\bar{\textit{\textbf{c}}}\) is 0. In this case we would need to run the protocol in parallel to obtain extractions \((\bar{\textit{\textbf{c}}}_1,\bar{\vec {\textit{\textbf{z}}}}_1),\ldots ,(\bar{\textit{\textbf{c}}}_\ell ,\bar{\vec {\textit{\textbf{z}}}}_\ell )\) such that for every NTT coefficient, some \(\bar{\textit{\textbf{c}}}_i\) is non-zero in that NTT coefficient. In this case, we can again prove that a valid prover knows a unique \(\vec {\textit{\textbf{r}}}^*\) satisfying (7), and every \(\vec {\textit{\textbf{w}}}\) is similarly a commitment to a \(\vec {\textit{\textbf{y}}}^*\) satisfying (10). One could obtain such \(\bar{\textit{\textbf{c}}}_i\) by sending several challenges in parallel, but for technical reasons (described in Sect. 5) having the challenges \(\textit{\textbf{c}}_i\) related via specific automorphisms results in smaller proofs. We now explain how the automorphisms are chosen.

When \(X^d+1\) splits into linear terms, one can also write \(X^d+1\) as in (6) where the multiplicative terms \(X^k-r_i\) are not irreducible. In particular, we would like to consider such a factorization where \(q^k\approx 2^{128}\) to have approximately 128 bits of soundness in the protocol. Then using the results on the distribution of \(\textit{\textbf{c}} \bmod X^k-r_i\), we obtain that except with \(2^{-128}\) probability, two \(\textit{\textbf{c}},\textit{\textbf{c}}'\) will not be equivalent modulo \(X^k-r_i\). Since \(X^k-r_i\) can be further factored as \(X^k-r_i=\prod _{j=1}^k(X-r_j)\), this directly implies that one of these NTT coefficients will be distinct – in particular (\(\textit{\textbf{c}} \ne \textit{\textbf{c}}'\bmod X-r_j)\) for some j. Then we define the automorphisms to be exactly those that cycle through the NTT coefficients represented by \(X-r_j\), for \(j=1\) to k, and therefore for every NTT coefficient, one of the k automorphisms will result in \(\bar{\textit{\textbf{c}}}\) being non-zero there.

The combination of these techniques, along with several key optimizations that minimize the number of necessary “garbage terms”, results in a proof (described in Sect. 5) that is only two kilobytes longer (see Sect. 5.3) than just the opening proof in [2]. Furthermore, if one would like to prove many multiplicative relations, the size of the proof even further approaches the size of the proof from [2] because the extra elements needed in the proof amortize over all the proofs.

2 Preliminaries

2.1 Notation

As is often the case in ring-based lattice cryptography, computation will be performed in the ring \(\mathcal {R}_q= \mathbb {Z}_q[X]/(X^d+1)\), which is the quotient ring of the ring of integers \(\mathcal {R}\) of the power-of-two 2d-th cyclotomic number field modulo a rational prime \(q \in \mathbb {Z}\).

We use bold letters \(\textit{\textbf{f}}\) for polynomials in \(\mathcal {R}\) or \(\mathcal {R}_q\), arrows for integer vectors \(\vec {v}\) over \(\mathbb {Z}_q\), bold letters with arrows \(\vec {\textit{\textbf{b}}}\) for vectors of polynomials over \(\mathcal {R}\) or \(\mathcal {R}_q\) and capital letters A and \(\textit{\textbf{A}}\) for integer and polynomial matrices, respectively. We write \(x \overset{\$}{\leftarrow }S\) when \(x \in S\) is sampled uniformly at random from the set S and similarly \(x \overset{\$}{\leftarrow }D\) when x is sampled according to the distribution D.

For \(\textit{\textbf{f}}, \textit{\textbf{g}} \in \mathcal {R}\), we have the coefficient norm

The norm is extended to vectors \(\vec {\textit{\textbf{v}}} = (\textit{\textbf{v}}_1, \dots , \textit{\textbf{v}}_k)\) of polynomials in the natural way,

2.2 Prime Splitting and Galois Automorphisms

Let l be a power of two dividing d and suppose \(q - 1 \equiv 2l \pmod {4l}\). Then, \(\mathbb {Z}_q\) contains primitive 2l-th roots of unity but no elements with order a higher power of two, and the polynomial \(X^d + 1\) factors into l irreducible binomials \(X^{d/l} - \zeta \) modulo q where \(\zeta \) runs over the 2l-th roots of unity in \(\mathbb {Z}_q\) [27, Theorem 2.3].
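The factorization pattern can be confirmed numerically for small parameters. The sketch below finds the primitive 2l-th roots of unity by brute force (for a power of two l these are exactly the elements with \(\zeta ^l=-1\)) and multiplies out the binomials. It checks only that the product equals \(X^d+1\), not that each binomial is irreducible, and the function names are hypothetical.

```python
def poly_mul(a, b, q):
    """Plain polynomial product over Z_q (no reduction modulo X^d + 1)."""
    res = [0] * (len(a) + len(b) - 1)
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            res[i + j] = (res[i + j] + ai * bj) % q
    return res

def splits_as_claimed(d, l, q):
    """Check X^d + 1 = prod over primitive 2l-th roots zeta of (X^(d/l) - zeta) mod q."""
    assert d % l == 0 and (q - 1) % (4 * l) == 2 * l, "need q - 1 = 2l (mod 4l)"
    zetas = [z for z in range(1, q) if pow(z, l, q) == q - 1]  # zeta^l = -1
    prod = [1]
    for z in zetas:
        binomial = [(-z) % q] + [0] * (d // l - 1) + [1]       # X^(d/l) - zeta
        prod = poly_mul(prod, binomial, q)
    target = [1] + [0] * (d - 1) + [1]                         # X^d + 1
    return len(zetas) == l and prod == target

if __name__ == "__main__":
    print(splits_as_claimed(8, 2, 5))     # X^8 + 1 = (X^4 - 2)(X^4 - 3) mod 5
    print(splits_as_claimed(16, 4, 41))
```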

The ring \(\mathcal {R}_q\) has a group of automorphisms \(\mathsf {Aut}(\mathcal {R}_q)\) that is isomorphic to \(\mathbb {Z}_{2d}^\times \),

where \(\sigma _i\) is defined by \(\sigma _i(X) = X^i\). In fact, these automorphisms come from the Galois automorphisms of the 2d-th cyclotomic number field which factor through \(\mathcal {R}_q\).
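On coefficient vectors, \(\sigma _i\) simply moves the coefficient of \(X^j\) to \(X^{ij \bmod 2d}\), with a sign flip whenever the exponent wraps past d. The sketch below assumes a fully splitting toy modulus so that the effect on NTT coefficients can be observed as a permutation of evaluation points; the helper names are hypothetical.

```python
def apply_automorphism(f, i, q):
    """sigma_i(f): substitute X -> X^i in Z_q[X]/(X^d + 1), for odd i."""
    d = len(f)
    out = [0] * d
    for j, coeff in enumerate(f):
        e = (i * j) % (2 * d)             # X has multiplicative order 2d in the ring
        if e < d:
            out[e] = (out[e] + coeff) % q
        else:                             # X^d = -1
            out[e - d] = (out[e - d] - coeff) % q
    return out

def evaluate(f, x, q):
    """Horner evaluation of f at x modulo q."""
    acc = 0
    for coeff in reversed(f):
        acc = (acc * x + coeff) % q
    return acc

if __name__ == "__main__":
    q, f = 17, [3, 1, 4, 1]
    roots = [r for r in range(q) if pow(r, len(f), q) == q - 1]
    g = apply_automorphism(f, 3, q)
    # sigma_3(f) evaluated at a root r equals f evaluated at r^3, another root.
    print([(evaluate(g, r, q), evaluate(f, pow(r, 3, q), q)) for r in roots])
```

In this toy case the map \(r\mapsto r^3\) permutes the four roots, so \(\sigma _3\) permutes the NTT coefficients of f.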

The group \(\mathsf {Aut}(\mathcal {R}_q)\) acts transitively on the prime ideals \((X^{d/l} - \zeta )\) in \(\mathcal {R}_q\) and every \(\sigma _i\) factors through field isomorphisms

Concretely, for \(i \in \mathbb {Z}_{2d}^\times \) it holds that

To see this, observe that the roots of \(X^{d/l} - \zeta ^{i^{-1}}\) (in an appropriate extension field of \(\mathbb {Z}_q\)) are also roots of \(X^{id/l} - \zeta \). Then, for \(f \in \mathcal {R}_q\),

The cyclic subgroup \(\langle {2l + 1}\rangle \subset \mathbb {Z}_{2d}^\times \) generated by \(2l + 1\) has order d/l [27, Lemma 2.4] and stabilizes every prime ideal \((X^{d/l} - \zeta )\) since \(\zeta \) has order 2l. The quotient group \(\mathbb {Z}_{2d}^\times /\langle {2l+1}\rangle \) has order l and hence acts simply transitively on the l prime ideals. Therefore, we can index the prime ideals by \(i \in \mathbb {Z}_{2d}^\times /\langle {2l+1}\rangle \) and write

Now, the product of the \(k \mid l\) prime ideals \((X^{d/l} - \zeta ^i)\) where i runs over \(\langle {2l/k + 1}\rangle /\langle {2l + 1}\rangle \) is given by the ideal \((X^{kd/l} - \zeta ^k)\). So, we can partition the l prime ideals into l/k groups of k ideals each, and write

Another way to write this, which we will use in our protocols, is to note that \(\mathbb {Z}_{2d}^\times /\langle {2l/k + 1}\rangle \cong \mathbb {Z}_{2l/k}^\times \) and the powers \((2l/k + 1)^i\) for \(i = 0, \dots , k-1\) form a complete set of representatives for \(\langle {2l/k + 1}\rangle /\langle {2l + 1}\rangle \). So, if \(\sigma = \sigma _{2l/k + 1} \in \mathsf {Aut}(\mathcal {R}_q)\), then

and the prime ideals are indexed by \((i,j) \in I = \{0,\dots ,k-1\} \times \mathbb {Z}_{2l/k}^\times \).

2.3 Module SIS/LWE

We employ the computationally binding and computationally hiding commitment scheme from [2] in our protocols, and rely on the well-known Module-LWE (MLWE) and Module-SIS (MSIS) [21, 25, 26, 29] problems to prove the security of our constructions. Both problems are defined over a ring \(\mathcal {R}_q\) for a positive modulus \(q\in \mathbb {Z}^+\).

Definition 2.1

(\(\mathsf {MSIS}_{n,m,\beta _{\text {SIS}}}\)). The goal in the Module-SIS problem with parameters \(n,m>0\) and \(0<\beta _{\text {SIS}}<q\) is to find, for a given matrix \(\textit{\textbf{A}}\overset{\$}{\leftarrow }\mathcal {R}_q^{n\times m}\), \(\vec {\textit{\textbf{x}}}\in \mathcal {R}_q^m\) such that \(\textit{\textbf{A}}\vec {\textit{\textbf{x}}} = \vec {\textit{\textbf{0}}}\) over \(\mathcal {R}_q\) and \(0< \left\Vert {\vec {\textit{\textbf{x}}}}\right\Vert _2 \le \beta _{\text {SIS}}\). We say that a PPT adversary \(\mathcal {A}\) has advantage \(\epsilon \) in solving \(\mathsf {MSIS}_{n,m,\beta _{\text {SIS}}}\) if

Definition 2.2

(\(\mathsf {MLWE}_{n,m,\chi }\)). In the Module-LWE problem with parameters \(n,m>0\) and an error distribution \(\chi \) over \(\mathcal {R}\), the PPT adversary \(\mathcal {A}\) is asked to distinguish \((\textit{\textbf{A}},\vec {\textit{\textbf{t}}})\overset{\$}{\leftarrow }\mathcal {R}_q^{m\times n}\times \mathcal {R}_q^m\) from \((\textit{\textbf{A}},\textit{\textbf{A}}\vec {\textit{\textbf{s}}}+\vec {\textit{\textbf{e}}})\) for \(\textit{\textbf{A}}\overset{\$}{\leftarrow }\mathcal {R}_q^{m\times n}\), a secret vector \(\vec {\textit{\textbf{s}}}\overset{\$}{\leftarrow }\chi ^n \) and error vector \(\vec {\textit{\textbf{e}}}\overset{\$}{\leftarrow }\chi ^m\). We say that \(\mathcal {A}\) has advantage \(\epsilon \) in solving \(\mathsf {MLWE}_{n,m,\chi }\) if

For our practical security estimations of these two problems against known attacks, the parameter m in both of the problems does not play a crucial role. Therefore, we sometimes simply omit m and use the notations \(\mathsf {MSIS}_{n,B}\) and \(\mathsf {MLWE}_{n,\chi }\). The parameters \(\kappa \) and \(\lambda \) denote the module ranks for \(\mathsf {MSIS}\) and \(\mathsf {MLWE}\), respectively.

2.4 Error Distribution, Discrete Gaussians and Rejection Sampling

For sampling randomness in the commitment scheme that we use, and to define the particular variant of the Module-LWE problem that we use, we need to specify the error distribution \(\chi ^d\) on \(\mathcal {R}\). In general any of the standard choices in the literature is fine. So, for example, \(\chi \) can be a narrow discrete Gaussian distribution or the uniform distribution on a small interval. In the numerical examples in Sect. 5.3 we assume that \(\chi \) is the computationally simple centered binomial distribution on \(\{-1,0,1\}\) where \(\pm 1\) both have probability 5/16 and 0 has probability 6/16. This distribution is chosen (rather than the more “natural” uniform one) because it is easy to sample given a random bitstring by computing \(a_1 + a_2 - b_1 - b_2 \bmod 3\) with uniformly random bits \(a_i, b_i\).
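The stated probabilities can be confirmed by enumerating all 16 bit patterns. The sketch below assumes that "mod 3" refers to the centered representative in \(\{-1,0,1\}\); the function names are hypothetical.

```python
from collections import Counter
from itertools import product
import secrets

def centered_mod3(x):
    """Representative of x mod 3 in {-1, 0, 1}."""
    r = x % 3
    return r - 3 if r == 2 else r

def sample_chi(bits=None):
    """One sample of chi from four bits a1, a2, b1, b2 (drawn freshly if not given)."""
    if bits is None:
        n = secrets.randbits(4)
        bits = [(n >> k) & 1 for k in range(4)]
    a1, a2, b1, b2 = bits
    return centered_mod3(a1 + a2 - b1 - b2)

def exact_distribution():
    """Count how many of the 16 equally likely bit patterns give each value."""
    return Counter(sample_chi(bits) for bits in product((0, 1), repeat=4))

if __name__ == "__main__":
    print(exact_distribution())
```

The values \(\pm 2\) of \(a_1+a_2-b_1-b_2\) wrap around to \(\mp 1\), which is what raises the weight of \(\pm 1\) from 4/16 to 5/16.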

Rejection Sampling. In our zero-knowledge proof, the prover will want to output a vector \(\vec {\textit{\textbf{z}}}\) whose distribution should be independent of a secret randomness vector \(\vec {\textit{\textbf{r}}}\), so that \(\vec {\textit{\textbf{z}}}\) cannot be used to gain any information on the prover’s secret. During the protocol, the prover computes \(\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}} + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}\) where \(\vec {\textit{\textbf{r}}}\) is the randomness used to commit to the prover’s secret, \(\textit{\textbf{c}} \overset{\$}{\leftarrow }C\) is a challenge polynomial, and \(\vec {\textit{\textbf{y}}}\) is a “masking” vector. To remove the dependency of \(\vec {\textit{\textbf{z}}}\) on \(\vec {\textit{\textbf{r}}}\), we use the rejection sampling technique by Lyubashevsky [23, 24]. In the two variants of this technique the masking vector is either sampled uniformly from some bounded region or using a discrete Gaussian distribution. In the high dimensions we will encounter, the Gaussian variant is far superior as it gives acceptable rejection probabilities for much narrower distributions. We first define the discrete Gaussian distribution and then state the rejection sampling algorithm in Fig. 1, which plays a central role in Lemma 2.4.

Definition 2.3

The discrete Gaussian distribution on \(\mathcal {R}^\ell \) centered around \(\vec {\textit{\textbf{v}}} \in \mathcal {R}^\ell \) with standard deviation \(\mathfrak {s}> 0\) is given by

When it is centered around \(\vec {\textit{\textbf{0}}} \in \mathcal {R}^\ell \) we write \(D^{\ell d}_\mathfrak {s}= D^{\ell d}_{\vec {\textit{\textbf{0}}}, \mathfrak {s}}\).

Lemma 2.4

(Rejection Sampling). Let \(V \subseteq \mathcal {R}^\ell \) be a set of polynomials with norm at most T and \(\rho :V \rightarrow [{0,1}]\) be a probability distribution. Also, write \(\mathfrak {s}= 11 T\) and \(M = 3\). Now, sample \(\vec {\textit{\textbf{v}}} \overset{\$}{\leftarrow }\rho \) and \(\vec {\textit{\textbf{y}}} \overset{\$}{\leftarrow }D^{\ell d}_\mathfrak {s}\), set \(\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}} + \vec {\textit{\textbf{v}}}\), and run \(b \leftarrow \mathsf {Rej}\left( {\vec {\textit{\textbf{z}}}, \vec {\textit{\textbf{v}}}, \mathfrak {s}}\right) \). Then, the probability that \(b = 0\) is at least \((1- 2^{-100})/M\) and the distribution of \((\vec {\textit{\textbf{v}}}, \vec {\textit{\textbf{z}}})\), conditioned on \(b = 0\), is within statistical distance of \(2^{-100}/M\) of the product distribution \(\rho \times D^{\ell d}_\mathfrak {s}\).

Fig. 1. Rejection sampling [24].
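The body of the figure is not reproduced in this text. A common way to realize such a test, stated here as an assumption rather than as the authors' exact algorithm, is to accept with probability \(\frac{1}{M}\exp \left( \frac{-2\langle \vec {z},\vec {v}\rangle +\Vert \vec {v}\Vert ^2}{2\mathfrak {s}^2}\right) \), which is the ratio of the centered and shifted Gaussian densities divided by M. The sketch below works on flat integer coefficient vectors; the names `rej` and `respond` are hypothetical, and rounding a continuous Gaussian is only a stand-in for a proper discrete Gaussian sampler.

```python
import math
import random

def rej(z, v, s, M=3.0, u=None):
    """Return 0 (accept) or 1 (reject); u may be fixed for deterministic testing."""
    if u is None:
        u = random.random()
    inner = sum(zi * vi for zi, vi in zip(z, v))
    norm_sq = sum(vi * vi for vi in v)
    threshold = math.exp((-2 * inner + norm_sq) / (2 * s * s)) / M
    return 1 if u > threshold else 0

def respond(v, s, rng, max_tries=1000):
    """Resample the mask y until z = y + v passes the rejection step."""
    for _ in range(max_tries):
        # Rounded continuous Gaussian: an approximation, not an exact sampler.
        y = [round(rng.gauss(0.0, s)) for _ in v]
        z = [yi + vi for yi, vi in zip(y, v)]
        if rej(z, v, s, u=rng.random()) == 0:
            return z
    raise RuntimeError("no accepted response within max_tries")

if __name__ == "__main__":
    v = [1, -1, 0, 1]                      # plays the role of c * r
    T = math.sqrt(sum(x * x for x in v))   # norm bound on v
    print(respond(v, 11 * T, random.Random(1)))
```

With \(\mathfrak {s}=11T\) the exponential stays below M except with very small probability, so each attempt succeeds with probability close to 1/M.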

We will also use the following tail bound, which follows from [1, Lemma 1.5(i)].

Lemma 2.5

Let \(\vec {\textit{\textbf{z}}} \overset{\$}{\leftarrow }D^{\ell d}_\mathfrak {s}\). Then

2.5 Commitment Scheme

In our protocol, we use a variant of the commitment scheme from [2] which commits to a vector of messages in \(\mathcal {R}_q\). Our basic proof of knowledge of multiplicative relations will prove that \(\textit{\textbf{m}}_1\textit{\textbf{m}}_2=\textit{\textbf{m}}_3\), so for simplicity, we just describe the commitment scheme for three messages.

The public parameters are a uniformly random matrix \(\textit{\textbf{B}}_0 \in \mathcal {R}_q^{\mu \times (\lambda + \mu + 3)}\) and uniform vectors \(\vec {\textit{\textbf{b}}}_1,\dots ,\vec {\textit{\textbf{b}}}_3 \in \mathcal {R}_q^{\lambda + \mu + 3}\). To commit to \(\vec {\textit{\textbf{m}}} = (\textit{\textbf{m}}_1, \textit{\textbf{m}}_2, \textit{\textbf{m}}_3)^T \in \mathcal {R}_q^3\), we choose a random short polynomial vector \(\vec {\textit{\textbf{r}}} \overset{\$}{\leftarrow }\chi ^{(\lambda + \mu + 3)d}\) from the error distribution and output the commitment

The commitment scheme is computationally hiding under the Module-LWE assumption and computationally binding under the Module-SIS assumption; see [2]. Moreover, the scheme is not only binding for the opening \((\vec {\textit{\textbf{r}}},\vec {\textit{\textbf{m}}})\) known by the prover, but also binding with respect to a relaxed opening \((\bar{\textit{\textbf{c}}},\vec {\textit{\textbf{r}}}^*,\vec {\textit{\textbf{m}}}^*)\). The relaxed opening also includes a short polynomial \(\bar{\textit{\textbf{c}}}\), the randomness vector \(\vec {\textit{\textbf{r}}}^*\) is longer than \(\vec {\textit{\textbf{r}}}\), and the following equations hold,

The notion of relaxed opening is important since there is an efficient protocol for proving knowledge of a relaxed opening. We do not go into details here since we will define a new notion of a binding relaxed opening and provide a proof of knowledge protocol.

The utility of the commitment scheme for zero-knowledge proof systems stems from the fact that one can compute module homomorphisms on committed messages. For example, let \(\textit{\textbf{a}}_1\) and \(\textit{\textbf{a}}_2\) be from \(\mathcal {R}_q\). Then

is a commitment to the message \(\textit{\textbf{a}}_1\textit{\textbf{m}}_1 + \textit{\textbf{a}}_2\textit{\textbf{m}}_2\) with matrix \(\textit{\textbf{a}}_1\vec {\textit{\textbf{b}}}_1 + \textit{\textbf{a}}_2\vec {\textit{\textbf{b}}}_2\). This module homomorphic property, together with a proof that a commitment is a commitment to the zero polynomial, allows one to prove linear relations among committed messages over \(\mathcal {R}_q\).
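The homomorphic property can be checked numerically on a toy instance. The following is a minimal sketch, assuming a tiny modulus `Q = 257`, ring degree `D = 8` and a randomness vector of length `N = 5`; these values, and all helper names, are illustrative assumptions and are far too small to be secure.

```python
import random

Q = 257   # toy modulus (assumption; far too small for security)
D = 8     # toy ring degree: R_q = Z_Q[X]/(X^D + 1)
N = 5     # length of the randomness vector (stands in for lambda + mu + 3)
MU = 1    # number of rows of B0

def poly_mul(a, b):
    """Multiply in Z_Q[X]/(X^D + 1) (negacyclic convolution)."""
    res = [0] * D
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            k = i + j
            if k < D:
                res[k] = (res[k] + ai * bj) % Q
            else:  # X^D = -1
                res[k - D] = (res[k - D] - ai * bj) % Q
    return res

def poly_add(a, b):
    return [(x + y) % Q for x, y in zip(a, b)]

def inner(u, v):
    """Inner product of two vectors of ring elements."""
    acc = [0] * D
    for a, b in zip(u, v):
        acc = poly_add(acc, poly_mul(a, b))
    return acc

def commit(B0, bs, msgs, r):
    """t0 = B0 r and t_i = <b_i, r> + m_i."""
    t0 = [inner(row, r) for row in B0]
    ts = [poly_add(inner(b, r), m) for b, m in zip(bs, msgs)]
    return t0, ts

rng = random.Random(0)
rand_poly = lambda: [rng.randrange(Q) for _ in range(D)]
short_poly = lambda: [rng.choice([-1, 0, 1]) % Q for _ in range(D)]

B0 = [[rand_poly() for _ in range(N)] for _ in range(MU)]
bs = [[rand_poly() for _ in range(N)] for _ in range(3)]
msgs = [rand_poly() for _ in range(3)]
r = [short_poly() for _ in range(N)]
t0, (t1, t2, t3) = commit(B0, bs, msgs, r)

# Linear combination a1*t1 + a2*t2 of two message commitments.
a1, a2 = rand_poly(), rand_poly()
lhs = poly_add(poly_mul(a1, t1), poly_mul(a2, t2))

# The same value, written as a commitment to a1*m1 + a2*m2 under a1*b1 + a2*b2.
b_comb = [poly_add(poly_mul(a1, x), poly_mul(a2, y)) for x, y in zip(bs[0], bs[1])]
m_comb = poly_add(poly_mul(a1, msgs[0]), poly_mul(a2, msgs[1]))
rhs = poly_add(inner(b_comb, r), m_comb)
```

Both sides agree because multiplication in \(\mathcal {R}_q\) is commutative and distributes over addition; the randomness \(\vec {\textit{\textbf{r}}}\) is unchanged by the linear combination.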

3 Distribution in the NTT

In this section we present a way to construct challenge sets \(\mathcal {C}\subset \mathcal {R}_q\) so as to be able to compute the (almost exact) probability that \(\textit{\textbf{c}}-\textit{\textbf{c}}'\) is invertible in \(\mathcal {R}_q\), when \(\textit{\textbf{c}}\) and \(\textit{\textbf{c}}'\) are sampled from some distribution C over \(\mathcal {C}\). Recall that \(d\ge l\) are powers of 2. Moreover,

\[X^d + 1 \equiv \prod _{i \in \mathbb {Z}_{2l}^{\times }} \left( X^{d/l} - \zeta ^i\right) \pmod {q},\]

where \(\zeta \in \mathbb {Z}_q\) is a primitive 2l-th root of unity (in this section, the factors \(X^{d/l}-\zeta ^i\) are not necessarily irreducible as this doesn’t really matter for the results here). The challenge set is defined as all polynomials of degree less than d with coefficients in \(\{-1,0,1\}\), i.e., \(\mathcal {C}= \{-1,0,1\}^d \subset \mathcal {R}_q\). The coefficients of a challenge \(\textit{\textbf{c}} \in \mathcal {C}\) are independently and identically distributed, where 0 has probability p and \(\pm 1\) both have probability \((1-p)/2\). For the resulting distribution over \(\mathcal {C}\) we write C, and sampling a challenge \(\textit{\textbf{c}}\) from this distribution is written as \(\textit{\textbf{c}} \leftarrow C\).

In the remainder of this section we use Fourier analysis to study the distribution of \(\textit{\textbf{c}} \bmod (X^{d/l} - \zeta ^i)\) for \(\textit{\textbf{c}}\leftarrow C\) and \(i\in \mathbb {Z}_{2l}^{\times }\). Lemma 3.1 shows that this distribution does not depend on i.

In [9] a similar analysis is performed. The main difference from our approach is that they sample the coefficients from a binomial distribution centered at 0. In particular, our coefficient distribution with \(p=1/2\) corresponds to a special case of the binomial distribution considered in [9]. For our application it makes sense to consider various distributions over \(\{-1,0,1\}\). The binomial distribution does allow for the derivation of an elegant upper bound on the maximum probability of \(\textit{\textbf{c}} \bmod (X^{d/l}-\zeta ^i)\). However, this upper bound is only applicable when \(\sqrt{q} \le 2d\). For this reason we derive a less elegant but much tighter upper bound for various distributions over \(\{-1,0,1\}\) that is also applicable when \(\sqrt{q} >2d\).

Lemma 3.1

Let \(\textit{\textbf{x}} \in \mathcal {R}_q\) be a random polynomial with coefficients independently and identically distributed. Then \(\mathcal {R}_q/(X^{d/l} - \zeta ^i) \cong \mathcal {R}_q/(X^{d/l} - \zeta ^j)\), and \(\textit{\textbf{x}} \bmod (X^{d/l} - \zeta ^i)\) and \(\textit{\textbf{x}} \bmod (X^{d/l} - \zeta ^j)\) are identically distributed for all \(i,j \in \mathbb {Z}_{2l}^{\times }\).

Proof

First suppose that \(X^{d/l}-\zeta ^i\) is irreducible for all \(i\in \mathbb {Z}_{2l}^{\times }\). Then \(\mathfrak {q}_i = (q,X^{d/l}-\zeta ^i)\) is prime in \(K=\mathbb {Q}[X]/(X^d+1)\) and for all \(i,j \in \mathbb {Z}_{2l}^{\times }\) there exists an automorphism \(\sigma \in {\text {Gal}}\left( K/\mathbb {Q}\right) \) such that \(\sigma (\mathfrak {q}_i) = \mathfrak {q}_j\). Hence, \(\sigma \) induces an isomorphism between the finite fields \(\mathcal {R}_q/(X^{d/l} - \zeta ^i)\) and \(\mathcal {R}_q/(X^{d/l} - \zeta ^j)\).

Since the coefficients of \(\textit{\textbf{x}}\) are i.i.d., it holds that \(\sigma (\textit{\textbf{x}})\) follows the same distribution over \(\mathcal {R}_q\) as \(\textit{\textbf{x}}\). Hence, \(\textit{\textbf{x}} \bmod (X^{d/l} - \zeta ^i)\) follows the same distribution as \(\sigma (\textit{\textbf{x}} \bmod (X^{d/l} - \zeta ^i)) = \sigma (\textit{\textbf{x}}) \bmod (X^{d/l} - \zeta ^j)\) and as \(\textit{\textbf{x}} \bmod (X^{d/l} - \zeta ^j)\), which proves the lemma for this case.

Now suppose that \(X^{d/l}-\zeta ^i\) is reducible over \(\mathbb {Z}_q\); then so is \(X^{d/l}-\zeta ^j\). Moreover, since K is Galois, both these polynomials split into the same number of irreducible factors, and for every pair f(X), g(X) of irreducible factors there exists an automorphism \(\sigma \in {\text {Gal}}(K/\mathbb {Q})\) such that \(\sigma \left( (q,f(X))\right) =(q,g(X))\). Using these automorphisms the lemma follows in an analogous manner.

Let us now consider the coefficients of the polynomial \(\textit{\textbf{c}} \mod (X^{d/l} - \zeta )\) for \(\textit{\textbf{c}}\leftarrow C\). Clearly all coefficients follow the same distribution over \(\mathbb {Z}_q\). Let us write Y for the random variable over \(\mathbb {Z}_q\) that follows this distribution. The following lemma gives an upper bound on the maximum probability of Y.

Lemma 3.2

Let the random variable Y over \(\mathbb {Z}_q\) be defined as above. Then for all \(x\in \mathbb {Z}_q\),

The proof of Lemma 3.2 is given in the full version of the paper.
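To make the Fourier-analytic approach concrete, the sketch below compares the exact distribution of \(Y\) with a bound obtained from Fourier inversion on a toy instance. It assumes that \(Y = \sum _{k=0}^{l-1} c_k\zeta ^k\) for i.i.d. coefficients \(c_k\), so that the Fourier coefficient at frequency \(j\) is \(\prod _k (p + (1-p)\cos (2\pi j\zeta ^k/q))\). The bound \(\Pr (Y=x) \le (1 + \sum _{j \ne 0} |\widehat{P}(j)|)/q\) used here is the standard inversion estimate and is an assumption about the shape of the lemma, not a quotation of it. The parameters `q = 17`, `l = 4`, `zeta = 2` and all function names are illustrative.

```python
import math

def char_fn(j, q, zeta, l, p):
    """Fourier coefficient of Y at frequency j."""
    prod = 1.0
    for k in range(l):
        prod *= p + (1 - p) * math.cos(2 * math.pi * j * pow(zeta, k, q) / q)
    return prod

def exact_distribution(q, zeta, l, p):
    """Exact law of Y = sum_k c_k * zeta^k mod q, by repeated convolution."""
    dist = [0.0] * q
    dist[0] = 1.0
    step = {0: p, 1: (1 - p) / 2, -1: (1 - p) / 2}
    for k in range(l):
        w = pow(zeta, k, q)
        new = [0.0] * q
        for y, pr in enumerate(dist):
            for c, pc in step.items():
                new[(y + c * w) % q] += pr * pc
        dist = new
    return dist

def fourier_bound(q, zeta, l, p):
    """(1 + sum over all nonzero frequencies of |P^(j)|) / q."""
    return (1 + sum(abs(char_fn(j, q, zeta, l, p)) for j in range(1, q))) / q

def reduced_fourier_bound(q, zeta, l, p):
    """Same value, summing one representative per coset of <zeta> (size 2l)."""
    seen, total = set(), 0.0
    for j in range(1, q):
        if j in seen:
            continue
        seen.update(j * pow(zeta, m, q) % q for m in range(2 * l))
        total += 2 * l * abs(char_fn(j, q, zeta, l, p))
    return (1 + total) / q

# Toy parameters: 2 has order 8 = 2l modulo 17.
q, zeta, l, p = 17, 2, 4, 0.5
dist = exact_distribution(q, zeta, l, p)
max_exact = max(dist)
bound_full = fourier_bound(q, zeta, l, p)
bound_reduced = reduced_fourier_bound(q, zeta, l, p)
```

The reduced sum visits only \((q-1)/(2l)\) frequencies, which is the saving that matters for a 32-bit modulus, where the full sum over \(\mathbb {Z}_q^{\times }\) would be much more expensive.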

The following lemma shows that, by utilizing certain algebraic symmetries, we can reduce the number of terms in the summation of Lemma 3.2 by a factor 2l, thereby allowing the maximum probability to be computed more efficiently.

Lemma 3.3

Let the random variable Y over \(\mathbb {Z}_q\) be defined as above. Then for all \(x\in \mathbb {Z}_q\),

Proof

Let \(a,b \in \mathbb {Z}_q^{\times }\) such that \(ab^{-1} \in \langle \zeta \rangle \), i.e., \(a = b \zeta ^m\) for some m. Now note that \(\{1,\zeta ,\dots ,\zeta ^{l-1}\}=\langle \zeta \rangle / \pm 1 = \zeta ^m \langle \zeta \rangle / \pm 1\) for all \(m\in \mathbb {Z}\). Since \(\cos (x)\) is an even function it therefore follows that \(\widehat{P}(a)=\widehat{P}(b)\), from which the lemma immediately follows.

The random variable \(Y=Y_{l}\) corresponds to a random walk of length l over \(\mathbb {Z}_q\) defined as follows

where \(b_n\) are i.i.d. with distribution \(\mu (0)=p\) and \(\mu (1)=\mu (-1)=(1-p)/2\). Random walks of this type have been studied extensively [7, 10, 12, 17, 18] and convergence is expected in time \(O(\log q / H_2(\mu ))\) [7], where

However, there exist random walks of this form for which convergence only occurs in time \(O( \log q \log \log q)\) [12, 17].

Let us consider the following example. Let \(q = 4294962689 \equiv 1 \pmod {512}\) be a 32-bit prime and let \(d\mid 256\) be the dimension of the ring \(\mathcal {R}\). Then, for any such d, q splits completely in \(\mathbb {Z}[X]/(X^d+1)\), hence in this case \(l=d\). Moreover, suppose that the coefficients of challenges are sampled from a uniform distribution over \(\{-1,0,1\}\), i.e., \(p=1/3\). Table 1 shows a bound M on the maximum probability \(\max _{x \in \mathbb {Z}_q} |\Pr (Y=x)|\), as defined in Lemma 3.2 and Lemma 3.3.
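The splitting claim in this example rests on the congruence \(q \equiv 1 \pmod {2d}\), which guarantees a primitive 2d-th root of unity in \(\mathbb {Z}_q\). A minimal sketch of this check follows; the helper names `primitive_root_of_unity` and `roots_of_x_d_plus_1` are hypothetical, and the root search assumes that its modulus is prime and that the requested order is a power of two.

```python
Q = 4294962689  # the modulus from the example above

def primitive_root_of_unity(q, order):
    """Return zeta in Z_q of multiplicative order exactly `order`.

    Assumes q is prime, `order` is a power of two and divides q - 1.
    """
    if (q - 1) % order != 0:
        raise ValueError("order must divide q - 1")
    for g in range(2, 1000):
        zeta = pow(g, (q - 1) // order, q)
        # For a power-of-two order, zeta is primitive iff zeta^(order/2) = -1.
        if pow(zeta, order // 2, q) == q - 1:
            return zeta
    raise ValueError("no suitable base found in the search range")

def roots_of_x_d_plus_1(q, d):
    """The d roots of X^d + 1 in Z_q: odd powers of a primitive 2d-th root."""
    zeta = primitive_root_of_unity(q, 2 * d)
    return [pow(zeta, i, q) for i in range(1, 2 * d, 2)]

# q = 1 mod 512 implies q = 1 mod 2d for every power of two d dividing 256.
SPLITS_FOR_ALL_D = all((Q - 1) % (2 * d) == 0 for d in (1, 2, 4, 8, 16, 32, 64, 128, 256))
```

Calling `roots_of_x_d_plus_1(Q, 256)` would then list the 256 linear factors \(X - \zeta ^i\) for the modulus in the text; the same function can be tried on a small prime such as 257 with \(d = 8\) to inspect the output by hand.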

4 Opening Proof

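Suppose the prover knows an opening to the commitment

\[\vec {\textit{\textbf{t}}}_0 = \textit{\textbf{B}}_0\vec {\textit{\textbf{r}}}, \qquad \textit{\textbf{t}}_1 = \langle {\vec {\textit{\textbf{b}}}_1,\vec {\textit{\textbf{r}}}}\rangle + \textit{\textbf{m}}.\]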

The standard protocol for proving this, stemming from [2], works by giving an approximate proof for the first equation \(\vec {\textit{\textbf{t}}}_0 = \textit{\textbf{B}}_0\vec {\textit{\textbf{r}}}\). So, the prover commits to a short masking vector \(\vec {\textit{\textbf{y}}}\) from a discrete Gaussian distribution by sending \(\vec {\textit{\textbf{w}}} = \textit{\textbf{B}}_0\vec {\textit{\textbf{y}}}\). Then the verifier sends a short challenge polynomial \(\textit{\textbf{c}} \in \mathcal {C}\subset \mathcal {R}\) and the prover replies with the short vector \(\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}} + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}\). Here rejection sampling is used to make the distribution of \(\vec {\textit{\textbf{z}}}\) independent from \(\vec {\textit{\textbf{r}}}\). The verifier checks that \(\vec {\textit{\textbf{z}}}\) is short, i.e. \(\left\Vert {\vec {\textit{\textbf{z}}}}\right\Vert _2 \le \beta \), and the equation \(\textit{\textbf{B}}_0\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{w}}} + \textit{\textbf{c}}\vec {\textit{\textbf{t}}}_0\).
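The three flows of this protocol can be traced on a toy instance. The sketch below is a simplification under stated assumptions: the modulus `Q = 257`, degree `D = 8` and shapes `N`, `MU` are illustrative, the masking vector is drawn uniformly from a small interval instead of a discrete Gaussian, and rejection sampling is omitted, so the sketch shows only the algebra of the verification equation and the norm check, not zero-knowledge.

```python
import math
import random

Q, D = 257, 8   # toy modulus and ring degree (assumptions, not secure sizes)
N, MU = 4, 2    # B0 is an MU x N matrix over R_q

def poly_mul(a, b):
    """Multiply in Z_Q[X]/(X^D + 1)."""
    res = [0] * D
    for i, ai in enumerate(a):
        for j, bj in enumerate(b):
            k = i + j
            if k < D:
                res[k] = (res[k] + ai * bj) % Q
            else:  # X^D = -1
                res[k - D] = (res[k - D] - ai * bj) % Q
    return res

def poly_add(a, b):
    return [(x + y) % Q for x, y in zip(a, b)]

def mat_vec(B, v):
    out = []
    for row in B:
        acc = [0] * D
        for a, x in zip(row, v):
            acc = poly_add(acc, poly_mul(a, x))
        out.append(acc)
    return out

def scale(c, v):
    return [poly_mul(c, x) for x in v]

def vec_add(u, v):
    return [poly_add(a, b) for a, b in zip(u, v)]

def l2_norm(v):
    """Euclidean norm using centered representatives of the coefficients."""
    total = 0
    for poly in v:
        for x in poly:
            x = x - Q if x > Q // 2 else x
            total += x * x
    return math.sqrt(total)

rng = random.Random(1)
small_poly = lambda bound: [rng.randint(-bound, bound) % Q for _ in range(D)]

B0 = [[[rng.randrange(Q) for _ in range(D)] for _ in range(N)] for _ in range(MU)]
r = [small_poly(1) for _ in range(N)]   # prover's secret randomness
t0 = mat_vec(B0, r)                     # binding part of the commitment

y = [small_poly(8) for _ in range(N)]   # flow 1: masking vector (uniform here)
w = mat_vec(B0, y)
c = small_poly(1)                       # flow 2: short challenge
z = vec_add(y, scale(c, r))             # flow 3: reply z = y + c r

BETA = 16 * math.sqrt(N * D)            # toy norm bound for this parameter choice
accepted = mat_vec(B0, z) == vec_add(w, scale(c, t0)) and l2_norm(z) <= BETA
```

The equality check succeeds by linearity of \(\textit{\textbf{B}}_0\); the norm check is what prevents a prover from answering with an arbitrary (long) preimage.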

For suitable instantiations this proves knowledge of a commitment opening because it is possible to extract two prover replies \(\vec {\textit{\textbf{z}}}\) and \(\vec {\textit{\textbf{z}}}'\) for two challenges \(\textit{\textbf{c}}\) and \(\textit{\textbf{c}}'\), respectively, and a message \(\textit{\textbf{m}}^* \in \mathcal {R}_q\) such that

where \(\bar{\textit{\textbf{c}}} = \textit{\textbf{c}} - \textit{\textbf{c}}'\) is the difference of the challenges. In fact, it can be shown [2] that the commitment scheme is binding with respect to the message \(\textit{\textbf{m}}^*\) under the Module-SIS assumption if we have the additional property that \(\bar{\textit{\textbf{c}}}\) is invertible in the ring \(\mathcal {R}_q\). Then, it must be that \(\textit{\textbf{m}}^* = \textit{\textbf{m}}\), unless the prover knows a Module-SIS solution for \(\textit{\textbf{B}}_0\). The invertibility property is crucial in all previous works that study zero-knowledge proofs for the commitment scheme. It is enforced by choosing the set \(\mathcal {C}\) of challenges such that the difference of every two distinct elements is invertible. Unfortunately, depending on how much the prime q splits in the ring \(\mathcal {R}\), there will not be sufficiently large sets with this property, and even less so large sets consisting of short polynomials. For instance, for both theoretical and practical reasons one often wants q to split completely, but then there can be at most q polynomials which are pairwise different modulo one of the degree 1 prime divisors of q. Even if we let q split slightly less, say in degree 4 prime ideals, then we do not know of large sets of short polynomials that do not collide modulo one of the divisors. This severely restricts the soundness of the protocol and the protocol has to be repeated several times to boost soundness, which blows up the proof size. See [27] for more details about this problem.

The results from Sect. 3 present a way to construct larger challenge sets with the weaker property that \(\bar{\textit{\textbf{c}}}\) is non-invertible only with negligible probability. We generalize the proof further and explain how it is possible to make use of challenge sets where the difference of two elements is non-invertible with non-negligible probability.

So, in the extraction, we drop the assumption that for a pair of accepting transcripts with different challenges \(\textit{\textbf{c}}\) and \(\textit{\textbf{c}}'\), the difference \(\bar{\textit{\textbf{c}}} = \textit{\textbf{c}} - \textit{\textbf{c}}'\) is invertible. This essentially means that we cannot uniquely interpolate the prover replies \(\vec {\textit{\textbf{z}}}\) and \(\vec {\textit{\textbf{z}}}'\), and obtain vectors \(\vec {\textit{\textbf{y}}}^*\) and \(\vec {\textit{\textbf{r}}}^*\) such that

\[\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}}^* + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}^*, \qquad \vec {\textit{\textbf{z}}}' = \vec {\textit{\textbf{y}}}^* + \textit{\textbf{c}}'\vec {\textit{\textbf{r}}}^*.\]

But we can restore the interpolation by piecing together several transcript pairs that we interpolate locally modulo the various prime ideals dividing q.

Let \(X^d + 1 \equiv \varvec{\varphi }_1 \dots \varvec{\varphi }_l \pmod {q}\) be the factorization of \(X^d + 1\) into irreducible polynomials modulo q. Thus, our ring \(\mathcal {R}_q\) is the product of the corresponding residue fields \(\kappa _i = \mathbb {Z}_q[X]/(\varvec{\varphi }_i)\), i.e.

\[\mathcal {R}_q \cong \kappa _1 \times \dots \times \kappa _l.\]

Now, what is needed specifically is that for every i there is an accepting transcript pair with nonzero challenge difference \(\bar{\textit{\textbf{c}}}\) modulo \(\varvec{\varphi }_i\). So, concretely, suppose the extractor \(\mathcal {E}\) has obtained l pairs \((\vec {\textit{\textbf{z}}}_i, \vec {\textit{\textbf{z}}}'_i)\), \(i = 1, \dots , l\), of replies from the prover \(\mathcal {P}\) for the challenge pairs \((\textit{\textbf{c}}_i, \textit{\textbf{c}}'_i)\), respectively, such that

\[\bar{\textit{\textbf{c}}}_i = \textit{\textbf{c}}_i - \textit{\textbf{c}}'_i \not \equiv 0 \pmod {\varvec{\varphi }_i}.\]

Some of the pairs can be equal and the extractor does not always need to compute l pairs as long as the above condition is true. We also assume that all transcripts contain the same prover commitment \(\vec {\textit{\textbf{w}}}\) and are accepting; that is, in particular, \(\textit{\textbf{B}}_0\vec {\textit{\textbf{z}}}_i = \vec {\textit{\textbf{w}}} + \textit{\textbf{c}}_i\vec {\textit{\textbf{t}}}_0\) and \(\textit{\textbf{B}}_0\vec {\textit{\textbf{z}}}'_i = \vec {\textit{\textbf{w}}} + \textit{\textbf{c}}'_i\vec {\textit{\textbf{t}}}_0\) for all i. From this data \(\mathcal {E}\) computes the local interpolations

\[\vec {\textit{\textbf{z}}}_i \equiv \vec {\textit{\textbf{y}}}^*_i + \textit{\textbf{c}}_i\vec {\textit{\textbf{r}}}^*_i, \qquad \vec {\textit{\textbf{z}}}'_i \equiv \vec {\textit{\textbf{y}}}^*_i + \textit{\textbf{c}}'_i\vec {\textit{\textbf{r}}}^*_i \pmod {\varvec{\varphi }_i}.\]

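Concretely, with \(\bar{\textit{\textbf{c}}}_i = \textit{\textbf{c}}_i - \textit{\textbf{c}}'_i\), we set

\[\vec {\textit{\textbf{r}}}^*_i = \frac{\vec {\textit{\textbf{z}}}_i - \vec {\textit{\textbf{z}}}'_i}{\bar{\textit{\textbf{c}}}_i} \bmod \varvec{\varphi }_i, \qquad \vec {\textit{\textbf{y}}}^*_i = \frac{\textit{\textbf{c}}_i\vec {\textit{\textbf{z}}}'_i - \textit{\textbf{c}}'_i\vec {\textit{\textbf{z}}}_i}{\bar{\textit{\textbf{c}}}_i} \bmod \varvec{\varphi }_i.\]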

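Now, let \(\vec {\textit{\textbf{r}}}^*\) and \(\vec {\textit{\textbf{y}}}^*\) over \(\mathcal {R}_q\) be the CRT lifting of the \(\vec {\textit{\textbf{r}}}^*_i\) and \(\vec {\textit{\textbf{y}}}^*_i\). We show it must hold that

\[\vec {\textit{\textbf{z}}}_i = \vec {\textit{\textbf{y}}}^* + \textit{\textbf{c}}_i\vec {\textit{\textbf{r}}}^*, \qquad \vec {\textit{\textbf{z}}}'_i = \vec {\textit{\textbf{y}}}^* + \textit{\textbf{c}}'_i\vec {\textit{\textbf{r}}}^*\]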

for all i. This restores the global interpolations as in Eq. 18. In fact, we show more than this, namely that in every accepting transcript with commitment \(\vec {\textit{\textbf{w}}}\), the prover reply must be precisely of the form in Eq. 18. Also, the vectors \(\vec {\textit{\textbf{r}}}^*\) and \(\vec {\textit{\textbf{y}}}^*\) are preimages of \(\vec {\textit{\textbf{t}}}_0\) and \(\vec {\textit{\textbf{w}}}\), respectively, which is what we expect. So the prover really is committed to \(\vec {\textit{\textbf{r}}}^*\) and \(\vec {\textit{\textbf{y}}}^*\) by \(\vec {\textit{\textbf{t}}}_0\) and \(\vec {\textit{\textbf{w}}}\).
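The piecewise interpolation can be illustrated in the smallest non-trivial setting. The sketch below assumes the toy ring \(\mathbb {Z}_{17}[X]/(X^2+1)\), where \(X^2+1 \equiv (X-4)(X-13)\), and replaces the vectors \(\vec {\textit{\textbf{y}}}, \vec {\textit{\textbf{r}}}\) by single ring elements. Each of the two transcript pairs has a challenge difference that is not invertible in the ring but is nonzero modulo one factor. All names and concrete values are illustrative assumptions.

```python
Q = 17
ROOTS = (4, 13)  # X^2 + 1 = (X - 4)(X - 13) mod 17, since 4^2 = 16 = -1

def mul(a, b):
    """Multiply a = a0 + a1 X and b = b0 + b1 X in Z_17[X]/(X^2 + 1)."""
    return ((a[0] * b[0] - a[1] * b[1]) % Q, (a[0] * b[1] + a[1] * b[0]) % Q)

def add(a, b):
    return ((a[0] + b[0]) % Q, (a[1] + b[1]) % Q)

def sub(a, b):
    return ((a[0] - b[0]) % Q, (a[1] - b[1]) % Q)

def ev(a, rho):
    """Reduce a modulo (X - rho), i.e. evaluate at rho."""
    return (a[0] + a[1] * rho) % Q

def inv(x):
    return pow(x % Q, Q - 2, Q)

def crt_lift(v0, v1):
    """Unique a of degree < 2 with a(ROOTS[0]) = v0 and a(ROOTS[1]) = v1."""
    a1 = (v0 - v1) * inv(ROOTS[0] - ROOTS[1]) % Q
    a0 = (v0 - a1 * ROOTS[0]) % Q
    return (a0, a1)

# Prover's hidden values and its (honest) reply function z = y + c r.
r_secret, y_secret = (3, 5), (7, 11)
reply = lambda c: add(y_secret, mul(c, r_secret))

# Pair 0: difference X + 4 vanishes at 13 but not at 4.
# Pair 1: difference X - 4 vanishes at 4 but not at 13.
pairs = [((5, 1), (1, 0)), ((14, 1), (1, 0))]

local_r, local_y = [], []
for rho, (c, c_prime) in zip(ROOTS, pairs):
    z, z_prime = reply(c), reply(c_prime)
    c_bar = ev(sub(c, c_prime), rho)          # nonzero modulo this factor
    r_i = (ev(z, rho) - ev(z_prime, rho)) * inv(c_bar) % Q
    y_i = (ev(z, rho) - ev(c, rho) * r_i) % Q
    local_r.append(r_i)
    local_y.append(y_i)

r_star = crt_lift(*local_r)
y_star = crt_lift(*local_y)
```

Neither pair alone determines \(\textit{\textbf{r}}^*\), because its challenge difference is a zero divisor; combining the two local solutions through the Chinese remainder theorem recovers the global values.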

Lemma 4.1

If we have obtained l pairs of accepting transcripts with commitment \(\vec {\textit{\textbf{w}}}\) as in the preceding paragraph, then every accepting transcript \((\vec {\textit{\textbf{w}}},\textit{\textbf{c}},\vec {\textit{\textbf{z}}})\) with commitment \(\vec {\textit{\textbf{w}}}\) must be such that \(\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}}^* + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}^*\) where \(\vec {\textit{\textbf{y}}}^*\) and \(\vec {\textit{\textbf{r}}}^*\) are the vectors computed above independently from \(\textit{\textbf{c}}\), or we obtain an \(\mathsf {MSIS}_{\mu ,8\kappa \beta }\) solution for \(\textit{\textbf{B}}_0\) where \(\kappa \) is a bound on the \(\ell _1\)-norm of the challenges. Moreover, we have \(\textit{\textbf{B}}_0\vec {\textit{\textbf{r}}}^* = \vec {\textit{\textbf{t}}}_0\) and \(\textit{\textbf{B}}_0\vec {\textit{\textbf{y}}}^* = \vec {\textit{\textbf{w}}}\).

Proof

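Define \(\vec {\textit{\textbf{y}}}^{*\prime }\) by \(\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}}^{*\prime } + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}^*\). Fix some \(i \in \{1,\dots ,l\}\). Since all transcripts are accepting we get from subtracting the verification equations,

\[\textit{\textbf{B}}_0(\vec {\textit{\textbf{z}}} - \vec {\textit{\textbf{z}}}_i) = (\textit{\textbf{c}} - \textit{\textbf{c}}_i)\vec {\textit{\textbf{t}}}_0, \qquad \textit{\textbf{B}}_0(\vec {\textit{\textbf{z}}}_i - \vec {\textit{\textbf{z}}}'_i) = \bar{\textit{\textbf{c}}}_i\vec {\textit{\textbf{t}}}_0.\]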

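Now, cross-multiplying by \(\bar{\textit{\textbf{c}}}_i\) and \(\textit{\textbf{c}} - \textit{\textbf{c}}_i\) and subtracting shows that we either have an \(\mathsf {MSIS}_{\mu ,8\kappa \beta }\) solution for \(\textit{\textbf{B}}_0\), or

\[\bar{\textit{\textbf{c}}}_i(\vec {\textit{\textbf{z}}} - \vec {\textit{\textbf{z}}}_i) = (\textit{\textbf{c}} - \textit{\textbf{c}}_i)(\vec {\textit{\textbf{z}}}_i - \vec {\textit{\textbf{z}}}'_i).\]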

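Suppose the latter case is true. Then we reduce modulo \(\varvec{\varphi }_i\) and substitute the local expressions for \(\vec {\textit{\textbf{z}}}\), \(\vec {\textit{\textbf{z}}}_i\) and \(\vec {\textit{\textbf{z}}}'_i\), which shows

\[\bar{\textit{\textbf{c}}}_i(\vec {\textit{\textbf{y}}}^{*\prime } - \vec {\textit{\textbf{y}}}^*_i) \equiv 0 \pmod {\varvec{\varphi }_i}.\]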

Since \(\bar{\textit{\textbf{c}}}_i \bmod \varvec{\varphi }_i \ne 0\), \(\vec {\textit{\textbf{y}}}^{*\prime } \equiv \vec {\textit{\textbf{y}}}^*_i \equiv \vec {\textit{\textbf{y}}}^*\) modulo \(\varvec{\varphi }_i\). This holds for all i and hence it follows that \(\vec {\textit{\textbf{y}}}^{*\prime } = \vec {\textit{\textbf{y}}}^*\).

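We come to the statements \(\textit{\textbf{B}}_0\vec {\textit{\textbf{r}}}^* = \vec {\textit{\textbf{t}}}_0\) and \(\textit{\textbf{B}}_0\vec {\textit{\textbf{y}}}^* = \vec {\textit{\textbf{w}}}\). From the construction of \(\vec {\textit{\textbf{r}}}^*\) and the verification equations it follows that

\[\textit{\textbf{B}}_0\vec {\textit{\textbf{r}}}^* \equiv \bar{\textit{\textbf{c}}}_i^{-1}\textit{\textbf{B}}_0(\vec {\textit{\textbf{z}}}_i - \vec {\textit{\textbf{z}}}'_i) \equiv \vec {\textit{\textbf{t}}}_0 \pmod {\varvec{\varphi }_i}\]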

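for all i. Similarly, for \(\vec {\textit{\textbf{y}}}^*\),

\[\textit{\textbf{B}}_0\vec {\textit{\textbf{y}}}^* \equiv \textit{\textbf{B}}_0(\vec {\textit{\textbf{z}}}_i - \textit{\textbf{c}}_i\vec {\textit{\textbf{r}}}^*) \equiv \vec {\textit{\textbf{w}}} + \textit{\textbf{c}}_i\vec {\textit{\textbf{t}}}_0 - \textit{\textbf{c}}_i\vec {\textit{\textbf{t}}}_0 = \vec {\textit{\textbf{w}}} \pmod {\varvec{\varphi }_i}.\]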

The statements in the lemma follow from the Chinese remainder theorem. \(\square \)

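Finally, the extracted vector \(\vec {\textit{\textbf{r}}}^*\) can be used to define a binding notion of opening for the commitment scheme where the extracted message \(\textit{\textbf{m}}^*\) is simply set to fulfill

\[\textit{\textbf{t}}_1 = \langle {\vec {\textit{\textbf{b}}}_1,\vec {\textit{\textbf{r}}}^*}\rangle + \textit{\textbf{m}}^*.\]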

Then we have found an instance of the following definition.

Definition 4.2

A weak opening for the commitment \(\vec {\textit{\textbf{t}}} = \vec {\textit{\textbf{t}}}_0 \parallel \textit{\textbf{t}}_1\) consists of l polynomials \(\bar{\textit{\textbf{c}}}_i \in \mathcal {R}_q\), a randomness vector \(\vec {\textit{\textbf{r}}}^*\) over \(\mathcal {R}_q\) and a message \(\textit{\textbf{m}}^* \in \mathcal {R}_q\) such that

It is easy to show that the commitment scheme is binding with respect to these weak openings.

Lemma 4.3

The commitment scheme is binding with respect to weak openings if \(\mathsf {MSIS}_{\mu ,8\kappa \beta }\) is hard. More precisely, from two different weak openings \(((\bar{\textit{\textbf{c}}}_i),\vec {\textit{\textbf{r}}}^*,\textit{\textbf{m}}^*)\) and \(((\bar{\textit{\textbf{c}}}'_i),\vec {\textit{\textbf{r}}}^{*\prime },\textit{\textbf{m}}^{*\prime })\) with \(\textit{\textbf{m}}^* \ne \textit{\textbf{m}}^{*\prime }\) one can immediately compute a Module-SIS solution for \(\textit{\textbf{B}}_0\) of length at most \(8\kappa \beta \).

Proof

Suppose there are two weak openings \(((\bar{\textit{\textbf{c}}}_i), \vec {\textit{\textbf{r}}}^*, \textit{\textbf{m}}^*)\) and \(((\bar{\textit{\textbf{c}}}'_i), \vec {\textit{\textbf{r}}}^{*\prime }, \textit{\textbf{m}}^{*\prime })\) with \(\textit{\textbf{m}}^* \ne \textit{\textbf{m}}^{*\prime }\). Then, \(\langle {\vec {\textit{\textbf{b}}}_1,\vec {\textit{\textbf{r}}}^*}\rangle + \textit{\textbf{m}}^* = \textit{\textbf{t}}_1 = \langle {\vec {\textit{\textbf{b}}}_1,\vec {\textit{\textbf{r}}}^{*\prime }}\rangle + \textit{\textbf{m}}^{*\prime }\) implies \(\vec {\textit{\textbf{r}}}^* \ne \vec {\textit{\textbf{r}}}^{*\prime }\). Therefore, there exists an \(i \in \{1,\dots ,l\}\) such that \(\vec {\textit{\textbf{r}}}^* \not \equiv \vec {\textit{\textbf{r}}}^{*\prime } \pmod {\varvec{\varphi }_i}\). Consequently, \(\bar{\textit{\textbf{c}}}_i\bar{\textit{\textbf{c}}}'_i(\vec {\textit{\textbf{r}}}^* - \vec {\textit{\textbf{r}}}^{*\prime }) = \bar{\textit{\textbf{c}}}'_i\bar{\textit{\textbf{c}}}_i\vec {\textit{\textbf{r}}}^* - \bar{\textit{\textbf{c}}}_i\bar{\textit{\textbf{c}}}'_i\vec {\textit{\textbf{r}}}^{*\prime } \ne 0\) since the polynomials \(\bar{\textit{\textbf{c}}}_i\) and \(\bar{\textit{\textbf{c}}}'_i\) are non-zero modulo \(\varvec{\varphi }_i\). Hence,

\[\bar{\textit{\textbf{c}}}'_i(\bar{\textit{\textbf{c}}}_i\vec {\textit{\textbf{r}}}^*) - \bar{\textit{\textbf{c}}}_i(\bar{\textit{\textbf{c}}}'_i\vec {\textit{\textbf{r}}}^{*\prime })\]

is a non-trivial Module-SIS solution for \(\textit{\textbf{B}}_0\) of length at most \(8\kappa \beta \). \(\square \)

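It remains to explain how we make it possible to arrive at the transcript pairs that we want to piece together. Suppose \(\mathcal {R}_q\) factors in the following way,

\[\mathcal {R}_q \cong \prod _{i \in \mathbb {Z}_{2l}^{\times }} \mathbb {Z}_q[X]/(\varvec{\varphi }_i),\]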

with l irreducible \(\varvec{\varphi }_i = X^{d/l} - \zeta ^i\) and \(\zeta \) a primitive 2l-th root of unity. Let \(\mathcal {C}= \{-1,0,1\}^d \subset \mathcal {R}\) and \(\textit{\textbf{c}} \in \mathcal {C}\) be a random element from \(\mathcal {C}\) where each coefficient is independently identically distributed with \(\Pr (0) = 1/2\) and \(\Pr (-1) = \Pr (1) = 1/4\). Then the d/l coefficients of \(\textit{\textbf{c}} \bmod \varvec{\varphi }_i\) for a fixed i are mutually independent and Lemma 3.3 gives a bound on their maximum probability over \(\mathbb {Z}_q\). We will set parameters such that the maximum probability is not much bigger than 1/q. Then the probability that a cheating prover can get away with only answering challenges with a particular value modulo \(\varvec{\varphi }_i\) is about \(q^{-d/l}\). If this probability is negligible, then, although the projections \(\textit{\textbf{c}} \bmod \varvec{\varphi }_i\) for varying i are not independent, we can get several transcript pairs where for each i at least one \(\bar{\textit{\textbf{c}}} \bmod \varvec{\varphi }_i\) is non-zero. This works by rewinding the prover l times, once for every i, and sending a challenge that differs from a previous successful challenge modulo \(\varvec{\varphi }_i\). If otherwise the probability \(q^{-d/l}\) is not negligible we can run several, say k, copies of the protocol in parallel and reduce the cheating probability to \(q^{-kd/l}\). Then there are k prover commitments \(\vec {\textit{\textbf{w}}}_i\) in the first flow and there won’t be l accepting transcript pairs for each of them. Hence this requires a slightly more general analysis than what we have provided in the overview in this section. We handle this case in the security proof of our protocol given in Fig. 2. It turns out that it is still possible to extract unique preimages \(\vec {\textit{\textbf{y}}}_i\) for all commitments \(\vec {\textit{\textbf{w}}}_i\).

In the k parallel repetitions we do not sample the challenges independently. The reason is that when proving relations on the messages, and specifically in our product proof, we will need more structure. Let \(\sigma = \sigma _{2l/k + 1} \in {\text {Aut}}(\mathcal {R}_q) \cong \mathbb {Z}_{2d}^\times \) be the automorphism of order kd/l that stabilizes the ideals

\[(X^{kd/l} - \zeta ^{jk})\]

for \(j \in \langle {-1,5}\rangle /\langle {2l/k+1}\rangle \cong \mathbb {Z}_{2l/k}^\times \). Now, we let the challenges in the k parallel executions be the images \(\sigma ^i(\textit{\textbf{c}})\), \(i = 0,\dots ,k-1\), of a single polynomial \(\textit{\textbf{c}} \in \mathcal {C}\). If parameters are such that the maximum probability of each of the mutually independent coefficients of \(\textit{\textbf{c}} \bmod (X^{kd/l} - \zeta ^{jk})\) is essentially 1/q, and thus the maximum probability of \(\textit{\textbf{c}} \bmod (X^{kd/l} - \zeta ^{jk})\) is essentially \(q^{-kd/l}\), and this is negligible, then the prover must answer two \(\textit{\textbf{c}}, \textit{\textbf{c}}'\) that differ modulo \(X^{kd/l} - \zeta ^{jk}\). Hence, \(\bar{\textit{\textbf{c}}} = \textit{\textbf{c}} - \textit{\textbf{c}}'\) is non-zero modulo at least one of the divisors, say \((X^{d/l} - \zeta ^{j})\). Therefore, for every other divisor \(\sigma ^i(X^{d/l} - \zeta ^j)\) we have

\[\sigma ^i(\bar{\textit{\textbf{c}}}) \bmod \sigma ^i(X^{d/l} - \zeta ^j) = \sigma ^i\left( \bar{\textit{\textbf{c}}} \bmod (X^{d/l} - \zeta ^j)\right) \ne 0.\]

So we are in the situation where we have an accepting transcript pair with non-zero \(\bar{\textit{\textbf{c}}}\) modulo every prime divisor of \((X^{kd/l} - \zeta ^{jk})\). By repeating the argument for every \(j \in \mathbb {Z}_{2l/k}^\times \), we see that we can get an extraction with non-vanishing \(\bar{\textit{\textbf{c}}}\) modulo every prime divisor of \((X^d + 1)\).
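The structured challenges \(\sigma ^i(\textit{\textbf{c}})\) are cheap to compute, since \(\sigma _j\) only permutes coefficients up to sign. The following sketch, with the hypothetical helpers `sigma`, `order_mod` and `parallel_challenges`, applies \(\sigma _j : X \mapsto X^j\) to a coefficient list and checks that \(\sigma _{2l/k+1}\) has order kd/l for the illustrative choice \(d = l = 128\), \(k = 4\); these parameter values are an assumption made for the example.

```python
import random

def sigma(j, a, d):
    """Apply sigma_j : X -> X^j to a in Z[X]/(X^d + 1); j must be odd."""
    res = [0] * d
    for i, ai in enumerate(a):
        e = (i * j) % (2 * d)
        if e < d:
            res[e] += ai
        else:            # X^d = -1
            res[e - d] -= ai
    return res

def order_mod(j, n):
    """Multiplicative order of j modulo n (j must be a unit)."""
    k, x = 1, j % n
    while x != 1:
        x = (x * j) % n
        k += 1
    return k

def parallel_challenges(c, d, l, k):
    """The k challenges c, sigma(c), ..., sigma^(k-1)(c) with sigma = sigma_{2l/k+1}."""
    j = 2 * l // k + 1
    out = [list(c)]
    for _ in range(k - 1):
        out.append(sigma(j, out[-1], d))
    return out

D, L, K = 128, 128, 4            # illustrative parameters
J = 2 * L // K + 1               # sigma = sigma_65
rng = random.Random(3)
# Coefficients with Pr(0) = 1/2 and Pr(1) = Pr(-1) = 1/4.
c = [rng.choice([0, 0, 1, -1]) for _ in range(D)]
CHALLENGES = parallel_challenges(c, D, L, K)
```

Because each \(\sigma ^i(\textit{\textbf{c}})\) is a signed permutation of the coefficients of \(\textit{\textbf{c}}\), all k challenges have the same \(\ell _1\)-norm, so the bound \(\kappa \) applies to every parallel execution.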

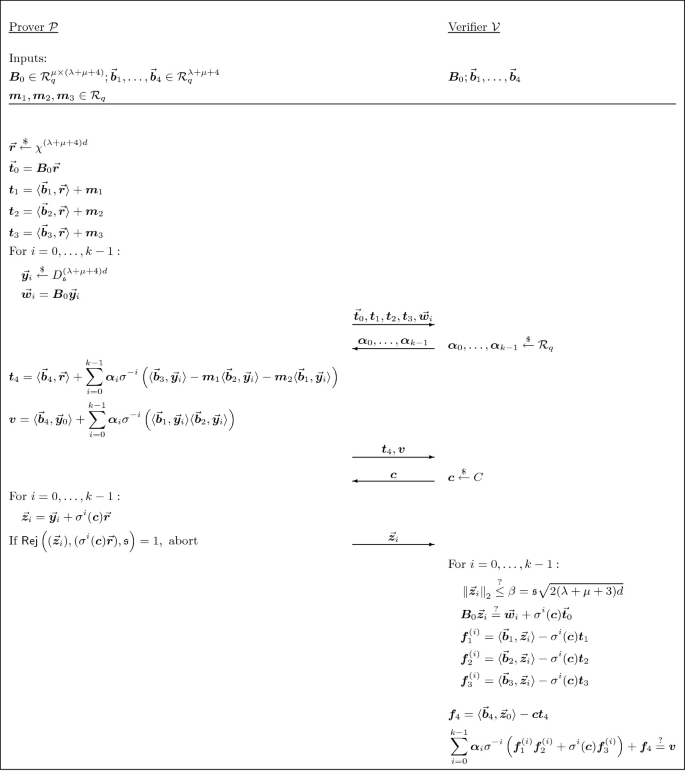

The final protocol is given in Fig. 2. Its security is stated in Theorem 4.4. The proof of Theorem 4.4 is given in the full version of the paper.

Fig. 2. Automorphism opening proof for the commitment scheme. We assume l, k are powers of two such that \(k < l \le d\), \(q - 1 \equiv 2l \pmod {4l}\), and \(\sigma = \sigma _{2l/k + 1} \in \mathsf {Aut}(\mathcal {R}_q)\). Furthermore, C is the challenge distribution over \(\mathcal {R}\) where each coefficient is independently identically distributed with \(\Pr (0) = 1/2\) and \(\Pr (-1) = \Pr (1) = 1/4\), \(\kappa \) is a bound on the \(\ell _1\)-norm of \(\textit{\textbf{c}}\), i.e. \(\left\Vert {\textit{\textbf{c}}}\right\Vert _1 \le \kappa \) with overwhelming probability for \(\textit{\textbf{c}} \overset{\$}{\leftarrow }C\), and \(D_\mathfrak {s}\) is the discrete Gaussian distribution on \(\mathbb {Z}\) with standard deviation \(\mathfrak {s}= 11k\kappa \left\Vert {\vec {\textit{\textbf{r}}}}\right\Vert _2\).

Theorem 4.4

The protocol in Fig. 2 is complete, statistically honest-verifier zero-knowledge, and computationally special sound under the Module-SIS assumption. More precisely, let p be the maximum probability over \(\mathbb {Z}_q\) of the coefficients of \(\textit{\textbf{c}} \bmod (X^{kd/l} - \zeta ^k)\) as in Lemma 3.3.

Then, for completeness, unless the honest prover \(\mathcal {P}\) aborts due to the rejection sampling, it convinces the honest verifier \(\mathcal {V}\) with overwhelming probability.

For zero-knowledge, there exists a simulator \(\mathcal {S}\), that, without access to secret information, outputs a simulation of a non-aborting transcript of the protocol between \(\mathcal {P}\) and \(\mathcal {V}\) which has statistical distance at most \(2^{-100}\) to the actual interaction.

For knowledge-soundness, there is an extractor \(\mathcal {E}\) with the following properties. When given rewindable black-box access to a deterministic prover \(\mathcal {P}^*\) that convinces \(\mathcal {V}\) with probability \(\varepsilon > p^{kd/l}\), \(\mathcal {E}\) either outputs a weak opening for the commitment \(\vec {\textit{\textbf{t}}}\) or an \(\mathsf {MSIS}_{\mu ,8\kappa \beta }\) solution for \(\textit{\textbf{B}}_0\) in expected time at most \(1/\varepsilon + (l/k)(\varepsilon - p^{kd/l})^{-1}\), where running \(\mathcal {P}^*\) once is assumed to take unit time.

Moreover, the weak opening can be extended to also include k vectors \(\vec {\textit{\textbf{y}}}^*_i \in \mathcal {R}_q^{\lambda + \mu + 1}\) such that \(\textit{\textbf{B}}_0\vec {\textit{\textbf{y}}}^*_i = \vec {\textit{\textbf{w}}}_i\), where \(\vec {\textit{\textbf{w}}}_i\) are the prover commitments sent by \(\mathcal {P}^*\) in the first flow. Furthermore, for every accepting transcript of an interaction with \(\mathcal {P}^*\), the prover replies are given by \(\vec {\textit{\textbf{z}}}_i = \vec {\textit{\textbf{y}}}^*_i + \sigma ^i(\textit{\textbf{c}})\vec {\textit{\textbf{r}}}^*\).

5 Product Proof

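In this section we present an efficient protocol for proving multiplicative relations between committed messages. Suppose the prover knows an opening to a commitment \(\vec {\textit{\textbf{t}}}\) to three secret polynomials \(\textit{\textbf{m}}_1, \textit{\textbf{m}}_2, \textit{\textbf{m}}_3 \in \mathcal {R}_q\),

\[\vec {\textit{\textbf{t}}}_0 = \textit{\textbf{B}}_0\vec {\textit{\textbf{r}}}, \qquad \textit{\textbf{t}}_i = \langle {\vec {\textit{\textbf{b}}}_i,\vec {\textit{\textbf{r}}}}\rangle + \textit{\textbf{m}}_i \quad \text {for } i = 1,2,3.\]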

His goal is to prove the multiplicative relation \(\textit{\textbf{m}}_1 \textit{\textbf{m}}_2 = \textit{\textbf{m}}_3\) in \(\mathcal {R}_q\). We recall a simple technique for this, which for example was used in [6, 31]. The prover commits to uniformly random masking polynomials \(\textit{\textbf{a}}_1, \textit{\textbf{a}}_2, \textit{\textbf{a}}_3 \in \mathcal {R}_q\) and two so-called “garbage polynomials”,

Then \(\mathcal {P}\) replies to a challenge polynomial \(\textit{\textbf{x}} \in \mathcal {R}_q\) with masked openings \(\textit{\textbf{f}}_i = \textit{\textbf{a}}_i + \textit{\textbf{x}}\textit{\textbf{m}}_i\) of the messages \(\textit{\textbf{m}}_i\). Now \(\mathcal {P}\) shows that the \(\textit{\textbf{f}}_i\) really open to the committed messages by proving that \(\textit{\textbf{t}}'_i + \textit{\textbf{x}}\textit{\textbf{t}}_i - \textit{\textbf{f}}_i\) is a commitment to zero for \(i=1,2,3\). Concretely, in addition to the standard opening proof for all of the commitments where the prover sends

they will also send

The verifier then checks the equations

This convinces the verifier that the \(\textit{\textbf{f}}_i\) open to the secret messages \(\textit{\textbf{m}}_i\). Next, consider the commitment

The verifier knows that the \(\textit{\textbf{f}}_i\) are of the form \(\textit{\textbf{f}}_i = \textit{\textbf{a}}^*_i + \textit{\textbf{x}}\textit{\textbf{m}}^*_i\) where the polynomials \(\textit{\textbf{a}}^*_i\) and \(\textit{\textbf{m}}^*_i\) are the (extracted) messages in the commitments \(\textit{\textbf{t}}'_i\), \(\textit{\textbf{t}}_i\). Therefore, \(\mathcal {V}\) knows that \(\varvec{\tau }\) is a commitment to the message

where \(\textit{\textbf{m}}^*_4\), \(\textit{\textbf{m}}^*_5\) are the extracted messages from the two garbage commitments. Now the prover completes the product proof by proving that \(\varvec{\tau }\) is a commitment to zero. We explain why this suffices. The message \(\varvec{\mu }\) can be viewed as a quadratic polynomial in \(\textit{\textbf{x}}\) with coefficients that are independent from \(\textit{\textbf{x}}\). If the prover is able to answer three challenges \(\textit{\textbf{x}}\) such that their pairwise differences are invertible, then the polynomial must be the zero polynomial. In particular, the interesting term \(\textit{\textbf{m}}^*_1\textit{\textbf{m}}^*_2 - \textit{\textbf{m}}^*_3\), which is separated from the other terms as the leading coefficient in the challenge \(\textit{\textbf{x}}\), must be zero.
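The root-counting argument can be checked directly in the simplest case where the ring is the field \(\mathbb {Z}_q\). The sketch below is a toy model under explicit assumptions: commitments are dropped entirely, the garbage terms are taken to be \(\textit{\textbf{a}}_1\textit{\textbf{a}}_2\) and \(\textit{\textbf{a}}_1\textit{\textbf{m}}_2 + \textit{\textbf{a}}_2\textit{\textbf{m}}_1 - \textit{\textbf{a}}_3\) (the sign convention is an assumption, obtained by expanding \(\textit{\textbf{f}}_1\textit{\textbf{f}}_2 - \textit{\textbf{x}}\textit{\textbf{f}}_3\)), and `Q = 257` and all function names are illustrative.

```python
Q = 257  # toy prime modulus (assumption)

def honest_garbage(m, a):
    """Constant and linear coefficients of f1*f2 - x*f3 as a polynomial in x."""
    m1, m2, m3 = m
    a1, a2, a3 = a
    return (a1 * a2 % Q, (a1 * m2 + a2 * m1 - a3) % Q)

def cheating_garbage(m, a, x1, x2):
    """Garbage chosen so that the check passes at the two challenges x1 and x2."""
    m1, m2, m3 = m
    delta = (m1 * m2 - m3) % Q
    h0, h1 = honest_garbage(m, a)
    return ((h0 - delta * x1 * x2) % Q, (h1 + delta * (x1 + x2)) % Q)

def accepts(x, m, a, garbage):
    """Verifier's check mu(x) = f1*f2 - x*f3 - g0 - x*g1 == 0."""
    g0, g1 = garbage
    f1, f2, f3 = ((ai + x * mi) % Q for ai, mi in zip(a, m))
    return (f1 * f2 - x * f3 - g0 - x * g1) % Q == 0

a = (11, 13, 17)        # masking values
m_ok = (5, 7, 35)       # m1 * m2 == m3
m_bad = (5, 7, 36)      # m1 * m2 != m3

honest_count = sum(accepts(x, m_ok, a, honest_garbage(m_ok, a)) for x in range(Q))
cheat_count = sum(accepts(x, m_bad, a, cheating_garbage(m_bad, a, 3, 5)) for x in range(Q))
```

With a true relation every challenge is accepted, whereas the cheating prover's residual is \(\delta (x - x_1)(x - x_2)\) with \(\delta \ne 0\), so it can prepare for at most two challenges. This is why three accepted challenges with invertible pairwise differences force the leading coefficient to vanish.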

There are two main problems with the technique:

1. The prover needs to send a large commitment \(\vec {\textit{\textbf{t}}}'\) to 5 polynomials together with an opening proof for it, and also the three uniform masked openings \(\textit{\textbf{f}}_i\).

2. As in the opening proof, the prover can cheat unless it is forced to be able to answer several challenges \(\textit{\textbf{x}}\) with invertible differences. Unlike for the challenge \(\textit{\textbf{c}}\), there is no shortness requirement associated with \(\textit{\textbf{x}}\). Still, if q splits completely, the soundness error is restricted to 1/q even for uniformly random \(\textit{\textbf{x}} \in \mathcal {R}_q\). Repetition is particularly expensive in the case of \(\textit{\textbf{x}}\) since the masking polynomials \(\textit{\textbf{a}}_i\) and corresponding commitments \(\textit{\textbf{t}}'_i\) cannot be reused. In fact, sending \(\textit{\textbf{f}}_i = \textit{\textbf{a}}_i + \textit{\textbf{x}}\textit{\textbf{m}}_i\) for different \(\textit{\textbf{x}}\) would break zero-knowledge. This further increases the cost of the masking and garbage commitment and its opening proof.

Both problems result in quite large concrete communication sizes. We provide solutions to both problems and thereby drastically reduce the proof size.

First Problem. Instead of making the prover send the masked openings \(\textit{\textbf{f}}_i\) and prove their well-formedness by committing to the \(\textit{\textbf{a}}_i\), we let the verifier compute the \(\textit{\textbf{f}}_i\) from the commitments \(\textit{\textbf{t}}_i\). Then the proper relation to the messages \(\textit{\textbf{m}}_i\) follows by construction. This is made possible by the results from Sect. 4. Recall that the verifier will be convinced that the vector \(\vec {\textit{\textbf{z}}}\) in the opening proof is of the form \(\vec {\textit{\textbf{z}}} = \vec {\textit{\textbf{y}}}^* + \textit{\textbf{c}}\vec {\textit{\textbf{r}}}^*\) where \(\vec {\textit{\textbf{y}}}^*, \vec {\textit{\textbf{r}}}^*\) are independent of \(\textit{\textbf{c}}\) and \(\textit{\textbf{t}}_i = \langle {\vec {\textit{\textbf{b}}}_i,\vec {\textit{\textbf{r}}}^*}\rangle + \textit{\textbf{m}}^*_i\) with \(\textit{\textbf{m}}^*_i\) to which the prover is bound. Hence, the verifier will be convinced that

\[\textit{\textbf{f}}_i = \langle {\vec {\textit{\textbf{b}}}_i,\vec {\textit{\textbf{z}}}}\rangle - \textit{\textbf{c}}\textit{\textbf{t}}_i = \langle {\vec {\textit{\textbf{b}}}_i,\vec {\textit{\textbf{y}}}^*}\rangle - \textit{\textbf{c}}\textit{\textbf{m}}^*_i.\]

But this exactly is a masked opening of \(\textit{\textbf{m}}^*_i\) with challenge \(\textit{\textbf{c}}\) and masking polynomial \(\textit{\textbf{a}}^*_i = \langle {\vec {\textit{\textbf{b}}}_i,\vec {\textit{\textbf{y}}}^*}\rangle \).

Now, when we compute the quadratic relation \(\textit{\textbf{f}}_1\textit{\textbf{f}}_2 + \textit{\textbf{c}}\textit{\textbf{f}}_3\) we need to get rid of the garbage terms. It seems we need to linearly combine the garbage commitments \(\textit{\textbf{t}}'_4\) and \(\textit{\textbf{t}}'_5\) with the challenge \(\textit{\textbf{c}}\) and thereby construct a new commitment with commitment matrix \(\textit{\textbf{b}}'_4 + \textit{\textbf{c}}\textit{\textbf{b}}'_5\) depending on \(\textit{\textbf{c}}\). If we went down this path we would need to send a second fresh opening proof with a new challenge to show that \(\textit{\textbf{t}}'_4 + \textit{\textbf{c}}\textit{\textbf{t}}'_5 - (\textit{\textbf{f}}_1\textit{\textbf{f}}_2 + \textit{\textbf{c}}\textit{\textbf{f}}_3)\) is a commitment to zero. This would be particularly bad if the garbage commitments are part of the commitment to the messages, as one wants in applications.

Instead, we use a new proof technique to achieve the same goal without a two-layered opening proof and with only one garbage commitment. In a nutshell, we use the masked opening \(\textit{\textbf{f}}'_4 = \langle {\vec {\textit{\textbf{b}}}'_4,\vec {\textit{\textbf{z}}}'}\rangle - \textit{\textbf{c}}\textit{\textbf{t}}'_4\) of the garbage term to reduce \(\textit{\textbf{f}}_1\textit{\textbf{f}}_2 + \textit{\textbf{c}}\textit{\textbf{f}}_3\) to the polynomial \(\textit{\textbf{f}}_1\textit{\textbf{f}}_2 + \textit{\textbf{c}}\textit{\textbf{f}}_3 + \textit{\textbf{f}}'_4\) that is constant in \(\textit{\textbf{c}}\). Then we show that the prover can just send this polynomial before seeing \(\textit{\textbf{c}}\) without destroying zero-knowledge. The resulting verification equation, which is quadratic in the commitments, can be handled in the extraction proof by making repeated use of the interpolations of \(\vec {\textit{\textbf{z}}}\), \(\vec {\textit{\textbf{z}}}'\) and the associated expressions for the commitments.
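The cancellation behind this technique can be verified on a toy instance over \(\mathbb {Z}_q\) (that is, with \(d = 1\)). The sketch below makes several assumptions that are not taken from the protocol figure: the garbage commitment shares the randomness \(\vec {\textit{\textbf{r}}}\) and masking vector \(\vec {\textit{\textbf{y}}}\) with the message commitments, the garbage message is \(\textit{\textbf{m}}_4 = \langle \vec {\textit{\textbf{b}}}_3,\vec {\textit{\textbf{y}}}\rangle - \textit{\textbf{m}}_1\langle \vec {\textit{\textbf{b}}}_2,\vec {\textit{\textbf{y}}}\rangle - \textit{\textbf{m}}_2\langle \vec {\textit{\textbf{b}}}_1,\vec {\textit{\textbf{y}}}\rangle \) (derived by expanding the product), and the value sent before the challenge is \(\textit{\textbf{v}} = \langle \vec {\textit{\textbf{b}}}_1,\vec {\textit{\textbf{y}}}\rangle \langle \vec {\textit{\textbf{b}}}_2,\vec {\textit{\textbf{y}}}\rangle + \langle \vec {\textit{\textbf{b}}}_4,\vec {\textit{\textbf{y}}}\rangle \). Shortness checks and rejection sampling are omitted; `Q`, `N` and `build_instance` are hypothetical names.

```python
import random

Q = 257  # toy prime modulus (assumption); ring elements are scalars in Z_Q
N = 6    # length of the randomness and masking vectors

def dot(u, v):
    return sum(a * b for a, b in zip(u, v)) % Q

def build_instance(delta, seed=2):
    """Return the verifier's check for a prover with m3 = m1*m2 + delta."""
    rng = random.Random(seed)
    b = [[rng.randrange(Q) for _ in range(N)] for _ in range(4)]  # b_1..b_4
    r = [rng.choice([-1, 0, 1]) for _ in range(N)]                # randomness
    y = [rng.randrange(Q) for _ in range(N)]                      # masking vector
    m1, m2 = rng.randrange(Q), rng.randrange(Q)
    m3 = (m1 * m2 + delta) % Q
    a = [dot(bi, y) for bi in b]                  # a_i = <b_i, y>
    m4 = (a[2] - m1 * a[1] - m2 * a[0]) % Q       # garbage message (assumed form)
    t = [(dot(bi, r) + m) % Q for bi, m in zip(b, (m1, m2, m3, m4))]
    v = (a[0] * a[1] + a[3]) % Q                  # sent before the challenge

    def check(c):
        z = [(yi + c * ri) % Q for yi, ri in zip(y, r)]
        # Verifier computes the masked openings f_i = <b_i, z> - c t_i itself.
        f = [(dot(bi, z) - c * ti) % Q for bi, ti in zip(b, t)]
        return (f[0] * f[1] + c * f[2] + f[3]) % Q == v

    return check

honest_check = build_instance(delta=0)
cheating_check = build_instance(delta=1)
honest_accepts = sum(honest_check(c) for c in range(Q))
cheating_accepts = sum(cheating_check(c) for c in range(Q))
```

In this model the left-hand side equals \(\textit{\textbf{v}} + \textit{\textbf{c}}^2(\textit{\textbf{m}}_1\textit{\textbf{m}}_2 - \textit{\textbf{m}}_3)\), so an honest prover passes for every challenge while a prover with a false relation passes only for \(\textit{\textbf{c}} = 0\).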

In our protocol we include the single garbage commitment in the commitment to the messages. This has the advantage of saving the separate binding part \(\vec {\textit{\textbf{t}}}'_0\) and the associated cost in the opening proof. Effectively, this means that the message commitments become a part of the product proof protocol and this commitment contains an additional commitment to a garbage term.

For usual applications this approach is natural. For example, when committing to an integer, one usually knows that a range proof for it will be needed later, so one may as well compute the range proof when creating the commitment.

For concreteness we state the resulting protocol in Fig. 3. It has negligible soundness error when \(\bar{\textit{\textbf{c}}}\) is invertible with overwhelming probability. Otherwise the protocol could be repeated to boost the soundness but this would increase the number of garbage commitments \(\textit{\textbf{t}}_4\) that need to be transmitted. Instead, we now present a better solution that still only needs a single garbage commitment.

Second Problem. As explained in Sect. 4, we set up parameters so that, for some \(j \in \mathbb {Z}_{2l/k + 1}^\times \), the prover can guess the challenge \(\textit{\textbf{c}}\) modulo each of the k prime ideals \(\sigma ^i(X^{d/l} - \zeta ^j)\), \(i = 0, \dots , k-1\), with non-negligible independent probability of about \(1/q^{d/l}\). This means with the above method the prover will prove

only with non-negligible soundness error. We solve this problem by linearly combining all the permutations \(\sigma ^i(\textit{\textbf{m}}_1\textit{\textbf{m}}_2 - \textit{\textbf{m}}_3)\) with independent, uniformly random challenge polynomials \(\varvec{\alpha }_i\). So we set out to prove

Then our proof will show

with independent cheating probability for \(i' = 0, \dots , k-1\). But the last equation for a single \(i'\) proves

for all \(i = 0, \dots , k-1\) with cheating probability \(1/q^{d/l}\) by the Schwartz-Zippel Lemma. A careful analysis will show the success probability of a cheating prover will be reduced to essentially at most

Now we derive the corresponding equation for the masked message openings. Here is where we need the randomness openings \(\vec {\textit{\textbf{z}}}_i\) with the permutations \(\sigma ^i(\textit{\textbf{c}})\) of the challenge. The verifier can compute k masked openings for every message with challenges \(\sigma ^i(\textit{\textbf{c}})\) by setting

In the extraction we will have the expressions

Therefore, it follows that

We fold the coefficient of \(\textit{\textbf{c}}\) into the constant coefficient by adding \(\textit{\textbf{f}}_4 = \langle {\vec {\textit{\textbf{b}}}_4,\vec {\textit{\textbf{z}}}_0}\rangle - \textit{\textbf{c}}\textit{\textbf{t}}_4\) computed from the garbage commitment

Then we arrive at

The verifier checks that this is equal to \(\textit{\textbf{v}}\) using the polynomial \(\textit{\textbf{v}}\) that it has received before sending the challenge.

It is important to note that we have departed from a straightforward repetition of the protocol in Fig. 3. The main advantage is that still only one garbage commitment is necessary.

5.1 The Protocol

The final protocol is given in Fig. 4. Its security is stated in Theorem 5.1. The proof of the theorem is given in the full version of the paper.

Theorem 5.1

The protocol in Fig. 4 is complete, computational honest verifier zero-knowledge under the Module-LWE assumption and computational special sound under the Module-SIS assumption. More precisely, let p be the maximum probability over \(\mathbb {Z}_q\) of the coefficients of \(\textit{\textbf{c}} \bmod X^{kd/l} - \zeta ^k\) as in Lemma 3.3.

Then, for completeness, in case the honest prover \(\mathcal {P}\) does not abort due to rejection sampling, it convinces the honest verifier \(\mathcal {V}\) with overwhelming probability.

For zero-knowledge, there exists a simulator \(\mathcal {S}\), that, without access to secret information, outputs a simulation of a non-aborting transcript of the protocol between \(\mathcal {P}\) and \(\mathcal {V}\). Then for every algorithm \(\mathcal {A}\) that has advantage \(\varepsilon \) in distinguishing the simulated transcript from an actual transcript, there is an algorithm \(\mathcal {A}'\) with the same running time that has advantage \(\varepsilon - 2^{-100}\) in distinguishing \(\mathsf {MLWE}_{\lambda ,\chi }\).

For soundness, there is an extractor \(\mathcal {E}\) with the following properties. When given rewindable black-box access to a deterministic prover \(\mathcal {P}^*\) that convinces \(\mathcal {V}\) with probability \(\varepsilon \ge (3p^{d/l})^k\), \(\mathcal {E}\) either outputs a weak opening for the commitment \(\vec {\textit{\textbf{t}}}\) with messages \(\textit{\textbf{m}}^*_1\), \(\textit{\textbf{m}}^*_2\) and \(\textit{\textbf{m}}^*_3\) such that \(\textit{\textbf{m}}^*_1\textit{\textbf{m}}^*_2 = \textit{\textbf{m}}^*_3\), or a \(\mathsf {MSIS}_{\mu ,8\kappa \beta }\) solution for \(\textit{\textbf{B}}_0\) in expected time at most \(1/\varepsilon + (l/k)(\varepsilon - p^{kd/l})^{-1}\) when running \(\mathcal {P}^*\) once is assumed to take unit time.

5.2 Amortized Protocol

The protocol from the last section can be extended into a protocol for the case where the prover wants to prove multiplicative relations between many messages. In this extension there will still only be one garbage commitment necessary for proving all of the relations. So the cost for the garbage commitment is amortized over all relations. Suppose we want to prove n product relations

for \(j = 1,\dots ,n\). Then, in virtually the same way in which we linearly combine the automorphic images of a single relation with uniform challenges, we can use even more challenges and linearly combine all the automorphic images of all the relations. Concretely, we want to prove

with \(\varvec{\alpha }_1, \dots , \varvec{\alpha }_{nk} \overset{\$}{\leftarrow }\mathcal {R}_q\). Now a nice feature of the Schwartz-Zippel lemma is that this does not decrease the soundness. Intuitively, as soon as one of the relations is false, then the linear combination of all of the relations will be uniformly random, and this will be detected with overwhelming probability.
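The intuition above can be made concrete in a toy setting. The sketch below replaces the ring by a single prime field \(\mathbb {Z}_q\) with a small \(q\) (an assumption for illustration; in the protocol the challenges are ring elements and each NTT slot is a field of size \(q^{d/l}\)). It counts, by exhaustive search, how many challenge vectors make a random linear combination of "error" values vanish. The function name is hypothetical.

```python
from itertools import product

def count_vanishing_combinations(errors, q):
    """Count challenge vectors alpha in Z_q^n with sum(alpha_i * e_i) = 0 mod q.

    errors[i] models the value of one relation (0 if the relation holds)."""
    n = len(errors)
    return sum(
        1
        for alpha in product(range(q), repeat=n)
        if sum(a * e for a, e in zip(alpha, errors)) % q == 0
    )
```

For a prime \(q\) and \(n\) relations, a single nonzero error leaves exactly \(q^{n-1}\) of the \(q^n\) challenge vectors undetected, a fraction \(1/q\) that does not depend on \(n\). This is the sense in which adding more relations to the linear combination does not decrease the soundness in this toy model.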

5.3 Non-interactive Protocol and Proof Sizes

In this section we compute the size of a non-interactive proof, where we distinguish between the size for the commitment \(\vec {\textit{\textbf{t}}} = \vec {\textit{\textbf{t}}}_0 \parallel \textit{\textbf{t}}_1 \parallel \dots \parallel \textit{\textbf{t}}_3\) to the messages and the size for the actual product proof. The message commitment is to be reused in some other protocol. It consists of \(\mu + 3\) uniformly random polynomials so its size is \((\mu + 3)d\lceil {\log {q}}\rceil \) bits.

The protocol in Fig. 4 is made non-interactive with the help of the standard Fiat-Shamir technique. This means that the challenges are computed by the prover by hashing all previous messages and public information, and the hash function is modeled as a random oracle. To shorten the length of the proof, a standard technique is to not send the input to the hash function, but rather send its output (i.e. the challenge) and let the verifier recompute the input from the later transmitted terms using the verification equation and then test that the hash of these computed input terms is indeed the challenge. Concretely, in the non-interactive version of the product proof, the \(k\mu + 1\) full-size polynomials \(\vec {\textit{\textbf{w}}}_i\) and \(\textit{\textbf{v}}\) do not have to be transmitted and only \(\textit{\textbf{t}}_4\) remains as a non-short polynomial. The polynomials in the vectors \(\vec {\textit{\textbf{z}}}_i\) are short discrete Gaussian vectors with standard deviation \(\mathfrak {s}\). Every coefficient is smaller than \(6\mathfrak {s}\) in absolute value with probability \(1 - 2^{-24}\) [24, Lemma 4.4]. So we can assume this is the case for all coefficients – the non-interactive prover can just restart otherwise. Eventually, we obtain that one non-interactive proof needs

bits.
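The challenge-compression step of the Fiat-Shamir transform described above (send the challenge instead of the hash input and let the verifier recompute the input) can be illustrated independently of the lattice setting. The sketch below swaps in a toy Schnorr proof of knowledge of a discrete logarithm, which is not the protocol of Fig. 4. The group parameters are tiny and insecure, SHA-256 stands in for the random oracle, all names are hypothetical, and the norm checks of the lattice protocol have no counterpart here.

```python
import hashlib
import secrets

# Toy group (assumption: illustrative, insecure sizes): P = 2*Q + 1 with P, Q prime,
# and G = 4 generates the subgroup of order Q.
P, Q, G = 2039, 1019, 4

def challenge(h, w):
    """Hash the public statement h and the first message w to a challenge."""
    data = f"{h}|{w}".encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big")

def prove(x):
    """Prove knowledge of x with h = G^x; the proof is (c, z) and omits w."""
    h = pow(G, x, P)
    y = secrets.randbelow(Q)   # masking value
    w = pow(G, y, P)           # first message, not transmitted
    c = challenge(h, w)
    z = (y + c * x) % Q
    return h, (c, z)

def verify(h, proof):
    """Recompute w from (c, z) via the verification equation, then re-hash."""
    c, z = proof
    w = (pow(G, z, P) * pow(h, (-c) % Q, P)) % P
    return challenge(h, w) == c
```

The proof contains only the challenge and the response. The first message is reconstructed by the verifier, in the same way that \(\vec {\textit{\textbf{w}}}_i\) and \(\textit{\textbf{v}}\) are not transmitted in the non-interactive product proof.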

Example I. Suppose we are given 8 secret polynomials in the ring \(\mathcal {R}_q\) of rank \(d = 128\) with a prime \(q \approx 2^{32}\) that splits completely. So there are 1024 secret NTT coefficients in \(\mathbb {Z}_q\) and we need 8 \(\mathcal {R}_q\)-polynomials to commit to these secret coefficients, not just 3 as before. For this ring the maximum probability over \(\mathbb {Z}_q\) of the coefficients of \(\textit{\textbf{c}} \bmod (X^4 - \zeta ^4)\) for \(\textit{\textbf{c}} \overset{\$}{\leftarrow }C\) is \(p = 2^{-31.44}\) according to the formula in Lemma 3.3 when a coefficient of \(\textit{\textbf{c}}\) is zero with probability 1/2. So \(k = 4\) permutations of a challenge under the automorphism \(\sigma = \sigma _{64}\) are sufficient to reach negligible soundness error. Further, suppose the commitment scheme uses MLWE rank \(\lambda = 10\) and MSIS rank \(\mu = 10\). Then, we find \(\left\Vert {\textit{\textbf{c}}\vec {\textit{\textbf{r}}}}\right\Vert _1 \le 77\) with probability greater than \(1 - 2^{-100}\). If we set the standard deviation of the discrete Gaussian to \(\mathfrak {s}= 5 \cdot 77 \cdot \sqrt{(\lambda + \mu + 9)d} = 46913\), we need \(\mathsf {MSIS}_{\mu ,8d\beta }\) to be secure for \(\beta = \mathfrak {s}\sqrt{2(\lambda + \mu + 9)d}\). We found the root Hermite factor to be approximately 1.0043. Similarly, \(\mathsf {MLWE}_{\lambda }\) with ternary noise has root Hermite factor 1.0043. With these parameters the size of the commitment is 9 KB and the size of our product proof is 31.3 KB.
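The choice \(k = 4\) in Example I can be related to the threshold \((3p^{d/l})^k\) of Theorem 5.1 with a short calculation. The sketch below assumes \(d/l = 1\) (since \(q\) splits completely) and takes \(2^{-100}\) as the target for "negligible", which is an assumption borrowed from the other bounds in this section. The function names are hypothetical.

```python
import math

def log2_soundness_threshold(log2_p, slot_degree, k):
    """Return log2 of (3 * p^(d/l))^k, with slot_degree standing for d/l."""
    return k * (math.log2(3) + slot_degree * log2_p)

def smallest_k(log2_p, slot_degree, log2_target=-100.0):
    """Smallest k whose threshold is at most 2^log2_target (assumed target)."""
    k = 1
    while log2_soundness_threshold(log2_p, slot_degree, k) > log2_target:
        k += 1
    return k
```

With \(p = 2^{-31.44}\) the threshold for \(k = 4\) is about \(2^{-119.4}\), while \(k = 3\) only gives about \(2^{-89.6}\).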

Example II. For a fair comparison to [2, Parameter set I of Table 2], where the polynomial \(X^d+1\) does not necessarily split into linear factors, we modify the previous example and switch to using a prime q that splits into prime ideals of degree 4 (and so there are 32 NTT slots for each polynomial). Then we have negligible soundness error already with \(k = 1\) and we don’t need parallel repetitions and automorphisms. The protocol is given in Fig. 3 and the product proof size goes down to 8.8 KB.

Example III. In the above comparison to [2], we created a commitment to 256 NTT coefficients each being a polynomial of degree 3. For the 32-bit range proof example stated in the introduction, we only need 32 coefficients and hence only commit to one \(\mathcal {R}_q\)-polynomial. The size of such a commitment is 5.5 KB and our product proof has size 5.9 KB.
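The commitment sizes quoted in Examples I and III can be reproduced from the formula \((\mu + 3)d\lceil {\log {q}}\rceil \) given earlier in this section. The sketch below generalizes the constant 3 to the number of committed message polynomials, takes \(\lceil {\log {q}}\rceil = 32\), and reads 1 KB as 1024 bytes. These are assumptions that happen to match the stated figures. The function name is hypothetical.

```python
def commitment_size_kb(mu, num_messages, d, log_q_bits):
    """Size of a commitment made of (mu + num_messages) full-size polynomials, in KB."""
    bits = (mu + num_messages) * d * log_q_bits
    return bits / 8 / 1024

# Example I: 8 message polynomials, mu = 10, d = 128, 32-bit q.
example_1 = commitment_size_kb(10, 8, 128, 32)
# Example III: a single message polynomial with the same parameters.
example_3 = commitment_size_kb(10, 1, 128, 32)
```

Under these assumptions the two values are 9.0 and 5.5, in agreement with the commitment sizes stated in the examples.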

Notes

1. We can always amplify the soundness by repetition.

2. In [9], the same techniques were used to show that the distribution of Ring-LWE errors is statistically close to uniform modulo the NTT coefficients. The slight differences are in the distribution of the original polynomial (for our application, it only makes sense to consider polynomials whose coefficients have various distributions over \(\{-1,0,1\}\)) and in that we do not need statistical closeness for our application, and instead obtain tight bounds for a different quantity. We provide more details in Sect. 3.

References

1. Banaszczyk, W.: New bounds in some transference theorems in the geometry of numbers. Math. Ann. 296, 625–635 (1993)

2. Baum, C., Damgård, I., Lyubashevsky, V., Oechsner, S., Peikert, C.: More efficient commitments from structured lattice assumptions. In: Catalano, D., De Prisco, R. (eds.) SCN 2018. LNCS, vol. 11035, pp. 368–385. Springer, Cham (2018). https://doi.org/10.1007/978-3-319-98113-0_20

3. Ben-Sasson, E., Bentov, I., Horesh, Y., Riabzev, M.: Fast Reed-Solomon interactive oracle proofs of proximity. In: ICALP. LIPIcs, vol. 107, pp. 14:1–14:17. Schloss Dagstuhl - Leibniz-Zentrum fuer Informatik (2018)

4. Ben-Sasson, E., Chiesa, A., Riabzev, M., Spooner, N., Virza, M., Ward, N.P.: Aurora: transparent succinct arguments for R1CS. In: Ishai, Y., Rijmen, V. (eds.) EUROCRYPT 2019. LNCS, vol. 11476, pp. 103–128. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-17653-2_4

5. Benhamouda, F., Krenn, S., Lyubashevsky, V., Pietrzak, K.: Efficient zero-knowledge proofs for commitments from learning with errors over rings. In: Pernul, G., Ryan, P.Y.A., Weippl, E. (eds.) ESORICS 2015. LNCS, vol. 9326, pp. 305–325. Springer, Cham (2015). https://doi.org/10.1007/978-3-319-24174-6_16

6. Bootle, J., Lyubashevsky, V., Seiler, G.: Algebraic techniques for short(er) exact lattice-based zero-knowledge proofs. In: Boldyreva, A., Micciancio, D. (eds.) CRYPTO 2019. LNCS, vol. 11692, pp. 176–202. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-26948-7_7

7. Breuillard, E., Varjú, P.P.: Cut-off phenomenon for the ax+b Markov chain over a finite field (2019)

8. Bünz, B., Bootle, J., Boneh, D., Poelstra, A., Wuille, P., Maxwell, G.: Bulletproofs: short proofs for confidential transactions and more. In: IEEE Symposium on Security and Privacy, pp. 315–334 (2018)

9. Chen, H., Lauter, K., Stange, K.E.: Security considerations for Galois non-dual RLWE families. In: Avanzi, R., Heys, H. (eds.) SAC 2016. LNCS, vol. 10532, pp. 443–462. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-69453-5_24

10. Chung, F.R.K., Diaconis, P., Graham, R.L.: Random walks arising in random number generation. Ann. Probab. 15(3), 1148–1165 (1987)

11. del Pino, R., Lyubashevsky, V., Seiler, G.: Lattice-based group signatures and zero-knowledge proofs of automorphism stability. In: ACM CCS, pp. 574–591. ACM (2018)

12. Diaconis, P.: Group representations in probability and statistics. Lecture Notes-Monograph Series, vol. 11, pp. i–192 (1988)

13. Esgin, M.F., Nguyen, N.K., Seiler, G.: Practical exact proofs from lattices: New techniques to exploit fully-splitting rings (2020). https://eprint.iacr.org/2020/518