We evaluate Nemo on the following three dimensions: First, we evaluate how Nemo applies fine control under different resource and data characteristics. Second, we evaluate how different combinations of optimization passes optimize the same application. Third, we evaluate how the same Nemo policy optimizes different applications.

We run data-processing applications with different combinations of the following resource and data characteristics: geographically distributed resources, transient resources, large-shuffle data, and skewed data. We run each application five times, and we report the mean values with error bars showing standard deviations.
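As a minimal sketch of the reporting procedure, the snippet below summarizes five runs by their mean and standard deviation. The run times are hypothetical placeholders, not measured values, and the use of the sample (rather than population) standard deviation is an assumption.

```python
import statistics


def summarize_runs(jct_seconds):
    """Return (mean, sample standard deviation) of repeated job completion times."""
    return statistics.mean(jct_seconds), statistics.stdev(jct_seconds)


# Hypothetical JCTs in seconds for five runs of one application.
runs = [100.0, 102.0, 98.0, 101.0, 99.0]
mean_jct, std_jct = summarize_runs(runs)
print(f"mean = {mean_jct:.1f} s, std = {std_jct:.2f} s")
```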

5.1 Fine Control

In this experiment, we evaluate how Nemo applies fine control under different resource and data characteristics. For comparison, we run Spark 2.3.0 [44], because it is an open-source, state-of-the-art system. We enable any available basic optimizations, like speculative execution, for our Spark experiments. We also run a specialized state-of-the-art runtime for each deployment scenario. Specifically, we run Iridium [30] for geo-distributed resources, Pado [54] for transient resources, and Hurricane [3] for data skew, each of which has already been compared against Spark in its own work and shown to perform better. We examine the results of Beam applications on Nemo and Pado, Spark RDD applications on Spark and Iridium, and a Hurricane application on Hurricane.

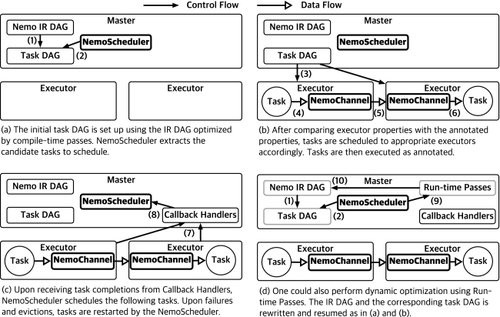

We confirm that the baseline performance is comparable for Beam and basic RDD applications on Nemo. We also confirm that the baseline performance is comparable for Spark and Nemo with the DefaultPass, which configures pull-based on-disk data shuffle with locality-aware computation placement similar to Spark. We observe that the overhead of running the compile-time passes on Nemo is roughly 200 ms for each execution, which is small compared to the job completion time of each application, as the experiments below show. We also measure and report runtime overheads of the Relay vertex, Trigger vertex, and SkewRTPass throughout this section.

Geo-distributed Resources: To set up geo-distributed resources and heterogeneous cross-site network bandwidths, we use Linux Traffic Control [2] to control the network speed between instances, as described in Iridium [30]. Each site is configured with 2 Gbps uplink network speed and a specific downlink network speed between 25 Mbps and 2 Gbps. We experiment with Low, Medium, and High bandwidth heterogeneity, with the fastest downlink outperforming the slowest downlink by \(10\times\), \(41\times\), and \(82\times\), respectively. With this setup, we use 20 EC2 instances as resources scattered across 20 sites. The compile-time optimization takes about 173 ms on average. To evaluate data shuffle under heterogeneous network bandwidths, we use a workload that joins two partitions of the 373 GB Caida [5] network trace dataset and computes network packet flow statistics.

The job completion time (JCT) of Iridium, Spark, and Nemo optimized with the GeoDistPass is shown in Figure 6(a). Spark degrades significantly with larger bandwidth heterogeneity, since tasks that fetch data through slow network links become stragglers. In contrast, Iridium and Nemo are stable across different network speeds. Figure 6(b) shows that the cumulative distribution function (CDF) of shuffle read time has a long tail for Spark compared to Iridium and Nemo. Iridium and Nemo show comparable performance with similar largest shuffle read blocked times, although Iridium shows overall better shuffle read blocked times using a more sophisticated linear programming model.
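The straggler effect can be illustrated with a simple back-of-the-envelope model, sketched below. It assumes that every site fetches an equally sized shuffle partition and that a stage ends when its slowest reader finishes. The partition size, the number of sites, and the helper names are hypothetical, and the model ignores latency and contention. It is not how Spark, Iridium, or Nemo actually schedule tasks.

```python
def fetch_seconds(bytes_to_fetch, downlink_mbps):
    """Time to pull a partition over a link of the given downlink speed."""
    bits = bytes_to_fetch * 8
    return bits / (downlink_mbps * 1_000_000)


def stage_seconds(partition_bytes, downlinks_mbps):
    """A shuffle stage finishes only when its slowest reader finishes."""
    return max(fetch_seconds(partition_bytes, bw) for bw in downlinks_mbps)


ONE_GB = 10**9  # hypothetical per-site partition size

# Three sites with homogeneous 2 Gbps downlinks (taking 2 Gbps as 2000 Mbps).
homogeneous = stage_seconds(ONE_GB, [2000, 2000, 2000])
# Same sites, but one downlink is throttled to 25 Mbps.
heterogeneous = stage_seconds(ONE_GB, [2000, 2000, 25])

print(homogeneous, heterogeneous)
```

Under these assumptions, a single slow downlink dominates the stage time even though the other sites finish quickly, which is the long tail visible in a shuffle read time CDF.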

Transient Resources: Based on existing works [39, 52, 54], we classify resources that are safe from eviction as reserved resources and those prone to eviction as transient resources. We set up 10 EC2 instances for providing transient resources and 2 instances for reserved resources. When an executor running on transient resources is evicted, we allow the system to immediately re-launch a new executor using the transient resources to replace the evicted executor as described in Pado [54]. To evaluate handling long and complex DAGs with transient resources, we run an Alternating Least Squares (ALS) [18] workload, an iterative machine learning recommendation algorithm, on 10 GB Yahoo! Music user ratings data [51] with over 717M ratings of 136K songs given by 1.8M users. We use 50 ranks and 15 iterations for the parameters. The compile-time optimization takes about 236 ms on average. By varying the mean time to eviction for transient resources, we show how systems deal with the different eviction frequencies. The distribution of the time to eviction is approximated as an exponential distribution, similar to TR-Spark [52].
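As a minimal sketch of this eviction model, the snippet below draws times to eviction from an exponential distribution with a chosen mean. The function name, the seed, and the sample count are illustrative assumptions, and the code does not reproduce the eviction driver used in the experiments.

```python
import random


def sample_eviction_times(mean_minutes, n, seed=0):
    """Draw n times-to-eviction (minutes) from an exponential distribution."""
    rng = random.Random(seed)
    # random.expovariate takes the rate parameter, i.e. 1 / mean.
    return [rng.expovariate(1.0 / mean_minutes) for _ in range(n)]


samples = sample_eviction_times(mean_minutes=20.0, n=100_000)
empirical_mean = sum(samples) / len(samples)
print(f"empirical mean time to eviction: {empirical_mean:.2f} min")
```

Because the exponential distribution is memoryless, many executors are evicted well before the mean while a few survive much longer, so a 20-minute mean still produces frequent early evictions.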

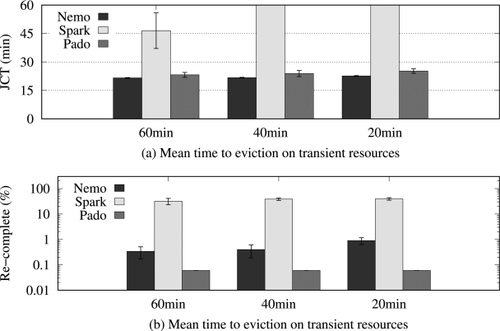

Figure 7(a) shows the JCT of Pado, Spark, and Nemo optimized with the TransientResourcePass for different mean times to eviction. With the 40-minute and 20-minute mean time to eviction, Spark is unable to complete the job even after running for an hour, at which point we stop the job. The main reason is heavy recomputation of intermediate data across multiple iterations of the ALS algorithm, which is repeatedly lost in recurring evictions. However, Nemo and Pado successfully finish the job in around 20 minutes, as both systems are optimized to retain a set of selected intermediate data on reserved resources. Figure 7(b) shows the ratio of re-completed tasks to original tasks for different mean times to eviction. It shows that Nemo and Pado re-complete significantly fewer tasks compared to Spark, leading to a much shorter JCT. Nemo and Pado show comparable performance although Nemo re-completes more tasks, because the tasks that both systems re-complete are executed quickly and do not cause cascading recomputations of parent tasks.

Large-shuffle Data: We evaluate how Nemo and Spark handle large shuffle operations using 512 GB, 1 TB, and 2 TB data of the Wikimedia pageview statistics [50] from 2014 to 2016, as the datasets provide a sufficiently large amount of real-world data. We use a map-reduce application that computes the sum of pageviews for each Wikimedia project. The compile-time optimization takes about 155 ms on average. We set the ratio of map to reduce tasks to 5:1, similar to the ratios used in Riffle [57] and Sailfish [32], and use 20 EC2 instances to run the workload.

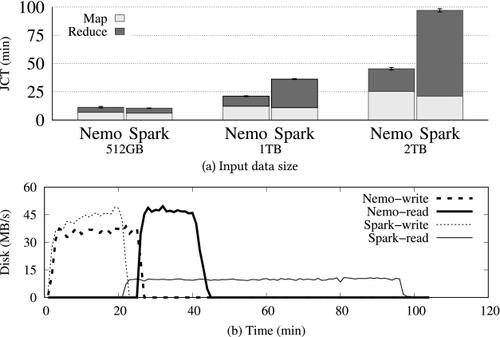

The JCT of Spark and Nemo optimized with the LargeShufflePass is shown in Figure 8(a). Both show comparable performance for the 512 GB dataset, but Nemo outperforms Spark with larger datasets. To understand the difference, we measured the mean throughput of the disks used for intermediate data. Figure 8(b) illustrates the mean disk throughput of scratch disks used for intermediate data when running the 2 TB workload. Here, a spike in the write throughput is followed by a spike in the read throughput, which illustrates disk writes during the map stage followed by disk reads during the reduce stage while performing the shuffle operation. For Spark, the disk read throughput during the reduce stage is as low as about 10 MB/s, indicating severe disk seek overheads. In contrast, the throughput is as high as 45 MB/s for Nemo, as the LargeShufflePass enables sequential read of intermediate data by the following reduce tasks, which minimizes the disk seek overhead.

To measure the overhead of the Relay vertex inserted by the LargeShufflePass before the reduce operation, we have also run the 2 TB workload on Nemo without the LargeShufflePass. The reduce operation begins 56 seconds earlier without the LargeShufflePass and the Relay vertex, where 56 seconds represent 2.05% of the JCT of Nemo with the LargeShufflePass.

Skewed Data: To experiment with different degrees of data skewness, we generate synthetic 200 GB key-value datasets with two different key distributions: Zipf and Top10. For the Zipf distribution, we use parameters 0.8 and 1.0 with 1 million keys [3]. In the datasets with the Top10 distribution, the 10 heaviest keys represent 20% and 30% of the total data size. We run a map-reduce application that computes the median of the values per key on 10 EC2 instances. The compile-time optimization takes about 188 ms on average, and the runtime pass takes about 268 ms on average. Because this application is not commutative-associative, for evaluating Hurricane, we use an approximation algorithm similar to Remedian [36] to fully leverage its task cloning optimizations [3]. The Hurricane application also uses 4 MB data chunks and uses its own storage to handle input and output data, similar to the available example application code.
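The following small sketch illustrates why a median cannot simply be combined from partial results the way a sum can. The two partitions are hypothetical values for a single key. Taking the median of per-partition medians is shown only as an illustration of how combining partial medians yields an approximation. It is not the exact procedure of Remedian or of the Hurricane application.

```python
import statistics

# Hypothetical values of one key, split across two partitions.
part_a = [1, 2, 100]
part_b = [3, 4, 5, 6, 7]

# A sum can be combined from partial sums without loss.
total = sum(part_a + part_b)
sum_of_sums = sum([sum(part_a), sum(part_b)])

# A median cannot: combining partial medians gives a different answer.
true_median = statistics.median(part_a + part_b)
median_of_medians = statistics.median(
    [statistics.median(part_a), statistics.median(part_b)]
)

print(total == sum_of_sums)            # partial sums agree with the full sum
print(true_median, median_of_medians)  # exact vs. combined partial medians
```

Computing the exact median therefore requires all values of a key to be brought together, which is what makes heavily skewed keys costly for this workload.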

Figure 9(a) shows the JCT of Hurricane, Spark, and Nemo optimized with the SkewCTPass and the SkewRTPass. The performance of Spark degrades significantly with increasing skewness. In particular, Spark fails to complete the job with the 1.0 Zipf parameter, due to the load imbalance in reduce tasks with skewed keys, which leads to out-of-memory errors. In contrast, both Nemo and Hurricane handle data skew gracefully. In particular, Nemo achieves high performance and at the same time computes medians correctly without using an approximation algorithm.
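To give a rough sense of the load imbalance that Zipf-distributed keys can cause, the sketch below assigns keys to reduce tasks by key index modulo the number of reducers, as a stand-in for hash partitioning, and reports the heaviest reducer's load relative to the mean. The key count (100,000 rather than the 1 million used in the experiments), the reducer count, and all function names are assumptions chosen for illustration.

```python
def zipf_weights(num_keys, s):
    """Normalized Zipf weights: the key of rank r gets weight proportional to 1 / r**s."""
    raw = [1.0 / (rank ** s) for rank in range(1, num_keys + 1)]
    total = sum(raw)
    return [w / total for w in raw]


def reducer_loads(weights, num_reducers):
    """Fraction of all data each reducer receives under modulo partitioning."""
    loads = [0.0] * num_reducers
    for key_id, weight in enumerate(weights):
        loads[key_id % num_reducers] += weight
    return loads


def imbalance(loads):
    """Heaviest reducer's load divided by the mean load (1.0 means balanced)."""
    return max(loads) / (sum(loads) / len(loads))


for s in (0.8, 1.0):
    loads = reducer_loads(zipf_weights(num_keys=100_000, s=s), num_reducers=100)
    print(f"Zipf s={s}: imbalance = {imbalance(loads):.1f}x")
```

In this toy setting the reducer that receives the heaviest key carries several times the mean load, and the gap widens as the Zipf parameter grows, which is consistent with memory pressure concentrating on a few reduce tasks.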

Figure 9(b) shows the CDF of reduce task completion time when processing the 30%-Top10 dataset. The CDF for Spark shows that reduce tasks with popular keys take a significant amount of time to finish compared to its shorter tasks. In contrast, the slowest task on Hurricane and Nemo completes much sooner than on Spark. With its task cloning optimization, Hurricane processes short-lived tasks alongside longer tasks, which makes it appear to perform better in the bottom 50% of the CDF, whereas on Nemo the data repartitioning optimization balances the completion times of longer and shorter tasks.

To measure the overhead of the Trigger vertex inserted by the SkewCTPass, we also run the 30%-Top10 workload on Nemo without the SkewCTPass and the SkewRTPass. The reduce operation begins 35 seconds earlier without the Trigger vertex, where 35 seconds represent 5.52% of the JCT of Nemo configured with the SkewCTPass and the SkewRTPass.
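The reported overhead percentages also imply the approximate JCTs of the optimized runs, which can be checked with a short calculation. The helper name below is hypothetical, and the inputs are the figures quoted in the text for the Relay vertex (56 seconds, 2.05%) and the Trigger vertex (35 seconds, 5.52%).

```python
def implied_jct_seconds(overhead_seconds, overhead_percent):
    """JCT implied by an overhead given in seconds and as a percentage of the JCT."""
    return overhead_seconds / (overhead_percent / 100.0)


# Relay vertex: 2 TB workload with the LargeShufflePass.
relay_jct = implied_jct_seconds(56, 2.05)
# Trigger vertex: 30%-Top10 workload with the SkewCTPass and the SkewRTPass.
trigger_jct = implied_jct_seconds(35, 5.52)

print(f"LargeShufflePass run: {relay_jct / 60:.1f} min")
print(f"Skew passes run: {trigger_jct / 60:.1f} min")
```

These work out to roughly 45.5 minutes and 10.6 minutes, respectively, up to rounding of the reported percentages.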

These results for each deployment scenario show that each optimization pass on Nemo brings performance improvements on par with specialized runtimes tailored for the specific scenario.

Integrating the Crail Filesystem: Another simple way of dealing with skewed data is to utilize fast data storage during data processing. As shown in Figure 9(a) and Figure 10, default implementations of general data processing systems based on local memory fail to complete the jobs beyond a certain degree of skewness, due to OOM errors that occur while using the local memory as the medium to store intermediate data. However, if we store the intermediate data in Crail [41], a high-performance distributed data store designed for fast sharing of ephemeral data, we can reduce the cost of skewed data by relying on its fast storage and network performance. For this evaluation, we have simply set the DataStoreProperty for storing intermediate data to CrailStore, without any of the other optimizations described above, to compare only the effects of the data store.

As shown in Figure 10, the default usage of local memory brings the best performance among the implementations when the level of skewness is moderate, but its performance degrades sharply under the memory pressure caused by the skewness, and the job fails with OOM errors when the skewness becomes large. Crail exhibits larger network costs compared to local memory due to its distributed architecture and is slower under moderate skewness, but its performance degrades gracefully even under intensive skewness, as the skewness has a much smaller effect on the local memory pressure. Although using disks could also prevent the system from facing OOM errors, we can see that the disk overheads lead to JCTs that largely exceed those of the default and Crail-integrated Nemo. This particular experiment is performed on GCP Compute Engine VM Instances with the same specifications as our other experiments (10 instances each with 16 vCPUs and 64 GiB memory).

5.2 Composability

We now evaluate combinations of different optimization passes. Table 2 summarizes the results.

Skewed Data on Geo-distributed Resources: In this experiment, we use the same 1.0-Zipf workload as in the skew handling experiment in Section 5.1, because the workload showed the largest load imbalance. We use 10 EC2 instances representing geo-distributed sites with heterogeneous network speeds between 25 Mbps and 2 Gbps. Here, DP and GDP run into out-of-memory errors due to the reduce tasks with skewed keys that are requested to process excessively large portions of data. SKP and GDP+SKP both successfully complete the job with the skew handling technique in SKP, but GDP+SKP outperforms SKP by also benefiting from the scheduling optimizations in GDP.

Large Shuffle on Transient Resources: For this experiment, we use the same 1 TB workload as in the large shuffle experiment in Section 5.1, to use sufficiently large data that incurs disk seek overheads. In this case, we use 10 reserved instances and 10 transient instances with the 20-minute mean time to eviction setting.

Most notably, DP and LSP fail to complete even after 100 minutes, at which point we stop the job, and TP runs into out-of-memory errors. We have observed that heavy recomputation caused by frequent resource eviction significantly slows down the DP and LSP cases. We have also found that the LSP optimization makes the application much more vulnerable to resource evictions compared to DP. The main reason is that with LSP, eviction of a single receiving task in the shuffle boundary leads to the recomputation of all the sending tasks of the shuffle operation in order to completely re-shuffle the intermediate data in memory. In contrast, DP does not need to recompute shuffle sending tasks whose output data are not evicted and are stored in local disks. TP by itself is also not sufficient, as it leads to out-of-memory errors while pushing large shuffle data in memory from transient resources to reserved resources.

TP+LSP is the only case that successfully completes the job by leveraging both optimizations in TP and LSP. With TP+LSP, the job pushes the shuffle data from transient to reserved resources and also streams them to local disks on reserved resources that are safe from evictions. This allows TP+LSP to handle frequent evictions on transient resources and also to utilize disks for storing large shuffle data with minimum disk seek overheads. However, TP+LSP incurs the overhead of using only half of the resources (transient or reserved) for each end of the data shuffle. As a result, the JCT for TP+LSP with transient resources is around twice the JCT for LSP without using transient resources, which is displayed in Section 5.1. Nevertheless, we believe that this overhead is worthwhile, taking into account that transient resources are much cheaper than reserved resources from the perspective of datacenter utilization [35, 54].

Large Shuffle with Skewed Data: For this experiment, we generate a synthetic key-value dataset with a skewed key distribution that is around 1 TB in size, as the datasets used in Section 5.1 for skew handling are not sufficiently large to incur disk seek overheads. In this dataset, the 20 heaviest keys represent 30% of the total data size. Using this dataset, we run the same application that we have used for the skewed data experiment in Section 5.1 on 20 EC2 instances.

In this experiment, only SSP+LSP successfully completes the job, whereas all other cases run into out-of-memory errors. DP and LSP fail to complete the job because particular tasks are assigned excessively large portions of data, incurring out-of-memory errors. SSP by itself also runs into out-of-memory errors although it repartitions data across the receiving tasks of the shuffle boundary. We have observed that with large data size, the absolute size of the heaviest keys is significantly larger compared to the smaller-scale experiments with skewed data shown in Section 5.1. Without the LSP optimization, this problem is combined with random disk read overheads that degrade the running time of the shuffle receiving tasks, leading to out-of-memory errors. In contrast, SSP+LSP successfully completes the job by leveraging both of the optimizations from SSP and LSP.

These various results confirm that Nemo can apply combinations of distinct optimization passes to further improve performance for deployment scenarios with a combination of different resource and data characteristics.