Beyond Accuracy: An Empirical Study on Unit Testing in Open-source Deep Learning Projects

Abstract

1 Introduction

2 Background

3 Methodology

3.1 Data Collection

3.2 Study Design

| Name | Category | Definition |

|---|---|---|

| DL units | Loss Function [7] | The purpose of loss functions is to compute the quantity that a model should seek to minimize during training. |

| Optimizer [7, 27] | Optimization algorithms are typically defined by their update rule, which is controlled by hyperparameters that determine its behavior (e.g., the learning rate). | |

| Activation Function [7, 53] | Activation functions are functions used in neural networks to compute the weighted sum of input and biases and transform them to the output of a neuron in a layer. | |

| Layer [7] | A layer consists of a tensor-in tensor-out computation function and some state, held in variables. | |

| Metric [7] | A metric is a function that is used to judge the performance of the model. | |

| Util [7] | Utilities used in DL projects, such as model plotting utilities, data loading utilities, serialization utilities, NumPy utilities, backend utilities. | |

| Others | Other units in DL projects, such as user-defined label classes, sampling, functions. | |

| Unit properties to be tested | Input/Output [16, 38] | The input/output (I/O) test contains tests for value range, shape, type, and so on. It can detect whether the input data meets the model input requirements and also reflects whether the functions implemented in the unit meet the expectations. |

| Error-raising [16, 38] | The error-raising test refers to whether the code can correctly throw an exception and is often used to checkwhether the magnitude range of the values generated during the calculation meets the design requirements. | |

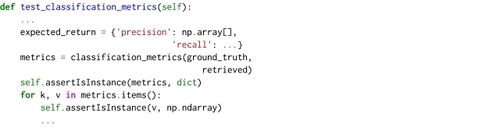

| Metric | The metric test consists of a test of the metric calculation function and the output metric values after the model is trained. It is often used to check whether the metric calculation process is correct or the model design is valid. | |

| Config [38] | The config test contains tests for configurations to check whether the unit has been initialized correctly. | |

| Variable | Variable testing includes the range of values, shape, gradient of variables, and so on. It can reflect whether the model structure is designed as expected and whether the model parameters can be updated. | |

| Others | Other tests, for example, test whether files are generated, whether web requests are successful, and so on. |
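To make the property categories in the table above concrete, the following is a minimal sketch with one test per category (Input/Output, Error-raising, Metric, Config, Variable). It is not taken from any studied project: `TinyDense`, `accuracy`, and `apply_gradients` are hypothetical names invented for illustration, and the layer uses plain Python lists rather than a real DL framework so the example stays self-contained.

```python
import unittest


class TinyDense:
    """Hypothetical dense layer computing relu(W x + b) on plain Python lists."""

    def __init__(self, in_features, out_features, init=0.1):
        if in_features <= 0 or out_features <= 0:
            raise ValueError("feature sizes must be positive")
        self.in_features = in_features
        self.out_features = out_features
        self.weights = [[init] * in_features for _ in range(out_features)]
        self.bias = [0.0] * out_features

    def get_config(self):
        return {"in_features": self.in_features, "out_features": self.out_features}

    def forward(self, x):
        if len(x) != self.in_features:
            raise ValueError(f"expected {self.in_features} inputs, got {len(x)}")
        return [
            max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(self.weights, self.bias)
        ]

    def apply_gradients(self, grads, lr=0.01):
        """Plain gradient-descent update: W <- W - lr * grad."""
        self.weights = [
            [w - lr * g for w, g in zip(w_row, g_row)]
            for w_row, g_row in zip(self.weights, grads)
        ]


def accuracy(y_true, y_pred):
    """Fraction of positions where the prediction equals the label."""
    if len(y_true) != len(y_pred):
        raise ValueError("length mismatch")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


class TinyDenseTest(unittest.TestCase):
    def setUp(self):
        self.layer = TinyDense(in_features=3, out_features=2)

    def test_input_output(self):
        # Input/Output: output shape, element type, and value range (ReLU >= 0).
        out = self.layer.forward([1.0, -2.0, 3.0])
        self.assertEqual(len(out), 2)
        self.assertTrue(all(isinstance(v, float) for v in out))
        self.assertTrue(all(v >= 0.0 for v in out))

    def test_error_raising(self):
        # Error-raising: invalid sizes and mismatched inputs must raise.
        with self.assertRaises(ValueError):
            TinyDense(0, 2)
        with self.assertRaises(ValueError):
            self.layer.forward([1.0])

    def test_metric(self):
        # Metric: the metric calculation itself is checked on known labels.
        self.assertAlmostEqual(accuracy([1, 0, 1, 1], [1, 0, 0, 1]), 0.75)

    def test_config(self):
        # Config: the unit was initialized with the requested configuration.
        self.assertEqual(
            self.layer.get_config(), {"in_features": 3, "out_features": 2}
        )

    def test_variable(self):
        # Variable: weight shape is as designed and an update changes the values.
        self.assertEqual(len(self.layer.weights), 2)
        self.assertTrue(all(len(row) == 3 for row in self.layer.weights))
        self.layer.apply_gradients([[1.0] * 3 for _ in range(2)], lr=0.5)
        for row in self.layer.weights:
            for w in row:
                self.assertAlmostEqual(w, 0.1 - 0.5)
```

Saved as a module, the tests can be run with `python -m unittest`. In a real project the same five kinds of check would typically target framework tensors and layers instead of Python lists.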

4 Results

4.1 RQ1: How Can Unit Tests Help Open-source DL Projects?

| Metrics | Mann-Whitney U p | Effect Size | Pearson p |
|---|---|---|---|
| Star | <0.01 | 0.18 | 0.10 |
| Issue | <0.01 | 0.22 | 0.14 |
| Fork | <0.01 | 0.15 | 0.10 |
| PR | <0.01 | 0.33 | 0.41 |
| Contributor | <0.01 | 0.19 | 0.19 |
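As a rough illustration of the kind of comparison summarized in the table above, the sketch below computes a Mann-Whitney U statistic, an effect size, and a Pearson correlation coefficient in plain Python. Several things here are assumptions rather than facts from the study: the table does not say which effect-size measure was used, so the rank-biserial correlation is chosen only as one common option; the sample values are invented; and p-values are omitted (a library routine such as `scipy.stats.mannwhitneyu` would normally supply them).

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their rank positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(a, b):
    """Return (U for sample a, rank-biserial correlation) for two samples."""
    ranks = average_ranks(list(a) + list(b))
    rank_sum_a = sum(ranks[: len(a)])
    u_a = rank_sum_a - len(a) * (len(a) + 1) / 2
    # Rank-biserial correlation: +1 if every a exceeds every b, -1 if the reverse.
    effect = 2 * u_a / (len(a) * len(b)) - 1
    return u_a, effect


def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var_x = sum((xi - mean_x) ** 2 for xi in x)
    var_y = sum((yi - mean_y) ** 2 for yi in y)
    return cov / math.sqrt(var_x * var_y)


# Invented star counts for projects with and without unit tests.
stars_with_tests = [10, 20, 30, 40]
stars_without_tests = [1, 2, 3, 25]
u_stat, effect = mann_whitney_u(stars_with_tests, stars_without_tests)
print(u_stat, effect)
```

The U statistic counts the pairs in which a value from the first sample exceeds a value from the second, which is why it suits skewed repository metrics such as stars or forks better than a comparison of means.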

4.2 RQ2: To What Extent Are DL Projects Unit Tested?

| Framework | NumPy | TensorFlow | unittest | PyTest | absl | GoogleTest | nose | Others |
|---|---|---|---|---|---|---|---|---|
| Num. of Projects | 2,232 (77.6%) | 1,862 (64.7%) | 440 (15.3%) | 284 (9.9%) | 98 (3.4%) | 23 (0.8%) | 19 (0.7%) | 269 (9.3%) |

4.3 RQ3: Which Units and Properties Are Tested in DL Projects?

| Property | Input/Output | Error-raising | Metric | Config | Variable | Others |
|---|---|---|---|---|---|---|
| Num. of Test Files | 3,467 (50.3%) | 1,223 (17.8%) | 1,005 (14.6%) | 538 (7.8%) | 345 (5.0%) | 907 (13.2%) |

5 Discussion and Implications

5.1 Implication for Practitioners

5.2 Implication for Researchers

6 Threats to Validity

7 Related Work

8 Conclusion

References

Publisher

Association for Computing Machinery

New York, NY, United States

Qualifiers

- Research-article

Funding Sources

- Australian Research Council
