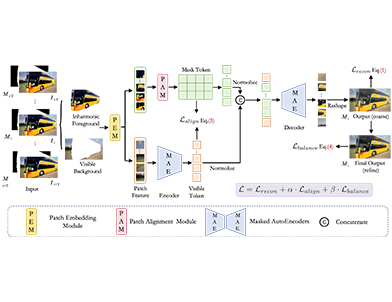

Harmony Everything! Masked Autoencoders for Video Harmonization

Abstract

References

Index Terms

- Harmony Everything! Masked Autoencoders for Video Harmonization

Recommendations

DiffHarmony++: Enhancing Image Harmonization with Harmony-VAE and Inverse Harmonization Model

MM '24: Proceedings of the 32nd ACM International Conference on MultimediaLatent diffusion model has demonstrated impressive efficacy in image generation and editing tasks. Recently, it has also promoted the advancement of image harmonization. However, methods involving latent diffusion model all face a common challenge: the ...

Referring Image Harmonization

ICCIP '23: Proceedings of the 2023 9th International Conference on Communication and Information ProcessingImage harmonization is the process of modifying the foreground of a composite image in order to achieve a cohesive visual consistency with the background. Existing works viewed image harmonization as a purely visual task, using masks to distinguish ...

MultiMAE: Multi-modal Multi-task Masked Autoencoders

Computer Vision – ECCV 2022AbstractWe propose a pre-training strategy called Multi-modal Multi-task Masked Autoencoders (MultiMAE). It differs from standard Masked Autoencoding in two key aspects: I) it can optionally accept additional modalities of information in the input besides ...

Comments

Information & Contributors

Information

Published In

- General Chairs:

- Jianfei Cai,

- Mohan Kankanhalli,

- Balakrishnan Prabhakaran,

- Susanne Boll,

- Program Chairs:

- Ramanathan Subramanian,

- Liang Zheng,

- Vivek K. Singh,

- Pablo Cesar,

- Lexing Xie,

- Dong Xu

Sponsors

Publisher

Association for Computing Machinery

New York, NY, United States

Publication History

Check for updates

Author Tags

Qualifiers

- Research-article

Funding Sources

- China Scholarship Council

- China Scholarship Council

Conference

Acceptance Rates

Contributors

Other Metrics

Bibliometrics & Citations

Bibliometrics

Article Metrics

- 0Total Citations

- 44Total Downloads

- Downloads (Last 12 months)44

- Downloads (Last 6 weeks)33

Other Metrics

Citations

View Options

Login options

Check if you have access through your login credentials or your institution to get full access on this article.

Sign in