Advancements in heuristic task scheduling for IoT applications in fog-cloud computing: challenges and prospects

- Academic Editor: Anne Reinarz
- Subject Areas: Algorithms and Analysis of Algorithms, Computer Networks and Communications, Distributed and Parallel Computing, Internet of Things
- Keywords: Cloud computing, Fog computing, Heuristic methods, Task scheduling, IoT applications, Optimization
- Copyright: © 2024 Alsadie
- Licence: This is an open access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, reproduction and adaptation in any medium and for any purpose provided that it is properly attributed. For attribution, the original author(s), title, publication source (PeerJ Computer Science) and either DOI or URL of the article must be cited.
- Cite this article: 2024. Advancements in heuristic task scheduling for IoT applications in fog-cloud computing: challenges and prospects. PeerJ Computer Science 10:e2128 https://doi.org/10.7717/peerj-cs.2128

Abstract

Fog computing has emerged as a promising paradigm to address the computational requirements of IoT applications, extending the capabilities of cloud computing to the network edge. Task scheduling is pivotal in enhancing energy efficiency, optimizing resource utilization and ensuring the timely execution of tasks within fog computing environments. This article presents a comprehensive review of the advancements in task scheduling methodologies for fog computing systems, covering priority-based, greedy, metaheuristic, learning-based, hybrid, and nature-inspired heuristic approaches. Through a systematic analysis of relevant literature, we highlight the strengths and limitations of each approach and identify key challenges facing fog computing task scheduling, including dynamic environments, heterogeneity, scalability, resource constraints, security concerns, and algorithm transparency. Furthermore, we propose future research directions to address these challenges, including the integration of machine learning techniques for real-time adaptation, leveraging federated learning for collaborative scheduling, developing resource-aware and energy-efficient algorithms, incorporating security-aware techniques, and advancing explainable AI methodologies. By addressing these challenges and pursuing these research directions, we aim to facilitate the development of more robust, adaptable, and efficient task-scheduling solutions for fog computing environments, ultimately fostering trust, security, and sustainability in fog computing systems and facilitating their widespread adoption across diverse applications and domains.

Introduction

Fog computing reshapes the landscape of traditional cloud computing by extending its reach to the network’s edge (Fahad et al., 2022; Madhura, Elizabeth & Uthariaraj, 2021). It enables real-time processing, data analytics, and application deployment in close proximity to data sources, diverging significantly from centralized cloud architectures. Fog computing is particularly suited to Internet of Things (IoT) environments, where it accommodates the diverse array of interconnected devices generating data at the network’s periphery, each with its own computational capabilities and requirements (Azizi et al., 2022; Abd Elaziz, Abualigah & Attiya, 2021).

In fog computing, effective task scheduling is a central challenge. Task scheduling involves allocating computing resources to tasks so as to optimize performance metrics such as makespan, energy consumption, and resource utilization (Jamil et al., 2022). However, achieving optimal task scheduling in fog computing environments is inherently difficult due to the dynamic nature of the network, the heterogeneity of the available computing resources, and the stringent constraints imposed by edge devices (Bansal, Aggarwal & Aggarwal, 2022; Subbaraj & Thiyagarajan, 2021; Kaur, Kumar & Kumar, 2021).

In light of these challenges, this article embarks on a comprehensive review of task scheduling methodologies tailored for fog computing systems. Through meticulous analysis and evaluation, a spectrum of heuristic approaches is scrutinized, encompassing priority-based strategies, greedy heuristics, metaheuristic algorithms, learning-based approaches, hybrid heuristics, and nature-inspired methodologies. This review critically assesses the strengths, limitations, and practical applications of each approach within the context of fog computing environments.

The primary objective of this review is to provide researchers, practitioners, and stakeholders in the fog computing domain with a thorough understanding of the state-of-the-art task scheduling methodologies. By synthesizing insights from existing literature and delineating key challenges and prospective research trajectories, this article aims to propel the field of fog computing task scheduling forward. Ultimately, this collective effort seeks to catalyze the development of more resilient, adaptable, and efficient solutions tailored to meet the demands of real-world applications.

This study makes significant contributions in the following aspects:

- Comprehensive review: The article offers a comprehensive review of task scheduling methodologies for fog computing systems, encompassing various heuristic approaches, including priority-based, greedy heuristics, metaheuristics, learning-based, hybrid heuristics, and nature-inspired heuristics.
- Systematic analysis: Through a systematic analysis of relevant literature, the article evaluates the strengths and limitations of each task scheduling approach, offering insights into their effectiveness in optimizing resource allocation, improving energy efficiency, and ensuring timely task execution.
- Identification of challenges: The article identifies key challenges facing fog computing task scheduling, such as dynamic environments, heterogeneity, scalability issues, resource constraints, security concerns, and algorithm transparency, providing a clear understanding of the obstacles to overcome in the field.
- Proposal of future research directions: By proposing future research directions, including the integration of machine learning approaches, leveraging federated learning, developing resource-aware and energy-efficient algorithms, incorporating security-aware approaches, and advancing explainable AI methodologies, the article guides researchers towards addressing the identified challenges and advancing the field of fog computing task scheduling.
- Facilitation of widespread adoption: Through its insights and recommendations, the article aims to facilitate the development of more robust, adaptable, and efficient task scheduling solutions for fog computing environments, ultimately fostering trust, security, and sustainability in fog computing systems and promoting their widespread adoption across diverse applications and domains.

The remainder of this article is organized as follows. The section titled "Methodology" describes the methodology employed for this review. The section titled "Taxonomy of heuristic approaches for task scheduling in fog computing" presents a detailed taxonomy that classifies the heuristic methods analyzed in the study. The section titled "Heuristic approaches for task scheduling" surveys the heuristic methods according to this taxonomy and critically analyzes them in terms of Approach, Performance & Optimization, Implementation & Evaluation, Performance Metrics, Strengths, and Limitations. The section titled "Open challenges and future directions" highlights the challenges of efficient task scheduling and then explores future directions and emerging trends in heuristic-based task scheduling methods, including potential advancements and critical research areas. Finally, the section titled "Conclusion" summarizes the main findings and underscores the significance of tackling the challenges associated with heuristic-based task scheduling in fog computing environments.

Methodology

This systematic literature review on heuristic-based task scheduling in fog computing follows the guidelines established in the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) (Page et al., 2021). The subsequent sections detail the specific steps and methodology employed in this review.

Inclusion criteria for reviewed studies

This review focuses on studies employing heuristic-based task scheduling in fog computing. The search criteria covered terms such as heuristics, optimization, and nature-inspired methods for task scheduling in fog computing. Selected studies were required to address task scheduling, energy optimization, or resource management. Eligibility criteria for report characteristics were also applied: publication in English, classification as a scientific article or review, and a publication year between 2019 and 2024. The review followed the PRISMA protocol, ensuring a systematic and standardized selection of studies. The search used the electronic databases IEEE Xplore, ScienceDirect, and SpringerLink, and also considered highly cited articles from ACM, MDPI, De Gruyter, Hindawi, and Wiley that focus on task scheduling in fog computing environments, specifically heuristic-based methods.

Search strategy

The selection of search keywords adhered to the defined review framework. Primary concepts for the search included “task scheduling,” “heuristic,” “metaheuristic,” “learning-based,” “fog computing,” “nature-inspired,” “genetic algorithm,” “simulated annealing,” “reinforcement learning,” and “tabu search,” combined with the logical operators AND and OR. Records in languages other than English were excluded.

Record selection procedure

Upon acquiring records from database searches and manual exploration, they were imported into the JabRef reference management system for comprehensive review. The authors manually compared titles and authors to identify and eliminate duplicate records. Subsequently, the reviewers meticulously examined each article retrieved from the search, assessed its eligibility, and collaboratively reached a majority consensus to finalize the selection of studies included in the review.

Data collection process

Reviewers evaluated and analyzed the studies included in the review. Data collection was facilitated through a Google spreadsheet, where information from the selected studies was systematically compiled. The resulting spreadsheet served as a comparison matrix summarizing recent developments within the field. Each row within the matrix corresponded to an individual study, while the columns were dedicated to distinct data elements earmarked for analysis.

Data items

The collaborative spreadsheet’s columns were thoughtfully designated to align with the specific outcomes for which data was being sought. The defined columns encompassed year, title, authors, general approach, performance & optimization, implementation & evaluation, performance metrics, strengths, and limitations. This meticulous structuring allowed for a systematic and comprehensive collection of relevant data points from the reviewed studies. Articles published from 2019 to March 02, 2024, were scrutinized to comprehend the trends and advancements in this study area. This systematic review identified and included 102 articles that met the predetermined inclusion criteria.

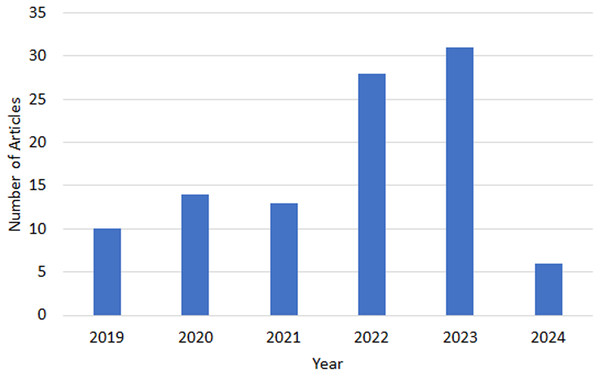

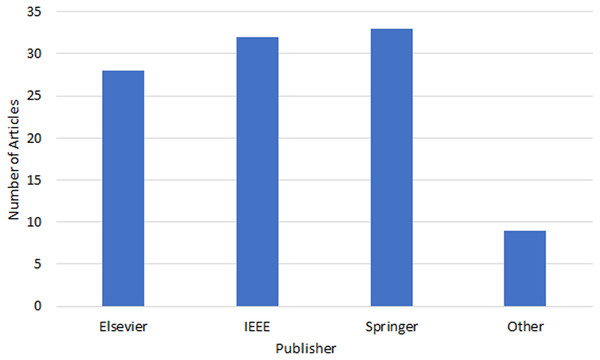

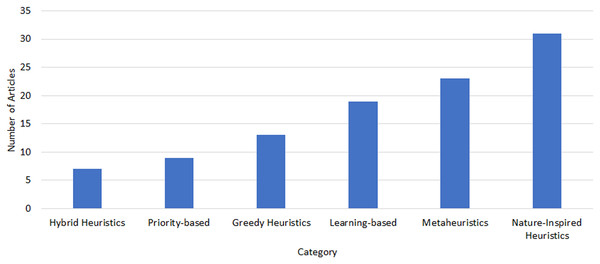

There is a noticeable interest in the field as per the upward trend apparent from the increasing number of publications in various indexed journal databases since 2019, as depicted in Fig. 1. Figure 2 provides an overview of the distribution of selected articles by the publisher, focusing on both journal and conference contributions. The qualified articles in our sample set employ diverse heuristic-based methods, including priority-based, greedy, metaheuristics, learning-based, hybrid and nature-inspired heuristic methods as depicted in Fig. 3.

Figure 1: Trends of heuristic-based task scheduling studies.

Figure 2: Publisher distribution of heuristic-based task scheduling studies.

Figure 3: Heuristic method distribution of task scheduling studies.

Taxonomy of heuristic approaches for task scheduling in fog computing

In the realm of fog computing environments, effective task scheduling stands as a pivotal factor in optimizing resource usage and elevating the performance of IoT applications. This section introduces a taxonomy outlining heuristic methods for task scheduling in fog computing, organizing them according to their fundamental principles and attributes. Refer to Fig. 4 for a visual representation of this taxonomy.

Figure 4: Taxonomy of heuristic approaches for task scheduling in fog computing.

Priority-based heuristics: Priority-based heuristics prioritize task execution based on predefined criteria (Fahad et al., 2022; Tang et al., 2023). Static Priority Scheduling assigns fixed priorities to tasks, typically determined by factors such as deadlines, importance, or resource requirements. In contrast, Dynamic Priority Scheduling adjusts task priorities dynamically during runtime in response to real-time system conditions, workload characteristics, or user-defined policies (Shi et al., 2020).

Greedy heuristics: Greedy heuristics make locally optimal decisions at each step with the aim of approaching a globally optimal solution, although such local choices do not guarantee one (Azizi et al., 2022). Earliest deadline first (EDF) schedules tasks based on their earliest deadlines, prioritizing those with imminent deadlines to minimize lateness. Shortest processing time (SPT) selects tasks with the shortest estimated processing time, aiming to minimize overall completion time and improve system throughput. Minimum remaining processing time (MRPT) prioritizes tasks based on their remaining processing time, with shorter tasks given precedence to expedite completion.
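The three greedy rules above differ only in the key used to order the ready tasks. The following minimal sketch illustrates this under simplifying assumptions: a single processing node, all tasks available at time zero, and a hypothetical `Task` record whose field names are chosen here for illustration and do not come from any of the reviewed studies.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    deadline: float    # absolute deadline, in arbitrary time units
    proc_time: float   # estimated total processing time
    remaining: float   # processing time still outstanding


def edf_order(tasks):
    """Earliest deadline first: the most urgent deadline runs first."""
    return sorted(tasks, key=lambda t: t.deadline)


def spt_order(tasks):
    """Shortest processing time: the shortest job runs first."""
    return sorted(tasks, key=lambda t: t.proc_time)


def mrpt_order(tasks):
    """Minimum remaining processing time: the job closest to completion runs first."""
    return sorted(tasks, key=lambda t: t.remaining)


# Illustrative workload (values are made up for the example).
tasks = [
    Task("sensor-read", deadline=10, proc_time=4, remaining=1),
    Task("video-frame", deadline=5, proc_time=6, remaining=6),
    Task("log-upload", deadline=20, proc_time=2, remaining=2),
]

edf_names = [t.name for t in edf_order(tasks)]
spt_names = [t.name for t in spt_order(tasks)]
mrpt_names = [t.name for t in mrpt_order(tasks)]
```

Each rule produces a different order for the same workload, which is why the choice of greedy key has such a direct effect on lateness and throughput.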

Metaheuristic approaches: Metaheuristic approaches are high-level strategies that guide the search for optimal solutions in a solution space (Wu et al., 2022; Keshavarznejad, Rezvani & Adabi, 2021). Genetic algorithms (GA) employ genetic operators to evolve a population of candidate solutions towards an optimal task schedule. Particle swarm optimization (PSO) mimics the collective behavior of a swarm of particles to iteratively explore the solution space and converge towards an optimal task schedule. Ant colony optimization (ACO) draws inspiration from the foraging behavior of ants, utilizing pheromone trails and heuristic information to navigate towards an optimal task schedule on a global scale. Simulated annealing (SA) simulates the gradual cooling of a material to find the global optimum by accepting probabilistic changes in the solution (Dev et al., 2022).
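As an illustration of how one such metaheuristic operates, the sketch below applies simulated annealing to a toy problem: assigning independent tasks to nodes so as to minimize makespan. The cost model, the geometric cooling schedule, and all parameter values (`t_start`, `t_end`, `alpha`) are assumptions made for this example and are not taken from the reviewed studies.

```python
import math
import random


def makespan(assignment, task_costs, n_nodes):
    """Largest total load placed on any single node."""
    loads = [0.0] * n_nodes
    for task, node in enumerate(assignment):
        loads[node] += task_costs[task]
    return max(loads)


def anneal(task_costs, n_nodes, t_start=10.0, t_end=0.01, alpha=0.95, seed=0):
    rng = random.Random(seed)
    current = [rng.randrange(n_nodes) for _ in task_costs]
    current_cost = makespan(current, task_costs, n_nodes)
    best, best_cost = list(current), current_cost
    temp = t_start
    while temp > t_end:
        # Neighbour move: reassign one randomly chosen task to a random node.
        candidate = list(current)
        candidate[rng.randrange(len(candidate))] = rng.randrange(n_nodes)
        cand_cost = makespan(candidate, task_costs, n_nodes)
        delta = cand_cost - current_cost
        # Metropolis rule: always accept improvements, and accept a worse
        # schedule with probability exp(-delta / temp).
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current, current_cost = candidate, cand_cost
            if current_cost < best_cost:
                best, best_cost = list(current), current_cost
        temp *= alpha  # geometric cooling
    return best, best_cost


costs = [4, 7, 2, 5, 3, 6]
schedule, cost = anneal(costs, n_nodes=3)
```

Accepting occasional worse moves at high temperature is what lets the search leave local optima; as the temperature falls, the procedure behaves increasingly like a plain greedy descent.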

Learning-based heuristics: Learning-based heuristics leverage machine learning approaches to discover optimal task scheduling policies (Wang et al., 2024). Reinforcement learning (RL) utilizes trial-and-error learning to discover optimal task scheduling policies through interactions with the environment and feedback on task completion. Q-Learning learns an optimal action-selection strategy by iteratively updating a Q-table based on rewards obtained from task scheduling decisions (Gao et al., 2020; Yeganeh, Sangar & Azizi, 2023). Deep Q-Networks (DQN) extend Q-learning by employing deep neural networks to approximate the Q-function, enabling more complex and scalable task scheduling policies.
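The Q-table update mentioned above can be written in a few lines. The sketch below shows the standard tabular Q-learning rule; the state names, the action set (`"fog"` or `"cloud"`), and the reward value are hypothetical placeholders chosen for illustration.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step:
    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical scheduling decision: place a task on a fog node or in the cloud.
q = defaultdict(float)  # unseen (state, action) pairs default to 0.0
actions = ["fog", "cloud"]

first = q_update(q, "low_load", "fog", reward=1.0,
                 next_state="low_load", actions=actions)
second = q_update(q, "low_load", "fog", reward=1.0,
                  next_state="low_load", actions=actions)
```

With these settings the first update moves the estimate from 0 to 0.1, and the second moves it to 0.199 because the bootstrapped target now includes the discounted value of the next state. A DQN replaces the table `q` with a neural network trained towards the same target.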

Hybrid heuristics: Hybrid heuristics integrate multiple heuristic approaches to exploit their complementary strengths and improve solution quality (Agarwal et al., 2023; Yadav, Tripathi & Sharma, 2022a). This includes combinations of greedy and metaheuristic approaches, as well as the fusion of learning-based and metaheuristic approaches (Leena, Divya & Lilian, 2020).

Nature-inspired heuristics: Nature-inspired heuristics draw inspiration from natural phenomena to develop efficient task-scheduling strategies (Mishra et al., 2021; Usman et al., 2019). This encompasses biologically inspired algorithms, such as genetic evolution and swarm intelligence, as well as physics-based heuristics derived from principles in physics to optimize task allocation and resource utilization in fog environments.

The taxonomy of heuristic methods provides a comprehensive framework for understanding the diverse approaches to task scheduling in fog computing, each offering unique advantages and applications in optimizing the performance of IoT applications as presented in Table 1.

| Category | Method | Advantages | Disadvantages | Suitability |
|---|---|---|---|---|
| Priority-based | Static priority | Simple, fast, predictable | Ignores dynamic changes, suboptimal results | Static, non-critical tasks |
| Priority-based | Dynamic priority | Adapts to real-time, flexible | Requires accurate priorities, complex implementation | Dynamic, heterogeneous environments |
| Greedy | EDF | Deadline-aware, low overhead | Starves long tasks, sensitive to deadlines | Real-time, deadline-critical applications |
| Greedy | SPT | Improves throughput, fast execution | Starves long tasks, ignores resources | Non-critical, independent tasks |
| Greedy | MRPT | Prioritizes near completion, reduces waiting times | Task preemption overhead, favors short tasks | Bursty workloads, quick task completion |
| Metaheuristic | GA | Robust search, global optimization | High complexity, tuning required | Large-scale, complex tasks |
| Metaheuristic | PSO | Efficient exploration, fast convergence | Sensitive to parameters, local optima | Dynamic, moderate-sized tasks |
| Metaheuristic | ACO | Flexible, diverse environments | Parameter tuning, slow convergence | Multi-objective optimization, heterogeneous resources |
| Metaheuristic | SA | Escapes local optima, complex problems | Slow convergence, careful cooling schedule | Highly constrained, critical tasks |
| Learning-based | RL | Adapts to dynamics, learns policies | High training overhead, large datasets, exploration-exploitation | Dynamic, data-driven applications |
| Learning-based | Q-Learning | Simple implementation, no model | Slow convergence, large state-action space | Small-scale tasks, moderate complexity |
| Learning-based | DQN | Handles complex state-action spaces, faster learning | Large datasets, computationally expensive | Large-scale, data-rich environments |
| Hybrid | Greedy + Metaheuristic | Combines strengths, improves quality | Increased complexity, parameter tuning | Complex, dynamic, diverse objectives |
| Hybrid | Learning + Metaheuristic | Learns from data, enhances exploration-exploitation | Highly complex, specialized expertise | Large-scale, data-driven, evolving requirements |
| Nature-inspired | Biologically-inspired | Efficient, flexible, adaptable | Domain-specific knowledge, complex implementation | Diverse applications, innovation potential |
| Nature-inspired | Physics-based | Energy-efficient, distributed, scalable | Specific optimization problems, not readily applicable | Resource-constrained, energy-aware scheduling |

Heuristic approaches for task scheduling

Task scheduling in fog computing relies heavily on heuristic approaches to allocate computational resources efficiently and meet the dynamic demands of IoT applications. Heuristic approaches encompass various categories, each presenting distinct strategies for optimizing task scheduling. These categories are elucidated in the subsequent subsections and consolidated in Table 2.

Priority-based heuristics

Priority-based heuristics provide a straightforward method for task scheduling in fog computing environments, where tasks are prioritized based on predefined criteria such as deadlines, importance, or resource requirements (Sharma & Thangaraj, 2024; Choudhari, Moh & Moh, 2018). Static priority scheduling assigns fixed priorities to tasks, offering simplicity but lacking adaptability to dynamic environments. In contrast, dynamic priority scheduling adjusts priorities based on real-time conditions, offering flexibility at the cost of complexity. These heuristics find applications in real-time systems and resource-constrained environments but face challenges such as oversimplification, sensitivity to priority settings, and the potential for task starvation. While effective for specific scenarios, their limitations underscore the need for exploring more sophisticated approaches like learning-based or hybrid heuristics to address the complexities of fog computing environments.
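The contrast between static and dynamic priorities can be made concrete with a short sketch. Here the dynamic variant uses priority aging, in which a task's effective priority improves the longer it waits; aging is a common remedy for starvation and is used purely as an assumed example of a dynamic rule, with a hypothetical `aging_rate` parameter.

```python
import heapq


def static_order(tasks):
    """tasks: list of (name, priority); a lower number means more urgent.
    Priorities never change, so one heap pass yields the full order."""
    heap = [(prio, index, name) for index, (name, prio) in enumerate(tasks)]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


def dynamic_pick(waiting, now, aging_rate=0.2):
    """waiting: list of (name, base_priority, arrival_time).
    The effective priority drops (improves) with waiting time, so an old
    low-priority task eventually beats a newly arrived urgent one."""
    def effective(task):
        _, base, arrival = task
        return base - aging_rate * (now - arrival)
    return min(waiting, key=effective)[0]


order = static_order([("backup", 5), ("alarm", 1), ("telemetry", 3)])

# "backup" has waited since t=0; an "alarm" arrives at the decision time.
early = dynamic_pick([("backup", 5, 0), ("alarm", 1, 10)], now=10)
late = dynamic_pick([("backup", 5, 0), ("alarm", 1, 30)], now=30)
```

At `now=10` the fresh alarm still wins, but by `now=30` the backup task has aged enough to be selected first, which is the behavior a static scheme cannot provide.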

Fahad et al. (2022) introduced a preemptive task scheduling strategy for fog computing environments known as multi-queue priority (MQP) scheduling. The approach addresses task starvation among less critical applications while ensuring balanced task allocation for both latency-sensitive and less latency-sensitive tasks. Tasks are categorized as short or long according to their processing duration, each category is kept in a separate queue, and the preemption time slots are updated dynamically. An intelligent traffic management case study demonstrated that the MQP algorithm reduces service latencies for long tasks. Across all experimental configurations, simulations showed average latency reductions of 22.68% and 38.45% compared to the first-come-first-served (FCFS) and shortest-job-first (SJF) algorithms, respectively.
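The general idea of separate short and long queues with a dynamically recomputed time slot can be sketched as follows. This is not the algorithm of Fahad et al. (2022): the classification threshold, the strict alternation between queues, and the slot rule (mean remaining time in the served queue) are all assumptions introduced here to make the example runnable.

```python
from collections import deque


def multi_queue_sketch(tasks, threshold):
    """tasks: list of (name, burst_time). Returns {name: completion_time}.
    Short and long tasks wait in separate queues that are served in turn,
    so long tasks are never starved by a stream of short ones."""
    short_q = deque((n, b) for n, b in tasks if b <= threshold)
    long_q = deque((n, b) for n, b in tasks if b > threshold)
    queues = [short_q, long_q]
    clock, finished, turn = 0.0, {}, 0
    while short_q or long_q:
        # Alternate between queues; fall back to the other one if empty.
        queue = queues[turn % 2] or queues[(turn + 1) % 2]
        # Assumed slot rule: mean remaining time of the tasks in this queue.
        slot = sum(b for _, b in queue) / len(queue)
        name, remaining = queue.popleft()
        run = min(slot, remaining)
        clock += run
        if remaining - run > 1e-9:
            queue.append((name, remaining - run))  # preempted, requeued
        else:
            finished[name] = clock
        turn += 1
    return finished


completion = multi_queue_sketch(
    [("a", 2), ("b", 4), ("c", 10), ("d", 20)], threshold=5
)
```

In this toy run the long task `c` completes before the short task `b`, because the long queue receives its turn instead of waiting for all short tasks to drain.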

Madhura, Elizabeth & Uthariaraj (2021) introduced a task scheduling algorithm designed for fog computing environments. The algorithm consists of three phases (level sorting, task prioritization, and task selection) and aims to minimize both makespan and computation cost. It targets applications represented as directed acyclic graphs (DAGs) with precedence constraints, and allocates tasks based on the computation cost of the node and the task’s finishing time. Experiments with randomly generated graphs and real-world data showed that the algorithm outperformed existing approaches, namely predict earliest finish time (PEFT), heterogeneous earliest finish time (HEFT), minimal optimistic processing time (MOPT), and SDBBATS, in terms of average scheduling length ratio, speedup, and makespan.
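The level-sorting phase of a list scheduler for DAG workloads can be illustrated with a generic sketch. The code below groups tasks into levels so that every task appears after all of its predecessors; it is a standard topological levelling, not the authors' implementation, and the example graph is hypothetical.

```python
def level_sort(successors):
    """successors: dict mapping each task to the list of tasks that depend on it.
    Returns a list of levels; tasks within a level have no mutual
    dependencies and could be prioritized or run in parallel."""
    indegree = {task: 0 for task in successors}
    for succs in successors.values():
        for s in succs:
            indegree[s] = indegree.get(s, 0) + 1

    level = [t for t, d in indegree.items() if d == 0]  # entry tasks
    levels = []
    while level:
        levels.append(sorted(level))
        next_level = []
        for t in level:
            for s in successors.get(t, []):
                indegree[s] -= 1
                if indegree[s] == 0:  # all predecessors are now placed
                    next_level.append(s)
        level = next_level
    return levels


# Diamond-shaped DAG: t1 feeds t2 and t3, which both feed t4.
dag = {"t1": ["t2", "t3"], "t2": ["t4"], "t3": ["t4"], "t4": []}
levels = level_sort(dag)
```

A prioritization phase would then rank the tasks inside each level, for example by computation cost, before the selection phase maps them onto nodes.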

Movahedi, Defude & Hosseininia (2021) addressed task scheduling in fog computing environments with a method termed OppoCWOA, which builds on the Whale Optimization Algorithm (WOA). The approach integrates opposition-based learning and chaos theory to improve the effectiveness of WOA in optimizing task scheduling. The study applied the approach to a smart city scenario and described a hierarchical fog-based architecture for task scheduling. The task scheduling problem was formulated as a multi-objective optimization problem using integer linear programming (ILP). Through extensive experimentation, the authors compared OppoCWOA against established metaheuristic optimization algorithms such as PSO, artificial bee colony (ABC), and GA. The findings indicated that OppoCWOA was more efficient, particularly in terms of time and energy consumption.
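Two generic ingredients named above, opposition-based learning and chaotic sequences, are simple to state in code. The sketch below shows the usual definition of an opposite point and the logistic map as one common chaotic generator; whether OppoCWOA uses this particular map is not stated here, so it is an assumption, as is the toy `fitness` function.

```python
def opposite(position, lower, upper):
    """Opposition-based learning: reflect each coordinate within its bounds,
    x_opp = lower + upper - x."""
    return [lo + hi - x for x, lo, hi in zip(position, lower, upper)]


def logistic_map(x, steps):
    """Chaotic sequence in [0, 1] from x_{k+1} = 4 * x_k * (1 - x_k)."""
    seq = []
    for _ in range(steps):
        x = 4.0 * x * (1.0 - x)
        seq.append(x)
    return seq


def fitness(position):
    """Toy objective (lower is better): squared distance from the origin."""
    return sum(x * x for x in position)


candidate = [1.0, 8.0]
mirror = opposite(candidate, lower=[0.0, 0.0], upper=[10.0, 10.0])

# Keep whichever of the candidate and its opposite scores better.
kept = min(candidate, mirror, key=fitness)

chaos = logistic_map(0.3, steps=3)
```

Evaluating a candidate together with its opposite widens coverage of the search space at the cost of one extra fitness evaluation, while chaotic sequences are typically substituted for uniform random numbers to diversify the search.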

Hoseiny et al. (2021) addressed the challenges inherent in executing Internet of Things (IoT) tasks within fog-cloud computing environments, which offer low latency but encounter resource constraints. Their article introduced a scheduling algorithm named PGA, designed to optimize overall computation time, energy consumption, and the percentage of tasks meeting deadlines. Leveraging a hybrid approach and genetic algorithm, the PGA algorithm accounts for task requirements and the heterogeneous characteristics of fog and cloud nodes when assigning tasks to computing nodes. Through simulations, the study demonstrated the algorithm’s superiority over existing strategies. As the IoT ecosystem continues to expand rapidly, efficient processing and networking resources become increasingly vital, with cloud computing playing a crucial role in meeting the demands of IoT applications sensitive to latency.

Choudhari, Moh & Moh (2018) proposed a task scheduling algorithm within the fog layer, employing priority levels to accommodate the growing number of IoT devices while enhancing performance and reducing costs. Their work meticulously described the proposed architecture, queueing and priority models, along with the priority assignment module and task scheduling algorithms. Performance evaluations illustrated that, in comparison to existing algorithms, the proposed approach reduced overall response time and significantly diminished total costs. The article underscored fog computing’s role as a model that introduces a virtualized layer between end-users and cloud data centers, aiming to mitigate transmission and processing delays for IoT systems. It highlighted the importance of this research in advancing fog computing technology and its potential applicability across diverse domains.

Jamil et al. (2022) conducted a thorough and systematic comparative analysis, examining various scheduling algorithms, optimization metrics, and evaluation methodologies within the context of fog computing and the Internet of Everything (IoE). The primary objectives of their survey encompassed several key aspects: firstly, to provide an overview of fog computing and IoE paradigms; secondly, to delineate pertinent optimization metrics tailored to these environments; thirdly, to classify and compare existing scheduling algorithms, supplemented with illustrative examples; fourthly, to rationalize the efficacy of these algorithms and derive insights from the survey findings; and fifthly, to discuss unresolved challenges and outline prospective research avenues in fog computing and IoE domains. The study addressed a spectrum of issues pertaining to resource allocation and task scheduling across fog computing, IoE, cloud computing, and related paradigms. Moreover, the article delved into the significance of simulation and modeling tools, underscoring the utility of advanced methodologies such as deep reinforcement learning in navigating the intricate landscape of task scheduling. In addition to offering a comprehensive review, the article furnished a plethora of references and recommendations for further exploration in this burgeoning field.

Bansal, Aggarwal & Aggarwal (2022) explored diverse scheduling approaches utilized in fog computing, categorizing them into static, dynamic, heuristic, and hybrid approaches. They revealed that 17% of researchers employed static methods, while 23% utilized dynamic approaches, with heuristic approaches being the most prevalent at 47%, followed by hybrid strategies at 13%. Researchers primarily focused on QoS parameters such as response time (19%), cost and energy consumption (18%), and makespan (16%).

Subbaraj & Thiyagarajan (2021) examined the challenges posed by the growing number of IoT devices, emphasizing that transmitting real-time sensor data to cloud data centers incurs security risks and high costs. Fog computing mitigates these issues by distributing computing resources closer to the devices, which shifts the focus to resource allocation and task scheduling across heterogeneous fog devices. The authors proposed a task-resource mapping model that uses multi-criteria decision-making (MCDM) to map application modules onto fog devices so that application performance requirements are met. The model considers multiple performance metrics, including MIPS, RAM, storage, latency, bandwidth, trust, and cost. Two MCDM techniques, the Analytic Hierarchy Process (AHP) and the Technique for Order Preference by Similarity to Ideal Solution (TOPSIS), were employed to evaluate fog device performance and allocate tasks. Simulation results showed that the proposed method outperformed alternative scheduling algorithms in fog environments, and that it accounts for performance, security, and cost metrics when making scheduling decisions.
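To show how TOPSIS ranks candidate devices, the sketch below implements the textbook procedure: vector normalization, weighting, and closeness to the ideal solution. The device values and the criterion weights are invented for illustration. In an AHP-plus-TOPSIS pipeline the weights would typically come from AHP pairwise comparisons, which are not reproduced here.

```python
import math


def topsis(matrix, weights, benefit):
    """matrix: one row per alternative, one column per criterion.
    benefit[j] is True when larger is better for criterion j, False for costs.
    Returns a closeness score in [0, 1] per alternative (higher is better).
    Assumes the alternatives are not all identical."""
    n_crit = len(weights)
    norms = [math.sqrt(sum(row[j] ** 2 for row in matrix)) for j in range(n_crit)]
    weighted = [
        [weights[j] * row[j] / norms[j] for j in range(n_crit)] for row in matrix
    ]
    columns = list(zip(*weighted))
    ideal = [max(c) if benefit[j] else min(c) for j, c in enumerate(columns)]
    worst = [min(c) if benefit[j] else max(c) for j, c in enumerate(columns)]
    scores = []
    for row in weighted:
        d_best = math.dist(row, ideal)    # distance to the ideal solution
        d_worst = math.dist(row, worst)   # distance to the anti-ideal solution
        scores.append(d_worst / (d_best + d_worst))
    return scores


# Hypothetical fog devices described by (MIPS, RAM in GB, latency in ms).
devices = [
    [2000, 8, 10],   # device A: best on every criterion
    [1000, 2, 50],   # device B: worst on every criterion
    [1500, 4, 20],   # device C: in between
]
scores = topsis(devices, weights=[0.5, 0.2, 0.3], benefit=[True, True, False])
```

A scheduler following this approach would map the next module to the device with the highest closeness score and recompute the scores as device state changes.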

Kaur, Kumar & Kumar (2021) conducted an investigation into the intricacies of task scheduling within Fog computing, shedding light on the obstacles associated with accommodating dynamic user demands amid resource limitations. Their study underscored the challenges arising from the heterogeneous nature of Fog nodes, alongside constraints pertaining to task deadlines, cost considerations, and energy constraints. In response to these complexities, the article proposed a comprehensive taxonomy of research challenges and pinpointed notable gaps in existing methodologies. Additionally, it examined prevalent solutions, conducted a meta-analysis of quality of service parameters, and scrutinized the tools employed for implementing task scheduling algorithms in Fog environments. By undertaking this systematic review, the authors aimed to furnish researchers with valuable insights for identifying specific research gaps and delineating future avenues to enhance scheduling efficacy in Fog computing landscapes.

Wu et al. (2022) proposed an innovative solution named Improved Parallel Genetic Algorithm for IoT Service Placement (IPGA-SPP) to tackle the IoT service placement problem (SPP) within fog computing. To mitigate the risk of genetic algorithms converging to local optima, IPGA-SPP was parallelized with shared memory and elitist operators, thereby enhancing its performance. The approach addressed load balancing by considering resource distribution and prioritizing service execution to minimize latency. Moreover, IPGA-SPP treated SPP as a multi-objective problem, maintaining a set of Pareto solutions to optimize service latency, cost, resource utilization, and service time simultaneously. A notable aspect of this scheme was the integration of a two-way trust management mechanism to ensure trustworthiness between clients and service providers, a crucial yet often overlooked aspect in fog computing solutions. Through simulation in a synthetic fog environment, IPGA-SPP demonstrated an average performance improvement of 8.4% compared to existing methods like CSA-FSPP, GA-PSO, EGA, and WOA-FSP. This approach offers a comprehensive solution that considers latency, cost, and trust aspects, effectively addressing the challenges of IoT service placement while prioritizing Quality of Service (QoS) and security.
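Maintaining a set of Pareto solutions relies on the notion of dominance between candidate placements. The following sketch filters a list of candidates down to its non-dominated set, assuming every objective is to be minimized; the two-objective (latency, cost) tuples are hypothetical and the code is a generic illustration rather than part of IPGA-SPP.

```python
def dominates(a, b):
    """a dominates b if it is no worse in every objective and strictly
    better in at least one (all objectives are minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(solutions):
    """Keep only the solutions that no other solution dominates."""
    return [
        s for s in solutions
        if not any(dominates(other, s) for other in solutions)
    ]


# Hypothetical placements scored as (latency, cost).
candidates = [(10, 5), (8, 7), (12, 6), (9, 9)]
front = pareto_front(candidates)
```

Here `(12, 6)` is dominated by `(10, 5)` and `(9, 9)` by `(8, 7)`, leaving two trade-off solutions from which a decision maker or a secondary criterion would choose.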

Table 3 provides a comparative summary of studies focusing on priority-based heuristic approaches for task scheduling.

| Study | General approach | Performance & optimization | Implementation & evaluation | Performance metrics | Strengths | Limitations |
|---|---|---|---|---|---|---|
| Fahad et al. (2022) | MQP based preemptive task scheduling approach | Balanced task allocation for latency-sensitive and less latency-sensitive applications, addressing task starvation for less important tasks | Smart traffic management case study; Simulation results compared to other scheduling algorithms | Service latencies for long tasks; Average percentage reduction in latency | Reduced service latencies; Separate task queues for each category | Limited mention of scalability and real-world deployment |
| Madhura, Elizabeth & Uthariaraj (2021) | List-based task scheduling algorithm | Minimizing makespan and computation cost | Experimentation with random graphs and real-world data | Average scheduling length ratio, speedup, makespan | Superior performance compared to existing algorithms | Lack of scalability analysis |
| Movahedi, Defude & Hosseininia (2021) | OppoCWOA utilizing the WOA | Integration of opposition-based learning and chaos theory to enhance WOA performance | Comparative experimentation with other meta-heuristic optimization algorithms | Time and energy consumption optimization | Improved performance compared to other algorithms | Limited discussion on scalability and real-world applicability |
| Hoseiny et al. (2021) | PGA algorithm considering task requirements and fog/cloud node heterogeneity | Optimization of computation time, energy consumption, and percentage of deadline satisfied tasks | Simulations demonstrating algorithm superiority over existing strategies | Overall computation time, energy consumption, and percentage of satisfied tasks | Efficient resource allocation; Utilization of hybrid approach and genetic algorithm | Lack of extensive scalability analysis |
| Choudhari, Moh & Moh (2018) | Task scheduling algorithm based on priority levels for fog layer | Supporting increasing number of IoT devices, improving performance, and reducing costs | Performance evaluation showcasing reduction in overall response time and total cost | Overall response time and total cost | Reduced response time and decreased total cost | Limited scalability discussion |
| Jamil et al. (2022) | Comparative study exploring various scheduling algorithms, optimization metrics, and evaluation tools | Reviewing fog computing and IoE paradigms, classification and comparison of scheduling algorithms, discussion of open issues | N/A | N/A | Comprehensive review providing valuable insights and recommendations | No specific implementation or evaluation |
| Bansal, Aggarwal & Aggarwal (2022) | Exploration of scheduling approaches in fog computing categorized into static, dynamic, heuristic, and hybrid approaches | Examination of scheduling approaches, prevalence analysis, discussion of tools and open issues | N/A | N/A | Thorough categorization and analysis | Lack of specific implementation details |
| Subbaraj & Thiyagarajan (2021) | Proposed MCDM-based scheduling algorithm for module mapping in fog environments | Utilization of multi-criteria decision-making approaches for module mapping, considering Fog node heterogeneity | Simulation-based evaluation; Performance-oriented task-resource mapping | MIPS, RAM & storage, latency, bandwidth, trust, cost | Superior performance over other scheduling algorithms | Limited scalability analysis |
| Kaur, Kumar & Kumar (2021) | Exploration of challenges and gaps in task scheduling in Fog computing | Comprehensive taxonomy of research issues, meta-analysis on QoS parameters, review of scheduling tools | N/A | N/A | Identification of research problems and future directions | No specific implementation or evaluation |
| Wu et al. (2022) | Introduction of IPGA for IoT SPP in fog computing | Parallel configuration with shared memory and elitist operators, multi-objective approach, trust management mechanism | Simulation in synthetic fog environment | Service latency, cost, resource utilization, service time | Enhanced deployment process, latency-aware solution | Limited discussion on real-world applicability and scalability |

Greedy heuristics

In task scheduling, where the efficient allocation of tasks to resources is crucial, greedy heuristics provide a rapid and straightforward approach, prioritizing tasks based on specific criteria without guaranteeing the best solution (Azizi et al., 2022). Standard methods like EDF, SPT, and MRPT exemplify this approach, aiming to find satisfactory solutions quickly. While advantageous for their simplicity, efficiency, and adaptability, greedy heuristics cannot guarantee optimality and can be sensitive to initial conditions, potentially resulting in suboptimal outcomes. Nevertheless, they excel in real-time scheduling, large-scale problems, and simpler scenarios, offering valuable solutions despite their limitations. Awareness of these strengths and weaknesses is essential for leveraging greedy heuristics effectively in task scheduling.
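As a minimal sketch of the greedy idea, the following Python snippet orders tasks by earliest deadline first (EDF) and places each one on the node that would finish it soonest. The task tuples, node speeds, and the `edf_greedy` function are illustrative assumptions and are not taken from any of the surveyed studies.

```python
from typing import Dict, List, Tuple

# (task name, workload in million instructions, deadline in seconds): hypothetical data
Task = Tuple[str, float, float]

def edf_greedy(tasks: List[Task], node_mips: List[float]) -> Dict[str, Tuple[int, float]]:
    """Assign tasks in EDF order to the node with the earliest finish time."""
    ready_at = [0.0] * len(node_mips)  # time at which each node becomes free
    schedule: Dict[str, Tuple[int, float]] = {}
    for name, load, _deadline in sorted(tasks, key=lambda t: t[2]):
        # Greedy choice: the node that completes this task first (ties go to the lower index).
        finish, node = min(
            (ready_at[i] + load / node_mips[i], i) for i in range(len(node_mips))
        )
        ready_at[node] = finish
        schedule[name] = (node, finish)
    return schedule

tasks = [("t1", 400.0, 4.0), ("t2", 100.0, 1.0), ("t3", 300.0, 2.0)]
schedule = edf_greedy(tasks, node_mips=[100.0, 200.0])
```

Each decision is made once and never revisited, which is what keeps the heuristic fast and also what prevents it from guaranteeing an optimal schedule.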

Azizi et al. (2022) developed a mathematical formulation for the task scheduling problem aimed at minimizing the overall energy consumption of fog nodes (FNs) while ensuring the fulfillment of Quality of Service (QoS) criteria for IoT tasks and minimizing deadline violations. They introduced two semi-greedy-based algorithms, namely priority-aware semi-greedy (PSG) and PSG with a multistart procedure (PSG-M), designed to efficiently allocate IoT tasks to FNs. Evaluation metrics encompassed the percentage of IoT tasks meeting their deadlines, total energy consumption, total deadline violation time, and system makespan. Results from experiments showcased that the proposed algorithms enhanced the percentage of tasks meeting deadlines by up to 1.35 times and reduced total deadline violation time by up to 97.6% compared to the next-best outcomes. Moreover, optimization of fog resource energy consumption and system makespan was achieved. The article underscores the complexities inherent in deploying fog computing resources for IoT applications with real-time constraints, introduces novel algorithms to address these challenges, and outlines potential avenues for future research.
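The semi-greedy idea with a multistart procedure can be illustrated with a generic sketch. This is not the PSG or PSG-M algorithm of Azizi et al. (2022); it is a simplified construction that minimizes makespan only, in which each task picks at random among the `rcl_size` best nodes (a restricted candidate list) and the construction is repeated several times. All names, loads, and parameters are assumptions made for illustration.

```python
import random
from typing import List, Tuple

def semi_greedy(loads: List[float], speeds: List[float],
                rcl_size: int, rng: random.Random) -> Tuple[List[int], float]:
    """Build one assignment; each task picks randomly among the rcl_size best nodes."""
    ready = [0.0] * len(speeds)
    assignment = []
    for load in loads:
        # Rank nodes by the finish time they would give this task.
        ranked = sorted(range(len(speeds)), key=lambda i: ready[i] + load / speeds[i])
        node = rng.choice(ranked[:rcl_size])  # restricted candidate list
        ready[node] += load / speeds[node]
        assignment.append(node)
    return assignment, max(ready)

def multistart(loads, speeds, rcl_size=2, starts=20, seed=0):
    """Repeat the semi-greedy construction and keep the run with the smallest makespan."""
    rng = random.Random(seed)
    runs = (semi_greedy(loads, speeds, rcl_size, rng) for _ in range(starts))
    return min(runs, key=lambda r: r[1])

loads = [4.0, 3.0, 3.0, 2.0, 2.0, 2.0]
speeds = [1.0, 1.0, 1.0]
pure_greedy = semi_greedy(loads, speeds, rcl_size=1, rng=random.Random(0))
best = multistart(loads, speeds)
```

With `rcl_size=1` the construction degenerates to a pure greedy rule; larger values trade some greediness for diversity across restarts.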

Zavieh et al. (2023) introduced a novel methodology termed the Fuzzy Inverse Markov Data Envelopment Analysis Process (FIMDEAP) to tackle the task scheduling and energy consumption challenges prevalent in cloud computing environments. By integrating the advantages of Fuzzy Inverse Data Envelopment Analysis (FIDEA) and Fuzzy Markov Decision Process (FMDP) techniques, this approach adeptly selected physical and virtual machines while operating under fuzzy conditions. Data representation utilized triangular fuzzy numbers, and problem-solving relied on the alpha-cut method. The authors presented a mathematical optimization model and provided a numerical example for clarification purposes. Furthermore, the performance of FIMDEAP was rigorously assessed through simulations conducted in a cloud environment. Results exhibited superior performance compared to existing methods like PSO+ACO and FBPSO+FBACO across critical metrics such as energy consumption, execution cost, response time, gain of cost, and makespan. This innovative method not only enhanced energy optimization but also improved response time and makespan, thereby promoting the adoption of environmentally sustainable practices in cloud networks. Future research avenues were suggested, including the exploration of additional fuzzy methods, integration of machine learning techniques, and incorporation of request forecasting to further refine resource allocation and task processing optimization strategies.

Tang et al. (2023) conducted a study on AI-driven IoT applications within a collaborative cloud-edge environment, leveraging container technology. They introduced a novel container-based task scheduling algorithm dubbed PGT, which integrates a priority-aware greedy strategy with the Technique for Order Preference by Similarity to Ideal Solution (TOPSIS) multi-criteria approach. This algorithm effectively manages containers across both cloud and edge servers within a unified platform, facilitating the deployment of IoT application services within these containers. Tasks are prioritized based on their deadline constraints, with higher precedence given to tasks with shorter deadlines. The proposed algorithm takes into account various performance indicators, including task response time, energy consumption, and task execution cost, to determine the optimal container for task execution. Through simulations conducted in a collaborative cloud-edge environment, the study demonstrated that the proposed scheduling approach surpasses four baseline algorithms in enhancing the Quality of Service (QoS) satisfaction rate, reducing energy consumption, minimizing penalty costs, and mitigating total violation time.
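To make the TOPSIS step concrete, the sketch below ranks candidate containers when every criterion is a cost (lower is better), as with response time, energy consumption, and execution cost. It is a textbook TOPSIS computation and not the PGT implementation; the weights, the candidate values, and the `topsis_cost` helper are hypothetical.

```python
import math
from typing import List

def topsis_cost(matrix: List[List[float]], weights: List[float]) -> List[float]:
    """Return TOPSIS closeness scores when every criterion is a cost (lower is better)."""
    cols = list(zip(*matrix))
    norms = [math.sqrt(sum(v * v for v in col)) for col in cols]
    # Vector-normalize each column, then apply the criterion weights.
    weighted = [[w * v / n for v, w, n in zip(row, weights, norms)] for row in matrix]
    wcols = list(zip(*weighted))
    ideal = [min(c) for c in wcols]  # best value of each cost criterion
    anti = [max(c) for c in wcols]   # worst value of each cost criterion
    scores = []
    for row in weighted:
        d_best = math.dist(row, ideal)
        d_worst = math.dist(row, anti)
        scores.append(d_worst / (d_best + d_worst))
    return scores

# Rows: candidate containers; columns: response time (s), energy (J), cost. Hypothetical values.
containers = [[2.0, 50.0, 0.30], [1.0, 40.0, 0.20], [3.0, 60.0, 0.40]]
scores = topsis_cost(containers, weights=[0.5, 0.3, 0.2])
best_container = max(range(len(scores)), key=scores.__getitem__)
```

A score close to 1 means the candidate lies near the ideal point and far from the anti-ideal point. The sketch assumes the candidates are not all identical, since the score is otherwise undefined.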

Haja, Vass & Toka (2019) outlined their efforts towards improving network-aware big data task scheduling in distributed systems. They proposed several resource orchestration algorithms designed to address challenges related to network resources in geographically distributed topologies, specifically focusing on reducing end-to-end latency and efficiently allocating network bandwidth. The heuristic algorithms they introduced demonstrated enhanced performance for big data applications compared to default methods. These solutions were implemented and evaluated within a simulation environment, showcasing the improved quality of big data application outcomes. On a related note, the text introduced concepts like edge-cloud computing and mobile edge computing, which involved deploying computing resources closer to end devices to minimize network latency. These approaches, integrated with carrier networks, supported various 5G and beyond applications like Industry 4.0, Tactile Internet, remote driving, and extended reality, facilitating low-latency communication and task offloading from end devices to the distributed environment.

Liu, Lin & Buyya (2022) tackled the scheduling issue by framing it as a modified version of bin-packing and proposed an algorithm driven by heuristics to decrease inter-node communication. They introduced D-Storm, a prototype scheduler developed using the Apache Storm framework, which integrated a self-adaptive MAPE-K (Monitoring, Analysis, Planning, Execution, Knowledge) architecture. By conducting assessments with real-world applications like Twitter sentiment analysis, the researchers illustrated that D-Storm outperformed both the existing resource-aware scheduler and the default Storm scheduler. Notably, D-Storm achieved reductions in inter-node traffic and application latency while also realizing resource savings through task consolidation. This contribution enhances the management and scheduling of data streams in cloud and sensor networks, showcasing tangible enhancements in performance and resource utilization.
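The bin-packing view of scheduling can be sketched with the classic first-fit decreasing heuristic. This is a plain stand-in and not the modified bin-packing algorithm used in D-Storm, which additionally accounts for inter-node communication; the task names, resource demands, and node capacity are assumptions for illustration.

```python
from typing import Dict, List

def first_fit_decreasing(demands: Dict[str, float], capacity: float) -> List[List[str]]:
    """Pack tasks onto equal-capacity nodes, opening a new node only when needed."""
    nodes: List[List[str]] = []
    free: List[float] = []
    # Place the most demanding tasks first.
    for task, need in sorted(demands.items(), key=lambda kv: kv[1], reverse=True):
        if need > capacity:
            raise ValueError(f"{task} does not fit on any node")
        for i, room in enumerate(free):
            if need <= room:  # first node with enough remaining capacity
                nodes[i].append(task)
                free[i] -= need
                break
        else:
            nodes.append([task])
            free.append(capacity - need)
    return nodes

demands = {"spout": 50.0, "parse": 30.0, "score": 40.0, "sink": 20.0, "join": 60.0}
placement = first_fit_decreasing(demands, capacity=100.0)
```

Consolidating tasks onto fewer nodes is what yields the resource savings; a communication-aware variant would also prefer to co-locate tasks that exchange the most data.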

Chen et al. (2023) addressed the challenges of task offloading and resource scheduling in vehicular edge computing, proposing a Multi-Aerial Base Station Assisted Joint Computation Offload algorithm based on D3QN in Edge VANETs (MAJVD3). This algorithm utilized SDN Controllers to efficiently schedule resources and tackle issues such as latency, energy consumption, and QoS degradation. The proposed method was evaluated against baseline algorithms, showing improved network utility and reduced task latency and energy consumption. The article discussed related work, the system model, optimization problem formulation, the proposed algorithm, simulation results, and analysis. The authors declared no competing interests and acknowledged funding support.

Adewojo & Bass (2023) proposed a novel load balancing algorithm aimed at efficiently distributing workload among virtual machines for three-tier web applications. The algorithm combines five carefully selected server metrics to achieve load distribution. Experimental evaluations were conducted on a private cloud utilizing OpenStack to compare the proposed algorithm’s performance with a baseline algorithm and a round-robin approach. Performance was assessed under scenarios involving simulated resource failures and flash crowds, with response times recorded throughout. Results indicated a 12.5% improvement in average response times compared to the baseline algorithm and a 22.3% improvement compared to the round-robin algorithm during flash crowds. Additionally, average response times improved by 20.7% compared to the baseline algorithm and 21.4% compared to the round-robin algorithm during resource failure situations. These experiments underscored the novel algorithm’s resilience to fluctuating loads and resource failures, showcasing its effectiveness in dynamic scenarios. The authors also acknowledged limitations and proposed avenues for future research to further enhance the algorithm’s efficacy.

Table 4 provides a comparative summary of studies focusing on greedy heuristic approaches for task scheduling.

| Study | General approach | Performance & optimization | Implementation & evaluation | Performance metrics | Strengths | Limitations |
|---|---|---|---|---|---|---|
| Azizi et al. (2022) | Mathematical formulation, semi-greedy algorithms | Minimization of energy consumption, meeting QoS requirements | Simulation experiments | Deadline compliance, energy consumption, makespan | Improvements in task deadline meeting, energy consumption | Limited scalability discussion |
| Zavieh et al. (2023) | FIMDEAP | Optimization of multiple metrics using fuzzy methods | Mathematical optimization model, cloud simulation | Energy consumption, execution cost, response time | Outperforms existing methods in key metrics | Requires exploration of additional fuzzy methods |
| Tang et al. (2023) | PGT container-based scheduling algorithm | Improvement of QoS, energy consumption | Simulation in cloud-edge environment | QoS satisfaction rate, energy consumption | Outperforms baseline algorithms | Real-world applicability may need further investigation |
| Haja, Vass & Toka (2019) | Resource orchestration algorithms | Reduction of latency, bandwidth optimization | Simulation experiments | End-to-end latency, bandwidth utilization | Enhanced performance compared to default methods | Limited discussion on real-world deployment |
| Liu, Lin & Buyya (2022) | D-Storm scheduler for data streams | Minimization of inter-node communication, resource savings | Evaluation with real-world applications | Inter-node traffic, application latency | Improvements in performance and resource utilization | Limited scalability discussion |
| Chen et al. (2023) | MAJVD3 algorithm for vehicular edge computing | Reduction of latency, energy consumption | Simulation experiments | Network utility, task latency, energy consumption | Improvements in network utility and latency | Real-world deployment considerations may be needed |
| Adewojo & Bass (2023) | Load balancing algorithm for web applications | Efficient workload distribution | Experimental characterization in private cloud | Average response time under varying scenarios | Resilience to fluctuating loads and failures | Limited scalability discussion |

Metaheuristics

Metaheuristic approaches to task scheduling optimization provide adaptable and versatile solutions by heuristically exploring the search space (Aron & Abraham, 2022; Gupta & Singh, 2023). Examples include genetic algorithms, particle swarm optimization, simulated annealing, and ant colony optimization. These methods iteratively refine task assignments using feedback mechanisms to converge towards optimal or near-optimal solutions (Khan et al., 2019). Metaheuristics excel in complex and dynamic IoT environments where traditional scheduling approaches may be insufficient, handling diverse optimization objectives and adapting to changing conditions. However, they can demand substantial computational resources and parameter tuning, rendering them less suitable for real-time applications with stringent performance constraints. Metaheuristic approaches used in task scheduling include simulated annealing (SA), tabu search (TS), particle swarm optimization (PSO), genetic algorithm (GA), harmony search (HS), differential evolution (DE), firefly algorithm (FA), bat algorithm (BA), cuckoo search (CS), and WOA.
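The iterative refinement that metaheuristics share can be seen in a small simulated annealing sketch that moves one task at a time between nodes to reduce makespan. The loads, the cooling schedule, and the function names are assumptions chosen for illustration and do not correspond to any surveyed method.

```python
import math
import random
from typing import List, Tuple

def makespan(assign: List[int], loads: List[float], n_nodes: int) -> float:
    """Largest total load placed on any single node."""
    busy = [0.0] * n_nodes
    for task, node in enumerate(assign):
        busy[node] += loads[task]
    return max(busy)

def anneal(loads, n_nodes, steps=2000, t0=5.0, cooling=0.995, seed=1) -> Tuple[List[int], float]:
    rng = random.Random(seed)
    current = [0] * len(loads)  # start with every task on node 0
    cur_cost = makespan(current, loads, n_nodes)
    best, best_cost, temp = current[:], cur_cost, t0
    for _ in range(steps):
        cand = current[:]
        cand[rng.randrange(len(loads))] = rng.randrange(n_nodes)  # move one task
        cost = makespan(cand, loads, n_nodes)
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if cost <= cur_cost or rng.random() < math.exp((cur_cost - cost) / temp):
            current, cur_cost = cand, cost
            if cost < best_cost:
                best, best_cost = cand[:], cost
        temp *= cooling  # geometric cooling
    return best, best_cost

loads = [5.0, 3.0, 2.0, 4.0, 6.0, 1.0, 2.0]
best, best_cost = anneal(loads, n_nodes=3)
```

The step count, initial temperature, and cooling rate are exactly the kind of parameters that need tuning, which illustrates the overhead noted above.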

Hosseinioun et al. (2020) proposed an energy-aware method that employs the Dynamic Voltage and Frequency Scaling (DVFS) technique to reduce energy consumption while maximizing resource utilization. To construct valid task sequences, they used a hybrid Invasive Weed Optimization and Culture (IWO-CA) evolutionary algorithm. Experimental results indicated that the proposed algorithm improved on existing methods in terms of energy consumption. The article also addressed the challenges encountered in cloud computing and underscored the role of fog computing in mitigating them, particularly in minimizing latency and energy consumption, and it emphasized the importance of task scheduling in distributed systems.

Hosseini, Nickray & Ghanbari (2022) presented a scheduling algorithm called PQFAHP, which combines a Priority Queue, Fuzzy logic, and the Analytical Hierarchy Process (AHP). PQFAHP integrates diverse priorities and ranks tasks according to multiple criteria, including dynamic scheduling parameters such as completion time, energy consumption, RAM usage, and deadlines. Experimental outcomes underscored the efficacy of the proposed method in integrating multiple criteria for scheduling tasks, surpassing several standard algorithms in key performance metrics including waiting time, delay, service level, mean response time, and the number of scheduled tasks on the mobile fog computing (MFC) platform. The study reported notable reductions in average waiting time, delay, and energy consumption, alongside enhancements in service level. It emphasized the importance of addressing the challenges faced by IoT in MFC environments and proposed a comprehensive solution to optimize resource allocation, minimize execution costs, and enhance system performance. Additionally, the article provided a critical review of existing methodologies in the field, underscoring the need for more effective scheduling algorithms to meet the demands of mobile fog computing.

Apat et al. (2019) focused on mapping independent tasks to the fog layer, where the proposed algorithm demonstrated better performance compared to the cloud data center. The system resources considered included CPU, RAM, etc., with task priorities determined based on deadlines. Additionally, the assumption was made that once a task was assigned to a specific node, it remained there until completion. The article introduced a three-layer architecture designed for efficient task scheduling, particularly in applications like healthcare within smart homes. The text also delved into the limitations of cloud computing, citing issues like connection interruptions and high latency, especially concerning IoT devices. It underscored the increasing significance of IoT devices in daily life while highlighting the mismatch between the centralized nature of cloud computing and the decentralized nature of IoT, which often led to latency issues impacting service quality.

Jalilvand Aghdam Bonab & Shaghaghi Kandovan (2022) presented a novel framework for QoS-aware resource allocation and mobile edge computing (MEC) in multi-access heterogeneous networks, with the objective of maximizing overall system energy efficiency while ensuring user QoS requirements. The framework introduced a customized objective function tailored specifically for the multi-server MEC environment, taking into account computation and communication models to minimize task completion time and enhance energy efficiency within specified delay constraints. By integrating continuous carrier allocation, user association variables, and interference coordination into the objective function, the core optimization problem was reformulated as a mixed integer nonlinear programming (MINLP) task. Moreover, a carrier-matching algorithm was proposed to tackle constraints related to user data rate and transmission power, thereby optimizing the channel allocation strategy. Through extensive simulations, the proposed approach exhibited notable enhancements in energy efficiency and network throughput, particularly evident in multi-source scenarios.

Huang & Wang (2020) introduced a novel framework, referred to as GO, designed to tackle large-scale bilevel optimization problems (BOPs) efficiently. Divided into two main phases, GO first identified and categorized interactions between upper-level and lower-level variables into three subgroups. These subgroups dictated the optimization approach utilized in the subsequent phase. For instance, single-level evolutionary algorithms (EAs) were applied to subgroups containing only upper-level or lower-level variables. At the same time, a bilevel EA was employed for subgroups with both types of variables. Additionally, a criterion was introduced to address situations where multiple optima existed within specific subgroups, enhancing the algorithm’s efficacy. The effectiveness of GO was validated through tests on scalable problems and its application to resource pricing in mobile edge computing. The article underscored the significance of considering interactions between variables in BOPs and provided a practical framework, offering a promising solution to address such challenges efficiently.

Abdel-Basset et al. (2020) introduced a novel approach termed HHOLS for energy-aware task scheduling in fog computing (TSFC), with a focus on enhancing QoS in Industrial Internet of Things (IIoT) applications. The methodology commenced by delineating a layered fog computing model, emphasizing its heterogeneous architecture to accommodate diverse computing resources effectively. To tackle the discrete TSFC problem, the standard Harris Hawks optimization algorithm was adapted through normalization and scaling techniques. Additionally, a swap mutation mechanism was employed to distribute workloads evenly among virtual machines, thereby enhancing solution quality. Notably, the integration of a local search strategy further augmented the performance of HHOLS. Comparative evaluations against other metaheuristic approaches across multiple performance metrics including energy consumption, makespan, cost, flow time, and carbon dioxide emission rate showcased the superior efficacy of HHOLS. The study underscored the pivotal role of fog computing in mitigating QoS challenges within IIoT applications, particularly amidst the escalating data influx to cloud computing. Furthermore, it emphasized the imperative of leveraging sustainable energy sources for fog computing servers to ensure long-term viability and efficiency.

Yadav, Tripathi & Sharma (2022a) introduced a hybrid approach for task scheduling in fog computing, merging a metaheuristic algorithm, fireworks algorithm (FWA), with a heuristic algorithm known as heterogeneous earliest finish time (HEFT). Their combination aimed to minimize makespan and cost factors through bi-objective optimization. Experiments were conducted on distinct scientific workflows to compare the performance of the proposed BH-FWA algorithm against other approaches. Results from exhaustive simulations demonstrated the superiority of the BH-FWA algorithm in fog computing networks, as evidenced by metrics including makespan, cost, and throughput. The article contributed to the field by presenting a novel solution for task scheduling in fog computing environments and provided references for further exploration of related topics.

Abdel-Basset et al. (2023) introduced a multi-objective task scheduling strategy, denoted as M2MPA, founded on a modified marine predators algorithm (MMPA). This approach aimed to concurrently minimize energy consumption and makespan while adhering to the Pareto optimality theory. M2MPA enhanced the original MMPA by integrating a polynomial crossover operator to bolster exploration and an adaptive CF parameter to refine exploitation. The efficacy of M2MPA was assessed using eighteen tasks featuring varying scales and heterogeneous workloads allocated to two hundred fog devices. Through comparative experiments involving six established methodologies, M2MPA showcased significant superiority across diverse performance metrics, encompassing carbon dioxide emission rate, flowtime, makespan, and energy consumption. These findings underscored M2MPA’s efficacy in optimizing task scheduling within fog computing environments, thus offering a promising avenue for managing data from IoT devices in cyber-physical-social systems.

Abd Elaziz, Abualigah & Attiya (2021) introduced AEOSSA, a modified artificial ecosystem-based optimization (AEO) technique integrated with Salp Swarm Algorithm (SSA) operators, aimed at improving task scheduling for IoT requests in cloud-fog environments. Through this modification, the algorithm’s exploitation capability was enhanced, facilitating the search for optimal solutions. Evaluation of the AEOSSA approach utilized various synthetic and real-world datasets to assess its performance, comparing it against established metaheuristic methods. Results demonstrated the effectiveness of AEOSSA in tackling the task scheduling problem, surpassing other methods in metrics such as makespan time and throughput. This highlighted AEOSSA’s potential as an efficient solution for scheduling IoT tasks in cloud-fog environments.

Yadav, Tripathi & Sharma (2022b) presented a modified fireworks algorithm that incorporated opposition-based learning and differential evolution approaches to address task scheduling optimization in fog computing environments. By utilizing the differential evolution operator, the algorithm aimed to overcome local optima, while opposition-based learning enhanced the diversity of the population’s solution set. The method focused on minimizing both makespan and cost, thereby improving resource utilization efficiency. Through experiments conducted on various workloads, the performance of the proposed approach was compared with several recent metaheuristic approaches, demonstrating its efficacy in task scheduling optimization. The comparison underscored the significance of the proposed method in enhancing optimization outcomes.
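Opposition-based learning can be sketched in a few lines: for a candidate x within bounds [lower, upper], the opposite point is lower + upper - x, and the better of each pairing is retained. The snippet below shows only this generic mechanism, not the authors' modified fireworks algorithm; the population, bounds, and cost function are hypothetical.

```python
from typing import Callable, List, Sequence

def opposite(x: Sequence[float], lower: Sequence[float], upper: Sequence[float]) -> List[float]:
    """Mirror a candidate across the centre of the search box."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]

def obl_select(pop: List[List[float]], lower: Sequence[float], upper: Sequence[float],
               cost: Callable[[Sequence[float]], float]) -> List[List[float]]:
    """Merge a population with its opposites and keep the len(pop) cheapest candidates."""
    merged = list(pop) + [opposite(x, lower, upper) for x in pop]
    return sorted(merged, key=cost)[:len(pop)]

lower, upper = [0.0, 0.0], [10.0, 10.0]
population = [[1.0, 4.0], [8.0, 9.0]]
selected = obl_select(population, lower, upper, cost=sum)  # toy cost: sum of coordinates
```

Evaluating opposites doubles the number of cost evaluations per generation in exchange for a more diverse starting set.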

Abd Elaziz & Attiya (2021) introduced a novel multi-objective approach, MHMPA, which integrated the marine predators algorithm with the polynomial mutation mechanism for task scheduling optimization in fog computing environments. This approach aimed to strike a balance between makespan and carbon emission ratio based on Pareto optimality, utilizing an external archive to store non-dominated solutions. Additionally, an improved version, MIMPA, using the Cauchy distribution and Levy Flight, was explored to enhance convergence and avoid local minima. Experimental results demonstrated the superiority of MIMPA over the standard version across various performance metrics. However, MHMPA consistently outperformed MIMPA even after integrating the polynomial mutation strategy. The efficacy of MHMPA was further validated through comparisons with well-known multi-objective optimization algorithms, showcasing significant improvements in flow time, carbon emission rate, energy, and makespan. Overall, the study highlighted the effectiveness of MHMPA in addressing task scheduling challenges in fog computing environments.
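An external archive of non-dominated solutions can be maintained with a simple dominance test. The sketch below is a generic illustration for two minimized objectives (makespan and carbon emission) and makes no claim about how MHMPA implements its archive; the candidate values and function names are assumptions.

```python
from typing import List, Tuple

Point = Tuple[float, float]  # (makespan, carbon emission), both minimized

def dominates(a: Point, b: Point) -> bool:
    """a dominates b if it is no worse in every objective and better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))

def update_archive(archive: List[Point], cand: Point) -> List[Point]:
    """Insert cand unless it is dominated or duplicated; evict points it dominates."""
    if any(dominates(p, cand) or p == cand for p in archive):
        return archive
    return [p for p in archive if not dominates(cand, p)] + [cand]

archive: List[Point] = []
for cand in [(10.0, 5.0), (8.0, 7.0), (9.0, 6.0), (12.0, 4.0), (9.0, 5.0), (11.0, 6.0)]:
    archive = update_archive(archive, cand)
```

In this run the candidate (9.0, 5.0) evicts both (10.0, 5.0) and (9.0, 6.0), and (11.0, 6.0) is rejected because (9.0, 5.0) dominates it.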

Saif et al. (2023) introduced a Multi-Objectives Grey Wolf Optimizer (MGWO) algorithm aimed at mitigating delay and reducing energy consumption in fog computing, particularly within fog brokers responsible for task distribution. Through extensive simulations, the efficacy of MGWO was verified, showcasing superior performance in mitigating delay and reducing energy consumption compared to existing algorithms. The article provided an overview of task scheduling challenges in cloud-fog computing and presented the MGWO algorithm as a solution. It compared MGWO with existing algorithms, highlighting its advantages in delay reduction and energy efficiency. The study delved into the mechanics of the MGWO algorithm, explaining its basis in the hunting behavior of grey wolves and detailing key components such as prey tracking, encircling, hunting, and attacking. Additionally, it discussed the algorithm’s fitness function, mutation process, utilization of an external archive, and crowding distance. Simulation results affirmed the algorithm’s efficacy in achieving its objectives.
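Crowding distance, mentioned above, is commonly computed as in NSGA-II: for each objective, the solutions are sorted and every interior solution accumulates the normalized gap between its two neighbours, while boundary solutions receive infinite distance. The following sketch assumes that standard definition, which may differ in detail from the MGWO implementation; the sample points are hypothetical.

```python
import math
from typing import List, Sequence

def crowding_distance(points: Sequence[Sequence[float]]) -> List[float]:
    """NSGA-II style crowding distance for a set of objective vectors."""
    n = len(points)
    dist = [0.0] * n
    for m in range(len(points[0])):
        order = sorted(range(n), key=lambda i: points[i][m])
        lo, hi = points[order[0]][m], points[order[-1]][m]
        # Boundary solutions are always kept, so they get infinite distance.
        dist[order[0]] = dist[order[-1]] = math.inf
        if hi == lo:
            continue
        for k in range(1, n - 1):
            gap = points[order[k + 1]][m] - points[order[k - 1]][m]
            dist[order[k]] += gap / (hi - lo)
    return dist

# (delay, energy) pairs on a hypothetical Pareto front
front = [(1.0, 5.0), (2.0, 3.0), (4.0, 2.0), (5.0, 1.0)]
dist = crowding_distance(front)
```

Solutions with larger distance sit in sparser regions of the front and are usually preferred when the archive must be truncated.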

Abohamama, El-Ghamry & Hamouda (2022) presented a semi-dynamic real-time task scheduling algorithm designed for bag-of-tasks applications within cloud-fog environments. This approach formulated task scheduling as a permutation-based optimization problem, leveraging a modified genetic algorithm to generate task permutations and allocate tasks to virtual machines based on minimum expected execution time. An optimality study demonstrated the algorithm’s comparative performance with optimal solutions. Comparative evaluations against traditional scheduling algorithms, including first fit and best fit, as well as genetic and bees life algorithms, highlighted the proposed algorithm’s superiority in terms of makespan, total execution time, failure rate, average delay time, and elapsed run time. The results showcased the algorithm’s ability to achieve a balanced tradeoff between makespan and total execution cost while minimizing task failure rates, positioning it as an efficient solution for real-time task scheduling in cloud-fog environments.

Nguyen et al. (2020) presented a comprehensive approach to address task scheduling challenges in fog-cloud systems within the IoT context. It began by formulating a general model for the fog-cloud system, considering various constraints such as computation, storage, latency, power consumption, and costs. Subsequently, metaheuristic methods were applied to schedule data processing tasks, treating the problem as a multi-objective optimization task. Simulated experiments validated the practical applicability of the proposed fog-cloud model and demonstrated the effectiveness of metaheuristic algorithms compared to traditional methods like Round-Robin. The study underscored the significance of fog computing in reducing latency and bandwidth usage while also emphasizing the crucial role of cloud services in providing high-performance computation and large-scale storage for IoT data processing.

Mousavi et al. (2022) discussed the importance of addressing the challenges posed by the rapid growth of data and latency-sensitive applications in the IoT era through efficient task scheduling in fog computing environments. The article introduced a bi-objective optimization problem focused on minimizing both server energy consumption and overall response time simultaneously. To address this challenge, the article proposed a novel approach called Directed Non-dominated Sorting Genetic Algorithm II (D-NSGA-II). This algorithm incorporates a recombination operator designed to strike a balance between exploration and exploitation capabilities. The algorithm’s performance was evaluated against other meta-heuristic algorithms, demonstrating its superiority in meeting all requests before their deadlines while minimizing energy consumption. The study underscored the significance of fog computing in enhancing system performance and meeting the demands of IoT applications amidst the exponential growth of data.

Salehnia et al. (2023) proposed an IoT task request scheduling method using the Multi-Objective Moth-Flame Optimization (MOMFO) algorithm to enhance the quality of IoT services in fog-cloud computing environments. The approach aimed to reduce completion and system throughput times for task requests while minimizing energy consumption and CO2 emissions. The proposed scheduling method was evaluated using datasets, and its performance was compared with other optimization algorithms, including PSO, FA, salp swarm algorithms (SSA), Harris Hawks optimizer (HHO), and artificial bee colony (ABC). Experimental results showed that the proposed solution effectively reduced task completion time, throughput time, energy consumption, and CO2 emissions while improving the system’s overall performance. The study highlighted the potential of MOMFO in optimizing task scheduling for IoT applications in fog-cloud environments.

Nazeri, Soltanaghaei & Khorsand (2024) proposed a predictive energy-aware scheduling framework for fog computing, integrating a MAPE-K control model comprising monitor, analyzer, planner, and executor components with a shared knowledge base. The framework introduced an Adaptive Network-based Fuzzy Inference System (ANFIS) in the analyzer component to predict future resource load and a resource management strategy based on the predicted load to reduce energy consumption. Additionally, it combined the Improved Ant Lion Optimizer (ALO) and weighted GWO into a planner component called I-ALO-GWO for workflow scheduling. The framework’s effectiveness was evaluated on IEEE CEC2019 benchmark functions and applied to scientific workflows using the iFogSim tool. Experimental results showed that I-ALO-GWO improved makespan, energy consumption, and total execution cost by significant percentages compared to alternative methods, addressing the inefficiencies and limitations of existing approaches in fog computing.

Memari et al. (2022) aimed to develop an infrastructure for smart home energy management at minimal hardware cost using cloud and fog computing, alongside proposing a latency-aware scheduling algorithm based on virtual machine matching employing meta-heuristics. Leveraging the effectiveness of Tabu search in various optimization problems, a novel algorithm enhanced with approximate nearest neighbor (ANN) and fruit fly optimization (FOA) algorithms was introduced. Through simulation and implementation of a case study, the algorithm’s performance was evaluated considering execution time, latency, allocated memory, and cost function. Comparison outcomes revealed the superior performance of the proposed algorithm when compared to Tabu search, genetic algorithm, PSO, and simulated annealing methods. The research highlighted the importance of proficient task scheduling in both cloud and fog computing realms, emphasizing its role in optimizing resource allocation and diminishing response time in smart home energy management systems. This is particularly crucial in managing substantial data volumes while concurrently minimizing hardware expenses.

Hussain & Begh (2022) aimed to address the challenges of task scheduling in fog-cloud computing by proposing a novel algorithm called HFSGA (Hybrid Flamingo Search with a Genetic Algorithm) designed to minimize costs and enhance QoS. Utilizing seven benchmark optimization test functions, the performance of HFSGA was compared with other established algorithms. The comparison, validated through the Friedman rank test, demonstrated the superiority of HFSGA in terms of percentage of deadline satisfied tasks (PDST), makespan, and cost. Comparative analysis against existing algorithms such as ACO, PSO, GA, Min-CCV, Min-V, and Round Robin (RR) showed HFSGA’s effectiveness in optimizing task scheduling processes. The proposed algorithm was presented as a cost-efficient and QoS-aware solution tailored for fog-cloud environments, offering promising outcomes in improving task scheduling efficiency while minimizing associated costs. The article provided insights into fog-cloud system architecture and underscored the significance of HFSGA in addressing the complexities of task scheduling in such environments.

Dev et al. (2022) addressed the intricate task scheduling challenges arising from the increasing volumes of data in fog computing environments. In their study, they introduced a novel approach named Hybrid PSO and GWO (HPSO_GWO), which combines GWO with PSO. This hybrid meta-heuristic algorithm was developed to effectively allocate tasks to virtual machines (VMs) deployed across fog nodes. The research emphasized the importance of efficient task and resource scheduling in fog computing to ensure optimal QoS. Furthermore, the article referenced related work in the field, providing insights into the ongoing research endeavors aimed at addressing the challenges inherent in fog computing environments.

Javaheri et al. (2022) presented a multi-fog computing architecture tailored for optimizing workflow scheduling within IoT networks, with a focus on mitigating challenges related to fog provider availability and task scheduling efficiency. To address these concerns, the study introduced a hidden Markov model (HMM) designed to predict the availability of fog computing providers. This predictive model was trained using the unsupervised Baum-Welch algorithm and leveraged the Viterbi algorithm to compute fog provider availability probabilities. Subsequently, these probabilities informed the selection of an optimal fog computing provider for scheduling IoT workflows. Additionally, the article proposed an enhanced version of the Harris hawks optimization (HHO) algorithm, termed discrete opposition-based HHO (DO-HHO), tailored specifically for scientific workflow scheduling. Extensive experiments conducted using iFogSim showcased significant reductions in tasks offloaded to cloud computing, instances of missed workflow deadlines, and SLA violations. The architecture comprised IoT networks, fog computing providers, and cloud computing data centers, with a broker node facilitating optimal fog computing resource selection. This approach aimed to enhance workflow scheduling efficiency in fog computing environments, outperforming existing state-of-the-art methods.

Keshavarznejad, Rezvani & Adabi (2021) addressed task offloading as a multi-objective optimization challenge targeting the reduction of total power consumption and task execution delay in the system. Acknowledging the NP-hard complexity of the problem, the researchers employed two meta-heuristic methods: the non-dominated sorting genetic algorithm (NSGA-II) and the Bees algorithm. Through simulations, both meta-heuristic approaches demonstrated resilience in minimizing energy consumption and task execution delay. The proposed techniques effectively balanced offloading probability with the power needed for data transmission, underscoring their efficacy in optimizing task offloading strategies within fog computing environments. The referenced articles covered a broad spectrum of research domains, including mobile cloud computing, fog computing, computation offloading, and optimization methodologies, offering a comprehensive overview of the field’s current landscape and advancements.

Hajam & Sofi (2023) presented an innovative approach to fog computing, focusing on resource allocation and scheduling using the spider monkey optimization (SMO) algorithm. A heuristic initialization-based SMO algorithm was proposed to minimize the total cost of tasks by selecting optimal fog nodes for computation offloading. Three initialization strategies, namely longest job fastest processor (LJFP), shortest job fastest processor (SJFP), and minimum completion time (MCT), were compared in terms of average cost, service time, monetary cost, and cost per schedule. Results indicated the superiority of the MCT-SMO approach over other heuristic-based SMO algorithms and PSO in terms of efficiency and effectiveness in fog computing environments. The study provided valuable insights into enhancing task scheduling and resource allocation in fog computing networks.
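As a rough illustration of heuristic initialization, the sketch below builds LJFP and SJFP seed schedules that a population-based optimizer could start from. The function name and the data are hypothetical, and the cyclic wrap-around used when tasks outnumber nodes is an assumption about how these seeds are commonly constructed, not a detail taken from the reviewed study.

```python
from typing import List, Sequence


def job_processor_seed(
    task_lengths: Sequence[float],
    node_speeds: Sequence[float],
    longest_first: bool = True,
) -> List[int]:
    """Build an LJFP (longest_first=True) or SJFP (longest_first=False) seed.

    Tasks are ranked by length and nodes by speed (fastest first); the i-th
    ranked task goes to the i-th ranked node, wrapping around cyclically
    (assumed behavior when there are more tasks than nodes).
    """
    tasks = sorted(
        range(len(task_lengths)),
        key=lambda t: task_lengths[t],
        reverse=longest_first,
    )
    nodes = sorted(
        range(len(node_speeds)),
        key=lambda n: node_speeds[n],
        reverse=True,
    )
    assignment = [0] * len(task_lengths)
    for rank, task in enumerate(tasks):
        assignment[task] = nodes[rank % len(nodes)]
    return assignment


# Hypothetical workload: four tasks, two fog nodes (node 1 is the faster one).
lengths = [3.0, 9.0, 5.0, 1.0]
speeds = [1.0, 4.0]
ljfp = job_processor_seed(lengths, speeds, longest_first=True)
sjfp = job_processor_seed(lengths, speeds, longest_first=False)
```

Seeding the initial population with such schedules, rather than purely random ones, gives the search a reasonable starting point; which seed works best is an empirical question of the kind the study examined.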

Hussein & Mousa (2020) introduced two nature-inspired meta-heuristic schedulers, ACO and PSO, aimed at optimizing task scheduling and load balancing in fog computing environments for IoT applications, particularly in intelligent city scenarios. The proposed algorithms considered communication cost and response time to distribute IoT tasks across fog nodes effectively. Experimental evaluations compared the performance of the ACO-based scheduler with the PSO-based and RR algorithms. Results demonstrated that the ACO-based scheduler improved response times for IoT applications and achieved efficient load balancing among fog nodes compared to the PSO-based and RR algorithms. The study highlighted the potential of nature-inspired meta-heuristic algorithms in enhancing task scheduling in fog computing environments and outlined future research directions in multi-objective optimization and dynamic IoT scenarios.

Yadav, Tripathi & Sharma (2023) explored the importance of efficient task scheduling in fog computing networks, particularly for minimizing service time and enhancing stability in dynamic fog devices. The study introduced the opposition-based chemical reaction (OBCR) method, a novel approach that combined heuristic ranking, chemical reaction optimization (CRO), and opposition-based learning (OBL). OBCR aimed to optimize task scheduling by ensuring better exploration and exploitation of the solution space while escaping local optima. The method utilized four operators to enhance the stability of dynamic fog devices and optimize task scheduling in fog computing networks. Through extensive simulations, OBCR exhibited superior performance over alternative approaches, effectively reducing service-time latency and improving network stability. This contribution addresses significant challenges in fog computing task scheduling, offering a robust solution with the OBCR method.
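The opposition-based learning component can be sketched with its standard definition: for a candidate x bounded by [lower, upper], the opposite candidate is lower + upper - x, and the better of the two is retained. The code below is a generic sketch of that idea only; the cost function and bounds are hypothetical, and the CRO operators and heuristic ranking of OBCR are not modeled.

```python
from typing import Callable, List, Sequence


def opposite(
    x: Sequence[float], lower: Sequence[float], upper: Sequence[float]
) -> List[float]:
    """Return the opposite point of x within the box [lower, upper]."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]


def keep_better(
    x: Sequence[float],
    lower: Sequence[float],
    upper: Sequence[float],
    cost: Callable[[Sequence[float]], float],
) -> List[float]:
    """Evaluate x and its opposite; keep whichever has the lower cost."""
    x_opp = opposite(x, lower, upper)
    return list(x) if cost(x) <= cost(x_opp) else x_opp


def toy_cost(x: Sequence[float]) -> float:
    """Hypothetical cost: squared distance to an arbitrary target point."""
    target = (8.0, 2.0)
    return sum((xi - ti) ** 2 for xi, ti in zip(x, target))


candidate = [1.0, 9.0]
chosen = keep_better(candidate, [0.0, 0.0], [10.0, 10.0], toy_cost)
```

Because the opposite point lies on the other side of the search space, evaluating both doubles the chance that at least one candidate starts near a good region, which is the exploration benefit usually attributed to OBL.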

Abdel-Basset et al. (2020) introduced a novel approach to task scheduling in fog computing, aiming to enhance the QoS for IoT applications by offloading tasks from the cloud. The study proposed an energy-aware model based on the marine predators algorithm (MPA) for task scheduling in fog computing (TSFC). Three versions of MPA were presented, with the modified MPA (MMPA) demonstrating superior performance over other algorithms. The study evaluated various performance metrics, including energy consumption, makespan, flow time, and carbon dioxide emission rate. It emphasized the significance of fog computing in improving QoS for IoT applications, particularly in domains such as healthcare and smart cities, by optimizing task scheduling strategies.

Kishor & Chakarbarty (2022) proposed a nature-inspired meta-heuristic task offloading algorithm, Smart Ant Colony Optimization (SACO), for offloading IoT-sensor application tasks in a fog environment. The proposed algorithm was compared with RR, the throttled scheduler algorithm, and two bio-inspired algorithms: modified particle swarm optimization (MPSO) and the bee life algorithm (BLA). Numerical results demonstrated a significant improvement in latency with the SACO algorithm compared to RR, throttled, MPSO, and BLA, reducing task offloading time by 12.88%, 6.98%, 5.91%, and 3.53%, respectively. The study highlighted the effectiveness of SACO in optimizing task offloading for IoT-sensor applications in fog computing environments, offering potential enhancements in latency reduction and overall system performance.

Khaledian et al. (2024) proposed a hybrid particle swarm optimization and simulated annealing algorithm (PSO-SA) for task prioritization and optimizing energy consumption and makespan in fog-cloud environments. The proposed approach targeted the task scheduling complexities prevalent in the IoT environment, aiming to enhance operational efficiency and overall performance. Simulation results showcased notable enhancements achieved by the PSO-SA algorithm, with a 5% reduction in energy consumption and a 9% improvement in makespan compared to the baseline algorithm (IKH-EFT). These findings underscored the pivotal role of hybrid optimization strategies in optimizing task allocation and system performance within fog and cloud computing environments.
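One reason simulated annealing is often paired with swarm methods is its acceptance rule, which occasionally accepts a worse schedule so the search can leave a local optimum. The sketch below shows the standard Metropolis acceptance criterion only; how the reviewed PSO-SA algorithm actually interleaves the two methods is not specified here, and the function names are hypothetical.

```python
import math
import random
from typing import Callable


def acceptance_probability(delta: float, temperature: float) -> float:
    """Probability of accepting a move whose cost changes by delta.

    Improving moves (delta <= 0) are always accepted; worsening moves are
    accepted with probability exp(-delta / temperature).
    """
    if delta <= 0:
        return 1.0
    return math.exp(-delta / temperature)


def accept(
    delta: float,
    temperature: float,
    rng: Callable[[], float] = random.random,
) -> bool:
    """Draw a uniform number in [0, 1) and apply the acceptance rule."""
    return rng() < acceptance_probability(delta, temperature)


# The same worsening move is far more likely to be accepted while the
# temperature is high (early search) than when it is low (late search).
p_hot = acceptance_probability(2.0, 10.0)
p_cold = acceptance_probability(2.0, 0.5)
```

As the temperature is lowered over the iterations, the rule gradually shifts the search from exploration toward pure improvement.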

Khiat, Haddadi & Bahnes (2024) addressed task scheduling in a fog-cloud environment and proposed a novel genetic-based algorithm called GAMMR to optimize the balance between total energy consumption and response time. Through simulations conducted on eight datasets of varying sizes, the proposed algorithm’s performance was evaluated. Results indicated that the GAMMR algorithm consistently outperformed the standard genetic algorithm, achieving an average improvement of 3.4% in the normalized function across all tested cases. The references provided in the text offered a comprehensive overview of fog-cloud services, IoT applications, task scheduling strategies, and optimization algorithms in cloud and fog computing environments, contributing to a deeper understanding of current research trends and challenges in the field.

Ahmadabadi, Mood & Souri (2023) evaluated the performance of a newly proposed algorithm in two scenarios. Firstly, the algorithm was compared with several popular multi-objective optimization methods using standard test functions, showing superior performance. Secondly, the algorithm was applied to solve the task scheduling problem in fog-cloud systems within the IoT. The approach incorporated a multi-objective function aiming to minimize makespan, energy consumption, and monetary cost. Additionally, a new operator called star-quake was introduced in the multi-objective version of the gravitational search algorithm (MOGSA) to enhance performance. The results demonstrated significant improvements, including an 18% reduction in makespan, 22% decrease in energy consumption, and 40% reduction in processing cost. Statistical analysis further confirmed the algorithm’s superiority over other approaches in task scheduling. The article contributed to addressing the challenges of task scheduling in fog-cloud systems and highlighted the effectiveness of the proposed algorithm in optimizing system performance metrics.

Table 5 provides a comparative summary of studies focusing on metaheuristic approaches for task scheduling.

| Study | General approach | Performance & optimization | Implementation & evaluation | Performance metrics | Strengths | Limitations |
|---|---|---|---|---|---|---|