Abstract

A modular approach to constructing cryptographic protocols leads to simple designs but often inefficient instantiations. On the other hand, ad hoc constructions may yield efficient protocols at the cost of losing conceptual simplicity. We suggest a new design paradigm, structure-preserving cryptography, that provides a way to construct modular protocols with reasonable efficiency while retaining conceptual simplicity. A cryptographic scheme over a bilinear group is called structure-preserving if its public inputs and outputs consist of elements from the bilinear groups and their consistency can be verified by evaluating pairing-product equations. As structure-preserving schemes smoothly interoperate with each other, they are useful as building blocks in modular design of cryptographic applications. This paper introduces structure-preserving commitment and signature schemes over bilinear groups with several desirable properties. The commitment schemes include homomorphic, trapdoor and length-reducing commitments to group elements, and the structure-preserving signature schemes are the first ones that yield constant-size signatures on multiple group elements. A structure-preserving signature scheme is called automorphic if the public keys lie in the message space, which cannot be achieved by compressing inputs via a cryptographic hash function, as this would destroy the mathematical structure we are trying to preserve. Automorphic signatures can be used for building certification chains underlying privacy-preserving protocols. Among a vast number of applications of structure-preserving protocols, we present an efficient round-optimal blind-signature scheme and a group signature scheme with an efficient and concurrently secure protocol for enrolling new members.

1 Introduction

1.1 Structure-Preserving Cryptography

Cryptographic protocols often use modular constructions that combine general building blocks such as commitments, encryption, signatures, and zero-knowledge proofs. Modular design is useful to show the feasibility of realizing a particular security goal and may lead to simpler security proofs that are less error-prone than seen in ad hoc constructions. On the other hand, modular design can incur a significant overhead, and in feasibility proofs efficient instantiations are often left as the next challenge. This challenge is often solved by finding a “cleverly crafted” efficient solution for the specific security goal. However, modular constructions make it easier to design and understand protocols. It is therefore desirable to have a framework of interoperable building blocks with efficient instantiations such that we can design protocols that are both modular and efficient at the same time.

Since the seminal works in [24, 63, 86], bilinear groups have been widely used for constructing efficient cryptographic protocols. However, protocols defined over bilinear groups are not necessarily compatible with each other, as they may involve both group and field elements in different places as well as additional cryptographic primitives such as collision-resistant hash functions.

We propose structure-preserving cryptography as an approach for efficiently instantiating modular constructions over bilinear groups \(\Lambda := (p,{{\mathbb {G}}},\tilde{{{\mathbb {G}}}},{{\mathbb {G}}}_T,e,G,\tilde{G})\) where \({{\mathbb {G}}}, \tilde{{{\mathbb {G}}}}\) and \({{\mathbb {G}}}_T\) are groups of prime order \(p\) generated by \(G, \tilde{G}\) and \(e(G,\tilde{G})\), respectively, with a bilinear map \(e:{{\mathbb {G}}}\times \tilde{{{\mathbb {G}}}}\rightarrow {{\mathbb {G}}}_T\). In structure-preserving cryptography, building blocks are designed over common bilinear groups \(\Lambda \) such that

- all public objects such as public keys, messages, signatures, commitments and ciphertexts are elements of the groups \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\), and

- verifying relations of interest, such as signature verification or opening of a commitment, can be done by performing group operations and evaluating pairing-product equations of the form

$$\begin{aligned} \textstyle \prod \limits _{i}\prod \limits _{j} e(X_i,\tilde{Y}_j)^{c_{i,j}} \;=\; 1, \end{aligned}$$

where \(c_{i,j} \in {{\mathbb {Z}}}\) are constants specified by the scheme.

We call cryptographic schemes satisfying these conditions structure-preserving. It will sometimes be useful to consider also relaxed structure preservation where public elements in the target group \({{\mathbb {G}}}_T\) are permitted as well and where pairing-product equations may be of the form \(\prod _i\prod _je(X_i,\tilde{Y}_j)^{c_{i,j}}=T\) with \(T\in {{\mathbb {G}}}_T\).
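To make the shape of a pairing-product equation concrete, the following is a minimal sketch in a toy "exponent model" of a bilinear group, in which every element is stored as its discrete logarithm. This model is insecure by construction and is not how the schemes in this paper are implemented; the names `P`, `pairing` and `ppe_holds` are illustrative assumptions, not notation from the paper.

```python
# Toy "exponent model" of a bilinear group: every element is stored as its
# discrete logarithm, so this is insecure and only illustrates the algebra.
# All names here are illustrative assumptions.
P = 101  # toy prime group order p


def pairing(x: int, y: int) -> int:
    """e(G^x, G~^y) = e(G, G~)^(x*y); return the exponent in G_T."""
    return (x * y) % P


def ppe_holds(xs, ys, c, t=0) -> bool:
    """Check prod_i prod_j e(X_i, Y_j)^{c[i][j]} == T.

    t is the exponent of T in G_T; t = 0 encodes T = 1 (the strict form).
    """
    acc = 0
    for i, x in enumerate(xs):
        for j, y in enumerate(ys):
            acc = (acc + c[i][j] * pairing(x, y)) % P
    return acc == t % P


# Example relation: e(A, G~) * e(G, B~)^(-1) = 1, which holds when
# A = G^a and B~ = G~^a share the same exponent a.
a = 17
xs = [a, 1]          # X_1 = G^a, X_2 = G
ys = [1, a]          # Y_1 = G~,  Y_2 = G~^a
c = [[1, 0],         # e(X_1, Y_1)^1
     [0, -1]]        # e(X_2, Y_2)^(-1)
ok = ppe_holds(xs, ys, c)             # consistent exponents
bad = ppe_holds([a + 1, 1], ys, c)    # A uses a different exponent
```

Passing a non-zero `t` corresponds to the relaxed form with a target-group element \(T\) on the right-hand side.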

The properties defining structure preservation are preserved by modular constructions. Namely, a scheme built by modularly combining (relaxed) structure-preserving building blocks is (relaxed) structure-preserving as well. They therefore offer strong compatibility and modularity. On the other hand, the restrictive properties make it more challenging to design structure-preserving schemes. In particular, structure-preserving cryptography inherently prohibits the use of collision-resistant hash functions that break the underlying mathematical structure of the bilinear groups.

Combining non-interactive proofs with other primitives is a typical approach in modular construction of secure cryptographic protocols. A classical way of realizing efficient instantiations is to rely on the random-oracle heuristic [18] for non-interactive proofs—or to directly use interactive assumptions like (variations of) the LRSW assumption [78] and “one-more” assumptions [17]. Due to a series of criticisms starting with [37], more and more practical schemes are being proposed and proved secure in the standard model (i.e., without random oracles) and under falsifiable (and thus non-interactive) intractability assumptions [80]. In [59], Groth and Sahai presented the first (and currently the only) efficient non-interactive proof system based on standard assumptions in bilinear groups. Their proof system, called GS for short, exerts its full power as a non-interactive proof-of-knowledge system when the proof statement is a set of relations described by pairing-product equations, for which the witnesses are group elements in the source groups, that is \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\). Due to these limitations, however, many existing cryptographic schemes cannot be modularly combined with GS proofs. In contrast, structure-preserving schemes are defined so that they are compatible with the GS proof system. Accordingly, by using GS proofs, one can efficiently prove one’s knowledge about the witness for relations of interest in structure-preserving schemes.

We address two major building blocks in cryptographic protocol design, commitment schemes and signature schemes, and present their structure-preserving instantiations with several useful properties as explained in the following.

1.2 Homomorphic Trapdoor Commitments

A non-interactive commitment scheme allows a sender to create a commitment to a message. The commitment hides the message but the sender may later choose to open the commitment to the message. A commitment is bound to a message in the sense that a commitment cannot be opened to two different messages. On top of the fundamental properties of hiding and binding, a commitment may have other desirable features. In a trapdoor commitment scheme [60, 83], a certain piece of trapdoor information makes it possible to circumvent the binding property and open a commitment to an arbitrary message. In a homomorphic commitment scheme, messages and commitments belong to abelian groups, and by multiplying two commitments, we obtain a commitment to the product of the committed messages. Finally, a commitment scheme is often required to be length-reducing such that the commitment is shorter than the message.

An example that provides all those properties is a generalization of Pedersen commitments [83] where a message is a vector of values in \(\mathbb {Z}_p\) and a commitment consists of only one group element. Such commitments have been found useful in contexts such as mix-nets, voting, digital credentials, blind signatures, leakage-resilient one-way functions and zero-knowledge proofs [11, 30, 50, 66, 77, 81].
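As a small illustration of such a generalized Pedersen commitment, the sketch below commits to a vector in \(\mathbb {Z}_q\) with a single group element and checks the homomorphic property. It uses a toy order-11 subgroup of \(\mathbb {Z}_{23}^*\) and fixed generators chosen only for readability; these parameters and the names `commit` and `verify` are assumptions for illustration, and a real instantiation would need a large group and generators with unknown mutual discrete logarithms.

```python
P, Q = 23, 11            # toy parameters: subgroup of order Q in Z_P^*
G = 2                    # generator carrying the randomness
HS = [3, 4, 6]           # one generator per message slot (hypothetical choice)


def commit(msg, r):
    """com = G^r * prod_i H_i^{m_i} mod P, a single group element."""
    com = pow(G, r, P)
    for h, m in zip(HS, msg):
        com = (com * pow(h, m, P)) % P
    return com


def verify(com, msg, r):
    """Accept iff recomputing the commitment from (msg, r) gives com."""
    return com == commit(msg, r)


m1, r1 = [1, 2, 3], 5
m2, r2 = [4, 0, 9], 7
c1, c2 = commit(m1, r1), commit(m2, r2)

# Homomorphic property: the product of two commitments is a commitment to
# the component-wise sum of the messages (the group operation on Z_Q^k),
# opened with the sum of the randomness.
m_sum = [(a + b) % Q for a, b in zip(m1, m2)]
r_sum = (r1 + r2) % Q
homomorphic = verify((c1 * c2) % P, m_sum, r_sum)
```

Here the message group is written additively, so "product of the committed messages" in the general definition becomes a sum of exponent vectors.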

We present structure-preserving commitment schemes whose public keys, messages, commitments and openings are elements of bilinear groups, and whose opening is verified by evaluating pairing-product equations. Our commitments are trapdoor and homomorphic, and some of them are length-reducing as well. The attributes that discriminate our constructions are the types of bilinear groups used and the groups that messages and commitments belong to. (See Table 1 on page 21 for a summary.)

- The first and the second homomorphic trapdoor commitment schemes are length-reducing: they map vectors of source-group elements to a constant number of target-group elements and are thus relaxed structure-preserving.

- The third homomorphic trapdoor commitment scheme is strictly structure-preserving, which means that both messages and commitments consist of source-group elements. It is, however, not length-reducing.

- The last commitment scheme takes messages from \(\mathbb {Z}_p\) and maps them to a single source-group element. This scheme is by definition not structure-preserving though all other properties are provided. We include it here for its usefulness as a building block for applications.

Our commitment schemes can be used to build structure-preserving one-time signatures, as we demonstrate in Sect. 4. The first two length-reducing schemes are useful in reducing the size of zero-knowledge arguments. Groth [58] showed that the square-root-size zero-knowledge arguments in [57] can be reduced to cubic-root-size zero-knowledge arguments by using our homomorphic trapdoor commitments. We explore this topic in Sect. 3.1.

There are follow-up works about structure-preserving commitments. In [9], it is proved that strictly structure-preserving commitment schemes cannot yield commitments that are shorter than messages; a commitment to a \(k\)-element message must have more than \(k\) elements itself. This should be contrasted with the relaxed structure-preserving commitment schemes we give, where a commitment to a \(k\)-element message consists of a small constant number of target-group elements.

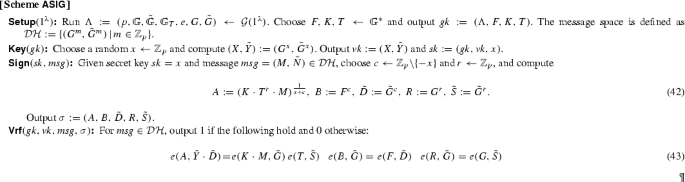

1.3 Signatures

A signature scheme is called structure-preserving if the public verification keys, messages, and signatures are source-group elements of bilinear groups, and the verification of a signature consists of evaluating pairing-product equations. It is called automorphic if in addition its verification keys lie in its message space. There are many applications using combinations of digital signatures and non-interactive zero-knowledge proofs of knowledge such as blind signatures [10, 43], group signatures [16, 19, 68], anonymous credential systems [15], verifiably encrypted signatures [26, 85], non-interactive group encryption [38] and so on, and structure-preserving signatures are ideally suited for these applications.

Research on structure-preserving signature schemes was initiated by Groth [54], who gave the first feasibility result based on the decision linear assumption (DLIN) [23]. His structure-preserving signature scheme yields a signature of size \({\mathcal {O}}(k)\) when the message consists of \(k\) group elements. While it is remarkable that the security can be based on a simple standard assumption, the scheme is not practical due to its large constant factor. Based on the \(q\)-Hidden LRSW assumption for asymmetric bilinear groups, Green and Hohenberger [53] presented a structure-preserving signature scheme that only provides security against random-message attacks. Unfortunately, an extension to chosen-message attack security is not known. In [38], Cathalo et al. gave a scheme with relaxed structure preservation based on a combination of the Hidden Strong Diffie–Hellman Assumption (HSDH), the Flexible Diffie–Hellman Assumption, and the DLIN assumption. Their signature consists of \(9 {k}+ 4\) group elements for a \(k\)-element message, and it was left as an open problem to construct constant-size signatures. There are also several signature schemes, such as [15, 22, 31, 35], where validity is defined via pairing-product equations, but whose signatures do not only contain group elements.

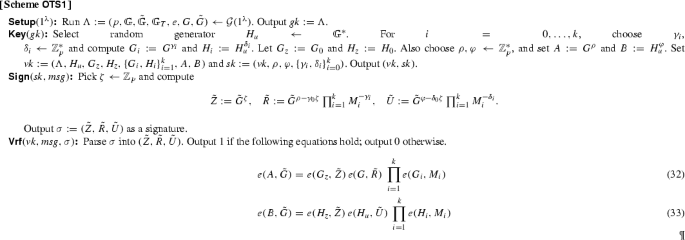

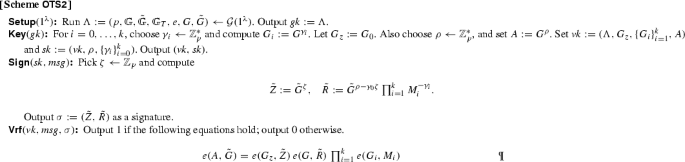

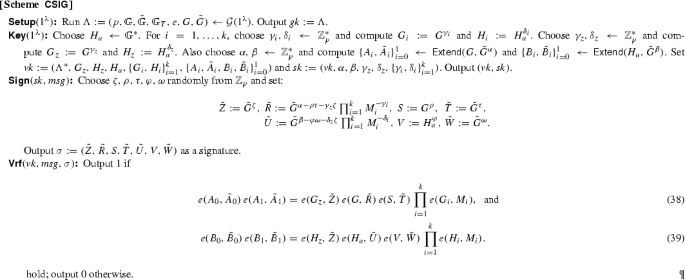

We present several constructions of structure-preserving signatures with high efficiency and useful properties.

- Structure-Preserving One-Time Signatures. We construct two signature schemes that are existentially unforgeable when the adversary is only allowed one signing query. The schemes are currently the most efficient in the literature, and their security follows from the decision Diffie–Hellman and the decision linear assumptions, respectively.

- Constant-Size Structure-Preserving Signatures. We construct the first constant-size structure-preserving signature scheme. A signature consists of 7 group elements independently of the message length and the verifier needs to check two pairing-product equations. Existential unforgeability against adaptive chosen-message attacks is proven under a new non-interactive assumption called the Simultaneous Flexible Pairing Assumption (SFP).

- Automorphic Signatures. We construct the first automorphic signature scheme, whose signatures consist of 5 group elements. We prove the scheme existentially unforgeable against adaptive chosen-message attacks under a variant of the Strong Diffie–Hellman assumption [22].

After the publication of [5], structure-preserving signatures have been intensively studied. Several constructions over asymmetric bilinear groups, i.e., where \({{\mathbb {G}}}\ne \tilde{{{\mathbb {G}}}}\), are presented in [6]. The latter shows that there is a scheme whose signature consists of only 3 group elements when the security is directly proven in the generic bilinear-group model. It also presents a scheme with 4-element signatures, which is \(3\) group elements fewer compared to our scheme in Sect. 5.1. Its security is based on a non-interactive assumption that is, however, not known to be as tight as the discrete-logarithm assumption when assessed in the generic bilinear-group model. This is the case for the assumption (\(q\)-SFP in Sect. 2.5) that implies security of our scheme.

Significant theoretical advances are made in [2, 3], which present structure-preserving signature schemes based on compact and static, i.e., not \(q\)-type, assumptions such as the decision linear assumption. The schemes are over symmetric and asymmetric bilinear groups and yield signatures consisting of 11 to 14 group elements.

1.4 Applications

The usefulness of structure-preserving cryptography as a design paradigm is demonstrated by a growing list of applications including round-optimal blind signatures [5, 49], group signatures with concurrent join [5, 49, 72, 73], homomorphic signatures [14, 71], anonymous proxy signatures [47], delegatable anonymous credentials [46], direct anonymous attestation [20], transferable e-cash [21, 48], conditional e-cash [89], compact verifiable shuffles [39], network coding [13], oblivious transfer [1, 33, 53], chosen-ciphertext-secure encryption [3, 34, 62] and many more. Among this vast number of applications, we present group signatures and blind signatures in this paper.

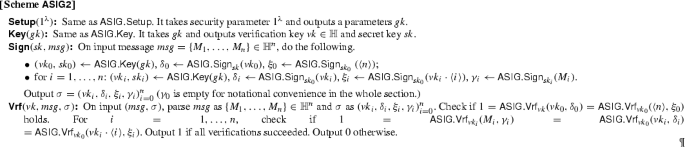

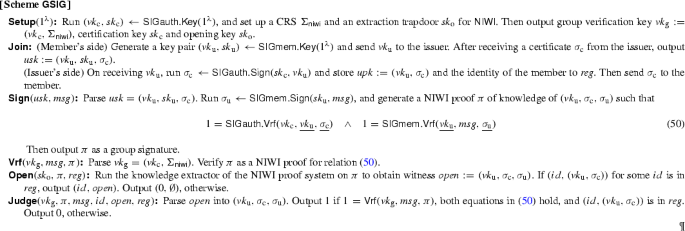

1.4.1 Group Signatures with Concurrent Join

We give a modular structure-preserving construction of group signatures [41] supporting a concurrent join procedure [68] for enrolling new members.

A group signature scheme is a classical primitive ensuring user anonymity. It allows members that were enrolled by a group manager to sign on behalf of a group without revealing their identity. To prevent misuse, anonymity can be revoked by an authority. There are numerous constructions of group signature schemes in the literature providing different properties. However, previous constructions are not in the standard model, do not provide a concurrent join procedure, lack some important property like non-frameability or are inefficient. The scheme in [68] is the first one that allows efficient concurrent join, but its security relies on the random-oracle model [18]. The scheme in [12] is non-frameable but only allows new members to join sequentially and is based on strong interactive assumptions. Both [28, 29] provide efficiency with reasonable assumptions but the group manager enrolling members knows their secret keys and can thus frame them by creating signatures using their keys. The scheme in [55] is non-frameable but does not allow concurrent join. More recent papers, such as [42, 75, 76], focus on advanced properties leaving one or more of the above issues unaddressed.

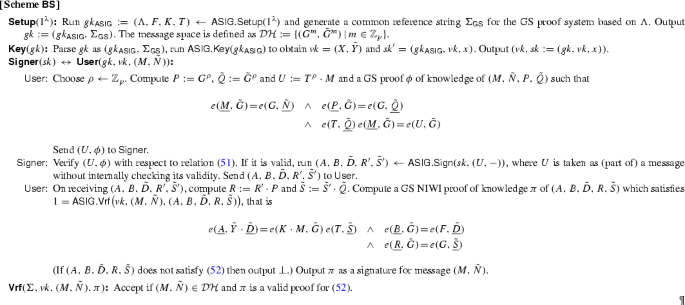

1.4.2 Round-Optimal Blind Signatures

Blind signatures, introduced by Chaum [40], allow a user to obtain a signature on a message such that the signer cannot relate the resulting signature to the execution of the signing protocol. They were formalized by [64, 84] and practical schemes without random oracles have been constructed in e.g., [36, 66, 67, 82]. However, all these schemes require more than one round (i.e., two moves) of communication between the user and the signer to issue a blind signature. This is even the case for most instantiations in the random-oracle model, an exception being Chaum’s scheme proved secure in [17] under an interactive assumption.

In [43], Fischlin gives a generic construction of round-optimal blind signatures in the common reference string (CRS) model; the signing protocol consists of one message from the user to the signer and one response by the signer. This immediately implies concurrent security, an explicit goal in other works such as [61]. Before our work, a practical instantiation of round-optimal blind signatures in the standard model was an open problem. Using our automorphic signature scheme, we provide the first efficient instantiation, which is the basis for commuting signatures and verifiable encryption in [46].

1.5 Correspondence to Preliminary Papers and Organization

This paper is based on three papers [7, 45, 56] submitted separately to CRYPTO 2010 and presented as a merged paper [5], in which the term “structure-preserving signatures” is introduced. In [56], Groth presented the first homomorphic trapdoor commitments to group elements which are, moreover, length-reducing. Fuchsbauer [45] gave the first efficient structure-preserving signatures and used them to efficiently implement round-optimal blind signatures in the standard model. Abe et al. [7] then gave the first constant-size signature scheme on vectors of general group elements and constructed a group signature scheme with concurrently secure enrollment of new members.

Section 2 introduces the notation, security notions and building blocks used in this paper. It includes, in Sect. 2.7, a useful technique that appeared in [7]. Section 3 features several homomorphic trapdoor commitment schemes, which originate from [7, 56]. Section 4 presents one-time signature schemes from [7], which serve as a warm-up for the fully fledged structure-preserving signature scheme in Sect. 5. In Sect. 6, we present the automorphic signature scheme from [45] with a technique from [8] to extend the message space. Finally, Sect. 7 contains the applications discussed above: the group signature scheme and the blind-signature scheme, which originally appeared in [7] and [45], respectively.

2 Preliminaries

2.1 Notation

For a set \(S\), \(x \leftarrow S\) denotes assigning to \(x\) a uniformly random value in \(S\). Similarly, \(x_1,\dots ,x_k \leftarrow S\) means independent uniformly random selection of \(k\) elements from \(S\). For a probabilistic algorithm \(A\), we write \(y:=A(x;r)\) for assigning \(y\) the value of the output of the algorithm when running it on input \(x\) using randomness \(r\). We write \(y\leftarrow A(x)\) for the process of picking uniformly random \(r\) and setting \(y:=A(x;r)\).

By \(\Pr [y\leftarrow \mathrm {Exp}(x): \mathrm {Cond}(y)]\), we denote the probability that the condition \(\mathrm {Cond}\) holds for the output \(y\) of running the experiment \(\mathrm {Exp}\) on some input \(x\). The probability is taken over all coin flips used in the experiment \(\mathrm {Exp}\). Typically, the input \(x\) will be of the form \(1^\lambda \), where \(\lambda \in \mathbb {N}\) is a security parameter. We say \(f:\mathbb {N}\rightarrow [0,1]\) is negligible if \(f(\lambda )=\lambda ^{-\omega (1)}\) and \(g:\mathbb {N}\rightarrow [0,1]\) is overwhelming if \(g(\lambda )=1-f(\lambda )\) for some negligible function \(f\). When defining security, we require the attacker’s success probability to be negligible as a function of the security parameter.

We will work over groups \({{\mathbb {G}}},\tilde{{{\mathbb {G}}}},{{\mathbb {G}}}_T\) of prime order \(p\), which we denote multiplicatively. We will in general denote group elements by capital letters, i.e., \(R\in {{\mathbb {G}}}, \tilde{S}\in \tilde{{{\mathbb {G}}}}, T\in {{\mathbb {G}}}_T\). Integers modulo \(p\) will be denoted by lower case or Greek letters. We define \({{\mathbb {G}}}^*={{\mathbb {G}}}{\setminus } \{1\}\), \(\tilde{{{\mathbb {G}}}}^*=\tilde{{{\mathbb {G}}}}{\setminus } \{1\}\) and \({{\mathbb {G}}}_T^*={{\mathbb {G}}}_T{\setminus } \{1\}\). When \(\vec {X}\) is a tuple of group elements, \(|{\vec {X}}|\) denotes the number of elements in \(\vec {X}\).

When a group element is given as input to a function, its group membership must be tested. If the test fails, the function should output a special symbol that means rejection of the input. For conciseness of the description, we treat this procedure as implicit throughout the paper.
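The implicit membership test can be pictured as a wrapper around every function that takes group elements. The sketch below uses a toy order-11 subgroup of \(\mathbb {Z}_{23}^*\), where membership is checked by raising to the group order; the parameters and the names `in_subgroup`, `checked` and `multiply` are hypothetical, and elliptic-curve groups used for pairings need their own curve and subgroup checks.

```python
P, Q = 23, 11  # toy parameters: order-Q subgroup of Z_P^*, Q prime


def in_subgroup(x: int) -> bool:
    """True iff x lies in the order-Q subgroup of Z_P^*."""
    return 0 < x < P and pow(x, Q, P) == 1


def checked(func):
    """Wrap func so that any non-member input is rejected with None."""
    def wrapper(*elements):
        if not all(in_subgroup(x) for x in elements):
            return None  # plays the role of the special rejection symbol
        return func(*elements)
    return wrapper


@checked
def multiply(x, y):
    """Group operation, only reached when both inputs passed the test."""
    return (x * y) % P


accepted = multiply(2, 3)   # both inputs are subgroup elements
rejected = multiply(2, 5)   # 5 is not in the order-11 subgroup
```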

2.2 Commitment Schemes

A commitment scheme allows a sender to commit to a secret message \( msg \). Later the sender may open the commitment and reveal the value \( msg \) to the receiver. We focus on non-interactive commitment schemes where the sender and receiver do not need to interact to commit or to open commitments; both the commitment and the opening are bitstrings generated by the sender without interacting with the receiver.

We rely on a trusted setup phase where joint system parameters are generated and a commitment key is produced. We deliberately separate the setup phase in two parts \(\mathsf{Setup }\) and \(\mathsf{Key }\) to distinguish joint system parameters (which in our schemes will contain a description of bilinear groups that may be shared with other protocols such as signature schemes, encryption schemes) and the commitment key, which is specific to the commitment scheme.

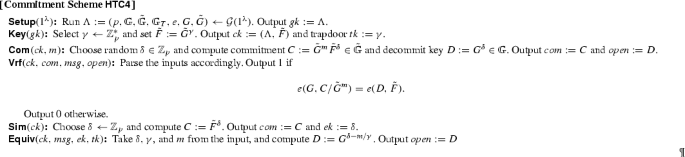

Definition 1

(Trapdoor Commitment Scheme) We define a non-interactive trapdoor commitment scheme C as a tuple of polynomial-time algorithms \({\mathsf {C}}=(\mathsf {Setup}, \mathsf {Key}, \mathsf {Com}, \mathsf {Vrf}, \mathsf {Sim}, \mathsf {Equiv})\) in which:

- \( gk \leftarrow \mathsf{Setup }(1^\lambda )\) is a common-parameter generator that takes security parameter \(\lambda \) and outputs a set of common parameters, \( gk \).

- \(( ck , tk ) \leftarrow \mathsf{Key }( gk )\) is a key generator that takes \( gk \) as input and outputs a commitment key \( ck \) and a trapdoor key \(tk\). The commitment key \( ck \) determines the message space \({\mathcal {M}}_ ck \), the commitment space \({\mathcal {C}}_ ck \) and the opening space \({\mathcal {O}}_ ck \).

- \(( com , open )\leftarrow \mathsf{Com }( ck , msg )\) is a commitment algorithm that takes \( ck \) and message \( msg \in {\mathcal {M}}_ ck \) and outputs a commitment \( com \in {\mathcal {C}}_ ck \) and an opening \( open \in {\mathcal {O}}_ ck \).

- \(1/0 \leftarrow \mathsf{Vrf }( ck , com , msg , open )\) is a verification algorithm that takes \( ck \), \( com \in {\mathcal {C}}_ ck \), \( msg \in {\mathcal {M}}_ ck \) and \( open \in {\mathcal {O}}_ ck \) as input and outputs \(1\) or \(0\) representing acceptance or rejection, respectively.

- \(( com , ek )\leftarrow \mathsf{Sim }(ck)\) takes commitment key \( ck \) and outputs commitment \( com \in {\mathcal {C}}_ ck \) and equivocation key \( ek \).

- \( open \leftarrow \mathsf{Equiv }( ck , msg , ek , tk )\) takes \( ck , ek , tk \) and \( msg \in {\mathcal {M}}_ ck \) as input and returns an opening \( open \).

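For correctness, it must hold that for all \(\lambda \in \mathbb {N}\):

$$\begin{aligned} \Pr \left[ \begin{array}{l} gk \leftarrow \mathsf{Setup }(1^\lambda );\ ( ck , tk ) \leftarrow \mathsf{Key }( gk );\\ msg \leftarrow {\mathcal {M}}_{ ck };\ ( com , open ) \leftarrow \mathsf{Com }( ck , msg ) \end{array} \;:\; 1 \leftarrow \mathsf{Vrf }( ck , com , msg , open ) \right] = 1. \end{aligned}$$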

For perfect trapdoor commitment schemes, the experiment \(\{ gk \leftarrow \mathsf{Setup }(1^\lambda )\); \(( ck , tk ) \leftarrow \mathsf{Key }( gk )\); \( msg \leftarrow {\mathcal {M}}_ ck \); \(( com , open ) \leftarrow \mathsf{Com }( ck , msg )\); \(( com ', ek ) \leftarrow \mathsf{Sim }( ck )\); \( open ' \leftarrow \mathsf{Equiv }( ck , msg , ek , tk )\}\) must yield identical probability distributions for \(( ck , msg , com , open )\) and \(( ck , msg , com ', open ')\).

In this paper, we consider \(\mathsf{Vrf }\) as deterministic and say that \(( msg , com , open )\) is valid with respect to \( ck \) if \(1 \leftarrow \mathsf{Vrf }( ck , com , msg , open )\). It follows from the correctness of the commitment scheme that in a perfect trapdoor commitment scheme, the equivocation of a trapdoor commitment will be accepted by the verifier.
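The six algorithms of Definition 1 can be sketched with the classical Pedersen commitment, which is used here only as a familiar stand-in and is not one of the structure-preserving schemes of this paper. The toy group (order-11 subgroup of \(\mathbb {Z}_{23}^*\)), the dictionary layout of `gk` and `ck`, and the lowercase function names are assumptions made for illustration.

```python
import secrets


def setup():
    """Toy Setup: order-11 subgroup of Z_23^* with generator 2 (insecure)."""
    return {"p": 23, "q": 11, "g": 2}


def key(gk):
    """Key: trapdoor tk = x in Z_q^*, commitment key contains h = g^x."""
    tk = 1 + secrets.randbelow(gk["q"] - 1)
    ck = {**gk, "h": pow(gk["g"], tk, gk["p"])}
    return ck, tk


def _eval(ck, msg, opening):
    """g^msg * h^opening mod p."""
    return pow(ck["g"], msg, ck["p"]) * pow(ck["h"], opening, ck["p"]) % ck["p"]


def com(ck, msg):
    """Com: pick a uniform opening and return (commitment, opening)."""
    opening = secrets.randbelow(ck["q"])
    return _eval(ck, msg, opening), opening


def vrf(ck, c, msg, opening):
    """Vrf: deterministic check, 1 for acceptance and 0 for rejection."""
    return 1 if c == _eval(ck, msg, opening) else 0


def sim(ck):
    """Sim: a commitment g^s that fixes no message; ek = s."""
    ek = secrets.randbelow(ck["q"])
    return pow(ck["g"], ek, ck["p"]), ek


def equiv(ck, msg, ek, tk):
    """Equiv: solve msg + x * opening = s (mod q) for the opening."""
    return (ek - msg) * pow(tk, -1, ck["q"]) % ck["q"]
```

In this sketch both `com` and `sim` output a uniformly distributed group element, and the opening is then determined by the message, which is why the real and simulated tuples are identically distributed.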

Commitment schemes must be hiding and binding. Since commitments generated by \(\mathsf{Sim }\) do not contain any information about the message, a perfect trapdoor commitment scheme is perfectly hiding in the sense that legitimate commitments do not reveal anything about the message. For the binding property, we use the following standard definition.

Definition 2

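(Binding) A commitment scheme is computationally binding if for any probabilistic polynomial-time adversary \({\mathcal {A}}\)

$$\begin{aligned} \Pr \left[ \begin{array}{l} gk \leftarrow \mathsf{Setup }(1^\lambda );\ ( ck , tk ) \leftarrow \mathsf{Key }( gk );\\ ( com , msg , open , msg ', open ') \leftarrow {\mathcal {A}}( ck ) \end{array} \;:\; \begin{array}{l} msg \ne msg ' \ \wedge \ 1 \leftarrow \mathsf{Vrf }( ck , com , msg , open )\\ \wedge \ 1 \leftarrow \mathsf{Vrf }( ck , com , msg ', open ') \end{array} \right] \end{aligned}$$

is negligible.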

Definition 1 follows a typical definition of a trapdoor commitment scheme with a simulation algorithm \(\mathsf{Sim }\) that generates fake commitments. A slightly stronger definition referred to as a chameleon hash [69] demands \(\mathsf{Equiv }\) to equivocate legitimate commitments generated by \(\mathsf{Com }\).

Definition 3

(Homomorphic) A commitment scheme is homomorphic if for any correctly generated \( ck \), the message, commitment and opening spaces are abelian groups with binary operations “\(\cdot \),” “\(\odot \)” and “\(\otimes \),” respectively, and for all \(( msg , com , open )\) and \(( msg ', com ', open ')\) valid with respect to \( ck \), it holds that \(1\leftarrow \mathsf{Vrf }( ck , com \cdot com ', msg \odot msg ', open \otimes open ')\).

2.3 Digital Signatures

Definition 4

(Digital Signature Scheme) A digital signature scheme \(\mathsf{SIG }\) is a quadruple of efficient algorithms \((\mathsf{Setup }, \mathsf{Key }, \mathsf{Sign }, \mathsf{Vrf })\) such that

- \( gk \leftarrow \mathsf{Setup }(1^\lambda )\) is a common-parameter generator that takes security parameter \(\lambda \) and outputs a set of common parameters \( gk \).

- \(( vk , sk ) \leftarrow \mathsf{Key }( gk )\) is a key generation algorithm that takes common parameters \( gk \) and generates a verification key \( vk \) and a signing key \( sk \). The verification key \(vk \) defines the message space \({\mathcal {M}}\).

- \(\sigma \leftarrow \mathsf{Sign }( sk , msg )\) is a signature-generation algorithm that computes a signature \(\sigma \) for input message \( msg \in {\mathcal {M}}\) by using signing key \( sk \).

- \(0/1 \leftarrow \mathsf{Vrf }( vk , msg ,\sigma )\) is a signature-verification algorithm that outputs 1 for acceptance or 0 for rejection.

We require the signature scheme to be correct, i.e., for any \(( vk , sk )\) generated by \(\mathsf{Key }\), any message \( msg \in {\mathcal {M}}\) and any signature \(\sigma \) output by \(\mathsf{Sign }( sk , msg )\) the verification \(\mathsf{Vrf }( vk , msg ,\sigma )\) outputs 1.

We use the standard notion of existential unforgeability against adaptive chosen-message attacks [52] (EUF-CMA for short), which says it is not possible to forge a signature on a previously unsigned message.

Definition 5

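(Existential Unforgeability against Adaptive Chosen-Message Attacks) A signature scheme is existentially unforgeable against adaptive chosen-message attacks if for any probabilistic polynomial-time adversary \({{\mathcal {A}}}\)

$$\begin{aligned} \Pr \left[ \begin{array}{l} gk \leftarrow \mathsf{Setup }(1^\lambda );\ ( vk , sk ) \leftarrow \mathsf{Key }( gk );\\ (m^{\star },\sigma ^{\star }) \leftarrow {{\mathcal {A}}}^{\mathsf{Sign }( sk ,\cdot )}( vk ) \end{array} \;:\; m^{\star } \not \in Q_m \ \wedge \ 1 \leftarrow \mathsf{Vrf }( vk , m^{\star },\sigma ^{\star }) \right] \end{aligned}$$

is negligible, where \(Q_m\) is the set of messages that were queried to the signing oracle \(\mathsf{Sign }( sk ,\cdot )\).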

By requiring \((m^{\star },\sigma ^{\star }) \not \in Q_{m,\sigma }\), where \(Q_{m,\sigma }\) are the pairs of messages and signatures observed by \(\mathsf{Sign }( sk ,\cdot )\), we obtain the notion of Strong EUF-CMA (denoted by \({\hbox {sEUF-CMA}} \) for short). This ensures that even if a message has been signed before, it is not possible to forge a new different signature on the message.
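The winning condition of the EUF-CMA experiment can be illustrated with a small game harness. Textbook RSA with a toy key is used below purely because it is a well-known scheme that fails this notion; it is not a scheme from this paper, and the names `euf_cma_experiment`, `multiplicative_adversary` and `replay_adversary` are hypothetical.

```python
N, E, D = 3233, 17, 2753   # toy textbook-RSA key (N = 61 * 53); insecure


def sign(sk, msg):
    """Textbook RSA signing: msg^d mod N, with no hashing or padding."""
    return pow(msg, sk, N)


def verify(vk, msg, sig):
    """Accept (1) iff sig^e mod N equals the message."""
    return 1 if pow(sig, vk, N) == msg % N else 0


def euf_cma_experiment(adversary):
    """Return True iff the adversary forges a signature on a fresh message."""
    queried = []                       # Q_m: messages sent to the oracle

    def oracle(msg):
        queried.append(msg)
        return sign(D, msg)

    m_star, sig_star = adversary(E, oracle)
    return m_star not in queried and verify(E, m_star, sig_star) == 1


def multiplicative_adversary(vk, oracle):
    """Query 2 and 3, then output a signature on the unqueried message 6."""
    s1, s2 = oracle(2), oracle(3)
    return 6, (s1 * s2) % N


def replay_adversary(vk, oracle):
    """Output a queried message with its signature; this never wins."""
    return 2, oracle(2)


forged = euf_cma_experiment(multiplicative_adversary)
replayed = euf_cma_experiment(replay_adversary)
```

For the strong variant, the harness would record message and signature pairs and require that the output pair is not among them.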

2.4 Bilinear Groups

We say \({\mathcal {G}}\) is a bilinear-group generator if, on input a security parameter \(\lambda \), it returns the description of a bilinear group \(\Lambda =(p,{{\mathbb {G}}},\tilde{{{\mathbb {G}}}},{{\mathbb {G}}}_T,e,G,\tilde{G})\leftarrow {\mathcal {G}}(1^\lambda )\) with the following properties:

- \({{\mathbb {G}}},\tilde{{{\mathbb {G}}}}\) and \({{\mathbb {G}}}_T\) are groups of prime order \(p\), whose bit-length is \(\lambda \).

- \(e:{{\mathbb {G}}}\times \tilde{{{\mathbb {G}}}}\rightarrow {{\mathbb {G}}}_T\) is a bilinear map, that is \(e(U^a,\tilde{V}^b)=e(U,\tilde{V})^{ab}\) for all \(U\in {{\mathbb {G}}},\tilde{V}\in \tilde{{{\mathbb {G}}}},a,b\in {{\mathbb {Z}}}_p\).

- \(G\) and \(\tilde{G}\) are uniformly chosen generators of \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\), and \(e(G,\tilde{G})\) generates \({{\mathbb {G}}}_T\).

- There are efficient algorithms for computing group operations, evaluating the bilinear map, comparing group elements and deciding membership of the groups. We refer to these as generic operations.

Galbraith et al. [51] distinguish between 3 types of bilinear-group generators. Type I groups have \({{\mathbb {G}}}=\tilde{{{\mathbb {G}}}}\) and are called symmetric bilinear groups. By \({\mathcal {G}}_{\mathsf{sym }}\), we denote a group generator that takes security parameter \(\lambda \) and outputs a description of a symmetric bilinear group \(\Lambda =(p,{{\mathbb {G}}},{{\mathbb {G}}}_T,e,G)\). Type II and Type III groups, which are referred to as asymmetric bilinear groups, have \({{\mathbb {G}}}\ne \tilde{{{\mathbb {G}}}}\), and we may sometimes write \(\Lambda \leftarrow {\mathcal {G}}_{\mathsf{asym }}(1^\lambda )\) to emphasize when we are generating asymmetric bilinear groups. Type II groups have an efficiently computable homomorphism \(\psi :\tilde{{{\mathbb {G}}}}\rightarrow {{\mathbb {G}}}\), while Type III groups do not have efficiently computable homomorphisms in either direction. When we need to explicitly discriminate \(\Lambda \) in Type I, II and III, we write \(\Lambda _{\mathsf{sym }}\), \(\Lambda _{\mathsf{xdh }}\) and \(\Lambda _{\mathsf{sxdh }}\), respectively.

The commitment and signature schemes in this work will have a common setup phase which generates common parameters \( gk \). These will always consist of a description of a bilinear group \(\Lambda \leftarrow {\mathcal {G}}(1^\lambda )\) and in some cases contain additional generators. This definitional approach means that many different structure-preserving protocols may use the same setup \( gk \) and thus work over the same bilinear group, which is what makes them interoperable and easy to combine.

Note that in the symmetric case \(\Lambda \) includes a generator \(G\), and in the asymmetric case, it includes two generators \(G\) and \(\tilde{G}\). Some constructions and assumptions described for asymmetric bilinear groups can accommodate the symmetric case by considering \({{\mathbb {G}}}= \tilde{{{\mathbb {G}}}}\) and \(G = \tilde{G}\). Some applications require \(\tilde{G}\ne G\) in symmetric bilinear groups. In these cases, we pick a random generator, say \(H\), and set \(\tilde{G}= H\). A note will be given when such a treatment is necessary.

2.5 Assumptions

Our commitment and signature schemes rely on different assumptions regarding the bilinear groups we use. Most of the assumptions have reductions from the well-known decisional assumptions DDH and DLIN, but we will also rely on two new computational assumptions \(q\)-SFP and \(q\)-ADH-SDH, which we will describe later in the section. All assumptions are defined relative to a group generator. Therefore, every construction in the succeeding sections assumes that there exists a group generator for which relevant assumptions hold.

Assumption 1

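(Decision Diffie–Hellman Assumption (DDH)) The decision Diffie–Hellman assumption holds in \({{\mathbb {G}}}\) relative to \({\mathcal {G}}_{\mathsf{asym }}\) if for all probabilistic polynomial-time \({{\mathcal {A}}}\)

\[ \left| \Pr \left[ \Lambda \leftarrow {\mathcal {G}}_{\mathsf{asym }}(1^\lambda );\ a,b\leftarrow {{\mathbb {Z}}}_p : {{\mathcal {A}}}(\Lambda ,G^a,G^b,G^{ab})=1\right] - \Pr \left[ \Lambda \leftarrow {\mathcal {G}}_{\mathsf{asym }}(1^\lambda );\ a,b,c\leftarrow {{\mathbb {Z}}}_p : {{\mathcal {A}}}(\Lambda ,G^a,G^b,G^c)=1\right] \right| \]

is negligible.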

The decision Diffie–Hellman assumption in \(\tilde{{{\mathbb {G}}}}\) is defined analogously. In the bilinear-group setting, the decision Diffie–Hellman assumption in \({{\mathbb {G}}}\) (which we denote \(\mathrm{DDH } _{{{\mathbb {G}}}}\)) or in \(\tilde{{{\mathbb {G}}}}\) (which we denote \(\mathrm{DDH } _{\tilde{{{\mathbb {G}}}}}\)) is sometimes referred to as the external Diffie–Hellman (XDH) assumption. The assumption that DDH holds in both \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\) is sometimes referred to as the symmetric external Diffie–Hellman (SXDH) assumption. The DDH assumption cannot hold in symmetric bilinear groups, since there \(c=ab\) can be tested by checking \(e(G^a,G^b)=e(G,G^c)\); in such groups the decision linear assumption may be made instead.

Assumption 2

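(Decision Linear Assumption (DLIN)) The decision linear assumption [23] holds in \({{\mathbb {G}}}\) relative to \({\mathcal {G}}\) if for all probabilistic polynomial-time \({{\mathcal {A}}}\)

\[ \left| \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ x,y,r,s\leftarrow {{\mathbb {Z}}}_p : {{\mathcal {A}}}(\Lambda ,G^x,G^y,G^{xr},G^{ys},G^{r+s})=1\right] - \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ x,y,r,s,d\leftarrow {{\mathbb {Z}}}_p : {{\mathcal {A}}}(\Lambda ,G^x,G^y,G^{xr},G^{ys},G^{d})=1\right] \right| \]

is negligible.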

The 2-out-of-3 CDH assumption [70] states that given a tuple of group elements \((G,G^a,H)\), it is hard to output \((G^r,H^{ar})\) for an arbitrary \(r\ne 0\). To break the Flexible CDH assumption [74], an adversary must additionally compute \(G^{ar}\). We further weaken the assumption by defining a solution as \((G^r,G^{ar},H^r,H^{ar})\) and generalize it to asymmetric groups by letting \(G\in {{\mathbb {G}}}\) and \(H=\tilde{G}\in \tilde{{{\mathbb {G}}}}\) (whereas in symmetric groups, we let \(H\) be an additional generator of \({{\mathbb {G}}}\) playing the role of \(\tilde{G}\)). The asymmetric weak flexible CDH is formalized as follows:

Assumption 3

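(Asymmetric Weak Flexible CDH Assumption (AWF-CDH)) We say that the asymmetric weak flexible computational Diffie–Hellman assumption holds relative to \({\mathcal {G}}\) if for all probabilistic polynomial-time adversaries \({{\mathcal {A}}}\)

\[ \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ a\leftarrow {{\mathbb {Z}}}_p : \exists \, r\in {{\mathbb {Z}}}_p^* \text { such that } {{\mathcal {A}}}(\Lambda ,G^a)=(G^r,G^{ra},\tilde{G}^r,\tilde{G}^{ra})\right] \]

is negligible.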

Lemma 1

If \(\mathrm{DDH } _{{{\mathbb {G}}}}\) holds relative to \({\mathcal {G}}\), then AWF-CDH holds relative to \({\mathcal {G}}\).

Proof

Let \((\Lambda ,G^a,G^b,G^c)\) be an instance of \(\mathrm{DDH } _{{{\mathbb {G}}}}\). We have to decide whether \(c=ab\). On input \((\Lambda ,G^a)\), a successful AWF-CDH adversary outputs \((G^r,G^{ra},\tilde{G}^r,\tilde{G}^{ra})\) with \(r\ne 0\). Since \(e(G^{ab},\tilde{G}^r)=e(G^b,\tilde{G}^{ra})\), we can decide whether \(c=ab\) by checking whether \(e(G^c,\tilde{G}^r)=e(G^b,\tilde{G}^{ra})\); because \(r\ne 0\), this holds if and only if \(c=ab\).\(\square \)
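The test used in this proof can be traced in a toy exponent model. The sketch below is only an illustration: group elements are represented by their discrete logarithms modulo a small prime `P` (a hypothetical stand-in for \(p\)), the pairing multiplies logarithms, and the AWF-CDH adversary is simulated by simply writing down a valid solution. The names `ddh_test` and `solution` are assumptions introduced for this example.

```python
# Toy exponent model (insecure): an element G^x or G~^x is stored as x mod P.
P = 1_000_003  # small prime standing in for the group order p (assumption)


def pair(x, y):
    """e(G^x, G~^y) is stored as the exponent x*y mod P."""
    return (x * y) % P


def ddh_test(b, c, awf_solution):
    """Decide whether c = a*b, given an AWF-CDH solution for G^a."""
    # awf_solution holds the exponents of (G^r, G^{ra}, G~^r, G~^{ra}).
    _, _, s, n = awf_solution
    return pair(c, s) == pair(b, n)  # e(G^c, G~^r) == e(G^b, G~^{ra})


a, b, r = 1234, 5678, 4321
solution = (r, r * a % P, r, r * a % P)   # simulated adversary output
accepts_real = ddh_test(b, a * b % P, solution)
accepts_random = ddh_test(b, (a * b + 1) % P, solution)
```

With a valid solution and \(r\ne 0\), the test accepts exactly the tuples with \(c=ab\).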

Assumption 4

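(Double Pairing Assumption (DBP)) We say the double pairing assumption holds relative to \({\mathcal {G}}_{\mathsf{asym }}\) if for any probabilistic polynomial-time algorithm \({\mathcal {A}}\)

\[ \Pr \left[ \Lambda \leftarrow {\mathcal {G}}_{\mathsf{asym }}(1^\lambda );\ G_z\leftarrow {{\mathbb {G}}}^*;\ (\tilde{Z},\tilde{R})\leftarrow {\mathcal {A}}(\Lambda ,G_z) : (\tilde{Z},\tilde{R})\ne (1,1)\ \wedge \ e(G_z,\tilde{Z})\,e(G,\tilde{R})=1\right] \]

is negligible.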

Lemma 2

If \(\mathrm{DDH } _{{\mathbb {G}}}\) holds relative to \({\mathcal {G}}_{\mathsf{asym }}\), then so does \(\mathrm{DBP } _{{\mathbb {G}}}\) relative to \({\mathcal {G}}_{\mathsf{asym }}\).

Proof

Suppose that there is an adversary \({\mathcal {A}}\) that breaks the DBP assumption. Namely, \({\mathcal {A}}\) finds a pair \((\tilde{Z},\tilde{R})\ne (1,1)\) satisfying the equation \(e(G_z,\tilde{Z})\,e(G,\tilde{R})=1\) for randomly chosen \(G_z\) with more than negligible probability. We will construct an adversary \({\mathcal {B}}\) which breaks \(\mathrm{DDH } _{{{\mathbb {G}}}}\) by using \({\mathcal {A}}\) as a black-box.

Given a \(\mathrm{DDH } _{{{\mathbb {G}}}}\) challenge tuple \((\Lambda ,A,B,C)=(\Lambda ,G^a,G^b,G^c)\), algorithm \({\mathcal {B}}\) gives \({\mathcal {A}}\) the input \((\Lambda ,A)\), so that \(A\) plays the role of \(G_z\). If \({\mathcal {A}}\) outputs \((\tilde{Z},\tilde{R})\ne (1,1)\) satisfying \(e(A,\tilde{Z})\,e(G,\tilde{R})=1\), then \(\tilde{R}=\tilde{Z}^{-a}\) and in particular \(\tilde{Z}\ne 1\). \({\mathcal {B}}\) outputs \(1\) if \(e(C,\tilde{Z})\, e(B,\tilde{R}) = 1\) and outputs 0 otherwise. This strategy is correct since \(e(C,\tilde{Z})\, e(B,\tilde{R})=e(C,\tilde{Z})\, e(B,\tilde{Z}^{-a})=e(G,\tilde{Z})^{c-ab}\) equals 1 if and only if \(c=ab \bmod p\). Thus, \({\mathcal {B}}\) breaks \(\mathrm{DDH } _{{{\mathbb {G}}}}\) if \({\mathcal {A}}\) breaks \(\mathrm{DBP } _{{{\mathbb {G}}}}\).\(\square \)
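The reduction can be followed step by step in a toy exponent model. The sketch below is an illustration under stated assumptions, not the authors' code: elements are stored as discrete logarithms modulo a small prime `P`, the DBP relation is taken in the form \(e(G_z,\tilde{Z})\,e(G,\tilde{R})=1\) stated at the start of the proof with \(G_z\) set to the challenge element \(A\), and the DBP adversary is simulated by a hypothetical function `simulated_dbp_adversary` that knows the logarithm of \(G_z\).

```python
# Toy exponent model (insecure): G^x and G~^x are stored as x mod P,
# and the product of G_T elements becomes addition of exponents.
P = 1_000_003  # small prime standing in for the group order p (assumption)


def pair(x, y):
    return (x * y) % P


def simulated_dbp_adversary(gz):
    """Return (Z, R) != (1, 1) with e(G_z, Z) * e(G, R) = 1 (hypothetical)."""
    z = 42                    # any nonzero exponent for Z
    r = (-gz * z) % P         # forces gz*z + 1*r = 0, i.e. R = Z^{-log G_z}
    return z, r


def ddh_decide(a_elem, b_elem, c_elem):
    """Output True iff the tuple (A, B, C) looks like a Diffie-Hellman tuple."""
    z, r = simulated_dbp_adversary(a_elem)   # run the adversary with G_z := A
    # e(C, Z) * e(B, R) = 1 corresponds to c*z + b*r = 0 in the exponent.
    return (pair(c_elem, z) + pair(b_elem, r)) % P == 0


a, b = 1234, 5678
accepts_real = ddh_decide(a, b, a * b % P)
accepts_random = ddh_decide(a, b, (a * b + 5) % P)
```

The decision value is the exponent \(z(c-ab)\), which vanishes exactly when \(c=ab\) because \(z\ne 0\).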

We could consider a dual assumption of DBP by swapping \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\). When we need to discriminate these assumptions, we call this assumption DBP in \({{\mathbb {G}}}\) (as it is implied by DDH in \({{\mathbb {G}}}\)) and the dual assumption DBP in \(\tilde{{{\mathbb {G}}}}\), and denote them as \(\mathrm{DBP } _{{{\mathbb {G}}}}\) and \(\mathrm{DBP } _{\tilde{{{\mathbb {G}}}}}\), respectively. Analogously to Lemma 2, \(\mathrm{DBP } _{\tilde{{{\mathbb {G}}}}}\) holds if \(\mathrm{DDH } _{\tilde{{{\mathbb {G}}}}}\) holds. Therefore, if DDH holds in both \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\), then DBP holds in both \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\).

Corollary 1

If SXDH holds relative to \({\mathcal {G}}\), then DBP holds in both \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\) relative to \({\mathcal {G}}\).

DBP does not hold in symmetric bilinear groups and we will therefore also rely on the Simultaneous Double Pairing Assumption (SDP), which is plausible in both symmetric and asymmetric bilinear groups.

Assumption 5

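(Simultaneous Double Pairing Assumption (SDP)) The simultaneous double pairing assumption SDP holds in \({{\mathbb {G}}}\) relative to \({\mathcal {G}}\) if for all probabilistic polynomial-time adversaries \({{\mathcal {A}}}\)

\[ \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ G_z,H_z,H_{u}\leftarrow {{\mathbb {G}}}^*;\ (\tilde{Z},\tilde{R},\tilde{U})\leftarrow {{\mathcal {A}}}(\Lambda ,G_z,H_z,H_{u}) : \tilde{Z}\ne 1\ \wedge \ e(G_z,\tilde{Z})\,e(G,\tilde{R})=1\ \wedge \ e(H_z,\tilde{Z})\,e(H_{u},\tilde{U})=1\right] \]

is negligible.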

The following lemma is proved in [38].

Lemma 3

If the DLIN assumption holds relative to a symmetric bilinear-group generator \({\mathcal {G}}_{\mathsf{sym }}\), then the SDP assumption holds relative to \({\mathcal {G}}_{\mathsf{sym }}\).

Assumption 6

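(External Diffie–Hellman Inversion Assumption (XDHI)) The XDHI assumption holds relative to \({\mathcal {G}}\) if for all probabilistic polynomial-time adversaries \({{\mathcal {A}}}\)

\[ \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ a,b\leftarrow {{\mathbb {Z}}}_p^* : {{\mathcal {A}}}(\Lambda ,\tilde{G}^a,\tilde{G}^b)=G^{a/b}\right] \]

is negligible.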

Assumption 7

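(Co-Computational Diffie–Hellman Assumption (co-CDH)) The co-CDH assumption [25] holds relative to \({\mathcal {G}}\) if for all probabilistic polynomial-time adversaries \({{\mathcal {A}}}\)

\[ \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ \alpha ,\beta \leftarrow {{\mathbb {Z}}}_p^* : {{\mathcal {A}}}(\Lambda ,G^{\alpha },\tilde{G}^{\beta })=G^{\alpha \beta }\right] \]

is negligible.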

Depending on the type of the bilinear group \(\Lambda \), the XDHI assumption is implied by standard assumptions, such as the computational Diffie–Hellman assumption (CDH), the co-Diffie–Hellman assumption (co-CDH) and the decisional Diffie–Hellman assumption in \(\tilde{{{\mathbb {G}}}}\) (\(\mathrm{DDH } _{\tilde{{{\mathbb {G}}}}}\)), as follows. Note that \(\mathrm{CDH } \) is implied by \(\mathrm{DLIN } \) in \(\Lambda _{\mathsf{sym }}\) and that \(\mathrm{DDH } _{\tilde{{{\mathbb {G}}}}}\) is implied by SXDH in \(\Lambda _{\mathsf{sxdh }}\).

Lemma 4

\(\mathrm{CDH } \Rightarrow \mathrm{XDHI } \) for \({\mathcal {G}}_{\mathsf{sym }}\). \(\mathrm{co-CDH } \Rightarrow \mathrm{XDHI } \) for \({\mathcal {G}}_{\mathsf{asym }}\). \(\mathrm{DDH } _{\tilde{{{\mathbb {G}}}}} \Rightarrow \mathrm{XDHI } \) for \({\mathcal {G}}_{\mathsf{asym }}\).

Proof

Let \({\mathcal {A}}\) be an XDHI adversary with respect to \({\mathcal {G}}_{\mathsf{sym }}\) and let \(\Lambda _{\mathsf{sym }}= (p, {{\mathbb {G}}}, {{\mathbb {G}}}_T, G, e) \leftarrow {\mathcal {G}}_{\mathsf{sym }}(1^\lambda )\). Note that \(G\) is a uniformly chosen generator. Given a CDH instance \((\Lambda _{\mathsf{sym }}, G^\alpha , G^\beta )\), set \(\Lambda _{\mathsf{sym }}' = (p, {{\mathbb {G}}}, {{\mathbb {G}}}_T, G^\alpha , e)\) and run \({{\mathcal {A}}}\) on the XDHI instance \((\Lambda _{\mathsf{sym }}', G^\beta , G)\). It outputs \(G^{\alpha \beta }\), which is the solution to the CDH instance.

For the second implication, given a co-CDH instance \((\Lambda _{\mathsf{xdh }}, G^{\alpha }, \tilde{G}^{\beta })\) with \((G^{\alpha }, \tilde{G}^{\beta }) \in {{\mathbb {G}}}^* \times \tilde{{{\mathbb {G}}}}^*\), choose \(\tilde{G}' \leftarrow \tilde{{{\mathbb {G}}}}\), set \(\Lambda _{\mathsf{xdh }}' = (p, {{\mathbb {G}}}, \tilde{{{\mathbb {G}}}}, {{\mathbb {G}}}_T, G^\alpha , \tilde{G}', e)\) and run \({{\mathcal {A}}}\) on input \((\Lambda _{\mathsf{xdh }}', \tilde{G}^\beta , \tilde{G})\). It outputs \(G^{\alpha \beta }\), which is the solution to the co-CDH instance.

For the third implication, given an instance \((\Lambda _{\mathsf{sxdh }}, \tilde{G}^{\alpha }, \tilde{G}^{\beta }, \tilde{G}^{\gamma })\) of \(\mathrm{DDH } _{\tilde{{{\mathbb {G}}}}}\), choose \(\tilde{G}' \leftarrow \tilde{{{\mathbb {G}}}}\), set \(\Lambda _{\mathsf{sxdh }}' = (p, {{\mathbb {G}}}, \tilde{{{\mathbb {G}}}}, {{\mathbb {G}}}_T, G, \tilde{G}', e)\) and run \({{\mathcal {A}}}\) on input \((\Lambda _{\mathsf{sxdh }}',\tilde{G}^{\alpha }, \tilde{G})\). If successful, \({{\mathcal {A}}}\) outputs \(G^{\alpha }\). Then, \(\gamma = \alpha \beta \) can be tested by checking whether \(e(G^\alpha , \tilde{G}^{\beta }) = e(G, \tilde{G}^{\gamma })\) holds.\(\square \)
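The re-basing trick behind the first implication can be illustrated in a toy exponent model. The sketch below rests on several assumptions that are not taken from the text: elements are stored as discrete logarithms modulo a small prime `P`, the XDHI problem is read as "given the generator and two elements with logarithms \(a\) and \(b\) relative to it, output the generator raised to \(a/b\)", and the adversary is replaced by a hypothetical `xdhi_oracle` that computes this directly from the logarithms.

```python
# Toy exponent model (insecure): every element is stored as its discrete
# logarithm with respect to one fixed base G, modulo P.
P = 1_000_003  # small prime standing in for the group order p (assumption)


def inv(x):
    """Modular inverse (three-argument pow with exponent -1, Python 3.8+)."""
    return pow(x, -1, P)


def xdhi_oracle(gen, first, second):
    """Hypothetical stand-in for a successful XDHI adversary.

    gen is the generator announced in the group description; first and
    second equal gen^a and gen^b. Returns gen^(a/b).
    """
    a = first * inv(gen) % P      # logarithm of `first` relative to gen
    b = second * inv(gen) % P     # logarithm of `second` relative to gen
    return gen * a * inv(b) % P


def solve_cdh(alpha, beta):
    """CDH instance (G^alpha, G^beta): announce G^alpha as the generator
    and hand the oracle the pair (G^beta, G)."""
    return xdhi_oracle(alpha, beta, 1)


alpha, beta = 2024, 77
answer = solve_cdh(alpha, beta)   # logarithm of the element returned
```

Relative to the new generator \(G^\alpha \), the two inputs have logarithms \(\beta /\alpha \) and \(1/\alpha \), so their quotient is \(\beta \) and the oracle returns \(G^{\alpha \beta }\).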

We wish to extend SDP in such a way that even if we are given some solutions, it should be hard to find another solution. Observe that given an answer to an instance of SDP, one can easily get more answers by exploiting the linearity of the relation to be satisfied. We eliminate this linearity by multiplying random pairings to both sides of the SDP equations. We call the added random pairing a flexible pairing: on the one hand, it provides non-malleability in that solutions cannot be merged, and on the other hand, it can be easily randomized or combined with other solutions if their secret exponents are known.

Assumption 8

(Simultaneous Flexible Pairing Assumption (\(q\)-SFP)) Let \({\mathcal {G}}_{{\mathrm{SFP }}}\) denote an algorithm that takes a group description \(\Lambda \) as input and generates parameters \(P_{{\mathrm{SFP }}}:= (G_z,H_z,H_{u},A,\tilde{A},B,\tilde{B})\) where \(G_z\), \(H_z\), \(H_{u}\) are random generators of \({{\mathbb {G}}}\), and \((A,\tilde{A})\), \((B,\tilde{B})\) are random elements in \({{\mathbb {G}}}\times \tilde{{{\mathbb {G}}}}\). For \(\Lambda \) and \(P_{{\mathrm{SFP }}}\), let \({\mathcal {I}}_{{\mathrm{SFP }}}\) denote the set of tuples \({I}_j=(\tilde{Z}_{j},\tilde{R}_{j},\tilde{U}_{j}, S_{j},\tilde{T}_{j},V_{j},\tilde{W}_{j}) \in \tilde{{{\mathbb {G}}}}^*\times \tilde{{{\mathbb {G}}}}\times \tilde{{{\mathbb {G}}}}\times {{\mathbb {G}}}\times \tilde{{{\mathbb {G}}}}\times {{\mathbb {G}}}\times \tilde{{{\mathbb {G}}}}\) that satisfy

\[ e(A,\tilde{A}) = e(G_z,\tilde{Z}_{j})\, e(G,\tilde{R}_{j})\, e(S_{j},\tilde{T}_{j}) \tag{2} \]

\[ e(B,\tilde{B}) = e(H_z,\tilde{Z}_{j})\, e(H_{u},\tilde{U}_{j})\, e(V_{j},\tilde{W}_{j}) \tag{3} \]

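For \(I_1,\dots ,I_q\), let \(Z(I_1,\dots ,I_q)\) denote the collection of \(\tilde{Z}_j\) in \(I_j\). We say the \(q\)-simultaneous flexible pairing assumption holds relative to \({\mathcal {G}}\) if for any probabilistic polynomial-time adversary \({{\mathcal {A}}}\)

\[ \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ P_{{\mathrm{SFP }}}\leftarrow {\mathcal {G}}_{{\mathrm{SFP }}}(\Lambda );\ I_1,\dots ,I_q\leftarrow {\mathcal {I}}_{{\mathrm{SFP }}};\ I^{\star }=(\tilde{Z}^{\star },\tilde{R}^{\star },\tilde{U}^{\star },S^{\star },\tilde{T}^{\star },V^{\star },\tilde{W}^{\star })\leftarrow {{\mathcal {A}}}(\Lambda ,P_{{\mathrm{SFP }}},I_1,\dots ,I_q) : I^{\star }\in {\mathcal {I}}_{{\mathrm{SFP }}}\ \wedge \ \tilde{Z}^{\star }\notin Z(I_1,\dots ,I_q)\right] \]

is negligible.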

Note that the definition deliberately makes the restriction \(\tilde{Z}\in \tilde{{{\mathbb {G}}}}^*\), since \(\tilde{Z}=1\) would make the problem easily solvable.

To show that the \(q\)-SFP assumption is plausible, we will now prove that it holds in the generic group model, where the adversary only uses the generic bilinear-group operations.

Theorem 1

For any generic algorithm \({\mathcal {A}}\), the probability that \({\mathcal {A}}\) breaks the \(q\)-SFP assumption with \(\ell \) group operations and pairings is bounded by \({\mathcal {O}}(q^2 + \ell ^2)/p\).

Before proving the theorem, we discuss implications of the above bound. In the real computation, the group operation over two elements corresponds to addition of their indices and the pairing operation corresponds to their multiplication. In the generic group model, these indices are simulated by addition and multiplication over variables and formulas. Among the elements initially given to algorithm \({\mathcal {A}}\) there are independent random group elements whose indices are unknown. In the simulation, these unknown indices are treated as independent variables. A group element related to those elements is indexed by a formula that describes the relation. Executions of group operations and pairings are simulated by adding or multiplying the formulas associated with the elements given as inputs to the operations. Since different formulas are supposed to represent different group elements, the simulation becomes inconsistent with the real computation if any two indices represented by different formulas evaluate to the same value when concrete random values are assigned to the variables. The probability of an inconsistent simulation is therefore an upper bound on the success probability of any generic algorithm.

If all the formulas are polynomials, the index of a new group element yielded by a generic operation is a polynomial in the variables. For polynomials of total degree less than \(d\), the bound after \(\ell \) group operations with \(k\) initial group elements is given as \({\mathcal {O}}(d \cdot (\ell ^2+k^2))/p\) by Schwartz’s lemma [87, 88]. Consider the case of DL where the initially given group elements are \(G\) and \(G^x\). The above argument tells us that the index formula is a polynomial of degree 1 and the bound is \({\mathcal {O}}(\ell ^2)/p\). In the case of SDH, the initial elements are \(G, G^x, G^{x^2}, \dots , G^{x^q}\). Thus, the index formula will be a polynomial of degree \(q\), and the bound is \({\mathcal {O}}(q \cdot (\ell ^2 + q^2))/p\). The loss factor of \(q\) can be as large as the number of signatures issued, and hence, the generic security of SDH is considerably weaker than that of DL. On the other hand, as we show in the proof, the indices of the initial input to SFP can be represented by Laurent polynomials of form \(\frac{y}{x}\) with a common variable \(x\) of a small constant degree in the denominator. The formula in the numerator varies for elements, but they remain of degree 1. Accordingly, the index of a new group element is a Laurent polynomial of the same form. By offsetting the common denominator \(x\) and applying Schwartz’s lemma to the resulting regular polynomial of degree 2, we have the bound of \({\mathcal {O}}(\ell ^2 + q^2)/p\), which is close to the optimal bound in DL.
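To get a feeling for the gap between these bounds, the following Python sketch evaluates them for one set of sample parameters. It is a rough illustration only: the constants hidden in the \({\mathcal {O}}\)-notation are ignored, and the values chosen for the group order, the number of operations \(\ell \) and the number of given solutions \(q\) are hypothetical and not taken from the paper.

```python
from math import log2


def log2_bound(numerator, p):
    """Base-2 logarithm of numerator / p, ignoring hidden constants."""
    return log2(numerator) - log2(p)


# Hypothetical sample parameters (assumptions made for illustration).
p = 2 ** 256      # group order
ell = 2 ** 80     # number of generic operations
q = 2 ** 30       # number of given solutions / issued signatures

dl_bound = log2_bound(ell ** 2, p)                      # O(l^2) / p
sdh_bound = log2_bound(q * (ell ** 2 + q ** 2), p)      # O(q (l^2 + q^2)) / p
sfp_bound = log2_bound(ell ** 2 + q ** 2, p)            # O(l^2 + q^2) / p
```

For these sample values, the SFP bound is essentially the same as the DL bound, while the SDH bound is larger by a factor of about \(q\), that is, about 30 bits.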

Proof

Let us without loss of generality assume we are in the symmetric setting where \({{\mathbb {G}}}=\tilde{{{\mathbb {G}}}}\) and \(G=\tilde{G}\). The symmetric setting gives the adversary more freedom, since it is not restricted to treat elements in \({{\mathbb {G}}}\) and elements in \(\tilde{{{\mathbb {G}}}}\) separately, so proving the theorem for the symmetric case automatically yields a proof in the asymmetric case too.

Using the generic group operations, the adversary can compute \(\tilde{Z},\tilde{R},S,\tilde{T},\tilde{U},V,\tilde{W}\) as linear combinations of the \(8+7q\) elements \(G\), \(G_z\), \(H_z\), \(H_{u}\), \(A,\tilde{A}\), \(B,\tilde{B}\), \(\{\tilde{Z}_{j},\tilde{R}_{j}, S_{j},\tilde{T}_{j},\tilde{U}_{j},V_{j},\tilde{W}_{j}\}_{j=1}^q\). Taking discrete logarithms of all the group elements with respect to base \(G\), this means the adversary can compute \(z,r,s,t,u,v,w\) as linear combinations of \(1\), \(g_z,h_z,h_u,a,\tilde{a},b,\tilde{b},\{z_{j},r_{j}, s_{j},t_{j},u_{j},v_{j},w_{j}\}_{j=1}^q\), where

\[ r_{j} = a\tilde{a} - g_z z_{j} - s_{j}t_{j}, \qquad u_{j} = \frac{b\tilde{b} - h_z z_{j} - v_{j}w_{j}}{h_u}. \]

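We will first consider \(z,r,s,t,u,v,w\) as formal Laurent polynomials in \(\mathbb {Z}_p[g_z,h_z,h_u,a,\tilde{a},b,\tilde{b},z_{1},\ldots ,w_{q}]\). This means the adversary picks known coefficients \(\zeta _*\in \mathbb {Z}_p\) and computes

\[ z = \zeta _{g_r} + \zeta _{g_z}g_z + \zeta _{h_z}h_z + \zeta _{h_u}h_u + \zeta _{a}a + \zeta _{\tilde{a}}\tilde{a} + \zeta _{b}b + \zeta _{\tilde{b}}\tilde{b} + \sum _{j=1}^{q}\left( \zeta _{z_{j}}z_{j} + \zeta _{r_{j}}r_{j} + \zeta _{s_{j}}s_{j} + \zeta _{t_{j}}t_{j} + \zeta _{u_{j}}u_{j} + \zeta _{v_{j}}v_{j} + \zeta _{w_{j}}w_{j}\right) \]

and constructs the Laurent polynomials \(r,s,t,u,v,w\) in a similar way with coefficients labeled \(\rho _*,\sigma _*,\tau _*,\mu _*\), etc., respectively.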

Suppose the adversary’s Laurent polynomials satisfy the two verification equations

\[ a\tilde{a} = g_z z + r + st \tag{4} \]

\[ b\tilde{b} = h_z z + h_u u + vw \tag{5} \]

Let EQ3 be the equations obtained by substituting \(z,r,s,t\) in (4) with corresponding Laurent polynomials. We will first show that EQ3 implies \(\sigma _{r_{j}}=0\) for all \(j\). Suppose for contradiction that \(\sigma _{r_{i}}\ne 0\) for some \(i\). The term \(s_{i}^2t_{i}^2\) only appears in the product \(s\cdot t\), so its coefficient \(\sigma _{r_{i}}\tau _{r_{i}}\) must be 0. This means \(\tau _{r_{i}}=0\). Looking at other terms, we see that most of the coefficients in \(t\) are 0, the term \(s_{i}t_{i}a\) for instance has coefficient \(\sigma _{r_{i}}\tau _{a}\) and gives us \(\tau _{a}=0\), and we get that \(t=\tau _{g_z}g_z+\tau _{g_r}\). The coefficients of the \(s_{j}t_{j}\) terms now give us \(\rho _{r_{j}}+\sigma _{r_{j}}\tau _{g_r}=0\) for all \(j\). Putting all this together, we see the right-hand side of EQ3 has coefficient \(0\) for the \(a\tilde{a}\) term. But the left hand side of EQ3 is \(a\tilde{a}\) yielding a contradiction. We conclude \(\sigma _{r_{j}}=0\) for all \(j\) and by symmetry of \(s\) and \(t\), we also get that \(\tau _{r_{j}}=0\) for all \(j\).

If \(\rho _{r_{i}}\ne 0\) for some \(i\), we get without loss of generality from EQ3 that \(\sigma _{s_{i}}\ne 0\) and \(\tau _{t_{i}}\ne 0\). The coefficients of \(s_{i}^2\) and \(t_{i}^2\) in \(s\cdot t\) have to be 0 since these terms do not appear in \(g_z\cdot z\) or \(r\), so we get \(\sigma _{t_{i}}=0\) and \(\tau _{s_{i}}=0\). Looking at coefficients for terms involving \(s_{i}\) in EQ3, we see that most of them must have 0 coefficients. We therefore get \(t=\tau _{g_z}g_z+\tau _{g_r}+\tau _{t_{i}}t_{i}\). Symmetrically, we get \(s=\sigma _{g_z}g_z+\sigma _{g_r}+\sigma _{s_{i}}s_{i}\). The terms of \(s_{j}t_{j}\) for \(j\ne i\) in EQ3 now give us \(\rho _{r_{j}}=0\) for \(j\ne i\). Since the left-hand side of EQ3 has the term \(a\tilde{a}\), we see \(\rho _{r_{i}}=1\). The \(g_zz_{i}\) terms now show us that \(\zeta _{z_{i}}=1\). Additional inspection of the different terms gives us \(z=\zeta _{g_z}g_z+\zeta _{g_r}+z_{i}+\zeta _{s_{i}}s_{i}+\zeta _{t_{i}}t_{i}.\)

We now consider the other possibility that \(\rho _{r_{j}}\) is \(0\) for all \(j\). The term \(a\tilde{a}\) gives us without loss of generality that \(\sigma _{a}\ne 0\) and \(\tau _{\tilde{a}}\ne 0\). The coefficients of \(a^2\) and \(\tilde{a}^2\) show us \(\tau _{a}=0\) and \(\sigma _{\tilde{a}}=0\). Inspecting the other terms, we get \(t=\tau _{g_z}g_z+\tau _{g_r}+\tau _{\tilde{a}}\tilde{a}\) and \(s=\sigma _{g_z}g_z+\sigma _{g_r}+\sigma _{a}a\). It then follows by looking at different terms that \(z=\zeta _{g_z}g_z+\zeta _{g_r}+\zeta _{a}a+\zeta _{\tilde{a}}\tilde{a}.\)

We have now deduced from (4) that \(z=\zeta _{g_z}g_z+\zeta _{g_r}+\zeta _{a}a+\zeta _{\tilde{a}}\tilde{a}\) or \(z=\zeta _{g_z}g_z+\zeta _{g_r}+z_{i}+\zeta _{s_{i}}s_{i}+\zeta _{t_{i}}t_{i}\) for some \(i\). By symmetry, we get from (5) that \(z=\zeta _{h_z}h_z+\zeta _{h_u}h_u+\zeta _{b}b+\zeta _{\tilde{b}}\tilde{b}\) or \(z=\zeta _{h_z}h_z+\zeta _{h_u}h_u+z_{i}+\zeta _{v_{i}}v_{i}+\zeta _{w_{i}}w_{i}\) for some \(i\). The equations can be consistent only if \(z\in \{0,z_1,\ldots ,z_q\}\). Neither of those choices of \(z\) would constitute a successful breach of the assumption, so we conclude that there are no formal Laurent polynomials the adversary can use to violate the assumption.

When instantiating the bilinear groups, we pick \(g_z,h_z,h_u,z_{j}\leftarrow \mathbb {Z}_p^*\) and \(a,\tilde{a},b,\tilde{b},\) \(s_{j},t_{j},v_{j},w_{j}\leftarrow \mathbb {Z}_p\). In a typical run, the adversary would expect group elements corresponding to different Laurent polynomials to be different but there is some probability that this fails; when it fails, the adversary may be able to exploit it to break the assumption. The generic adversary’s probability of success can therefore be bounded by the chance that two different Laurent polynomials collide on random inputs in \(\mathbb {Z}_p\).

Let \(F\) and \(F'\) be two different Laurent polynomials associated with elements in \({{\mathbb {G}}}\) computed by the adversary. By multiplying \(F\) and \(F'\) by \(h_u\), we get two different degree 3 polynomials. The probability of having a collision is therefore bounded by \(\frac{3}{p-1}\) according to Schwartz’s lemma [87]. Having initially \(8 + 7 q\) elements, we get after \(\ell _1\) group operations in \({{\mathbb {G}}}\) an upper bound of

\[ \binom{8+7q+\ell _1}{2}\cdot \frac{3}{p-1} = \frac{{\mathcal {O}}(q^2+\ell _1^2)}{p} \]

for Laurent polynomials for elements in \({{\mathbb {G}}}\) evaluating to the same value.

Using the pairing operation, the adversary gets Laurent polynomials for elements in \({{\mathbb {G}}}_T\) corresponding to products of Laurent polynomials for elements in \({{\mathbb {G}}}\). Multiplying by \(h_u^2\), we get polynomials of degree at most \(6\). The risk of having a collision after \(\ell _T\) pairing operations and group operations in \({{\mathbb {G}}}_T\) is bounded by \(\binom{\ell _T}{2} \cdot \frac{6}{p-1} = \frac{{\mathcal {O}}(\ell _T^2)}{p}\). By setting \(\ell = \ell _1 + \ell _T\), we simplify the sum of the upper bounds to \(\frac{{\mathcal {O}}(q^2 + \ell ^2)}{p}\) as stated in Theorem 1.\(\square \)

Given an answer \((\tilde{Z},\tilde{R},\tilde{U})\) to the SDP problem (Assumption 5), setting \((S, \tilde{T}, V, \tilde{W}) := (A, \tilde{A}, B, \tilde{B})\) results in a correct solution \((\tilde{Z},\tilde{R},\tilde{U},S,\tilde{T},V,\tilde{W})\) to the SFP problem. We thus obtain the following:

Lemma 5

If the \(q\)-SFP assumption (for arbitrary \(q\)) holds relative to \({\mathcal {G}}\), then the SDP assumption holds relative to \({\mathcal {G}}\).

Proof

Suppose that there exists an algorithm \({\mathcal {A}}\) that successfully finds a valid answer \((\tilde{Z}, \tilde{R}, \tilde{U})\) to SDP. We construct an algorithm that breaks SFP as follows. Given an SFP instance \((\Lambda , G_z, H_z, H_{u}, A, \tilde{A}, B, \tilde{B}, {I}_1, \dots , {I}_{q})\), input \((\Lambda ,G_z, H_z, H_{u})\) to \({\mathcal {A}}\). If \({\mathcal {A}}\) outputs \((\tilde{Z}^{\star }, \tilde{R}^{\star }, \tilde{U}^{\star })\) breaking the SDP instance, set \((S^{\star }, \tilde{T}^{\star }, V^{\star }, \tilde{W}^{\star }) := (A, \tilde{A}, B, \tilde{B})\) and output \({I}^{\star }=(\tilde{Z}^{\star },\tilde{R}^{\star },\tilde{U}^{\star },S^{\star },\tilde{T}^{\star },V^{\star },\tilde{W}^{\star })\).

Multiplying both sides of \(e(A,\tilde{A}) = e(S^{\star },\tilde{T}^{\star })\) by \(1 = e(G_z,\tilde{Z}^{\star })\, e(G,\tilde{R}^{\star })\) results in Eq. (2). Similarly, multiplying both sides of \(e(B,\tilde{B}) = e(V^{\star },\tilde{W}^{\star })\) by \(1 = e(H_z,\tilde{Z}^{\star })\,e(H_{u},\tilde{U}^{\star })\) results in Eq. (3). Thus, \(I^{\star }\) satisfies the SFP equations. Since \((\tilde{Z}^{\star },\tilde{R}^{\star },\tilde{U}^{\star })\) is a valid answer to SDP, \(\tilde{Z}^{\star } \ne 1\) holds. Since every \(\tilde{Z}_j\) in \(I_j\) is uniformly chosen and independent of \((G_z, H_z, H_{u}, A, \tilde{A}, B, \tilde{B})\), it is independent of the view of \({\mathcal {A}}\). Thus, \(\tilde{Z}^{\star } = \tilde{Z}_j\) happens only with negligible probability for every \(j\in \{1,\dots ,q\}\). Thus, \(I^{\star }\) is a correct and valid answer to the \(q\)-SFP instance.\(\square \)
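The step from an SDP answer to an SFP answer can be verified numerically in a toy exponent model. The sketch below is an illustration under assumptions: elements are stored as discrete logarithms modulo a small prime `P`, the SDP relations are taken to be \(e(G_z,\tilde{Z})\,e(G,\tilde{R})=1\) and \(e(H_z,\tilde{Z})\,e(H_u,\tilde{U})=1\), and the SFP relations are taken to be the same products multiplied by \(e(S,\tilde{T})\) and \(e(V,\tilde{W})\) and set equal to \(e(A,\tilde{A})\) and \(e(B,\tilde{B})\). The function names and the concrete parameter values are hypothetical.

```python
# Toy exponent model (insecure): elements are stored as discrete logarithms
# modulo P; pairings multiply logarithms; G has logarithm 1.
P = 1_000_003  # small prime standing in for the group order p (assumption)


def inv(x):
    return pow(x, -1, P)  # modular inverse, Python 3.8+


def is_sdp_solution(gz, hz, hu, z, r, u):
    return z != 0 and (gz * z + r) % P == 0 and (hz * z + hu * u) % P == 0


def is_sfp_solution(params, tup):
    gz, hz, hu, a, a_t, b, b_t = params
    z, r, u, s, t, v, w = tup
    first = (a * a_t) % P == (gz * z + r + s * t) % P       # assumed form of (2)
    second = (b * b_t) % P == (hz * z + hu * u + v * w) % P  # assumed form of (3)
    return z != 0 and first and second


params = (3, 5, 7, 11, 13, 17, 19)    # logs of (G_z, H_z, H_u, A, A~, B, B~)
gz, hz, hu, a, a_t, b, b_t = params

# Simulated SDP answer: pick Z, then solve the two relations for R and U.
z = 23
r = (-gz * z) % P
u = (-hz * z * inv(hu)) % P

sdp_ok = is_sdp_solution(gz, hz, hu, z, r, u)
# Extend with (S, T~, V, W~) := (A, A~, B, B~) as in the proof.
sfp_ok = is_sfp_solution(params, (z, r, u, a, a_t, b, b_t))
```

Both checks succeed, which mirrors the argument that the SDP part contributes the factor 1 to each SFP equation.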

Our last assumption is a variant of the \(q\)-strong Diffie–Hellman (SDH) assumption [22]. In [48], it is shown that SDH implies hardness of the following two problems in bilinear groups:

1. Given \(G,G^x\) and \(q-1\) random pairs \((G^\frac{1}{x+c_i},c_i)\), output a new pair \((G^\frac{1}{x+c},c)\).

2. Given \(G,K,G^x\) and, for random \(c_i,v_i\): \(\big ((K\cdot G^{v_i})^\frac{1}{x+c_i},c_i,v_i\big )_{i=1}^{q-1}\), output a new \(((K\cdot G^{v})^\frac{1}{x+c},c,v)\).

Boyen and Waters [29] define the Hidden SDH assumption which states that the first problem is hard when the pairs are substituted with triples of the form \((G^{1/(x+c_i)},G^{c_i},H^{c_i})\), for a fixed generator \(H\); the scalar \(c_i\) is thus “hidden.” Analogously, we define a variant of the second problem by “hiding” the scalars \(c_i\) and \(v_i\), stating that given \(F,G,H,K,G^x,H^x,\big ((K\!\cdot G^{v_i})^\frac{1}{x+ c_i}, F^{c_i}, H^{c_i}, G^{v_i}, H^{v_i} \big )_{i=1}^{q-1}\), it is hard to output a tuple \((A=(K\!\cdot G^{v})^\frac{1}{x+ c}, B=F^{c}, D=H^{c}, V=G^{v}, W=H^{v})\) with \((c,v) \ne (c_i,v_i)\) for all \(i\). Due to the pairing, a tuple can still be effectively verified. The assumption holds in the generic group model for both asymmetric and symmetric groups, and we state it for the former.

Assumption 9

(Asymmetric Double Hidden Strong DH Assumption (\(q\)-ADH-SDH)) Let \({\mathcal {G}}_{{\mathrm{ADH-SDH }}}\) denote an algorithm that takes a group description \(\Lambda \) as input and generates parameters \(P_{{\mathrm{ADH-SDH }}}:= (F, K, X, \tilde{Y}) \in {{\mathbb {G}}}^3\times \tilde{{{\mathbb {G}}}}\) where \(F\), \(K\) and \(X=G^x\) are random generators of \({{\mathbb {G}}}\), and \(\tilde{Y}=\tilde{G}^x\).

For \(\Lambda \) and \(P_{{\mathrm{ADH-SDH }}}\), let \({\mathcal {I}}_{{\mathrm{ADH-SDH }}}\) denote the set of tuples \({I}_i=(A_i,B_i,\tilde{D}_i,V_i,\tilde{W}_i)\in {{\mathbb {G}}}^2\times \tilde{{{\mathbb {G}}}}\times {{\mathbb {G}}}\times \tilde{{{\mathbb {G}}}}\) that satisfy \(\tilde{Y}\cdot \tilde{D}_i \ne 1\) and

\[ e(A_i,\tilde{Y}\cdot \tilde{D}_i) = e(K\cdot V_i,\tilde{G}), \qquad e(B_i,\tilde{G}) = e(F,\tilde{D}_i), \qquad e(V_i,\tilde{G}) = e(G,\tilde{W}_i). \]

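We say the \(q\)-asymmetric double hidden strong Diffie–Hellman assumption holds relative to \({\mathcal {G}}\) if for any probabilistic polynomial-time adversary \({{\mathcal {A}}}\)

\[ \Pr \left[ \Lambda \leftarrow {\mathcal {G}}(1^\lambda );\ P_{{\mathrm{ADH-SDH }}}\leftarrow {\mathcal {G}}_{{\mathrm{ADH-SDH }}}(\Lambda );\ I_1,\dots ,I_{q-1}\leftarrow {\mathcal {I}}_{{\mathrm{ADH-SDH }}};\ I^{\star }\leftarrow {{\mathcal {A}}}(\Lambda ,P_{{\mathrm{ADH-SDH }}},I_1,\dots ,I_{q-1}) : I^{\star }\in {\mathcal {I}}_{{\mathrm{ADH-SDH }}}\ \wedge \ I^{\star }\notin \{I_1,\dots ,I_{q-1}\}\right] \]

is negligible.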

In symmetric bilinear groups (where \({{\mathbb {G}}}= \tilde{{{\mathbb {G}}}}\)), we replace \(\tilde{G}\in \tilde{{{\mathbb {G}}}}\) by a random generator \(H\in {{\mathbb {G}}}\). We prove generic security of the assumption in symmetric groups, which yields a stronger result, as it implies security in asymmetric groups.

Theorem 2

The \(q\)-ADH-SDH assumption holds in generic bilinear groups when \(q\) is a polynomial.

Proof

We prove the symmetric case; therefore, every \(\tilde{G}\) in the statement of Assumption 9 is replaced by a random generator \(H\) of \({{\mathbb {G}}}\). That is, we prove that given \((p,{{\mathbb {G}}},{{\mathbb {G}}}_T,e,G)\) and \((F,K,H,X)\mathop {\leftarrow }\limits ^{{\scriptscriptstyle \$}}{{\mathbb {G}}}^4\) and \(Y:=H^{\log _G X}\), as well as \(q-1\) tuples \((A_i,B_i,D_i,V_i,W_i)\) satisfying

\[ e(A_i,Y\cdot D_i) = e(K\cdot V_i,H), \qquad e(B_i,H) = e(F,D_i), \qquad e(V_i,H) = e(G,W_i), \tag{8} \]

it is hard to generate a new tuple \((A,B,D,V,W)\) satisfying the above.
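How such tuples are formed and verified can be illustrated in a toy exponent model. The sketch below is a hedged illustration, not the authors' construction: elements are stored as discrete logarithms modulo a small prime `P`, and the three verification relations are assumed to be \(e(A,Y\cdot D)=e(K\cdot V,H)\), \(e(B,H)=e(F,D)\) and \(e(V,H)=e(G,W)\), which is the reading suggested by the tuple shape \(((K\cdot G^{v})^{1/(x+c)},F^{c},H^{c},G^{v},H^{v})\) described earlier. The helper names `make_tuple` and `verify` and all numeric values are hypothetical.

```python
# Toy exponent model (insecure): elements are stored as discrete logarithms
# modulo P with respect to G, so G has logarithm 1.
P = 1_000_003  # small prime standing in for the group order p (assumption)


def inv(x):
    return pow(x, -1, P)  # modular inverse, Python 3.8+


def make_tuple(x, f, h, k, c, v):
    """Logs of (A, B, D, V, W) = ((K G^v)^{1/(x+c)}, F^c, H^c, G^v, H^v)."""
    a = (k + v) * inv((x + c) % P) % P
    return (a, c * f % P, c * h % P, v % P, v * h % P)


def verify(y, f, h, k, tup):
    """Check the three assumed pairing equations using public values only."""
    a, b, d, v, w = tup
    eq1 = a * (y + d) % P == (k + v) * h % P   # e(A, Y*D) = e(K*V, H)
    eq2 = b * h % P == f * d % P               # e(B, H) = e(F, D)
    eq3 = v * h % P == w                       # e(V, H) = e(G, W)
    return eq1 and eq2 and eq3


x, f, h, k = 101, 3, 5, 7     # secret x and logs of F, H, K (sample values)
y = x * h % P                 # log of Y = H^x
good = make_tuple(x, f, h, k, c=11, v=13)
valid = verify(y, f, h, k, good)

a, b, d, v, w = good
tampered = verify(y, f, h, k, (a, b, d, (v + 1) % P, w))
```

A correctly formed tuple passes all three checks without the verifier knowing \(x\), \(c\) or \(v\), whereas changing \(V\) alone breaks the consistency between \(V\) and \(W\).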

We follow the approach of [22] in proving the generic security. Every element from \({{\mathbb {G}}}\) and \({{\mathbb {G}}}_T\) is represented by a random string, and the adversary has access to an oracle for group operations in \({{\mathbb {G}}}\) and \({{\mathbb {G}}}_T\) and pairings: Given the representation of two elements \(A,A'\in {{\mathbb {G}}}\), the oracle responds with the representation of \(A\cdot A'\in {{\mathbb {G}}}\), or \(e(A,A')\) respectively, and analogously for \(T,T'\in {{\mathbb {G}}}_T\). Internally, the simulator represents the elements as their logarithms relative to the group generator \(G\) (and \({{\mathbb {G}}}_T\) elements relative to \(e(G,G)\)). When answering queries, it stores the symbolic representation of the returned element as an addition or multiplication of the polynomials for the queried elements. At the end, the simulator chooses random secret values and instantiates all stored polynomials. The simulation is perfect unless two nonidentical polynomials yield the same value. By Schwartz’s lemma [87], since the initial polynomials are of constant degree and the adversary can only make polynomially many queries, this happens with probability negligible in \(\lambda \).

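It remains to show that from a challenge the adversary cannot symbolically compute a new tuple satisfying (8). We represent every group element by its discrete logarithm (index) with respect to \(G\). An ADH-SDH tuple \({I}_i\) that satisfies the equations in (8) can be written as

\[ A_i = (K\cdot G^{v_i})^{\frac{1}{x+c_i}}, \quad B_i = F^{c_i}, \quad D_i = H^{c_i}, \quad V_i = G^{v_i}, \quad W_i = H^{v_i} \tag{9} \]

for some \(c_i\in \mathbb {Z}_p{\setminus }\{-x\}\) and \(v_i \in \mathbb {Z}_p\). Let a lower-case letter denote the index of the group elements denoted by the corresponding upper-case letter. A \(q\)-ADH-SDH instance is thus represented by the following rational fractions:

\[ 1,\ f,\ h,\ k,\ x,\ y=xh,\ \left\{ a_i={\textstyle \frac{k+v_i}{x+c_i}},\ b_i=c_if,\ d_i=c_ih,\ v_i,\ w_i=v_ih\right\} _{i=1}^{q-1} \tag{10} \]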

Let \((A^*,B^*,D^*,V^*,W^*)\) be a solution to this instance, that is, a tuple satisfying the equations in (8). Considering the logarithms of the \({{\mathbb {G}}}_T\)-elements in these equations w.r.t. base \(e(G,G)\) yields

\[ a^*(xh+d^*) = (k+v^*)h, \qquad b^*h = fd^*, \qquad v^*h = w^* \tag{11} \]

In a generic group, all the adversary can do is apply the group operation to the elements of its input. We will show that the only linear combinations \((a^*,b^*,d^*,v^*,w^*)\) of elements in (10) satisfying (11) are \((a^*=a_i={\textstyle \frac{k+v_i}{x+c_i}},b^*=b_i=c_if,d^*=d_i=c_ih,v^*=v_i,w^*=w_i=v_ih)\) for some \(i\). A quintuple from the instance is, however, not a valid solution, meaning a generic adversary cannot break the assumption. We make the following ansatz for \(a^*\):

\[ a^* = \alpha + \alpha _f f + \alpha _h h + \alpha _k k + \alpha _x x + \alpha _y xh + \sum _{i=1}^{q-1}\left( \alpha _{a,i}\frac{k+v_i}{x+c_i} + \alpha _{b,i}c_if + \alpha _{d,i}c_ih + \alpha _{v,i}v_i + \alpha _{w,i}v_ih\right) \]

Analogously, we write \(b^*,d^*,v^*\) and \(w^*\), whose coefficients we denote by \(\beta \), \(\delta \), \(\mu \) and \(\omega \), respectively.

By the last equation of (11) we have that for any \(v^*\) the adversary forms, it has to provide \(w^*=v^*h\) as well. We can therefore limit the elements used for \(v^*\) to those whose product with \(h\) is also given: \(1,x\) and \(v_i\) (for all \(i\)). This yields

\[ v^* = \mu + \mu _x x + \sum _{i=1}^{q-1}\mu _{v,i}v_i, \qquad w^* = \mu h + \mu _x xh + \sum _{i=1}^{q-1}\mu _{v,i}v_ih. \]

Similarly, plugging in the ansätze for \(b^*\) and \(d^*\) in the second equation of (11) and equating coefficients eliminates all of the coefficients except those for \(fh\) (which yields \(\beta _f=\delta _h=:\gamma \)) and those for \(c_ifh\) (which yields \(\beta _{b,i}=\delta _{d,i}=:\gamma _i\)), for all \(i\). We have thus

\[ b^* = \gamma f + \sum _{i=1}^{q-1}\gamma _i c_if, \qquad d^* = \gamma h + \sum _{i=1}^{q-1}\gamma _i c_ih. \]

We substitute \(a^*,d^*,v^*\) by their ansätze in the first equation of (11); that is, \(~a^*(xh+d^*)-v^*h=kh\). Since every term contains \(h\), for convenience we omit one \(h\) per term (i.e., we symbolically “divide” the equation by \(h\)). The first equation of (11) can thus be written as

which after regrouping yields

To do straightforward comparison of coefficients, we would have to multiply the equation by \(\prod _{i=1}^{q-1}(x+c_i)\) first. For the sake of presentation, we keep the fractions and instead introduce new equations for the cases where a linear combination leads to a fraction that cancels down.

Now, comparison of coefficients of the two sides of the above equation shows that all coefficients in lines (12a)–(12e) must be 0, whereas for the last line we have a different situation: Adding \(\frac{x(k+v_i)}{x+c_i}\) and \(\frac{c_i(k+v_i)}{x+c_i}\) reduces to \(k+v_i\) (but this is the only combination that reduces); we have thus

We now solve the equations “all coefficients in Lines (12a) to (12e) equal 0,” and Eqs. (13) and (14) for the values \(\big (\alpha ,\alpha _f, \alpha _h,\alpha _k,\alpha _x,\alpha _y,\gamma ,\mu ,\mu _x,\{\alpha _{a,i},\alpha _{b,i}, \alpha _{d,i},\) \(\alpha _{v,i},\alpha _{w,i},\gamma _{i},\mu _{v,i}\}\big )\).

The first four terms and the last term in Line (12c) and the first two terms in Line (12d) immediately yield: \(\alpha _f=\alpha _k=\alpha _x=\alpha _y=\alpha _{b,i}=\alpha _{v,i}=\alpha _{w,i}=0\) for all \(i\). Now \(\alpha _y=0\) implies \(\alpha _h=0\) by the last term in (12a), and moreover, \(\alpha _{d,i}=0\) for all \(i\) by the fifth term in (12c). Plugging in these values, the only equations different from “\(0=0\)” are the following:

where the second equation in (15), denoted by “(15.2)”, follows from the fourth term in (12a) and \(\alpha _x=0\). (16.1) and (16.2) follow from the first term in (12b) and the third term in (12d), respectively. Equations (17) are the equations in (13); those in (18) are the ones from (14) taking into account that \(\alpha _k=0\) and \(\alpha _{v,i}=0\) for all \(i\). The variables not yet proved to be 0 are \(\alpha ,\gamma ,\mu ,\mu _x,\alpha _{a,i},\gamma _{i}\) and \(\mu _{v,i}\) for \(1\le i\le q-1\).

We first show that there exists \(i^*\in \{1,\ldots ,q-1\}\) such that \(\alpha _{a,j}=0\) for all \(j\ne i^*\): Assume there exist \(i\ne j\) such that \(\alpha _{a,i}\ne 0\) and \(\alpha _{a,j}\ne 0\); then by (17.1), we have \(\gamma _{i}=\gamma _{j}=1\), which contradicts (17.2).

This result implies the following: by (18.1), we have \(\alpha _{a,i^*}=1\), and by (17.1), we have \(\gamma _{i^*}=1\), whereas for all \(j\ne i^*\): \(\gamma _{j}=0\) by (17.2). We have thus shown that \(\alpha _{a,i^*}=\gamma _{i^*}=1\) and \(\alpha _{a,j}=\gamma _{j}=0\) for all \(j\ne i^*\).

This now implies \(\alpha =0\) by (16.2), and thus \(\mu =\mu _x=0\) by (15.1) and (15.2), respectively. Moreover, \(\gamma =0\) by (16.1), and for all \(i\): \(\alpha _{a,i}=\mu _{v,i}\) by (18.2). The only nonzero variables are thus \(\alpha _{a,i^*}=\gamma _{i^*}=\mu _{v,i^*}=1\).

Plugging our results into the ansätze for \(a^*,b^*,d^*,v^*\) and \(w^*\), we have proved that there exists \(i^*\in \{1,\ldots ,q-1\}\) such that \(a^*=\frac{k+v_{i^*}}{x+c_{i^*}}\), \(b^*=c_{i^*}f\), \(d^*=c_{i^*}h\), \(v^*=v_{i^*}\) and \(w^*=v_{i^*}h\). This means that the only tuples \((A^*,B^*,D^*,V^*,W^*)\) that satisfy (8) and are generically constructible from an ADH-SDH instance are the tuples from that instance, which concludes our proof of generic security of ADH-SDH. \(\square \)
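As a numerical sanity check of this conclusion, the following sketch verifies that a tuple taken from the instance satisfies the first equation of (11), \(a^*(xh+d^*)-v^*h=kh\), and that the two fractions singled out in the coefficient comparison indeed add up to \(k+v_i\). It works with exponents modulo a small prime; the prime `P` and all function names are illustrative assumptions and do not appear in the paper.

```python
P = 1_000_003  # small prime standing in for the group order p (assumption)


def inv(z):
    """Modular inverse in Z_P; z must be nonzero modulo P."""
    return pow(z, -1, P)


def instance_tuple(k, x, h, c, v):
    """Exponents (a*, d*, v*) of a tuple taken from the instance:
    a* = (k + v)/(x + c), d* = c*h, v* = v."""
    a = (k + v) * inv(x + c) % P
    d = c * h % P
    return a, d, v % P


def first_equation_holds(k, x, h, a, d, v):
    """Check a*(x*h + d*) - v*h = k*h over Z_P."""
    return (a * (x * h + d) - v * h) % P == k * h % P


def fraction_sum(k, x, c, v):
    """x(k+v)/(x+c) + c(k+v)/(x+c), which should reduce to k + v."""
    f = (k + v) * inv(x + c)
    return (x * f + c * f) % P
```

A tuple whose \(a^*\) is shifted by any nonzero amount fails the check, which matches the claim that only instance tuples survive.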

2.6 The Groth–Sahai Proof System for Pairing-Product Equations

The Groth–Sahai (GS) proof system [59] gives efficient non-interactive witness-indistinguishable (NIWI) proofs and non-interactive zero-knowledge (NIZK) proofs for languages that can be described as sets of satisfiable equations, each of which falls into one of the following categories: pairing-product equations, multi-exponentiation equations and general arithmetic gates. The GS proof system can be instantiated under different assumptions: for instance, in the asymmetric setting under the SXDH assumption, which says that DDH holds in both \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\), and in the symmetric setting under the DLIN assumption.

In GS proofs, there are two types of common reference string (CRS), which are computationally indistinguishable. One type is called “real” and gives perfect soundness and allows extraction of the group elements of a witness given a secret extraction key that is set up together with the CRS. The second type is called “simulated” and yields perfectly witness-indistinguishable proofs, which are also zero knowledge for some types of equations. When proving a statement, described as a set of equations, one first commits to the witness components and then produces elements for each equation that prove the corresponding committed values satisfy the equation.

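Of the types of equations the GS proof system supports, we are mainly interested in pairing-product equations over variables \(X_1,\ldots ,X_m\in {{\mathbb {G}}}\) and \(\tilde{Y}_1,\ldots ,\tilde{Y}_n\in \tilde{{{\mathbb {G}}}}\):

$$\begin{aligned} \prod _{i=1}^{n} e(A_i,\tilde{Y}_i)\, \prod _{i=1}^{m} e(X_i,\tilde{B}_i)\, \prod _{i=1}^{m}\prod _{j=1}^{n} e(X_i,\tilde{Y}_j)^{c_{i,j}} = T, \end{aligned}$$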

where \(\{A_i\}_{i=1}^n \in {{\mathbb {G}}}^n\), \(\{\tilde{B}_i\}_{i=1}^m \in \tilde{{{\mathbb {G}}}}^m\), \(\{c_{i,j}\}_{i=1,j=1}^{\;m,\;\;n}\in \mathbb {Z}_p\), and \(T\in {{\mathbb {G}}}_T\) are public constants. When the equations involve variables only in one of the groups, we get simpler, one-sided equations \(\prod _{i=1}^n e(A_i,\tilde{Y}_i)=T\) or \(\prod _{i=1}^m e(X_i,\tilde{B}_i)=T\), which also yield more efficient proofs.
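To make the shape of such an equation concrete, here is a minimal sketch that evaluates the left-hand side of a pairing-product equation in a toy model. It assumes the standard GS form, namely the product of \(e(A_i,\tilde{Y}_i)\), \(e(X_i,\tilde{B}_i)\) and \(e(X_i,\tilde{Y}_j)^{c_{i,j}}\), and represents every group element by its discrete logarithm modulo a small prime, so that a pairing becomes a product of exponents. The prime `P` and the function name are assumptions made for illustration; the model is of course insecure.

```python
P = 1_000_003  # toy prime group order (assumption)


def ppe_lhs(A, B, c, X, Y):
    """Discrete log (in G_T) of
    prod_i e(A_i, Y_i) * prod_i e(X_i, B_i) * prod_{i,j} e(X_i, Y_j)^c[i][j].

    A has n entries, B has m entries, c is an m-by-n matrix,
    X holds the m variables in G and Y the n variables in G~.
    """
    m, n = len(X), len(Y)
    assert len(A) == n and len(B) == m
    total = sum(a * y for a, y in zip(A, Y))
    total += sum(x * b for x, b in zip(X, B))
    total += sum(c[i][j] * X[i] * Y[j] for i in range(m) for j in range(n))
    return total % P


def ppe_holds(A, B, c, X, Y, T):
    """True if the assignment (X, Y) satisfies the equation with target T."""
    return ppe_lhs(A, B, c, X, Y) == T % P
```

Passing empty lists for `X`, `B` and `c` gives the one-sided equation \(\prod _{i=1}^n e(A_i,\tilde{Y}_i)=T\).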

In the SXDH instantiation, a commitment to a group element consists of two group elements and a proof for a pairing-product equation costs 8 group elements. In the symmetric DLIN instantiation, a commitment consists of 3 group elements and a proof consists of 9 group elements. For linear pairing-product equations, the size of a proof drops to 2 and 3 group elements in the SXDH and the DLIN settings, respectively.
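The element counts above can be turned into a quick size estimate for a full proof of knowledge (commitments plus per-equation proofs). The sketch below only tallies group elements using the figures quoted in this section; the class and function names are hypothetical, and the tally ignores that elements of \({{\mathbb {G}}}\) and \(\tilde{{{\mathbb {G}}}}\) may have different bit lengths.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GSCost:
    """Group elements per commitment and per proof, as quoted in the text."""
    commitment: int
    proof: int          # general pairing-product equation
    linear_proof: int   # linear pairing-product equation


SXDH = GSCost(commitment=2, proof=8, linear_proof=2)
DLIN = GSCost(commitment=3, proof=9, linear_proof=3)


def proof_of_knowledge_size(cost, n_vars, n_general, n_linear):
    """Total group elements: one commitment per committed variable
    plus one proof per equation."""
    return (cost.commitment * n_vars
            + cost.proof * n_general
            + cost.linear_proof * n_linear)
```

For example, a statement with three committed variables, one general equation and two linear equations comes to 18 group elements under SXDH and 24 under DLIN.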

The GS proof system is witness indistinguishable when \(T \in {{\mathbb {G}}}_T\) is an element in the target group without some particular structure. If for each equation \(T=1\), possibly after some rewriting of equations, the GS proof system becomes zero knowledge.

Note that in this context the word proof can denote either a proof of satisfiability or language membership (which thus includes the commitments) or a proof that the content of some given commitments satisfies a given equation. We adopt the latter usage and say proof of knowledge when we include the commitments. Please refer to [59] for further details.

2.7 Pairing-Randomization Techniques

We introduce techniques that randomize elements in a pairing or a pairing product without changing their value in \({{\mathbb {G}}}_T\). Not all of them are used in this paper, but they do have applications, e.g., [7].

- Inner Randomization \((X',Y') \leftarrow \mathsf{Rand }(X,Y)\): A pairing \(A = e(X, Y) \ne 1\) is randomized as follows. Choose \(\gamma \leftarrow \mathbb {Z}_p^*\) and let \((X', Y') =( X^\gamma , Y^{1/\gamma })\). Then \((X', Y')\) is uniformly distributed over \({{\mathbb {G}}}\times \tilde{{{\mathbb {G}}}}\) conditioned on \(A = e(X', Y')\). If \(A=1\), then first flip a coin and pick \(e(1,1)\) with probability \(1/(2p-1)\). Otherwise, select \(X'\ne 1\) uniformly, and output either \(e(1,X')\) or \(e(X',1)\) with probability \(1/2\).

- Sequential Randomization \(\{X'_i,Y'_i\}_{i=1}^{{k}} \leftarrow \mathsf{RandSeq }(\{X_i,Y_i\}_{i=1}^{{k}})\): A pairing product \(A = e(X_1, Y_1) \cdots e(X_{k}, Y_{k})\) is randomized into \(A = e(X'_1, Y'_1) \cdots e(X'_{k}, Y'_{k})\) as follows: Pick \((\gamma _1, \dots , \gamma _{k-1}) \leftarrow \mathbb {Z}_p^{{k}-1}\). We begin with randomizing the first pairing using the second pairing as follows. First, verify that \(Y_1 \ne 1\) and \(X_2 \ne 1\). If \(Y_1 = 1\), replace the first pairing \(e(X_1, 1)\) with \(e(1, Y_1)\) with a new random \(Y_1 (\ne 1)\). The case of \(X_2 = 1\) is handled the same way. Then, multiply \(1 = e(X_2^{-\gamma _1}, Y_1)\, e(X_2, Y_1^{\gamma _1})\) to both sides of the formula. We thus obtain

  $$\begin{aligned} A = e(X_1 X_2^{-\gamma _1}, Y_1)\, e(X_2, Y_1^{\gamma _1} Y_2)\, e(X_3, Y_3) \cdots e(X_{k}, Y_{k}). \end{aligned}\tag{19}$$

  Next, we randomize the second pairing using the third one. As before, if \(Y_1^{\gamma _1} Y_2=1\) or \(X_3=1\), replace them with random values. Then, multiply \(1 = e(X_3^{-\gamma _2}, Y_1^{\gamma _1} Y_2)\, e(X_3, (Y_1^{\gamma _1} Y_2)^{\gamma _2})\). We thus have

  $$\begin{aligned} A = e(X_1 X_2^{-\gamma _1}, Y_1)\, e(X_2 X_3^{-\gamma _2}, Y_1^{\gamma _1} Y_2)\, e(X_3, (Y_1^{\gamma _1} Y_2)^{\gamma _2} Y_3) \cdots e(X_{k}, Y_{k}). \end{aligned}\tag{20}$$

  This continues up to the \(({k}-1)\)-th pairing. When done, the value of the \(i\)-th pairing is uniformly distributed in \({{\mathbb {G}}}_T\) due to the uniform choice of \(\gamma _i\). The \(k\)-th pairing follows the distribution determined by \(A\) and the preceding \({k}-1\) pairings. Finally, process every pairing by the inner randomization.

- Extension \(\{X'_i,Y'_i\}_{i=1}^{{k}'} \leftarrow \mathsf{Extend }(\{X_i,Y_i\}_{i=1}^{{k}})\): For \({k}' \ge {k}\), a pairing product \(A = e(X_1, Y_1) \cdots e(X_{k}, Y_{k})\) is randomized to \(A = e(X'_1, Y'_1) \cdots e(X'_{{k}'}, Y'_{{k}'})\) as follows: For \(i\in \{{k}+1,\ldots ,{k}'\}\), let \(X_i = 1\) and \(Y_i = 1\). Then, execute \(\{X'_i,Y'_i\}_{i=1}^{{k}'} \leftarrow \mathsf{RandSeq }(\{X_i,Y_i\}_{i=1}^{{k}'})\) and output \(\{X'_i,Y'_i\}_{i=1}^{{k}'}\).

- One-side Randomization \(\{X'_i\}_{i=1}^{{k}} \leftarrow \mathsf{RandOneSide }(\{G_i,X_i\}_{i=1}^{{k}})\): This algorithm works only in the symmetric setting \(\Lambda _{\mathsf{sym }}\). Let \(G_i\) be an element in \({{\mathbb {G}}}^*\). A pairing product \(A = e(G_1, X_1) \cdots e(G_k, X_k)\) is randomized into \(A = e(G_1, X'_1)\cdots e(G_k, X'_k)\) as follows. Pick \((\gamma _1, \dots , \gamma _{{k}-1}) \leftarrow \mathbb {Z}_p^{{k}-1}\) and multiply \(1 = e(G_1, G_2^{\gamma _1})\, e(G_2, G_1^{-\gamma _1})\) to both sides of the formula. We thus obtain

  $$\begin{aligned} A = e(G_1, X_1 G_2^{\gamma _1})\, e(G_2, X_2 G_1^{-\gamma _1})\, e(G_3, X_3) \cdots e(G_{k}, X_{k}). \end{aligned}\tag{21}$$

  Next, multiply \(1 = e(G_2, G_3^{\gamma _2})\, e(G_3, G_2^{-\gamma _2})\), which yields

  $$\begin{aligned} A = e(G_1, X_1 G_2^{\gamma _1})\, e(G_2, X_2 G_1^{-\gamma _1} G_3^{\gamma _2})\, e(G_3, X_3 G_2^{-\gamma _2}) \cdots e(G_{k}, X_{k}). \end{aligned}\tag{22}$$

  Continue until \(\gamma _{{k}-1}\), so we eventually have \(A = e(G_1, X'_1) \cdots e(G_k, X'_k)\). Observe that every \(X'_i\) for \(i=1,\dots ,{k}-1\) is distributed uniformly in \({{\mathbb {G}}}\) due to the uniform multiplicative factor \(G_{i+1}^{\gamma _i}\). In the \({k}\)-th pairing, \(X'_k\) follows the distribution determined by \(A\) and the preceding \({k}-1\) pairings. Thus, \((X'_1,\dots , X'_{k})\) is uniform over \({{\mathbb {G}}}^{k}\) conditioned on being evaluated to \(A\).

Note that the algorithms yield uniform elements and thus may include pairings that evaluate to \(1_{{{\mathbb {G}}}_T}\). If this is not preferable, it can be avoided by repeating that particular step once again excluding the bad randomness.
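The four techniques can be checked for value preservation in a toy model. The sketch below is an illustration only: it assumes that every group element is represented by its discrete logarithm modulo a small prime `P` (identity = 0), so that multiplication in the group becomes addition, exponentiation becomes multiplication, and \(e(X,Y)\) becomes the product of the two exponents. The prime and all function names are assumptions; nothing here is secure or taken from the paper's implementation.

```python
import random

P = 1_000_003  # toy prime group order (assumption)


def product(pairs):
    """Discrete log of e(X_1, Y_1) ... e(X_k, Y_k)."""
    return sum(x * y for x, y in pairs) % P


def nonzero():
    """Uniform exponent of a non-identity element."""
    return random.randrange(1, P)


def rand_inner(x, y):
    """Inner randomization of a single pairing e(X, Y)."""
    if x * y % P != 0:                      # A != 1: (X^g, Y^(1/g))
        g = nonzero()
        return x * g % P, y * pow(g, -1, P) % P
    if random.randrange(2 * P - 1) == 0:    # A = 1: e(1, 1) w.p. 1/(2p-1)
        return 0, 0
    t = nonzero()                           # otherwise e(1, X') or e(X', 1)
    return (0, t) if random.randrange(2) else (t, 0)


def rand_seq(pairs):
    """Sequential randomization following Eqs. (19) and (20)."""
    xs = [x % P for x, _ in pairs]
    ys = [y % P for _, y in pairs]
    for i in range(len(xs) - 1):
        if ys[i] == 0:        # e(X_i, 1) -> e(1, Y_i) with fresh Y_i != 1
            xs[i], ys[i] = 0, nonzero()
        if xs[i + 1] == 0:    # e(1, Y_{i+1}) -> e(X_{i+1}, 1), fresh X_{i+1}
            xs[i + 1], ys[i + 1] = nonzero(), 0
        g = random.randrange(P)
        xs[i] = (xs[i] - g * xs[i + 1]) % P          # X_i * X_{i+1}^(-g)
        ys[i + 1] = (ys[i + 1] + g * ys[i]) % P      # Y_i^g * Y_{i+1}
    return [rand_inner(x, y) for x, y in zip(xs, ys)]


def extend(pairs, k_new):
    """Pad with trivial pairings e(1, 1) up to k_new, then randomize."""
    assert k_new >= len(pairs)
    return rand_seq(list(pairs) + [(0, 0)] * (k_new - len(pairs)))


def rand_one_side(gs, xs):
    """One-side randomization (symmetric setting), Eqs. (21) and (22)."""
    xs = [x % P for x in xs]
    for i in range(len(xs) - 1):
        g = random.randrange(P)
        xs[i] = (xs[i] + g * gs[i + 1]) % P          # X_i * G_{i+1}^g
        xs[i + 1] = (xs[i + 1] - g * gs[i]) % P      # X_{i+1} * G_i^(-g)
    return xs
```

In every case `product` returns the same value before and after randomization, whatever random choices are made, which is exactly the invariant \(A\) stated for each technique.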

3 Homomorphic Trapdoor Commitments

Homomorphic trapdoor commitments are used in a number of contexts, in particular as a building block in zero-knowledge proofs. An example of a frequently used scheme is that of Pedersen [83] that can be used to commit to elements from the field \(\mathbb {Z}_p\).

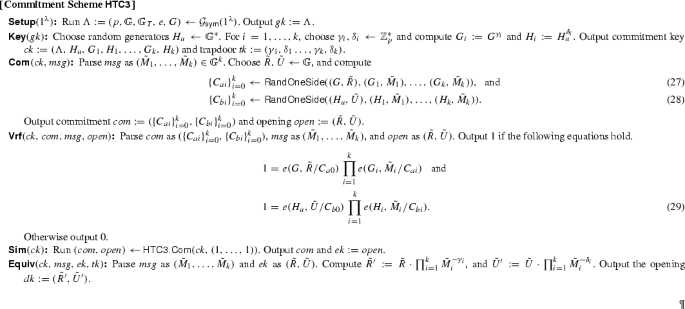

Our goal in this section is to construct homomorphic trapdoor commitment schemes for group elements. We will construct both strict structure-preserving commitments, where messages, commitments and openings are all elements of the source groups \({{\mathbb {G}}}, \tilde{{{\mathbb {G}}}}\), and relaxed structure-preserving commitments, where we allow the commitments to contain elements from the target group \({{\mathbb {G}}}_T\).

In [9], it is shown that when committing with source-group elements, the size of the commitment cannot be smaller than the size of the input message. The strict structure-preserving commitments therefore grow linearly with the number of group elements in the messages. In contrast, we also show that in the relaxed structure-preserving setting it is possible to get constant-size commitments for messages containing many group elements.

Table 1 summarizes the performance of structure-preserving homomorphic trapdoor commitment schemes from this section and the existing ones from [9, 38].

3.1 Commitments Using Target-Group Elements

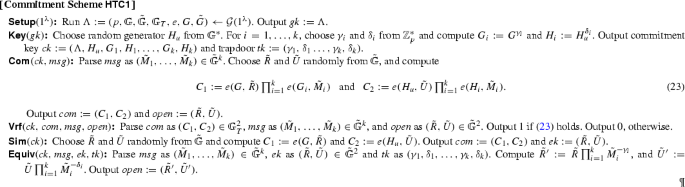

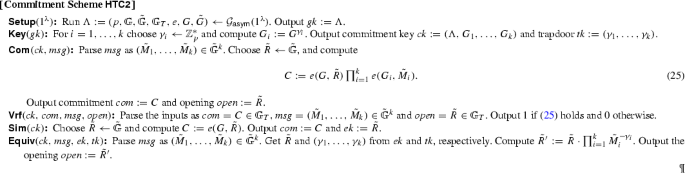

This section includes two homomorphic trapdoor commitment schemes whose commitments consist of elements in \({{\mathbb {G}}}_T\). The first scheme, \(\mathsf{HTC{1} } \), works in both symmetric and asymmetric groups and can be seen as an optimization of the scheme we first presented in [56] with a simpler assumption. The second scheme, \(\mathsf{HTC{2} } \), only works in the asymmetric setting but is more efficient in exchange.

Both schemes can be used to commit to \(k\) group elements in \(\tilde{{{\mathbb {G}}}}\) at once. They are inspired by the Pedersen commitment scheme, but use pairings instead of exponentiations. The use of the pairing means that we commit to source-group elements, but the final commitment consists of elements of the target group.

Theorem 3

\(\mathsf{HTC{1} } \) is a homomorphic trapdoor commitment scheme. It is perfectly trapdoor and computationally binding if the SDP assumption holds in \({{\mathbb {G}}}\) relative to \({\mathcal {G}}\).

Proof

Correctness trivially holds by construction.

To see that the scheme is homomorphic, observe that

This means \((C_1 C'_1, C_2 C'_2)\) is a valid commitment to \((\tilde{M}_1 \tilde{M}'_1,\cdots ,\tilde{M}_k \tilde{M}'_k)\) that can be opened with \((\tilde{R}\tilde{R}', \tilde{U}\tilde{U}')\).

The commitment scheme is perfectly hiding because the components \(e(G_{r},\tilde{R})\) and \(e(H_{u},\tilde{U})\) make \(C_1\) and \(C_2\) uniformly random regardless of the message. The scheme is also perfectly trapdoor. To see this, observe that for any \((\tilde{M}_1,\dots ,\tilde{M}_k)\) and any \((C_1,C_2,\tilde{R},\tilde{U})\) and \((\tilde{R}', \tilde{U}')\) legitimately generated by \(\mathsf{HTC{1} }.\mathsf{Sim } \) and \(\mathsf{HTC{1} } \mathsf{.Equiv } \), respectively, it holds that

Thus, \(\mathsf{HTC{1} }.\mathsf{Vrf } \) accepts \((\tilde{M}_1,\dots ,\tilde{M}_k)\) and \((\tilde{R}',\tilde{U}')\) as a correct opening for \((C_1,C_2)\). Moreover, since \(C_1,C_2\) are uniformly random and the commitments and messages uniquely determine the openings, real commitments and openings have the same probability distribution as do simulated commitments and trapdoor openings.

Finally, we will show that \(\mathsf{HTC{1} } \) is computationally binding. Suppose that there exists an adversary that successfully opens a commitment to two distinct messages. We show that one can break SDP by using such an adversary. Given an SDP challenge \((\Lambda , H_{u}, G_z, H_z)\), do as follows.

- Set \(G_i:=G_z^{\chi _i} G^{\gamma _i}\) and \(H_i:= H_z^{\chi _i} H_{u}^{\delta _i}\) for \(i=1,\dots ,{k}\). Abort if \(G_i=1\) or \(H_i=1\) for any \(i\). Otherwise, run the adversary on \(ck = (\Lambda ,H_{u},G_1,H_1,\ldots ,G_k,H_k)\).

- Given two openings \((\tilde{M}_1,\dots ,\tilde{M}_k, \tilde{R}, \tilde{U})\) and \((\tilde{M}'_1,\dots ,\tilde{M}'_k, \tilde{R}', \tilde{U}')\) from the adversary that yield the same commitment \((C_1,C_2)\), compute

  $$\begin{aligned} \tilde{Z}^{\star } := \prod _{i=1}^{{k}} \Big (\frac{\tilde{M}_i}{\tilde{M}'_i}\Big )^{\chi _i}, \quad \tilde{R}^{\star } := \frac{\tilde{R}}{\tilde{R}'} \prod _{i=1}^{{k}} \Big (\frac{\tilde{M}_i}{\tilde{M}'_i}\Big )^{\gamma _i}, \quad \tilde{U}^{\star } := \frac{\tilde{U}}{\tilde{U}'} \prod _{i=1}^{{k}} \Big (\frac{\tilde{M}_i}{\tilde{M}'_i} \Big )^{\delta _i}. \end{aligned}\tag{24}$$

- Output \((\tilde{Z}^{\star }, \tilde{R}^{\star }, \tilde{U}^{\star })\).
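The algebra of these steps can be replayed in a toy model. The sketch below rests on two assumptions that are not spelled out in the text above: that a commitment has the Pedersen-like form \(C_1 = e(G,\tilde{R})\prod _i e(G_i,\tilde{M}_i)\), \(C_2 = e(H_u,\tilde{U})\prod _i e(H_i,\tilde{M}_i)\), and that an SDP solution satisfies \(e(G_z,\tilde{Z})\,e(G,\tilde{R}) = 1\) and \(e(H_z,\tilde{Z})\,e(H_u,\tilde{U}) = 1\). Group elements are represented by discrete logarithms modulo a small prime `P`, and the helper `equivocate` uses those logarithms to fabricate a second opening, which a real adversary could not do this way. All names are hypothetical.

```python
P = 1_000_003  # toy prime group order (assumption)


def commit(g, h_u, Gs, Hs, Ms, r, u):
    """Assumed commitment form, in exponents: (C_1, C_2)."""
    c1 = (g * r + sum(gi * m for gi, m in zip(Gs, Ms))) % P
    c2 = (h_u * u + sum(hi * m for hi, m in zip(Hs, Ms))) % P
    return c1, c2


def embed_challenge(g, h_u, g_z, h_z, chi, gam, dlt):
    """First step: G_i = G_z^chi_i * G^gam_i, H_i = H_z^chi_i * H_u^dlt_i."""
    Gs = [(g_z * c + g * a) % P for c, a in zip(chi, gam)]
    Hs = [(h_z * c + h_u * d) % P for c, d in zip(chi, dlt)]
    if 0 in Gs or 0 in Hs:
        raise ValueError("abort: some G_i or H_i is the identity")
    return Gs, Hs


def equivocate(g, h_u, Gs, Hs, Ms, r, u, Ms2):
    """Toy-model only: second opening (r', u') for Ms2, found via dlogs."""
    diff = [(m - m2) % P for m, m2 in zip(Ms, Ms2)]
    r2 = (r + sum(gi * d for gi, d in zip(Gs, diff)) * pow(g, -1, P)) % P
    u2 = (u + sum(hi * d for hi, d in zip(Hs, diff)) * pow(h_u, -1, P)) % P
    return r2, u2


def extract(Ms, r, u, Ms2, r2, u2, chi, gam, dlt):
    """Second step, Eq. (24), written additively in the exponents."""
    diff = [(m - m2) % P for m, m2 in zip(Ms, Ms2)]
    z = sum(c * d for c, d in zip(chi, diff)) % P
    r_star = (r - r2 + sum(a * d for a, d in zip(gam, diff))) % P
    u_star = (u - u2 + sum(b * d for b, d in zip(dlt, diff))) % P
    return z, r_star, u_star


def sdp_holds(g, h_u, g_z, h_z, z, r, u):
    """Assumed SDP relation: both pairing products equal 1."""
    return (g_z * z + g * r) % P == 0 and (h_z * z + h_u * u) % P == 0
```

Whenever two openings yield the same commitment, the extracted triple satisfies the assumed SDP relation, because the \(\chi _i\), \(\gamma _i\) and \(\delta _i\) terms regroup into the difference of the two commitments.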