Abstract

We present the first tight security proofs for two general classes of Strong RSA (SRSA) based signature schemes. Among the covered signature schemes are the signature schemes by Cramer–Shoup, Zhu, Fischlin, and the SRSA-based Camenisch–Lysyanskaya scheme with slightly modified parameter sizes. We also present two variants of our signature classes in bilinear groups that output very short signatures. As in the SRSA case, these variants have tight security proofs, here under the Strong Diffie–Hellman (SDH) assumption. We thus obtain very efficient SDH-based variants of the Cramer–Shoup, Fischlin, and Zhu signature schemes and the first tight security proof for the recent Camenisch–Lysyanskaya scheme that was proposed and proven secure under the SDH assumption. Central to our results is a new proof technique that allows the simulator to avoid guessing which of the attacker’s signature queries will be reused in the forgery. In contrast to previous proofs, our security reduction does not lose a factor of q here, where q is the number of signature queries made by the adversary.

1 Introduction

Provable Security and Tight Reductions

The central idea of provable security is to design a cryptographic scheme in such a way that if an attacker \(\mathcal {A}\) could efficiently break its security properties, then one can also construct an efficient algorithm \(\mathcal {B}\) that breaks a supposedly hard problem. In this way, we prove the security of the scheme by reduction to the hardness assumption. If \(\mathcal {B}\) has almost the same success probability as \(\mathcal {A}\) while running in roughly the same time, we say that the security reduction is tight. Otherwise, the security reduction is said to be loose. Of course, any scheme is trivially secure under the sole assumption that it is hard to break. However, cryptographers are particularly interested in security reductions where a (complex) scheme whose security is defined via an interactive security game is shown to be secure under non-interactive security assumptions only (e.g. under the well-known factoring assumption). This is because non-interactive security assumptions can be analyzed much better than interactive ones.

Motivation

Cryptographers are interested in tight security proofs as they allow for shorter security parameters and better efficiency. This paper was also motivated by the observation that for several of the existing Strong RSA (SRSA) based signature schemes without random oracles we do not know if tight security proofs exist. Those schemes that are known to have a tight security proof also have some limitations concerning practicability (limitations that the signature schemes with a loose security reduction do not share). For example, in some schemes the signing algorithms injectively map messages to primes. Since prime generation depends on the input message, these schemes cannot pre-compute primes in times of relatively low workload. As prime generation is one of the most costly operations in these schemes, their reaction time is considerably higher than in comparable schemes that allow primes to be pre-computed. In 2007, Chevallier-Mames and Joye addressed this problem in the following way [7]: they took a tightly secure signature scheme, the Gennaro–Halevi–Rabin (GHR) scheme [11], and improved its efficiency by re-designing one of its most time-consuming functions. Basically, they introduced a new method to map messages to primes: the signer chooses a random prime and uses a chameleon hash function to map the input message to that prime. This method is much more efficient in the verification process than the original method from [11]. In this way, primes can be pre-computed and the reaction time of the signer can be lowered significantly. Unfortunately, their approach also has a serious disadvantage, as the primes computed in this way are much larger than in the original GHR scheme. In addition to that, there is also a general disadvantage when improving a scheme’s efficiency (or that of its security reduction) by modifying its algorithms: the resulting efficiency benefits only apply to new implementations. Existing implementations (of the original scheme) are not affected.
In contrast, as security reductions are merely thought experiments (that do not actually have to be implemented), improving the tightness of a scheme’s security reduction benefits both existing and new implementations. In this paper, we therefore take the same approach as Bernstein at EUROCRYPT ’08 who proved tight security for the original Rabin–Williams signature scheme in the random-oracle model [2]. In contrast to Bernstein, we concentrate on schemes that are secure in the standard model.

Contribution

In this work, we address the following question: are there tight security proofs for the existing, practical signature schemes by Cramer–Shoup [9], Zhu [24], Camenisch–Lysyanskaya [4] and Fischlin [10] (which we only know to have loose security reductions)? We answer this question in the affirmative and present the first tight proofs for the above signature schemes. However, our result is not limited to the original schemes. In our analysis, we generalize the schemes by Camenisch–Lysyanskaya (with slightly modified parameter lengths), Fischlin and Zhu by introducing a new family of randomization functions, called combining functions. The result of this generalization is an abstract signature scheme termed ‘combining scheme’. Signatures always consist of three components, a random value r, a random prime e, and an integer s such that

$$\begin{aligned} s^{e}=uv^{r}w^{z(r,m)} \bmod n \end{aligned}$$

for some RSA modulus n and some public generators u,v,w. The concrete schemes only differ in how they instantiate the combining function z(r,m). In a similar way, we introduce a second general class of signature schemes called ‘chameleon hash scheme’ that is inspired by the structure of the Cramer–Shoup signature scheme. Here, signatures always look like

$$\begin{aligned} s^{e}=uv^{\mathit{ch}(r,m)} \bmod n \end{aligned}$$

where ch(r,m) refers to a concrete instantiation of a chameleon hash function. We then prove the combining signature scheme and the chameleon hash scheme to be tightly secure under the SRSA assumption when instantiated with any secure combining function or chameleon hash function, respectively. Unfortunately, the security proof of the SRSA-based chameleon hash scheme does not directly transfer to the original Cramer–Shoup signature scheme. This is simply because in the Cramer–Shoup scheme the key material of the chameleon hash function is not chosen independently from the rest of the signature scheme. Nevertheless, the proof of the Cramer–Shoup signature scheme is technically very similar to the proof of the chameleon hash scheme (and we also provide a separate proof of tight security of the Cramer–Shoup signature scheme). Finally, we show that our results hold not only under the SRSA assumption. We analyze whether there also exist tight security reductions for analogous schemes based on the SDH assumption in bilinear groups. Interestingly, most of the above schemes have not yet been considered under the SDH assumption (except for the Camenisch–Lysyanskaya scheme), although, at the same security level, the group description is much shorter in bilinear groups than in factoring-based groups. We develop SDH-based variants of the combining signature scheme and the chameleon hash scheme and prove them to be existentially unforgeable under adaptive chosen message attacks with a tight security reduction. In doing so, we present the first SDH-based variants of the Fischlin, the Zhu and the Cramer–Shoup signature schemes and the first tight security proof of the SDH-based Camenisch–Lysyanskaya scheme. When instantiated with existing combining functions (respectively chameleon hash functions), we obtain short and efficient signature schemes.
Our results can be interpreted in two positive ways: (1) Existing implementations of the affected signature schemes (with a fixed parameter size) provide higher security than expected. (2) New implementations can have shorter security parameters, which translates into higher efficiency. The first conclusion is particularly surprising and rather exceptional; usually new progress in cryptanalysis makes deployed cryptographic systems ‘less secure’ than expected.

Technical Contribution

In the existing proofs, the simulator partitions the set of forgeries by first guessing \(j\in\{1,\ldots,q\}\), where q is the number of signature queries made by the attacker. Only if the attacker’s forgery shares some common random values with the answer to the jth signature query can the simulator break the SRSA assumption. Otherwise, the simulator just aborts. Since the number of signature queries grows polynomially in the security parameter, the security proof loses a factor of q here. Our main contribution is a new technique that renders the initial guess unnecessary. As a consequence, any forgery helps the simulator to break the SRSA assumption. This results in a tight security proof.
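
As a rough illustration of what the lost factor means in concrete terms, consider an adversary with success probability \(\epsilon\) against the signature scheme, and let \(\epsilon_{\text{SRSA}}\) denote the success probability of the resulting SRSA solver. The numbers used below are illustrative assumptions and are not taken from the paper; running-time overheads are ignored.

$$\begin{aligned} \text{loose reduction:}\quad \epsilon_{\text{SRSA}} \geq \epsilon/q, \qquad\qquad \text{tight reduction:}\quad \epsilon_{\text{SRSA}} \approx \epsilon. \end{aligned}$$

With \(q=2^{30}\) and \(\epsilon=2^{-80}\), the loose bound only guarantees \(\epsilon_{\text{SRSA}}\geq 2^{-110}\). To argue 80-bit security of the scheme, the parameters would then have to be chosen such that SRSA is hard at roughly the 110-bit level, whereas a tight reduction needs SRSA to be hard only at roughly the 80-bit level.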

On a more technical level, we first exploit that two of the components of the signatures, r and e, are drawn uniformly at random. As a consequence, the simulator can compute the entire set R of randomness pairs \(\{(r_{i},e_{i})\}_{i=1,\ldots,q}\) that it is going to use in the responses to the adversary’s signature queries before setting up the public key. Given R, the simulator can now carefully design a linear function f with several useful properties. First, since it is linear, f can be embedded in the exponents of only two (random-looking) public group generators, for example in u and v in the chameleon hash scheme. Second, the properties of f guarantee that the simulator can successfully simulate the signing oracle (if it actually uses the randomness pairs in R to respond to the signature queries). Third, if the adversary computes a forgery \((r^{*},e^{*},s^{*})\) where only a single random value has been used in a previous signature (i.e. either \(e^{*}=e_{i}\) or \(r^{*}=r_{i}\) for some \(1\leq i\leq q\) but not both), the simulator can break the underlying security assumption.

Related Work

Our work is related to the existing hash-and-sign signature schemes without random oracles that are proven secure under the SRSA or the SDH assumption. We subsequently give a brief overview of the available results; see also Table 1. In 1988, Goldwasser, Micali and Rivest published the first provably secure, but inefficient signature scheme [12]. More than a decade later, in 1999, Gennaro, Halevi, and Rabin [11] presented a signature scheme that is secure in the standard model under the Flexible or Strong RSA assumption (SRSA). This scheme is more efficient: both the key and the signature size are less than two group elements (of 1024 bits each). As a drawback, it relies on an impractical function that injectively maps messages to primes [8, 17]. Advantageously, the Gennaro–Halevi–Rabin signature scheme is known to have a tight security proof. At the same time and also based on the SRSA assumption, Cramer and Shoup [9] proposed an efficient standard model signature scheme that, unlike [11], does not require mapping messages to primes. Instead, primes can be drawn uniformly at random from the set of primes of a given bit length. Based on this work, Zhu [23, 24], Fischlin [10], Camenisch and Lysyanskaya [4], and Hofheinz and Kiltz [13] presented further SRSA-based schemes in the following years. These schemes are either more efficient than the Cramer–Shoup scheme or very suitable in protocols for issuing signatures on committed values. In 2011, Joye presented a revised version of the Zhu scheme since, for the parameter sizes given in [23, 24], the original scheme suffers from a flaw in the security proof [14]. Joye’s version uses slightly different parameter lengths, which fixes the main problem in the security proof. In 2011, Catalano, Fiore, and Warinschi presented an adaptive definition of pseudo-free groups, where the adversary is also allowed to learn solutions to other non-trivial equations before having to solve his challenge equation [6].
The authors then showed that (1) adaptive pseudo-free groups can generically be used to construct digital signature schemes (and even network coding signatures) and that (2) the well-known RSA group is an adaptive pseudo-free group under the SRSA assumption. In this way, they can generalize most of the existing SRSA-based signature schemes in a single framework. Unfortunately, their work does not consider concrete security bounds. As a consequence, it does not cover highly optimized schemes like the SRSA-based signature scheme by Hofheinz and Kiltz [13]. In 2004, Boneh and Boyen presented the first hash-and-sign signature scheme that makes use of bilinear groups [3]. The big advantage of bilinear groups is the very compact representation of group elements. The Boneh–Boyen signature scheme is proven tightly secure under a new flexible assumption, the q-Strong Diffie–Hellman (SDH) assumption. In 2004, Camenisch and Lysyanskaya also presented a signature scheme that relies on bilinear groups [5]. Unlike the Boneh–Boyen scheme, their scheme is proven secure under the LRSW assumption that was proposed by Lysyanskaya, Rivest, Sahai, and Wolf [16]. However, in the same paper Camenisch and Lysyanskaya propose a variant that is based on the SDH assumption in bilinear groups. The corresponding security proof was provided four years later in [1, 18]. Similar to the SRSA-based Camenisch–Lysyanskaya scheme the security proof of the SDH scheme is loose.

2 Preliminaries

Before presenting our results we briefly review the necessary formal definitions. For convenience, we also describe two general key generation procedures (settings) in Sects. 2.7 and 2.8. When describing our signature schemes in Sect. 3 we will refer to the corresponding setting and only describe the signature generation and verification algorithms.

2.1 Notation

For \(a,b\in\mathbb{Z},\ a\leq b\) we write [a;b] to denote the set \(\{a,a+1,\ldots,b-1,b\}\). For a string x, we write \(|x|_{2}\) to denote its bit length. If \(z\in\mathbb{Z}\), we write |z| to denote the absolute value of z. For a set X, we use |X| to refer to its size and \(x \stackrel {\$}{\leftarrow } X\) to indicate that x is drawn from X uniformly at random. For \(n\in\mathbb{N}\), we use \(QR_{n}\) to denote the set of quadratic residues modulo n, i.e. \(QR_{n}=\{x|\exists y\in\mathbb {Z}_{n}^{*}: y^{2}=x \bmod n\}\). If \(\mathcal {A}\) is an algorithm we write \(\mathcal {A}(x_{1},x_{2},\ldots)\) to denote that \(\mathcal {A}\) has input parameters \(x_{1},x_{2},\ldots\) . Accordingly, \(y\leftarrow \mathcal {A}\ (x_{1},x_{2},\ldots)\) means that \(\mathcal {A}\) outputs y when running with inputs \(x_{1},x_{2},\ldots\) . We write PPT (probabilistic polynomial time) for randomized algorithms that run in polynomial time. We write \(\kappa\in\mathbb{N}\) to indicate the security parameter and \(1^{\kappa}\) to describe the string that consists of κ ones.

2.2 Signature Scheme

A digital signature scheme \(\mathsf {SIG}= ( \mathsf {SIG.KeyGen}, \mathsf {SIG.Sign}, \mathsf {SIG.Verify})\) consists of three algorithms. The PPT algorithm \(\mathsf {SIG.KeyGen}\) on input the security parameter \(\kappa\in \mathbb{N}\) in unary generates a secret and public key pair (SK,PK). The PPT algorithm \(\mathsf {SIG.Sign}\) takes as input a secret key SK and the message m in the message space \(\mathcal{M}_{\mathsf {SIG}}\) and outputs a signature σ in the signature space \(\mathcal{S}_{\mathsf {SIG}}\). Finally, the deterministic polynomial time algorithm \(\mathsf {SIG.Verify}\) processes a public key PK, a message m and a signature σ to check whether σ is a legitimate signature on m signed by the holder of the secret key corresponding to PK. Accordingly, the algorithm outputs 1 to indicate a successful verification and 0 otherwise.

2.3 Strong Existential Unforgeability

The standard notion of security for signature schemes is due to Goldwasser, Micali and Rivest [12]. We use a slightly stronger definition called strong existential unforgeability. The signature scheme \(\mathsf {SIG}= ( \mathsf {SIG.KeyGen}, \mathsf {SIG.Sign}, \mathsf {SIG.Verify})\) is strongly existentially unforgeable under an adaptive chosen message attack if it is infeasible for a forger, who only knows the public key and the global parameters, to produce a new message/signature pair after obtaining polynomially (in the security parameter) many signatures \(\sigma_{1},\ldots,\sigma_{q}\) on messages \(m_{1},\ldots ,m_{q}\in\mathcal{M}_{\mathsf {SIG}}\) of its choice from a signing oracle \(\mathcal {O} (\mathit{SK},\cdot)\).

Definition 1

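We say that SIG is (q,t,ϵ)-secure, if for all t-time adversaries \(\mathcal {A}\) that send at most q queries to the signing oracle \(\mathcal {O} (\mathit{SK},\cdot)\) it holds that

$$\begin{aligned} \mbox {Pr}\begin{bmatrix}(\mathit{SK},\mathit{PK})\leftarrow \mathsf {SIG.KeyGen}(1^{\kappa}),\ (m^{*},\sigma^{*})\leftarrow \mathcal {A}^{\mathcal {O}(\mathit{SK},\cdot)}(\mathit{PK}),\\ \mathsf {SIG.Verify}(\mathit{PK},m^{*},\sigma^{*})=1 \end{bmatrix} \leq\epsilon, \end{aligned}$$

where the probability is over the random coins of \(\mathsf {SIG.KeyGen}\) and \(\mathcal {A}\) and \((m^{*},\sigma^{*})\in\mathcal{M}_{\mathsf {SIG}}\times\mathcal{S}_{\mathsf {SIG}}\) is not among the message/signature pairs obtained using \(\mathcal {O} (\mathit{SK},\cdot)\), i.e. \((m^{*},\sigma^{*})\notin\{(m_{1},\sigma_{1}),\ldots,(m_{q},\sigma_{q})\}\).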
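
The experiment behind Definition 1 can be sketched as a small Python harness. This is a minimal sketch and not part of the paper: the function name `strong_euf_cma_game`, the callable-based interface, and the deliberately insecure toy scheme (whose public key equals its secret key) are all assumptions made purely for illustration.

```python
import hashlib
import secrets


def strong_euf_cma_game(keygen, sign, verify, adversary, q):
    """Run one strong-unforgeability experiment; return True iff the adversary wins."""
    sk, pk = keygen()
    transcript = []  # (message, signature) pairs handed out by the signing oracle

    def oracle(m):
        if len(transcript) >= q:
            raise RuntimeError("adversary exceeded its query budget q")
        sigma = sign(sk, m)
        transcript.append((m, sigma))
        return sigma

    m_star, sigma_star = adversary(pk, oracle)
    # "Strong": the pair must be new, even if m_star itself was queried before.
    is_new = (m_star, sigma_star) not in transcript
    return is_new and verify(pk, m_star, sigma_star) == 1


# Hypothetical, insecure placeholder scheme (PK = SK), used only to exercise the harness.
def toy_keygen():
    k = secrets.token_bytes(16)
    return k, k


def toy_sign(sk, m):
    return hashlib.sha256(sk + m).hexdigest()


def toy_verify(pk, m, sigma):
    return 1 if hashlib.sha256(pk + m).hexdigest() == sigma else 0


def replay_adversary(pk, oracle):
    m = b"hello"
    return m, oracle(m)        # replays an oracle answer: not a new pair


def key_leak_adversary(pk, oracle):
    m = b"never queried"
    return m, toy_sign(pk, m)  # possible only because the toy scheme leaks SK
```

The replaying adversary loses because its output pair already appears in the transcript; the second adversary wins only because the placeholder scheme is broken by construction.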

2.4 Collision-Resistant Hashing

A hash function \(\mathsf {H}=(\mathsf {H.KeyGen},\mathsf {H.Eval})\) consists of two algorithms. The PPT algorithm \(\mathsf {H.KeyGen}\) takes as input \(1^{\kappa}\) and outputs a hash key \(k_{\mathsf {H}}\). The deterministic algorithm \(\mathsf {H.Eval}\) takes as input the hash key \(k_{\mathsf {H}}\) and a message \(m\in\mathcal{M}_{\mathsf {H}}\) and outputs a hash value \(z\in\mathcal{H}_{\mathsf {H}}\).

Definition 2

(Collision-Resistant Hash Function)

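H is called \((t_{\mathsf {H}},\epsilon_{\mathsf {H}})\)-collision-resistant if for all \(t_{\mathsf {H}}\)-time adversaries \(\mathcal {A}\) it holds that

$$\begin{aligned} \mbox {Pr}\begin{bmatrix}k_{\mathsf {H}}\leftarrow \mathsf {H.KeyGen}(1^{\kappa}),\ (m,m')\leftarrow \mathcal {A}(k_{\mathsf {H}}),\ m,m'\in\mathcal{M}_{\mathsf {H}},\\ m\neq m',\ \mathsf {H.Eval}(k_{\mathsf {H}},m)=\mathsf {H.Eval}(k_{\mathsf {H}},m') \end{bmatrix} \leq\epsilon_{\mathsf {H}}, \end{aligned}$$

where the probability is over the random bits of \(\mathcal {A}\) and \(\mathsf {H.KeyGen}(1^{\kappa})\).

If H is used in a signature scheme, we assume that \(k_{\mathsf {H}}\leftarrow \mathsf {H.KeyGen}(1^{\kappa})\) is generated in the key generation algorithm \(\mathsf {SIG.KeyGen}\) and made part of the public key PK. If the hash key \(k_{\mathsf {H}}\) is clear from the context we write h(m) as a shortcut for \(\mathsf {H.Eval}(k_{\mathsf {H}},m)\).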

2.5 Chameleon Hash Function

A useful tool in many of the signature schemes without random oracles is a chameleon hash function [15]. A chameleon hash function \(\mathsf {CH}=(\mathsf {CH.KeyGen},\mathsf {CH.Eval},\mathsf {CH.Coll})\) consists of three algorithms. The PPT algorithm \(\mathsf {CH.KeyGen}\) takes as input the security parameter \(1^{\kappa}\) and outputs a secret key \(\mathit{SK}_{\mathsf {CH}}\) and a public key \(\mathit{PK}_{\mathsf {CH}}\). Given \(\mathit{PK}_{\mathsf {CH}}\), a random r from a randomization space \(\mathcal{R}_{\mathsf {CH}}\) and a message m from a message space \(\mathcal {M}_{\mathsf {CH}}\), the algorithm \(\mathsf {CH.Eval}\) outputs a chameleon hash value c in the hash space \(\mathcal{C}_{\mathsf {CH}}\). Analogously, \(\mathsf {CH.Coll}\) deterministically outputs, on input \(\mathit{SK}_{\mathsf {CH}}\) and \((r,m,m')\in\mathcal {R}_{\mathsf {CH}}\times\mathcal{M}_{\mathsf {CH}}\times\mathcal{M}_{\mathsf {CH}}\), a value \(r'\in\mathcal {R}_{\mathsf {CH}}\) such that \(\mathsf {CH.Eval}(\mathit{PK}_{\mathsf {CH}},r,m)=\mathsf {CH.Eval}(\mathit{PK}_{\mathsf {CH}},r',m')\).

Definition 3

(Collision-Resistant Chameleon Hash Function)

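We say that CH is \((\epsilon_{\mathsf {CH}},t_{\mathsf {CH}})\)-collision-resistant if for all \(t_{\mathsf {CH}}\)-time adversaries \(\mathcal {A}\) that are only given \(\mathit{PK}_{\mathsf {CH}}\) it holds that

$$\begin{aligned} \mbox {Pr}\begin{bmatrix}(\mathit{SK}_{\mathsf {CH}},\mathit{PK}_{\mathsf {CH}})\leftarrow \mathsf {CH.KeyGen}(1^{\kappa}),\ (m,m',r,r')\leftarrow \mathcal {A}(\mathit{PK}_{\mathsf {CH}}),\\ r,r'\in\mathcal{R}_{\mathsf {CH}},\ m,m'\in\mathcal{M}_{\mathsf {CH}},\ m\neq m',\\ \mathsf {CH.Eval}(\mathit{PK}_{\mathsf {CH}},r,m)=\mathsf {CH.Eval}(\mathit{PK}_{\mathsf {CH}},r',m') \end{bmatrix} \leq\epsilon_{\mathsf {CH}}, \end{aligned}$$

where the probability is over the random choices of \(\mathit{PK}_{\mathsf {CH}}\) and the coin tosses of \(\mathcal {A}\).

We also require that for an arbitrary but fixed public key \(\mathit{PK}_{\mathsf {CH}}\) output by \(\mathsf {CH.KeyGen}\), all messages \(m\in\mathcal{M}_{\mathsf {CH}}\) generate equally distributed hash values when drawing \(r\in \mathcal {R} _{\mathsf {CH}}\) uniformly at random and outputting \(\mathsf {CH.Eval}(\mathit{PK}_{\mathsf {CH}},r,m)\). In the following, if CH is used in a signature scheme we assume that \((\mathit{SK}_{\mathsf {CH}},\mathit{PK}_{\mathsf {CH}})\leftarrow \mathsf {CH.KeyGen}(1^{\kappa})\) is generated as part of \(\mathsf {SIG.KeyGen}\) and \(\mathit{PK}_{\mathsf {CH}}\) is made part of the public key (Footnote 1). If the keys are obvious from the context, we write ch(r,m) for \(\mathsf {CH.Eval}(\mathit{PK}_{\mathsf {CH}},r,m)\) and \(ch^{-1}(r,m,m')\) for \(\mathsf {CH.Coll}(\mathit{SK}_{\mathsf {CH}},r,m,m')\).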

The security of chameleon hash functions can be based on well-analyzed standard assumptions like the discrete logarithm assumption [15] or the factoring assumption [15, 22]. Since the factoring assumption is weaker than the SRSA assumption and the discrete logarithm assumption is weaker than the SDH assumption, we can use chameleon hash functions as a building block for SRSA and SDH-based signature schemes without relying on additional complexity assumptions.
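
To make the interface concrete, the following is a minimal sketch of a discrete-logarithm-based chameleon hash in the style of [15], with \(ch(r,m)=g^{m}h^{r} \bmod p\). The tiny parameters (p = 2039, q = 1019, g = 4) and the Python function names are assumptions chosen for readability; they offer no security. The modular inverse via `pow(x, -1, q)` requires Python 3.8 or later.

```python
import secrets

# Toy group: p = 2q + 1 with p, q prime; g = 4 generates the order-q subgroup of squares.
P, Q, G = 2039, 1019, 4


def ch_keygen():
    """Return (SK_CH, PK_CH) = (x, h = g^x mod p) with x in [1, q-1]."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def ch_eval(pk, r, m):
    """ch(r, m) = g^m * h^r mod p for r, m in Z_q."""
    return (pow(G, m % Q, P) * pow(pk, r % Q, P)) % P


def ch_coll(sk, r, m, m_new):
    """Return r' with ch(r, m) = ch(r', m_new), using the trapdoor x.

    From m + x*r = m_new + x*r' (mod q) we get r' = r + (m - m_new) / x (mod q).
    """
    return (r + (m - m_new) * pow(sk, -1, Q)) % Q
```

For uniform r the factor \(h^{r}\) is uniform in the subgroup, so every message yields the same distribution of hash values, which matches the uniformity requirement stated above.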

2.6 Combining Function

In this section, we introduce a new class of randomization functions called combining functions. A combining function is a function in two input parameters, a message m (that is chosen by the adversary) and a random value r that map to some output value z. Intuitively, security requires that it behaves computationally (or statistically) close to a bijection in both m and r. In particular, it should be hard to (1) find m such that for a randomly chosen output value z there exists no r mapping m to z and (2) compute distinct m,m′ that map to the same output value (for some common randomness r). Additionally, we want that inversion is easy, i.e. for given m, z we can efficiently compute a suitable r such that m and r map to z. We will subsequently use the concept of combining functions to generalize several existing signature schemes. Let us begin with the formal definition.

A combining function \(\mathsf {CMB}=(\mathsf {CMB.KeyGen},\mathsf {CMB.Eval})\) consists of two algorithms. On input \(1^{\kappa}\) the PPT algorithm \(\mathsf {CMB.KeyGen}\) outputs a (combining) key \(k_{\mathsf {CMB}}\). On input \(k_{\mathsf {CMB}}\), randomness \(r\in\mathcal {R}_{\mathsf {CMB}}\) and message \(m\in\mathcal{M}_{\mathsf {CMB}}\), the deterministic algorithm \(\mathsf {CMB.Eval}\) outputs a value \(z\in\mathcal{Z}_{\mathsf {CMB}}\).

Definition 4

(Secure Combining Functions)

We say that CMB is \((t_{\mathsf {CMB}},\epsilon_{\mathsf {CMB}},\delta_{\mathsf {CMB}})\)-combining if there exist functions \(\epsilon_{\mathsf {CMB}}(\kappa)\) and \(\delta_{\mathsf {CMB}}(\kappa)\) and for \(k_{\mathsf {CMB}}\leftarrow \mathsf {CMB.KeyGen}(1^{\kappa})\) the following properties hold:

1. For all \(m\in\mathcal{M}_{\mathsf {CMB}}\) it holds that \(| \mathcal {R} _{\mathsf {CMB}}|=| \mathcal {Z} _{m}|\) where \(\mathcal {Z} _{m}\subseteq\mathcal{Z}_{\mathsf {CMB}}\) is defined as \(\mathcal {Z} _{m}=\mathsf {CMB.Eval}(k_{\mathsf {CMB}},\mathcal{R}_{\mathsf {CMB}},m)\) (i.e. \(\mathsf {CMB.Eval}\) is injective in the input parameter r for all m).

2. There exists an efficient deterministic algorithm \(\mathsf {CMB.Invert}\) that on input \(k_{\mathsf {CMB}}\), \(t\in\mathcal{Z}_{\mathsf {CMB}}\), and \(m\in\mathcal {M}_{\mathsf {CMB}}\) outputs ⊥ if \(t\notin\mathcal{Z}_{m}\) and r with \(t=\mathsf {CMB.Eval}(k_{\mathsf {CMB}}, r,m)\) otherwise.

3. The uniform distributions on \(\mathcal {Z} _{m}\) and \(\mathcal{Z}_{\mathsf {CMB}}\) are \(\delta_{\mathsf {CMB}}\)-indistinguishable, i.e. for all \(m\in\mathcal{M}_{\mathsf {CMB}}\), \(t \stackrel {\$}{\leftarrow } \mathcal{Z}_{\mathsf {CMB}}\), and \(r' \stackrel {\$}{\leftarrow } \mathcal {R} _{\mathsf {CMB}}\) it holds for all \(t_{\mathsf {CMB}}\)-time attackers \(\mathcal {A}\) that

$$\begin{aligned} \bigl\vert \mbox {Pr}\bigl[\mathcal {A}\bigl(k_\mathsf {CMB},r' \bigr)=1 \bigr]-\mbox {Pr}\bigl[\mathcal {A}\bigl(k_\mathsf {CMB}, \mathsf {CMB.Invert}(k_\mathsf {CMB},t,m) \bigr)=1 \bigr]\bigr\vert \leq\delta _\mathsf {CMB}, \end{aligned}$$

where the probability is taken over the random bits of \(\mathsf {CMB.KeyGen}(1^{\kappa})\) and \(\mathcal {A}\) and over the random choices of t and r′.

4. For all \(r\in\mathcal{R}_{\mathsf {CMB}}\), it holds for all \(t_{\mathsf {CMB}}\)-time attackers \(\mathcal {A}\) that

$$\begin{aligned} \mbox {Pr}\begin{bmatrix}(m,m')\leftarrow \mathcal {A}(k_\mathsf {CMB},r),\ m,m'\in\mathcal {M}_\mathsf {CMB},\\ m\neq m',\ \mathsf {CMB.Eval}(k_\mathsf {CMB},r,m)=\mathsf {CMB.Eval}(k_\mathsf {CMB},r,m') \end{bmatrix} \leq\epsilon_\mathsf {CMB}, \end{aligned}$$

where the probability is taken over the random bits of \(\mathcal {A}\) and \(\mathsf {CMB.KeyGen}(1^{\kappa})\).

In the following, we assume that when used in signature schemes, \(k_{\mathsf {CMB}}\leftarrow \mathsf {CMB.KeyGen}(1^{\kappa})\) is generated during the key generation phase of the signature scheme. If the key \(k_{\mathsf {CMB}}\) is clear from the context we write z(r,m) short for \(\mathsf {CMB.Eval}(k_{\mathsf {CMB}},r,m)\) and \(z^{-1}(t,m)\) short for \(\mathsf {CMB.Invert}(k_{\mathsf {CMB}},t,m)\). If security is independent of a random choice of the key, we may, for consistency, assume that \(\mathsf {CMB.KeyGen}\) just outputs a special, fixed key ⊤.

In Table 2, we present three concrete examples (\(\mathsf {CMB}_{1}\), \(\mathsf {CMB}_{2}\), \(\mathsf {CMB}_{3}\)) of statistically secure combining functions. The following lemma shows that these examples are indeed combining functions with respect to Definition 4.

Lemma 1

\(\mathsf {CMB}_{1}\) and \(\mathsf {CMB}_{2}\) are (⋅,0,0)-combining. \(\mathsf {CMB}_{3}\) is \((\cdot,0,2^{l_{m}-l_{r}+1})\)-combining.

Proof

Let us first analyze \(\mathsf {CMB}_{1}\) and \(\mathsf {CMB}_{2}\). We have that \(\mathcal {M} _{\mathsf {CMB}}= \mathcal {R} _{\mathsf {CMB}}= \mathcal {Z} _{\mathsf {CMB}}= \mathcal {Z} _{m}\) for all \(m\in \mathcal {M} _{\mathsf {CMB}}\) and we can efficiently compute r as \(r=t-m \bmod p\) or \(r=t\oplus m\) for all given \(t\in \mathcal {Z} _{\mathsf {CMB}}\) and \(m\in \mathcal {M} _{\mathsf {CMB}}\). Furthermore, since z is bijective in both input parameters, \(z^{-1}(t,m)\) is uniformly distributed in \(\mathcal {R} _{\mathsf {CMB}}\) for all \(m\in\mathcal{M}_{\mathsf {CMB}}\) and random \(t\in\mathcal{Z}_{\mathsf {CMB}}\). Thus, \(\delta_{\mathsf {CMB}}=0\). Finally, since z is a bijection in the second input parameter, it is collision-free in both combining functions and we have that \(\epsilon_{\mathsf {CMB}}=0\). Now, let us analyze \(\mathsf {CMB}_{3}\). For given \(m\in \mathcal {M} _{\mathsf {CMB}}\) and random \(t\in \mathcal {Z} _{\mathsf {CMB}}\), \(z^{-1}(t,m)\) outputs r=t−m if \(t-m\in \mathcal {R} _{\mathsf {CMB}}\) (i.e. if \(t\in \mathcal {Z} _{m}\)) and ⊥ otherwise. To show that z(r,⋅) is collision-free, observe that m≠m′ implies r+m≠r+m′ for all \(r\in \mathcal {R} _{\mathsf {CMB}}\). To prove the bound on \(\delta_{\mathsf {CMB}}\), note that for \(t' \stackrel {\$}{\leftarrow } \mathcal{Z}_{m}\), \(z^{-1}(t',m)\) is uniform in \(\mathcal {R} _{\mathsf {CMB}}\) since \(|\mathcal{Z}_{m}|=| \mathcal {R} _{\mathsf {CMB}}|\) implies that \(z^{-1}(\cdot,m)\) defines a bijection from \(\mathcal {Z} _{m}\) to \(\mathcal {R} _{\mathsf {CMB}}\). For \(t' \stackrel {\$}{\leftarrow } \mathcal{Z}_{m}\) and \(t \stackrel {\$}{\leftarrow } \mathcal {Z}_{\mathsf {CMB}}\) we get

□
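
The three functions analyzed in the proof can be sketched in Python as follows. Table 2 is not reproduced in this section, so the evaluation rules are inferred from the inversion formulas used in the proof (\(r=t-m \bmod p\), \(r=t\oplus m\), and \(r=t-m\) over the integers); the concrete modulus and the bit lengths `L_R` and `L_M` are illustrative assumptions, and `None` stands in for ⊥.

```python
P = 101   # assumed prime modulus for the first function
L_R = 8   # assumed bit length l_r of the randomness in the third function
L_M = 4   # assumed bit length l_m of the messages in the third function


def cmb1_eval(r, m):
    return (r + m) % P           # z = r + m mod p


def cmb1_invert(t, m):
    return (t - m) % P           # r = t - m mod p


def cmb2_eval(r, m):
    return r ^ m                 # z = r XOR m on equal-length bit strings


def cmb2_invert(t, m):
    return t ^ m                 # r = t XOR m


def cmb3_eval(r, m):
    return r + m                 # z = r + m over the integers


def cmb3_invert(t, m):
    r = t - m
    # Output "bottom" (None) when t is not in Z_m, i.e. t - m falls outside R_CMB.
    return r if 0 <= r < 2 ** L_R else None
```

Only the third function can fail to invert: for a random t, the value t − m leaves the randomness space with small probability, which is where the nonzero statistical term in Lemma 1 comes from.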

Three further examples of combining functions can be obtained when first applying a \((t_{\mathsf {H}},\epsilon_{\mathsf {H}})\)-collision-resistant hash function that maps (long) messages from \(\mathcal{M}_{\mathsf {H}}\) (for example \(\mathcal{M}_{\mathsf {H}}=\{0,1\}^{*}\)) to \(\mathcal{H}_{\mathsf {H}}=\mathcal {M}_{\mathsf {CMB}}\). The next lemma guarantees that the results are still combining according to Definition 4.

Lemma 2

Let CMB be a \((t_{\mathsf {CMB}},\epsilon_{\mathsf {CMB}},\delta_{\mathsf {CMB}})\)-combining function with message space \(\mathcal{M}_{\mathsf {CMB}}\). Let H be a \((t_{\mathsf {H}},\epsilon_{\mathsf {H}})\)-collision-resistant hash function with message space \(\mathcal{M}_{\mathsf {H}}\) and hash space \(\mathcal{H}_{\mathsf {H}}=\mathcal{M}_{\mathsf {CMB}}\). Then it holds that \(\mathsf {CMB}'=(\mathsf {CMB.KeyGen}',\mathsf {CMB.Eval}', \mathsf {CMB.Invert}')\) is \((\min\{t_{\mathsf {CMB}},t_{\mathsf {H}}\},\epsilon_{\mathsf {CMB}}+\epsilon_{\mathsf {H}},\delta_{\mathsf {CMB}})\)-combining with message space \(\mathcal {M} _{\mathsf {CMB}'}=\mathcal{M}_{\mathsf {H}}\) where the algorithms of CMB′ are defined as follows:

- \(\mathsf {CMB.KeyGen}'(1^{\kappa})\) outputs \(k'_{\mathsf {CMB}}=(k_{\mathsf {CMB}},k_{\mathsf {H}})\) where \(k_{\mathsf {CMB}}\leftarrow \mathsf {CMB.KeyGen}(1^{\kappa})\) and \(k_{\mathsf {H}}\leftarrow \mathsf {H.KeyGen}(1^{\kappa})\) and

- \(\mathsf {CMB.Eval}'(k'_{\mathsf {CMB}},r,m)\) outputs \(z(r,h(m))=\mathsf {CMB.Eval}(k_{\mathsf {CMB}},r,\mathsf {H.Eval}(k_{\mathsf {H}},m))\).

The proof of Lemma 2 is relatively straightforward.

Proof

First observe that given \(m\in \mathcal {M} _{\mathsf {H}}\) and \(t\in \mathcal {Z} _{h(m)}\) we can always compute \(r\in \mathcal {R} _{\mathsf {CMB}}\) (or ⊥) just by finding an appropriate r for h(m) with t=z(r,h(m)) using the properties of CMB. We simply set \(\mathsf {CMB.Invert}'(k'_{\mathsf {CMB}},t,m)\) to output \(\mathsf {CMB.Invert}(k_{\mathsf {CMB}},t, \mathsf {H.Eval}(k_{\mathsf {H}},m))\).

Now, for contradiction assume that an attacker \(\mathcal {A}\) can find collisions for CMB′ in time \(\min\{t_{\mathsf {CMB}},t_{\mathsf {H}}\}\) with probability better than \(\epsilon_{\mathsf {H}}+\epsilon_{\mathsf {CMB}}\). Let (m,m′) be such a collision. Then, we have either found a collision (m,m′) of the hash function (if h(m)=h(m′)) or a collision (h(m),h(m′)) in the combining function (in case h(m)≠h(m′)). In the first case, \(\mathcal {A}\) has computed a hash collision in time at most \(t_{\mathsf {H}}\) with a probability greater than \(\epsilon_{\mathsf {H}}+\epsilon_{\mathsf {CMB}}\geq\epsilon_{\mathsf {H}}\). This contradicts the fact that H is \((t_{\mathsf {H}},\epsilon_{\mathsf {H}})\)-collision-resistant. On the other hand, if \(\mathcal {A}\) has found a collision in the combining function, this means that \(\mathcal {A}\) can break the collision-resistance of CMB in time at most \(t_{\mathsf {CMB}}\) with probability greater than \(\epsilon_{\mathsf {H}}+\epsilon_{\mathsf {CMB}}\geq\epsilon_{\mathsf {CMB}}\). This contradicts the fact that CMB is \((t_{\mathsf {CMB}},\epsilon_{\mathsf {CMB}},\delta_{\mathsf {CMB}})\)-combining.

To prove the probability bound \(\delta_{\mathsf {CMB}}\), recall that \(h( \mathcal {M} _{\mathsf {H}})\subseteq \mathcal {M} _{\mathsf {CMB}}\). Therefore, if there is an adversary \(\mathcal {A}\) that can distinguish between random r′ and \(z^{-1}(t,h(m))\) for some \(m\in \mathcal {M} _{\mathsf {H}}\) and random \(t \stackrel {\$}{\leftarrow } \mathcal{Z}_{h(m)}\), then it can also distinguish between r′ and \(z^{-1}(t,m')\) for \(m'=h(m)\in \mathcal {M} _{\mathsf {CMB}}\). In the following, all probabilities are considered over the random bits used to compute \(k_{\mathsf {H}}\leftarrow \mathsf {H.KeyGen}(1^{\kappa})\) and \(k_{\mathsf {CMB}}\leftarrow \mathsf {CMB.KeyGen}(1^{\kappa})\). We get that

So any attacker \(\mathcal {A}\) against CMB′ with success probability better than \(\delta_{\mathsf {CMB}}\) is also an attacker against CMB with success probability greater than or equal to \(\delta_{\mathsf {CMB}}\). □
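
The construction of Lemma 2 (hash first, then combine) can be sketched as follows. As assumptions for illustration only, the keyed hash is modeled as SHA-256 over the key concatenated with the message, truncated to 128 bits, and the underlying combining function is the XOR-based one from the proof of Lemma 1; neither choice is prescribed by the paper.

```python
import hashlib
import secrets

L = 128  # assumed bit length of hash values, randomness, and outputs


def h_keygen():
    return secrets.token_bytes(16)


def h_eval(k_h, m):
    """Hypothetical keyed hash h(m): SHA-256(k_H || m), truncated to L bits."""
    digest = hashlib.sha256(k_h + m).digest()
    return int.from_bytes(digest[: L // 8], "big")


def cmb_eval(r, m):
    return r ^ m


def cmb_invert(t, m):
    return t ^ m


def cmb_prime_keygen():
    # k'_CMB = (k_CMB, k_H); XOR needs no key, so k_CMB is a fixed placeholder.
    return (None, h_keygen())


def cmb_prime_eval(k, r, m):
    _, k_h = k
    return cmb_eval(r, h_eval(k_h, m))        # z(r, h(m))


def cmb_prime_invert(k, t, m):
    _, k_h = k
    return cmb_invert(t, h_eval(k_h, m))      # z^{-1}(t, h(m))
```

A collision for the composed function is either a collision of the hash or a collision of the inner combining function on distinct hash values, which mirrors the case distinction in the proof.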

2.7 The Strong RSA Setting

Definition 5

(Strong RSA Assumption (SRSA))

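Given an RSA modulus n=pq, where p,q are primes of size \(l_{n}/2-1\) (with polynomial \(l_{n}=l_{n}(\kappa)\)), and an element \(u\in\mathbb{Z}^{*}_{n}\), we say that the \((t_{ \text {SRSA} },\epsilon_{ \text {SRSA} })\)-SRSA assumption holds if for all \(t_{ \text {SRSA} }\)-time adversaries \(\mathcal {A}\)

$$\begin{aligned} \mbox {Pr}\bigl[(x,y)\leftarrow \mathcal {A}(n,u),\ x\in\mathbb{Z}^{*}_{n},\ y>1,\ x^{y}=u \bmod n \bigr]\leq\epsilon_{ \text {SRSA} }, \end{aligned}$$

where the probability is over the random choices of u,n and the random coins of \(\mathcal {A}\).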

Definition 6

(SRSA Setting)

In this setting, \(\mathsf {SIG.KeyGen}(1^{\kappa})\) outputs (SK=(p,q),PK=n) for a safe modulus n=pq such that p=2p′+1, q=2q′+1, and p,q,p′,q′ are primes. All computations are performed in the cyclic group \(QR_{n}\). Let \(l_{i}=l_{i}(\kappa)\) for \(i\in\{c,e,n,r,z\}\) be polynomials. We require that \(|n|_{2}=l_{n}\) and \(|p'|_{2}=|q'|_{2}=l_{n}/2-1\). Furthermore, we assume that the \((t_{ \text {SRSA} },\epsilon_{ \text {SRSA} })\)-SRSA assumption holds. We let u,v,w be public random generators of \(QR_{n}\). When using a combining function CMB, we assume that \(k_{\mathsf {CMB}}\leftarrow \mathsf {CMB.KeyGen}(1^{\kappa})\), \(\mathcal {M} _{\mathsf {SIG}}= \mathcal {M} _{\mathsf {CMB}}\), \(\mathcal {Z} _{\mathsf {CMB}}\subseteq[0;2^{l_{z}}-1]\), \(\mathcal {R} _{\mathsf {CMB}}\subseteq[0;2^{l_{r}}-1]\), and that \(k_{\mathsf {CMB}}\) is also part of PK. When using a chameleon hash function CH, we assume that \(\mathit{PK}_{\mathsf {CH}}\leftarrow \mathsf {CH.KeyGen}(1^{\kappa})\), \(\mathcal {M} _{\mathsf {SIG}}= \mathcal {M} _{\mathsf {CH}}\), \(\mathcal{C}_{\mathsf {CH}}\subseteq[0;2^{l_{c}}-1]\), and that \(\mathit{PK}_{\mathsf {CH}}\) is also part of PK. We let \(E\subseteq[2^{l_{ e }-1};2^{l_{ e }}-1]\) denote the set of \(l_{e}\)-bit primes. Finally, we require that \(l_{c},l_{z},l_{r}<l_{e}<l_{n}/2-1\).
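
As a toy illustration of the SRSA setting, the following sketch builds a safe modulus and shows that the holder of SK = (p, q) can extract e-th roots in \(QR_{n}\) for a prime e from E, whereas the SRSA assumption says that finding any non-trivial root of a random element is infeasible given only n. The parameters (p′ = 23, q′ = 29, hence n = 2773 and \(l_{e}=4\)) are assumptions chosen so that the size constraints of Definition 6 hold at toy scale; they are far too small for any real use.

```python
import math
import secrets

P_PRIME, Q_PRIME = 23, 29                  # p', q' (both 5-bit primes)
P, Q = 2 * P_PRIME + 1, 2 * Q_PRIME + 1    # p = 47, q = 59 (safe primes)
N = P * Q                                  # n = 2773, so l_n = 12
GROUP_ORDER = P_PRIME * Q_PRIME            # |QR_n| = p'q' = 667
E = [11, 13]                               # all primes of bit length l_e = 4 < l_n/2 - 1 = 5


def random_quadratic_residue():
    """Sample a random element of QR_n by squaring a random unit modulo n."""
    while True:
        a = secrets.randbelow(N - 2) + 2
        if math.gcd(a, N) == 1:
            return pow(a, 2, N)


def eth_root(y, e):
    """Return s in QR_n with s^e = y mod n; this uses the secret value p'q'."""
    d = pow(e, -1, GROUP_ORDER)   # e is invertible because e < p' and e < q'
    return pow(y, d, N)
```

The constraint \(l_{e}<l_{n}/2-1\) guarantees that every prime in E is smaller than p′ and q′, so it is coprime to the group order and the inverse d always exists.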

2.8 The Strong Diffie–Hellman Setting

Definition 7

(Bilinear Groups)

Let \(\mathbb {G}_{1}=\langle g_{1}\rangle ,\mathbb {G}_{2}=\langle g_{2}\rangle\) and \(\mathbb {G}_{T}\) be groups of prime order p. The function \(e:\mathbb {G}_{1}\times \mathbb {G}_{2} \rightarrow \mathbb {G}_{T} \) is a bilinear pairing if it holds that (1) for all \(a\in \mathbb {G}_{1}\), \(b\in \mathbb {G}_{2}\), and \(x,y \in\mathbb{Z}_{p}\) we have \(e(a^{x},b^{y})=e(a,b)^{xy}\) (bilinearity), (2) \(e(g_{1},g_{2})\neq1_{\mathbb {G}_{T}}\) is a generator of \(\mathbb {G}_{T}\) (non-degeneracy), and (3) e is efficiently computable (efficiency). We call \((\mathbb {G}_{1},g_{1},\mathbb {G}_{2},g_{2},\mathbb {G}_{T},p,e)\) a bilinear group.

Definition 8

(SDH Assumption (SDH))

Let \((\mathbb {G}_{1},\hat{g}_{1},\mathbb {G}_{2},\hat{g}_{2},\mathbb {G}_{T},p,e)\) be a bilinear group. Let \(l_{p}=l_{p}(\kappa)\) be a polynomial and \(|p|_{2}=l_{p}\). We say that the \((q_{\text{SDH}},t_{\text{SDH}},\epsilon_{\text{SDH}})\)-SDH assumption holds if for all \(t_{\text{SDH}}\)-time attackers \(\mathcal {A}\) that are given a \((q_{\text{SDH}}+3)\)-tuple of group elements \(W= ( g_{1},g^{x}_{1},g^{(x^{2})}_{1},\ldots,g^{ (x^{q_{ \text {SDH} }}) }_{1},g_{2},g^{x}_{2} ) \in \mathbb {G}_{1}^{q_{ \text {SDH} }\thinspace+1} \times \mathbb {G}_{2}^{2}\) it holds that

$$ \mbox {Pr}\bigl[(s,c)\leftarrow \mathcal {A}(W),\ c\in\mathbb{Z}_{p},\ s=g_{1}^{1/(x+c)}\bigr]\leq\epsilon_{ \text {SDH} }, $$

where the probability is over the random choices of the generators \(g_{1}\in \mathbb {G}_{1}\), \(g_{2}\in \mathbb {G}_{2}\), \(x\in\mathbb{Z}_{p}\) and the random bits of \(\mathcal {A}\).

Definition 9

(SDH Setting)

Let \(l_{p}=l_{p}(\kappa)\) be a polynomial. In the SDH setting, all computations are performed in the cyclic groups of \((\mathbb {G}_{1},g_{1},\mathbb {G}_{2},g_{2},\mathbb {G}_{T},p,e)\) with \(|p|_{2}=l_{p}\). \(\mathsf {SIG.KeyGen}(1^{\kappa})\) chooses \(x \stackrel {\$}{\leftarrow } \mathbb {Z}_{p}\) and outputs \((\mathit{SK}=x,\mathit{PK}=g_{2}^{x})\). We assume that the \((q_{\text{SDH}},t_{\text{SDH}},\epsilon_{\text{SDH}})\)-SDH assumption holds. Finally, we suppose that the values \(a , b , c \in \mathbb {G}_{1}\) are public random generators of \(\mathbb {G}_{1}\). In case we use a combining function CMB, we assume that \(\mathcal {M} _{\mathsf {SIG}}= \mathcal {M} _{\mathsf {CMB}}\), \(\mathcal {Z} _{\mathsf {CMB}}\subseteq \mathbb {Z}_{p}\), \(\mathcal {R} _{\mathsf {CMB}}\subseteq \mathbb {Z}_{p}\), and that \(k_{\mathsf {CMB}}\) is also part of \(\mathit{PK}\). When using a chameleon hash function CH, we assume that \(\mathcal {M} _{\mathsf {SIG}}= \mathcal {M} _{\mathsf {CH}}\), \(\mathit{PK}_{\mathsf {CH}}\leftarrow \mathsf {CH.KeyGen}(1^{\kappa})\), \(\mathcal{C}_{\mathsf {CH}}\subseteq \mathbb {Z}_{p}\), and that \(\mathit{PK}_{\mathsf {CH}}\) is also part of \(\mathit{PK}\).

3 Signature Classes

For convenience, we now introduce two general signature classes. The combining signature scheme \({\mathcal {S}_{ \text {CMB} }}\) constitutes a useful abstraction of the Camenisch–Lysyanskaya, the Fischlin, and the Zhu signature scheme using combining functions. The chameleon signature scheme \({\mathcal {S}_{ \text {CH} }}\) can be regarded as a general variant of the original Cramer–Shoup signature scheme where we do not specify a concrete instantiation of the chameleon hash function. We provide an overview in Table 3.

3.1 SRSA-Based Combining Signature Scheme \((\mathcal{S}_{\mathrm{CMB},\mathrm{SRSA}})\)

In the SRSA setting, \(\mathsf {SIG.Sign}(\mathit{SK},m)\) randomly chooses \(r \in\mathcal{R}_{\mathsf {CMB}}\) and \(e\in E\) and computes a signature \(\sigma=(r,s,e)\) on message \(m\) with \(s = ( u v ^{ r } w ^{z( r ,m)} )^{\frac{1}{ e }}\). Let us now show that our construction indeed generalizes the claimed signature schemes. Observe that we obtain the Fischlin scheme [10] when we instantiate the combining function with CMB 2 of Table 2. Furthermore, using CMB 3 we also get the Camenisch–Lysyanskaya scheme [4] with smaller parameter sizes. This becomes obvious if we substitute \(v\) by \(v'=vw\), as \(uv^{r}w^{r+m}=u(vw)^{r}w^{m}=u(v')^{r}w^{m}\).

Let us explain in more detail how our parameter choices deviate from [4]. In the original scheme, it is required that \(l_{r}=l_{n}+l_{m}+160\). As a result, the authors recommend for 160-bit messages that \(l_{r}=1346\), \(l_{s}=1024\), and \(l_{e}=162\). In our scheme, we simply require that \(l_{m}\leq l_{r}<l_{e}<l_{n}/2-1\). For comparable security, we could set \(l_{r}=320\), \(l_{s}=1024\), and \(l_{e}=321\) for a probability \(\epsilon_{\mathsf{CMB}}=2^{-160}\). Therefore, the signatures of our scheme are much shorter, only \((320+1024+321)/(1346+1024+162)\approx 66\,\%\) of the original signature size, and the scheme is more efficient than the original one, since shorter exponents imply faster exponentiations. We stress that this improvement does not even exploit our new security proof. A tight security proof accounts for considerable additional efficiency.

We note that when we use our variant of the Camenisch–Lysyanskaya scheme with long messages we first have to apply a collision-resistant hash function to the message. What we essentially get is the (revised) scheme of Zhu [14, 23, 24]. By Lemma 2, the resulting function is still combining. The verification algorithm \(\mathsf {SIG.Verify}(\mathit{PK},m,\sigma)\) takes a purported signature \(\sigma=(r,s,e)\) and checks if \(s ^{ e }\stackrel{?}{=} u v ^{ r } w ^{z( r ,m)}\), if \(|e|_{2}=l_{e}\), and if \(e\) is odd.
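The signing and verification equations above can be exercised on a toy instance. The following is a minimal sketch under loudly stated assumptions: the modulus is tiny and insecure, the group elements `u, v, w` are fixed squares rather than random generators, the combining function `z(r, m) = r + m` is an illustrative stand-in (Table 2 is not reproduced here), and the bit-length check on `e` is omitted.

```python
# Toy parameters only: real moduli have thousands of bits.
p_, q_ = 11, 23                   # p', q'
p, q = 2 * p_ + 1, 2 * q_ + 1     # safe primes 23 and 47
n = p * q
order = p_ * q_                   # |QR_n| = p'q', known only to the signer

# Fixed elements of QR_n (squares of units), chosen for reproducibility.
u, v, w = pow(2, 2, n), pow(3, 2, n), pow(5, 2, n)

def z(r, m):
    # Assumed combining function (illustrative): integer addition.
    return r + m

def sign(m, r, e):
    # s = (u * v^r * w^z(r,m))^(1/e); needs gcd(e, p'q') = 1.
    d = pow(e, -1, order)
    base = u * pow(v, r, n) * pow(w, z(r, m), n) % n
    return (r, pow(base, d, n), e)

def verify(m, sig):
    # Checks s^e == u * v^r * w^z(r,m) and that e is odd (length check omitted).
    r, s, e = sig
    base = u * pow(v, r, n) * pow(w, z(r, m), n) % n
    return e % 2 == 1 and pow(s, e, n) == base

sig = sign(m=6, r=3, e=13)
```

Computing the `e`-th root requires the group order, which is why only the holder of the factorization can sign, while verification needs public values only.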

3.2 SDH-based Combining Signature Scheme \((\mathcal{S}_{\mathrm{CMB},\mathrm{SDH}})\)

We also present an SDH-based variant \({\mathcal {S}_{ \text {CMB} , \text {SDH} }}\) of the combining signature scheme. We remark that for the Camenisch–Lysyanskaya scheme there already exists a corresponding SDH-based variant, originally introduced in [5] and proven secure in [1, 18]. Similar to \({\mathcal {S}_{ \text {CMB} , \text {SRSA} }}\), we obtain the SDH-based Camenisch–Lysyanskaya scheme when instantiating the combining function with CMB 1. In the same way, we can also get SDH-based variants of the Fischlin signature scheme (using CMB 2) and of Zhu’s scheme (using Lemma 2). In the SDH-based combining scheme, \(\mathsf {SIG.Sign}(\mathit{SK},m)\) first chooses a random \(r \in\mathcal{R}_{\mathsf {CMB}}\) and a random \(t \in \mathcal {Z} _{\mathsf {CMB}}\). It then computes the signature \(\sigma=(r,s,t)\) with \(s = ( a b ^{ r } c ^{z( r ,m)} )^{\frac{1}{x+ t }}\). Given a signature \(\sigma=(r,s,t)\), \(\mathsf {SIG.Verify}(\mathit{PK},m,\sigma)\) checks if \(e ( s ,\mathit{PK}g_{2}^{ t } )\stackrel {?}{=}e ( a b ^{ r } c ^{z( r ,m)},g_{2} )\).

3.3 SRSA-Based Chameleon Hash Signature Scheme \((\mathcal{S}_{\mathrm{CH},\mathrm{SRSA}})\)

The signature scheme \({\mathcal {S}_{ \text {CH} , \text {SRSA} }}\) is defined in the SRSA setting. \(\mathsf {SIG.KeyGen}(1^{\kappa})\) additionally generates the key material \((\mathit{SK}_{\mathsf {CH}},\mathit{PK}_{\mathsf {CH}})\) for a chameleon hash function CH using \(\mathsf {CH.KeyGen}(1^{\kappa})\). The value \(\mathit{PK}_{\mathsf {CH}}\) is added to the scheme’s public key \(\mathit{PK}\). (\(\mathit{SK}_{\mathsf {CH}}\) is not required. However, it may be useful when turning the signature scheme into an online-offline signature scheme [22].) The signature generation algorithm \(\mathsf {SIG.Sign}(\mathit{SK},m)\) first chooses a random \(r \in\mathcal{R}_{\mathsf {CH}}\) and a random prime \(e\in E\). It then outputs the signature \(\sigma=(r,s,e)\) on a message \(m\) where \(s = ( u v ^{ch( r ,m)} )^{\frac{1}{ e }}\). To verify a purported signature \(\sigma=(r,s,e)\) on \(m\), \(\mathsf {SIG.Verify}(\mathit{PK},m,\sigma )\) checks if \(e\) is odd, if \(|e|_{2}=l_{e}\), and if \(s ^{ e }\stackrel {?}{=} u v ^{ch( r ,m)}\).

3.4 SDH-Based Chameleon Hash Signature Scheme \((\mathcal{S}_{\mathrm{CH},\mathrm{SDH}})\)

Let us now define a new variant of the chameleon hash signature scheme that is based on the SDH assumption. Again, \(\mathsf {SIG.KeyGen}(1^{\kappa})\) also adds the public key \(\mathit{PK}_{\mathsf {CH}}\) of a chameleon hash function to \(\mathit{PK}\). In the SDH setting, \(\mathsf {SIG.Sign}(\mathit{SK},m)\) first chooses a random \(r \in\mathcal{R}_{\mathsf {CH}}\) and a random \(t \in \mathcal {Z} _{\mathsf {CH}}\). Using \(\mathit{SK}=x\), it then outputs the signature \(\sigma\) on \(m\) as \(\sigma=(r,s,t)\) where \(s = ( a b ^{ch( r ,m)} )^{\frac{1}{x+ t }}\). To verify a given signature \(\sigma=(r,s,t)\) on \(m\), \(\mathsf {SIG.Verify}(\mathit{PK},m,\sigma)\) checks if \(e ( s ,\mathit{PK}g_{2}^{ t } )\stackrel {?}{=}e ( a b ^{ch( r ,m)},g_{2} )\). A suitable chameleon hash function can, for example, be found in [15].

3.5 The Cramer–Shoup Signature Scheme \((\mathcal{S}_{\mathrm{CS},\mathrm{SRSA}})\)

Let us now review the Cramer–Shoup signature scheme that is defined in the SRSA setting. The Cramer–Shoup scheme \({\mathcal {S}_{ \text {CS} , \text {SRSA} }}\) also uses a collision-resistant hash function \(\mathsf {H}=(\mathsf {H.KeyGen},\mathsf {H.Eval})\) with message space \(\mathcal{M}_{\mathsf {H}}=\{0,1\}^{*}\) and hash space \(\mathcal {H}_{\mathsf {H}}=\{0,1\}^{l_{c}}\). The message space of the signature scheme is thus extended to \(\mathcal {M} _{\mathsf {SIG}}=\{0,1\}^{*}\). We assume \(l_{c}<l_{e}<l_{n}/2-1\).

- \(\mathsf {SIG.KeyGen}(1^{\kappa})\) additionally computes a random \(l_{e}\)-bit prime \(\tilde {e} \). The secret key is \(\mathit{SK}=(p,q)\); the public key is \(\mathit{PK}=(n, \tilde {e} )\).

- \(\mathsf {SIG.Sign}(\mathit{SK},m)\) first chooses a random \(r\in QR_{n}\) and evaluates (the chameleon hash function, as shown below) \(c=r^{ \tilde {e} } /v^{h(m)}\bmod n\). Then it draws a random \(l_{e}\)-bit prime \(e\neq \tilde {e} \) and computes the value \(s= (uv^{h(c)} )^{\frac{1}{e}}\bmod n\). The signature is \(\sigma=(r,s,e)\).

- \(\mathsf {SIG.Verify}(\mathit{PK},m,\sigma)\) re-computes \(c=r^{ \tilde {e} } /v^{h(m)}\bmod n\) and checks if \(s^{e}\stackrel{?}{=} uv^{h(c)}\bmod n\), if \(e\) is odd, and if \(|e|_{2}=l_{e}\).

It might not be obvious that the underlying structure of the Cramer–Shoup signature scheme is similar to that of the schemes in the chameleon hash class. To explain this we show that \(c=r^{ \tilde {e} } /v^{h(m)}\bmod n\) indeed constitutes a chameleon hash function. We emphasize that this chameleon hash function uses the same modulus \(n\) (and the same generator \(v\)) that is also required for computing \(s\). As a consequence, its security cannot be based on the difficulty of factoring. However, security can still be based on the SRSA assumption.

Lemma 3

In the SRSA setting, let \(\mathsf {H}=(\mathsf {H.KeyGen},\mathsf {H.Eval})\) be a \((t_{\mathsf{H}},\epsilon_{\mathsf{H}})\)-collision-resistant hash function with message space \(\mathcal {M} _{\mathsf {H}}=\{0,1\}^{*}\) and output space \(\mathcal{H}_{\mathsf {H}}=\{0,1\} ^{l_{c}}\). Let \(k_{\mathsf{H}}\) be generated as \(k_{\mathsf {H}}\leftarrow \mathsf {H.KeyGen}(1^{\kappa})\) and \(\tilde {e} \in E\) be a prime with \(l_{c}<l_{e}<l_{n}/2-1\). Set \(\mathit{PK}_{\mathsf {CH}}=(k_{\mathsf {H}},\tilde {e},n,v=v'^{ \tilde {e} }\bmod n)\) and \(\mathit{SK}_{\mathsf {CH}}=v'\) for some random \(v'\in QR_{n}\). Then, \(ch(r,m)=h (v^{-h(m)}r^{ \tilde {e} }\bmod n )\), \(ch^{-1}(r,m,m')=r\cdot(v')^{(h(m')-h(m))}\bmod n\), and the algorithms to set up \(\mathit{SK}_{\mathsf {CH}}\) and \(\mathit{PK}_{\mathsf {CH}}\) constitute a chameleon hash function with \(\mathcal {M} _{\mathsf {CH}}=\{0,1\}^{*}\), \(\mathcal {R} _{\mathsf {CH}}=QR_{n}\), and \(\mathcal {C} _{\mathsf {CH}}=\{ 0,1\}^{l_{c}}\) that is \((t,3\epsilon_{\mathsf{H}}/2+3\epsilon_{\text{SRSA}})\)-collision-resistant with \(t\approx\min\{t_{\mathsf{H}},t_{\text{SRSA}}\}\).

Proof

First, given \(\mathit{SK}_{\mathsf {CH}}\), \(r\in \mathcal {R} _{\mathsf {CH}}\), and \(m,m'\in \mathcal {M} _{\mathsf {CH}}\), we can easily find \(r'\in \mathcal {R} _{\mathsf {CH}}\) such that \(ch(r,m)=ch(r',m')\) by computing \(r'=r\cdot(v')^{(h(m')-h(m))}\bmod n\).

Second, observe that if \(r\) is chosen uniformly at random from \(QR_{n}\), \(v^{-h(m)}r^{ \tilde {e} }\bmod n\) is also distributed uniformly at random for all \(m\in \mathcal {M} _{\mathsf {CH}}\). Therefore, \(h(v^{-h(m)}r^{ \tilde {e} }\bmod n)\) is equally distributed for every \(m\in \mathcal {M} _{\mathsf {CH}}\).

Third, for contradiction assume a \(t\approx\min\{t_{\mathsf{H}},t_{\text{SRSA}}\}\)-time attacker \(\mathcal {A}\) that can find \((m,r),(m',r')\) with \(m\neq m'\) such that \(h (v^{-h(m)}r^{ \tilde {e} }\bmod n )=h (v^{-h(m')}(r')^{ \tilde {e} }\bmod n )\) with probability better than \(\frac{3}{2}\epsilon_{\mathsf{H}}+3\epsilon_{\text{SRSA}}\). Then, we can construct an algorithm \(\mathcal {B}\) that breaks the SRSA assumption or the security of the hash function. \(\mathcal {B}\) first guesses, correctly with probability \(\geq1/3\), whether \(\mathcal {A}\) outputs a collision such that (1) \(h(m)=h(m')\), (2) \(v^{-h(m)}r^{ \tilde {e} }\bmod n \neq v^{-h(m')}(r')^{ \tilde {e} }\bmod n\) and \(h(m)\neq h(m')\), or (3) \(v^{-h(m)}r^{ \tilde {e} }\bmod n = v^{-h(m')}(r')^{ \tilde {e} }\bmod n\) and \(h(m)\neq h(m')\). In the first two cases, \(\mathcal {B}\) can output a collision for the hash function with probability better than \(\epsilon_{\mathsf{H}}\). In the last case, \(\mathcal {B}\) can break an RSA challenge \((\hat {u} \bmod\hat{n},\hat{n},\hat{e})\) with probability better than \(\epsilon_{\text{SRSA}}\): \(\mathcal {B}\) just inputs \((v:=\hat{u},{ \tilde {e} }:=\hat{e},n:=\hat {n})\) into \(\mathcal {A}\). Since for the output of \(\mathcal {A}\) it holds that \(v^{h(m')-h(m)}=(r'/r)^{ \tilde {e} } \bmod n\) and because \(|h(m')-h(m)|<{ \tilde {e} }\) and \({ \tilde {e} }\) is prime, \(\mathcal {B}\) can compute \(a,b\in\mathbb{Z}\) such that \(\gcd((h(m')-h(m)),{ \tilde {e} })=a(h(m')-h(m))+b{ \tilde {e} }=1\). It can thus find a solution to the RSA challenge as \((r'/r)^{a}v^{b}\bmod n\). All cases contradict our starting assumptions, which implies that \(\mathcal {A}\) cannot exist and proves Lemma 3. □
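The trapdoor property from the first step of the proof can be checked numerically. The sketch below is illustrative only: the modulus, the exponent `e_tilde`, the trapdoor `v_prime`, and the truncated SHA-256 stand-in for \(h\) are all toy assumptions and not parameters from the scheme.

```python
import hashlib

n = 23 * 47                      # toy safe modulus (p' = 11, q' = 23)
e_tilde = 7                      # public prime exponent (toy size)
v_prime = 4                      # trapdoor SK_CH, a square modulo n
v = pow(v_prime, e_tilde, n)     # public v = v'^e_tilde mod n

def h(m: bytes) -> int:
    # Toy hash with outputs below e_tilde, mirroring l_c < l_e.
    return int.from_bytes(hashlib.sha256(m).digest(), "big") % 4

def inner(r: int, m: bytes) -> int:
    # v^{-h(m)} * r^{e_tilde} mod n, the value that ch(r, m) hashes.
    return pow(v, -h(m), n) * pow(r, e_tilde, n) % n

def collide(r: int, m: bytes, m_new: bytes) -> int:
    # r' = r * v'^{h(m') - h(m)} mod n, computed with the trapdoor v'.
    return r * pow(v_prime, h(m_new) - h(m), n) % n

r = 16
r_new = collide(r, b"old message", b"new message")
```

Because the inner values already agree, the outer hash agrees as well, so `(r, m)` and `(r_new, m_new)` collide under \(ch\).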

Unfortunately, the proof of the more general chameleon hash scheme class does not formally transfer to the Cramer–Shoup signature scheme because in the Cramer–Shoup scheme parts of the key material of its chameleon hash function are not chosen independently of the rest of the signature scheme. In particular, the chameleon hash function uses the same RSA modulus n and the same value v. This requires slightly more care in the security proof. We provide a full proof of the Cramer–Shoup signature scheme in Sect. 7.

4 Security

Theorem 1

The Cramer–Shoup signature scheme, the combining signature class (in both the SRSA and the SDH setting), and the chameleon signature class (in both the SRSA and the SDH setting) are tightly secure against adaptive chosen message attacks. In particular, this implies that the Camenisch–Lysyanskaya (with modified parameter sizes), the Fischlin, the Zhu, and the SDH-based Camenisch–Lysyanskaya scheme are tightly secure against strong existential forgeries under adaptive chosen message attacks.

We subsequently provide the intuition behind our security proofs. In Sect. 5, we present a full proof of security for \({\mathcal {S}_{ \text {CMB} , \text {SRSA} }}\), which seems to us to be the technically most involved SRSA-based reduction. The proof of \({\mathcal {S}_{ \text {CMB} , \text {SDH} }}\) proceeds analogously and appears in Sect. 6. We then show how to transfer our technique to \({\mathcal {S}_{ \text {CH} }}\) in Sect. 7 by providing a full proof of security of the Cramer–Shoup signature scheme. In the following, we only concentrate on the core idea of our new proofs. When formally proving the schemes secure, we additionally have to put some effort into guaranteeing that the public keys produced by the simulator are indistinguishable from a real public key. To this end the simulator has to randomize certain group elements via exponentiation by random values. Fortunately, this does not pose a serious threat to the overall proof strategy.

4.1 The SRSA-Based Combining Signature Scheme

Let us first consider the SRSA-based schemes, where \(\mathcal {B}\) is given an SRSA challenge \((\hat{u},n)\) with \(\hat{u}\in\mathbb{Z}_{n}^{*}\). Assume that attacker \(\mathcal {A}\) issues \(q\) signature queries \(m_{1},\ldots ,m_{q}\in \mathcal {M} _{\mathsf {SIG}}\). As a response to each query \(m_{i}\) with \(i\in[1;q]\), \(\mathcal {A}\) receives a corresponding signature \(\sigma_{i}=(r_{i},s_{i},e_{i})\in \mathcal {R} _{\mathsf {CMB}}\times QR_{n} \times E\). Recall that the existing security proofs for schemes of the combining class (e.g. [10]) consider two forgers that loosely reduce from the SRSA assumption. This is the case when it holds for \(\mathcal {A}\)’s forgery \((m^{*},(r^{*},s^{*},e^{*}))\) that \(\gcd( e ^{*},\prod^{q}_{i=1} e _{i})\neq1\). Given that \(|e^{*}|_{2}=l_{e}\), this means that \(e^{*}=e_{j}\) for some \(j\in[1;q]\). Let us concentrate on the case that \(r^{*}\neq r_{j}\). The proof of the remaining case (\(e^{*}=e_{j}\), \(r^{*}=r_{j}\), and \(m^{*}\neq m_{j}\)) is very similar. It additionally exploits the properties of the combining function.

The proofs in [4, 9, 10, 23, 24] work as follows: the simulator \(\mathcal {B}\) first guesses \(j \stackrel {\$}{\leftarrow } \{1,\ldots,q\}\). By construction, \(\mathcal {B}\) can answer all signature queries, but it can extract a solution to the SRSA challenge only if \(\mathcal {A}\) outputs a forgery where \(e^{*}=e_{j}\). In all other cases (if \(e^{*}=e_{i}\) for some \(i\in\{1,\ldots,q\}\setminus\{j\}\)), \(\mathcal {B}\) just aborts. Since the number of signature queries \(q\) rises polynomially in the security parameter, the probability for \(\mathcal {B}\) to correctly guess \(j\) in advance is \(q^{-1}\) and thus not negligible. However, the security reduction loses a factor of \(q\) here. Our aim is to improve this reduction step. Ideally, any forgery which contains \(e^{*}\in\{e_{1},\ldots,e_{q}\}\) helps the simulator to break the SRSA assumption. As a result, the simulator can completely avoid guessing.

The main task is to re-design the way \(\mathcal {B}\) computes \(\mathcal {A}\)’s input parameters: for every \(i\in\{1,\ldots,q\}\), we must have one choice of \(r_{i}\) such that \(\mathcal {B}\) can simulate the signing oracle without having to break the SRSA challenge. On the other hand, if \(\mathcal {A}\) outputs \((m^{*},(r^{*},s^{*},e^{*}))\) with \(e^{*}=e_{i}\) for some \(i\in[1;q]\) and \(r^{*}\neq r_{i}\), \(\mathcal {B}\) must be able to compute a solution to the SRSA challenge. Let us now go into more detail.

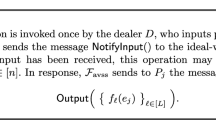

We exploit that the \(r_{i}\) and the primes \(e_{i}\) are chosen independently at random by the signer. So, they can be specified prior to the signature queries in the simulation. For simplicity, assume that \(\mathcal {B}\) can set up \(\mathcal {A}\)’s input parameters such that the verification of a signature \(\sigma=(r,s,e)\) always reduces to

$$ s^{e}=\hat{u}^{f(r)}. $$(1)

Suppose that neither \(\hat{u}\) nor \(f: \mathcal {R} _{\mathsf {CMB}}\rightarrow\mathbb{N}\) are ever revealed to \(\mathcal {A}\). Now, \(\mathcal {B}\)’s strategy to simulate the signing oracle is to define \(r_{1},\ldots,r_{q}\) such that for every \(i\in[1;q]\) it can compute a prime \(e_{i}\in E\) with \(e_{i}\mid f(r_{i})\). Without having to break the SRSA assumption, \(\mathcal {B}\) can then compute \(s_{i}=\hat{u}^{f(r_{i})/e_{i}}\) and output the \(i\)th signature as \((r_{i},s_{i},e_{i})\). Let us now turn our attention to the extraction phase where \(\mathcal {B}\) is given \(\mathcal {A}\)’s forgery \((m^{*},(r^{*},s^{*},e^{*}))\). By assumption we have \(e^{*}=e_{i}\) for some \(i\in[1;q]\) and \(r^{*}\neq r_{i}\). \(\mathcal {B}\) wants to have that \(\gcd(e^{*},f(r^{*}))=D<e^{*}\) (or \(f(r^{*})\neq0\bmod e^{*}\)) because then it can find a solution to the SRSA challenge by computing \(a,b\in\mathbb{Z}\setminus\{0\}\) with \(af(r^{*})/D+be^{*}/D=1\) using the extended Euclidean algorithm and outputting

$$ \bigl(\hat{u}^{D/e^{*}}=(s^{*})^{a}\hat{u}^{b},\ e^{*}/D\bigr). $$

In the literature this computation is sometimes referred to as Shamir’s trick. \(\mathcal {B}\)’s strategy to guarantee \(\gcd(e^{*},f(r^{*}))=D<e^{*}\) is to ensure that \(e^{*}=e_{i}\nmid f(r^{*})\). Unfortunately, \(\mathcal {B}\) cannot foresee \(r^{*}\). Therefore, the best solution is to design \(f\) such that \(e_{i}\nmid f(r^{*})\) for all \(r^{*}\neq r_{i}\).
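Shamir’s trick can be illustrated with a few lines of modular arithmetic. This is a minimal sketch restricted to the case \(\gcd(e,f)=1\), which is the relevant one when \(e\) is prime and does not divide \(f\); the modulus, the challenge element `u_hat`, and the values of `e` and `f` are toy assumptions, and the group order is used only to build the test instance.

```python
def egcd(x, y):
    # Returns (g, a, b) with a*x + b*y == g == gcd(x, y).
    if y == 0:
        return x, 1, 0
    g, a, b = egcd(y, x % y)
    return g, b, a - (x // y) * b

def shamir_trick(s, u_hat, e, f, n):
    # Given s^e = u_hat^f (mod n) with gcd(e, f) = 1,
    # return y with y^e = u_hat (mod n).
    g, a, b = egcd(f, e)            # a*f + b*e = 1
    assert g == 1
    return pow(s, a, n) * pow(u_hat, b, n) % n

# Toy instance: n = 23 * 47, |QR_n| = 11 * 23 (used only to set up s).
n, order = 23 * 47, 11 * 23
u_hat, e, f = 9, 7, 10
s = pow(u_hat, f * pow(e, -1, order) % order, n)   # satisfies s^e = u_hat^f

y = shamir_trick(s, u_hat, e, f, n)
```

The function itself never touches the group order: it only needs the relation \(s^{e}=\hat{u}^{f}\) and the Bézout coefficients of \(e\) and \(f\).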

Obviously, \(\mathcal {B}\) makes strong demands on \(f\): the above requirements imply that we must have \(e_{i}\mid f(r)\Leftrightarrow r=r_{i}\). Additionally, \(f\) needs to be linear such that it can be embedded in the exponents of two group elements (\(u,v\) in the chameleon hash class). We now present our construction of \(f\) and argue that it perfectly fulfills all requirements. We define \(f: [0;2^{l_{e}-1}-1]\rightarrow \mathbb{Z}\) as

$$ f(r)=\sum_{i=1}^{q} r_{i} \prod^{q}_{j=1,j\neq i}e_{j} - r \sum_{i=1}^{q} \prod^{q}_{j=1,j\neq i}e_{j} $$

for \(r_{1},\ldots,r_{q}\in \mathcal {R} _{\mathsf {CMB}}\). Furthermore, \(e_{1},\ldots,e_{q}\in E\) must be distinct primes.

Lemma 4

Suppose we are given a set of \(q\) primes \(P=\{e_{1},\ldots,e_{q}\}\) with \(2^{l_{e}-1}\leq e_{i}\leq2^{l_{e}}-1\) for \(i\in[1;q]\) and \(q\) integers \(r_{1},\ldots,r_{q}\in[0;2^{l_{e}-1}-1]\). Then we have for the above definition of \(f\):

$$ e_{k}\mid f(r)\ \Leftrightarrow\ r=r_{k} $$

for all \(k\in[1;q]\).

Proof

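For convenience define \(\bar{e}_{i}=\prod^{q}_{{j=1, j\neq i}}e_{j}\) for \(i\in[1;q]\). Observe that because the \(e_{i}\) are all distinct primes we get for \(i,i'\in[1;q]\) that

$$ e_{i}\mid\bar{e}_{i'}\ \Leftrightarrow\ i\neq i'. $$

Using this notation, we have for all \(k\in[1;q]\) that

$$ f(r)=\sum_{i=1}^{q}(r_{i}-r)\bar{e}_{i}=(r_{k}-r)\bar{e}_{k} \bmod e_{k}. $$

For \(r=r_{k}\) we immediately get \(f(r_{k})=0\bmod e_{k}\). Now, assume \(r\neq r_{k}\). Since the \(e_{i}\) are distinct primes and as \(|r_{k}-r|<e_{k}\), it holds that

$$ f(r)=(r_{k}-r)\bar{e}_{k}\neq0 \bmod e_{k}, $$

which proves the lemma. If we assume that \(|r_{i}|_{2}<l_{e}+1\) for all \(i\in[1;q]\) we can also have \(r_{1},\ldots,r_{q}\in[-2^{l_{e}};2^{l_{e}}-1]\). □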
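Lemma 4 and the resulting signing simulation can be checked exhaustively for small parameters. The sketch below assumes \(l_{e}=5\), five hand-picked primes and values \(r_{i}\), and a toy modulus with challenge element `u_hat`; none of these values come from the paper.

```python
from math import prod

n, u_hat = 23 * 47, 9             # toy modulus and challenge element
primes = [17, 19, 23, 29, 31]     # distinct 5-bit primes e_1..e_q (l_e = 5)
rs = [3, 15, 0, 7, 7]             # r_1..r_q in [0, 2^(l_e-1) - 1] = [0, 15]

def e_bar(i):
    # Product of all primes except e_i.
    return prod(e for j, e in enumerate(primes) if j != i)

def f(r):
    # f(r) = sum_i r_i * e_bar_i - r * sum_i e_bar_i = sum_i (r_i - r) * e_bar_i
    return sum((r_i - r) * e_bar(i) for i, r_i in enumerate(rs))

def lemma4_holds():
    # e_k | f(r)  <=>  r = r_k, for every r in the domain and every k.
    return all((f(r) % e_k == 0) == (r == rs[k])
               for r in range(16) for k, e_k in enumerate(primes))

def simulated_s(i):
    # s_i = u_hat^(f(r_i)/e_i) mod n; the division is exact since e_i | f(r_i).
    return pow(u_hat, f(rs[i]) // primes[i], n)
```

No root extraction is needed in `simulated_s`: the exponent is an integer, so the simulator can answer the query without knowing the factorization of \(n\).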

4.2 The SDH-Based Combining Signature Scheme

Under the SDH assumption, the situation is very similar. Here we also analyze three possible types of forgeries \((m^{*},(r^{*},s^{*},t^{*}))\): either (1) \(t^{*}\notin\{t_{1},\ldots,t_{q}\}\), or (2) \(t^{*}=t_{i}\) with \(i\in[1;q]\) but \(r^{*}\neq r_{i}\), or (3) \(t^{*}=t_{i}\), \(r^{*}=r_{i}\) (but \(m^{*}\neq m_{i}\)) with \(i\in[1;q]\). Again, we concentrate on the second case. At the beginning, \(\mathcal {B}\) is given an SDH challenge \(( \hat{g}_{1},\hat {g}^{x}_{1},\hat{g}^{(x^{2})}_{1},\ldots,\hat{g}^{ (x^{q}) }_{1},g_{2},g^{x}_{2} )\). This time, \(\mathcal {B}\) chooses \(\mathit{PK}=g^{x}_{2}\). In the SDH setting, (1) transfers to

$$ s^{x+t}=\hat{g}_{1}^{f(r,x)}. $$

In contrast to the SRSA setting, \(f\) is now a polynomial with indeterminate \(x\) and maximal degree \(q\). Again, \(\mathcal {B}\) must keep \(f(r,x)\) and the \(\hat{g}_{1}^{(x^{i})}\) secret from \(\mathcal {A}\). We define \(f(r,x)\) with \(f: \mathbb{Z}_{p}\times\mathbb{Z}_{p}[x]\rightarrow\mathbb {Z}_{p}[x]\) to map polynomials of maximal degree \(q\) in indeterminate \(x\) to polynomials of maximal degree \(q\) as

$$ f(r,x)=\sum_{i=1}^{q} r_{i} \prod^{q}_{j=1,j\neq i}(x+t_{j}) - r \sum_{i=1}^{q} \prod^{q}_{j=1,j\neq i}(x+t_{j}) $$(4)

for \(r_{1},\ldots,r_{q}\in \mathcal {R} _{\mathsf {CMB}}\) and distinct \(t_{1},\ldots ,t_{q}\in \mathbb {Z}_{p}\). (Recall that \(\mathcal {R} _{\mathsf {CMB}}\subseteq\mathbb{Z}_{p}\), so \(f\) is useful if we choose the \(r_{i}\) from \(\mathcal {R} _{\mathsf {CMB}}\).) Using the SDH challenge, \(\mathcal {B}\) can easily compute \(\hat{g}_{1}^{f(r,x)}\) since \(f(r,x)\) has maximal degree \(q\). In the following, given a degree-\(q\) polynomial \(f(r,x)\) and \((x+t)\), we will often apply long division to compute a polynomial \(f'(r,x)\) of degree \(q-1\) and \(D\in \mathbb {Z}_{p}\) such that \(f(r,x)=f'(r,x)(x+t)+D\). We use \((x+t)\mid f(r,x)\) to denote that long division results in \(D=0\). Similarly, we use \((x+t)\nmid f(r,x)\) to denote that \(D\neq0\).

Lemma 5

Let a set \(T=\{t_{1},\ldots,t_{q}\}\subseteq\mathbb{Z}_{p}\) and \(q\) values \(r_{1},\ldots,r_{q}\in \mathbb {Z}_{p}\) be given. Then the above definition of \(f(r,x)\) (4) guarantees that

$$ (x+t_{k})\mid f(r,x)\ \Leftrightarrow\ r=r_{k} $$

for all \(k\in[1;q]\).

Proof

Define \(\bar{t}_{i}:=\prod^{q}_{k=1,k\neq i}{(t_{k}+x)}\) for \(i\in[1;q]\). Since the \(t_{i}\) are all distinct, we get for \(i,i'\in[1;q]\) that

$$ (x+t_{i})\mid\bar{t}_{i'}\ \Leftrightarrow\ i\neq i'. $$

Also, observe that for every \(k\in[1;q]\) we always have

$$ (x+t_{k})\mid \bigl(f(r,x)-(r_{k}-r)\bar{t}_{k}\bigr). $$

From this we immediately get for \(r=r_{k}\) that \((x+t_{k})\mid f(r_{k},x)\). On the other hand, for \(r\neq r_{k}\), we get that \((x+t_{k})\nmid(r_{k} - r) \bar{t}_{k}\). However, this also shows that we cannot have \((x+t_{k})\mid f(r,x)\), as otherwise \((x+t_{k})\nmid(f(r,x) - (r_{k} - r) \bar{t}_{k})\). So it must hold that \((x+t_{k})\nmid f(r,x)\), which concludes the proof. □
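Lemma 5 can be checked numerically using the remainder theorem: dividing \(f(r,x)\) by \((x+t_{k})\) leaves the constant \(D=f(r,-t_{k})\). The following sketch assumes a toy prime \(p=101\) and hand-picked values for the \(t_{i}\) and \(r_{i}\); it evaluates \(f\) in the form \(\sum_{i}(r_{i}-r)\bar{t}_{i}\), which equals definition (4).

```python
P = 101                     # toy prime group order p
ts = [5, 17, 42, 99]        # distinct t_1..t_q in Z_p
rs = [8, 8, 30, 77]         # r_1..r_q in Z_p

def f_at(r, x):
    # f(r, x) = sum_i (r_i - r) * prod_{j != i} (x + t_j)  (mod p)
    total = 0
    for i, r_i in enumerate(rs):
        term = (r_i - r) % P
        for j, t_j in enumerate(ts):
            if j != i:
                term = term * (x + t_j) % P
        total = (total + term) % P
    return total

def remainder(r, k):
    # Remainder D of f(r, x) divided by (x + t_k), i.e. f(r, -t_k) mod p.
    return f_at(r, -ts[k] % P)

def lemma5_holds():
    # (x + t_k) | f(r, x)  <=>  r = r_k, for all r in Z_p and all k.
    return all((remainder(r, k) == 0) == (r == rs[k])
               for r in range(P) for k in range(len(ts)))
```

At \(x=-t_{k}\) every summand with \(i\neq k\) vanishes, so only \((r_{k}-r)\prod_{j\neq k}(t_{j}-t_{k})\) survives, which is nonzero exactly when \(r\neq r_{k}\).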

It remains to show how to extract a solution to the SDH challenge from \(\mathcal {A}\)’s forgery. If \(r\neq r_{k}\), then long division gives us \(D\in\mathbb{Z}_{p}\) with \(D\neq0\) and a new polynomial \(\tilde{f}_{t_{k}}(r,x)\) with coefficients in \(\mathbb{Z}_{p}\) such that \(f(r,x)=\tilde{f}_{t_{k}}(r,x)(x+t_{k})+D\). Also, \(\tilde{f}_{t_{k}}(r,x)\) has degree \(<q\) and can be evaluated with the help of the SDH challenge. Similar to the SRSA class, we can find a solution to the SDH challenge from \(\mathcal {A}\)’s forgery as

$$ \Bigl(\hat{g}_{1}^{1/(x+t_{k})}=\bigl(s\cdot\hat{g}_{1}^{-\tilde{f}_{t_{k}}(r,x)}\bigr)^{1/D},\ t_{k}\Bigr). $$

4.3 Security of the Chameleon Hash Signature Class

The chameleon hash class is also tightly secure in the SRSA and the SDH setting. For convenience let \(c_{i}=ch(r_{i},m_{i})\) for \(i\in[1;q]\) and \(c^{*}=ch(r^{*},m^{*})\). Altogether there are again three types of forgeries to consider: (1) \(e^{*}\notin\{e_{1},\ldots,e_{q}\}\) (\(t^{*}\notin\{t_{1},\ldots,t_{q}\}\)), (2) \(e^{*}=e_{i}\) (\(t^{*}=t_{i}\)) but \(c^{*}\neq c_{i}\), and (3) \(e^{*}=e_{i}\) (\(t^{*}=t_{i}\)), \(c^{*}=c_{i}\) but \(m^{*}\neq m_{i}\). The proof of (1) is again straightforward and very similar to the corresponding proof of the combining class. The proof of (3) clearly reduces to the security properties of the chameleon hash function. The proof of (2) requires our new technique to set up \(f(c)\) (\(f(c,x)\)). Recall Sect. 4 where we analyzed the equations \(s^{e}=\hat{u}^{f(r)}\) and \(f(r)=\sum_{i=1}^{q} r_{i} \prod^{q}_{{j=1,j\neq i}}e_{j} - r \sum_{i=1}^{q} \prod^{q}_{{j=1,j\neq i}}e_{j}\) in the SRSA setting (and \(s^{x+t}=\hat{g}_{1}^{f(r,x)}\) and \(f(r,x)=\sum_{i=1}^{q} r_{i} \prod^{q}_{{j=1,j\neq i}}(x+t_{j}) - r \sum_{i=1}^{q} \prod^{q}_{{j=1,j\neq i}}(x+t_{j})\) in the SDH setting). In the proof of the combining class the \(r_{i}\) are random values that can be specified prior to the simulation phase. In the proof of the chameleon hash class we take a similar approach. However, now we use the \(c_{i}\), the output values of the chameleon hash function, to set up \(f\). In the initialization phase of the proof we choose \(q\) random input pairs \(({m'}_{i},{r'}_{i})\in \mathcal {M} _{\mathsf {CH}}\times \mathcal {R} _{\mathsf {CH}}\), \(i\in[1;q]\), to compute the \(c_{i}=\mathsf {CH.Eval}(\mathit{PK}_{\mathsf {CH}},m'_{i},r'_{i})\). Then we prepare the function \(f(c)\) (\(f(c,x)\)) with \(C=\{c_{1},\ldots,c_{q}\}\) and a set of \(q\) random \(l_{e}\)-bit primes (random values \(t_{1},\ldots,t_{q}\in \mathbb {Z}_{p}\)) as in the proofs of the combining class and exploit Lemma 4 (Lemma 5). Next, we embed \(f(c)\) (\(f(c,x)\)) in the exponents of the two group elements \(u,v\) (\(a,b\)).
In the simulation phase we give the simulator \(\mathit{SK}_{\mathsf {CH}}\) to map the attacker’s messages \(m_{i}\) to the prepared \(c_{i}\) by computing \(r_{i}=\mathsf {CH.Coll}(\mathit{SK}_{\mathsf {CH}},r'_{i},m'_{i},m_{i})\). In this way we can successfully simulate the signing oracle. In the extraction phase, the properties of the chameleon hash function guarantee that \(c^{*}\notin\{c_{1},\ldots,c_{q}\}\) (otherwise we can break the security of the chameleon hash function). This ensures that we can find a solution to the SRSA challenge (SDH challenge).

5 Security Analysis of \({\mathcal {S}_{ \text {CMB} , \text {SRSA} }}\)

In the following, we provide a detailed security proof for the SRSA-based combining class. But first we establish a useful lemma on the number of primes with a given bit length.

Lemma 6

Let \(E\) be the set of primes in the interval \([2^{l_{e}-1};2^{l_{e}}-1]\). For \(l_{e}\geq33\)

Proof

Rosser presented explicit bounds for the prime counting function \(\pi(x)\) in [20]. For \(x\geq55\), he showed that

$$ \frac{x}{\ln(x)+2}<\pi(x)<\frac{x}{\ln(x)-4}. $$

For \(l_{e}\geq33 > 4+ \frac{20}{\ln(2)}\) we have in particular that (a) \(\frac{10+2\ln(2)}{l_{e}\ln(2)}< 1/2\) and \(2^{l_{e}}> 55\). At the same time, since \(l_{e}>9> 1+\frac{5}{\ln(2)}\), we get (b) \(l_{e}\ln(2)>l_{e}\ln(2)-4-\ln(2)>1\). Also, since \(l_{e}>7>\frac{2}{1-\ln(2)}\) we have that (c) \(l_{e}>l_{e}\ln(2)+2\). We exploit that \(\pi(2^{l_{e}}-1)=\pi(2^{l_{e}})\) and thus the lower bound for \(\pi(2^{l_{e}})\) must also hold for \(\pi(2^{l_{e}}-1)\). We can now bound the number of \(l_{e}\)-bit primes as

□
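As a sanity check on the ingredients of this proof, the following sketch counts primes with a sieve and compares the result with Rosser’s bounds \(x/(\ln x+2)<\pi(x)<x/(\ln x-4)\). The choice \(l_{e}=16\) is an assumption made only to keep the sieve small; it lies below the range \(l_{e}\geq33\) covered by the lemma, but Rosser’s bounds already apply since \(2^{16}\geq55\).

```python
from math import log

def prime_count(x):
    # Sieve of Eratosthenes: number of primes <= x.
    sieve = bytearray([1]) * (x + 1)
    sieve[0:2] = b"\x00\x00"
    for i in range(2, int(x ** 0.5) + 1):
        if sieve[i]:
            # Clear all multiples of i starting at i*i.
            sieve[i * i :: i] = bytearray(len(range(i * i, x + 1, i)))
    return sum(sieve)

l_e = 16
x = 2 ** l_e
pi_x = prime_count(x)
# Primes with exactly l_e bits lie in [2^(l_e-1), 2^l_e - 1].
num_le_bit_primes = pi_x - prime_count(2 ** (l_e - 1) - 1)

lower = x / (log(x) + 2)
upper = x / (log(x) - 4)
```

The count `num_le_bit_primes` is the size of \(E\) for this toy bit length.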

Now let us proceed to the first tight security proof. The proof is similar to the original proof of the Cramer–Shoup signature scheme.

Lemma 7

Assume we work in the SRSA setting such that the \((t_{\text{SRSA}},\epsilon_{\text{SRSA}})\)-SRSA assumption holds and CMB is a \((t_{\mathsf{CMB}},\epsilon_{\mathsf{CMB}},\delta_{\mathsf{CMB}})\)-combining function. Then, the combining signature class as presented in Sect. 3.1 is \((q,t,\epsilon)\)-secure against adaptive chosen message attacks provided that

The proof of Lemma 7 is the first step in the proof of Theorem 1. It implies that the original Fischlin scheme and Zhu’s signature scheme as well as the modified Camenisch–Lysyanskaya scheme are tightly secure against existential forgeries under adaptive chosen message attacks.

Proof

Assume that \(\mathcal {A}\) is a forger that \((q,t,\epsilon)\)-breaks the strong existential unforgeability of \({\mathcal {S}_{ \text {CMB} , \text {SRSA} }}\). Then, we can construct a simulator \(\mathcal {B}\) that, by interacting with \(\mathcal {A}\), solves the SRSA problem in time \(t_{\text{SRSA}}\) with advantage \(\epsilon_{\text{SRSA}}\). We consider three types of forgers that, after \(q\) queries \(m_{1},\ldots,m_{q}\) and corresponding responses \((r_{1},s_{1},e_{1}),\ldots,(r_{q},s_{q},e_{q})\), partition the set of all possible forgeries \((m^{*},(r^{*},s^{*},e^{*}))\). In the proof, we treat all types of attackers differently. At the beginning, we let \(\mathcal {B}\) guess with probability at least \(\frac {1}{3}\) which forgery \(\mathcal {A}\) outputs. Lemma 7 then follows by a standard averaging argument. We assume that \(\mathcal {B}\) is given an SRSA challenge instance \(( \hat{u},n )\). Let \(\mbox{Pr}[S_{i}]\) denote the success probability of an attacker to successfully forge signatures in Game \(i\).

Type I Forger

(\(e^{*}\notin\{e_{1},\ldots,e_{q}\}\))

Suppose \(\mathcal {B}\) guesses that \(\mathcal {A}\) is a Type I Forger.

- Game 0:

This is the original attack game. By assumption, \(\mathcal {A}\) \((q,t,\epsilon)\)-breaks \({\mathcal {S}_{ \text {CMB} , \text {SRSA} }}\) when interacting with the signing oracle \(\mathcal {O} (\mathit{SK},\cdot)\). We have that

$$ \mbox {Pr}[S_{0}]=\epsilon. $$(5)

- Game 1:

Now, \(\mathcal {B}\) constructs the values \(u,v,w\) using the SRSA challenge instead of choosing them randomly from \(QR_{n}\). First, \(\mathcal {B}\) chooses \(q\) random primes \(e _{1}, \ldots, e _{q} \stackrel {\$}{\leftarrow } E \) and three random elements \(t '_{0}, t ''_{0} \stackrel {\$}{\leftarrow } \mathbb {Z}_{(n-1)/4}\) and \(t _{0} \stackrel {\$}{\leftarrow } \mathbb{Z}_{3(n-1)/4}\). In the following, let \(\bar{ e }:=\prod^{q}_{k=1}{ e _{k}}\), \(\bar{ e }_{i}:=\prod^{q}_{k=1,k\neq i}{ e _{k}}\) and \(\bar{ e }_{i,j}:=\prod^{q}_{k=1,k\neq i, k\neq j}{ e _{k}}\). The simulator computes \(u =\hat{u}^{2 t _{0}\bar{ e }},\ v =\hat{u}^{2 t '_{0}\bar{ e }},\ w =\hat{u}^{2 t ''_{0}\bar { e }}\) using the SRSA challenge. Since the \(t _{0}, t '_{0}, t ''_{0}\) are not chosen uniformly at random from \(\mathbb{Z}_{p'q'}\), we must analyze the success probability for \(\mathcal {A}\) to detect our construction. Observe that \((n-1)/4=p'q'+(p'+q')/2>p'q'\). Without loss of generality let \(p'>q'\). Now, the probability of a randomly chosen \(x\in\mathbb{Z}_{(n-1)/4}\) not to be in \(\mathbb{Z}_{p'q'}\) is

$$\begin{aligned} \mbox {Pr}[x \stackrel {\$}{\leftarrow } \mathbb{Z}_{(n-1)/4},\ x\notin\mathbb {Z}_{p'q'}] =& 1-\frac{|\mathbb{Z}_{p'q'}|}{|\mathbb{Z}_{(n-1)/4}|}=\frac {(p'+q')}{(2p'q'+p'+q')} \\ <& \frac{1}{q'+1}<2^{-(|q'|_2-1)}. \end{aligned}$$

With the same arguments we can show that \(t_{0}\) is also distributed almost uniformly at random in \(\mathbb{Z}_{p'q'}\) and \(\mathbb{Z}_{3p'q'}\). Since the \(e_{i}\) are primes smaller than \(p'\) and \(q'\), it holds that \(e _{i} \nmid p'q'\). Therefore, the distribution of the generators is almost equal to the previous game and we get by a union bound that

$$ \mbox {Pr}[S_{1}]\geq \mbox {Pr}[S_{0}]-3\cdot 2^{-(l_n/2-2)} . $$(6)

- Game 2:

Now, \(\mathcal {B}\) simulates \(\mathcal {O} (\mathit{SK},\cdot)\) by answering \(\mathcal {A}\)’s signature queries. Subsequently, set \(z_{j}=z(r_{j},m_{j})\) and \(z^{*}=z(r^{*},m^{*})\). The simulator \(\mathcal {B}\) sets \(\mathit{PK}=(u,v,w,n)\) and for all \(j\in\{1,\ldots,q\}\) it chooses a random \(r _{j} \in \mathcal{R}_{\mathsf {CMB}}\) and outputs \(\sigma_{j}=(r_{j},s_{j},e_{j})\) with \(s _{j}= ( u v ^{ r _{j}} w ^{z_{j}} )^{\frac {1}{ e _{j}}} =\hat{u}^{2( t _{0}+ t '_{0} r _{j}+ t ''_{0}z_{j})\bar{e}_{j}}\). The distribution of the values computed in this way is equal to the previous game and

$$ \mbox {Pr}[S_{2}]=\mbox {Pr}[S_{1}] . $$(7)

- Game 3:

-

Now, consider \(\mathcal {A}\)’s forgery (m ∗,(r ∗,s ∗,e ∗)). Define \(\hat{ e }=( t _{0}+ t '_{0} r ^{*}+ t ''_{0}z^{*})\). For \(\mathcal {A}\)’s forgery it holds that \(( s ^{*})^{ e ^{*}}=\hat{u}^{2\bar{ e }\hat{ e }}\). We also have that \(\gcd( e ^{*},2\bar { e }\hat{ e })=\gcd ( e ^{*},\hat{ e })\) since by assumption we know that \(\gcd( e ^{*},2\bar{ e })=1\). We will now analyze the probability for the event \(\gcd( e ^{*},\hat{ e })< e ^{*}\) to happen. If \(\gcd( e ^{*},\hat{ e })= e ^{*}\) (or \(\hat{e}=0\bmod e^{*}\)) \(\mathcal {B}\) simply aborts and restarts. Since |e ∗|2=l e , it holds that gcd(e ∗,p′q′)<e ∗. Write \(t _{0}\in\mathbb{Z}_{3(n-1)/4}\) as t 0=t 0,1+p′q′t 0,2 where t 0,2∈[0;2] and t 0,1∈[0,p′q′−1] and observe that \(\mathcal {A}\)’s view is independent from t 0,2. Let \(T=\hat{ e }-p'q' t _{0,2}\). We now argue that there exists at most one \(\tilde{ t }_{0,2}\in[0;2]\) such that \(T+\tilde{ t }_{0,2}p'q'=0\bmod e ^{*}\). This is crucial because if \(\mathcal {A}\) produces forgeries with \(T+\tilde{ t }_{0,2}p'q'=0\bmod e ^{*}\) for all \(\tilde { t }_{0,2}\in[0;2]\) it always holds that \(\gcd ( e ^{*},\hat{ e })= e ^{*}\) and \(\mathcal {B}\) cannot extract a solution the SRSA challenge (using the techniques described below).

Assume there exists at least one such \(\tilde{ t }_{0,2}\). Then, we have that \(T+\tilde{ t }_{0,2}p'q'=0\bmod e ^{*}\). Let us analyze the remaining possibilities \(\tilde{ t }_{0,2}\pm1 \bmod 3\) and \(\tilde{ t }_{0,2}\pm2 \bmod 3\) as \(A=T+\tilde{ t }_{0,2}p'q'\pm p'q'\bmod e ^{*}\) and \(B=T+\tilde{ t }_{0,2}p'q'\pm2p'q'\bmod e ^{*}\). Since gcd(e ∗,p′q′)<e ∗, we know that p′q′≠0mode ∗. As \(T+\tilde{ t }_{0,2}p'q'=0\bmod e ^{*}\), we must have that A≠0mode ∗. Also, because e ∗ is odd we know that 2p′q′≠0mode ∗ and thus B≠0mode ∗. So, because there can only exist at most one \(\tilde{ t }_{0,2}\in[0;2]\) with \(\gcd ( e ^{*},\hat{ e })= e ^{*}\) and since this \(\tilde{ t }_{0,2}\) is hidden from \(\mathcal {A}\)’s view, \(\mathcal {A}\)’s probability to output it is at most 1/3. This means that with probability at least 2/3, \(\mathcal {B}\) has that \(\gcd( e ^{*},\hat{ e })=d< e ^{*}\). From \(\mathcal {A}\)’s forgery (m ∗,(r ∗,s ∗,e ∗)), \(\mathcal {B}\) can now find a solution to the SRSA challenge by computing \(a,b\in\mathbb{Z}\) with \(\gcd ( e ^{*}/d,2\bar{ e }\hat { e }/d)=a e ^{*}/d+b2\bar { e }\hat{ e }/d=1\) and

$$\begin{aligned} \bigl(\hat{u}^{d/ e ^*}=\hat{u}^a\bigl( s ^* \bigr)^b, e ^*/d \bigr) . \end{aligned}$$

Finally, we have that

$$ \mbox{Pr}[S_{3}]\geq2\cdot \mbox{Pr}[S_{2}]/3 \tag{8} $$

and

$$ \mbox{Pr}[S_{3}]=\epsilon_{\text{SRSA}}. \tag{9} $$

Plugging in (5)–(9), we get that \(\epsilon\leq\frac{3}{2}\epsilon_{\text{SRSA}}+3\cdot2^{2-l_{n}/2}\).
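The extraction step used for the Type I forger is the extended Euclidean algorithm applied in the exponent. The following Python sketch shows it on toy parameters. The modulus, the concrete values of \(\bar{e}\), \(\hat{e}\), \(e^{*}\), and the helper names `egcd` and `extract_root` are illustrative assumptions, not values from the scheme. The sketch also assumes that \(e^{*}\) is coprime to the (unknown) group order, and it needs Python 3.8 or later for modular inverses via `pow`. The demo forgery is manufactured with knowledge of the group order only so that the example has an input; the extraction itself uses public values only.

```python
# Toy illustration only: all concrete numbers are assumptions, far too small for real use.

def egcd(x, y):
    """Return (g, a, b) with a*x + b*y == g == gcd(x, y) for non-negative x, y."""
    if y == 0:
        return x, 1, 0
    g, a, b = egcd(y, x % y)
    return g, b, a - (x // y) * b


def extract_root(u_hat, s, e_star, k, n):
    """Given s^e_star == u_hat^k (mod n) and d = gcd(e_star, k) < e_star,
    return (y, e_star // d) with y^(e_star // d) == u_hat (mod n).
    Assumes e_star is coprime to the order of the group generated by u_hat."""
    d, _, _ = egcd(e_star, k)
    if d == e_star:
        raise ValueError("gcd(e*, k) = e*: no root can be extracted, abort")
    _, a, b = egcd(e_star // d, k // d)      # a*(e*/d) + b*(k/d) == 1
    # Negative a or b are handled by modular inversion (Python 3.8+).
    y = pow(u_hat, a, n) * pow(s, b, n) % n
    return y, e_star // d


n = 23 * 47                  # toy modulus with p'q' = 11 * 23
group_order = 11 * 23        # used ONLY to manufacture the demo forgery
u_hat = 4                    # a quadratic residue mod n
e_bar, e_hat, e_star = 7, 9, 15
k = 2 * e_bar * e_hat        # the exponent 2 * e_bar * e_hat from the text

# Demo forgery s with s^e_star == u_hat^k (mod n).
s = pow(u_hat, k * pow(e_star, -1, group_order) % group_order, n)
assert pow(s, e_star, n) == pow(u_hat, k, n)

y, root_exp = extract_root(u_hat, s, e_star, k, n)
assert root_exp > 1 and pow(y, root_exp, n) == u_hat
```

Here \(d=\gcd(15,126)=3\), so the sketch outputs a non-trivial 5th root of \(\hat{u}\), which is what a solution to the SRSA challenge requires.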

Type II Forger

(\(e^{*}=e_{i}\) and \(r^{*}\neq r_{i}\))

Now suppose \(\mathcal {B}\) expects \(\mathcal {A}\) to be a Type II Forger. We only present the differences to the previous proof.

- Game\(_{1}\):

First, \(\mathcal{B}\) randomly chooses \(q\) distinct \(l_{e}\)-bit primes \(e_{1},\ldots,e_{q}\) and \(q\) random elements \(r_{1},\ldots,r_{q}\in\mathcal{R}_{\mathsf{CMB}}\). Additionally, it chooses three random elements \(t_{0},t'_{0},t''_{0}\) from \(\mathbb{Z}_{(n-1)/4}\). Next, \(\mathcal{B}\) computes \(u=\hat{u}^{2(t_{0}\bar{e}+\sum^{q}_{i=1}r_{i}\bar{e}_{i})}\), \(v=\hat{u}^{2(t'_{0}\bar{e}-\sum^{q}_{i=1}\bar{e}_{i})}\), and \(w=\hat{u}^{2t''_{0}\bar{e}}\) using the SRSA challenge. Again,

$$ \mbox{Pr}[S_{1}]\geq \mbox{Pr}[S_{0}]-3\cdot 2^{-(l_n/2-2)} . \tag{10} $$

- Game\(_{2}\):

Now \(\mathcal{B}\) simulates the signing oracle \(\mathcal{O}(\mathit{SK},\cdot)\). On each signature query \(m_{j}\) with \(j\in\{1,\ldots,q\}\), \(\mathcal{B}\) responds with \(\sigma_{j}=(r_{j},s_{j},e_{j})\) using the pre-computed \(r_{j}\) and \(e_{j}\) and computing \(s_{j}\) as

$$\begin{aligned} s _j =& \bigl( u v ^{ r _j} w ^{z_j} \bigr)^{\frac {1}{ e _j}}= \hat{u}^{2 (( t _0+ t '_0 r _j+ t ''_0z_j)\bar { e }_j +\sum^{q}_{i=1} r _i\bar{ e }_{i,j}- r _j\sum^{q}_{i=1}\bar{ e }_{i,j} )} \\ =& \hat{u}^{2 (( t _0+ t '_0 r _j+ t ''_0z_{j})\bar { e }_j+\sum^{q}_{i=1,i\neq j}( r _i- r _j)\bar{ e }_{i,j} )}. \end{aligned}$$

Since we have chosen the \(e_{i}\) to be distinct primes, we have by a union bound that

$$ \mbox{Pr}[S_{2}]\geq \mbox{Pr}[S_{1}]- \frac{q^2}{|E|} . \tag{11} $$

- Game\(_{3}\):

Now consider \(\mathcal{A}\)’s forgery \((m^{*},(r^{*},s^{*},e^{*}))\). By assumption there is an \(i\in\{1,\ldots,q\}\) with \(e^{*}=e_{i}\) and \(r_{i}\neq r^{*}\). Then we have that

$$\bigl(\bigl( s ^*\bigr)\cdot\hat{u}^{-2 (( t _0+ t '_0 r ^*+ t ''_0z^*)\bar{ e }_i+\sum^{q}_{j=1, j\neq i}( r _j- r ^*)\bar{ e }_{i,j} )} \bigr)^{ e _i}=\hat{u}^{2( r _i- r ^*)\bar { e }_i}. $$

Since \(|r_{i}-r^{*}|<e_{i}\) and \(e_{i}\) is an odd prime, we have that \(\gcd(2(r_{i}-r^{*}),e_{i})=1\) and as before we can compute \(\hat{u}^{\frac{1}{e_{i}}}\), which is a solution to the SRSA challenge.

$$ \mbox{Pr}[S_{3}]=\epsilon_{\text{SRSA}}. \tag{12} $$

Summing up (10)–(12), we get that \(\epsilon\leq\epsilon_{\text{SRSA}}+{q^{2}}/{|E|}+3\cdot2^{2-l_{n}/2}\).
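The reason the Type II simulator can answer signature queries without the factorization of \(n\) is that every \(e_{j}\)-th root it needs is an explicit power of \(\hat{u}\). The Python sketch below checks this identity on toy values. All concrete numbers and the helper names `e_bar`, `z`, `sign`, and `verify` are illustrative assumptions, and `z` is a hypothetical placeholder rather than one of the paper's combining functions. The sketch further assumes that \(\bar{e}\), \(\bar{e}_{i}\), and \(\bar{e}_{i,j}\) denote the product of all \(e_{k}\) with the indicated indices left out, by analogy with \(\bar{t}\), \(\bar{t}_{i}\), \(\bar{t}_{i,j}\) in Sect. 6. It needs Python 3.8 or later.

```python
from math import prod

# Toy illustration only: all concrete numbers are assumptions.
n = 23 * 47                    # toy modulus; its factors are never used below
u_hat = 4                      # toy SRSA challenge element
e = [3, 5, 7]                  # distinct primes e_1..e_q chosen up front
r = [2, 0, 1]                  # pre-chosen r_1..r_q
t0, t0p, t0pp = 5, 6, 4        # t_0, t'_0, t''_0
msgs = [10, 11, 12]            # the adversary's queries m_1..m_q
q = len(e)


def e_bar(*skip):
    """Product of all e_k whose index is not listed in `skip`."""
    return prod(e_k for k, e_k in enumerate(e) if k not in skip)


def z(r_j, m_j):
    """Hypothetical stand-in for the combining function z(r, m)."""
    return r_j + m_j


# Public key values, built from the challenge as in Game 1.
u = pow(u_hat, 2 * (t0 * e_bar() + sum(r[i] * e_bar(i) for i in range(q))), n)
v = pow(u_hat, 2 * (t0p * e_bar() - sum(e_bar(i) for i in range(q))), n)
w = pow(u_hat, 2 * t0pp * e_bar(), n)


def sign(j):
    """Simulated signature on msgs[j]: no e_j-th root is ever extracted."""
    z_j = z(r[j], msgs[j])
    exponent = 2 * ((t0 + t0p * r[j] + t0pp * z_j) * e_bar(j)
                    + sum((r[i] - r[j]) * e_bar(i, j) for i in range(q) if i != j))
    return r[j], pow(u_hat, exponent, n), e[j]


def verify(m, sig):
    """Check s^e == u * v^r * w^z(r, m) (mod n)."""
    r_j, s_j, e_j = sig
    return pow(s_j, e_j, n) == u * pow(v, r_j, n) * pow(w, z(r_j, m), n) % n


assert all(verify(msgs[j], sign(j)) for j in range(q))
```

The term with \(i=j\) drops out of the sum because its coefficient \(r_{j}-r_{j}\) is zero, which is exactly why \(\bar{e}_{j,j}\) never has to be computed.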

Type III Forger

(\(e^{*}=e_{i}\) and \(r^{*}=r_{i}\))

In case \(\mathcal {B}\) expects \(\mathcal {A}\) to be a Type III Forger, there are only minor differences as compared to the previous proof.

- Game\(_{1}\):

First, \(\mathcal{B}\) randomly chooses \(q\) \(l_{e}\)-bit primes \(e_{1},\ldots,e_{q}\) and \(q\) random \(z_{1},\ldots,z_{q}\in\mathcal{Z}_{\mathsf{CMB}}\). Then, \(\mathcal{B}\) draws three random elements \(t_{0},t'_{0},t''_{0}\) from \(\mathbb{Z}_{(n-1)/4}\). Next, \(\mathcal{B}\) computes \(u\), \(v\), and \(w\) as \(u=\hat{u}^{2(t_{0}\bar{e}+\sum^{q}_{i=1}z_{i}\bar{e}_{i})}\), \(v=\hat{u}^{2t'_{0}\bar{e}}\), and \(w=\hat{u}^{2(t''_{0}\bar{e}-\sum^{q}_{i=1}\bar{e}_{i})}\).

$$ \mbox{Pr}[S_{1}]\geq \mbox{Pr}[S_{0}]-3\cdot 2^{-(l_n/2-2)} . \tag{13} $$

- Game\(_{2}\):

This game is equal to the previous game except that we require the \(e_{i}\) to be all distinct. We have that

$$ \mbox{Pr}[S_{2}]\geq \mbox{Pr}[S_{1}]-\frac{q^2}{|E|} . \tag{14} $$

- Game\(_{3}\):

Now \(\mathcal{B}\) simulates the signing oracle. For each query \(m_{j}\) with \(j\in\{1,\ldots,q\}\), \(\mathcal{B}\) computes \(r_{j}=z^{-1}(z_{j},m_{j})\). If \(r_{j}\notin\mathcal{R}\), \(\mathcal{B}\) aborts. Otherwise \(\mathcal{B}\) outputs the signature \(\sigma_{j}=(r_{j},s_{j},e_{j})\) with \(s_{j}\) being computed as

$$\begin{aligned} s _j =& \bigl( u v ^{ r _j} w ^{z_j} \bigr)^{\frac {1}{ e _j}} = \hat{u}^{2 (( t _0+ t '_0 r _j+ t ''_0z_j)\bar{ e }_j +\sum^{q}_{i=1}z_i\bar{ e }_{i,j}-z_j\sum ^{q}_{i=1}\bar{ e }_{i,j} )} \\ =& \hat{u}^{2 (( t _0+ t '_0 r _j+ t ''_0 z_{j})\bar { e }_j+\sum^{q}_{i=1,i\neq j}(z_i-z_j)\bar { e }_{i,j} )}. \end{aligned}$$

To bound the probability that \(\mathcal{B}\) aborts, observe that the properties of the combining function guarantee that the \(r_{j}\) are indistinguishable from uniformly random values over \(\mathcal{R}_{\mathsf{CMB}}\), such that

$$ \mbox{Pr}[S_{3}]\geq \mbox{Pr}[S_{2}]-q\delta_{\mathsf{CMB}}. \tag{15} $$

When using \(\mathsf{CMB}_{1}\), \(\mathsf{CMB}_{2}\), or \(\mathsf{CMB}_{3}\), the \(r_{j}\) are even statistically close to uniform.

- Game\(_{4}\):

This game is like the previous one except that \(\mathcal{B}\) aborts whenever there is a collision such that \(z_{i}=z(r_{i},m_{i})=z(r_{i},m^{*})=z^{*}\) for some \(r_{i}\). Observe that we must have \(m^{*}\neq m_{i}\), otherwise \(\mathcal{A}\) just replayed the \(i\)-th message/signature pair. For all \(t_{\mathsf{CMB}}\)-time attackers such a collision happens with probability at most \(\epsilon_{\mathsf{CMB}}\). Therefore,

$$ \mbox{Pr}[S_{4}]\geq \mbox{Pr}[S_{3}]-\epsilon_{\mathsf{CMB}}. \tag{16} $$

Consider \(\mathcal{A}\)’s forgery \((m^{*},(r^{*},s^{*},e^{*}))\). By assumption, there is one index \(i\in\{1,\ldots,q\}\) with \(e^{*}=e_{i}\) and \(r^{*}=r_{i}\). For this index it holds that

$$\begin{aligned} \bigl(\bigl( s ^*\bigr)\cdot\hat{u}^{-2 (( t _0+ t '_0 r ^*+ t ''_0z^*)\bar{ e }_i+\sum^{q}_{j=1, j\neq i}(z_j-z^*)\bar{ e }_{i,j} )} \bigr)^{ e _i} =&\hat {u}^{2(z_i-z^*)\bar{ e }_i}. \end{aligned}$$

Since we have excluded collisions, it follows that \(z_{i}\neq z^{*}\). As \(|z_{i}-z^{*}|\leq e_{i}\), \(\mathcal{B}\) can compute \(\hat{u}^{\frac{1}{e_{i}}}\) as a solution to the SRSA challenge. Finally,

$$ \mbox{Pr}[S_{4}]=\epsilon_{\text{SRSA}}. \tag{17} $$

Summing up (13)–(17), we get that \(\epsilon\leq\epsilon_{\text{SRSA}}+\epsilon_{\mathsf{CMB}}+q\delta_{\mathsf{CMB}}+q^{2}/|E|+3\cdot2^{2-l_{n}/2}\). Applying Lemma 6 to bound \(|E|\) completes the proof. □

Remark 1

As shown in Sect. 3.1 one can, using our terminology, view the Zhu signature scheme as a combining scheme that relies on \(\mathsf{CMB}_{3}\) and Lemma 2. Note that the application of a hash function does not influence \(\delta_{\mathsf{CMB}}\); solely \(\epsilon_{\mathsf{CMB}}\) is affected. Therefore, for the combining function obtained as the sequential application of a hash function and \(\mathsf{CMB}_{3}\) to the input message, \(\delta_{\mathsf{CMB}}\) is entirely determined by \(\mathsf{CMB}_{3}\). So, let us in the following concentrate solely on \(\mathsf{CMB}_{3}\). In the original, flawed proof in [23, 24], Zhu uses parameter lengths with \(l_{r}=l_{m}\). As a consequence we only get that \(\delta_{\mathsf{CMB}}\leq1\). This is problematic in the security proof as for the probability bound of the signature scheme we only know that \(\epsilon\leq\epsilon_{ \text {SRSA} } + 3\epsilon_{\mathsf {CMB}} + 3q\delta_{\mathsf {CMB}} +\frac{3q^{2}}{|E|}+9\cdot 2^{2-l_{n}/2}\). We thus cannot argue that \(\epsilon\) is negligible and the signature scheme secure. Joye in [14] fixed this problem simply by using parameter sizes \(l_{r}\) and \(l_{m}\) with \(l_{r}>l_{m}\) such that \(\delta_{\mathsf{CMB}}\leq2^{l_{m}-l_{r}}\) is negligible.
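To make the effect of the parameter choice concrete, consider the following worked arithmetic. The lengths \(l_{m}=160\), \(l_{r}=240\) and the query bound \(q=2^{30}\) are purely hypothetical values chosen for illustration; they are not parameters recommended in [14] or in this paper.

$$\begin{aligned} l_{r}=l_{m}: \quad & \delta_{\mathsf{CMB}}\leq 2^{l_{m}-l_{r}}=2^{0}=1, \quad\text{so the term } 3q\delta_{\mathsf{CMB}} \text{ yields no useful bound;} \\ l_{m}=160,\ l_{r}=240: \quad & \delta_{\mathsf{CMB}}\leq 2^{160-240}=2^{-80}, \quad 3q\delta_{\mathsf{CMB}}\leq 3\cdot2^{30}\cdot2^{-80}=3\cdot2^{-50}. \end{aligned}$$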

6 Security Analysis of \({\mathcal {S}_{ \text {CMB} , \text {SDH} }}\)

In the following we show how to transfer the security proof of the combining class to the SDH setting.

Lemma 8

Assume we work in the SDH setting such that the \((t_{\text{SDH}},\epsilon_{\text{SDH}})\)-SDH assumption holds and \(\mathsf{CMB}\) is a \((t_{\mathsf{CMB}},\epsilon_{\mathsf{CMB}},\delta_{\mathsf{CMB}})\)-combining function. Then, the combining signature class as presented in Sect. 3.1 is \((q,t,\epsilon)\)-secure against adaptive chosen message attacks provided that

In particular, this means that the SDH-based Camenisch–Lysyanskaya scheme is tightly secure against existential forgeries under adaptive chosen message attacks.

Proof

The proof of Lemma 8 is the second step in the proof of Theorem 1. We consider three types of forgers that after \(q\) queries \(m_{1},\ldots,m_{q}\) and corresponding responses \((r_{1},s_{1},t_{1}),\ldots,(r_{q},s_{q},t_{q})\) partition the set of all possible forgeries \((m^{*},(r^{*},s^{*},t^{*}))\). The simulator \(\mathcal{B}\) is given \(( g_{1},g^{x}_{1},g^{(x^{2})}_{1},\ldots,g^{ (x^{q}) }_{1},g_{2},g^{x}_{2} )\).

Type I Forger

(\(t^{*}\notin\{t_{1},\ldots,t_{q}\}\))

Suppose \(\mathcal {B}\) guesses that \(\mathcal {A}\) is a Type I Forger.

- Game\(_{0}\):

This is the original attack game. By assumption, \(\mathcal{A}\) \((q,t,\epsilon)\)-breaks \(\mathsf{SIG}_{\mathsf{CMB},\text{SDH}}\) when interacting with the signing oracle \(\mathcal{O}(\mathit{SK},\cdot)\). We have that

$$ \mbox{Pr}[S_{0}]=\epsilon. \tag{18} $$

- Game\(_{1}\):

Now, \(\mathcal{B}\) constructs the values \(a,b,d\) using the SDH challenge instead of choosing them randomly. First, \(\mathcal{B}\) chooses \(q\) random values \(t_{1},\ldots,t_{q}\stackrel{\$}{\leftarrow}\mathbb{Z}_{p}\) and three random elements \(t_{0},t'_{0},t''_{0}\stackrel{\$}{\leftarrow}\mathbb{Z}_{p}\). In the following, let \(\bar{t}:=\prod^{q}_{k=1}(t_{k}+x)\), \(\bar{t}_{i}:=\prod^{q}_{k=1,k\neq i}{(t_{k}+x)}\) and \(\bar{t}_{i,j}:=\prod^{q}_{k=1,k\neq i, k\neq j}{(t_{k}+x)}\). The simulator computes \(a=g_{1}^{t_{0}\bar{t}},\ b=g_{1}^{t'_{0}\bar{t}},\ d=g_{1}^{t''_{0}\bar{t}}\).

Since the \(t _{0}, t '_{0}, t ''_{0}\) are chosen uniformly at random from \(\mathbb {Z}_{p}\), we have

$$ \mbox{Pr}[S_{1}]= \mbox{Pr}[S_{0}] . \tag{19} $$

- Game\(_{2}\):

Now, \(\mathcal{B}\) simulates \(\mathcal{O}(\mathit{SK},\cdot)\) by answering \(\mathcal{A}\)’s signature queries. As before, let \(z_{j}=z(r_{j},m_{j})\) and \(z^{*}=z(r^{*},m^{*})\). The simulator \(\mathcal{B}\) sets \(\mathit{PK}=g_{2}^{x}\) and for all \(j\in\{1,\ldots,q\}\) it chooses a random \(r_{j}\in\mathcal{R}_{\mathsf{CMB}}\) and outputs \(\sigma_{j}=(r_{j},s_{j},t_{j})\) with \(s_{j}=( a b^{r_{j}} d^{z_{j}} )^{{1}/{(x+t_{j})}}=g_{1}^{(t_{0}+t'_{0}r_{j}+t''_{0}z_{j})\bar{t}_{j}}\).

$$ \mbox{Pr}[S_{2}]=\mbox{Pr}[S_{1}] . \tag{20} $$

- Game\(_{3}\):

Now, consider \(\mathcal{A}\)’s forgery \((m^{*},(r^{*},s^{*},t^{*}))\). We have that \(t^{*}\notin\{t_{1},\ldots,t_{q}\}\) and

$$\begin{aligned} e\bigl(s^*,\mathit{PK}g_2^{t^*}\bigr)=e\bigl(ab^{r^*}d^{z(r^*,m^*)},g_2 \bigr) \end{aligned}$$

which is equivalent to

$$\begin{aligned} s^* =& \bigl( ab^{r^*}d^{z(r^*,m^*)} \bigr)^{1/(x+t^*)} \\ =& g_1^{\bar{t}(t_0+t'_0r^*+t''_0z(r^*,m^*))/(x+t^*)} . \end{aligned}$$

\(\mathcal{B}\) can now find a solution to the SDH challenge by computing \(D\in\mathbb{Z}_{p}\) with \(D\neq 0\) and a polynomial \(f'(x)\in\mathbb{Z}_{p}[x]\) of degree \(q-1\) such that \(\bar{t}=f'(x)(x+t^{*})+D\). We get that

$$\begin{aligned} g_1^{1/(t^*+x)}= \bigl(\bigl(s^*\bigr)^{1/(t_0+t'_0r^*+t''_0z(r^*,m^*))}g_1^{-f'(x)} \bigr)^{1/D} . \end{aligned}$$

Finally, we have that

$$ \mbox{Pr}[S_{3}]=\mbox{Pr}[S_{2}]=\epsilon_{\text{SDH}} . \tag{21} $$
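The Type I extraction in the SDH setting combines polynomial long division with exponentiation of the challenge elements \(g_{1}^{(x^{i})}\). The Python sketch below walks through it in a toy group. It makes several assumptions for illustration: the pairing groups are replaced by the order-\(p\) subgroup of \(\mathbb{Z}_{P}^{*}\) with \(P=2p+1\), all concrete numbers are toy values, and the helper names `mul_linear`, `div_linear`, and `exp_poly` are ours. The secret \(x\) is used only to build the challenge and a demo forgery; the extraction uses the challenge powers alone. Python 3.8 or later is needed for modular inverses via `pow`.

```python
# Toy illustration only: all concrete numbers are assumptions.
P, p, g1 = 2039, 1019, 4       # g1 generates the order-p subgroup of Z_P^*


def mul_linear(f, t):
    """Multiply polynomial f (coefficients low-to-high, mod p) by (X + t)."""
    out = [0] * (len(f) + 1)
    for i, c in enumerate(f):
        out[i] = (out[i] + c * t) % p
        out[i + 1] = (out[i + 1] + c) % p
    return out


def div_linear(f, t):
    """Long division of f by (X + t): return (quotient, remainder) mod p."""
    quot, carry = [0] * (len(f) - 1), 0
    for i in range(len(f) - 1, 0, -1):
        carry = (f[i] - carry * t) % p
        quot[i - 1] = carry
    return quot, (f[0] - carry * t) % p


def exp_poly(powers, f):
    """Compute g1^{f(x)} from the challenge powers g1^{x^i}, without x."""
    out = 1
    for g_xi, c in zip(powers, f):
        out = out * pow(g_xi, c, P) % P
    return out


x = 123                                      # secret, NOT used for extraction
ts = [5, 17, 101]                            # t_1..t_q
q = len(ts)
powers = [pow(g1, pow(x, i, p), P) for i in range(q + 1)]   # SDH challenge

t_bar = [1]                                  # coefficients of prod_k (X + t_k)
for t_k in ts:
    t_bar = mul_linear(t_bar, t_k)

t0, t0p, t0pp = 7, 11, 13
r_star, z_star, t_star = 3, 9, 55            # forgery values, t_star not in ts
T = (t0 + t0p * r_star + t0pp * z_star) % p  # must be non-zero mod p

# Demo forgery s* = (a b^{r*} d^{z*})^{1/(x + t*)}, built here with x.
t_bar_x = 1
for t_k in ts:
    t_bar_x = t_bar_x * (t_k + x) % p
s_star = pow(g1, t_bar_x * T * pow(x + t_star, -1, p) % p, P)

# Extraction: t_bar = f'(X) * (X + t*) + D with D != 0.
f_quot, D = div_linear(t_bar, t_star)
assert D != 0
inner = pow(s_star, pow(T, -1, p), P) * pow(exp_poly(powers, f_quot), -1, P) % P
solution = pow(inner, pow(D, -1, p), P)
assert solution == pow(g1, pow(x + t_star, -1, p), P)
```

The remainder \(D\) equals \(\prod_{k}(t_{k}-t^{*})\bmod p\), so it is non-zero exactly when \(t^{*}\) differs from every \(t_{k}\), which is the Type I condition.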

Type II Forger

(\(t^{*}=t_{i}\) and \(r^{*}\neq r_{i}\))

Now suppose \(\mathcal {B}\) expects \(\mathcal {A}\) to be a Type II Forger. We only present the differences to the previous proof.

- Game\(_{1}\):

First, \(\mathcal{B}\) randomly chooses \(q\) distinct elements \(t_{1},\ldots,t_{q}\in\mathbb{Z}_{p}\) and \(q\) random elements \(r_{1},\ldots,r_{q}\in\mathcal{R}_{\mathsf{CMB}}\). Additionally, it chooses three random elements \(t_{0},t'_{0},t''_{0}\in\mathbb{Z}_{p}\). Next, \(\mathcal{B}\) computes \(a=g_{1}^{(t_{0}\bar{t}+\sum^{q}_{i=1}r_{i}\bar{t}_{i})}\), \(b=g_{1}^{(t'_{0}\bar{t}-\sum^{q}_{i=1}\bar{t}_{i})}\), and \(d=g_{1}^{t''_{0}\bar{t}}\). Again,

$$ \mbox{Pr}[S_{1}]=\mbox{Pr}[S_{0}] . \tag{22} $$

- Game\(_{2}\):

Now \(\mathcal{B}\) simulates the signing oracle \(\mathcal{O}(\mathit{SK},\cdot)\). On each signature query \(m_{j}\) with \(j\in\{1,\ldots,q\}\), \(\mathcal{B}\) responds with \(\sigma_{j}=(r_{j},s_{j},t_{j})\) using the pre-computed \(r_{j}\) and \(t_{j}\) and computing \(s_{j}\) as

$$ \begin{array}{rcl} s_j= \bigl( a b^{r_j} d^{z_j} \bigr)^{1/(x+t_j)} &=& g_1^{( t _0+ t '_0r_j+ t ''_0z_{j})\bar{t}_j+\sum^{q}_{i=1,i\neq j}(r_i-r_j)\bar{t}_{i,j}}, \\ \mbox{Pr}[S_{2}] &\geq & \mbox{Pr}[S_{1}]- {q^2}/{p} . \end{array} \tag{23} $$

- Game\(_{3}\):

Now consider \(\mathcal{A}\)’s forgery \((m^{*},(r^{*},s^{*},t^{*}))\). By assumption there is a \(k\in\{1,\ldots,q\}\) with \(t^{*}=t_{k}\) and \(r_{k}\neq r^{*}\). Then we have that

$$\begin{aligned} s^* =& \bigl( a b^{r^*} d^{ z^{*}} \bigr)^{{1}/{(x+t^*)}}= \bigl(g_1^{( t _0+ t '_0r^*+ t ''_0z^*)\bar{t}+\sum^{q}_{i=1}(r_i-r^*)\bar{t}_{i}} \bigr)^{1/{(x+t^*)}} \\ =& \bigl(g_1^{( t _0+ t '_0r^*+ t ''_0z^*)\bar{t}+\sum^{q}_{i=1,i\neq k}(r_i-r^*)\bar{t}_{i}+(r_k-r^*)\bar {t}_k} \bigr)^{1/{(x+t_k)}}. \end{aligned} \tag{24} $$

Again we can compute \(D\neq 0\) and \(f'(x)\) with \(\bar{t}_{k}=f'(x)(x+t_{k})+D\) using long division. Therefore,

$$\begin{aligned} \bigl(s^*g_1^{-(( t _0+ t '_0r^*+ t ''_0z^*)\bar{t}_k+\sum^{q}_{i=1,i\neq k}(r_i-r^*)\bar{t}_{i,k}+(r_k-r^*)f'(x))} \bigr)^{1/(D(r_k-r^*))} =&g_1^{1/(x+t_k)} \end{aligned} \tag{25} $$

constitutes a solution to the SDH challenge, and

$$ \mbox{Pr}[S_{3}]= \mbox{Pr}[S_{2}]=\epsilon_{\text{SDH}} . \tag{26} $$
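The step from (24) to (25) can be unpacked as follows. This is only a restatement that uses the definitions of \(\bar{t}\), \(\bar{t}_{i}\), \(\bar{t}_{i,k}\) from the Type I proof and the long-division identity \(\bar{t}_{k}=f'(x)(x+t_{k})+D\); the intermediate grouping is ours. Dividing each term of the exponent in (24) by \(x+t_{k}\) gives

$$\begin{aligned} \frac{\bar{t}}{x+t_{k}}=\bar{t}_{k}, \qquad \frac{\bar{t}_{i}}{x+t_{k}}=\bar{t}_{i,k}\ \ (i\neq k), \qquad \frac{\bar{t}_{k}}{x+t_{k}}=f'(x)+\frac{D}{x+t_{k}}, \end{aligned}$$

so that

$$\begin{aligned} s^* =& g_1^{( t _0+ t '_0r^*+ t ''_0z^*)\bar{t}_k+\sum^{q}_{i=1,i\neq k}(r_i-r^*)\bar{t}_{i,k}+(r_k-r^*)f'(x)}\cdot g_1^{D(r_k-r^*)/(x+t_k)} . \end{aligned}$$

The exponent of the first factor is a polynomial in \(x\) of degree less than \(q\), so \(\mathcal{B}\) can compute this factor from the challenge elements \(g_{1}^{(x^{i})}\). Since \(D\neq 0\) and \(r_{k}\neq r^{*}\), the remaining exponent \(D(r_{k}-r^{*})\) is assumed to be invertible modulo \(p\) (this requires \(r_{k}\neq r^{*}\bmod p\)), and removing it yields \(g_{1}^{1/(x+t_{k})}\).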

Type III Forger

(\(t^{*}=t_{i}\) and \(r^{*}=r_{i}\))

In case \(\mathcal {B}\) expects \(\mathcal {A}\) to be a Type III Forger, there are only minor differences as compared to the previous proof.

- Game\(_{1}\):