User Details

- User Since: Jan 11 2017, 4:41 PM (413 w, 6 d)
- Availability: Available
- IRC Nick: chicocvenancio
- LDAP User: Chico Venancio
- MediaWiki User: Chicocvenancio

Jul 18 2024

Just to document where we're at with this. Getting labpawspublic to work with the API changes was pretty straightforward and only really required https://github.com/toolforge/labpawspublic/commit/0dda04b09f5a0eda8a9a7054e5dc0e47d7ef9b27. Unfortunately, I couldn't figure out why it doesn't build from source on our singleuser image with yarn 3.5 (the default for jupyterlab). I'm not quite sure how to proceed with debugging this; my current plan is to avoid the rebuild and go for a prebuilt extension.

Jul 10 2024

@Isaac mentioned paws public links are not working in irc/telegram; this should get the links back.

Jul 3 2024

May 21 2024

May 20 2024

There are a few current problems. I have not had the time to look into the current issue, but "Worker exited prematurely" sounds like the task was picked up but something killed the celery process (probably OOM).

May 17 2024

Clearly V2C is not ready for use, except for beta-testers.

Feb 23 2024

Jan 23 2024

@Ita140188 I'd like to strongly push back on your comment. It is meaningless to say hundreds of people work at WMF, implying that solutions should therefore be quick, if we don't analyse the magnitude of the work needed to keep the websites of the Wikimedia projects working.

Jan 2 2024

Dec 30 2023

FYI google has started to block downloads from video2commons again since around 2023-12-29 22:30.

Dec 19 2023

Dec 18 2023

Dec 11 2023

Jul 7 2023

Running your code, I realized that the for loop only runs once, so even if it is leaking connections, that is probably not your main issue. But since max_connections is per user, the connections might be coming from another notebook/process.
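One way to rule out leaks from a loop like this is to close each connection explicitly before the next one is opened. The sketch below is only an illustration under stated assumptions: `sqlite3` stands in for pymysql and the replicas, `connect` is a hypothetical helper, and plain tuples replace the DataFrame so the example runs with the standard library alone.

```python
import sqlite3
from contextlib import closing


def connect(wiki):
    # Hypothetical stand-in for opening a connection to one wiki replica.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE page (title TEXT)")
    conn.execute("INSERT INTO page VALUES (?)", (f"Main_Page_{wiki}",))
    return conn


def query_wikis(query, wikis):
    combined = []
    for wiki in wikis:
        # closing() calls conn.close() when the block exits, even on error,
        # so each iteration releases its connection before the next opens.
        with closing(connect(wiki)) as conn:
            rows = conn.execute(query).fetchall()
        combined.extend((wiki, *row) for row in rows)
    return combined


result = query_wikis("SELECT title FROM page", ["enwiki", "ptwiki"])
```

With a real driver the same `with closing(...)` pattern applies as long as the connection object has a `close()` method.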

This is less a PAWS issue than an issue with the connection limit on the replicas. I'm not quite sure what pymysql and Pandas are doing there, but it seems connections are leaking beyond each iteration of the loop in:

    def query_wikis(query, df):
        wikis = df.database_code.unique().tolist()
        combined_result = pd.DataFrame()
        for wiki in wikis:
            result = pd_query(query, wiki)
            result['wiki_db'] = wiki
            combined_result = pd.concat([combined_result, result])
        return combined_result

Jun 13 2023

This is a change in behavior in jupyterlab 4 that impacts labpawspublic. The property we are using now has RTC: prepended to it. I cannot figure out where to get the proper path; in fact, I cannot find the documentation for jupyterlab's 4.0 API... (3.0 docs).

A quick solution is to remove the RTC: there.
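The actual fix belongs in labpawspublic's JavaScript, so the Python sketch below only illustrates the string handling. The function name and the example path are hypothetical.

```python
RTC_PREFIX = "RTC:"


def strip_rtc_prefix(path):
    """Return path without a leading 'RTC:' marker, if one is present."""
    if path.startswith(RTC_PREFIX):
        return path[len(RTC_PREFIX):]
    return path


cleaned = strip_rtc_prefix("RTC:notebooks/example.ipynb")
unchanged = strip_rtc_prefix("notebooks/example.ipynb")
```

Checking for the prefix first keeps paths from older jupyterlab versions untouched.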

Jun 9 2023

LGTM, just apply the same changes to the other tools.

Jun 8 2023

Jun 7 2023

Hopefully resolved with current updates.

Should be fixed now.

Local uploads should be working again.

May 24 2023

May 22 2023

@taavi I thought that as well, but wikitech docs link to this template, and there is no need for a project beyond the db.

May 21 2023

May 19 2023

Mar 23 2023

Jan 25 2023

Sorry I missed the follow-up questions, but I think you have solved the mystery. Since I have ipblockexempt it didn't stop me from editing, but it's likely my VPN provider was blocked.

Jan 6 2023

Linkback from https://lists.wikimedia.org/hyperkitty/list/wikimedia-l@lists.wikimedia.org/message/DVA7I5TUHSNJAAYKOBD6O77FJT35E2MK/ where I cite this as an example of how WMF has delayed UCoC until the enforcement guidelines are approved.

Jan 4 2023

Some sections of this, mostly the host path parts, will become irrelevant with T308873; for other parts, it remains unclear to me why we need them. Aside from needing a psp by default, are there known benefits of our current setup?

Jan 3 2023

(my best guess is the authenticator is storing the client-id in the auth state and that has now changed in the upgrade)

Could you try one more time? I've deleted your user from the db, it should be recreated on login for you.

    MySQL [s52771__paws]> select identifier from oauth_clients where oauth_clients.allowed_scopes is NULL;
    +-------------------------------------------------------+
    | identifier                                            |
    +-------------------------------------------------------+
    | jupyterhub-user-ISO_3166_Bot                          |
    | jupyterhub-user-Xover                                 |
    | jupyterhub-user-GZWDer                                |
    | jupyterhub-user-Qwerfjkl                              |
    | jupyterhub-user-Lopullinen                            |
    | jupyterhub-user-Ahenches                              |
    | jupyterhub-user-Research_Bot                          |
    | jupyterhub-user-PonoRoboT                             |
    | jupyterhub-user-BlackShadowG                          |
    | jupyterhub-user-Oshhhh                                |
    | jupyterhub-user-ShalomOrobot                          |
    | jupyterhub-user-W2Bot                                 |
    | jupyterhub-user-Infrastruktur                         |
    | jupyterhub-user-Syunsyunminmin                        |
    | jupyterhub-user-%D9%84%D9%88%D9%82%D8%A7              |
    | jupyterhub-user-Hannolans                             |
    | jupyterhub-user-AnnikaHendriksen                      |
    | jupyterhub-user-EmausBot                              |
    | jupyterhub-user-Metalfingers2                         |
    | jupyterhub-user-TholmeBot                             |
    | jupyterhub-user-Hamel.husain                          |
    | jupyterhub-user-Ainali                                |
    | jupyterhub-user-Jklamo                                |
    | jupyterhub-user-Ladon                                 |
    | jupyterhub-user-%D8%A3%D8%A8%D9%88_%D8%AC%D8%A7%D8%AF |
    | jupyterhub-user-DPLA_bot                              |
    | jupyterhub-user-Andrawaag                             |
    | jupyterhub-user-Davidjamesadkins                      |
    | jupyterhub-user-Hesham_Moharam                        |
    | jupyterhub-user-Kekavigi                              |
    | jupyterhub-user-Anaka_Satamiya                        |
    | jupyterhub-user-AdoroniBot                            |
    | jupyterhub-user-MarioGom                              |
    | jupyterhub-user-Jahl_de_Vautban                       |
    | jupyterhub-user-TomT0m                                |
    | jupyterhub-user-Pppery                                |
    | jupyterhub-user-Kolja21                               |
    +-------------------------------------------------------+
    37 rows in set (0.002 sec)

It's probably related to the user's server running at the time of the upgrade; let me try to manually clear the tokens in the db.

This seems to be the new networkpolicy blocking private ip addresses.

Didn't manage to delete the server, just logged out and back in.

Now I'm more curious: added me where? I'm not in the list of admins in values.yaml, though I have access to the admin tab (well, I did until today).

I still don't know how I had admin before, though. I always assumed it was pulling from cloud vps, but that seems unexpected?

https://jupyterhub.readthedocs.io/en/stable/rbac/roles.html# seems to be the new way of defining admins. I see the admin tab but nothing is there for me right now.

Uhh, it now works... Maybe there was a cached logged in SA for me?

That seems fine to me. This is RBAC internal to jupyterhub to do oauth dances between the various components. I'm reading code/docs trying to figure out what needs to change.

Seems the oauth tokens for the internal service accounts in jupyterhub don't have the right scopes; it's weird that this doesn't seem to be mentioned in the upgrade guide.

Why was a rollback necessary? Is trying to fix the new version an option?

Probably a migration was applied with the upgrade, and alembic sees it in the table but not in the migration code of the rolled-back version? Nuking the db is always an option; it will only disrupt currently running pods and log everyone out. There might be rollback code for the new migration, or the schema might even be compatible and need only a DELETE in the revision table; that would need to be figured out if keeping the db is desired.
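As a rough illustration of the revision mismatch described here: alembic records the current revision in an `alembic_version` table with a single `version_num` column. The sketch below reproduces that table in an in-memory SQLite database and resets it to a revision the older code knows about. The revision ids and helper names are hypothetical, and resetting the row is only safe if the schema really is compatible with the rolled-back code.

```python
import sqlite3

# Hypothetical revision ids shipped with the rolled-back version.
KNOWN_REVISIONS = {"aaa111", "bbb222"}


def stored_revision(conn):
    row = conn.execute("SELECT version_num FROM alembic_version").fetchone()
    return row[0] if row else None


def reset_revision(conn, revision):
    # Drop the revision the old code cannot resolve and record one it can.
    conn.execute("DELETE FROM alembic_version")
    conn.execute(
        "INSERT INTO alembic_version (version_num) VALUES (?)", (revision,)
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE alembic_version (version_num VARCHAR(32) NOT NULL)")
conn.execute("INSERT INTO alembic_version VALUES ('ccc333')")  # left by the upgrade

before = stored_revision(conn)
if before not in KNOWN_REVISIONS:
    reset_revision(conn, "bbb222")
after = stored_revision(conn)
```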

Dec 16 2022

Things are working again for me. I see no 400 errors in the logs for the past 10 minutes.

I confirm it's down for me as well.

Dec 12 2022

Reading the github issue, I guess moving to notebook-based culling is the way to improve things. I can try to take a look at that.

Dec 6 2022

Jun 15 2022

The hub pod has some in-memory state, and (worse) some shared state with the proxy pod. https://github.com/jupyterhub/zero-to-jupyterhub-k8s/issues/1364 is the main upstream issue on this, I think.

https://github.com/toolforge/paws/pull/173 lgtm. Will make the PR upstream.

https://github.com/jupyterhub/jupyter-rsession-proxy/pull/130 is the upstream PR to track, we can use a fork if it takes a while to get merged.

I created https://github.com/jupyterhub/jupyter-rsession-proxy/issues/129 upstream for this. I think it should be a pretty straightforward change there to get things working. As I see it, NB_USER should be the source of truth for users in jupyterhub land.
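A minimal sketch of what treating NB_USER as the source of truth could look like. It is not the code from the upstream issue or PR; `resolve_user` is a hypothetical helper, and falling back to the process owner is an assumption.

```python
import getpass
import os


def resolve_user(environ=None):
    """Prefer NB_USER from the environment; fall back to the process owner."""
    environ = os.environ if environ is None else environ
    return environ.get("NB_USER") or getpass.getuser()


# Example with an explicit environment mapping instead of the real one.
user = resolve_user({"NB_USER": "paws-user"})
```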

Jun 14 2022

I think volume persistence is the biggest blocker here, right?

Feels like your firefox is keeping jupyterlab open after it has a disconnect (very possibly only a disconnect from the nfs), without telling you that it isn't connected.

I think there is something a bit more esoteric going on. If the pod wasn't running or didn't have access to the filesystem, creating a new file would not be possible. It might be the browser session, pod vs. jupyterhub authentication, or a failing websocket connection. The next time this happens, I would look in the hub and proxy logs for any error around the routes to the user pod, and in the user pod itself for any error message.

Have you tried using the absolute path?

May 22 2022

Sounds about right to me.

May 21 2022

Yeah, we haven't used it in a few years.

Apr 21 2022

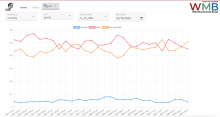

The lack of an uptick in new weekly editors on fawiki during the ip block indicates to me a big difference from ptwiki's implementation. My lack of Farsi prevents a deeper investigation, but in ptwiki the interface was hacked to redirect logged-out users at the point of edit: the edit button was changed to point directly to the log-in page, and a banner explaining the need to create an account, with a link to the reasoning, was put there.

Mar 14 2022

@Isaac indeed. Fixed the link to a permalink to avoid future issues.

Mar 10 2022

Mar 9 2022

Feb 15 2022

6.6.5 was merged.

Jan 13 2022

Other bank transfer methods are also erroring for me.

Jan 12 2022

@EMartin it's before we actually make the transaction.

Dec 24 2021

All for the upgrade but just for the record I don't see a path for exploitation here. All requests to openrefine go through the proxy and only authenticated requests pass. And if you're authenticated into a user server, well RCE is the main feature for jupyterhub.

Dec 22 2021

LGTM. Opinionated ways to run the more basic tasks are a good way to reduce the burden on new users.

Dec 15 2021

I guess portaudio19-dev is the missing apt package here.

Dec 2 2021

Thanks to @yuvipanda for the assistance with this. It would've taken me hours to understand it.

Nov 26 2021

Just noting we received a report of this bug in the Portuguese wikipedia telegram group today.

Nov 25 2021

I had to roll back, as the new Host validation in openrefine 3.5 broke it with the current settings. I'm not quite sure what is best to do here; since we're already proxying it through the jupyter server, we could disable the validation. But I think I'll set it up to have the proper value in the next few days.

Nov 24 2021

Nov 18 2021

Nov 17 2021

Merged and deployed