Abstract

The spatial behavior of passersby can be critical to blind individuals to initiate interactions, preserve personal space, or practice social distancing during a pandemic. Among other use cases, wearable cameras employing computer vision can be used to extract proxemic signals of others and thus increase access to the spatial behavior of passersby for blind people. Analyzing data collected in a study with blind (N=10) and sighted (N=40) participants, we explore: (i) visual information on approaching passersby captured by a head-worn camera; (ii) pedestrian detection algorithms for extracting proxemic signals such as passerby presence, relative position, distance, and head pose; and (iii) opportunities and limitations of using wearable cameras for helping blind people access proxemics related to nearby people. Our observations and findings provide insights into dyadic behaviors for assistive pedestrian detection and lead to implications for the design of future head-worn cameras and interactions.

Keywords: Human-centered computing → User studies, Empirical studies in HCI, Mobile devices, Empirical studies in accessibility, Accessibility technologies, Computing methodologies → Computer vision, proxemics, blind people, wearable camera, pedestrian detection, spatial proximity, machine learning

1. INTRODUCTION

Access to the spatial behavior of passersby can be critical to blind individuals. Passersby proxemic signals, such as change in distance, stance, hip and shoulder orientation, head pose, and eye gaze, can indicate their interest in “initiating, accepting, maintaining, terminating, or altogether avoiding social interactions” [50, 63]. More so, awareness of others’ spatial behavior is essential for preserving one’s personal space. Hayduk and Mainprize [40] demonstrate that the personal space of blind individuals does not differ from that of sighted individuals in size, shape, or permeability. However, their personal space is often violated as sighted passersby, perhaps in an attempt to help, touch them, or grab their mobility aids without consent [19, 103]. Last, health guidelines as with the recent COVID-19 pandemic [74] require one to practice social distancing by maintaining a distance from others of at least 3 feet (1 meter)1 or 6 feet (2 meters)2, presenting unique challenges and risks for the blind community [31, 38]; mainly, due to the fact that spatial behavior of passersby and signage or markers designed to help maintain social distancing are predominantly accessible through sight and thus are, in most cases, inaccessible to blind individuals. Other senses such as hearing and smell could be utilized perhaps to estimate passersby distance and orientation but require close proximity or quiet spaces; in noisy environments, blind people’s perception of surroundings out of the range of touch is limited [28]. More so, mobility aids may work adversely in some cases (e.g., guide dogs are not trained to maintain social distancing [87]).

Why computer vision and wearable cameras.

Assistive technologies for the blind that employ wearable cameras and leverage advances in computer vision, such as pedestrian detection [5, 36, 54, 96], could provide access to spatial behavior of passersby to help blind users increase their autonomy in practicing social distancing or initiating social interaction; the latter motivated our work since it was done right before COVID-19. ‘Speak up’ and ‘Embrace technology’ are two of the tips that the LightHouse Guild provided to people who are blind or have low vision for safely practicing social distancing during COVID-19 [38], mentioning technologies such as Aira [11] and BeMyEyes [27]. However, these technologies rely on sighted people for visual assistance. Thus, beyond challenges around cost and crowd availability, they can pose privacy risks [6, 13, 94]. Prior work, considering privacy concerns for parties that may get recorded, has shown that bystanders tend to be amicable toward assistive uses of wearable cameras [7, 80], especially when data are not sent to servers or stored somewhere [54]. However, little work explores how blind people capture their scenes with wearable cameras and how their data could work with computer vision models. As a result, there are few design guidelines for assistive wearable cameras for blind people.

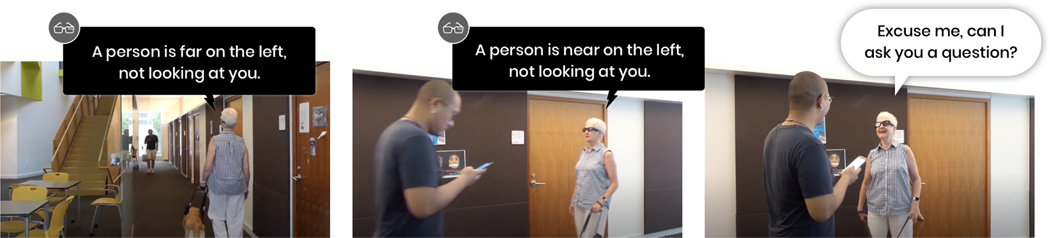

To better understand the opportunities and challenges of employing such technologies for accessing passersby proxemic signals, we collect and analyze video frames, log data, and open-ended responses from an in-person study with blind (N=10) and sighted (N=40) participants3. As shown in Fig. 1, blind participants ask passersby for information while wearing smart glasses with our testbed prototype for real-time pedestrian detection. We explore what visual information about the passerby is captured with the head-worn camera; how well pedestrian detection algorithms can extract proxemic signals in camera streams from blind people; how well the real-time estimates can support blind users at initiating interactions; and what limitations and opportunities head-worn cameras can have in terms of accessing proxemic signals of passersby.

Figure 1:

A pedestrian detection scenario demonstrating the data collection in our study. A blind person, wearing smart glasses with our working prototype, and a sighted person walk toward each other in a corridor. The smart glasses detect the sighted passerby, estimate his proximity, and share his relative location and head pose.

Our exploratory study shows that there are still many limitations to overcome for such assistive technology to be effective. When the pedestrians’ faces are included, the results are promising. Yet, idiosyncratic movements of blind participants and the limited field of view in current wearable cameras call for new approaches in estimating passersby proximity. Moreover, analyzing images from smart glasses worn by blind participants, we observe variations in their scanning behaviors (i.e., head movement) leading to capturing different body parts of passersby, and sometimes excluding essential visual information (i.e., face), especially when they are near passersby. Blind participants’ qualitative feedback indicates that they found it easy to access proxemics of passersby via smart glasses as this form factor did not require camera aiming manipulation. They generally appreciated the potential and importance of such a wearable camera system, but commented on how the experience degraded with errors. They mentioned trying to adapt to these errors by aggregating multiple estimates, instead of relying on a single one. At the same time, they shared their concern that there was no guaranteed way for them to check errors in some visual estimates. These findings help us discuss the implications, opportunities, and challenges of an assistive wearable camera for blind people.

2. RELATED WORK

Our work is informed by prior work in assistive technology for non-visual access of passersby spatial behaviors and existing approaches for estimating proximity beyond the domain of accessibility. For more context, we also share prior observations related to blind individuals’ interactions with wearable and handheld cameras.

2.1. Accessing the Spatial Behavior of Others With a Camera

Prior work on spatial awareness for blind people with wearable cameras and computer vision has mainly focused on tasks related to navigation [29, 55, 68, 70, 99] and object or obstacle detection [4, 55, 56, 64, 70]. We find only a few attempts exploring the spatial behavior of others whom a blind individual may pass by [48, 61] or interact with [51, 91, 96]. For example, McDaniel et al. [61] proposed a haptic belt to notify blind users of the location of a nearby person, whose face is detected from the wearable camera. While closely related to our study, blind participants were not included in the evaluation of this system. Thus, there are no data on how well this technology would work for the intended users. Kayukawa et al. [48], on the other hand, evaluate their pedestrian detection system with blind participants. However, the form factor of their system, a suitcase equipped with a camera and other sensors, is different. Thus, it is difficult to extrapolate their findings for other wearable cameras such as smart glasses that may be more sensitive to blind people’s head and body movements. Designing wearable cameras for helping blind people in social interactions is a longstanding topic [32, 51, 66, 76]. Focusing on this topic, our work is also inspired by Stearns and Thieme [96]’s preliminary insights on the effects of camera positioning, field-of-view, and distortion for detecting people in a dynamic scene. However, they focused on interactions during a meeting (similar to [91]) and analyzed video frames captured by a single blindfolded user, which is not a proxy for blind people [3, 79, 88, 100]. In contrast to our work, the context of a meeting does not allow for extensive movements and distance changes — typical tasks when proxemics of passersby are needed. In our paper, we explore how blind people’s movements and distances can lead to visual characteristics of their camera frames. 
We also examine how these movements and distances are reflected in the camera’s field of view and how they relate to the performance of the computer vision system.

2.2. Estimating Proximity to Others Beyond Accessibility

Distance between people is key to understanding spatial behavior. Thus, epidemiologists and behavioral scientists have long been interested in automating the estimation of people’s proximity [14, 60]. Often their approaches employ mobile sensors [60] or Wi-Fi signals [75]. For example, Madan et al. [60] recruited participants in a residence hall and tracked foot traffic based on their phones’ signals to understand how their behavior change relates to reported symptoms. While these approaches work well for the intended objective, they are limited in the context of assistive technology for real-time estimates of approaching passersby in both indoor and outdoor spaces.

Since the recent pandemic outbreak [74], spatial proximity estimation (i.e., looking at social distancing) has gained much attention. The computer vision community started looking into this using surveillance cameras [33, 86] or camera-enabled autonomous robots [2]. Using cameras capturing people from the perspective of a third person, they monitor the crowd and detect people who violate social distancing guidelines. However, for blind users, an egocentric perspective is needed. Other approaches include using ultrasonic range sensors that can measure a physical distance between people wearing the sensors (e.g. [41]). This line of work is promising in that the sensors consume low power, but the sensors can help detect only presence and approximate distance. They cannot provide access to other visual proxemics that blind people may care about [54]. More so, they assume that sensors are present on every person. Thus, in our work, we prioritize RGB cameras that can be worn only by the user to estimate presence, distance, and other visual proxemics.

Specifically, we borrow from pedestrian detection approaches in computer vision, though not all of them suit our context. For example, some estimate distances by looking at visual differences of the pedestrian between two images from a stereo-vision camera [43, 69]: they either first track the pedestrian and then change the camera angle to position the person in the center of the frame [43], or they use two stationary cameras [69]. Neither case is appropriate for our objective. However, approaches that estimate the distance based on a person’s face or eyes captured from an egocentric user perspective could work [30, 83, 104, 110]. For our testbed, we use the face detection model of Zhang et al. [110] to estimate a passerby’s presence, distance, relative position, and head pose.

2.3. Choosing Handheld versus Head-worn Cameras in Assistive Technologies for the Blind

Smartphones and thus handheld cameras are the status quo for assistive technologies for the blind [9, 16, 17, 45, 47, 53, 101, 102, 116], though there are still many challenges related to camera aiming [9, 17, 45, 47, 52, 53, 102, 116], which can be trickier for a pedestrian detection scenario. Nonetheless, what might start as a research idea quickly translates to real-world applications [1, 11, 21, 23, 24, 26, 27, 57, 84, 92, 98]. However, the use of cameras along with computationally intensive tasks can rapidly heat up the phone and drain its battery [62, 111]. Although this issue is not unique to this form factor, it can reduce the availability of a phone that blind users may rely on; many have reported experiencing anxiety when hearing battery warnings from their phones, especially at work or while commuting [106].

With the promise of hands-free and thus more natural interactions, head-worn cameras are falling on and off the map of assistive technologies for people who are blind or have low vision [37, 42, 58, 78, 95, 97, 113, 115]. This can be partially explained by hardware constraints, such as battery life and weight, limiting their commercial availability [93], which can also relate to factors such as cost, social acceptability, privacy, and form factor [54, 80]. Nonetheless, we see a few attempts in commercial assistive products [1, 11, 26, 71], and people with visual impairments remain amicable towards this technology [117], especially when it resembles a pair of glasses [67] similar to the form factor used in our testbed. However, to our knowledge, there is no prior work looking at head-worn camera interaction data from blind people, especially in the context of accessing proxemics of others. Although wearable cameras do not require wearers to aim a camera, including visual information of interest in the frame can still be challenging for blind people. Prior work with sighted [105] and blindfolded [96] participants indicates that the camera position can lead to different frame characteristics, though such data are neither a proxy for blind people’s interactions nor related to the pedestrian detection scenario. Thus, collecting and analyzing data from blind people, our work takes a step towards understanding their interactions with a head-worn camera in the context of pedestrian detection.

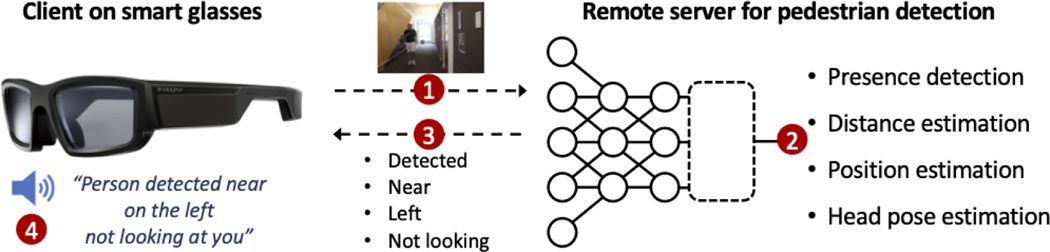

3. GLACCESS: A TESTBED FOR ACCESSING PASSERSBY PROXEMICS

We build a testbed called GlAccess to explore what visual information about passersby is captured by blind individuals with a head-worn camera and how well estimates of passersby’s proxemics can support initiating interactions. As shown in Fig. 2, GlAccess consists of Vuzix Blade smart glasses [18], a Bluetooth earphone, and a computer vision system running on a server. With a sampling rate of one image per second, a photo is sent to the server to detect the presence of a passerby and extract proxemic signals, which are then communicated to the user through text-to-speech (TTS). To mitigate cognitive overload, estimates that remain the same as the last frame are not communicated. Vuzix Blade smart glasses have an 80-degree diagonal angle of camera view and run on Android OS. Our server has eight NVIDIA 2080 TI GPUs running on Ubuntu 16.04. We build a client app on the glasses that is connected to the server through RESTful APIs implemented with Flask [81] and uses the IBM Watson TTS to convert the proxemic signals to audio (e.g., “a person is near on the left, not looking at you.” as shown in Fig. 1). More specifically, our computer vision system estimates the following related to proxemics:

Figure 2:

System diagram describing the procedure of pedestrian detection: (1) The client app on smart glasses captures an image and sends it to the remote server; (2) Once the remote server detects a passerby, it estimates the distance, position, and head pose of the passerby; (3) The output is then sent back to the smart glasses; (4) The smart glasses deliver the output to the blind user via audio.

Presence: A passerby’s presence is estimated through face detection. We employ Multi-task Cascaded Convolutional Networks (MTCNNs) [110] pre-trained on 393,703 faces from the WIDER FACE dataset [108], which is a face detection benchmark with a high degree of variability in scale, pose, occlusion, facial expression, makeup, and illumination. The model provides a bounding box where the face is detected in the frame.

Distance: For distance estimation, we adopt a linear approach that converts the height of the bounding box from the MTCNNs face detection model to a distance. Using the Vuzix Blade smart glasses, we collect a total of 240 images with six data contributors from our lab. Each contributor walks down a corridor that is approximately 66 feet (20 meters) long; this is the same corridor where the study takes place. We take two photos at every meter, for a total of 40 photos per contributor. We fit a linear interpolation function to the heights of the faces extracted from these photos and the ground-truth distances between the contributors and the photo-taker wearing the smart glasses. This function estimates the distance as a numeric value, which is communicated either more verbosely (e.g., ‘16 feet away’) or less so by stating only ‘near’ or ‘far’ based on a 16-foot (5-meter) threshold4. Participants in our study are exposed to both (i.e., experiencing each for half of their interactions).

Position: We estimate the relative position of a passerby based on the center location of the bounding box of their face detected by the MTCNNs model. A camera frame is divided in three equal-width regions, which are accordingly communicated to the user as ‘left’, ‘middle’, and ‘right’.

Head Pose: We use head pose detection to estimate whether a passerby is looking at the user. More specifically, we build a binary classification layer on top of the MTCNNs face detection model and fine-tune it with the 240 images from our internal dataset collected by the six contributors and 432 images from a publicly available head pose dataset [35]. The model estimates whether a passerby is looking at the user or not.
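The distance and position estimates described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the GlAccess implementation: the calibration pairs of face height and distance are made-up placeholder values, the function names are hypothetical, and NumPy's `np.interp` (piecewise-linear interpolation) stands in for the linear function fitted on the calibration photos. Treating exactly 16 feet as ‘near’ is also an assumption.

```python
import numpy as np

# Hypothetical calibration data: face bounding-box heights (pixels) paired with
# measured distances (feet). Real values would come from calibration photos.
CAL_HEIGHTS_PX = np.array([20.0, 40.0, 80.0, 160.0])   # must be increasing
CAL_DISTANCES_FT = np.array([48.0, 24.0, 12.0, 6.0])

NEAR_THRESHOLD_FT = 16.0  # 'near'/'far' cut-off stated in the text


def estimate_distance_ft(box_height_px: float) -> float:
    """Map a face bounding-box height to a distance by linear interpolation.

    Heights outside the calibrated range are clamped to the end points.
    """
    return float(np.interp(box_height_px, CAL_HEIGHTS_PX, CAL_DISTANCES_FT))


def distance_label(distance_ft: float) -> str:
    """Less verbose feedback: 'near' within the threshold, otherwise 'far'."""
    return "near" if distance_ft <= NEAR_THRESHOLD_FT else "far"


def relative_position(box_center_x: float, frame_width: float) -> str:
    """Split the frame into three equal-width regions by the box center."""
    third = frame_width / 3.0
    if box_center_x < third:
        return "left"
    if box_center_x < 2 * third:
        return "middle"
    return "right"
```

With these placeholder calibration values, a face that is 60 pixels tall falls halfway between the 40-pixel and 80-pixel calibration points and is therefore mapped to 18 feet, which the threshold labels as ‘far’.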

We also simulate future teachable technologies [46, 77] that can be personalized by the user providing faces of family and friends. In those cases, the name of the person can be communicated to the user. Sighted members in our research group volunteered to serve this role in our user study.

Name: We pre-train GlAccess with a total of 144 labeled photos from five sighted lab members so that the system can recognize them in the study setting to simulate a personalized experience. This face recognition is implemented with an SVM classifier that takes face embedding data from the MTCNNs model.
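The feedback behavior described at the start of this section, where estimates that match the previous frame are not spoken again, can be sketched as below. The `Estimate` fields, the exact message wording, and the `Announcer` class are assumptions made for illustration. The sketch returns the text that would be handed to a TTS engine rather than calling one, and it assumes that the last estimate is forgotten once no face is detected.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Estimate:
    """One frame's proxemic estimate (hypothetical structure)."""
    distance: str                 # e.g. "near", "far", or "16 feet away"
    position: str                 # "left", "middle", or "right"
    looking: bool                 # head pose: looking at the user?
    name: Optional[str] = None    # set only for enrolled (familiar) faces


def to_message(e: Estimate) -> str:
    """Compose the sentence to be spoken for one estimate."""
    who = e.name if e.name else "a person"
    where = "in the middle" if e.position == "middle" else f"on the {e.position}"
    gaze = "looking at you" if e.looking else "not looking at you"
    return f"{who} is {e.distance} {where}, {gaze}."


class Announcer:
    """Suppress messages whose estimate equals the previous frame's estimate."""

    def __init__(self) -> None:
        self._last: Optional[Estimate] = None

    def update(self, estimate: Optional[Estimate]) -> Optional[str]:
        """Return text to speak, or None when nothing should be said."""
        if estimate is None:          # no passerby detected in this frame
            self._last = None         # assumption: re-announce on reappearance
            return None
        if estimate == self._last:    # unchanged since the last frame
            return None
        self._last = estimate
        return to_message(estimate)
```

Comparing whole estimates means that a change in any single signal (for example, only the head pose) triggers a full message; a finer-grained design could announce only the signal that changed.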

4. USER STUDY AND DATA COLLECTION

To explore the potential and limitations of head-worn cameras for accessing passersby proxemics, we conducted an in-person study with blind and sighted participants (under IRB #STUDY 2019_00000294). We employed a scenario, illustrated in Fig. 3, where blind participants wearing GlAccess were asked to initiate interactions with sighted passersby in an indoor public space. Given the task of asking someone for the number of a nearby office, they walked down a corridor where sighted participants were coming in the opposite direction.

Figure 3:

Diagram of study procedure. A blind participant walked eight times in a corridor that is 66 feet (20 meters) long and 79 inches (2 meters) wide. Each time a different sighted person walked towards them. In four of the eight times, those individuals were sighted study participants. In the other four, they were sighted members in our research lab. In addition to the proxemic signals, GlAccess can recognize the lab members, simulating future teachable applications [46] where users can personalize the model for familiar faces.

4.1. Participants

We recruited ten blind participants; nine were totally blind, and one was legally blind. On average, blind participants were 63.6 years old (SD = 7.6). Five self-identified as female and five as male. Three participants (P4, P8, P10) mentioned having light perception, and four participants reported having experience with wearable cameras such as Aira [11] with frequencies shown in Table 1. Responses to technology attitude questions5 indicate that they are positive about technology in general (mean = 2.02, SD = 0.42) and wearable technology such as smartglasses (mean = 1.89, SD = 0.90).

Table 1:

Demographic information of our participants. Participants with light perception are marked with an asterisk in Vision level.

| PID | Gender | Age | Vision level | Onset age | Experience with wearable cameras |
|---|---|---|---|---|---|
| P1 | Female | 71 | Totally blind | 8 | Aira (1 year 6 months; a few times a month) |
| P2 | Male | 66 | Totally blind | Birth | Audio-glasses (4 months; twice a month) |
| P3 | Male | 59 | Totally blind | Birth | None |
| P4 | Female | 66 | Totally blind* | 49 | None |
| P5 | Female | 65 | Totally blind | Birth | None |
| P6 | Female | 73 | Totally blind | Birth | None |
| P7 | Female | 49 | Totally blind | 14 | None |
| P8 | Male | 60 | Legally blind* | 56 | Aira (1 month; once a month) |
| P9 | Male | 56 | Totally blind | 26 | None |
| P10 | Male | 71 | Totally blind* | 41 | Aira (3 months; a few times a week) |

We also recruited 40 sighted participants to serve as passersby in the study — four sighted participants, one at a time, passed by a blind participant. On average, sighted participants were 25.3 years old (SD = 4.8). Twenty-four self-identified as male and fifteen as female, and one chose not to disclose their gender. Participants’ responses to technology attitude questions5 indicate that they were slightly positive about wearable technology (mean = 1.08, SD = 0.95).

4.2. Procedure

We shared the consent form with blind participants via email to provide ample time to read it, ask questions, and consent prior to the study. Upon arrival, the experimenter asked the participants to complete short questionnaires on demographics, prior experience with wearable cameras, and attitude towards technology. The experimenter read the questions; participants answered them verbally. Blind participants first heard about the system and then practiced walking with it in the study environment and detecting the experimenter as he approached in the opposite direction. During the main data collection session, blind participants were told to walk in the corridor and ask a person, if detected, about the office number nearby. On the other hand, sighted participants, one at a time, were told to simply walk in a corridor with no information about the presence of blind participants in the study. As illustrated in Fig. 3, a blind participant walked in the corridor eight times — four times with four sighted participants, respectively, and four times with four sighted members of our research lab, respectively. Our study was designed not to extend beyond 2 hours.

All sighted participants were recruited on site and thus strangers to blind participants; blind participants did not meet any sighted participants before the study. A stationary camera was used to record the dyadic interactions in addition to the head-worn camera and the server’s logs. Both blind and sighted participants consented to this data collection prior to the study — sighted participants were told that there will be camera recording in the study, which may include their face, but these images will not be publicly available without being anonymized. The study ended with a semi-structured interview for blind participants with questions5 created for eliciting their experience and suggestions towards the form factor, proxemics feedback delivery, error interaction, and factors impacting future uses of such head-worn cameras.

4.3. Data Collection, Annotation, and Analysis

We collected 183-minute-long session recordings from a stationary camera, more than 3,000 camera frames from the smart glasses testbed sent to the server, estimation logs on proxemic signals of sighted passersby that were communicated back to blind participants, and 259-minute-long audio recordings of the post-study interview.

4.3.1. Data annotation.

We first annotated all camera frames captured by the smart glasses worn by blind participants. As shown in Table 2, annotation attributes include the presence of a passerby in the video frame, their relative position and distance from the blind participant, whether their head pose indicates that they were looking towards the participant, and whether there was an interaction. Annotations for the binary attributes (presence, head pose, and interaction) were provided by one researcher as there was no ambiguity. For presence, the annotator marked whether the head, torso, arm(s), and leg(s) of the sighted passerby were captured in the camera frames. Regarding head pose, the annotator checked whether the sighted passerby was looking at the blind user. As for interaction, the annotator marked whether the blind or the sighted participant was speaking while consulting the video recordings from the stationary camera. Annotations for distance and position were provided by two researchers, who first annotated independently and then resolved disagreements. The initial annotations from the two researchers achieved a Cohen’s kappa score of 0.89 and 0.90 for distance and position, respectively. Distance was annotated as ‘<6ft.’: within 6 feet (2 meters); ‘near’: farther than 6 feet (2 meters) but within 16 feet (5 meters); or ‘far’: farther than 16 feet (5 meters). Distance in frames where the passerby was not included was annotated as n/a. As for position, the annotators checked whether the person was on the left, middle, or right of the frame. This relative position was determined based on the location of the center of the person’s face within the camera frame. When the face was not included, the center of the included body part was used. The annotators resolved all annotation disagreements together at the end.
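For readers unfamiliar with the agreement measure reported above, the following is a small sketch of how Cohen’s kappa can be computed for two annotators’ categorical labels. It is a generic textbook formulation written for illustration, with a hypothetical function name and toy labels; it is not the script used in the study.

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(labels_a: Sequence[str], labels_b: Sequence[str]) -> float:
    """Cohen's kappa: chance-corrected agreement between two annotators."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Expected agreement by chance, from each annotator's label frequencies.
    count_a, count_b = Counter(labels_a), Counter(labels_b)
    expected = sum(count_a[c] * count_b[c] for c in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1.0 - expected)


# Toy example with the distance categories from Table 2 (made-up labels).
annotator_1 = ["near", "near", "far", "far"]
annotator_2 = ["near", "far", "far", "far"]
kappa = cohens_kappa(annotator_1, annotator_2)
```

In this toy example the annotators agree on three of four frames (observed agreement 0.75), chance agreement is 0.5, and kappa is therefore 0.5.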

Table 2:

Attributes in our annotation.

| | Presence | Position | Distance | Head pose | Interaction |
|---|---|---|---|---|---|
| Annotation | Which body part of the passerby is included? | Where is the passerby located? | How far is the passerby from the user? | Is the passerby looking at the user? | Who is speaking? |
| Value | head/torso/arms/legs | left/middle/right | <6ft./near/far | yes/no | blind/sighted |

4.3.2. Data analysis.

For our quantitative analysis, we mainly used descriptive analysis on our annotated data to see what visual information about passersby blind participants captured using the smart glasses and how well our system performed to extract proxemic signals (i.e., the presence, position, distance, and head pose of passersby). We adopted the common performance metrics for machine learning models (i.e., F1 score, precision, and recall) to measure the performance of our system in terms of extracting proxemic signals.
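As a reminder of how these metrics are defined for a binary signal (for example, whether a passerby is looking at the user), a minimal sketch is given below. The function name and the toy labels are hypothetical, and returning 0.0 when a denominator is zero is a convention assumed here rather than something stated in the paper.

```python
from typing import Sequence, Tuple


def precision_recall_f1(
    y_true: Sequence[bool], y_pred: Sequence[bool]
) -> Tuple[float, float, float]:
    """Precision, recall, and F1 for binary ground truth vs. predictions."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    tp = sum(t and p for t, p in zip(y_true, y_pred))          # true positives
    fp = sum((not t) and p for t, p in zip(y_true, y_pred))    # false positives
    fn = sum(t and (not p) for t, p in zip(y_true, y_pred))    # false negatives
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Toy example: annotated ground truth vs. system output for five frames.
truth = [True, True, True, False, False]
output = [True, True, False, True, False]
p, r, f1 = precision_recall_f1(truth, output)
```

Here two of the three predicted positives are correct and two of the three true positives are found, so precision, recall, and F1 all equal 2/3.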

For our qualitative analysis, we first transcribed blind participants’ responses to the open-ended interview questions. Then, we conducted a thematic analysis [20] on the open-ended interview transcripts. One researcher first went through the interview data to formulate emerging themes in a codebook. Then, two researchers iteratively refined the codebook together while applying codes to the transcripts and addressing disagreements. The final codebook had a 3-level hierarchical structure — i.e., level-1: 11 themes; level-2: 20 themes; and level-3: 71 themes.

4.4. Limitations

We simplified our user study to have only one passerby in a corridor since our prototype system was able to detect only one person at a time. In the future, designers should consider handling cases where more than one passerby appears. When more than one passerby is included in a camera frame, a system needs to detect the person of most interest to the blind user and then deliver that person’s proxemics. Such a method should help users perceive the output efficiently and quickly. For example, detecting a person within a specific distance range could be a naive but straightforward approach.
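The naive distance-range idea mentioned above could look like the sketch below. Everything here is hypothetical and was not part of the study prototype: the `Detection` structure, the default 16-foot range, and the tie-breaking choice of reporting the nearest person within that range.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Detection:
    """One detected passerby in a frame (hypothetical structure)."""
    person_id: int
    distance_ft: float


def pick_person_of_interest(
    detections: Iterable[Detection], max_range_ft: float = 16.0
) -> Optional[Detection]:
    """Return the nearest passerby within range, or None if nobody is."""
    in_range = [d for d in detections if d.distance_ft <= max_range_ft]
    return min(in_range, key=lambda d: d.distance_ft, default=None)


# Toy frame with three passersby; only two are within 16 feet.
frame = [Detection(1, 30.0), Detection(2, 12.0), Detection(3, 7.5)]
chosen = pick_person_of_interest(frame)
```

Other selection criteria, such as head pose toward the user or a recognized name, could replace or complement distance; the study does not evaluate any of them.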

5. OBSERVATIONS AND FINDINGS

Although our data collection scenario is somewhat limited, the images, videos, and logs collected from 80 pairs of blind and sighted individuals allow us to account for variations within a single blind participant and across multiple blind participants. Our observations, contextualized with blind participants’ feedback, provide rich insights on the potential and limitations of head-worn cameras.

5.1. Visual Information Accessed by a Head-worn Camera

In a real-world setting, where both blind users and other nearby people are constantly in motion, it is important to understand what is being captured by assistive wearable cameras — smart glasses in our case. What is visible dictates what kind of visual information regarding passersby’s proxemics can be extracted. In this section, results are based on our analysis of 3,175 camera frames from the head-worn camera and those ground-truth annotations.

5.1.1. At what distance is a passerby captured by the camera?

Fig. 4 shows that a relatively high percentage of camera frames captured a passerby at a distance larger than 6 feet in a corridor that was 79 inches wide; this varied across blind participants with some outlier cases being as low as 50%. Within 6 feet, blind participants seemed to have different tendencies of including either the face or any body part of passersby, and the average inclusion rates tended to be lower than when passersby were more than 6 feet away. When sighted passersby were more than 16 feet away (far), any part of their body and their head were included on average in 98% (SD = 3.5) and 98% (SD = 3.6) of the camera frames, respectively. When sighted passersby were less than 16 feet but more than 6 feet away (near), the inclusion ratios of any body part and head were 98% (SD = 3.5) and 98% (SD = 4.3), respectively. However, when passersby were within 6 feet (2 meters), there was a quick drop in the inclusion rate with high variability across the dyads: passersby’s head and any body part were included on average in 56% (SD = 39.1) and 66% (SD = 39.6) of the camera frames, respectively. For example, the camera of P9, who tended to walk fast, consistently did not capture the passerby at this distance.

Figure 4:

Diagrams presenting inclusion rates of any body part or head of passersby in camera frames per distance. A relatively high percentage of camera frames capture a passerby (face and body) at a distance larger than 6 feet in a corridor that is 79 inches wide; this can vary across blind participants with some outlier cases being as low as 50%. Within 6 feet it can get unpredictable with faces often being included less.
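One way to obtain per-distance inclusion rates with a mean and SD is sketched below. It rests on assumptions that the paper does not confirm: that a rate is first computed per dyad and then averaged across dyads, and that the SD is the sample standard deviation. The frame tuple layout and function name are hypothetical, and the toy data are made up.

```python
from collections import defaultdict
from statistics import mean, stdev
from typing import Dict, Iterable, List, Tuple

# (dyad id, annotated distance bin, was the head included in the frame?)
Frame = Tuple[str, str, bool]


def inclusion_stats(frames: Iterable[Frame]) -> Dict[str, Tuple[float, float]]:
    """Per distance bin: mean and SD (in %) of per-dyad inclusion rates."""
    per_dyad: Dict[Tuple[str, str], List[bool]] = defaultdict(list)
    for dyad, dist_bin, included in frames:
        per_dyad[(dist_bin, dyad)].append(included)

    rates: Dict[str, List[float]] = defaultdict(list)
    for (dist_bin, _dyad), flags in per_dyad.items():
        rates[dist_bin].append(100.0 * sum(flags) / len(flags))

    # The sample SD needs at least two dyads; report 0.0 otherwise.
    return {
        b: (mean(r), stdev(r) if len(r) > 1 else 0.0) for b, r in rates.items()
    }


toy_frames = [
    ("d1", "<6ft.", True), ("d1", "<6ft.", False),   # dyad d1: 50% included
    ("d2", "<6ft.", True), ("d2", "<6ft.", True),    # dyad d2: 100% included
    ("d1", "far", True),
]
stats = inclusion_stats(toy_frames)
```

Averaging per-dyad rates, rather than pooling all frames, keeps dyads with many frames from dominating the mean, which matters when walking pace differs across participants.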

Beyond fast pace, there are other characteristics that can contribute to low inclusion rates such as veering [39] and scanning head movements. We observed little veering, perhaps due to hallway acoustics. However, we observed that many blind participants tended to move their head side to side while walking and more so while interacting with sighted participants to ask them about the nearby office number. This behavior, in combination with the low sampling rate of one frame per second, seemed to contribute to the exclusion of passersby from the camera frames even when they were in front of or next to the blind participants. These findings suggest a departure from a naive fixed-rate, time-based mechanism (i.e., 1 frame/sec) toward more efficient dynamic sampling (e.g., increasing the frame rate with proximity), as well as fields of view and computer vision models that can account for such movements.
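A proximity-driven sampling policy of the kind suggested above might be sketched as follows. Only the 1-second base interval and the 6-foot and 16-foot distances come from the text; the 0.2-second minimum interval, the linear ramp between the two distances, and the function name are illustrative assumptions that were not evaluated in the study.

```python
from typing import Optional


def sampling_interval_s(
    distance_ft: Optional[float],
    base_interval_s: float = 1.0,   # fixed rate used by the testbed
    min_interval_s: float = 0.2,    # hypothetical fastest rate (5 frames/sec)
    near_ft: float = 16.0,
    close_ft: float = 6.0,
) -> float:
    """Seconds to wait before capturing the next frame.

    No passerby, or one beyond `near_ft`: keep the base interval.
    Within `close_ft`: sample as fast as allowed.
    In between: shorten the interval linearly as the passerby approaches.
    """
    if distance_ft is None or distance_ft > near_ft:
        return base_interval_s
    if distance_ft <= close_ft:
        return min_interval_s
    frac = (distance_ft - close_ft) / (near_ft - close_ft)
    return min_interval_s + frac * (base_interval_s - min_interval_s)
```

Under these placeholder settings, a passerby estimated at 11 feet, halfway between the two distances, would be sampled roughly every 0.6 seconds. A real design would also need to weigh the battery and heat costs discussed in Section 2.3.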

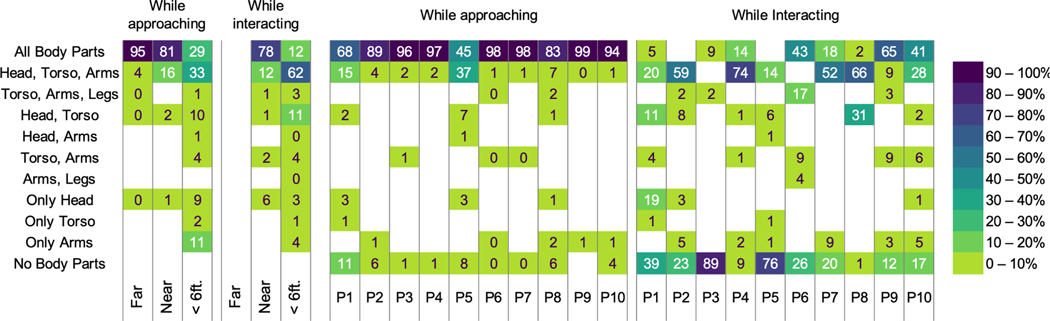

5.1.2. What proportion of a passerby is being captured by the camera while approaching versus while interacting?

The types of visual information and proxemic signals desired by a blind user can differ by proximity to a passerby and by whether that person is merely approaching or interacting with them. Nonetheless, what can be accessible will depend on which parts of the passerby’s body are captured by the camera (e.g., head, torso, and hands). In our dataset, we identified 11 distinct patterns. For example, a (Head, Torso) pattern indicates that only the head and torso of a passerby were visible in a camera frame. Fig. 5 shows the average rate for each pattern and whether a passerby was approaching or interacting with a blind participant. It also includes a breakdown of rates across participants.

Figure 5:

Heatmap diagram presenting the rates of body inclusion patterns in frames across distances, interactions, and participants.

When passersby were more than 16 feet away (far) and approaching, blind participants tended to capture the passersby in their entirety in more than 81% of the overall frames. This number dropped to 29% within 6 feet, making it difficult to estimate some of their proxemics, but the head presence remained high at 82%. These rates seemed to be consistent among the participants, except for P1 and P5, whose rates were not only lower for including passersby in their entirety (68% and 45%, respectively) but also higher for not including them at all (11% and 8%, respectively).

We also analyzed the frames collected during blind participants’ interactions with sighted passersby, typically within 16 feet (5 meters). Blind participants tended to capture the passersby in their entirety in about 78% of the overall frames for distances between 6 and 16 feet (2–5 meters). Closer than 6 feet, the number quickly dropped to 12%. More importantly, we observed a higher diversity in the inclusion rate across blind participants for what their camera captured when they were interacting with a passerby within 16 feet. Indeed, the breakdown of rates shows that all except P8, who was legally blind, and P4, who reported having light perception and an onset age of 49, did not include the passersby or their head in more than a quarter of their camera frames. For P3, P5, and P6, who had no prior experience with wearable cameras and reported onset age at birth, these rates were even higher, reaching up to 91%. When inspecting their videos from the static camera, we found that these participants were indeed next to the passersby and interacting with them, but they were not facing them.

5.2. Errors in Estimating Passersby Presence, Distance, Position, and Head Pose

We evaluate how well models that rely on face detection can estimate passersby’s proxemic signals (i.e., presence, position, distance, and head pose) in camera frames from blind participants. Specifically, comparing the visual estimates against the ground-truth annotations, we report results in terms of precision, recall, and F1-score (Table 3). These results capture blind participants’ experience with the testbed, and thus provide context for interpreting their responses related to machine learning errors.

Table 3:

Performance of our prototype system on the camera frames from blind participants.

| Attribute | Comparison | Precision | Recall | F1-score |
|---|---|---|---|---|
| Presence | across all frames where a body part was present | 1.0 | 0.33 | 0.49 |
| Presence | across all frames where head was present | 1.0 | 0.34 | 0.51 |
| Position | across all frames where someone was present | 0.90 | 0.30 | 0.45 |
| Position | across all frames where someone was detected | 0.90 | 0.84 | 0.87 |
| Distance | across all frames where someone was present | 0.62 | 0.25 | 0.35 |
| Distance | across all frames where someone was detected | 0.62 | 0.66 | 0.58 |
| Head pose | across all frames where someone was detected | 0.77 | 0.77 | 0.77 |

Table 3 shows that, whenever the model detected the presence of a passerby, there was actually a passerby (precision=1.0)⁶. However, the model’s ability to find all the camera frames where a passerby was present was low (recall=0.33), even when their head was visible in the camera frame (recall=0.34). Our visual inspection of these false negatives indicated that, for the majority (80%), this was due to the person being too far away (> 16 feet); thus, their face was too small for the model to detect, raising questions such as “How far away should a system detect people?”
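For reference, the F1-score is the harmonic mean of precision and recall, $F_1 = 2PR/(P+R)$. The short Python sketch below applies this standard formula to two precision and recall pairs reported for Table 3; the helper name is hypothetical, and small differences from published values can arise because the reported precision and recall are themselves rounded.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Presence, across all frames where the head was present.
presence_f1 = round(f1_score(1.0, 0.34), 2)
# Position, across all frames where someone was detected.
position_f1 = round(f1_score(0.90, 0.84), 2)
print(presence_f1, position_f1)
```

The first pair illustrates how a perfect precision cannot compensate for low recall: the harmonic mean stays close to the smaller of the two values.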

Since the estimation of position, distance, and head pose depended on the presence detection, we report metrics on both (i) frames where passersby were present and (ii) frames where they were first detected. Once passersby were detected, the estimation of their relative position worked relatively well (precision=0.90; recall=0.84). However, the head pose estimation (i.e., whether the passerby is looking at the user) was not as accurate (precision=0.77; recall=0.77). The distance estimation was also challenging (precision=0.62; recall=0.66). This might be partially due to the limited training data, both in size and in diversity. A passerby’s head size in an image, a proxy for estimating the distance, can depend on the blind user’s height and camera angle.
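To make the head-size proxy concrete, the sketch below uses a simple pinhole-camera relation in which distance is inversely proportional to the head height in pixels. This is a geometric baseline shown for intuition only, not the estimator used in our testbed. The image width, the assumed real head height of 0.75 feet, and the function names are all assumptions; the 64-degree horizontal field of view is the value listed for the Vuzix Blade in Table 4, and square pixels are assumed so that the same focal length applies vertically.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    """Focal length in pixels for an ideal pinhole camera."""
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def distance_from_head_height(head_height_px, focal_px, real_head_height_ft=0.75):
    """Estimate distance (feet) from the head height measured in the image.

    real_head_height_ft is an assumed average; real heads vary, and a tilted
    camera or a height difference between people changes the apparent size.
    """
    if head_height_px <= 0:
        raise ValueError("head_height_px must be positive")
    return focal_px * real_head_height_ft / head_height_px


focal = focal_length_px(1280, 64)            # 1280 px width is hypothetical
estimate_ft = distance_from_head_height(48, focal)
print(round(focal, 1), round(estimate_ft, 1))
```

Under these assumptions, a head about 48 pixels tall corresponds to roughly 16 feet, which hints at why faces beyond that distance become small enough to be missed by a face detector.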

5.3. Participant Feedback on Accessing Proxemics

The majority of blind participants (seven) appreciated having access to proxemics about nearby people through the smart glasses. P4 highlighted, “I liked having the [proxemic] information. That means a lot when I know that there’s a person, or how close they are to you or where they are[.] It’s a good thing to be able to know who’s there and that there’s somebody there. That’s important.” Blind participants also shared certain situations where they can benefit from such assistive cameras (e.g., when nearby people do not provide enough non-visual cues). P2 highlighted, “People are sometimes quiet, and [I] don’t know if they are approaching.” Some participants remarked that having access to proxemics related to a nearby passerby can help them make decisions proactively. For example, P7 mentioned, “With the distance estimations, it gives [me] a couple of cues within range, where [I] can make a decision ‘Do I want to do something or not?’ ”

Participants also envisioned how these visual estimations could enhance their social engagement. For example, P1 said, “It was novel [and] interesting to have it tell me things that I normally wouldn’t even be paying attention to[.] I think it would be a great social interaction thing,” and P4, “It means a lot to be able to know who it is [and] who’s there — that’s a big deal to me for social interaction.” Some participants see its potential in helping them predict the outcome of their attempted social interaction by saying, “If you know somebody is in front of you and looking [at you] or you know [where] to look (left or right), you can probably get their attention more easily” (P5), and “[GlAccess] certainly made it easy to know, when there was a potential [social interaction] — you didn’t talk to the air[.] I would say that I like the ability to possibly predict the outcome of an attempted interaction” (P7). They also envisioned using proxemics detection and person recognition to initiate their communication. P6 said, “Walk down the hall and be able to greet a person by name when they hadn’t spoken to me; that would be cool,” and P7, “Because [I] know that there is at least a person and how far away they are, [I’m] not simply chattering with air, which just occurred to me a couple times [in my daily life].”

On the other hand, our analysis also reveals potential reasons why blind participants might not pay much attention to visual estimates. Since such visual information was mostly inaccessible in their daily lives, they did not rely on it and were unsure how to use it. More specifically, P7 and P9 remarked, “[Getting visual information] never occurred to me. [Not sure if] I would want or need anything more than just listening to the voices around me and hearing the walking around me because I’ve never had that experience,” and “It’s visual feedback that I’m not used to, I never functioned with, so I don’t know, I’m not used to processing or analyzing things in a visual way. I love details, but I just never often think about them much related to the people,” respectively. To address this issue, participants suggested providing training that helps blind users interpret such visual estimates, as P7 said, “I would need maybe to be trained on what to do [with the visual feedback].”

5.4. Participant Feedback on the Form Factor

Six participants commented on how easy it was to have the smart glasses on and access information, saying “Just wearing the glasses and knowing that would give you feedback” (P2); “Just have to wear it and listen” (P4); and “Once [I] put on and just walk” (P8). Some supported their statements by providing a rationale, such as the head being a natural way to aim towards areas of interest and the fact that they did not have to do any manipulations. For example, P4 and P9 commented, “If it’s just straight, then [I’m] gonna see what’s straight in front of [me]. That’s a good benefit[.] Seeing what directly in front of [me] is a bonus” and “I could turn my head to investigate or find out what’s to my right or my left — that part was kind of nice,” respectively. P7 and P10 commented on the manipulation, saying “You don’t have a lot of controlling and manipulating to do” and “And [it] doesn’t take any manipulation — it just feeds new information. And that was easy. It took no interaction manually changed,” respectively.

However, anticipating that some control would eventually be needed, P8 tried to envision how one could access the control panel for the glasses and suggested pairing them with a phone or supporting voice commands, stating “Altering [it] would be an interface with your phone so that I could [control] it using the voice over on my phone, or using Siri to do it. The voice assistant [would] let you control.” Only one participant (P1) suggested deploying similar functionalities on one’s phone: “And just use your rear facing camera and have it as a wearable, a pouch or something like that. Then you just flip that program on [the phone] when you want to use it.”

Other suggestions focused on the sound. At the time, Vuzix Blade did not have a built-in speaker, and its shape conflicted with bone conduction headphones. Four participants quickly pointed out this limitation with statements such as “Bone conduction [would] seem to work [with GlAccess] really good” (P4) and “[Need to keep] your ear canals open there for being able to hear traffic or whatever” (P8). P4 suggested extending the field of view for capturing more visual information, stating “I wonder if the field of vision for the camera could go a little bit wider because then it would catch somebody coming around the side more[.]”

5.5. Participant Feedback on Interactions with the System

Four participants shared concerns about outside noises blocking out the system’s audio feedback, or vice versa. P4 mentioned, “The only time it could be harder [to listen to the system feedback] is in a situation with lots of noise or something. If it’s very noisy, then it’s going to be hard to hear,” and “There’s a limit to how much you can process because you’re walking. When we’re walking[,] we’re doing more than just listening to [the feedback.] We listen to [others]. Listening is big [to us].” Although GlAccess reported feedback only when it changed between two consecutive frames, this is possibly why they wanted to control the verbosity of the system feedback. P7 said, “It was reacting to you every couple seconds or literally every second. Until I’m looking for something specific, it [is] a little overwhelming to have all of it coming in at the same time.”
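The change-only reporting described above can be sketched as a small filter over per-frame estimates. This is an illustration under assumptions rather than the GlAccess implementation: the function name, the use of plain strings for feedback, and the choice to treat a frame with no detection as resetting the state are all hypothetical.

```python
from typing import Iterable, Iterator, Optional


def changed_feedback(estimates: Iterable[Optional[str]]) -> Iterator[str]:
    """Yield an estimate only when it differs from the previous frame's estimate.

    None stands for a frame with no detection; it is never spoken, but it
    resets the state so that a later re-detection is announced again.
    """
    previous = None
    for current in estimates:
        if current is not None and current != previous:
            yield current
        previous = current


per_frame = ["near", "near", "far", None, "far"]
spoken = list(changed_feedback(per_frame))
print(spoken)
```

A verbosity setting could build on the same idea, for example by announcing only changes in selected attributes such as presence or distance.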

Moreover, blind participants emphasized that they would need to access such proxemic signals in real time, especially when they are walking. They mentioned being misguided at times by stale estimations caused by network latency. P9 commented on this latency issue, “I know about the latency in it. Because when [a person] and I were talking, I knew [the person] walked away, [but GlAccess] still reported that [the person was] in front of me.” To minimize the effects of the latency, some reported that they made quick adaptations during the study, such as “walking slow[ly]” (P1) or “stop[ping] and paus[ing]” (P10). Nonetheless, the latency issue would make the use of such assistive wearable cameras impractical in the real world, as P8 put it, “If I’m walking at a normal pace [and] it’s telling me somebody is near, I’m probably going to be 10 to 15 feet past [due to the latency].”

Participants also suggested providing options for customizing the feedback delivery. For example, P7 wanted the system to detect moments for pausing or muting the feedback delivery, saying “Once a conversation is going on, it’s not reporting everyone else around; [otherwise, it’s] distracting [me].” Also, four blind participants commented on verbosity control, which we explored briefly in our study by implementing two ways of communicating the distance estimation. P2 mentioned, “I like that you had options [for verbosity], and [I] could choose that.” At the same time, we observed that blind people may have different definitions of spatial perception, i.e., of what counts as far and near. P4 remarked on this, “ ‘Far’–’near’ thing wasn’t so good. I liked hearing the number of feet for the distance or my concept of far is different than your concept of far and near[.]”

5.6. Participant Feedback on Estimates and Errors

Seven blind participants mentioned being confident in interpreting the person recognition output. It seems that they gained trust in the person recognition feature because they were able to confirm the recognition result with a person’s voice. P2 said, “I learned [a lab member]’s voice, and when it said ‘[lab member]’, it was her; or at least I thought it was.” Like any other machine learning model, however, our prototype system also suffered from errors such as false negatives (Section 5.2). Participants seemed to be familiar with technical devices making errors and understood that this is part of technology improvement, as P1 commented, “If it didn’t work, then I figured that’s just part of the system growing, so that’s okay. It’s just part of learning how to use it.” To avoid basing their decisions on errors, six participants mentioned aggregating multiple estimates from the system, instead of relying on a single estimate, after realizing the possibility of errors in visual estimates. P9 highlighted, “If [the] information was not what I felt either incorrect or not certain, I would wait and give the system a chance to focus better or to process it differently.”
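Participants performed this aggregation mentally, but a system could do something similar on their behalf. The sketch below keeps a short sliding window of recent estimates and reports a label only when a strict majority of the window agrees. The class name, the window size of three, and the majority rule are assumptions for illustration; this mechanism was not part of our testbed.

```python
from collections import Counter, deque


class EstimateSmoother:
    """Report an estimate only when most recent frames agree on it."""

    def __init__(self, window=3):
        # deque with maxlen drops the oldest estimate automatically
        self.recent = deque(maxlen=window)

    def update(self, estimate):
        """Add the newest estimate; return the majority label or None."""
        self.recent.append(estimate)
        label, count = Counter(self.recent).most_common(1)[0]
        # Require a strict majority of the estimates currently in the window.
        return label if count > len(self.recent) / 2 else None


smoother = EstimateSmoother(window=3)
outputs = [smoother.update(e) for e in ["near", "far", "near", "far"]]
print(outputs)
```

The trade-off is added delay: a larger window suppresses more one-off errors but makes the feedback staler, which matters given the latency concerns raised in Section 5.5.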

Even with errors in visual estimates, blind participants said that the system can help them know that “there was somebody [at least]” (P6). On the other hand, seven participants pointed out that there would be no guaranteed way for them to verify the visual estimates; that is, errors in visual estimates are not accessible to them. For example, P3 did not know if there was even an error because “[P3] can’t really recall that where it wasn’t right.” Even participants who experienced something odd mentioned that it was difficult for them to check whether that was an error or not. P4 commented on this issue by saying, “One time [GlAccess] goofed, I think, but I don’t know if that [was an error.] I don’t know if there were more people in the hallway.” Due to the inaccessibility of feedback verification, P10 mentioned being dependent on the system feedback, “Unless someone was there saying that listening to same thing I was listening to and tell me if it was right or wrong, I had to depend on it.” We suspect that these experiences led them to expect future assistive systems to provide more reliable visual estimates, as P3 commented, “[Future glasses need to] reassure that it’s accurate in telling you like distance [how far] people are.”

5.7. Participant Feedback on Teachable Passerby Recognition

Although blind participants did not experience personalizing their smart glasses in the study, they envisioned that such assistive technologies would be able to recognize their family and friends once they provide their photos to the device. For instance, P1 expected this teachable component by saying, “[Person recognition] means [that] I have to create a database of pictures. And I wouldn’t mind doing that if my friends wanted to.” Recognizing our lab members during the study seemed to help them see the benefits of recognizing people they know via smart glasses. P6 was excited about using this recognition feature to greet her family and friends by their name even “when they hadn’t spoken to [P6].” Also, P10 mentioned training smart glasses to find, “out of a group, a person that [P10 is] looking for.” P1 commented on sharing photos with other blind people to recognize each other through such smart glasses, saying “When I was at the American Council of the Blind convention, there were rooms full of blind people, [and] none of [us] knew who all was in the room. So wouldn’t it be cool if we all had each other’s pictures [to teach smart glasses about us]?”

6. DISCUSSION

Our exploratory findings from the user study lead to several implications for the design of future assistive systems supporting pedestrian detection, social interactions, and social distancing. We discuss those in this section to stimulate future work on assistive wearable cameras for blind people.

6.1. Implications regarding Accessing Proxemic Signals using Head-Mounted Cameras

Section 5.1 reveals that some camera frames from blind participants did not capture passersby’s face but other body parts (i.e., torso, arms, or legs), especially when they were near the passersby. Although some visual cues such as head pose and facial expressions [116] cannot be extracted from the other body parts, detecting those may help increase accessibility of someone’s presence [109, 112]. Also, we see that proximity sensors can enhance the detection of a person’s presence and distance as those sensors can provide depth information. In particular, such detection can help blind users to practice social distancing, which has posed unique challenges to people with visual impairments [31]. The sensor data however needs to be processed along with person detection, which often requires RGB data from cameras. Future work that focuses on more robust presence estimation for blind people may consider incorporating proximity sensors with cameras.

Regarding delivery of proxemic signals, it is important to note that blind people may have different spatial perception [22] and could thus interpret such proxemics feedback differently, especially if the feedback was provided with adjectives related to spatial perception, such as ‘far’ or ‘near’ (Section 5.5). Other types of audio feedback (e.g., sonification) or sensory stimulus (e.g., haptic) may overcome the limitations that the speech feedback has. On the other hand, blind participants’ experiences with our testbed may have been affected by its performance and thus could differ if an assistive system had more accurate performance or employed different types of feedback. Future work should consider investigating such a potential correlation.

6.2. Implications regarding the Form Factor

Several factors, such as blind people’s tendency to veer [39] and their behavior of scanning the environment when using smart glasses (Section 5.1), can change camera input on wearable cameras and thus may affect the performance of detecting proxemic signals. From our study, we observed that the head movement of blind people often led to excluding a passerby from camera frames of a head-worn camera. Although we did not observe blind participants’ veering tendency in our study, veering, which can vary across blind people [39], could change their camera aiming and consequently affect what is being captured. Also, as discussed in earlier work [22], blind people’s onset age affects the development of their spatial perception. Observing that our blind participants had different onset ages, we suspect that some differences in their spatial perception may explain their different scanning behaviors with smart glasses. Future work should investigate these topics further to enrich our community’s knowledge on these matters.

Moreover, using a wide-angle camera may help assistive systems to capture more visual information (e.g., a passerby coming from the side of a user) and tolerate some variations in blind people’s camera aiming with wearable cameras. The smart glasses in our user study, having only a front view with the limited horizontal range (64-degree angle), are unable to capture and detect people coming from the side or back of a blind user. As described in Table 4, some prior work has employed a wide-angle camera [96] or two cameras side by side [49] to get more visual data, especially for person detection. However, accessibility researchers and designers need to investigate which information requires more attention and how it should be delivered to blind users. Otherwise, blind users may be overwhelmed and often distracted by too much feedback (Section 5.5).
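To give a rough sense of what a horizontal field of view covers, the sketch below assumes an ideal pinhole camera pointing straight ahead and computes (i) the width of the visible strip at a given distance and (ii) whether a person at a given forward and lateral offset falls inside the view. The function names and example positions are hypothetical; the 64-degree value is the one stated above for the smart glasses in our study, and 120 degrees is used only as an example of a wider lens.

```python
import math


def visible_width_ft(distance_ft, horizontal_fov_deg):
    """Width of the scene covered at a given distance: 2 * d * tan(fov / 2)."""
    return 2 * distance_ft * math.tan(math.radians(horizontal_fov_deg) / 2)


def in_horizontal_view(forward_ft, lateral_ft, horizontal_fov_deg):
    """True if a point ahead of the camera lies within the horizontal FOV."""
    if forward_ft <= 0:
        return False  # beside or behind the camera plane
    bearing_deg = math.degrees(math.atan2(abs(lateral_ft), forward_ft))
    return bearing_deg <= horizontal_fov_deg / 2


print(round(visible_width_ft(6, 64), 2))   # strip width at 6 feet
print(in_horizontal_view(6, 3, 64))        # person 6 ft ahead, 3 ft to the side
print(in_horizontal_view(2, 3, 64))        # person 2 ft ahead, 3 ft to the side
print(in_horizontal_view(2, 3, 120))       # same position, wider lens
```

Under these assumptions, a person standing slightly ahead and to the side at conversational distance falls outside a 64-degree view but inside a 120-degree one, consistent with the observation that passersby next to a participant were often missing from the frames. Head rotation, which the sketch ignores, shifts the covered region further.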

Table 4:

Information about non-handheld cameras designed for helping users with visual impairments access visual surroundings.

| Camera Device | FOV | Camera Placement | Design Purpose |
|---|---|---|---|
| Horizon Smart Glasses | 120-degree horizontal | head-mounted | Aira (agent-support) [11] |
| OrCam MyEye | not available | head-mounted | face recognition & color identification [1] |
| Microsoft HoloLens 1 | 67-degree horizontal | head-mounted | person detection [96], stair navigation [114] |
| Ricoh Theta | 190-degree horizontalᵃ | head-mounted | person detection [96] |
| ZED™ 2K Stereo | 90-degree horizontal | suitcase-mounted | person detection [48] |
| Vuzix Blade | 64-degree horizontal | head-mounted | person detection [54] |
| RealSense D435 | 70-degree horizontal | suitcase-mounted | person detection [49]ᵇ |

ᵃ It creates a 360-degree panorama by stitching multiple photos.

ᵇ Two cameras were placed side by side to obtain a 135-degree horizontal FOV.

In addition, aiming with a wearable camera can vary by where the camera is positioned (e.g., a chest-mounted camera) as earlier work on wearable cameras for sighted people indicates that the position of a camera can lead to different visual characteristics of images [105]. In the context of person detection, prior work investigated assistive cameras for blind people in two different positions (i.e., either on a suitcase [48, 49] or on a user’s head [54, 96]). However, little work explores how camera positions can change the camera input while also affecting blind users’ mental mapping and computer vision models’ performances. We believe that it is important to study how blind people interact with cameras placed in different positions to explore design choices for assistive wearable cameras.

6.3. Implications regarding the Interactions with the System

Data-driven systems (e.g., machine learning models) inherently possess the possibility of generating errors due to several factors, such as data shifts [82] and model fragility [34]. Since the system that study participants experienced merely served as a testbed, it was naturally error prone (Section 5.2), which is largely true for any AI system, and consequently affected blind participants’ experiences (Section 5.6). In particular, blind participants pointed out the inaccessibility of error detection in visual estimates. To help blind users interpret feedback from such data-driven assistive systems effectively, it is imperative to tackle this issue (i.e., how to make such errors accessible to blind users). One simple solution may be to provide the confidence score of an estimation, but further research is needed to understand how blind people interpret this additional information (i.e., confidence score) and to design effective methods of delivering such information.
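One hypothetical way to surface a confidence score in speech is to map it to a verbal qualifier rather than reading out a number. The sketch below is only an illustration of that idea: the function name, the thresholds (0.8 and 0.5), and the wording are assumptions, and whether such phrasing helps blind users is exactly the open question raised above.

```python
def hedged_feedback(message: str, confidence: float) -> str:
    """Prefix a spoken message with a qualifier that reflects model confidence.

    Thresholds and wording are illustrative assumptions, not validated choices.
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= 0.8:
        return message                      # high confidence: no qualifier
    if confidence >= 0.5:
        return f"Probably: {message}"
    return f"Not sure, possibly: {message}"


print(hedged_feedback("one person, 10 feet ahead", 0.92))
print(hedged_feedback("one person, 10 feet ahead", 0.60))
print(hedged_feedback("one person, 10 feet ahead", 0.30))
```

Model confidence scores are not always well calibrated, so any such mapping would need to be checked against actual error rates before users are asked to rely on it.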

Furthermore, different feedback modalities are worth exploring. In this work, we provided visual estimates in speech, but blind participants sometimes found it too verbose and imagined that it would be difficult for them to pay attention to speech feedback in certain situations, e.g. when they are in noisy areas or talking to someone. To prevent their auditory sensory system from being overloaded, other sensory stimuli such as haptic can be employed to provide feedback [15, 90]. It is however important to understand what information each feedback modality can deliver to blind users and how effectively they would perceive the information.

6.4. Implications regarding Teachable Interfaces

Blind participants envisioned personalizing their smart glasses to recognize their family members and friends (Section 5.7). To provide this experience for users, it would be worth exploring teachable interfaces, where users provide their own data to teach their systems [46]. Our community has studied data-driven approaches in several applications (e.g., face recognition [116], object recognition [9, 47, 53], and sound recognition [44]) to investigate the potential and implications of using machine learning in assistive technologies. However, little work explores how blind users interact with teachable interfaces, especially for person recognition. Our future work will focus on this to learn opportunities and challenges of employing teachable interfaces in assistive technologies.

Moreover, in Section 5.5, blind participants suggested enabling future smart glasses to detect specific moments (e.g., sitting at a table or talking with someone) to control feedback delivery. We believe that egocentric activity recognition [59, 65, 107], a widely studied problem in the computer vision community, can help realize this experience. However, further investigation is required for adopting such models in assistive technologies, especially for blind users. For instance, one recent work proposed an egocentric activity recognition model assuming that input data inherently contains the user’s gaze motion [65]. However, that model may not work on data collected by blind people, since the assumption (i.e., eye gaze) only applies to a certain population (i.e., sighted people).

6.5. Implications regarding Hardware, Privacy Concerns, and Social Acceptance

There are still challenges related to hardware, privacy, and public acceptance, which keep wearable cameras from being employed for assistive technologies. For example, Aira ended their support for Horizon glasses in March 2020, due to hardware limitations [10]. Also, prior work investigated blind users’ privacy concerns over capturing their environments without being given access to what is being captured [8, 12], and social acceptance of assistive wearable cameras as the always-on cameras can capture other people without notification [7, 54, 80].

Beyond the high cost of real-time support from sighted people (e.g., Aira), as of now, there is no effective solution that can estimate the distance between blind users and other people in real time. Computer vision has the potential to provide this proxemic signal in real time on a user’s device. Avoiding recordings [54] and using privacy-preserving face recognition [25, 85] may help address some privacy issues, but more concerns may arise if assistive wearable cameras need to store visual data (even on the device itself) to recognize the users’ acquaintances. Future work should consider investigating associated privacy concerns and societal issues, as these factors can affect design choices for assistive wearable cameras.

7. CONCLUSION

In this paper, we explored the potential and implications of using a head-worn camera to help blind people access proxemics, such as the presence, distance, position, and head pose, of pedestrians. We built GlAccess, which focuses on detecting the head of a passerby to estimate proxemic signals. We collected and annotated camera frames and usage logs from ten blind participants, who walked in a corridor while wearing the smart glasses for pedestrian detection and interacting with a total of 80 passersby. Our analysis results show that their smart glasses tended to capture passersby’s head when they were more than 6 feet (2 meters) away from passersby. However, the rate of including the head dropped quickly as blind participants got closer to passersby, where we observed variations in their scanning behaviors (i.e., head movements). Blind participants shared that using smart glasses for pedestrian detection was easy since they did not have to manipulate camera aiming. Their qualitative feedback also led to several design implications for future assistive cameras to consider. In particular, we found that errors in visual estimates need to be accessible to blind users so that they can better interpret such outputs and preserve their autonomy. Our future work will explore this accessibility issue in several use cases where blind people can benefit from wearable cameras.

ACKNOWLEDGMENTS

We thank Tzu-Chia Yeh for her help in our work presentation and the anonymous reviewers for their constructive feedback on an earlier draft of our work. This work is supported by NIDILRR (#90REGE0008) and Shimizu Corporation.

Footnotes

1. Minimum distance suggested by the World Health Organization (WHO) [72].

2. Minimum distance suggested by the Centers for Disease Control and Prevention (CDC) in the United States [73].

3. The data were collected right before COVID-19 with a use case scenario of blind individuals initiating social interactions with sighted people in public spaces. Our team, co-led by a blind researcher, was annotating the data when the pandemic reached our shores. Thus, we felt it was imperative to expand our annotation and analysis to include distance thresholds recommended for social distancing. After all, both scenarios share common characteristics such as the need for proximity estimation and for initiating interactions to maintain distancing, i.e., the ‘Speak up’ strategy recommended by the LightHouse Guild [38].

4. The 6 feet (2 meters) threshold recommended by the CDC for social distancing was not communicated to blind participants in the study, as the study preceded the COVID-19 pandemic. However, this threshold was added later in the data annotation and analysis phases, as it may prove informative for future researchers working towards accessible social distancing systems.

5. The questionnaire based on [89], where −3 indicates the most negative, 0 neutral, and 3 the most positive, is available at https://iamlabumd.github.io/assets2021_lee

6. Such a high precision is not surprising since this detection task in our study was an imbalanced classification problem: there were two classes (presence and no presence), with presence representing the overwhelming majority of the data points since there was always a passerby coming towards the blind participants. Moreover, there were no visual elements in the study environment that could confuse the model (e.g., photos of people on the walls).

Contributor Information

Kyungjun Lee, University of Maryland, College Park, College Park, Maryland, USA.

Daisuke Sato, Carnegie Mellon University, Pittsburgh, Pennsylvania, USA.

Saki Asakawa, New York University, Brooklyn, New York, USA.

Chieko Asakawa, IBM Research, Carnegie Mellon University.

Hernisa Kacorri, University of Maryland, College Park, College Park, Maryland, USA.

REFERENCES

- [1].OrCam MyEye 2. 2021. Help people who are blind or partially sighted. https://www.orcam.com/en/

- [2].University of Maryland A. James Clark School of Engineering. 2020. Sci-Fi Social Distancing? https://eng.umd.edu/news/story/scifi-social-distancing?utm_source=Publicate&utm_medium=email&utm_content=sci-fi-social-distancing&utm_campaign=200911+-+EMAIL

- [3].Afonso Amandine, Blum Alan, Jacquemin Christian, Denis Michel, and Brian FG Katz. 2005. A study of spatial cognition in an immersive virtual audio environment: Comparing blind and blindfolded individuals. Georgia Institute of Technology.

- [4].Agarwal R, Ladha N, Agarwal M, Majee KK, Das A, Kumar S, Rai SK, Singh AK, Nayak S, Dey S, Dey R, and Saha HN. 2017. Low cost ultrasonic smart glasses for blind. In 2017 8th IEEE Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON). 210–213.

- [5].Ahmed Subeida, Balasubramanian Harshadha, Stumpf Simone, Morrison Cecily, Sellen Abigail, and Grayson Martin. 2020. Investigating the Intelligibility of a Computer Vision System for Blind Users. In Proceedings of the 25th International Conference on Intelligent User Interfaces (Cagliari, Italy) (IUI ’20). Association for Computing Machinery, New York, NY, USA, 419–429. https://doi.org/10.1145/3377325.3377508

- [6].Ahmed Tousif, Hoyle Roberto, Connelly Kay, Crandall David, and Kapadia Apu. 2015. Privacy concerns and behaviors of people with visual impairments. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems. 3523–3532.

- [7].Ahmed Tousif, Kapadia Apu, Potluri Venkatesh, and Swaminathan Manohar. 2018. Up to a Limit? Privacy Concerns of Bystanders and Their Willingness to Share Additional Information with Visually Impaired Users of Assistive Technologies. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies 2, 3 (2018), 1–27.

- [8].Ahmed Tousif, Shaffer Patrick, Connelly Kay, Crandall David, and Kapadia Apu. 2016. Addressing physical safety, security, and privacy for people with visual impairments. In Twelfth Symposium on Usable Privacy and Security (SOUPS 2016). 341–354.

- [9].Ahmetovic Dragan, Sato Daisuke, Oh Uran, Ishihara Tatsuya, Kitani Kris, and Asakawa Chieko. 2020. Recog: Supporting blind people in recognizing personal objects. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems. 1–12.

- [10].Aira. 2020. Aira Ends Support for Horizon. https://aira.io/march-aira-update

- [11].Aira. 2021. Connecting you to real people instantly to simplify daily life. https://aira.io/

- [12].Akter Taslima, Ahmed Tousif, Kapadia Apu, and Swami Manohar Swaminathan. 2020. Privacy Considerations of the Visually Impaired with Camera Based Assistive Technologies: Misrepresentation, Impropriety, and Fairness. In The 22nd International ACM SIGACCESS Conference on Computers and Accessibility. 1–14.

- [13].Akter Taslima, Dosono Bryan, Ahmed Tousif, Kapadia Apu, and Semaan Bryan. 2020. “I am uncomfortable sharing what I can’t see”: Privacy Concerns of the Visually Impaired with Camera Based Assistive Applications. In 29th USENIX Security Symposium (USENIX Security 20).

- [14].Albrecht Gary L, Walker Vivian G, and Levy Judith A. 1982. Social distance from the stigmatized: A test of two theories. Social Science & Medicine 16, 14 (1982), 1319–1327.

- [15].Azenkot Shiri, Ladner Richard E, and Wobbrock Jacob O. 2011. Smartphone haptic feedback for nonvisual wayfinding. In The proceedings of the 13th international ACM SIGACCESS conference on Computers and accessibility. 281–282.

- [16].Balata Jan, Mikovec Zdenek, and Neoproud Lukas. 2015. Blindcamera: Central and golden-ratio composition for blind photographers. In Proceedings of the Mulitimedia, Interaction, Design and Innnovation. 1–8.

- [17].Bigham Jeffrey P, Jayant Chandrika, Ji Hanjie, Little Greg, Miller Andrew, Miller Robert C, Miller Robin, Tatarowicz Aubrey, White Brandyn, White Samual, et al. 2010. VizWiz: nearly real-time answers to visual questions. In Proceedings of the 23nd annual ACM symposium on User interface software and technology. ACM, 333–342.

- [18].Vuzix Blade. 2021. Augment Reality (AR) Smart Glasses for the Consumer. https://www.vuzix.com/products/blade-smart-glasses

- [19].Branham Stacy M., Abdolrahmani Ali, Easley William, Scheuerman Morgan, Ronquillo Erick, and Hurst Amy. 2017. “Is Someone There? Do They Have a Gun”: How Visual Information about Others Can Improve Personal Safety Management for Blind Individuals. In Proceedings of the 19th International ACM SIGACCESS Conference on Computers and Accessibility (Baltimore, Maryland, USA) (ASSETS ’17). Association for Computing Machinery, New York, NY, USA, 260–269. https://doi.org/10.1145/3132525.3132534

- [20].Braun Virginia and Clarke Victoria. 2006. Using thematic analysis in psychology. Qualitative research in psychology 3, 2 (2006), 77–101.

- [21].Lookout by Google. 2021. Use Lookout to explore your surroundings. https://support.google.com/accessibility/android/answer/9031274

- [22].Cleaves Wallace T and Royal Russell W. 1979. Spatial memory for configurations by congenitally blind, late blind, and sighted adults. Journal of Visual Impairment & Blindness (1979).

- [23].Digit-Eyes. 2021. Identify and organize your world. http://www.digit-eyes.com/

- [24].Envision. 2021. Hear what you want to see. https://www.letsenvision.com/

- [25].Erkin Zekeriya, Franz Martin, Guajardo Jorge, Katzenbeisser Stefan, Lagendijk Inald, and Toft Tomas. 2009. Privacy-preserving face recognition. In International symposium on privacy enhancing technologies symposium. Springer, 235–253.

- [26].eSight. 2021. Electronic glasses for the legally blind. https://esighteyewear.com/

- [27].Be My Eyes. 2021. Bringing sight to blind and low-vision people. https://www.bemyeyes.com/

- [28].Feierabend Martina, Karnath Hans-Otto, and Lewald Jörg. 2019. Auditory Space Perception in the Blind: Horizontal Sound Localization in Acoustically Simple and Complex Situations. Perception 48, 11 (2019), 1039–1057.

- [29].Fiannaca Alexander, Apostolopoulous Ilias, and Folmer Eelke. 2014. Headlock: A Wearable Navigation Aid That Helps Blind Cane Users Traverse Large Open Spaces. In Proceedings of the 16th International ACM SIGACCESS Conference on Computers and Accessibility (Rochester, New York, USA) (ASSETS ’14). Association for Computing Machinery, New York, NY, USA, 19–26. https://doi.org/10.1145/2661334.2661453

- [30].Flores Arturo, Christiansen Eric, Kriegman David, and Belongie Serge. 2013. Camera distance from face images. In International Symposium on Visual Computing. Springer, 513–522.

- [31].American Foundation for the Blind. 2020. Flatten Inaccessibility Study: Executive Summary. https://www.afb.org/research-and-initiatives/flatten-inaccessibility-survey/executive-summary

- [32].Gade Lakshmi, Krishna Sreekar, and Panchanathan Sethuraman. 2009. Person localization using a wearable camera towards enhancing social interactions for individuals with visual impairment. In Proceedings of the 1st ACM SIGMM international workshop on Media studies and implementations that help improving access to disabled users. 53–62.

- [33].Ghodgaonkar Isha, Chakraborty Subhankar, Banna Vishnu, Allcroft Shane, Metwaly Mohammed, Bordwell Fischer, Kimura Kohsuke, Zhao Xinxin, Goel Abhinav, Tung Caleb, et al. 2020. Analyzing Worldwide Social Distancing through Large-Scale Computer Vision. arXiv preprint arXiv:2008.12363 (2020).

- [34].Goodfellow Ian J, Shlens Jonathon, and Szegedy Christian. 2014. Explaining and harnessing adversarial examples. arXiv preprint arXiv:1412.6572 (2014).

- [35].Gourier Nicolas, Hall Daniela, and Crowley James L. 2004. Estimating face orientation from robust detection of salient facial features. In ICPR International Workshop on Visual Observation of Deictic Gestures. Citeseer.

- [36].Grayson Martin, Thieme Anja, Marques Rita, Massiceti Daniela, Cutrell Ed, and Morrison Cecily. 2020. A Dynamic AI System for Extending the Capabilities of Blind People. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA) (CHI EA ’20). Association for Computing Machinery, New York, NY, USA, 1–4. https://doi.org/10.1145/3334480.3383142

- [37].Grayson Martin, Thieme Anja, Marques Rita, Massiceti Daniela, Cutrell Ed, and Morrison Cecily. 2020. A dynamic AI system for extending the capabilities of blind people. In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems. 1–4.

- [38].Lighthouse Guild. 2020. How People Who Are Blind or Have Low Vision Can Safely Practice Social Distancing During COVID-19. https://www.lighthouseguild.org/newsroom/how-people-who-are-blind-or-have-low-vision-can-safely-practice-social-distancing-during-covid-19

- [39].Guth David and LaDuke Robert. 1994. The veering tendency of blind pedestrians: An analysis of the problem and literature review. Journal of Visual Impairment & Blindness (1994).

- [40].Hayduk Leslie A and Mainprize Steven. 1980. Personal space of the blind. Social Psychology Quarterly (1980), 216–223.

- [41].Horsley David and Przybyla Richard J. 2020. Ultrasonic Range Sensors Bring Precision to Social-Distance Monitoring and Contact Tracing. https://www.electronicdesign.com/industrial-automation/article/21139839/ultrasonic-range-sensors-bring-precision-to-socialdistance-monitoring-and-contact-tracing

- [42].Hwang Alex D and Peli Eli. 2014. An augmented-reality edge enhancement application for Google Glass. Optometry and vision science: official publication of the American Academy of Optometry 91, 8 (2014), 1021.

- [43].Kwon Hyukseong, Yoon Youngrock, Park Jae Byung, and Kak AC. 2005. Person Tracking with a Mobile Robot using Two Uncalibrated Independently Moving Cameras. In Proceedings of the 2005 IEEE International Conference on Robotics and Automation. 2877–2883.

- [44].Jain Dhruv, Ngo Hung, Patel Pratyush, Goodman Steven, Findlater Leah, and Froehlich Jon. 2020. SoundWatch: Exploring Smartwatch-Based Deep Learning Approaches to Support Sound Awareness for Deaf and Hard of Hearing Users. In The 22nd International ACM SIGACCESS Conference on Computers and Accessibility (Virtual Event, Greece) (ASSETS ’20). Association for Computing Machinery, New York, NY, USA, Article 30, 13 pages. https://doi.org/10.1145/3373625.3416991

- [45].Jayant Chandrika, Ji Hanjie, White Samuel, and Bigham Jeffrey P. 2011. Supporting blind photography. In The proceedings of the 13th international ACM SIGACCESS conference on Computers and accessibility. ACM, 203–210.

- [46].Kacorri Hernisa. 2017. Teachable Machines for Accessibility. SIGACCESS Access. Comput. 119 (Nov. 2017), 10–18. https://doi.org/10.1145/3167902.3167904

- [47].Kacorri Hernisa, Kitani Kris M, Bigham Jeffrey P, and Asakawa Chieko. 2017. People with visual impairment training personal object recognizers: Feasibility and challenges. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems. ACM, 5839–5849.

- [48].Kayukawa Seita, Higuchi Keita, Guerreiro João, Morishima Shigeo, Sato Yoichi, Kitani Kris, and Asakawa Chieko. 2019. BBeep: A Sonic Collision Avoidance System for Blind Travellers and Nearby Pedestrians. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (Glasgow, Scotland Uk) (CHI ’19). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3290605.3300282

- [49].Kayukawa Seita, Ishihara Tatsuya, Takagi Hironobu, Morishima Shigeo, and Asakawa Chieko. 2020. Guiding Blind Pedestrians in Public Spaces by Understanding Walking Behavior of Nearby Pedestrians. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 4, 3, Article 85 (Sept. 2020), 22 pages. https://doi.org/10.1145/3411825

- [50].Kendon Adam. 1990. Conducting interaction: Patterns of behavior in focused encounters. Vol. 7. CUP Archive.

- [51].Krishna Sreekar, Little Greg, Black John, and Panchanathan Sethuraman. 2005. A wearable face recognition system for individuals with visual impairments. In Proceedings of the 7th international ACM SIGACCESS conference on Computers and accessibility. ACM, 106–113.

- [52].Lee Kyungjun, Hong Jonggi, Pimento Simone, Jarjue Ebrima, and Kacorri Hernisa. 2019. Revisiting blind photography in the context of teachable object recognizers. In The 21st International ACM SIGACCESS Conference on Computers and Accessibility. 83–95.

- [53].Lee Kyungjun and Kacorri Hernisa. 2019. Hands Holding Clues for Object Recognition in Teachable Machines. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems. 1–12.

- [54].Lee Kyungjun, Sato Daisuke, Asakawa Saki, Kacorri Hernisa, and Asakawa Chieko. 2020. Pedestrian Detection with Wearable Cameras for the Blind: A Two-Way Perspective. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (Honolulu, HI, USA) (CHI ’20). Association for Computing Machinery, New York, NY, USA, 1–12. https://doi.org/10.1145/3313831.3376398

- [55].Li Bing, Munoz J Pablo, Rong Xuejian, Xiao Jizhong, Tian Yingli, and Arditi Aries. 2016. ISANA: wearable context-aware indoor assistive navigation with obstacle avoidance for the blind. In European Conference on Computer Vision. Springer, 448–462.

- [56].Liu Hong, Wang Jun, Wang Xiangdong, and Qian Yueliang. 2015. iSee: obstacle detection and feedback system for the blind. In Adjunct Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2015 ACM International Symposium on Wearable Computers. 197–200.

- [57].LookTel. 2021. LookTel Money Reader. http://www.looktel.com/moneyreader