Abstract

With the advances in scientific foundations and technological implementations, optical metrology has become versatile problem-solving backbones in manufacturing, fundamental research, and engineering applications, such as quality control, nondestructive testing, experimental mechanics, and biomedicine. In recent years, deep learning, a subfield of machine learning, is emerging as a powerful tool to address problems by learning from data, largely driven by the availability of massive datasets, enhanced computational power, fast data storage, and novel training algorithms for the deep neural network. It is currently promoting increased interests and gaining extensive attention for its utilization in the field of optical metrology. Unlike the traditional âphysics-basedâ approach, deep-learning-enabled optical metrology is a kind of âdata-drivenâ approach, which has already provided numerous alternative solutions to many challenging problems in this field with better performances. In this review, we present an overview of the current status and the latest progress of deep-learning technologies in the field of optical metrology. We first briefly introduce both traditional image-processing algorithms in optical metrology and the basic concepts of deep learning, followed by a comprehensive review of its applications in various optical metrology tasks, such as fringe denoising, phase retrieval, phase unwrapping, subset correlation, and error compensation. The open challenges faced by the current deep-learning approach in optical metrology are then discussed. Finally, the directions for future research are outlined.

Similar content being viewed by others

Introduction

Optical metrology is the science and technology of making measurements with the use of light as standards or information carriers1,2,3. Light is characterized by its fundamental properties, namely, amplitude, phase, wavelength, direction, frequency, speed, polarization, and coherence. In optical metrology, these fundamental properties of light are ingeniously utilized as information carriers of a measurand, enabling a wide range of optical metrology tools that allow the measurement of a wide range of subjects4,5,6. For example, optical interferometry takes advantage of the wavelength of light as a precise dividing marker of length. The speed of light defines the international standard of length, the meter, as the length traveled in vacuum during a time interval of 1/299,792,458 of a second7. As a result, optical metrology is being increasingly adopted in many applications where reliable data about the distance, displacement, dimensions, shape, roughness, surface properties, strain, and stress state of the object under test are required8,9,10. Optical metrology is a broad and interdisciplinary field relating to diverse disciplines such as photomechanics, optical imaging, and computer vision. There is no strict boundary between those fields, and in fact, the term âoptical metrologyâ is often interchangeably used with âoptical measurementâ, in which achieving higher precision, sensitivity, repeatability, and speed is always a priority11,12.

There are a few inventions that revolutionized optical metrology. The first is the invention of laser13,14. The advent of laser interferometry could be traced back to experiments conducted independently in 1962 by Denisyuk15 and Leith and Upatnix16 with the objective of marrying coherent light produced by lasers with Gaborâs holography method17. The use of lasers as a light source in optical metrology marked the first time that such highly controlled light became available as a physical medium to measure the physical properties of samples, opening up new possibilities for optical metrology. The second revolution was initiated with the invention of charged coupled device (CCD) cameras in 1969, which replaced the earlier photographic emulsions by virtue of recording optical intensity signals from the measurand digitally8. The use of the CCD camera as a recording device in optical metrology represented another important milestone: the compatibility of light with electricity, i.e., âlightâ can be converted into âelectrical quantity (current, voltage, etc.)â. This means that the computational storage, access, analysis, and transmission of captured data are easily attainable, leading to the âdigital transitionâ of optical metrology. Computer-based signal processing tools were introduced to automate the quantitative determination of optical metrology data, eliminating the inconvenience associated with the manual, labor-intensive, time-consuming evaluation of fringe patterns18,19,20. Methods such as digital interferometry21, digital holography22, and digital image correlation (DIC)23 have become state of the art by now.

With the digital transition, image processing plays an essential role in optical metrology for the purpose of converting the observed measurements (generally displayed in the form of deformed fringe/speckle patterns) into the desired attributes (such as geometric coordinates, displacements, strain, refractive index, and others) of an object under study. Such information-recovery process is similar to those of computer vision and computational imaging, presenting as an inverse problem that is often ill-posed with respect to the existence, uniqueness, and stability of the solution24,25,26,27. Tremendous progress has been achieved in terms of accurate mathematical modeling (statistical models of noise and the observational data)28, regularization techniques29, numerical methods, and their efficient implementations30. For the field of optical metrology, however, the situation becomes quite different due to the fact that the optical measurements are frequently carried out in a highly controlled environment. Instead of explicitly interpreting optical metrology tasks from the perspective of solving inverse problems (based on a formal optimization framework), mainstream scientists in optical metrology prefer to bypass the ill-posedness and simplify the problem by means of active strategies, such as sample manipulation, system adjustment, and multiple acquisitions31. A typical example is the phase-shifting technique32, which sacrifices the time and effort of capturing multiple fringe patterns to exchange for a deterministic and straightforward solution. Under such circumstances, the phase retrieval problem is well-posed or even over-determined (when the phase-shifting step is larger than 3), and employing more evolved algorithms, such as compressed sensing33 and nonconvex (low-rank) regularization34 seem redundant and unnecessary, especially as they fail to demonstrate clear advantages over classical ones in terms of accuracy, adaptability, speed, and, more importantly, ease-of-use. This gives us the key question and motivation of this review paper: whether machine learning will be the driving force in optical metrology not only provides superior solutions to the growing new challenges but also tolerates imperfect measurement conditions with the least efforts, such as additive noise, phase-shifting error, intensity nonlinearity, motion, and vibration.

In the past few years, we have indeed witnessed the rapid progress on high-level artificial intelligence (AI), where deep representations based on convolutional and recurrent neural network models are learned directly from the captured data to solve many tasks in computer vision, computational imaging, and computer-aided diagnosis with unprecedented performance35,36,37. The early framework for deep learning was established on artificial neural networks (ANNs) in the 1980s38, yet only recently the real impact of deep learning became significant due to the advent of fast graphics processing units (GPUs) and the availability of large datasets39. In particular, deep learning has revolutionized the computer vision community, introducing non-traditional and effective solutions to numerous challenging problems such as object detection and recognition40, object segmentation41, pedestrian detection42, image super-resolution43, as well as medical image-related applications44. Similarly, in computational imaging, deep learning has led to rapid growth in algorithms and methods for solving a variety of ill-posed inverse computational imaging problems45, such as super-resolution microscopy46, lensless phase imaging47, computational ghost imaging48, and image through scattering media49. In this context, researchers in optical metrology have also made significant explorations in this regard with very promising results within just a few short years, as evidenced by the ever-increasing and the respectable number of publications50,51,52,53,54,55. Meanwhile, those research works are scattered rather than systematic, which gives us the second motivation to provide a comprehensive review to understand their principles, implementations, advantages, applications, and challenges. It should be noted that optical metrology covers a wide range of methods and applications today. It would be beyond the scope of this review to discuss all relevant technologies and trends. We, therefore, restrict our focus to phase/correlation measurement techniques, such as interferometry, holography, fringe projection, and DIC. Although phase retrieval and wave-field sensing technologies, such as defocus variation (GerchbergâSaxtonâFienup-type methods56,57), transport of intensity equation (TIE)58,59, aperture modulation60, ptychography61,62, and wavefront sensing (e.g., ShackâHartmann63, Pyramid64, and computational shear interferometry65), has been recently introduced to optical metrology66,67,68, they may be more appropriately placed in the field of âcomputational imagingâ. The reader is referred to the earlier review by Barbastathis et al.45 for more detailed information on this topic. It is also worth mentioning that (passive) stereovision, which extracts depth information from stereo images, is an important branch of photogrammetry that has been extensively studied by the computer vision community. Although stereovision techniques do not strictly fall into the category of optical metrology, due to the fact that many ideas and algorithms in DIC and fringe projection were âborrowedâ from stereovision, they are also included in this review.

The remainder of this review is organized as follows. We start by summarizing the relevant foundations and image formation models of different optical metrology approaches, which are generally required as a priori knowledge in conventional optical metrology methods. Next, we present a general hierarchy of the image-processing algorithms that are most commonly used in conventional optical metrology in the âImage processing in optical metrologyâ section. After a brief introduction to the history and basic concept of deep learning, we recapitulate the advantages of using deep learning in optical metrology tasks by interpreting the concept as an optimization problem. We then present a recollection of the deep learning methods that have been proposed in optical metrology, suggesting the pervasive penetration of deep learning in almost all aspects of the image-processing hierarchy. The âChallengesâ section discusses both technical and implementation challenges faced by the current deep-learning approach in optical metrology. In the âFuture directionsâ section, we give our outlook for the prospects for deep learning in optical metrology. Finally, conclusions and closing remarks are given in the âConclusionsâ section.

Image formation in optical metrology

Optical metrology methods often form images (e.g., fringe/speckle patterns) for processing. Thus image formation is essential to reconstruct various quantities. In most interferometric metrological methods, the image is formed by the coherent superposition of the object and reference beams. As a result, the raw intensity across the object is modulated by a harmonic function, resulting in the bright and dark contrasts, known as fringe patterns. A typical fringe pattern can be written as18,19

where (x, y) refers to the spatial coordinates along the horizontal and vertical directions, A(x, y) is the background intensity, B(x, y) is the fringe amplitude, Ï(x, y) is the phase distribution. In most cases, phase is the primary quantity of the fringe pattern to be retrieved as it is related to the final object quantities of interest, such as surface shape, mechanical displacement, 3D coordinates, and their derivations. The related techniques include classical interferometry, photoelasticity, holographical interferometry, digital holography, etc. On a different note, the fringe patterns can also be created noninterferometrically by overlapping of two periodic gratings as in geometric moiré, or incoherent projection of structured patterns onto the object surface as in fringe projection profilometry (FPP)/deflectometry. As summarized in Fig. 1, though the final fringe patterns obtained in all forms of fringe-based techniques discussed herein are similar in form, the physics behind the image formation process and the meanings of the fringe parameters are different. In DIC, the measured intensity images are speckle patterns of the specimen surface before and after deformation,

where \(\left( {D_x(x,y),D_y(x,y)} \right)\) refers to the displacement vector-field mapping from the undeformed/reference pattern Ir(x, y) to the deformed one Id(x, y). It directly provides full-field displacements and strain distributions of the sample surface. The DIC technique can also be combined with binocular stereovision or stereophotogrammetry to recover depth and out-of-plane deformation of the surface from the displacement field (so-called disparity) by exploiting the unique textures present in two or more images of the object taken from different viewpoints. The image formation processes for typical optical metrology methods are briefly described as follows.

-

(1)

Classical interferometry: In classical interferometry, the fringe pattern is formed by superimposition of two smooth coherent wavefronts, one of which is typically a flat or spherical reference wavefront and the other a distorted wavefront formed and directed by optical components69,70 (Fig. 1a). The phase of the fringe pattern reflects the difference between the ideal reference wavefront and object wavefront. Typical examples of classical interferometry include the use of configurations such as the Michelson, Fizeau, Twyman Green, and Mach-Zehnder interferometers to characterize the surface, aberration, or roughness of optical components with high accuracy, of the order of a fraction of the wavelength.

-

(2)

Photoelasticity: Photoelasticity is a nondestructive, full-field, optical metrology technique for measuring the stress developed in transparent objects under loading71,72. Photoelasticity is based on an optomechanical property, so-called âdouble refractionâ or âbirefringenceâ observed in many transparent polymers. Combined with two circular polarizers (linear polarizer coupled with quarter waveplate) and illuminated with a conventional light source, a loaded photoelastic sample (or photoelastic coating applied to an ordinary sample) can produce fringe patterns whose phases are associated with the difference between the principal stresses in a plane perpendicular to the light propagation direction73 (Fig. 1b).

-

(3)

Geometric moiré/Moiré interferometry: In optical metrology, the moiré technique is defined as the utilization of the moiré phenomenon to measure shape, deformation, or displacements of surfaces74,75. A moiré pattern is formed by the superposition of two periodic or quasi-periodic gratings. One of these gratings is called reference grating, and the other one is object grating mounted or engraved on the surface to be studied, which is subjected to distortions induced by surface changes. For in-plane displacement and strain measurements, moiré technology has evolved from low-sensitivity geometric moiré75,76,77 to high-sensitivity moiré interferometry75,78. In moiré interferometry, two collimated coherent beams interfere to produce a virtual reference grating with high frequencies, which interacts with the object grating to create the moiré pattern with fringes representing subwavelength in-plane displacements per contour (Fig. 1c).

-

(4)

Holographic interferometry: Holography, invented by Gabor17 in the 1940âs, is a technique that records an interference pattern and uses diffraction to reproduce a wavefront, resulting in a 3D image that still has the depth, parallax, and other properties of the original scene. The principle of holography can also be utilized as an optical metrology tool. In holographic interferometry, a wavefront is first stored in the hologram and later interferometrically compared with another, producing fringe patterns that yield quantitative information about the object surface deriving these two wavefronts79,80. This comparison can be made in three different ways that constitute the basic approaches of holographic interferometry: real-time81, double-exposure82, and time-average holographic interferometry83,84 (Fig. 1d), allowing for both qualitative visualization and quantitative measurement of real-time deformation and perturbation, changes of the state between two specific time points, and vibration mode and amplitude, respectively.

-

(5)

Digital holography: Digital holography utilizes a digital camera (CMOS or CCD) to record the hologram produced by the interference between a reference wave and an object wave emanating from the sample85,86 (Fig. 1e). Unlike classical interferometry, the sample may not be precisely in-focus and can even be recorded without using any imaging lenses. The numerical propagation using Fresnel transform or angular spectrum algorithm enables digital refocusing at any depths of the sample without physically moving it. In addition, digital holography also provides an alternative and much simpler way to realize double-exposure87 and time-averaged holographic interferometry88,89, without additional benefits of quantitative evaluation of holographic interferograms and flexible phase-aberration compensation86,90.

-

(6)

Electronic speckle pattern interferometry (ESPI): In ESPI, the tested object generally has an optically rough surface. When illuminated by a coherent laser beam, it will create a speckle pattern with random phase, amplitude, and intensity91,92. If the object is displaced or deformed, the object-to-image distance will change, and the phase of the speckle pattern will change accordingly. In ESPI, two speckle patterns are acquired one each for the undeformed and deformed states, by double exposure, and the absolute difference between these two deformed patterns results in the form of fringes superimposed on the speckle pattern where each fringe contour normally represents a displacement of half a wavelength (Fig. 1f).

-

(7)

Electronic speckle shearing interferometry (shearography): Electronic speckle shearing interferometry, commonly known as shearography, is an optical measurement technique similar to ESPI. However, instead of using a separate known reference beam, shearography uses the test object itself as the reference; and the interference pattern is created by two sheared speckle fields originated from the light scattered by the surface of the object under test93,94. In shearography, the phase encoded in the fringe pattern depicts the derivatives of the surface displacements, i.e., to the strain developed on the object surface (Fig. 1g). Consequently, the anomalies or defects on the surface of the object can be revealed more prominently, rendering shearography one of the most powerful tools for nondestructive testing applications.

-

(8)

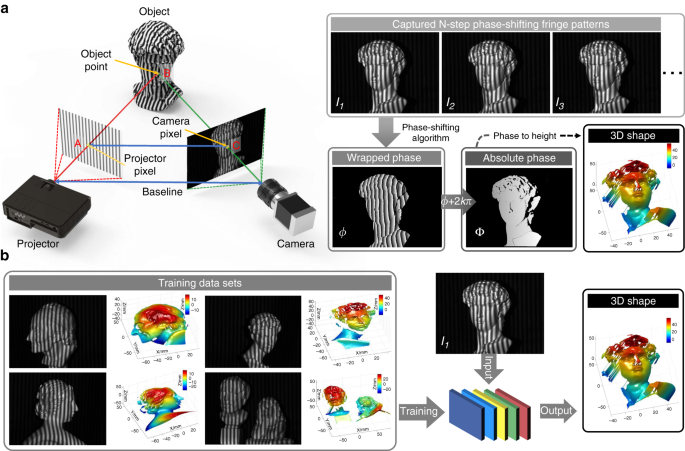

Fringe projection profilometry/deflectometry: Fringe projection is a widely used noninterferometic optical metrology technique for measuring the topography of an object at a certain angle between the observation and the projection point95,96. The sinusoidal pattern in fringe projection techniques is generally incoherently formed by a digital video projector and directly projected onto the object surface. The corresponding distorted fringe pattern is recorded by a digital camera. The average intensity and intensity modulation of the captured fringe pattern are associated with the surface reflectivity and ambient illuminations, and the phase is associated with the surface height32 (Fig. 1h). Deflectometry is another structured light technique similar to FPP, but instead of being produced by a projector, similar types of fringe patterns are displayed on a planar screen and distorted by the reflective (mirror-like) test surface97,98. The phase measured in deflectometry is directly sensitive to the surface slope (similar to shearography), so it is more effective for detecting shape defects99,100.

-

(9)

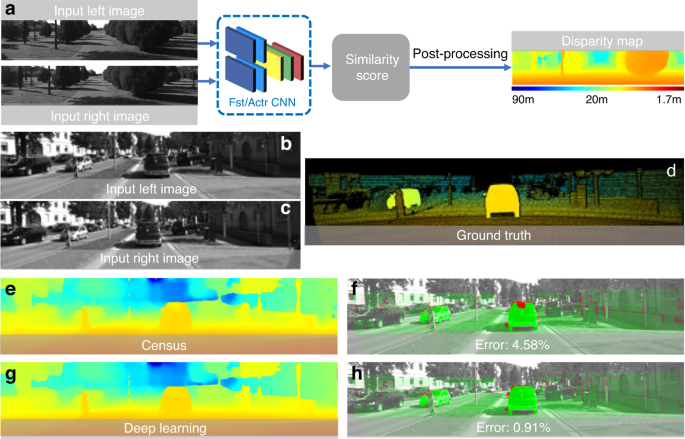

Digital image correction (DIC)/stereovision: DIC is another important noninterferometic optical metrology method that employs image correlation techniques for measuring full-field shape, displacement, and strains of an object surface23,101,102. Generally, the object surface should have a random intensity distribution (i.e., a random speckle pattern), which distorts together with the sample surface as a carrier of deformation information. Images of the object at different loadings are captured with one (2D-DIC)23, or two synchronized cameras (3D-DIC)103, and then these images are analyzed with correlation-based matching (tracking or registration) to extract full-field displacement and strain distributions (Fig. 1i). Unlike 2D-DIC that is limited to in-plane deformation measurement of nominal planar objects, 3D-DIC, also known as stereo-DIC, allows for the measurement of 3D displacements (both in-plane and out-of-plane) for both planar and curved surfaces104,105. 3D-DIC is inspired by binocular stereovision or stereophotogrammetry in the computer vision community, which recovers the 3D coordinates by finding pixel correspondence (i.e., disparity) of unique features that exist in two or more images of the object taken from different points of view106,107. Nevertheless, unlike DIC, in which the displacement vector can be along both x and y directions, in stereophotogrammetry, after epipolar rectification, disparities between the images are along the x direction only108.

a Classical interferometry. b Photoelasticity. c Geometric moiré and moiré interferometry. d Holographic interferometry. e Digital holography. f Electronic speckle shearing interferometry (ESPI). g Shearography. h Fringe projection profilometry (FPP) and deflectometry. i Digital image correlation (DIC) and stereovision

Image processing in optical metrology

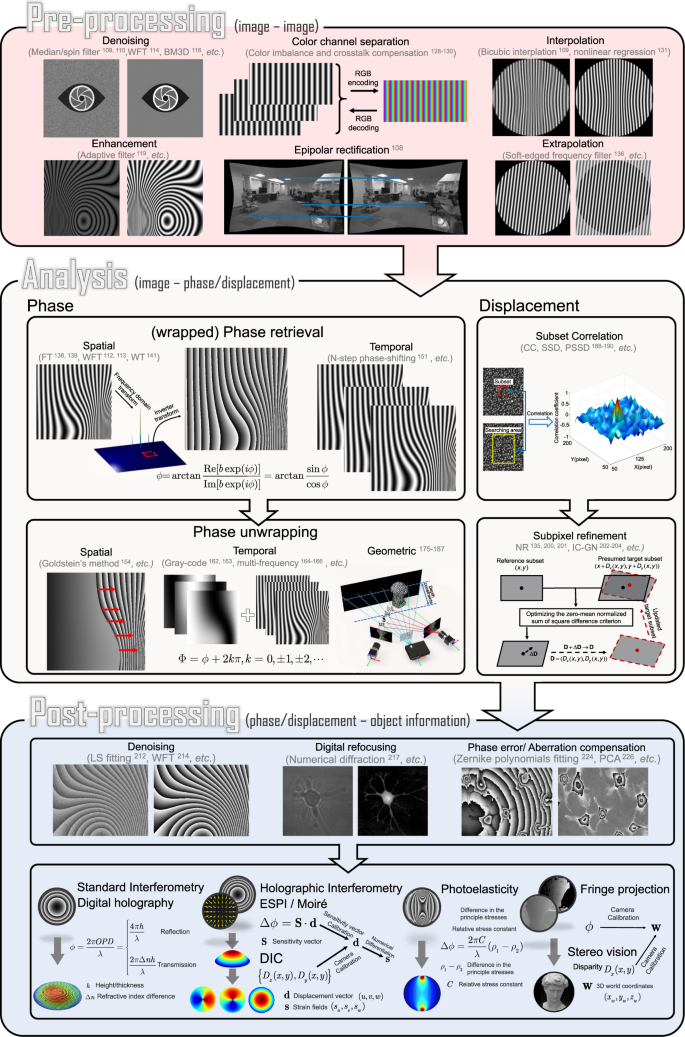

The elementary task of digital image processing in optical metrology can be defined as the conversion of the captured raw intensity image(s) into the desired object quantities taking into account the physical model of the intensity distribution describing the image formation process. In most cases, image processing in optical metrology is not a one-step procedure, and a logical hierarchy of image processing steps should be accomplished. As illustrated in Fig. 2, the image-processing hierarchy typically encompasses three main steps, pre-processing, analysis, and postprocessing, each of which includes a series of mapping functions that are cascaded to form a pipeline structure. For each operation, the corresponding f is an operator that transforms the image-like input into an output of corresponding (possibly resampled) spatial dimensions. Figure 3 shows the big picture of the image-processing hierarchy with various types of algorithms distributed in different layers. Next, we will zoom in one level deeper on each of the hierarchical steps.

The pipeline of a typical optical metrology method (e.g., FPP) encompasses a sequence of distinct operations (algorithms) to process and analyze the image data, which can be further categorized into three main steps: pre-processing (e.g., denoising, image enhancement), analysis (e.g., phase demodulation, phase unwrapping), and postprocessing (e.g., phase-depth mapping)

Image processing in optical metrology is not a one-step procedure. Depending on the purpose of the evaluation, a logical hierarchy of processing steps should be implemented before the desired information can be extracted from the image. In general, the image processing architecture in optical metrology consists of three main steps: pre-processing, analysis, and post-processing.

Pre-processing

The purpose of pre-processing is to assess the quality of the image data and improve the data quality by suppressing or minimizing unwanted disturbances (noise, aliasing, geometric distortions, etc.) before being fed to the following image analysis stage. It takes place at the lowest level (so-called iconic level) of image processing âthe input and output of the corresponding mapping function(s) are both intensity images, i.e., \(f_{anal}:I \to I^\prime\). Representative image pre-processing algorithms in optical metrology includes but not limited to:

-

Denoising: In optical metrology, noise in captured raw intensity data has several sources that are related to the electronic noise of photodetectors and the coherent noise (so-called speckle). Typical numerical approaches to noise reduction include median filter109, spin filter110, anisotropic diffusion111, coherence diffusion112, Wavelet113, windowed Fourier transform (WFT)114,115, block matching 3D (BM3D)116, etc. For more detailed information and comparisons of these algorithms, the reader may refer to the reviews by Kulkarnia and Rastogi117 and Bianco et al.118.

-

Enhancement: Image enhancement is a crucial pre-processing step in intensity-based fringe analysis approaches, such as fringe tracking or skeletonizing. Referring to the intensity model, the fringe pattern may still be disturbed by locally varying background and intensity modulation after denoising. Several algorithms have been developed for fringe pattern enhancement, e.g., adaptive filter119, bidimensional empirical mode decomposition120,121, and dual-tree complex wavelet transform122.

-

Color channel separation: Because a Bayer color sensor-camera captures three monochromatic (red, green, and blue) images at once, color multiplexing techniques are often employed in optical metrology to speed up the image acquisition process123,124,125,126,127. However, the separation of three color channels is not so straightforward due to the coupling and imbalance among the three color channels. Many cross-talk-matrix-based color channel calibration and leakage correction algorithms have been proposed to minimize such side effects128,129,130.

-

Image registration and rectification: Image registration and rectification are aimed at aligning two or more images of the same object to a reference or correcting image distortion due to lens aberration. In stereophotogrammetry, epipolar (stereo) rectification determines a reprojection of each image plane so that pairs of conjugate epipolar lines in both images become collinear and parallel to one of the image axes108.

-

Interpolation: Image interpolation algorithms, such as the nearest neighbor, bilinear, bicubic109, and nonlinear regression131 are necessary when the measured intensity image is sampled at an insufficient dense grid. In DIC, to reconstruct displacements with subpixel accuracy, the correlation criterion must be evaluated at non-integer-pixel locations132,133,134. Therefore, image interpolation is also a key algorithm for DIC to infer subpixel gray values and gray-value gradients in many subpixel displacement registration algorithms, e.g., the NewtonâRaphson method133,134,135.

-

Extrapolation: Image extrapolation, especially fringe extrapolation is often employed in Fourier transform (FT) fringe analysis methods to minimize the boundary artifacts induced by spectrum leakage. Schemes for the extrapolation of the fringe pattern beyond the borders have been reported, such as soft-edged frequency filter136 and iterative FT137.

Analysis

Image analysis is the core component of the image-processing architecture to extract the key information-bearing parameter(s) reflecting the desired physical quantity being measured from the input images. In phase measurement techniques, image analysis refers to the reconstruction of phase information from the fringe-like modulated intensity distribution(s), i.e., \(f_{anal}:I \to \phi\).

-

Phase demodulation: The aim of phase demodulation, or more specifically, fringe analysis, is to obtain the wrapped phase map from the quasi-periodic fringe patterns. Various techniques for fringe analysis have been developed to meet different requirements in diverse applications, which can be broadly classified into two categories:

-

Spatial phase demodulation: Spatial phase-demodulation methods are capable of estimating the phase distribution through a single-fringe pattern. FT138,139, WFT114,115,140, and wavelet transform (WT)141 are classical methods for the spatial carrier fringe analysis. For closed-fringe patterns without the carrier, alternative methods, such as Hilbert spiral transform142,143, regularized phase tracking (RPT)144,145 and frequency-guided sequential demodulation146,147, can be applied provided that the cosinusoidal component of the fringe pattern can be extracted by pre-processing algorithms of denoising, background removal, and fringe normalization. The interested reader may refer to the book by Servin et al.148 for further details.

-

Temporal phase demodulation: Temporal phase-demodulation techniques detect the phase distribution from the temporal variation of fringe signals, as typified by heterodyne interferometry149 and phase-shifting techniques150. Many phase-shifting algorithms have originally been proposed for optical interferometry/holography and later been adapted and extended to fringe projection, for example, standard N-step phase-shifting algorithm151, Hariharan 5-step algorithm21, 2â+â1 algorithm152 etc. The interested reader may refer to the chapter âPhase shifting interferometryâ153 of the book edited by Malacara4 and the review article by Zuo et al.32 for more details about phase-shifting techniques in the contexts of optical interferometry and FPP, respectively.

-

-

Phase unwrapping: No matter which phase-demodulation technique is used, the retrieved phase distribution is mathematically wrapped to the principal value of the arctangent function ranging between âÏ and Ï. The result is what is known as a wrapped phase image, and phase unwrapping has to be performed to remove any 2Ï-phase discontinuities. Phase unwrapping algorithms can be broadly classified into three categories:

-

Spatial phase unwrapping: Spatial phase unwrapping methods use only a single wrapped phase map to retrieve the corresponding unwrapped phase distribution, and the unwrapped phase of a given pixel is derived based on the adjacent phase values. Representative methods include Goldsteinâs method154, reliability-guided method155, Flynnâs method156, minimal Lp-norm method157, and phase unwrapping max-flow/min-cut (PUMA) method158. The interested reader may refer to the book by Ghiglia et al. for more technical details. There are also many reviews on the performance comparisons of different unwrapping algorithms for specific applications159,160,161. Limited by the assumption of phase continuity, spatial phase unwrapping methods cannot fundamentally address the inherent fringe order ambiguity problem when the phase difference between neighboring pixels is greater than Ï.

-

Temporal phase unwrapping: To remove the phase ambiguity, temporal phase unwrapping methods generally generate different or synthetic wavelengths by adjusting flexible system parameters (wavelength, angular separation of light sources, spatial frequency, orientation of the projected fringe patterns) step by step, so that the object can be covered by fringes with different periods. Representative temporal phase unwrapping algorithms include gray-code methods162,163, multi-frequency (hierarchical) methods164,165,166, multi-wavelength (heterodyne) methods167,168,169, and number-theoretical methods170,171,172,173. For more detailed information about these methods, the reader can refer to the comparative review by Zuo et al.174 The advantage of temporal phase unwrapping lies in that the unwrapping is neighborhood-independent and proceeds along the time axis on the pixel itself, enabling an absolute evaluation of the mod-2Ï phase distribution.

-

Geometric phase unwrapping: Geometric phase unwrapping approaches can solve the phase ambiguity problem by exploiting the epipolar geometry of projectorâcamera systems. If the measurement volume can be predefined, depth constraints can be incorporated to preclude some phase ambiguities corresponding to the candidates falling out of the measurement range175,176,177,178,179,180,181,182,183,184,185. Alternatively, an adaptive depth-constraint strategy can provide pixel-wise depth constraint ranges according to the shape of the measured object186. By introducing more cameras, tighter geometry constraints can be enforced so as to guarantee the unique correspondence and improve the unwrapping reliability185,187.

-

In stereomatching techniques, image analysis refers to determining (tracking or matching) the displacement vector of each pixel point between a pair of acquired images, i.e., \(f_{anal}:(I_r,I_d) \to (D_x,D_y)\). In the routine implementation for DIC and stereophotogrammetry, a region of interest (ROI) or subset in the image is specified at first. The subset is further divided into an evenly spaced virtual grid. The similarity is evaluated at each point of the virtual grid in the reference image to obtain the displacement between two subsets. A full-field displacement map can be obtained by sliding the subset in the searching area of the reference image and obtaining the displacement at each location.

-

Subset correlation: In DIC, to quantitatively evaluate the similarity or difference between the selected reference subset and the target subset, several correlation criteria have been proposed, such as cross-correlation (CC), the sum of absolute difference (SAD), the sum of squared difference (SSD), zero-mean normalized cross-correlation criterion (ZNCC), zero-mean normalized sum of squared difference (ZNSSD), and the parametric sum of squared difference (PSSD)188,189,190. The subsequent matching procedure is realized by identifying the peak (or valley) position of the correlation coefficient distribution based on certain optimization algorithms. In stereophotogrammetry, nonparametric costs rely on the local ordering (i.e., Rank191, Census192, and Ordinal measures193) of intensity values, which are more frequently used due to their robustness against radiometric changes and outliers, especially near object boundaries192,193,194.

-

Subpixel refinement: The subset correlation methods mentioned above can only provide integer-pixel displacements. To further improve the measurement resolution and accuracy, many subpixel refinement methods were developed, including intensity interpolation (i.e., the coarseâfine search method)195,196, correlation coefficient curve-fitting133,197, gradient-based method198,199, NewtonâRaphson (NR) algorithm135,200,201, and inverse compositional GaussâNewton (IC-GN) algorithm202,203,204. Among these algorithms, NR and IC-GN are most commonly used for their high registration accuracy and effectiveness in handling high-order surface transformations. However, they suffer from expensive computation cost stemming from their iterative nonlinear optimization and repeated subpixel interpolation. Therefore, accurate initial guesses obtained by integer-pixel subset correlation methods are critical to ensure the rapid convergence205 and reduce the computational cost206. In stereovision, the matching algorithms can be classified as local207,208,209, semi-global210, and global methods211. Local matching methods utilize the intensity information of a local subset centered at the pixel to be matched. Global matching methods take the result obtained by local matching methods as the initial value and then optimize the disparity by minimizing a predefined global energy function. Semi-global matching methods reduce the 2D global energy minimization problem into a 1D one, enabling faster and more efficient implementations of stereomatching.

Postprocessing

In optical metrology, the main task of postprocessing is to further refine the measured phase or retrieved displacement field, and finally transform them into the desired physical quantity of the measured object, i.e., the corresponding operator \(f_{post}:\phi {{{\mathrm{/}}}}(D_x,D_y) \to q\), where q is the desired sample quantity.

-

Denoising: Instead of applying to raw fringe patterns, image denoising can also be used as a postprocessing algorithm to remove noise directly from the retrieved phase distribution. Various phase denoising algorithms have been proposed, such as least-square (LS) fitting212, anisotropic average filter213, WFT214, total variation215, and nonlocal means filter216.

-

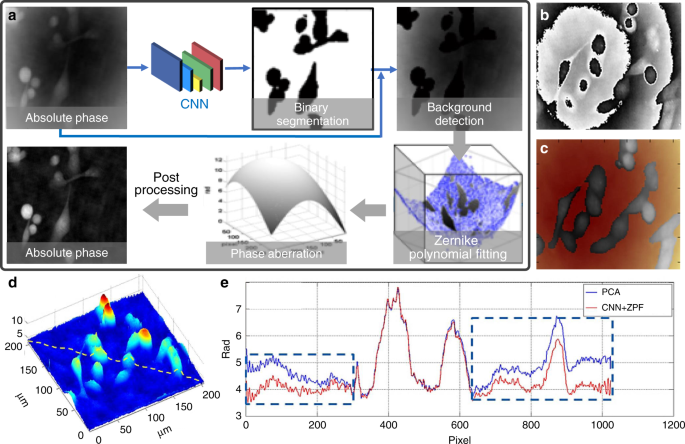

Digital refocusing: The numerical reconstruction of propagating wavefronts by diffraction is a unique feature of digital holography. Since the hologram of the object may not be recorded in the in-focus plane. Numerical diffraction or backpropagation algorithms (e.g., Fresnel diffraction and angular spectrum methods) should be used to obtain a focused image by performing a plane-by-plane refocusing after the image acquisition217,218,219.

-

Error compensation: There are various types of phase errors associated with optical metrology systems, such as phase-shifting error, intensity nonlinearity, and motion-induced error, which can be compensated with different types of postprocessing algorithms60,220,221. In digital holographic microscopy, the microscope objective induces additional phase curvature on the measured wavefront, which needs to be compensated in order to recover the phase information induced by the sample. Typical numerical phase-aberration compensation methods include double exposure222, 2D spherical fitting223 Zernike polynomials fitting224, Fourier spectrum filtering225, and principal component analysis (PCA)226.

-

Quantity transformation: The final step of postprocessing and also the whole measurement chain is to convert the phase or displacement field into the desired sample quantity, such as height, thickness, displacement, stress, strains, and 3D coordinates, based on sample parameters (e.g., refractive index, relative stress constant) or calibrated system parameters (e.g., sensitivity vector and camera (intrinsic, extrinsic) parameters). The optical setup should be carefully designed to optimize the sensitivity with respect to the measuring quantity in order to achieve a successful and efficient measurement227,228.

Finally, it should be mentioned that since optical metrology is a rapidly expanding field in both its scientific foundations and technological developments, the image-processing hierarchy used here cannot provide full coverage of all relevant methods and technologies. For example, phase retrieval and wave-field sensing technologies have shown great promise for inexpensive, vibration-tolerant, non-interferometric, optical metrology of optical surfaces and systems66,67. These methods constitute an important aspect of computational imaging as they often involve solving ill-posed inverse problems. There are also some optical metrology methods based on solving constrained optimization problems with added penalties and relaxations (e.g., RPT phase demodulation144,145 and minimal Lp-norm phase unwrapping methods157), which may make pre- and postprocessing unnecessary. For a detailed discussion on this topic, please refer to the subsection âSolving inverse optical metrology problems: issues and challengesâ.

Brief introduction to deep learning

Deep learning is a subset of machine learning, which is defined as the use of specific algorithms that enable machines to automatically learn patterns from large amounts of historical data, and then utilize the uncovered patterns to make predictions about the future or enable decision making under uncertain intelligently229,230. The key specific algorithm used in machine learning is the ANN, which exploits input data \({{{\mathbf{x}}}} \in {{{\mathcal{X}}}} \subseteq {\Bbb R}^n\) to predict an unknown output \({{{\mathbf{y}}}} \in {{{\mathcal{Y}}}}\). The tasks accomplished by the ANN can be broadly divided as classification tasks or regression tasks, depending on whether y is a discrete label or a continuous value. The objective of machine learning is then to find a mapping function \(f:{{{\mathbf{x}}}} \to {{{\mathbf{y}}}}\). The choice of such functions is given by the neural network models with additional parameters \({{{\mathbf{\theta }}}} \in \Theta\): i.e., \({{{\hat{\mathbf y}}}} = f\left( {{{{\mathbf{x}}}},{{{\mathbf{\theta }}}}} \right) \approx {{{\mathbf{y}}}}\). The goal of this section is to provide a brief introduction to deep learning, as a preparation for the introduction of its applications in optical metrology later on.

Artificial neural network (ANN)

Inspired by the biological neural network (Fig. 4a), ANNs are composed of interconnected computational units called artificial neurons. As illustrated in Fig. 4b, the simplest neural network following the above concept is the perceptron, which consists of only one single artificial neuron231. An artificial neuron takes a bias b and weight vector \({{{\mathbf{w}}}} = \left( {w_1,w_2, \cdots ,w_n} \right)^T\) as parameters \({{{\mathbf{\theta }}}} = \left( {b,w_1,w_2, \cdots ,w_n} \right)^T\) to map the input \({{{\mathbf{x}}}} = \left( {x_1,x_2, \cdots ,x_n} \right)^T\) to the output \(f_P\left( {{{\mathbf{x}}}} \right)\) through a nonlinear activation function Ï as

a Biological neuron model458. b The single-layer perceptron: an artificial neuron calculates the weighted sum (â) of the inputs (based on weights θ1âââθn), and maps them to the output through an activation function. c CNN: convolutional neural network, consists of input layer, convolution layer, pooling layer, full connection layer and output layer. d RNN: recurrent neural network, the input of the hidden layer includes not only the output of the input layer but also the output of the hidden layer at the previous moment. e RBM: Restricted Boltzmann Machines, an undirected probability graph model with an input layer and a hidden layer. f DBM: Deep Boltzmann Machine, consists of several RBM units stacked. The connections between all layers are undirected. g DBN: Deep Belief Network, consists of several DBM units stacked. The connection between the right two layers is undirected, while other connections are directed. h Residual block: consists of two sets of convolutional layers activated by ReLU stacked one above the other

Typical choices for such activation functions are the sign function \(\sigma \left( x \right) = sgn\left( x \right)\), sigmoid function \(\sigma \left( x \right) = \frac{1}{{1\, + \,e^{ - x}}}\), hyperbolic tangent function \(\sigma \left( x \right) = \frac{{e^x\, - \,e^{ - x}}}{{e^x\, + \,e^{ - x}}}\), and rectified linear unit (ReLU) \(\sigma \left( x \right) = \max \left( {0,x} \right)\)232. A single perceptron can only model a linear function, but because of the activation functions and in combination with other neurons, the modeling capabilities will increase dramatically. Arranged in a single layer, it has already been shown that neural networks can approximate any continuous function f(x) on a compact subset of \({\Bbb R}^n\). A single-layer network, also called single-layered perceptron (SLP), is represented as a linear combination of M individual neurons:

where vi is the combination weight of the ith neuron. We can further extend the mathematical specification of SLP by stacking several single-layer networks into a multi-layered perceptron (MLP)233. As the network goes deeper (number of layers increase), the number of free parameters increases, as well as the capability of the network to represent highly nonlinear functions234. We can formalize this mathematically by stacking several single-layer networks into a deep neural network (DNN) with N layers, i.e.

where the circle ⦠is the symbol for the composition of functions. The first layer is referred to as the input layer, the last as the output layer, and the layers in between the input and output are termed as hidden layers. We refer to these using the term âdeepâ, when a neural network contains many hidden layers, hence the term âdeep learningâ.

Neural network training

Having gained basic insights into neural networks and their basic topology, we still need to discuss how to train the neural network, i.e., how its parameters θ are actually determined. In this regard, we need to select the appropriate model topology for the problem to be solved and specify the various parameters associated with the model (known as âhyper-parametersâ). In addition, we need to define a function that assesses the quality of the network parameter set θ, the so-called loss function L, which quantifies the error between the predicted value \({{{\hat{\mathbf y}}}} = f_{{{\mathbf{\theta }}}}\left( {{{\mathbf{x}}}} \right)\) and the true observation y (label)235.

Depending on the type of task accomplished by the network, the loss function can be divided into classification loss and regression loss. Commonly used classification loss functions include hinge loss (\(L_{Hinge} = \mathop {\sum}\nolimits_{i = 1}^n {\max [0,1 - {{{\mathrm{sgn}}}}(y_i)\hat y_i]}\)) and cross-entropy loss \(L_{CE} = - \mathop {\sum}\nolimits_{i = 1}^n {[y_i\log \hat y_i + (1 - y_i)\log (1 - \hat y_i)]}\))236. Since the optical metrology tasks involved in this review mainly belong to regression tasks, here we focus on the regression loss functions. The mean absolute error (MAE) loss (\(L_{MAE} = \frac{1}{n}\mathop {\sum}\nolimits_{i = 1}^n {\left| {y_i - \hat y_i} \right|}\)) and the mean squared error (MSE) loss (\(L_{MSE} = \frac{1}{n}\mathop {\sum}\nolimits_{i = 1}^n {(y_i - \hat y_i)^2}\)) are the two most commonly used loss functions, which are also known as L1 loss and L2 loss, respectively. In image-processing tasks, MSE is usually converted into a peak signal-to-noise ratio (PSNR) metric: \(L_{PSNR} = 10\,{{{\mathrm{log}}}}_{10}\frac{{MAX^2}}{{L_{MSE}}}\), where MAX is the maximum pixel intensity value within the dynamic range of the raw image237. Other variants of L1 and L2 loss include RMSE, Euclidean loss, smooth L1, etc.238. For natural images, the structural similarity (SSIM) index is a representative image fidelity measurement, which judges the structural similarity of two images based on three metrics (luminance, contrast, and structure): \(L_{SSIM} = l({{{\mathbf{y}}}},\widehat {{{\mathbf{y}}}})c({{{\mathbf{y}}}},\widehat {{{\mathbf{y}}}})s({{{\mathbf{y}}}},\widehat {{{\mathbf{y}}}})\)239, where \(l({{{\mathbf{y}}}},\widehat {{{\mathbf{y}}}})\), \(c({{{\mathbf{y}}}},\widehat {{{\mathbf{y}}}})\), and \(s({{{\mathbf{y}}}},\widehat {{{\mathbf{y}}}})\) are the similarities of the local patch luminances, contrasts, and structures, respectively. For more details about these loss functions, readers may refer to the article by Wang and Bovik240. With the defined loss function, the objective behind the training process of ANNs can be formalized as an optimization problem241

The learning schemes can be broadly classified into three categories, supervised learning, semi-supervised learning, and unsupervised learning36,242,243,244. Supervised learning dominates the majority of practical applications, in which a neural network model is optimized based on a large amount dataset of labeled data pairs (x, y), and the training process amounts to find the model parameters \(\widehat {{{\mathbf{\theta }}}}\) that best predict the data based on the loss function \(L\left( {\widehat {{{\mathbf{y}}}},{{{\mathbf{y}}}}} \right)\). In unsupervised learning, training algorithms process input data x without corresponding labels y, and the underlying structure or distribution in the data has to be modeled based on the input itself. Semi-supervised learning sits in between both supervised and unsupervised learning, where a large amount of input data x is available and only some of the data is labeled. More detailed discussions about semi-supervised and unsupervised learning can be found in the âFuture directionsâ section.

From perceptron to deep learning

As summarized in Fig. 4, despite the overall upward trend, a broader look at the history of deep learning reveals three major waves of development. Concepts of machine learning and deep learning commenced with the research into the artificial neural network, which was originated from the simplified mathematical model of biological neurons established by McCulloch and Pitts in 1943245. In 1958, Rosenblatt231 proposed the idea of perceptron, which was the first ANN that allows neurons to learn. The emergence of perceptron marked the first peak of neural network development. However, a single-layer perceptron model can only solve linear classification problems and cannot solve simple XOR and XNOR problems246. These limitations caused a major dip in their popularity and stagnated the development of neural networks for nearly two decades.

In 1986, Rumelhart et al.247 proposed the idea of a backpropagation algorithm (BP) for MLP, which constantly updates the network parameters to minimize the network loss based on a chain rule method. It effectively solves the problems of nonlinear classification and learning, leading neural networks into a second development phase of âshallow learningâ and promoting a boom of shallow learning. Inspired by the mammalian visual cortex (stimulated in the restricted visual field)248, LeCun et al.249 proposed the biologically inspired CNN model based on the BP algorithm in 1989, establishing the foundation of deep learning for modern computer vision. During this wave of development, various models like long short-term memory (LSTM) recurrent neural network (RNN), distributed representation, and processing were developed and continue to remain key components of various advanced applications of deep learning to this date. Adding more hidden layers to the network allows a deep architecture to be built, which can accomplish more complex mappings. However, training such a deep network is not trivial because once the errors are back-propagated to the first few layers, they become negligible (so-called gradient vanishing), making the learning process very slow or even fails250. Moreover, the limited computational capacity of the available hardware at that time could not support training large-scale neural networks. As a result, deep learning suffered a second major roadblock.

In 2006, Hinton et al.251,252 proposed a Deep Belief Network (DBN) (the composition of simple, unsupervised networks such as Deep Boltzmann Machines (DBMs)253 (Fig. 4f) or Restricted Boltzmann Machines (RBMs)254 (Fig. 4e)) training approach based on the brain graphical models, trying to overcome the gradient-vanishing problem. They gave the new name âdeep learningâ to multilayer neural network-related learning methods251,252. This milestone revolutionized the approaching prospects in machine learning, leading neural networks into the third upsurge along with the development of computer hardware performance, the development of GPU acceleration technology, and the availability of massive labeled datasets.

In 2012, Krizhevsky et al.255 proposed a deep CNN architecture â AlexNet, which won the 2012 ImageNet competition, making CNN249,256 become the dominant framework for deep learning after more than 20 years of silence. Meanwhile, several new deep-learning network architectures and training approaches (e.g., ReLU232 given by \(\sigma (x) = \max (0,x)\), and Dropout257 that discards a small but random portion of the neurons during each iteration of training to prevent neurons from co-adapting to the same features) were developed to further combat the gradient vanishing and ensure faster convergence. These factors have led to the explosive growth of deep learning and its applications in image analysis and computer vision-related problems. Different from CNN, RNN is another popular type of DNN inspired by the brainâs recurrent feedback system. It provides the network with additional âmemoryâ capabilities for previous data, where the inputs of the hidden layer consist of not only the current input but also the output from the previous step, making it a framework specialized in processing sequential data258,259,260 (Fig. 4d). CNNs and RNNs usually operate on Euclidean data like images, videos, texts, etc. With the diversification of data, some non-Euclidean graph-structured data, such as 3D-point clouds and biological networks, are also considered to be processed by deep learning. Graph neural networks (GNNs), where each node aggregates feature vectors of its neighbors to compute its new feature vector (a recursive neighborhood aggregation scheme), are effective graph representation learning frameworks specifically for non-Euclidean data261,262.

With the focus of more attention and efforts from both academia and industry, different types of deep neural networks have been continuously proposed in recent years with exponential growth, such as VGGNet263 (VGG means âVisual Geometry Groupâ), GoogLeNet264 (using âGoogLeâ instead of âGoogleâ is a tribute to LeNet, one of the earliest CNNs developed by LeCun256), R-CNN (regions with CNN features)265, generative adversarial network (GAN)266, etc. In 2015, the emergence of the residual block (Fig. 4h), containing two convolutional layers activated by ReLU that allow the information (from the input or those learned in earlier layers) to penetrate more into the deeper layers, significantly reduces the vanishing gradient problem as the network gets deeper, making it possible to train large-scale CNNs efficiently267. In 2016, the Google-owned AI company DeepMind shocked the world by beating Lee Se-dol with its AlphaGo AI system, alerting the world to deep learning, a new breed of machine learning that promised to be smarter and more creative than before268. For a more detailed description of the history and development of deep learning, readers can refer to the chronological review article by Schmidhuber39.

Convolutional neural network (CNN)

In the subsection âArtificial neural networkâ, we talked about the simplest DNN, so-called MLPs, which basically consist of multiple layers of neurons, each fully connected to those in the adjacent layers. Each neuron receives some inputs, which are multiplied by their weights, with nonlinearity applied via activation functions. In this subsection, we will talk about CNNs, which are considered an evolution of the MLP architecture that is developed to process data in single or multiple arrays, and thus are more appropriate to handle image-like input. Given the prevalence of CNNs in image processing and analysis tasks, here we briefly review some basic ideas and concepts widely used in CNNs. For a comprehensive introduction to CNN, we refer readers to the excellent book by Goodfellow et al.36.

CNN follows the same pattern as MLP: artificial neurons are stacked in hidden layers on top of each other; parameters are learned during network training with nonlinearity applied via activation functions; the loss \(L\left( {\widehat {{{\mathbf{y}}}},{{{\mathbf{y}}}}} \right)\) is calculated and back-propagated to update the network parameters. The major difference between them is that instead of regular fully connected layers, CNN uses specialized convolution layers to model locality and abstraction (Fig. 5b). At each layer, the input image \({{{\mathbf{x}}}}\) (lexicographically ordered) is convolved with a set of convolutional filters W (note here W represents block-Toeplitz convolution matrix) and added biases b to generate a new image, which is subjected to an elementwise nonlinear activation function Ï (normally use ReLU function \(\sigma (x) = \max (0,x)\)), and the same structure is repeated for each convolution layer k:

The second key difference between CNNs and MLPs is the typical incorporation of pooling layers in CNNs, where pixel values of neighborhoods are aggregated by applying a permutation invariant function, such as the max or mean operation, to reduce the dimensionality of the convolutional layers and allows significant features to propagate downstream without being affected by neighboring pixels (Fig. 5c). The major advantage of such an architecture is that CNNs exploit spatial dependencies in the image and only consider a local neighborhood for each neuron, i.e., the network parameters are shared in such a way that the network performs convolution operations on images. In other words, the idea of a CNN is to take advantage of a pyramid structure to first identify features at the lowest level before passing these features to the next layer, which, in turn, create features of a higher level. Since the local statistics of images are invariant to location, the model does not need to learn weights for the same feature occurring at different positions in an image, making the network equivariant with respect to translations of the input. It makes CNNs especially suitable for processing images captured in optical metrology, e.g., a fringe pattern consisting of sinusoidal signal repeated over different image locations. In addition, it also drastically reduces the number of parameters (i.e., the number of weights no longer depends on the size of the input image) that need to be learned.

Figure 5a shows a CNN architecture for the image-classification task. Every layer of a CNN transforms the input volume to an output volume of neuron activation, eventually leading to the final fully connected layers, resulting in a mapping of the input data to a 1D feature vector. A typical CNN configuration consists of a sequence of convolution and pooling layers. After passing through a few pairs of convolutional and pooling layers, all the features of the image have been extracted and arranged into a long tube. At the end of the convolutional stream of the network, several fully connected layers (i.e., regular neural network architecture, MLP, that discussed in the previous subsection) are usually added to fatten the features into a vector, with which tasks, such as classifications, can be performed. Starting with LeNet256, developed in 1998 for recognizing handwritten characters with two convolutional layers, CNN architectures have evolved since then to deeper CNNs like AlexNet264 (5 convolutional Layers) and VGGNet263 (19 convolutional Layers) and beyond to more advanced and super-deep networks like GoogLeNet264 and ResNet267, respectively. These CNNs have been extremely successful in computer vision applications, such as object detection269, action recognition270, motion tracking271, and pose estimation272.

Fully convolutional network architectures for image processing

Conventionally, CNNs have been used for solving classification problems. Due to the presence of a parameter-rich fully connected layer at the end of the network, typical CNNs throw away spatial information and produce non-spatial outputs. However, for most image-processing tasks that we encountered earlier in the Section âImage processing in optical metrologyâ, the network must have a whole-resolution output with the same or even larger size compared with the input, which is commonly referred to as dense prediction (contrary to the single target category per image)273. Specifically, fully convolutional network architectures without fully connected layers should be used for this purpose, which accepts input of any size, is trained with a regression loss, and produces an output of the corresponding dimensions273,274. Here, we briefly review three representative network architectures with such features.

-

SRCNN: In conventional CNN, the downsampling effect of pooling layers results in an output with a far lower resolution than the input. Thus, a relatively naive and straightforward solution is simply stacking several convolutions layers while skipping pooling layers to preserve the input dimensions. Dong et al.275 firstly adopt this idea and propose SRCNN for the image super-resolution task. SRCNN utilizes traditional upsampling algorithms to obtain low-resolution images and then refine them by learning an end-to-end mapping from interpolated coarse images to high-resolution images of the same dimension but with more details, as illustrated in Fig. 6a. Due to its simple ideal and implementation, SRCNN has gradually become one of the most popular frameworks in image super-resolution276 and been extended to many other tasks such as radar image enhancing277, underwater image high definition display278, and computed tomography279. One major disadvantage of SRCNN is the cost of time and space to keep the whole resolution through the whole network, limiting SRCNN only practical for relatively shallow network structures.

-

FCN: The fully convolutional network (FCN) proposed by Long et al.273 is a popular strategy and baseline for semantic-segmentation tasks. FCN is inspired by the fact that the fully connected layers in classification CNN (Fig. 5) can also be viewed as convolutions with kernels that cover their entire input regions. As illustrated in Fig. 6b, FCN uses the existing classification CNN as the encoder module of the network and replace these fully connected layers into 1âÃâ1 convolution layers (also termed as deconvolution layers) as the decoding module, enabling the CNN to upsample the input feature maps and get pixel-wise output. In FCN, skip connections combining (simply adding) information in fine layers and coarse layers enhances the localization capability of the network, allowing for the reconstruction of accurate fine details that respect global structure. FCN and its variants have achieved great success in the application of dense pixel prediction as required in many advanced computer vision understanding tasks280.

-

U-Net: Ronneberger et al.281 took the idea of FCN one step further and proposed the U-Net architecture, which replaces the one-step upsampling part with a bunch of complimentary upsampling convolutions layers, resulting in a quasi-symmetrical encoder-decoder model architecture. As illustrated in Fig. 6c, the basic structure of U-Net consists of a contractive branch and an expansive branch, which enables multiresolution analysis and general multiscale image-to-image transforms. The contractive branch (encoder) downsamples the image using conventional strided convolution, producing a compressed feature representation of the input image. The expansive branch (decoder), complimentary to the contractive branch, uses upsampling methods like transpose convolution to provide the processed result with the same size as the input. In addition, U-Net features skip connections that concatenate the matching resolution levels of the contractive branch and the expansive branch. Ronnebergerâs U-Net is a breakthrough toward automatic image segmentation and has been successfully applied in many tasks that require image-to-image transforms282.

Since the feature extraction is only performed in low-dimensional space, the computation and spatial complexity of the above encoder-decoder structured networks (FCN and U-Net) can be much reduced. Therefore, the encoder-decoder CNN structure has become the mainstream for image segmentation and reconstruction283. The encoder is usually a classic CNN (Alexnet, VGG, Resnet, etc.) in which downsampling (pooling layers) is adopted to reduce the input dimension so as to generate low-resolution feature maps. The decoder tries to mirror the encoder to upsample these feature representations and restore the original size of the image. Thus, how to perform upsampling is of great importance. Although traditional upsampling methods, e.g., nearest neighbor, bilinear, and bicubic interpolations, are easy to implement, deep-learning-based upsampling methods, e.g., unpooling284, transpose convolution273, subpixel convolution285, has gradually become a trend. All these approaches can be combined with the model mentioned above to prevent the decrease in resolution and obtain a full-resolution image output.

-

Unpooling upsampling: Unpooling upsampling reverts maxpooling by remembering the location of the maxima in the maxpooling layers and in the unpooling layers copy the value to exactly this location, as shown in Fig. 7a.

-

Transposed convolution: The opposite of the convolutional layers are the transposed convolution layers (also misinterpreted as deconvolution layers280), i.e., predicting the possible input based on feature maps sized like convolution output. Specifically, it increases the image resolution by expanding the image by inserting zeros and performing convolution, as shown in Fig. 7b.

-

Sub pixel convolution: The subpixel layer performs upsampling by generating a plurality of channels by convolution and then reshaping them, as Fig. 7c shows. Within this layer, a convolution is firstly applied for producing outputs with M times channels, where M is the scaling factor. After that, the reshaping operation (a.k.a. shuffle) is performed to produce outputs with size M times larger than the original.

As discussed in the Section âImage processing in optical metrologyâ, despite their diversity, the image-processing algorithms used in optical metrology share a common characteristicâthey can be regarded as a mapping operator that transforms the content of arbitrary-sized inputs into pixel-level outputs, which fits exactly with DNNs with a fully convolutional architecture. In principle, any fully convolutional network architectures presented here can be used for a similar purpose. By applying different types of training datasets, they can be trained for accomplishing different types of image-processing tasks that we encountered in optical metrology. This provides an alternative approach to process images such that the produced results resemble or even outperform conventional image-processing operators or their combinations. There are also many other potential desirable factors for such a substitution, e.g., accuracy, speed, generality, and simplicity. All these factors were crucial to enable the fast rise of deep learning in the field of optical metrology.

Invoking deep learning in optical metrology: principles and advantages

Let us return to optical metrology. It is essential that the image formation is properly understood in order to reconstruct the required geometrical or mechanical quantities of the sample, as we discussed in Section âImage formation in optical metrologyâ. In general, the relation between the observed images \({{{\mathbf{I}}}} \in {\Bbb R}^m\) (frame-stacked lexicographically ordered with mâÃâ1 in dimension) and the desired sample parameter (or information-bearing parameter that clearly reflects the desired sample quantity, e.g., phase or displacement field) \({{{\mathbf{P}}}} \in {\Bbb R}^m\) (or \({\Bbb C}^n\)) can be described as

where \({{{\mathcal{A}}}}\) is the (possibly nonlinear) forward measurement operator mapping from the parameter space to the image space, which is given by the physics laws governing the formation of data; \({{{\mathcal{N}}}}\) represents the effect of noise (not necessarily additive). This model seems general enough to cover almost all image formation processes in optical metrology. However, this does not mean that p can be directly obtained from I. More specifically, we have to conclude in general from the effect (i.e., the intensity at the pixel) to its cause (i.e., shape, displacement, deformation, or stress of the surface), suggesting that an inverse problem has to be solved.

Solving inverse optical metrology problems: issues and challenges

Given the forward model represented by Eq. (8), our task is to find the parameters by an approximate inverse of \({{{\mathcal{A}}}}\) (denoted as \(\tilde {{{\mathcal{A}}}}^{ - 1}\)) such that \(\widehat {{{\mathbf{p}}}} = \widehat {{{\mathcal{R}}}}\left( {{{\mathbf{I}}}} \right) = \tilde {{{\mathcal{A}}}}^{ - 1}\left( {{{\mathbf{I}}}} \right) \approx {{{\mathbf{p}}}}\). However, in real practice, there are many problems involved in this process:

-

Unknown or mismatched forward model. The success of conventional optical metrology approaches relies heavily on the precise pre-knowledge about the forward model \({{{\mathcal{A}}}}\), so they are often regarded as model-driven or knowledge-driven approaches. In practical applications, the forward model \({{{\mathcal{A}}}}\) used is always an approximate description of reality, and extending it might be challenging due to a limited understanding of experimental perturbations (noise, aberrations, vibration, motion, nonlinearity, saturation, and temperature variations) and non-cooperative surfaces (shiny, translucent, coated, shielded, highly absorbent, and strong scattering). These problems are either difficult to model or result in a too complicated (even intractable) model with a large number of parameters.

-

Error accumulation and suboptimal solution. As described in the section âImage processing in optical metrologyâ, âdivide-and-conquerâ is a common practice for solving complex problems with a sequence of cascaded image-processing algorithms to obtain the desired object parameter. For example, in FPP, the entire image-processing pipeline is generally divided into several sub-steps, i.e., image pre-processing, phase demodulation, phase unwrapping, and phase-to-height conversion. Although each sub-problem or sub-step becomes simpler and easier to handle, the disadvantages are also apparent: error accumulation and suboptimal solution, i.e., the aggregation of optimum solutions to subproblems may not be equivalent to the global optimum solution.

-

Ill-posedness of the inverse problem. In many computer vision and computational imaging tasks, such as image deblurring24, sparse computed tomography25, and imaging through scattering media27, the difficulty in retrieving the desired information p from the observation I arises from the fact that the operator \({{{\mathcal{A}}}}\) is usually poorly conditioned, and the resulting inverse problem is ill-posed, as illustrated in Fig. 8a. Due to the similar indirect measurement principle, there are also many important inverse problems in optical metrology that are ill-posed, among which the phase demodulation from a single-fringe pattern and phase unwrapping from single wrapped phase distributions are the best known for specialists in optical metrology (Fig. 8b). The simplified model for the intensity distribution of fringe patterns (Eq. (1)) suggests that the observed intensity I results from the integration of several unknown components: the average intensity A(x, y), the intensity modulation B(x, y), and the desired phase function Ï(x, y). Simply put, we do not have enough information to solve the corresponding inverse problem uniquely and stably.

a In computer vision, such as image deblurring, the resulting inverse problem is ill-posed since the forward measurement operator \({{{\mathcal{A}}}}\) mapping from the parameter space to the image space is usually poorly conditioned. The classical approach is to impose certain prior assumptions (smoothing) about the solution p that helps in regularizing its retrieval. b In optical metrology, absolute phase demodulation from a single-fringe pattern exhibits all undesired difficulties of an inverse problem: ill-posedness and ambiguity, which can also be formed as a regularized optimization problem with proper prior assumptions (phase smoothness, geometric constraints) imposed. c Optical metrology uses an âactiveâ approach to transform the ill-posed inverse problem into a well-posed estimation or regression problem: by acquiring additional phase-shifted patterns of different frequencies, absolute phase can be easily determined by multi-frequency phase-shifting and temporal phase unwrapping methods

In the fields of computer vision and computational imaging, the classical approach in solving an ill-posed inverse problem is to reformulate the ill-posed original problem into a well-posed optimization problem by imposing certain prior assumptions about the solution p that helps in regularizing its retrieval:

where || ||2 indicates the Euclidean norm, R(p) is a regularization penalty function that incorporates the prior information about p, such as smoothness286, sparsity in some basis287 or dictionary288. γ is a real positive parameter (regularization parameter) that governs the weight given to the regularization against the need to fit the measurement and should be selected carefully to make an admissible compromise between the prior knowledge and data fidelity. Such an optimization problem can be solved efficiently with a variety of algorithms289,290 and provide theoretical guarantees on the recoverability and stability of the approximate solution to an inverse problem291.

Instead of regularizing the numerical solution, in optical metrology, we prefer to reformulate the original ill-posed problem into a well-posed and adequately stable one by actively controlling the image acquisition process so as to add systematically more knowledge about the object to be investigated into the evaluation process31. Due to the fact that the optical measurements are frequently carried out in a highly controlled environment, such a solution is often more practical and effective. As illustrated by Fig. 8c, by acquiring additional multi-frequency phase-shifted patterns, absolute phase retrieval becomes a well-posed estimation or regression problem, and the simple standard (unconstrainted, regularization-free) least-square methods in regression analysis provides a stable, precise, and efficient solution292,293:

The situation may become very different when we step out of the laboratory and into the complicated environment of the real world294. The active strategies mentioned above often impose stringent requirements on the measurement conditions and the object under test. For instance, high-sensitivity interferometric measurement in general needs a laboratory environment where the thermal-mechanical settings are carefully controlled to preserve beam path conditions and minimize external disturbances. Absolute 3D shape profilometry usually requires multiple fringe pattern projections, which requires that the measurement conditions remain invariant while sequential measurements are performed. However, harsh operating environments where the object or the metrology system cannot be maintained in a steady-state may make such active strategies a luxurious or even unreasonable request. Under such conditions, conventional optical metrology approaches will suffer from severe physical and technical limitations, such as a limited amount of data and uncertainties in the forward model.

To address these challenges, researchers have made great efforts to improve state-of-the-art methods from different aspects over the past few decades. For example, phase-shifting techniques were optimized from the perspective of signal processing to achieve high-precision robust phase measurement and meanwhile minimize the impact of experimental perturbations32,153. Single-shot spatial phase-demodulation methods have been explicitly formulated as a constrained optimization problem similar to Eq. (9) with an extra regularization term enforcing a priori knowledge about the recovered phase (spatially smooth, limited spectral extension, piecewise constant, etc.)140,148. Multi-frequency temporal phase unwrapping techniques have been optimized by utilizing the inherent information redundancy in the average intensity and the intensity modulation of the fringe images, allowing for absolute phase retrieval with the reduced number of patterns32,295. Geometric constraints were introduced in FPP to solve the phase ambiguity problem without additional image acquisition175,183. Despite these extensive research efforts for decades, how to extract the absolute (unambiguous) phase information, with the highest possible accuracy, from the minimum number (preferably single shot) of fringe patterns remains one of the most challenging open problems in optical metrology. Consequently, we are looking forward to innovations and breakthroughs in the principles and methods of optical metrology, which are of significant importance for its future development.

Solving inverse optical metrology problems via deep learning

As a âdata-drivenâ technology that has emerged in recent years, deep learning has received increasing attention in the field of optical metrology and made fruitful achievements in very recent years. Different from the conventional physical model and knowledge-driven approaches that the objective function (Eqs. (9) and (10)) is built based on the image formation model \({{{\mathcal{A}}}}\), in deep-learning approaches, we create a set of true object parameters p and the corresponding raw measured data I, and establish their mapping relation \({{{\mathcal{R}}}}_\theta\) based on a deep neural network with all network parameters θ learned from the dataset by solving the following optimization problem (Fig. 9):