Abstract

Autonomous robots used in infrastructure inspection, space exploration and other critical missions operate in highly dynamic environments. As such, they must continually verify their ability to complete the tasks associated with these missions safely and effectively. Here we present a Bayesian learning framework that enables this runtime verification of autonomous robots. The framework uses prior knowledge and observations of the verified robot to learn expected ranges for the occurrence rates of regular and singular (e.g., catastrophic failure) events. Interval continuous-time Markov models defined using these ranges are then analysed to obtain expected intervals of variation for system properties such as mission duration and success probability. We apply the framework to an autonomous robotic mission for underwater infrastructure inspection and repair. The formal proofs and experiments presented in the paper show that our framework produces results that reflect the uncertainty intrinsic to many real-world systems, enabling the robust verification of their quantitative properties under parametric uncertainty.


Introduction

Mobile robots are increasingly used to perform critical missions in extreme environments, which are inaccessible or hazardous to humans1,2,3,4. These missions range from the inspection and maintenance of offshore wind-turbine mooring chains and high-voltage cables to nuclear reactor repair and deep-space exploration5,6.

Using robots for such missions poses major challenges2,7. First and foremost, the robots need to operate with high levels of autonomy, as in these harsh environments their interaction and communication with human operators is severely restricted. Additionally, they frequently need to make complex mission-critical decisions, with errors endangering not just the robot (itself an expensive asset) but also the important system or environment being inspected, repaired or explored. Last but not least, they need to cope with the considerable uncertainty associated with these missions, which often comprise one-off tasks or are carried out in settings not encountered before.

Addressing these major challenges is the focus of intense research worldwide. In the UK alone, a recent £44.5M research programme has tackled technical and certification challenges associated with the use of robotics and AI in the extreme environments encountered in offshore energy (https://orcahub.org), space exploration (https://www.fairspacehub.org), nuclear infrastructure (https://rainhub.org.uk), and management of nuclear waste (https://www.ncnr.org.uk). This research has initiated a step change in the assurance and certification of autonomous robots, not least through the emergence of new concepts such as dynamic assurance8 and self-certification9 for robotic systems.

Dynamic assurance requires a robot to respond to failures, environmental changes and other disruptions not only by reconfiguring accordingly10, but also by producing new assurance evidence which guarantees that the reconfigured robot will continue to achieve its mission goals8. Self-certifying robots must continually verify their health and ability to complete missions in dynamic, risk-prone environments9. In line with the "defence in depth" safety engineering paradigm11, this runtime verification has to be performed independently of the front-end planning and control engine of the robot.

Despite these advances, current dynamic assurance and self-certification methods rely on quantitative verification techniques (e.g., probabilistic12,13 and statistical14 model checking) that do not adequately handle the parametric uncertainty that autonomous robots encounter in extreme environments. Indeed, quantitative verification operates with stochastic models that demand single-point estimates of uncertain parameters such as task execution and failure rates. These estimates capture neither epistemic nor aleatory parametric uncertainty. As such, they are affected by arbitrary estimation errors which, because stochastic models are often nonlinear, can be amplified in the verification process15 and may lead to invalid robot reconfiguration decisions, dynamic assurance and self-certification.

In this paper, we present a robust quantitative verification framework that employs Bayesian learning techniques to overcome this limitation. Our framework requires only partial and limited prior knowledge about the verified robotic system, and exploits its runtime observations (or lack thereof) to learn ranges of values for the system parameters. These parameter ranges are then used to compute the quantitative properties that underpin the robot's decision making (e.g., probability of mission success, and expected energy usage) as intervals that capture the parametric uncertainty of the mission, a capability unique to our framework. Our framework is underpinned by probabilistic model checking, a technique that is broadly used to assess quantitative properties (e.g., reliability, performance and energy cost) of systems exhibiting stochastic behaviour. Such systems include autonomous robots from numerous domains16, e.g., mobile service robots17, spacecraft18, drones19 and robotic swarms20. While we present a case study involving an autonomous underwater vehicle (AUV), the generalisability of our approach stems from the broad adoption of probabilistic model checking for the modelling and verification of this wide range of autonomous robots. As such, we anticipate that our results are applicable to autonomous agents across all these domains.

We start by introducing our robust verification framework, which comprises Bayesian techniques for learning the occurrence rates of both singular events (e.g., catastrophic failures and completion of one-off tasks) and events observed regularly during system operation. Next, we describe the use of the framework for an offshore wind turbine inspection and maintenance robotic mission. Finally, we discuss the framework in the context of related work, and we suggest directions for further research.

Results

Proposed framework

We developed an end-to-end verification framework for the online computation of bounded intervals for continuous-time Markov chain (CTMC) properties that correspond to key dependability and performance properties of autonomous robots. The verification framework integrates interval CTMC model checking21 with two new interval Bayesian inference techniques that we introduce in the Methods section. The first technique, Bayesian inference using partial priors (BIPP), computes bounded interval estimates for the occurrence rates of singular events such as the successful completion of one-off robot tasks, or catastrophic failures. The second technique, Bayesian inference using imprecise probability with sets of priors (IPSP), produces bounded interval estimates for the occurrence rates of regular events encountered by an autonomous robot.

As shown in Fig. 1, the verification process underpinning the application of our framework involves devising, through a System Modeller, a parametric CTMC model that captures the structural aspects of the system under verification. This activity is typically performed once at design time (i.e., before the system is put into operation) by engineers with modelling expertise. By monitoring the system under verification after deployment, our framework observes both the occurrence of regular events and the absence of singular events during times when such events could have occurred (e.g., a catastrophic failure not happening when the system performs a dangerous operation). Our online BIPP Estimator and IPSP Estimator use these observations to calculate expected ranges for the rates of the monitored events, enabling a Model Generator to continually synthesise up-to-date interval CTMCs that model the evolving behaviour of the system.

The integration of Bayesian inference using partial priors (BIPP) and Bayesian inference using imprecise probability with sets of priors (IPSP) with interval continuous-time Markov chain (CTMC) model checking supports the online robust quantitative verification and reconfiguration of autonomous systems under parametric uncertainty.

The interval CTMCs, which are synthesised from the parametric CTMC model, are then continually verified by the PRISM-PSY Model Checker 22, to compute value intervals for key system properties. As shown in Fig. 1 and illustrated in the next section, these properties range from dependability (e.g., safety, reliability and availability)23 and performance (e.g., response time and throughput) properties to resource use and system utility. Finally, changes in the value ranges of these properties may prompt the dynamic reconfiguration of the system by a Controller module responsible for ensuring that the system requirements are satisfied at all times.
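The online cycle described above (monitor, estimate, generate and verify, reconfigure) can be summarised as a loop. The sketch below is a hypothetical skeleton, not the framework's implementation: `estimate`, `check` and `decide` are placeholder callables standing in for the BIPP/IPSP Estimators, the Model Generator plus PRISM-PSY Model Checker, and the Controller, respectively, and none of these names comes from the framework's code.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Interval = Tuple[float, float]
Observations = Dict[str, List[float]]   # hypothetical: monitored event -> observed data
RateBounds = Dict[str, Interval]        # transition rate name -> (lower, upper)
PropertyBounds = Dict[str, Interval]    # property name -> (lower, upper)


def verification_step(
    observations: Observations,
    configurations: Sequence[dict],
    estimate: Callable[[Observations], RateBounds],
    check: Callable[[RateBounds, dict], PropertyBounds],
    decide: Callable[[List[PropertyBounds]], int],
) -> int:
    """Run one monitor -> estimate -> verify -> reconfigure cycle.

    Returns the index of the configuration selected by `decide`.
    """
    # Learn interval estimates for the monitored transition rates (estimator role).
    rate_bounds = estimate(observations)
    # Instantiate and verify one interval CTMC per candidate configuration
    # (Model Generator + model checker role).
    results = [check(rate_bounds, cfg) for cfg in configurations]
    # Pick a configuration from the property intervals (Controller role).
    return decide(results)


# Hypothetical stubs, for illustration only.
def estimate(obs: Observations) -> RateBounds:
    return {name: (min(vals), max(vals)) for name, vals in obs.items()}


def check(rates: RateBounds, cfg: dict) -> PropertyBounds:
    lo, hi = rates["r"]
    return {"energy": (lo * cfg["weight"], hi * cfg["weight"])}


def decide(results: List[PropertyBounds]) -> int:
    # Choose the configuration with the smallest worst-case energy.
    return min(range(len(results)), key=lambda i: results[i]["energy"][1])


chosen = verification_step({"r": [1.0, 2.0]},
                           [{"weight": 3}, {"weight": 1}, {"weight": 2}],
                           estimate, check, decide)
# chosen is 1: its worst-case energy (2.0) is the smallest
```

Passing the three stages as callables keeps the loop independent of any particular estimator or model checker, mirroring the separation of components in Fig. 1.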

Offshore infrastructure maintenance

We demonstrate how our online robust verification and reconfiguration framework can support an AUV to execute a structural health inspection and cleaning mission of the substructure of an offshore wind farm. Similar scenarios for AUV use in remote, subsea environments have been described in other large-scale robotic demonstration projects, such as the PANDORA EU FP7 project24. Compared to remotely operated vehicles that must be tethered to expensive oceanographic surface vessels run by specialised personnel, AUVs bring important advantages, including reduced environmental footprint (since no surface vessel consuming fuel is needed), reduced cognitive fatigue for the involved personnel, increased frequency of mission execution, and reduced operational and maintenance cost.

The offshore wind farm comprises multiple floating wind turbines, with each turbine being a buoyant foundation structure secured to the sea bed with floating chains tethered to anchors weighing several tons. Wind farms with floating wind turbines offer increased wind exploitation (since they can be installed in deeper waters where winds are stronger and more consistent), reduced installation costs (since there is no need to build solid foundations), and reduced visual impact and impact on marine life (since they are further from the shore)25.

The AUV is deployed to collect data about the condition of k ≥ 1 floating chains to enable the post-mission identification of problems that could affect the structural integrity of the asset (floating chain). When the visual inspection of a chain is hindered due to accumulated biofouling or marine growth, the AUV can use its on-board high-pressure water jet to clean the chain and continue with the inspection task24.

The high degree of aleatoric uncertainty in navigation and in the perception of the marine environment means that the AUV might fail to clean a chain. This uncertainty originates from the dynamic conditions of the underwater medium, which include unexpected water perturbations, coupled with difficulties in scene understanding due to reduced visibility and the need to operate close to the floating chains. When a cleaning attempt fails, the AUV can retry the cleaning task or skip the chain and move to the next.

Stochastic mission modelling

Figure 2 shows the parametric CTMC model of the floating chain inspection and cleaning mission. The AUV inspects the ith chain with rate \(r^{\mathrm{inspect}}\), consuming energy \(e_{\mathrm{ins}}\). With probability \(p_{\mathrm{c}}\) the chain is clean and the AUV travels to the next chain with rate \(r^{\mathrm{travel}}\), consuming energy \(e_{\mathrm{t}}\); with probability \(1-p_{\mathrm{c}}\) the chain needs cleaning. When the AUV attempts the cleaning (\(x_i=1\)), the task succeeds with chain-dependent rate \(r_i^{\mathrm{clean}}\), causes catastrophic damage to the floating chain or to the AUV itself with rate \(r^{\mathrm{damage}}\), or fails with chain-dependent rate \(r_i^{\mathrm{fail}}\). If the cleaning fails, the AUV prepares to retry with known and fixed rate \(r^{\mathrm{prepare}}\), requiring energy \(e_{\mathrm{p}}\), and then either retries cleaning (\(x_i=1\)) or skips the current chain and moves to chain \(i+1\) (\(x_i=0\)). After executing the tasks on the kth chain, the AUV returns to its base and completes the mission.

CTMC of the floating chain cleaning and inspection mission, where \(e_1, e_2, \ldots, e_k\) represent the mean energy required to clean chains 1, 2, …, k, respectively. The AUV inspects a chain with rate \(r^{\mathrm{inspect}}\), consuming energy \(e_{\mathrm{ins}}\), prepares to retry the chain cleaning task with rate \(r^{\mathrm{prepare}}\), consuming energy \(e_{\mathrm{p}}\), and travels to the next chain with rate \(r^{\mathrm{travel}}\), consuming energy \(e_{\mathrm{t}}\). During an inspection, the chain is clean with probability \(p_{\mathrm{c}}\), and \(x_1, x_2, \ldots, x_k \in \{0, 1\}\) denote the control parameters used by the AUV controller to synthesise a plan. The rate \(r_i^{\mathrm{fail}}\) corresponds to a regular event and is therefore modelled using Bayesian inference using imprecise probability with sets of priors (IPSP) from (27) and (28). The rates \(r_i^{\mathrm{clean}}\) and \(r^{\mathrm{damage}}\) correspond to singular events and are thus modelled using Bayesian inference using partial priors (BIPP) from (7) and (8).

Since the AUV can fail to clean the ith chain with non-negligible probability, and possibly multiple times, this is a regular event whose transition rate \(r_i^{\mathrm{fail}}\) is modelled using the IPSP estimator from (27) and (28). In contrast, the AUV is expected not to cause catastrophic damage but, with extremely low probability, may do so once (after which the AUV and/or its mission are likely to be revised); likewise, the successful cleaning of a given chain occurs at most once. Thus, the corresponding transition rates \(r_i^{\mathrm{clean}}\) and \(r^{\mathrm{damage}}\) are modelled using the BIPP estimator from (7) and (8). The other transition rates, i.e., those for inspection (\(r^{\mathrm{inspect}}\)), travelling (\(r^{\mathrm{travel}}\)) and preparation (\(r^{\mathrm{prepare}}\)), are less influenced by the chain conditions and are therefore assumed to be known, e.g., from previous trials and missions; hence, we fixed these transition rates.
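Per Definition 1 in the Methods, a single cleaning attempt ends in success, damage or failure with probabilities proportional to \(r_i^{\mathrm{clean}}\), \(r^{\mathrm{damage}}\) and \(r_i^{\mathrm{fail}}\). A minimal sketch, assuming interval estimates for these three rates (the numbers below are hypothetical, not taken from the mission), bounds the probability that one attempt succeeds. Because this ratio increases with \(r_i^{\mathrm{clean}}\) and decreases with each competing rate, its extremes lie at the interval endpoints; properties of the full mission model are generally not monotone in this simple way, which is why an interval CTMC model checker is needed.

```python
from typing import Tuple

Interval = Tuple[float, float]


def attempt_success_bounds(r_clean: Interval, r_damage: Interval,
                           r_fail: Interval) -> Interval:
    """Bounds on P(success) = r_clean / (r_clean + r_damage + r_fail) for one attempt.

    The ratio increases with r_clean and decreases with the competing rates, so the
    lower bound pairs the smallest r_clean with the largest competing rates, and the
    upper bound does the opposite.
    """
    def p(rc: float, rd: float, rf: float) -> float:
        return rc / (rc + rd + rf)

    lower = p(r_clean[0], r_damage[1], r_fail[1])
    upper = p(r_clean[1], r_damage[0], r_fail[0])
    return lower, upper


# Hypothetical interval estimates (rates per unit time), for illustration only.
lo, hi = attempt_success_bounds((0.8, 1.2), (1e-7, 2e-7), (0.1, 0.3))
print(f"P(attempt succeeds) in [{lo:.4f}, {hi:.4f}]")
```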

When cleaning is needed for the ith chain, the AUV controller synthesises a plan by determining the control parameters \(x_i, x_{i+1}, \ldots, x_k \in \{0, 1\}\) for all remaining chains i, i + 1, …, k so that the system requirements in Table 1 are satisfied.
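The plan space the controller searches over grows exponentially with the number of remaining chains: each of \(x_i, \ldots, x_k\) is 0 or 1, giving \(2^{k-i+1}\) candidate plans. A minimal sketch using Python's `itertools.product` enumerates them; the function name is hypothetical, and the optional filter keeps only plans with \(x_i = 1\), the restriction discussed in the robust verification results.

```python
from itertools import product
from typing import List, Tuple

Plan = Tuple[int, ...]  # control vector (x_i, ..., x_k)


def candidate_plans(i: int, k: int, require_current: bool = False) -> List[Plan]:
    """Enumerate all control vectors (x_i, ..., x_k) with each x_j in {0, 1}.

    There are 2 ** (k - i + 1) of them. With require_current=True, only plans that
    attempt to clean the current chain (x_i = 1) are kept, halving the count.
    """
    if not 1 <= i <= k:
        raise ValueError("chain index i must satisfy 1 <= i <= k")
    plans = list(product((0, 1), repeat=k - i + 1))
    if require_current:
        plans = [plan for plan in plans if plan[0] == 1]
    return plans


# Six chains (k = 6): number of plans remaining at chains 1 and 2.
print(len(candidate_plans(1, 6)), len(candidate_plans(2, 6)))  # prints: 64 32
```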

Robust verification results

We demonstrate our solution for robust verification and adaptation using a mission in which the AUV was deployed to inspect and, if needed, clean six chains placed in a hexagonal arrangement (Fig. 3). We used m = 3 and the BIPP estimator (7) and (8) for the transition rates \(r_i^{\mathrm{clean}}\) and \(r^{\mathrm{damage}}\), which correspond to singular events. For \(r_i^{\mathrm{clean}}\), we used \(\epsilon_1=0.12+\mathcal{U}(0,0.12)\), \(\theta_1=0.10+\mathcal{U}(0,0.001)\), \(\epsilon_2=0.90+\mathcal{U}(0,0.90)\), \(\theta_2=0.85+\mathcal{U}(0,0.0085)\), where \(\mathcal{U}(x,y)\) denotes a continuous uniform distribution with minimum value x and maximum value y. For \(r^{\mathrm{damage}}\), we used \(\epsilon_1=10^{-8}+\mathcal{U}(0,10^{-8})\), \(\theta_1=0.88+\mathcal{U}(0,0.0088)\), \(\epsilon_2=10^{-7}+\mathcal{U}(0,10^{-7})\), \(\theta_2=0.10+\mathcal{U}(0,0.001)\). For \(r_i^{\mathrm{fail}}\), we used \(t^{(0)}=[10+\mathcal{U}(0,10)]\) and \(\lambda^{(0)}=[0.0163+\mathcal{U}(0,0.00163)]\). During the mission, the AUV performs model checking at every cleaning attempt, so that runtime observations are incorporated into its decision making; consequently, the plan synthesised at one chain is not necessarily followed at subsequent chains. Hence, the AUV only needs to check system configurations in which at least the current chain is to be cleaned, which halves the number of configurations to be checked (since configurations with \(x_i=0\) need not be checked). If none of the configurations with \(x_i=1\) satisfies the requirements from Table 1, the AUV skips the current chain and proceeds to inspect and clean the next chain.
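The perturbed prior parameters listed above can be drawn with Python's standard `random` module, as in the minimal sketch below. The dictionary keys (`eps1`, `theta1`, `t0`, `lambda0`, ...) are hypothetical names mirroring the symbols in the text, and we read each bracketed expression for \(t^{(0)}\) and \(\lambda^{(0)}\) as a single random draw; how BIPP (7)-(8) and IPSP (27)-(28) then consume these values is defined in the Methods and not reproduced here.

```python
import random
from typing import Dict


def sample_priors(rng: random.Random) -> Dict[str, Dict[str, float]]:
    """Draw one set of prior parameters, each perturbed as base + U(0, width)."""
    def jitter(base: float, width: float) -> float:
        # random.uniform(a, b) draws from the continuous uniform distribution on [a, b].
        return base + rng.uniform(0.0, width)

    return {
        "r_clean": {"eps1": jitter(0.12, 0.12), "theta1": jitter(0.10, 0.001),
                    "eps2": jitter(0.90, 0.90), "theta2": jitter(0.85, 0.0085)},
        "r_damage": {"eps1": jitter(1e-8, 1e-8), "theta1": jitter(0.88, 0.0088),
                     "eps2": jitter(1e-7, 1e-7), "theta2": jitter(0.10, 0.001)},
        "r_fail": {"t0": jitter(10.0, 10.0), "lambda0": jitter(0.0163, 0.00163)},
    }


# A seeded generator makes a simulated run reproducible.
priors = sample_priors(random.Random(0))
```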

a Simulated AUV mission involving the inspection of six wind farm chains and, if required, their cleaning. i Start of mission; ii cleaning chain 3; iii cleaning final chain. At this point, the AUV has cleaned three chains, skipped one, and found one chain that did not require cleaning. b Plots of the outcome of the model checking carried out by the AUV at chain 3. Each row shows the configurations against the requirements. i–iii Results during the first attempt at cleaning chain 3. iv–vi Results during the second attempt at cleaning. vii–ix Results at the third and successful attempt at cleaning the chain. Configurations marked with a red cross violate the energy requirement R2, while configurations highlighted in yellow denote the chosen configuration for the corresponding attempt.

If a cleaning attempt at chain i fails, the AUV integrates this observation into the IPSP estimator (27) and (28), and performs model checking to determine whether to retry the cleaning or skip the chain. Since the AUV has consumed energy for the failed cleaning attempt, the energy available is reduced accordingly, which in turn can reduce the number of possible system configurations that can be employed and need checking. The observation of a failed attempt reduces the lower bound for the reliability of cleaning chain i, and may result in a violation of the reliability requirement R1 (Table 1), which may further reduce the number of feasible configurations. If the AUV fails to clean chain i repeatedly, this lower bound will continue to decrease, potentially leaving the AUV with no feasible configuration and forcing it to skip the current chain. Although skipping a chain decreases the overall risk of a catastrophic failure (as the number of cleaning attempts is reduced), leaving uncleaned chains will incur additional cost, as a new inspection mission will need to be launched, e.g., using another AUV or human personnel.
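To illustrate why each failed attempt shifts the learnt bounds, here is a minimal sketch of imprecise Bayesian updating for a Poisson event rate. It is not IPSP's exact formulation, which is given by (27) and (28): it assumes the textbook conjugate Gamma prior with prior strength t0 and prior mean rate λ0, each ranging over an interval (our reading of the roles of \(t^{(0)}\) and \(\lambda^{(0)}\)), and reports the range of posterior mean rates over that set of priors. The prior box and observations in the example are hypothetical.

```python
from itertools import product
from typing import Tuple

Interval = Tuple[float, float]


def posterior_rate_bounds(t0: Interval, lam0: Interval,
                          n_events: int, exposure: float) -> Interval:
    """Range of posterior mean rates over a box of conjugate Gamma priors.

    A Gamma prior with shape t0 * lam0 and rate t0 has mean lam0; after observing
    n_events in `exposure` time units, its posterior mean is
    (t0 * lam0 + n_events) / (t0 + exposure). For fixed lam0 this is monotone in t0,
    and for fixed t0 it increases with lam0, so min and max occur at box corners.
    """
    means = [(a * b + n_events) / (a + exposure) for a, b in product(t0, lam0)]
    return min(means), max(means)


# Hypothetical prior box: one failure observed after extra exposure raises the bounds.
prior_t0, prior_lam0 = (10.0, 20.0), (0.015, 0.02)
before = posterior_rate_bounds(prior_t0, prior_lam0, n_events=0, exposure=1.0)
after = posterior_rate_bounds(prior_t0, prior_lam0, n_events=1, exposure=2.0)
print(before, after)
```

Higher bounds on the failure rate lower the chance that a given attempt succeeds, which is consistent with the decreasing reliability lower bound described above.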

Figure 3 shows a simulated run of the AUV performing an inspection and cleaning mission (Fig. 3a). At each chain that requires cleaning, the AUV decides whether to attempt to clean or skip the current chain. Figure 3b provides details of the probabilistic model checking carried out during the inspection and cleaning of chain 3 (Fig. 3a, ii). Overall, the AUV made three attempts to clean chain 3, succeeding on the third.

The results of the model checking analyses for these attempts are shown in successive columns in Fig. 3b, while each row depicts the analysis of one of the requirements from Table 1. A system configuration is feasible if it satisfies requirements R1 (the AUV will not encounter a catastrophic failure, with a probability of at least 0.95) and R2 (the expected energy consumption does not exceed the remaining AUV energy). If multiple configurations satisfy requirements R1 and R2, the winner is the configuration that maximises the number of chains cleaned (requirement R3). If there is still a tie, the configuration is chosen randomly from those that clean the most chains.
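The selection rule just described can be sketched as follows. This is a minimal illustration, assuming that robust feasibility compares the pessimistic end of each verified interval with the requirement (the lower bound of the R1 probability, the upper bound of the expected energy for R2), and that the number of chains cleaned is the number of \(x_j = 1\) entries in a plan; the `ConfigResult` type, its fields and `select_plan` are hypothetical names.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple

Interval = Tuple[float, float]


@dataclass(frozen=True)
class ConfigResult:
    plan: Tuple[int, ...]        # control vector (x_i, ..., x_k)
    p_no_failure: Interval       # verified interval for the R1 probability
    energy: Interval             # verified interval for the expected energy (R2)


def select_plan(results: List[ConfigResult], remaining_energy: float,
                rng: random.Random, p_min: float = 0.95) -> Optional[ConfigResult]:
    """Return the chosen configuration, or None if no configuration is feasible."""
    # R1 and R2, checked against the worst case of each interval.
    feasible = [r for r in results
                if r.p_no_failure[0] >= p_min and r.energy[1] <= remaining_energy]
    if not feasible:
        return None  # no feasible plan: the AUV skips the current chain
    # R3: maximise the number of chains the plan cleans.
    most = max(sum(r.plan) for r in feasible)
    tied = [r for r in feasible if sum(r.plan) == most]
    # Remaining ties are broken uniformly at random.
    return rng.choice(tied)
```

Returning `None` rather than raising keeps the "skip the current chain" outcome an ordinary result of the decision step.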

In the AUV's first attempt at chain 3 (Fig. 3b (i–iii)), all the configurations are feasible, so configuration 1 (highlighted, and corresponding to the highest number of chains cleaned) is selected. This attempt fails, and a second assessment is made (Fig. 3b (iv–vi)). This time, only system configurations 2–8 are feasible, and as configurations 2, 3 and 5 maximise R3, a configuration is chosen randomly from this subset (in this case, configuration 3). This attempt also fails, and on the third attempt (Fig. 3b (vii–ix)), only configurations 4–8 are feasible, with configuration 5 maximising R3; the AUV adopts this configuration and succeeds in cleaning the chain.

In this AUV mission instance, the AUV controller is concerned with cleaning the maximum number of chains and ensuring the AUV returns safely. In other variants of our AUV mission, the system properties from requirements R1 and R2 could also be used to determine a winning configuration in the event of a tie between multiple feasible configurations. For example, it might be preferable for the AUV to minimise its energy consumption; the energy consumption from requirement R2 can then be used as a tie-breaking metric.
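The energy-based tie-breaker suggested here could replace the random choice in such variants. A minimal sketch, assuming plans are compared by the upper bound of their verified expected-energy interval and then by its lower bound; the function name and data layout are hypothetical.

```python
from typing import Dict, Sequence, Tuple

Plan = Tuple[int, ...]
Interval = Tuple[float, float]


def break_tie_by_energy(tied: Sequence[Plan], energy: Dict[Plan, Interval]) -> Plan:
    """Pick, among equally good plans, the one with the lowest worst-case expected energy.

    Plans are compared by the upper bound of their expected-energy interval first,
    then by the lower bound; any remaining tie keeps the earliest plan in `tied`.
    """
    return min(tied, key=lambda plan: (energy[plan][1], energy[plan][0]))
```

Comparing the upper bound first favours the plan whose energy use is best in the worst case, in line with the robust treatment of R2.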

We also measured the overheads associated with executing the online verification process. Figure 4 shows the computation overheads incurred by the RBV framework when executing the AUV mission. The values comprising each boxplot were collected over 10 independent runs. Each value denotes the time consumed by a single online robust quantitative verification and reconfiguration step when the AUV attempts to clean the indicated chain. For instance, the boxplot labelled "Chain 1" ("Chain 2") on the x-axis corresponds to the AUV attempting to clean chain 1 (chain 2), and to the time consumed by the RBV framework to analyse 64 (32) configurations. Overall, the time overheads are reasonable for the purpose of this mission. Since the AUV has more configurations to analyse at the earlier stages of the mission (e.g., when inspecting chain 1), the results follow the anticipated exponential pattern: the number of configurations halves each time the AUV progresses further into the mission and moves to the next chain. Another interesting observation is that each boxplot is short, i.e., the lower and upper quartiles are very close, indicating that the execution time of the RBV framework is consistent across runs.

The consumed time comprises (1) the time required to compute the posterior estimate bounds of the modelled transition rates \(r_i^{\mathrm{clean}}\) and \(r_i^{\mathrm{fail}}\) (1 ≤ i ≤ k) and \(r^{\mathrm{damage}}\), using the BIPP and IPSP estimators; (2) the time required to compute the value intervals for requirements R1 and R2 using the probabilistic model checker PRISM-PSY 22; and (3) the time needed to find the best configuration satisfying requirements R1 and R2 and maximising requirement R3. Our empirical analysis provided evidence that the execution of the BIPP and IPSP estimators and the selection of the best configuration have negligible overheads, with almost all time incurred by PRISM-PSY. This outcome is not surprising and is aligned with the results reported in22 concerning the execution overheads of the model checker.

Additional information about the offshore infrastructure maintenance experiments, including details about the experimental methodology, is provided in Supplementary Methods 2 of the Supplementary material. The simulator used for the AUV mission, developed on top of the open-source MOOS-IvP middleware26, and a video showing the execution of this AUV mission instance are available at http://github.com/gerasimou/RBV.

Discussion

Unlike single-point estimators of Markov model parameters27,28,29,30, our Bayesian framework provides interval estimates that capture the inherent uncertainty of these parameters, enabling the robust quantitative verification of systems such as autonomous robots. Through its ability to exploit prior knowledge, the framework differs fundamentally from, and is superior to, a recently introduced approach to synthesising intervals for unknown transition parameters based on the frequentist theory of simultaneous confidence intervals15,31,32. Furthermore, instead of applying the same estimator to all Markov model transition parameters like existing approaches, our framework is the first to handle parameters corresponding to singular and regular events differently. This is an essential distinction, especially for the former type of parameter, for which the absence of observations violates a key premise of existing estimators. Our BIPP estimator avoids this invalid premise, and computes two-sided bounded estimates for singular CTMC transition rates, a considerable extension of our preliminary work to devise one-sided bounded estimates for the singular transition probabilities of discrete-time Markov chains33.

The proposed Bayesian framework is underpinned by the theoretical foundations of imprecise probabilities34,35 and Conservative Bayesian Inference (CBI)36,37,38 integrated with recent advances in the verification of interval CTMCs22. In particular, our BIPP theorems for singular events extend CBI significantly in several ways. First, BIPP operates in the continuous domain for a Poisson process, while previous CBI theorems are applicable to Bernoulli processes in the discrete domain. As such, BIPP enables the runtime quantitative verification of interval CTMCs, and thus the analysis of important properties that are not captured by discrete-time Markov models. Second, CBI is one-sided (upper) bounded, and therefore only supports the analysis of undesirable singular events (e.g., catastrophic failures). In contrast, BIPP provides two-sided bounded estimates, therefore also enabling the analysis of "positive" singular events (e.g., the completion of difficult one-off tasks). Finally, BIPP can operate with any number of confidence bounds as priors, which greatly increases the flexibility of exploiting different types of prior knowledge.

As illustrated by its application to an AUV infrastructure maintenance mission, our robust quantitative verification framework removes the need for precise prior beliefs, which are typically unavailable in many real-world verification tasks that require Bayesian inference. Instead, the framework enables the exploitation of Bayesian combinations of partial or imperfect prior knowledge, which it uses to derive informed estimation errors (i.e., intervals) for the predicted model parameters. Combined with existing techniques for obtaining this prior knowledge, e.g., the Delphi method and its variants39 or reference class forecasting40, the framework increases the trustworthiness of Bayesian inference in highly uncertain scenarios such as those encountered in the verification of autonomous robots.

Recent survey papers41,42,43 that discuss in depth the challenges and opportunities in the field of autonomous robot verification reveal a common taxonomy organised around two key dimensions. The first dimension centres on the specification of the properties under verification, which includes various types of temporal logic languages41. The second dimension pertains to how system behaviours are modelled. In this regard, formal models such as Belief-Desire-Intention (BDI) models, Petri nets and finite state machines, along with their diverse extensions, have emerged as popular approaches to capturing the intricate dynamics of autonomous systems. Our approach falls within the category of methods that use continuous stochastic logic (CSL) and CTMCs for the verification of robots. However, unlike the existing methods from this category44,45, we introduce treatments of model-parameter uncertainty via robust Bayesian learning methods, and integrate them with recent research on interval CTMC model checking.

Another important approach for verifying the behaviour of autonomous agents under uncertainty uses hidden Markov models (HMMs)46,47,48. HMM-based verification supports the analysis of stochastic systems whose true state is not observable, and can only be estimated (with aleatoric uncertainty given by a predefined probability distribution) through monitoring a separate process whose observable state depends on the unknown state of the system. In contrast, our verification framework supports the analysis of autonomous agents whose true state is observable but for which the rates of transition between these known states are affected by epistemic uncertainty and need to be learnt from system observations (as shown in Fig. 1). As such, HMM-based verification and our robust verification framework differ substantially by tackling different types of autonomous agent uncertainty. Because autonomous agents may be affected by both types of uncertainty, the complementarity of the two verification approaches can actually be leveraged by using our BIPP and IPSP Bayesian estimators in conjunction with HMM-based verification, i.e., to learn the transition rates associated with continuous-time HMMs that model the behaviour of an autonomous agent. Nevertheless, achieving this integration will first require the development of generic continuous-time HMM verification techniques since, to the best of our knowledge, only verification techniques and tools for the verification of discrete-time HMMs are currently available.

Although our method demonstrates promising potential, it is not without limitations. One limitation is scalability: as the complexity of the robot's behaviour and of its environment grows, the number of unknown parameters to be estimated at runtime may increase, leading to increased computational overheads for our Bayesian estimators. Additionally, the method requires a certain level of expertise to construct the underlying CTMC model structure. This demands an understanding of both the robot's dynamics and its environment in order to model them as a CTMC, making the approach less accessible to those without specialised knowledge. Last but not least, a challenge inherent to all Bayesian methods involves the acquisition of appropriate priors. While our robust Bayesian estimators mitigate this issue by eliminating the need for complete and precise prior knowledge, establishing the required partial and vague priors can still pose challenges. These limitations suggest important areas for future work.

Methods

Quantitative verification

Quantitative verification is a mathematically based technique for analysing the correctness, reliability, performance and other key properties of systems with stochastic behaviour49,50. The technique captures this behaviour in Markov models, formalises the properties of interest as probabilistic temporal logic formulae over these models, and employs efficient algorithms for their analysis. Examples of such properties include the probability of mission failure for an autonomous robot, and the expected battery energy required to complete a robotic mission.

In this paper, we focus on the quantitative verification of continuous-time Markov chains (CTMCs). CTMCs are Markov models for continuous-time stochastic processes over countable state spaces, comprising (i) a finite set of states corresponding to real-world states of the system that are relevant for the analysed properties; and (ii) the rates of transition between these states. We use the following definition adapted from the probabilistic model checking literature49,50.

Definition 1

A continuous-time Markov chain is a tuple

\(\mathcal{M}=(S,s_0,\mathbf{R})\)

where S is a finite set of states, \(s_0\in S\) is the initial state, and \(\mathbf{R}:S\times S\to\mathbb{R}\) is a transition rate matrix such that the probability that the CTMC will leave state \(s_i\in S\) within \(t>0\) time units is \(1-e^{-t\cdot\sum_{s_k\in S\setminus\{s_i\}}\mathbf{R}(s_i,s_k)}\) and the probability that the new state is \(s_j\in S\setminus\{s_i\}\) is \(p_{ij}=\mathbf{R}(s_i,s_j)\,/\,\sum_{s_k\in S\setminus\{s_i\}}\mathbf{R}(s_i,s_k)\).
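Definition 1 translates directly into code. A minimal sketch, assuming the rate matrix is stored sparsely as a dictionary keyed by (source, target) state pairs; the helper names and the example states and rates are hypothetical.

```python
import math
from typing import Dict, Tuple

State = str
RateMatrix = Dict[Tuple[State, State], float]  # sparse R: missing entries are 0


def exit_rate(R: RateMatrix, s: State) -> float:
    """Sum of R(s, s_k) over all states s_k other than s."""
    return sum(rate for (src, dst), rate in R.items() if src == s and dst != s)


def prob_leave_within(R: RateMatrix, s: State, t: float) -> float:
    """Probability of leaving s within t > 0 time units: 1 - exp(-t * exit rate)."""
    return 1.0 - math.exp(-t * exit_rate(R, s))


def jump_prob(R: RateMatrix, s_i: State, s_j: State) -> float:
    """p_ij = R(s_i, s_j) / exit rate of s_i, defined for s_j != s_i."""
    if s_j == s_i:
        raise ValueError("p_ij is only defined for s_j != s_i")
    total = exit_rate(R, s_i)
    if total == 0:
        raise ValueError("s_i is absorbing: it has no outgoing transitions")
    return R.get((s_i, s_j), 0.0) / total


# Hypothetical cleaning state with two outgoing transitions (rates per unit time).
R = {("cleaning", "cleaned"): 1.5, ("cleaning", "failed"): 0.5}
print(exit_rate(R, "cleaning"), jump_prob(R, "cleaning", "cleaned"))  # prints: 2.0 0.75
```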

The range of properties that can be verified using CTMCs can be extended by annotating the states and transitions with non-negative quantities called rewards.

Definition 2

A reward structure over a CTMC \(\mathcal{M}=(S,s_0,\mathbf{R})\) is a pair of functions \((\underline{\rho},\boldsymbol{\iota})\) such that \(\underline{\rho}:S\to\mathbb{R}_{\ge 0}\) is a state reward function (a vector), and \(\boldsymbol{\iota}:S\times S\to\mathbb{R}_{\ge 0}\) is a transition reward function (a matrix).

CTMCs support the verification of quantitative properties expressed in CSL51 extended with rewards50.

Definition 3

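Given a set of atomic propositions AP, a ∈ AP, p ∈ [0, 1], \(I\subseteq\mathbb{R}_{\ge 0}\), \(r,t\in\mathbb{R}_{\ge 0}\) and ⋈ ∈ {≥, >, <, ≤}, a CSL formula Φ is defined by the grammar:

\[\begin{aligned}\Phi ::=\; & \mathit{true} \mid a \mid \neg\Phi \mid \Phi\wedge\Phi \mid \mathcal{P}_{\bowtie p}[X\,\Phi] \mid \mathcal{P}_{\bowtie p}[\Phi\,U^{I}\,\Phi] \mid \mathcal{S}_{\bowtie p}[\Phi]\\ & \mid \mathcal{R}_{\bowtie r}[I^{=t}] \mid \mathcal{R}_{\bowtie r}[C^{\le t}] \mid \mathcal{R}_{\bowtie r}[F\,\Phi] \mid \mathcal{R}_{\bowtie r}[S]\end{aligned}\]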

Given a CTMC \(\mathcal{M}=(S,s_0,\mathbf{R})\) with states labelled with atomic propositions from AP by a function \(L:S\to 2^{AP}\), and a reward structure \((\underline{\rho},\boldsymbol{\iota})\) over \(\mathcal{M}\), the CSL semantics is defined with a satisfaction relation ⊨ over the states and paths (i.e., feasible sequences of successive states) of \(\mathcal{M}\)49. The notation s ⊨ Φ means “Φ is satisfied in state s”. For any state s ∈ S, we have:

- s ⊨ true; s ⊨ a iff a ∈ L(s); s ⊨ ¬Φ iff ¬(s ⊨ Φ); and s ⊨ Φ1 ∧ Φ2 iff s ⊨ Φ1 and s ⊨ Φ2;
- \(s\vDash\mathcal{P}_{\bowtie p}[X\,\Phi]\) iff the probability x that Φ holds in the state following s satisfies x ⋈ p (probabilistic next formula);
- \(s\vDash\mathcal{P}_{\bowtie p}[\Phi_1\,U^{I}\,\Phi_2]\) iff, across all paths starting at s, the probability x of going through only states where Φ1 holds until reaching a state where Φ2 holds at a time t ∈ I satisfies x ⋈ p (probabilistic until formula);
- \(s\vDash\mathcal{S}_{\bowtie p}[\Phi]\) iff, having started in state s, the probability x of \(\mathcal{M}\) being in a state where Φ holds in the long run satisfies x ⋈ p (probabilistic steady-state formula);
- the instantaneous (\(\mathcal{R}_{\bowtie r}[I^{=t}]\)), cumulative (\(\mathcal{R}_{\bowtie r}[C^{\le t}]\)), future-state (\(\mathcal{R}_{\bowtie r}[F\,\Phi]\)) and steady-state (\(\mathcal{R}_{\bowtie r}[S]\)) reward formulae hold iff, having started in state s, the expected reward x at time instant t, cumulated up to time t, cumulated until reaching a state where Φ holds, and achieved at steady state, respectively, satisfies x ⋈ r.
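To illustrate how the probability associated with a time-bounded until formula \(\mathcal{P}_{=?}[\mathit{true}\,U^{[0,t]}\,\Phi_2]\) can be computed, the sketch below uses uniformisation, a standard technique for transient CTMC analysis: goal states are made absorbing, the CTMC is converted into a discrete-time chain with uniformisation rate q, and the discrete-time distributions are weighted by Poisson probabilities. It is a simplified sketch under our own assumptions (a hypothetical function name, and naive Poisson weights that underflow for large q·t), not the implementation used by PRISM or Storm, which typically compute these weights with the Fox–Glynn algorithm.

```python
import math

def bounded_reach_prob(R, init, goal, t, trunc_err=1e-12, max_terms=100_000):
    """Probability of reaching a state in `goal` within t time units from `init`,
    i.e. P=?[ true U^[0,t] goal ], computed by uniformisation.

    R: rate matrix as a list of lists (diagonal entries are ignored).
    """
    n = len(R)
    # Make goal states absorbing: reaching them by time t <=> being in them at time t.
    Q = [[0.0 if (i in goal or i == j) else R[i][j] for j in range(n)] for i in range(n)]
    exit_rates = [sum(row) for row in Q]
    q = max(exit_rates) or 1.0  # uniformisation rate; any q >= every exit rate works
    # Uniformised DTMC: P = I + Q/q (the generator's diagonal is -exit_rates).
    P = [[Q[i][j] / q + (1.0 - exit_rates[i] / q if i == j else 0.0) for j in range(n)]
         for i in range(n)]
    dist = [1.0 if i == init else 0.0 for i in range(n)]
    weight = math.exp(-q * t)  # Poisson(0; q*t); naive, underflows for large q*t
    prob, covered = 0.0, 0.0
    for k in range(1, max_terms + 1):
        prob += weight * sum(dist[g] for g in goal)
        covered += weight
        if covered >= 1.0 - trunc_err:  # remaining Poisson tail is negligible
            break
        weight *= q * t / k  # Poisson(k; q*t) from Poisson(k-1; q*t)
        dist = [sum(dist[i] * P[i][j] for i in range(n)) for j in range(n)]
    return prob
```

For a single transition of rate 2 out of the initial state, bounded_reach_prob([[0.0, 2.0], [0.0, 0.0]], 0, {1}, 0.5) returns a value within the truncation error of \(1-e^{-1}\), the exponential sojourn-time probability from Definition 1.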

Probabilistic model checkers such as PRISM52 and Storm53 use efficient analysis techniques to compute the actual probabilities and expected rewards associated with probabilistic and reward formulae, respectively. The formulae are then verified by comparing the computed values to the bounds p and r. Furthermore, the extended CSL syntax \(\mathcal{P}_{=?}[X\,\Phi]\), \(\mathcal{P}_{=?}[\Phi_1\,U^{I}\,\Phi_2]\), \(\mathcal{R}_{=?}[I^{=t}]\), etc. can be used to obtain these values from the model checkers.
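Similarly, the value of a future-state reward formula \(\mathcal{R}_{=?}[F\,\Phi]\) satisfies a simple recurrence over the jump chain of Definition 1: each visit to a non-goal state s contributes its state reward over the mean sojourn time \(1/\sum_{k\ne s}\mathbf{R}(s,k)\), plus the reward of the transition taken and the value of the successor state. The sketch below applies plain value iteration to this recurrence, assuming that the goal is reached with probability 1; the function name and the list-based encoding of \((\underline{\rho},\boldsymbol{\iota})\) are hypothetical, and production tools generally solve the corresponding linear equation system with more efficient methods.

```python
def expected_reward_to_reach(R, rho, iota, goal, tol=1e-12, max_iter=100_000):
    """R=?[ F goal ]: expected reward accumulated until a goal state is reached.

    rho[s]: state reward earned per time unit spent in s.
    iota[s][j]: reward earned each time the transition s -> j is taken.
    Assumes every non-goal state has an outgoing transition and reaches the
    goal with probability 1 (otherwise the expected reward is infinite).
    """
    n = len(R)
    exits = [sum(R[s][j] for j in range(n) if j != s) for s in range(n)]
    x = [0.0] * n
    for _ in range(max_iter):
        new = [0.0] * n
        for s in range(n):
            if s in goal:
                continue  # nothing more is accumulated once the goal is reached
            # rho[s] over the mean sojourn time 1/exits[s], plus the expected
            # transition reward and the expected value of the successor state
            new[s] = rho[s] / exits[s] + sum(
                (R[s][j] / exits[s]) * (iota[s][j] + x[j]) for j in range(n) if j != s)
        if max(abs(a - b) for a, b in zip(new, x)) < tol:
            return new
        x = new
    raise RuntimeError("value iteration did not converge")
```

With rho set to 1 in every non-goal state and iota set to 0, the result is the expected time to reach the goal, e.g. 1/2 + 1/4 = 0.75 for a chain 0 → 1 → 2 with rates 2 and 4.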

While the transition rates of the CTMCs verified in this way must be known and constant, advanced quantitative verification techniques21 support the analysis of CTMCs whose transition rates are specified as intervals. These techniques have been used to synthesise CTMCs corresponding to process configurations and system designs that satisfy quantitative constraints and optimisation criteria22,32,54, under the assumption that the bounded rate intervals are known. Here we introduce a Bayesian framework for computing these intervals in ways that reflect the parametric uncertainty of real-world systems such as autonomous robots.

Bayesian learning of CTMC transition rates

Given two states si and sj of a CTMC such that transitions from si to sj are possible and occur with rate λ, each transition from si to sj is independent of how state si was reached (the Markov property). Furthermore, while the CTMC is in state si, transitions to sj occur as a homogeneous Poisson process of rate λ. Accordingly, the likelihood that “data” collected by observing the CTMC shows n such transitions occurring within a combined time t spent in state si is given by the conditional probability:

\[l(\lambda)=\Pr(\text{data}\mid\lambda)=\frac{(\lambda t)^{n}}{n!}\,e^{-\lambda t}.\tag{2}\]

In practice, the rate λ is typically unknown, but prior beliefs about its value are available (e.g., from domain experts or from past missions performed by the system modelled by the CTMC) in the form of a probability (density or mass) function f(λ). In this common scenario, Bayes' theorem can be used to derive a posterior probability function that combines the likelihood l(λ) and the prior f(λ) into a better estimate for λ at time t (with F denoting the cumulative distribution function corresponding to f):

\[f(\lambda\mid\text{data})=\frac{l(\lambda)\,f(\lambda)}{\int_{0}^{\infty}l(\lambda)\,\mathrm{d}F(\lambda)},\tag{3}\]

where the Lebesgue-Stieltjes integral in the denominator ensures that f(λ | data) is a probability function. We use Lebesgue-Stieltjes integration to cover both continuous and discrete prior distributions f(λ) compactly, as these integrals reduce to sums for discrete distributions. We calculate the posterior estimate for the rate λ at time t as the expectation of (3):

\[\lambda^{(t)}=\mathbb{E}(\Lambda\mid\text{data})=\frac{\int_{0}^{\infty}\lambda\,l(\lambda)\,\mathrm{d}F(\lambda)}{\int_{0}^{\infty}l(\lambda)\,\mathrm{d}F(\lambda)},\tag{4}\]

where we use capital letters for random variables and lower case for their realisations.
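For a discrete prior, the Lebesgue-Stieltjes integrals in (3) and (4) reduce to sums, so the posterior estimate can be computed directly. The sketch below assumes the Poisson likelihood of observing n transitions in a total time t; the function names and the two-point prior are made-up illustrations. The n! factor cancels in the ratio, so it only matters for numerical range.

```python
import math

def poisson_likelihood(lam, n, t):
    """Probability of observing n transitions in a total time t at rate lam.

    The n! term cancels in the posterior ratio below; for very large n
    (math.factorial(n) too large for a float) a log-space version is needed.
    """
    return (lam * t) ** n / math.factorial(n) * math.exp(-lam * t)

def posterior_mean(points, masses, n, t):
    """Posterior rate estimate for a discrete prior Pr(lambda = points[k]) = masses[k]:
    the integrals of the posterior expectation become sums over the support points."""
    weights = [p * poisson_likelihood(lam, n, t) for lam, p in zip(points, masses)]
    return sum(lam * w for lam, w in zip(points, weights)) / sum(weights)

# Hypothetical prior: rate 1.0 or 2.0 per time unit, equally likely;
# no transitions observed during 1 time unit.
print(posterior_mean([1.0, 2.0], [0.5, 0.5], n=0, t=1.0))  # about 1.269
```

Observing no transitions shifts the estimate from the prior mean of 1.5 towards the smaller rate, because the likelihood \(e^{-\lambda t}\) favours it.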

Interval Bayesian inference for singular events

In the autonomous-robot missions considered in our paper, certain events are extremely rare, and treated as unique from a modelling viewpoint. These events include major failures (after each of which the system is modified to remove or mitigate the cause of the failure), and the successful completion of difficult one-off tasks. Using Bayesian inference to estimate the CTMC transition rates associated with such events is challenging because, with no observations of these events, the posterior estimate is highly sensitive to the choice of a suitable prior distribution. Furthermore, only limited domain knowledge is often available to select and justify a prior distribution for these singular events.

To address this challenge, we develop a Bayesian inference using partial priors (BIPP) estimator that requires only limited, partial prior knowledge instead of the complete prior distribution typically needed for Bayesian inference. For one-off events, such knowledge is both more likely to be available and easier to justify. BIPP provides bounded posterior estimates that are robust in the sense that the ground truth rate values are within the estimated intervals.

To derive the BIPP estimator, we note that for one-off events the likelihood (2) becomes

\[l(\lambda)=e^{-\lambda t},\tag{5}\]

because n = 0. Instead of a prior distribution f(λ) (required to compute the posterior expectation (4)), we assume that we only have limited partial knowledge consisting of m ≥ 2 confidence bounds on f(λ):

\[\Pr(\epsilon_{i-1}<\lambda\le\epsilon_i)=\theta_i,\tag{6}\]

where 1 ≤ i ≤ m, θi > 0, and \(\sum_{i=1}^{m}\theta_i=1\). The use of such bounds is a common practice for safety-critical systems. As an example, the IEC 61508 safety standard55 defines safety integrity levels (SILs) for the critical functions of a system based on the bounds for their probability of failure on demand (pfd): pfd between \(10^{-2}\) and \(10^{-1}\) corresponds to SIL 1, pfd between \(10^{-3}\) and \(10^{-2}\) corresponds to SIL 2, etc.; and testing can be used to estimate the probabilities that a critical function has different SILs. We note that Pr(λ ≥ ϵ0) = Pr(λ ≤ ϵm) = 1 and that, when no specific information is available, we can use ϵ0 = 0 and ϵm = +∞.
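Theorem 1 below ranges over every prior that satisfies (6). As a small aid for experimenting with such priors, the hypothetical helper below checks whether a given discrete prior meets the confidence bounds, taking the intervals as (ϵi−1, ϵi] as in Proposition 1 and allowing ϵm = +∞; the values of EPS and THETA are illustrative and not taken from the paper's experiments.

```python
import math

def satisfies_partial_prior(points, masses, eps, theta, tol=1e-9):
    """Check whether the discrete prior Pr(lambda = points[k]) = masses[k]
    meets the bounds Pr(eps[i-1] < lambda <= eps[i]) = theta[i-1], i = 1..m.

    eps = [eps_0, ..., eps_m] (eps_m may be math.inf); theta = [theta_1, ..., theta_m].
    """
    m = len(theta)
    if m < 2 or len(eps) != m + 1 or abs(sum(theta) - 1.0) > tol:
        raise ValueError("need m >= 2 bounds, m + 1 limits and theta summing to 1")
    if abs(sum(masses) - 1.0) > tol:
        return False  # not a probability distribution
    for i in range(1, m + 1):
        mass = sum(p for lam, p in zip(points, masses) if eps[i - 1] < lam <= eps[i])
        if abs(mass - theta[i - 1]) > tol:
            return False
    return True

# Illustrative partial prior knowledge (values chosen for this example only).
EPS = [0.0, 1 / 5000, 1 / 1000, math.inf]
THETA = [0.3, 0.3, 0.4]
print(satisfies_partial_prior([1e-4, 5e-4, 0.01], [0.3, 0.3, 0.4], EPS, THETA))  # True
```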

The partial knowledge encoded by the constraints (6) is far from a complete prior distribution: an infinite number of distributions f(λ) satisfy these constraints, and the result below provides bounds for the posterior estimate rate (4) across all of these distributions.

Theorem 1

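The set \(\mathcal{S}_\lambda\) of posterior estimate rates (4) computed for all prior distributions f(λ) that satisfy (6) has an infimum λl and a supremum λu given by:

\[\lambda_l=\inf_{x_1,\ldots,x_m\in[0,1]}\frac{\sum_{i=1}^{m}\theta_i\left(x_i\,\epsilon_{i-1}e^{-t\epsilon_{i-1}}+(1-x_i)\,\epsilon_i e^{-t\epsilon_i}\right)}{\sum_{i=1}^{m}\theta_i\left(x_i\,e^{-t\epsilon_{i-1}}+(1-x_i)\,e^{-t\epsilon_i}\right)}\tag{7}\]

and

\[\lambda_u=\sup_{\lambda_i\in(\epsilon_{i-1},\epsilon_i],\;i=1,\ldots,m}\frac{\sum_{i=1}^{m}\theta_i\,\lambda_i e^{-t\lambda_i}}{\sum_{i=1}^{m}\theta_i\,e^{-t\lambda_i}},\tag{8}\]

where, when ϵm = +∞, the terms involving ϵm are taken to be 0.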

Before providing a proof for Theorem 1, we note that the values λl and λu can be computed using numerical optimisation packages available, for instance, within widely used mathematical computing tools such as MATLAB and Maple. For applications where computational resources are limited or the BIPP estimator is used online with tight deadlines, the following corollaries (whose proofs are provided in our supplementary material) give closed-form estimator bounds for m = 3 (with m = 2 as a subcase).

Corollary 1

When m = 3, the bounds (7) and (8) satisfy:

and

Corollary 2

Closed-form BIPP bounds for m = 2 can be obtained by setting ϵ2 = ϵ1 and θ2 = 0 in (9) and (10).

To prove Theorem 1, we require the following Lemma and Propositions.

Lemma 1

If l(·) is the likelihood function defined in (5), then \(g:(0,\infty)\to\mathbb{R}\), \(g(w)=w\cdot l^{-1}(w)\) is a concave function.

Proof

Since \(g(w)=w\cdot\left(-\frac{\ln w}{t}\right)\) and t > 0, the second derivative of g satisfies

\[g''(w)=-\frac{1}{t\,w}<0\quad\text{for all } w>0.\tag{11}\]

Thus, g(w) is concave. □

Proposition 1

With the notation from Theorem 1, there exist m values λ1 ∈ (ϵ0, ϵ1], λ2 ∈ (ϵ1, ϵ2], …, λm ∈ (ϵm−1, ϵm] such that \(\sup\mathcal{S}_\lambda\) is the posterior estimate (4) obtained by using as prior the m-point discrete distribution with probability mass f(λi) = Pr(λ = λi) = θi for i = 1, 2, …, m.

Proof

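Since f(λ) = 0 for λ ∉ [ϵ0, ϵm], the Lebesgue-Stieltjes integrals in the objective function (4) can be rewritten as:

\[\lambda^{(t)}=\frac{\sum_{i=1}^{m}\int_{\epsilon_{i-1}}^{\epsilon_i}\lambda\,l(\lambda)\,\mathrm{d}F(\lambda)}{\sum_{i=1}^{m}\int_{\epsilon_{i-1}}^{\epsilon_i}l(\lambda)\,\mathrm{d}F(\lambda)}\tag{12}\]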

The first mean value theorem for integrals (see, e.g., ref.56, p. 249) ensures that, for every i = 1, 2, …, m, there are points \(\lambda_i,\lambda_i'\in[\epsilon_{i-1},\epsilon_i]\) such that:

or, after simple algebraic manipulations of the previous results,

Using the shorthand notation w = l(λ) for the likelihood function (5) (hence w > 0), we define \(g:(0,\infty)\to\mathbb{R}\), \(g(w)=w\cdot l^{-1}(w)\). According to Lemma 1, g(·) is a concave function, and thus we have:

where the inequality step (17) is obtained by applying Jensen’s inequality36,57.

We can now use (13), (14) and (18) to establish an upper bound for the objective function (12):

This upper bound is attained by selecting an m-point discrete distribution fu(λ) with probability mass θi at λ = λi, for i = 1, 2, …, m (since replacing f(·) in (12) with this fu(·) yields the right-hand side of (19)). As such, maximising this bound reduces to an optimisation problem in the m-dimensional space of (λ1, λ2, …, λm) ∈ (ϵ0, ϵ1] × (ϵ1, ϵ2] × ⋯ × (ϵm−1, ϵm]. This optimisation problem can be solved numerically, yielding a supremum (rather than a maximum) for \(\mathcal{S}_\lambda\) when the optimised prior distribution has points located at λi = ϵi−1 for one or more i ∈ {1, 2, …, m}. □
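Proposition 1 turns the upper bound into an m-dimensional maximisation over the support points λi. The sketch below is one way to solve it numerically, as an alternative to the general-purpose optimisers mentioned after Theorem 1: it applies Dinkelbach's method for fractional programming (our choice, not necessarily the authors'), exploiting the fact that, for a fixed candidate ratio r, the problem separates into m one-dimensional problems, each maximising \(e^{-t\lambda}(\lambda-r)\), whose maximiser is λ = r + 1/t clipped to the interval. Intervals are treated as closed, which gives the same supremum. The sketch computes the supremum characterised by Proposition 1 directly, rather than the closed-form expressions of Corollaries 1 and 2; the function name is hypothetical.

```python
import math

def bipp_upper(eps, theta, t, rel_tol=1e-12, max_iter=1000):
    """Upper BIPP bound via Proposition 1: the supremum over
    lambda_i in [eps[i-1], eps[i]] (i = 1..m) of
        sum_i theta_i * lambda_i * exp(-t*lambda_i) / sum_i theta_i * exp(-t*lambda_i).

    Dinkelbach's method: for a fixed ratio r, maximising
    sum_i theta_i * exp(-t*lambda_i) * (lambda_i - r) splits into m independent
    problems, each maximised at lambda_i = r + 1/t clipped to its interval.
    eps[m] may be math.inf; requires t > 0.
    """
    def ratio(lams):
        base = min(lams)  # rescale weights to avoid exp underflow (ratio is unchanged)
        w = [th * math.exp(-t * (lam - base)) for th, lam in zip(theta, lams)]
        return sum(wi * lam for wi, lam in zip(w, lams)) / sum(w)

    r = 0.0  # rates are non-negative, so 0 is a valid starting lower estimate
    for _ in range(max_iter):
        lams = [min(max(r + 1.0 / t, eps[i]), eps[i + 1]) for i in range(len(theta))]
        r_new = ratio(lams)  # Dinkelbach iterates increase monotonically
        if abs(r_new - r) <= rel_tol * abs(r_new):
            return r_new
        r = r_new
    return r
```

At convergence no feasible choice of support points can exceed the returned ratio, which is exactly the optimality condition of Dinkelbach's method; a brute-force grid over (λ1, …, λm) is an easy way to sanity-check it for small m.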

Proposition 2

With the notation from Theorem 1, there exist m values x1, x2, …, xm ∈ [0, 1] such that \(\inf\mathcal{S}_\lambda\) is the posterior estimate (4) obtained by using as prior the (m + 1)-point discrete distribution with probability mass f(ϵ0) = Pr(λ = ϵ0) = x1 θ1, f(ϵi) = Pr(λ = ϵi) = (1 − xi)θi + xi+1 θi+1 for 1 ≤ i < m, and f(ϵm) = Pr(λ = ϵm) = (1 − xm)θm.

Proof

We reuse the reasoning steps from Proposition 1 up to inequality (17), which we replace with the following alternative inequality derived from the converse Jensen’s inequality58,59 and the fact that g(w) is a concave function (cf. Lemma 1):

We can now establish a lower bound for (12):

where xi is defined as:

The result (22) is essentially in the same form as the result obtained by using a 2m-point distribution in which, for each interval [ϵi−1, ϵi], there are two points located at λ = ϵi−1 and λ = ϵi and the probability mass associated with these points is xi θi and (1 − xi)θi, respectively. Intuitively, xi is the ratio of splitting the probability mass θi between the two points since, according to (23), xi ∈ [0, 1].

Furthermore, the points on the boundaries of two successive intervals coincide, which effectively reduces the number of points from 2m to m + 1. Expanding (22) yields an (m + 1)-point discrete distribution fl(λ) with probability mass fl(ϵ0) = x1 θ1, fl(ϵi) = (1 − xi)θi + xi+1 θi+1 for 1 ≤ i < m and fl(ϵm) = (1 − xm)θm. As such, minimising (22) reduces to an m-dimensional optimisation problem in x1, x2, …, xm, which can be solved numerically given the other model parameters. Finally, since (6) requires that ϵi−1 < λi ≤ ϵi, we have 0 ≤ xi < 1, and thus the posterior estimate is an infimum (rather than a minimum) of \(\mathcal{S}_\lambda\) when the solution of the optimisation problem corresponds to a combination of x1, x2, …, xm values that includes one or more values of 1. □
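For the lower bound, the objective obtained from Proposition 2 is a ratio of two functions that are affine in (x1, …, xm), so its infimum over the box [0, 1]^m is attained at one of the box's vertices. The sketch below (our own illustration, using the equivalent 2m-point form described in the proof, with a hypothetical function name) therefore enumerates the 2^m vertices, which is practical for the small m used with BIPP; points at +∞ contribute nothing to either sum.

```python
import itertools
import math

def bipp_lower(eps, theta, t):
    """Lower BIPP bound via Proposition 2, in the 2m-point form: interval i puts
    mass x_i*theta_i at eps[i-1] and (1 - x_i)*theta_i at eps[i], x_i in [0, 1].
    eps[m] may be math.inf; requires t > 0."""
    def terms(e):
        # (e * l(e), l(e)) with l(e) = exp(-t*e); a point at +infinity contributes 0
        if math.isinf(e):
            return 0.0, 0.0
        w = math.exp(-t * e)
        return e * w, w

    m = len(theta)
    lo = [terms(eps[i]) for i in range(m)]      # left end of each interval
    hi = [terms(eps[i + 1]) for i in range(m)]  # right end of each interval
    best = math.inf
    # The objective is linear-fractional in x, so its infimum over [0,1]^m is
    # attained at a vertex: enumerate all x in {0,1}^m (fine for small m).
    for x in itertools.product((0.0, 1.0), repeat=m):
        num = sum(th * (xi * a[0] + (1 - xi) * b[0]) for th, xi, a, b in zip(theta, x, lo, hi))
        den = sum(th * (xi * a[1] + (1 - xi) * b[1]) for th, xi, a, b in zip(theta, x, lo, hi))
        if den > 0.0:
            best = min(best, num / den)
    return best
```

With two intervals [0, ϵ1] and (ϵ1, +∞), placing mass θ1 at 0 and the remaining mass at +∞ gives 0, so bipp_lower([0.0, 0.002, math.inf], [0.5, 0.5], t) returns 0.0 for any t, in line with the constant λl = 0 reported for the m = 2 scenario of Fig. 5d.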

We can now prove Theorem 1. In the Supplementary Methods 1 of the supplementary material, we use this result to prove Corollaries 1 and 2.

Proof

Proof of Theorem 1. Propositions 1 and 2 imply that the set of posterior estimates λ over all priors that satisfy the constraints (6) has:

1. the infimum λl from (7), obtained by using the prior f(λ) from Proposition 2 in (4);
2. the supremum λu from (8), obtained by using the prior f(λ) from Proposition 1 in (4). □

BIPP estimator evaluation

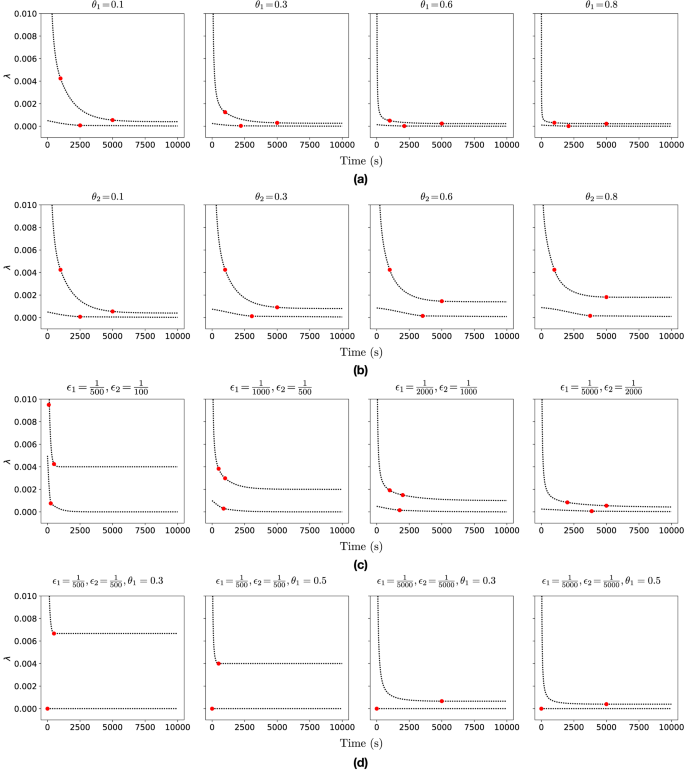

Figure 5 shows the results of experiments we carried out to evaluate the BIPP estimator in scenarios with m = 3 (Fig. 5a–c) and m = 2 (Fig. 5d) confidence bounds by varying the characteristics of the partial prior knowledge. For m = 3, the upper bound computed by the estimator exhibits a three-stage behaviour as the time over which no singular event occurs increases. These stages correspond to the three λu regions from (10). They start with a steep λu decrease for \(t<\frac{1}{\epsilon_2}\) in stage 1, followed by a slower λu decreasing trend for \(\frac{1}{\epsilon_2}\le t\le\frac{1}{\epsilon_1}\) in stage 2, with λu approaching the asymptotic value \(\frac{\epsilon_1(\theta_1+\theta_2)}{\theta_1}\) as the mission progresses through stage 3. Similarly, the lower bound λl exhibits a two-stage behaviour, as expected given its two-part definition (9), with its value approaching 0 as the mission continues and no singular event modelled by this estimator (e.g., a catastrophic failure) occurs.

Systematic experimental analysis of the BIPP estimator showing the bounds λl and λu of the posterior estimates for the occurrence probability of singular events for the duration of a mission. Each plot shows the effect of different partial prior knowledge encoded in (6) on the calculation of the lower (7) and upper (8) posterior estimate bounds. The red circles indicate the time points when the different formulae for the lower and upper bounds in (9) and (10), respectively, become active. a BIPP estimator for m = 3, θ1 ∈ {0.1, 0.3, 0.6, 0.8}, θ2 = 0.1, \(\epsilon_1=\frac{1}{5000}\), \(\epsilon_2=\frac{1}{1000}\). b BIPP estimator for m = 3, θ1 = 0.1, θ2 ∈ {0.1, 0.3, 0.6, 0.8}, \(\epsilon_1=\frac{1}{5000}\), \(\epsilon_2=\frac{1}{1000}\). c BIPP estimator for m = 3, θ1 = 0.3, θ2 = 0.3, \((\epsilon_1,\epsilon_2)\in\left\{\left(\frac{1}{500},\frac{1}{100}\right),\left(\frac{1}{1000},\frac{1}{500}\right),\left(\frac{1}{2000},\frac{1}{1000}\right),\left(\frac{1}{5000},\frac{1}{2000}\right)\right\}\). d BIPP estimator for m = 2, θ1 ∈ {0.3, 0.5}, θ2 = 0, \((\epsilon_1,\epsilon_2)\in\left\{\left(\frac{1}{500},\frac{1}{500}\right),\left(\frac{1}{5000},\frac{1}{5000}\right)\right\}\).

Figure 5a shows the behaviour of the estimator for different θ1 values and fixed θ2, ϵ1, and ϵ2 values. For higher θ1 values, more probability mass is allocated to the confidence bound (ϵ0, ϵ1], yielding a steeper decrease in the upper bound λu and a lower λu value at the end of the mission. The lower bound λl presents limited variability across the different θ1 values, becoming almost constant and close to 0 as θ1 increases.

A similar decreasing pattern is observed in Fig. 5b, which depicts the results of experiments with θ1, ϵ1, and ϵ2 fixed, and θ2 variable. In the long term, the upper bound λu is larger for higher θ2 values, resulting in a wider posterior estimate interval as λu converges towards its theoretical asymptotic value.

Allocating the same probability mass to the confidence bounds, i.e., θ1 = θ2 = 0.3, and changing the prior knowledge bounds ϵ1 and ϵ2 greatly affects the behaviour of the BIPP estimator (Fig. 5c). When ϵ1 and ϵ2 are relatively high, i.e., 1/ϵ1 and 1/ϵ2 are short compared to the duration of the mission (e.g., see the first three plots in Fig. 5c), the upper bound λu of the BIPP estimator rapidly converges to its asymptotic value, leaving no room for subsequent improvement as the mission progresses. Similarly, the earlier the switching point between the two parts of the lower bound λl calculation (9), the earlier λl reaches a plateau close to 0.

Finally, Fig. 5d shows experimental results for the special scenario comprising only m = 2 confidence bounds. In this scenario, setting θ2 = 0 in (9) as required by Corollary 2 gives a constant lower bound λl = 0 irrespective of the other BIPP estimator parameters. As expected, the upper bound λu exhibits a two-stage behaviour, featuring a rapid decrease until \(t=\frac{1}{\epsilon_1}\), followed by a steady-state behaviour where \(\lambda_u=\frac{\epsilon_1}{\theta_1}\).

Interval Bayesian inference for regular events

For CTMC transitions that correspond to regular events within the modelled system, we follow the common practice60 of using a Gamma prior distribution for each uncertain transition rate λ:

\[f(\lambda)=\frac{\beta^{\alpha}\lambda^{\alpha-1}e^{-\beta\lambda}}{\Gamma(\alpha)}.\tag{24}\]

The Gamma distribution is a frequently adopted conjugate prior distribution for the likelihood (2) and, if the prior knowledge assumes an initial value \(\lambda^{(0)}\) for the transition rate, the parameters α > 0 and β > 0 must satisfy

\[\lambda^{(0)}=\mathbb{E}(\Lambda)=\frac{\alpha}{\beta}.\tag{25}\]

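The posterior value \(\lambda^{(t)}\) for the transition rate after observing n transitions within t time units is then obtained by using the prior (24) in the expectation (4), as in the following derivation adapted from classical Bayesian theory60:

\[\begin{aligned}\lambda^{(t)}&=\frac{\int_0^\infty\lambda^{\alpha+n}e^{-(\beta+t)\lambda}\,\mathrm{d}\lambda}{\int_0^\infty\lambda^{\alpha+n-1}e^{-(\beta+t)\lambda}\,\mathrm{d}\lambda}=\frac{\alpha+n}{\beta+t}\\&=\frac{t^{(0)}\lambda^{(0)}+n}{t^{(0)}+t}=\frac{t^{(0)}}{t^{(0)}+t}\,\lambda^{(0)}+\frac{t}{t^{(0)}+t}\cdot\frac{n}{t},\end{aligned}\tag{26}\]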

where \(t^{(0)}=\beta\). This notation reflects the way in which the posterior rate \(\lambda^{(t)}\) is computed as a weighted sum of the mean rate \(\frac{n}{t}\) observed over a time period t, and of the prior \(\lambda^{(0)}\), deemed as trustworthy as a mean rate calculated from observations over a time period \(t^{(0)}\). When \(t^{(0)}\ll t\) (either because we have low trust in the prior \(\lambda^{(0)}\) and thus \(t^{(0)}\to 0\), or because the system was observed for a time period t that is much longer than \(t^{(0)}\)), the posterior (26) reduces to the maximum likelihood estimator, i.e., \(\lambda^{(t)}\simeq\frac{n}{t}\). In this scenario, the observations dominate the estimator (26), and the contribution of the prior is negligible.
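The conjugate update (26) amounts to a one-line computation. The sketch below assumes, as in the text, that \(t^{(0)}=\beta\) and that the prior mean α/β equals \(\lambda^{(0)}\); the function name and the numbers are hypothetical. The example illustrates the weighted-sum reading of (26): equal weights \(t^{(0)}=t=10\) put the posterior halfway between the prior rate and the observed mean rate.

```python
def gamma_posterior_rate(lambda0, t0, n, t):
    """Posterior rate (26) for a Gamma prior with mean lambda0 and weight t0.

    Assumes the Gamma(shape alpha, rate beta) parameterisation with
    beta = t0 and alpha = t0 * lambda0 (so that alpha / beta = lambda0).
    """
    if lambda0 <= 0 or t0 <= 0 or t <= 0 or n < 0:
        raise ValueError("need lambda0 > 0, t0 > 0, t > 0 and n >= 0")
    alpha, beta = t0 * lambda0, t0
    return (alpha + n) / (beta + t)

# Prior belief of 2.0 transitions per time unit, worth 10 time units of observation;
# then 30 transitions are observed in 10 time units (observed mean rate 3.0).
print(gamma_posterior_rate(2.0, 10.0, n=30, t=10.0))  # 2.5, halfway between 2.0 and 3.0
```

Shrinking t0 towards 0 makes the result approach the maximum likelihood estimate n/t, matching the limiting case discussed above.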

The selection of suitable values for the parameters \(t^{(0)}\) and \(\lambda^{(0)}\) of the traditional Bayesian estimator (26) is very challenging. What constitutes a suitable choice often depends on unknown attributes of the environment, or several domain experts may each propose different values for these parameters. In line with recent advances in imprecise probabilistic modelling34,35,61, we address this challenge by defining a robust transition rate estimator for Bayesian inference using imprecise probability with sets of priors (IPSP). The IPSP estimator uses ranges \([\underline{t}^{(0)},\overline{t}^{(0)}]\) and \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\) (corresponding to the environmental uncertainty, or to input obtained from multiple domain experts) for the two parameters instead of point values.

The following theorem quantifies the uncertainty that the use of parameter ranges for \(t^{(0)}\) and \(\lambda^{(0)}\) induces on the posterior rate (26). This theorem specialises and builds on generalised Bayesian inference results34 that we adapt for the estimation of CTMC transition rates.

Theorem 2

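Given uncertain prior parameters \(t^{(0)}\in[\underline{t}^{(0)},\overline{t}^{(0)}]\) and \(\lambda^{(0)}\in[\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\), the posterior rate \(\lambda^{(t)}\) from (26) can range in the interval \([\underline{\lambda}^{(t)},\overline{\lambda}^{(t)}]\), where:

\[\underline{\lambda}^{(t)}=\begin{cases}\dfrac{\overline{t}^{(0)}\underline{\lambda}^{(0)}+n}{\overline{t}^{(0)}+t}, & \text{if } \underline{\lambda}^{(0)}\le\frac{n}{t}\\[2ex]\dfrac{\underline{t}^{(0)}\underline{\lambda}^{(0)}+n}{\underline{t}^{(0)}+t}, & \text{otherwise}\end{cases}\tag{27}\]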

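and

\[\overline{\lambda}^{(t)}=\begin{cases}\dfrac{\underline{t}^{(0)}\overline{\lambda}^{(0)}+n}{\underline{t}^{(0)}+t}, & \text{if } \overline{\lambda}^{(0)}\le\frac{n}{t}\\[2ex]\dfrac{\overline{t}^{(0)}\overline{\lambda}^{(0)}+n}{\overline{t}^{(0)}+t}, & \text{otherwise}\end{cases}\tag{28}\]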

Proof

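To find the extrema for the posterior rate \(\lambda^{(t)}\), we first differentiate (26) with respect to \(\lambda^{(0)}\):

\[\frac{\partial\lambda^{(t)}}{\partial\lambda^{(0)}}=\frac{t^{(0)}}{t^{(0)}+t}.\]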

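As \(t^{(0)}>0\) and t > 0, this derivative is always positive, so

\[\underline{\lambda}^{(t)}=\min_{t^{(0)}\in[\underline{t}^{(0)},\overline{t}^{(0)}]}\frac{t^{(0)}\underline{\lambda}^{(0)}+n}{t^{(0)}+t}\tag{29}\]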

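and

\[\overline{\lambda}^{(t)}=\max_{t^{(0)}\in[\underline{t}^{(0)},\overline{t}^{(0)}]}\frac{t^{(0)}\overline{\lambda}^{(0)}+n}{t^{(0)}+t}.\tag{30}\]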

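We now differentiate the quantity that needs to be minimised in (29) with respect to \(t^{(0)}\):

\[\frac{\partial}{\partial t^{(0)}}\left(\frac{t^{(0)}\underline{\lambda}^{(0)}+n}{t^{(0)}+t}\right)=\frac{\underline{\lambda}^{(0)}\,t-n}{\left(t^{(0)}+t\right)^{2}}.\]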

As this derivative is non-positive for \(\underline{\lambda}^{(0)}\in\left(0,\frac{n}{t}\right]\) and positive for \(\underline{\lambda}^{(0)}>\frac{n}{t}\), the minimum from (29) is attained for \(t^{(0)}=\overline{t}^{(0)}\) in the former case, and for \(t^{(0)}=\underline{t}^{(0)}\) in the latter case, which yields the result from (27). Similarly, the derivative of the quantity to maximise in (30), i.e.,

\[\frac{\partial}{\partial t^{(0)}}\left(\frac{t^{(0)}\overline{\lambda}^{(0)}+n}{t^{(0)}+t}\right)=\frac{\overline{\lambda}^{(0)}\,t-n}{\left(t^{(0)}+t\right)^{2}},\]

is non-positive for \(\overline{\lambda}^{(0)}\in\left(0,\frac{n}{t}\right]\) and positive for \(\overline{\lambda}^{(0)}>\frac{n}{t}\), so the maximum from (30) is attained for \(t^{(0)}=\underline{t}^{(0)}\) in the former case, and for \(t^{(0)}=\overline{t}^{(0)}\) in the latter case, which yields the result from (28) and completes the proof. □
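Theorem 2 means that the IPSP interval can be computed in constant time by checking where each prior rate bound lies relative to the observed mean rate n/t. The hypothetical helper below implements the two case distinctions of the theorem as stated; scanning a grid of \((t^{(0)},\lambda^{(0)})\) values through (26) is an easy way to sanity-check that every posterior rate falls inside the returned interval.

```python
def ipsp_bounds(t0_lo, t0_hi, lam0_lo, lam0_hi, n, t):
    """Posterior rate interval of Theorem 2 (IPSP) after observing n
    transitions in total time t > 0, for t0 in [t0_lo, t0_hi] and
    lambda0 in [lam0_lo, lam0_hi]."""
    def posterior(t0, lam0):
        # Gamma-prior posterior rate (26): (t0 * lam0 + n) / (t0 + t)
        return (t0 * lam0 + n) / (t0 + t)

    mle = n / t  # observed mean rate
    # (27): use the smallest prior rate; trust it most if it lies below n/t
    lower = posterior(t0_hi, lam0_lo) if lam0_lo <= mle else posterior(t0_lo, lam0_lo)
    # (28): use the largest prior rate; trust it most only if it lies above n/t
    upper = posterior(t0_lo, lam0_hi) if lam0_hi <= mle else posterior(t0_hi, lam0_hi)
    return lower, upper

print(ipsp_bounds(5, 15, 2.0, 4.0, n=30, t=10.0))  # (2.4, 3.6)
```

Here the observed mean rate 3.0 lies inside the prior interval [2, 4], so both bounds use the largest prior weight \(\overline{t}^{(0)}=15\), and the posterior interval is narrower than the prior one.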

IPSP estimator evaluation

Figure 6 shows the results of experiments we performed to analyse the behaviour of the IPSP estimator in scenarios with varying ranges for the prior knowledge \([{\underline{t}}^{(0)},\overline{t}^{(0)}]\) and \([{\underline{\lambda }}^{(0)},\overline{\lambda }^{(0)}]\). A general observation is that the posterior rate intervals \([{\underline{\lambda }}^{(t)},{\overline{\lambda }}^{(t)}]\) become narrower as the mission progresses, irrespective of the level of trust assigned to the prior knowledge, i.e., across all columns of plots (which correspond to different \([{\underline{t}}^{(0)},\overline{t}^{(0)}]\) intervals) from Fig. 6a. Nevertheless, this trust level affects how the estimator incorporates observations into the calculation of the posterior interval. When the trust in the prior knowledge is weak (in the plots from the leftmost columns of Fig. 6a), the impact of the prior knowledge on the posterior estimation is low, and the IPSP calculation is heavily influenced by the observations, resulting in a narrow interval. In contrast, when the trust in the prior knowledge is stronger (in the plots from the rightmost columns), the contribution of the prior knowledge to the posterior estimation becomes higher, and the IPSP estimator produces a wider interval.

a IPSP estimator results showing the impact of different sets of priors \([\underline{t}^{(0)},\overline{t}^{(0)}]\) and \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\). In each plot, the blue dotted line and the green dashed line show the posterior estimation bounds \(\underline{\lambda}^{(t)}\) and \(\overline{\lambda}^{(t)}\) for narrow and wide \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\) intervals, respectively. Each column of plots corresponds to assigning different strength to the prior knowledge, ranging from uninformative (leftmost column) to strong belief (rightmost column). The first row shows scenarios in which the actual rate \(\overline{\lambda}=3\) belongs to the prior knowledge interval \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\). In the second and third rows, the prior intervals overestimate and underestimate \(\overline{\lambda}\), respectively. b IPSP estimator results illustrating the behaviour of IPSP across different actual rate values \(\overline{\lambda}\in\{0.03,0.3,3,30\}\). The experiments were carried out for \([\underline{t}^{(0)},\overline{t}^{(0)}]=[1000,1000]\) and included both narrow and wide \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\) intervals, which are shown as blue dotted lines and green dashed lines, respectively. In all experiments, the unknown actual rate \(\overline{\lambda}\) was in the prior interval \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\).

In the experiments from the first row of plots in Fig. 6a, the (unknown) actual rate \(\overline{\lambda }=3\) belongs to the prior knowledge interval \([{\underline{\lambda }}^{(0)},\overline{\lambda }^{(0)}]\). As a result, the posterior rate interval \([{\underline{\lambda }}^{(t)},\overline{\lambda }^{(t)}]\) progressively becomes narrower, approximating \(\overline{\lambda }\) with high accuracy. As expected, the narrower prior knowledge (blue dotted line) produces a narrower posterior rate interval than the wider and more conservative prior knowledge (green dashed line).

When the prior knowledge interval \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\) overestimates or underestimates the actual rate \(\overline{\lambda}\) (second and third rows of plots from Fig. 6a, respectively), the ability of IPSP to adapt its estimations to reflect the observations heavily depends on the characteristics of the sets of priors. For example, if the width of the prior knowledge \([\underline{\lambda}^{(0)},\overline{\lambda}^{(0)}]\) is close to \(\overline{\lambda}\) and \(t^{(0)}\ll t\), then IPSP more easily approaches \(\overline{\lambda}\), as shown by the narrow prior knowledge (blue dotted line) in Fig. 6a for \([\underline{t}^{(0)},\overline{t}^{(0)}]\in\{[5,15],[75,125],[750,1250]\}\). In contrast, wider prior knowledge (green dashed line) combined with higher levels of trust in the prior, e.g., \([\underline{t}^{(0)},\overline{t}^{(0)}]=[1500,2500]\), entails that more observations are needed for the posterior rate to approach the actual rate \(\overline{\lambda}\). When the actual rate is, in addition, nonstationary, change-point detection methods can be employed to identify these changes62,63 and recalibrate the IPSP estimator. Finally, Fig. 6b shows the behaviour of IPSP for different actual rate values \(\overline{\lambda}\in\{0.03,0.3,3,30\}\). As \(\overline{\lambda}\) increases, more observations are produced in the same time period, resulting in a smoother and narrower posterior interval estimate.

Data availability

The data supporting the RBV findings and a video of the robotic mission in simulation are available at https://gerasimou.github.io/RBV.

Code availability

All code developed in this project is freely available at http://github.com/gerasimou/RBV.

References

The Headquarters for Japan’s Economic Revitalization. New Robot Strategy: Japan’s Robot Strategy. Prime Minister’s Office of Japan (2015).

SPARC–The Partnership for Robotics in Europe. Robotics 2020 multi-annual roadmap for robotics in Europe. eu-robotics (2016).

Science and Technology Committee. Robotics and Artificial Intelligence. Committee Reports of UK House of Commons (2016).

Christensen, H. et al. A roadmap for US robotics–from internet to robotics 2020 edition. Found. Trends Robot. 8, 307–424 (2021).

Richardson, R. et al. Robotic and autonomous systems for resilient infrastructure. UK-RAS White Papers© UK-RAS (2017).

UK Robotics & Autonomous Systems Network. Space Robotics & Autonomous Systems: Widening the horizon of space exploration. UK-RAS White Papers© UK-RAS (2018).

Lane, D., Bisset, D., Buckingham, R., Pegman, G. & Prescott, T. New foresight review on robotics and autonomous systems. Tech. Rep. No. 2016.1. (Lloyd’s Register Foundation, London, UK, 2016).

Calinescu, R. et al. Engineering trustworthy self-adaptive software with dynamic assurance cases. IEEE Trans. Softw. Eng. 44, 1039–1069 (2017).

Robu, V., Flynn, D. & Lane, D. Train robots to self-certify as safe. Nature 553, 281 (2018).

Calinescu, R., Ghezzi, C., Kwiatkowska, M. & Mirandola, R. Self-adaptive software needs quantitative verification at runtime. Commun. ACM 55, 69–77 (2012).

International Nuclear Safety Advisory Group. Defence in Depth in Nuclear Safety (INSAG 10) (1996).

Kwiatkowska, M. Quantitative verification: models, techniques and tools. In Proc. 6th joint meeting of the European Software Engineering Conference and the ACM SIGSOFT Symposium on the Foundations of Software Engineering (ESEC/FSE), 449–458 (ACM Press, 2007).

Katoen, J.-P. The Probabilistic Model Checking Landscape. In Proc. of the 31st Annual ACM/IEEE Symposium on Logic in Computer Science, LICS ’16, 31–45 (ACM, New York, NY, USA, 2016). https://doi.org/10.1145/2933575.2934574.

Legay, A., Delahaye, B. & Bensalem, S. Statistical model checking: an overview. In (eds Barringer, H. et al.) Runtime Verification, vol. 6418 of LNCS, 122–135 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2010).

Calinescu, R. et al. Formal verification with confidence intervals to establish quality of service properties of software systems. IEEE Trans. Reliab. 65, 107–125 (2016).

Kwiatkowska, M., Norman, G. & Parker, D. Probabilistic model checking and autonomy. Annu. Rev. Control Robot. Autonomous Syst. 5, 385–410 (2022).

Lacerda, B., Faruq, F., Parker, D. & Hawes, N. Probabilistic planning with formal performance guarantees for mobile service robots. Int. J. Robot. Res. 38, 1098–1123 (2019).

Nardone, V., Santone, A., Tipaldi, M. & Glielmo, L. Probabilistic model checking applied to autonomous spacecraft reconfiguration. In IEEE Metrology for Aerospace (MetroAeroSpace), 556–560 (IEEE, 2016).

Fraser, D. et al. Collaborative models for autonomous systems controller synthesis. Form. Asp. Comput. 32, 157–186 (2020).

Liu, W. & Winfield, A. F. Modeling and optimization of adaptive foraging in swarm robotic systems. Int. J. Robot. Res. 29, 1743–1760 (2010).

Brim, L., Ceska, M., Drazan, S. & Safranek, D. Exploring parameter space of stochastic biochemical systems using quantitative model checking. In Computer Aided Verification (CAV), 107–123 (2013).

Ceska, M., Pilar, P., Paoletti, N., Brim, L. & Kwiatkowska, M. PRISM-PSY: Precise GPU-accelerated parameter synthesis for stochastic systems. In (eds Chechik, M. & Raskin, J.-F.) Tools and Algorithms for the Construction and Analysis of Systems, vol. 9636 of LNCS, 367–384 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2016).

Avizienis, A., Laprie, J., Randell, B. & Landwehr, C. Basic concepts and taxonomy of dependable and secure computing. IEEE Trans. Dependable Secur. Comput. 1, 11â33 (2004).

Lane, D. M. et al. PANDORA-persistent autonomy through learning, adaptation, observation and replanning. IFAC-PapersOnLine 48, 238â243 (2015).

Myhr, A., Bjerkseter, C., Ã gotnes, A. & Nygaard, T. A. Levelised cost of energy for offshore floating wind turbines in a life cycle perspective. Renew. Energy 66, 714â728 (2014).

Benjamin, M. R., Schmidt, H., Newman, P. M. & Leonard, J. J. Autonomy for unmanned marine vehicles with MOOS-IvP. In (ed Seto, M. L.) Marine Robot Autonomy, 47â90 (Springer, 2013). https://doi.org/10.1007/978-1-4614-5659-9_2.

Epifani, I., Ghezzi, C., Mirandola, R. & Tamburrelli, G. Model evolution by run-time parameter adaptation. In Proc. of the 31st Int. Conf. on Software Engineering, 111â121 (IEEE, Washington, DC, USA, 2009).

Filieri, A., Ghezzi, C. & Tamburrelli, G. A formal approach to adaptive software: continuous assurance of non-functional requirements. Form. Asp. Comput. 24, 163â186 (2012).

Calinescu, R., Rafiq, Y., Johnson, K. & Bakir, M. E. Adaptive model learning for continual verification of non-functional properties. In Proc. of the 5th Int. Conf. on Performance Engineering, 87â98 (ACM, NY, USA, 2014).

Filieri, A., Grunske, L. & Leva, A. Lightweight adaptive filtering for efficient learning and updating of probabilistic models. In Proc. of the 37th Int. Conf. on Software Engineering, 200â211 (IEEE Press, Piscataway, NJ, USA, 2015).

Calinescu, R., Johnson, K. & Paterson, C. FACT: A probabilistic model checker for formal verification with confidence intervals. In (eds Chechik, M. & Raskin, J.-F.) Tools and Algorithms for the Construction and Analysis of Systems, 540â546 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2016).

Calinescu, R., ÄeÅ¡ka, M., Gerasimou, S., Kwiatkowska, M. & Paoletti, N. RODES: a robust-design synthesis tool for probabilistic systems. In Quantitative Evaluation of Systems: 14th International Conference, QEST 2017, Berlin, Germany, September 5-7, 2017, Proceedings 14, 304â308 (Springer, 2017).

Zhao, X. et al. Probabilistic model checking of robots deployed in extreme environments. In Proc. of the 33rd AAAI Conference on Artificial Intelligence, vol. 33, 8076â8084 (Honolulu, Hawaii, USA, 2019).

Walter, G. & Augustin, T. Imprecision and prior-data conflict in generalized Bayesian inference. J. Stat. Theory Pract. 3, 255â271 (2009).

Walter, G., Aslett, L. & Coolen, F. P. A. Bayesian nonparametric system reliability using sets of priors. Int. J. Approx. Reason. 80, 67–88 (2017).

Bishop, P., Bloomfield, R., Littlewood, B., Povyakalo, A. & Wright, D. Toward a formalism for conservative claims about the dependability of software-based systems. IEEE Trans. Softw. Eng. 37, 708–717 (2011).

Strigini, L. & Povyakalo, A. Software fault-freeness and reliability predictions. In (eds Bitsch, F., Guiochet, J. & Kaâniche, M.) Computer Safety, Reliability, and Security, vol. 8153 of LNCS, 106–117 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2013).

Zhao, X., Salako, K., Strigini, L., Robu, V. & Flynn, D. Assessing safety-critical systems from operational testing: a study on autonomous vehicles. Inf. Softw. Technol. 128, 106393 (2020).

Ishikawa, A. et al. The max-min Delphi method and fuzzy Delphi method via fuzzy integration. Fuzzy Sets Syst. 55, 241–253 (1993).

Flyvbjerg, B. Curbing optimism bias and strategic misrepresentation in planning: reference class forecasting in practice. Eur. Plan. Stud. 16, 3–21 (2008).

Araujo, H., Mousavi, M. R. & Varshosaz, M. Testing, validation, and verification of robotic and autonomous systems: a systematic review. ACM Trans. Softw. Eng. Methodol. 32, 1–61 (2023).

Luckcuck, M., Farrell, M., Dennis, L. A., Dixon, C. & Fisher, M. Formal specification and verification of autonomous robotic systems: a survey. ACM Comput. Surv. 52, 1–41 (2019).

Gleirscher, M., Foster, S. & Woodcock, J. New opportunities for integrated formal methods. ACM Comput. Surv. 52, 1–36 (2019).

Gerasimou, S., Calinescu, R., Shevtsov, S. & Weyns, D. UNDERSEA: an exemplar for engineering self-adaptive unmanned underwater vehicles. In IEEE/ACM 12th Int. Symp. on Software Engineering for Adaptive and Self-Managing Systems, 83–89 (2017).

Younes, H. L., Kwiatkowska, M., Norman, G. & Parker, D. Numerical vs. statistical probabilistic model checking. Int. J. Softw. Tools Technol. Transf. 8, 216–228 (2006).

Zhang, L., Hermanns, H. & Jansen, D. N. Logic and model checking for hidden Markov models. In (ed Wang, F.) Formal Techniques for Networked and Distributed Systems - FORTE 2005, 98–112 (Springer Berlin Heidelberg, Berlin, Heidelberg, 2005).

Hernández, N., Eder, K., Magid, E., Savage, J. & Rosenblueth, D. A. Marimba: a tool for verifying properties of hidden Markov models. In (eds Finkbeiner, B., Pu, G. & Zhang, L.) Automated Technology for Verification and Analysis, 201–206 (Springer International Publishing, Cham, 2015).

Wei, W., Wang, B. & Towsley, D. Continuous-time hidden Markov models for network performance evaluation. Perform. Eval. 49, 129–146 (2002). Performance 2002.

Baier, C., Haverkort, B., Hermanns, H. & Katoen, J. P. Model-checking algorithms for continuous-time Markov chains. IEEE Trans. Softw. Eng. 29, 524–541 (2003).

Kwiatkowska, M., Norman, G. & Parker, D. Stochastic model checking. In International Conference on Formal Methods for Performance Evaluation, 220–270 (2007).

Aziz, A., Sanwal, K., Singhal, V. & Brayton, R. Verifying continuous time Markov chains. In Computer Aided Verification, 269–276 (Springer, 1996).

Kwiatkowska, M., Norman, G. & Parker, D. PRISM 4.0: Verification of probabilistic real-time systems. In Proc. of the 23rd Int. Conf. on Computer Aided Verification, vol. 6806 of LNCS, 585–591 (Springer, 2011).

Dehnert, C., Junges, S., Katoen, J.-P. & Volk, M. A Storm is coming: a modern probabilistic model checker. In 29th International Conference on Computer Aided Verification (CAV), 592–600 (2017).

Calinescu, R., Češka, M., Gerasimou, S., Kwiatkowska, M. & Paoletti, N. Efficient synthesis of robust models for stochastic systems. J. Syst. Softw. 143, 140–158 (2018).

International Electrotechnical Commission. IEC 61508: Functional safety of electrical/electronic/programmable electronic safety-related systems (2010).

Gradshteyn, I. S. & Ryzhik, I. M. Definite integrals of elementary functions. In (eds Zwillinger, D. & Moll, V.) Table of Integrals, Series, and Products (Elsevier Science, 2015), 8th edn.

Jensen, J. L. W. V. Sur les fonctions convexes et les inégalités entre les valeurs moyennes. Acta Math. 30, 175–193 (1906).

Lah, P. & Ribarič, M. Converse of Jensen's inequality for convex functions. Publ. Elektroteh. Fak. Ser. Mat. Fiz. 412/460, 201–205 (1973).

Klaričić Bakula, M., Pečarić, J. & Perić, J. On the converse Jensen inequality. Appl. Math. Comput. 218, 6566–6575 (2012).

Bernardo, J. M. & Smith, A. F. M. Bayesian Theory (Wiley, 1994).

Krpelik, D., Coolen, F. P. & Aslett, L. J. Imprecise probability inference on masked multicomponent system. In International Conference Series on Soft Methods in Probability and Statistics, 133–140 (Springer, 2018).

Epifani, I., Ghezzi, C. & Tamburrelli, G. Change-point detection for black-box services. In Proc. of the 18th ACM SIGSOFT Int. Symp. on Foundations of Software Engineering, FSE '10, 227–236 (ACM, New York, NY, USA, 2010).

Zhao, X., Calinescu, R., Gerasimou, S., Robu, V. & Flynn, D. Interval change-point detection for runtime probabilistic model checking. In 2020 35th IEEE/ACM International Conference on Automated Software Engineering (ASE), 163–174 (IEEE, 2020).

Acknowledgements

This project has received funding from the ORCA-Hub PRF project "COVE", the Assuring Autonomy International Programme, the UKRI project EP/V026747/1 "Trustworthy Autonomous Systems Node in Resilience", and the European Union's Horizon 2020 project SESAME (grant agreement No. 101017258).

Author information

Contributions

X.Z.: Conceptualisation, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Software, Supervision, Validation, Writing–original draft, Writing–review and editing; S.G.: Conceptualisation, Data curation, Funding acquisition, Investigation, Methodology, Project administration, Software, Supervision, Validation, Visualisation, Writing–original draft, Writing–review and editing; R.C.: Conceptualisation, Formal analysis, Funding acquisition, Methodology, Project administration, Supervision, Visualisation, Writing–original draft, Writing–review and editing; C.I.: Data curation, Investigation, Validation, Visualisation, Writing–original draft, Writing–review and editing; V.R.: Conceptualisation, Funding acquisition, Methodology, Project administration, Supervision, Writing–review and editing; D.F.: Conceptualisation, Funding acquisition, Methodology, Project administration, Supervision, Writing–review and editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Communications Engineering thanks Koorosh Aslansefat and the other, anonymous, reviewer for their contribution to the peer review of this work. Primary Handling Editors: Alessandro Rizzo and Mengying Su.

Additional information

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhao, X., Gerasimou, S., Calinescu, R. et al. Bayesian learning for the robust verification of autonomous robots. Commun Eng 3, 18 (2024). https://doi.org/10.1038/s44172-024-00162-y

DOI: https://doi.org/10.1038/s44172-024-00162-y