Abstract

Detection and diagnosis of colon polyps are key to preventing colorectal cancer. Recent evidence suggests that AI-based computer-aided detection (CADe) and computer-aided diagnosis (CADx) systems can enhance endoscopists' performance and boost colonoscopy effectiveness. However, most available public datasets primarily consist of still images or video clips, often at a down-sampled resolution, and do not accurately represent real-world colonoscopy procedures. We introduce the REAL-Colon (Real-world multi-center Endoscopy Annotated video Library) dataset: a compilation of 2.7 M native video frames from sixty full-resolution, real-world colonoscopy recordings across multiple centers. The dataset contains 350k bounding-box annotations, each created under the supervision of expert gastroenterologists. Comprehensive patient clinical data, colonoscopy acquisition information, and polyp histopathological information are also included for each video. With its unprecedented size, quality, and heterogeneity, the REAL-Colon dataset is a unique resource for researchers and developers aiming to advance AI research in colonoscopy. Its openness and transparency facilitate rigorous and reproducible research, fostering the development and benchmarking of more accurate and reliable colonoscopy-related algorithms and models.

Background & Summary

Colorectal cancer (CRC) remains a significant global health concern, with approximately two million new cases detected annually1,2. Since more than 95% of CRC cases originate from premalignant polyps (adenomas), their detection and removal can substantially reduce the incidence and mortality of CRC3,4. Colonoscopy, a well-established screening procedure, has positively impacted CRC incidence in countries where it has been introduced5. However, the inherent variability in the quality of colonoscopy, due to its high dependence on human skill and vigilance, poses challenges to its effectiveness as a screening tool6,7.

In response to these challenges, Artificial Intelligence (AI) has emerged as a promising tool for augmenting human capabilities during live colonoscopy procedures8. Its potential as a reliable tool for improving performance and standardizing screening is increasingly being recognized9. Evidence from randomized controlled trials highlights the effectiveness of computer-aided polyp detection (CADe) systems in preventing missed polyps, thereby enhancing the quality of colonoscopy procedures10,11. Furthermore, interest in computer-aided diagnosis (CADx) has grown, given its potential to assist real-time decision-making on polyp optical characterization12,13.

Despite the promising advances in this field, which have been driven primarily by large MedTech corporations, significant challenges remain. The high cost and logistical complexity of acquiring and labeling large video recording datasets have limited the involvement of academic centers, with scientific projects stemming from universities focusing primarily on small collections of still images or short video clips14,15,16,17,18,19,20,21,22,23,24,25. However, colonoscopy CAD(e/x) systems require the integration of video understanding algorithms that can execute a range of computer vision functions, including image and video classification, object classification, detection, and segmentation. Tables 1 and 2 summarize the datasets available for open research on polyp localization and classification. Only two datasets include information on polyp size (the SUN and PICCOLO datasets), no dataset is dedicated to polyp tracking, and no dataset offers a comprehensive annotation of full, unaltered colonoscopy procedures. Instead, open datasets often focus on short video clips that include frames with polyps and omit extensive portions of colonoscopy videos without polyps (negative frames), or they sample only a small fraction of these negative frames. In contrast, real colonoscopy videos consist of 80–90% negative frames (as also illustrated in Fig. 4), which are important for realistic AI model benchmarking and training, as outlined by recent literature23,26. Furthermore, video frames in public datasets are seldom at native spatial-temporal resolution and are typically sourced from a limited number of centers.

In the real world, physicians do not perform tasks such as polyp detection and classification by statically evaluating still or nearly perfect images or short video clips, but instead via a process of temporal visual information reasoning7,27. The discrepancy between the available datasets and the real-world scenario inevitably affects both the design and development of CAD(e/x) algorithms: a large part of academic research to date still focuses on frame-by-frame approaches and places little emphasis on live processing speed and latency or on full-procedure evaluation, resulting in sub-optimal learning and unrealistic AI model performance assessments13,23,26,28. Similarly, open research challenges18,28,29,30,31,32, primarily centered on the accuracy of polyp detection, segmentation, and classification tasks, have gradually shifted their focus towards enhancing model robustness, speed and efficiency. However, these challenges, which employ the datasets detailed in Tables 1 and 2, inherently reflect the above-mentioned limitations by not fully capturing the dynamic and complex nature of real-world colonoscopy procedures.

This paper aims to bridge the gap between open and privately-funded research by introducing a comprehensive, multi-center dataset of unaltered, real-world colonoscopy videos. The REAL-Colon (Real-world multi-center Endoscopy Annotated video Library) dataset comprises recordings of sixty colonoscopies. In creating this dataset, a consortium comprising Sano Hospital in Hyogo, Japan; University Hospital of St. Pölten, Austria; and Ospedale Nuovo Regina Margherita in Rome, Italy, each provided a subset of fifteen patients. These patient cases were drawn from distinct clinical studies wherein colonoscopy procedures were documented on video as part of the study protocol. Additionally, Cosmo Intelligent Medical Devices contributed by supplementing the dataset with fifteen patient cases from one of their sponsored studies conducted within the United States. Crucially, Cosmo Intelligent Medical Devices also annotated the entire dataset of 2.7 M image frames to the highest quality standard.

For each video, each colorectal polyp has been annotated with a bounding box in every video frame where it appeared. The annotations were produced by trained medical image annotation specialists, supervised by expert gastroenterologists to ensure their accuracy and consistency. Polyp information, including histology, size, and anatomical site, has been recorded, double-checked by annotation specialists and at least one experienced gastroenterologist, and reported with several other clinical variables. Patient variables obtained from electronic case report forms (eCRF), including endoscope brand and bowel cleanliness score (BBPS), have also been collected for each video. As illustrated in Tables 1 and 2, the REAL-Colon dataset stands unparalleled in its scale, quality, and diversity, offering a singular asset for researchers and developers dedicated to pushing the boundaries of AI in colonoscopy. Furthermore, REAL-Colon uniquely enables comprehensive benchmarking of polyp detection algorithms against the backdrop of authentic, unedited full-procedure videos, markedly distinguishing our contribution from existing datasets.

Methods

Data cohorts

The REAL-Colon dataset is a compilation of colonoscopy video recordings that combines diverse endoscopy practices across various geographical regions, thereby enhancing the heterogeneity of the physicians' maneuvers captured during the procedures. Each colonoscopy has been recorded in its entirety, from start to finish, at maximum resolution, devoid of any pauses or interruptions. In the dataset, the first clinical study, denoted as "001", is a trial sponsored by Cosmo Intelligent Medical Devices. It pertains to the regulatory approval of an AI medical device platform, GI Genius, in the United States. This randomized controlled trial (identified as NCT03954548 in ClinicalTrials.gov) was conducted from February 2020 to May 2021 in Italy, the United Kingdom, and the United States11. However, only patients from the three participating U.S. clinical centers were included in the REAL-Colon dataset. The second clinical study, tagged as "002" in the dataset, is a single-center, prospective non-profit study (identifier NCT04884581 in ClinicalTrials.gov) conducted in Italy from May 2021 to July 202133. In addition to these structured pre-registered studies, two clinical centers contributed video-recorded colonoscopies from their internal acquisition campaigns designed for scientific research. The first campaign, termed "003" in the dataset, recruited patients from the University Hospital of St. Pölten, Austria's Gastroenterology and Hepatology and Rheumatology department. The second campaign, labeled "004", involved patients from the Gastrointestinal Center, Sano Hospital, Hyogo, Japan.

Before participating, all patients from the four data acquisition efforts provided written consent for their anonymised data to be used in research studies. The two clinical studies ("001" and "002") and the two acquisition campaigns ("003" and "004") each received the necessary approvals from their respective Ethical Committees or local Institutional Review Boards. These were as follows: "001" - Mayo Clinic (approval number: 19-007492), "002" - Comitato Etico Lazio 1 (611/CE Lazio1), "003" - Ethikkommission Niederösterreich (GS1-EK-3/196-2021), "004" - Sano Hospital (202209-02). All relevant patient data during the acquisition were recorded via an electronic case report form (eCRF) system.

The participants from all four cohorts were patients aged 40 years or above undergoing colonoscopy for primary CRC screening, post-polypectomy surveillance, positive fecal immunochemical tests, or symptom/sign-based diagnosis. Exclusion criteria included a history of CRC or inflammatory bowel disease, a history of colonic resection, emergency colonoscopy, or ongoing antithrombotic therapy. Standard resection techniques were utilized throughout each colonoscopy procedure to excise detected polyps. Endoscopists documented various polyp characteristics, including size, anatomical location, and morphological traits, in accordance with the Paris classification34. Subsequently, each polyp, whether resected or biopsied, underwent expert pathological analysis. Resected tissue samples confirmed histologically as colorectal polyps were classified per the Revised Vienna Classification35. By integrating data from these four diverse cohorts across six medical centers spanning three continents, we have strived to build a comprehensive, heterogeneous dataset that robustly represents real-world colonoscopy practices.

Video recording

The video acquisition process was designed to preserve the quality of the original footage and was the same for the four cohorts. Professional video recorders, capable of capturing YUV video streams with 4:2:2 chroma subsampling and 10-bit depth, were used to ensure no loss in color or resolution. These recorders captured the original streams at a resolution of 1920 × 1080 (interlaced), resulting in high-definition video material. Standard endoscopy equipment manufactured by Olympus and Fujifilm was used. Following the recording, videos were compressed using the high-quality Apple ProRes codec to preserve image quality while reducing the overall file size. The videos were subsequently converted into individual frames using the ffmpeg software tool, opting for JPEG to balance image quality with file size, thereby managing the overall dataset size. This conversion was carried out by setting the -qscale:v, -qmin, and -qmax options to 1, thus minimizing compression impact while avoiding the large file sizes associated with lossless formats.
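
To make the frame-extraction settings concrete, the following Python sketch assembles an ffmpeg command with the three quality options set to 1. It is only an illustration: the input file name, the output directory, and the `%06d.jpg` naming pattern are hypothetical placeholders, and the command is not necessarily the exact invocation used to build the dataset.

```python
from __future__ import annotations

import shlex
from pathlib import Path


def build_extract_command(video_path: str, out_dir: str) -> list[str]:
    """Return an ffmpeg argument list that writes video frames as numbered JPEGs.

    The -qscale:v, -qmin and -qmax options are all set to 1, as described
    in the text, to keep JPEG compression as light as possible.
    """
    # Hypothetical naming pattern; the dataset's real file names may differ.
    pattern = str(Path(out_dir) / "%06d.jpg")
    return [
        "ffmpeg",
        "-i", video_path,
        "-qscale:v", "1",
        "-qmin", "1",
        "-qmax", "1",
        pattern,
    ]


# Print the command instead of running it, so the sketch has no side effects.
cmd = build_extract_command("recording.mov", "frames")
print(shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run` once ffmpeg is installed and the output directory exists.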

Anonymization protocol

We established a full-anonymization protocol before transferring videos and clinical data from individual studies to Cosmo Intelligent Medical Devices for dataset labeling and compilation. This protocol entailed the removal of all direct identifiers, including personal contact details. Specifically, videos were edited at source to eliminate any on-screen data from the local Electronic Medical Records (EMR) system, ensuring that frames were free from any information that could reveal patient or procedure identities. Additionally, all direct identifiers were removed at source from electronic Case Report Forms (eCRFs), and all dataset frames were cropped to the endoscope's field of view. Consequently, only demographic quasi-identifiers such as age and sex were shared with Cosmo Intelligent Medical Devices. This full-anonymization protocol, supported by stringent security and privacy measures, effectively reduced data stewardship obligations under GDPR and HIPAA terms.

Dataset design

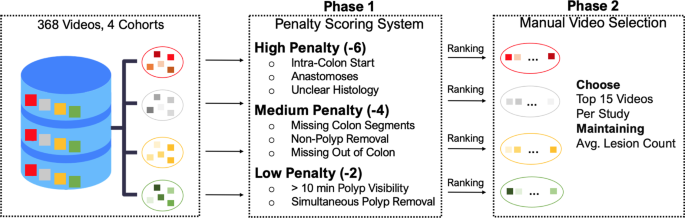

The selection of our dataset from an initial pool of 368 video recordings across four distinct cohorts aimed to create a diverse and representative dataset. To achieve this, we implemented a two-step strategy to curate a set of 60 videos, with fifteen videos independently sampled from each cohort, as depicted in Fig. 1.

Flowchart outlining the two-phase selection process for creating the REAL-Colon dataset from 368 video recordings across four distinct cohorts. Phase 1 applies a penalty scoring system based on video and histological criteria, leading to Phase 2, where the 15 videos per cohort are manually selected, after ranking, to ensure diversity and representation while maintaining the cohort average lesion count.

Our method was grounded in a penalty scoring system in the first selection phase (Fig. 1, Phase 1). We established three penalty categories: high (−6), medium (−4), and low (−2). Each penalty point was cumulative and calculated based on the deviation from ideal recording conditions. Low penalties were given to videos showing polyps visible for more than ten minutes, as such instances would skew the video duration towards long resection maneuvers. Simultaneous polyp removal also attracted a low penalty. This situation, where more than one polyp is resected with the same instrument, can lead to ambiguous associations between polyps shown in the video recordings and histological descriptions of polyps.

Medium penalties were assigned to videos that omitted out-of-colon sequences before and after the procedure. These segments of the video are important as AI algorithms must operate effectively beyond the colon, and their omission could potentially bias the recordings. Situations where all colon sections were not identifiable during withdrawal or when non-polyp biopsies and polypectomies were performed also received a medium penalty.

Videos that started directly within the colon, displayed anastomoses, or lacked histological information for some excised lesions were subjected to high penalties. Importantly, high penalty points were also given when the histological results for non-polyps (lymphoid follicles, lymphoid aggregates, ulcers, lipomas, or healthy tissue) were unclear. This could imply random biopsies, incorrect resections, or ambiguous histology, all of which could lead to confusion or misinterpretation of the data.
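
The cumulative penalty scheme of Phase 1 can be sketched in a few lines of Python. The penalty weights (−2, −4, −6) and the conditions come from the text; the flag names, the video identifiers, and the ranking helper are hypothetical and serve only to illustrate how such a score could be computed.

```python
from __future__ import annotations

# Penalty weights taken from the text; the flag names below are hypothetical.
LOW, MEDIUM, HIGH = -2, -4, -6

PENALTIES = {
    "polyp_visible_over_10_min": LOW,
    "simultaneous_polyp_removal": LOW,
    "missing_out_of_colon_sequences": MEDIUM,
    "colon_sections_not_identifiable": MEDIUM,
    "non_polyp_biopsy_or_polypectomy": MEDIUM,
    "starts_inside_colon": HIGH,
    "anastomosis_visible": HIGH,
    "missing_histology": HIGH,
    "unclear_non_polyp_histology": HIGH,
}


def penalty_score(flags: set[str]) -> int:
    """Sum the penalties of every condition flagged for one video."""
    return sum(PENALTIES[flag] for flag in flags)


def rank_videos(videos: dict[str, set[str]]) -> list[tuple[str, int]]:
    """Order videos from least to most penalized (score closest to 0 first)."""
    scored = [(video, penalty_score(flags)) for video, flags in videos.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical candidate videos with their flagged conditions.
candidates = {
    "video_a": set(),
    "video_b": {"simultaneous_polyp_removal"},
    "video_c": {"starts_inside_colon", "polyp_visible_over_10_min"},
}
for video, score in rank_videos(candidates):
    print(video, score)
```

A ranking of this kind only orders the candidates; the final choice of fifteen videos per cohort was made manually, as described for Phase 2.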

During the second phase (Fig. 1, Phase 2), our selection favored videos with the least penalties. We balanced this with the need to maintain a representative dataset. For this reason, even among the videos with the highest penalties, we ensured the average lesion count within each selected cohort approximated the total dataset's average. This procedure preserved the distribution of lesions in our final selection, mirroring the original dataset. The highest penalty within our curated REAL-Colon dataset was −4.

Given their critical role in CAD model training, no specific criteria were initially set for histology ground truth classifications (adenoma, non-adenoma). However, recognizing their importance, we conducted a retrospective review and found that the distribution of these classifications was in line with the originating clinical studies, confirming the validity of our approach.

Clinical data

The REAL-Colon dataset is structured such that each video corresponds to an individual patient, and may or may not contain instances of colon polyps. As such, there are clinical values that pertain to the patient and others that are specific to the polyps. Clinical information associated with each video includes patient demographics such as age and sex and technical details of the endoscope used, including the brand, the frames per second (fps) of the video, and the number of polyps resected during the procedure.

An essential quality parameter at the patient level is the evaluation of the colon's cleanliness. Before a colonoscopy, patients are administered a laxative to eliminate solid waste from the digestive tract swiftly. The effectiveness of this preparation procedure is routinely evaluated using a clinical scale known as the Boston Bowel Preparation Scale (BBPS)5. This scale, utilized by endoscopists and ranging from 0 to 9, provides a measure of the quality of bowel preparation following cleansing procedures, thereby enhancing the clinical relevance of the dataset.

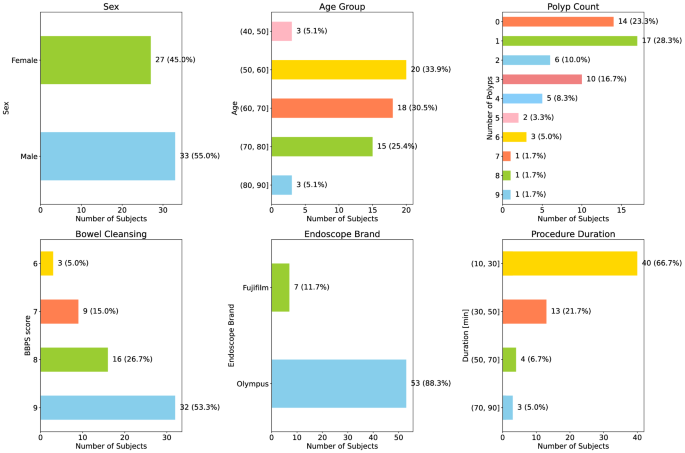

The dataset encompasses a broad range of patients, with an average age of 64.6 ± 10.7 and a male predominance of 55%. Notably, 14 out of the 60 videos (representing 23%) within the dataset do not contain any polyps, which underscores the variety of clinical scenarios captured.

For a comprehensive understanding and visualization of the distribution of these variables within our dataset, we direct readers to Fig. 2. This figure presents histograms detailing these parameters, thereby providing a more holistic view of the dataset.

Polyp detection annotation

A team of ten medical image annotation specialists executed a comprehensive bounding box annotation protocol under the supervision of expert gastroenterologists. A key aspect of the protocol involved annotating the polyp, beginning from its total resection and proceeding in reverse frame by frame until its initial appearance. This approach facilitated lesion tracking across sequential frames, even when the lesions were temporarily invisible.

To accomplish the task, the team employed a specialized, in-house annotation tool from Cosmo Intelligent Medical Devices. Although not available for public use, this tool mirrors the functionalities of many other video annotation software, enabling the sequential identification of polyps and the application of bounding boxes around them within each frame. A short video showcasing the bounding box annotation process with this tool has been included as Supplementary Material at https://doi.org/10.6084/m9.figshare.25315261.

To ensure accuracy and consistency in the polyp annotation process, we adopted an iterative approach. Expert gastroenterologists used a visualization tool that allowed for pausing, fast-forwarding, and slowing down to review each polyp annotation. Any issues identified were addressed during weekly collaborative meetings by returning the annotations to the specialists for correction. These sessions provided a platform for collaboration, promoting valuable exchanges between the annotation team and the supervising expert gastroenterologists.

This procedure culminated in a dataset comprising 2,757,723 frames and 132 excised colorectal polyps. The process yielded a total of 351,264 bounding box annotations.

Polyp histopathological information

Each annotation of polyps underwent cross-verification against its corresponding eCRF for each patient. The objective of this validation process, supervised by an expert gastroenterologist, was to eliminate any potential discrepancies between the video annotations and the corresponding histological data.

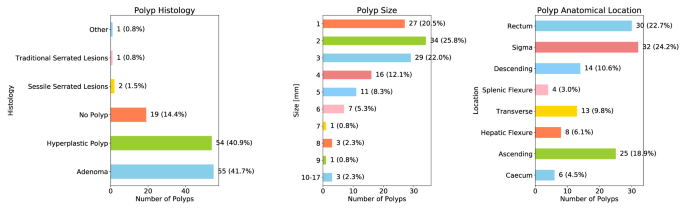

The dataset incorporated detailed information for each polyp, including size, colon anatomical location, and histopathological results. The distribution of this data is represented by histograms in Fig. 3. Notably, the histological analysis indicated that out of 132 resected polyps, 19 (14%) were not histologically classified as polyps and were labeled "No Polyp". These were identified as lymphoid follicles, lymphoid aggregates, ulcers, lipomas, or healthy tissue.

Moreover, the dataset presents comprehensive histological data and maintains a balanced distribution between adenomatous and non-adenomatous polyps, constituting 40% each. One polyp was classified as "other," which was identified as a fibrous anal polyp.

Data Records

The complete REAL-Colon dataset36 is now available for download on Figshare at https://doi.org/10.25452/figshare.plus.22202866. The dataset has been made available under the Creative Commons Attribution (CC BY) license, facilitating both educational and research applications. Users are encouraged to acknowledge this paper when utilizing the dataset in their work. The Supplementary Material37 for this work can be found at https://doi.org/10.6084/m9.figshare.25315261.

Bounding box annotations for each ground-truth polyp detection in the dataset were stored in the MS COCO format38. An XML file was generated for each frame, following the MS COCO template. The dataset includes the following components:

- 60 compressed folders titled SSS-VVV_frames, each containing frames from a specific recording.
- 60 compressed folders titled SSS-VVV_annotations, each encompassing the annotations for each frame.
- A video_info.csv file, providing metadata for each video. This includes unique video ID, video FPS, number of frames, original cohort (from 001 to 004), patient's age and sex, number of polyps discovered in the video, BBPS score, and the brand of the endoscope used.
- A lesion_info.csv file, offering metadata for each polyp, such as unique polyp ID, the unique video ID it belongs to, polyp size (in millimeters), the polyp's colon location, histology, and extended histology, which presents additional information from the histological report in the eCRF.
- A dataset_description.md file, which is a README file providing an overview of the dataset.
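
As a small aid for checking a download, the sketch below enumerates the 120 archive names implied by the SSS-VVV naming scheme. It assumes that SSS is the cohort number (001 to 004) and VVV the video number within the cohort (001 to 015), which matches the video identifiers used elsewhere in this paper; the archive file extension is deliberately left out because it is not stated here.

```python
from __future__ import annotations


def expected_archives(n_cohorts: int = 4, videos_per_cohort: int = 15) -> list[str]:
    """List the frame and annotation archive names for every video.

    Assumes the SSS-VVV pattern means <cohort>-<video>, both zero-padded
    to three digits.
    """
    names = []
    for cohort in range(1, n_cohorts + 1):
        for video in range(1, videos_per_cohort + 1):
            video_id = f"{cohort:03d}-{video:03d}"
            names.append(f"{video_id}_frames")
            names.append(f"{video_id}_annotations")
    return names


archives = expected_archives()
print(len(archives), archives[0], archives[-1])
```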

Technical Validation

Polyp dynamics and characteristics

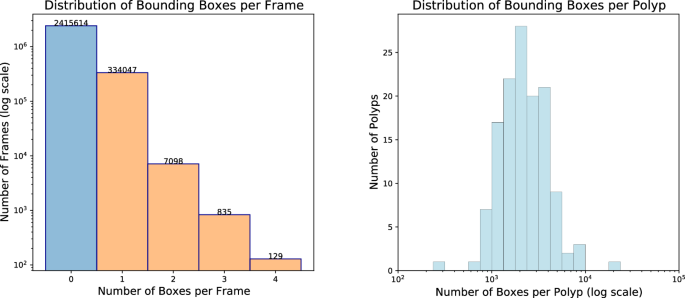

Figure 4 (left) displays the distribution of bounding boxes per frame in the REAL-Colon dataset36. Of 2,757,723 total frames, 87.6% (2,415,614) do not contain any bounding box annotation, while 12.4% (342,109) feature at least one of the 132 excised colorectal polyps. Notably, less than 0.3% of all frames (2.7% of positive frames) contain multiple polyps, with a maximum of four in a single frame. When considering only the frames within the first 5 seconds of polyp appearance, the occurrence of multiple polyps increases to 7.9%. This is relevant prior information for learning-based computer vision algorithms and highlights the distinct nature of detection, tracking, and classification tasks in colonoscopy compared to standard computer vision tasks that often involve simultaneous detection of numerous classes or objects, as seen in MS COCO or Kinetics datasets.
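
The frame counts quoted in this paper can be cross-checked with a few lines of arithmetic. The Python sketch below uses only the reported totals (frames, negative frames, positive frames, and bounding boxes); the variable names are ours.

```python
# Totals reported in this paper.
TOTAL_FRAMES = 2_757_723
NEGATIVE_FRAMES = 2_415_614
POSITIVE_FRAMES = 342_109
TOTAL_BOXES = 351_264

# Negative and positive frames partition the dataset.
assert NEGATIVE_FRAMES + POSITIVE_FRAMES == TOTAL_FRAMES

negative_pct = 100 * NEGATIVE_FRAMES / TOTAL_FRAMES
positive_pct = 100 * POSITIVE_FRAMES / TOTAL_FRAMES
# Values slightly above 1 mean that only a few frames hold several boxes.
boxes_per_positive_frame = TOTAL_BOXES / POSITIVE_FRAMES

print(f"negative frames: {negative_pct:.1f}%")
print(f"positive frames: {positive_pct:.1f}%")
print(f"boxes per positive frame: {boxes_per_positive_frame:.3f}")
```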

Figure 4 (right) illustrates the distribution of bounding boxes per polyp, indicating a mean appearance duration of 1 minute and 15 seconds per polyp, with the longest enduring over 12 minutes.

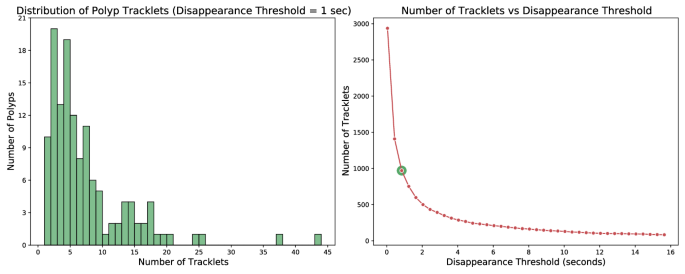

The bounding box detections corresponding to a single polyp are not necessarily contiguous. They may fracture into numerous tracklets as polyps can momentarily disappear from view. This phenomenon is illustrated on the left side of Fig. 5, which shows a histogram of the number of tracklets into which the dataset polyps are divided. In this figure, the criterion for identifying a new tracklet is the absence of a bounding box instance for that polyp for more than one second. Under this criterion, only 10 of the 132 polyps appear continuously, without disappearing from view for more than 1 second. This underlines the relevance of polyp tracking for real-time colonoscopy applications, where accurately re-identifying tracklets is essential to avoid restarting the temporal analysis each time a polyp disappears. Furthermore, the right side of Fig. 5 presents the total number of tracklets that emerge when applying different temporal thresholds. For instance, when applying a 15-second threshold, over 100 tracklets persist, possibly signifying the most challenging cases.

Left: Histogram displaying the number of tracklets per polyp, using a 1-second threshold to identify separate tracklets. The x-axis represents the number of tracklets associated with each polyp, while the y-axis shows the count of polyps with that number of tracklets. Right: Plot illustrating the decrease in the number of tracklets as a function of the disappearance threshold. Here, the x-axis signifies the disappearance threshold in seconds, which determines when a new tracklet is created once a polyp disappears for longer than the threshold duration. The y-axis reports the resulting number of tracklets.
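
The tracklet criterion can be expressed compactly in code. The following Python sketch splits the frames in which one polyp is annotated into tracklets whenever the polyp is missing for longer than a threshold. Measuring the gap as the number of missing frames divided by the video frame rate is our assumption, and the frame indices in the example are made up.

```python
from __future__ import annotations


def split_into_tracklets(
    frame_indices: list[int], fps: float, max_gap_s: float = 1.0
) -> list[list[int]]:
    """Group the annotated frames of one polyp into tracklets.

    A new tracklet starts whenever the polyp has had no bounding box for
    more than `max_gap_s` seconds.
    """
    frames = sorted(frame_indices)
    if not frames:
        return []
    tracklets = [[frames[0]]]
    for prev, cur in zip(frames, frames[1:]):
        missing_frames = cur - prev - 1
        if missing_frames / fps > max_gap_s:
            tracklets.append([cur])  # gap too long: open a new tracklet
        else:
            tracklets[-1].append(cur)  # short gap: same tracklet
    return tracklets


# Made-up example: a polyp seen in three bursts of a 30 fps video.
frames = [0, 1, 2, 40, 41, 200]
for threshold in (1.0, 2.0, 15.0):
    n = len(split_into_tracklets(frames, fps=30, max_gap_s=threshold))
    print(f"threshold {threshold:>4} s -> {n} tracklet(s)")
```

Raising the threshold merges neighbouring tracklets, which is the effect shown on the right side of Fig. 5.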

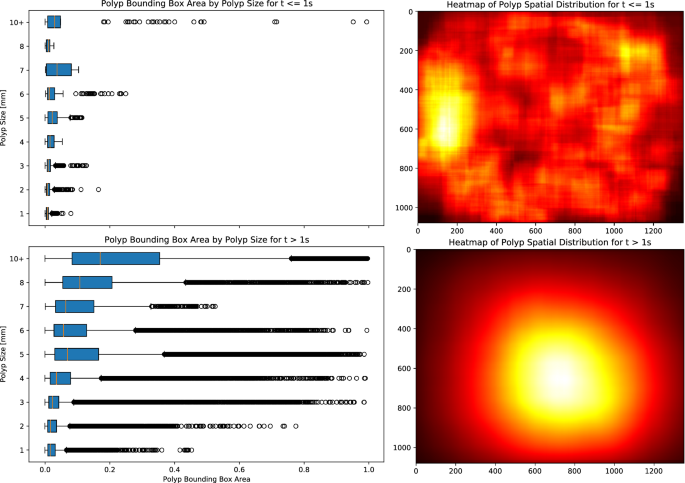

Figure 6 (right) presents two heatmaps illustrating the spatial distribution of polyps within the frames, stratified by their time of appearance: the initial second and thereafter. The heatmaps emphasize that endoscopists tend to frame polyps closer to the center of the image as time progresses, despite their initial scattered appearance. In contrast, Fig. 6 (left) exhibits two boxplots contrasting the bounding box dimensions over time with the actual sizes (in millimeters) as indicated in the dataset. These plots reveal that irrespective of their actual sizes, all bounding boxes initially have smaller dimensions during early detection. Additionally, it can be observed that as detection progresses, all polyps are characterized by numerous bounding boxes occupying more than 50% of the image. This indicates that determining polyp size solely based on bounding box dimensions is challenging and requires additional reasoning on contextual spatial-temporal information.

Boxplots contrasting actual polyp sizes with bounding box dimensions (left) and heatmaps depicting bounding box placements (right) during the early phase of appearance (≤1 s) and afterwards (>1 s). In the early frames, polyps are captured within small bounding boxes scattered across the colon. As time progresses, the endoscopist centralizes the polyps in the frame, leading to larger bounding boxes with more variable dimensions.

Polyp detection

This section presents a technical validation conducted to evaluate polyp detection in the REAL-Colon dataset, primarily focusing on verifying the quality and usefulness of the dataset. For this task, we leveraged an off-the-shelf SSD object detection model39, as implemented in NVIDIA's DeepLearningExamples repository. Comprehensive training and testing code is accessible via our GitHub repositories at https://github.com/cosmoimd/DeepLearningExamples.

Our experiments do not merely target the absolute performance of the proposed method on the REAL-Colon dataset; instead, we focus on evaluating the dataset's utility for this specific task. Specifically, we aimed to ascertain the influence of possessing a substantial sample set for each polyp on the accuracy of the trained model. Moreover, we conducted multiple training experiments incorporating varying proportions of negative frames from each video (1%, 5%, 10% and 100% of negative frames from each video) in the training dataset to evaluate their impact on model performance.
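
One way to build training sets with a given proportion of negative frames per video is sketched below in Python. The text does not state how the negative frames were chosen, so uniform random sampling with a fixed seed is an assumption made here purely for illustration, as are the function and parameter names.

```python
from __future__ import annotations

import random


def sample_negative_frames(
    negative_frames: list[int], fraction: float, seed: int = 0
) -> list[int]:
    """Return a reproducible random subset of one video's negative frames.

    `fraction` is the share of negative frames to keep, e.g. 0.01, 0.05,
    0.10 or 1.0 for the four settings described in the text.
    """
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    frames = list(negative_frames)
    if fraction == 1.0:
        return frames
    # Keep at least one frame when the video has any negative frames.
    k = max(1, round(len(frames) * fraction)) if frames else 0
    rng = random.Random(seed)
    return sorted(rng.sample(frames, k))


# Made-up video with 1,000 negative frames.
negatives = list(range(1000))
for fraction in (0.01, 0.05, 0.10, 1.0):
    kept = sample_negative_frames(negatives, fraction)
    print(f"{fraction:.0%}: {len(kept)} frames kept")
```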

We partitioned the first 10 videos from each cohort into the training set (videos 00X-001 to 00X-010), the following two videos into the validation set (videos 00X-011 and 00X-012), and the remaining three videos into the test set (videos 00X-013 to 00X-015). For each model training session, we used a batch size of 96 and an image resolution of 300 × 300. Standard augmentation techniques, including scaling, random cropping, horizontal flipping, and image normalization, were applied, using the same parameters as those utilized for the MS COCO Dataset in the repository. All models were trained and tested on an Nvidia Tesla V100 GPU.
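
The partition rule translates directly into a small helper. The Python sketch below maps a video identifier of the form 00X-VVV to its split; the function name is ours, and the check at the end simply confirms that four cohorts of fifteen videos give 40 training, 8 validation, and 12 test videos.

```python
from collections import Counter


def split_for(video_id: str) -> str:
    """Map a video ID such as '002-011' to 'train', 'val' or 'test'."""
    _cohort, video = video_id.split("-")
    number = int(video)
    if not 1 <= number <= 15:
        raise ValueError(f"unexpected video number in {video_id!r}")
    if number <= 10:
        return "train"  # videos 001 to 010
    if number <= 12:
        return "val"  # videos 011 and 012
    return "test"  # videos 013 to 015


all_ids = [f"{c:03d}-{v:03d}" for c in range(1, 5) for v in range(1, 16)]
print(Counter(split_for(video_id) for video_id in all_ids))
```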

In Table 3, we report performance in terms of Average Precision (AP), computed as the mean AP across IoU thresholds from 0.5 to 0.95 in steps of 0.05, where IoU measures the overlap between model bounding box predictions and ground-truth (GT) boxes. AP50 and AP75 instead refer to the model Average Precision at the single IoU thresholds of 0.5 and 0.75, respectively; the higher the threshold, the more accurately a prediction must localize the polyp to count as correct. For every model, we also report the per-video False Positive Rate (FPR) and True Positive Rate (TPR). FPR is defined as the percentage of negative frames in which the model erroneously detected bounding boxes, that is, the rate of false alarms on negative frames; TPR is the percentage of positive frames in which at least one bounding box was detected. Throughout the training process, the validation set was used to monitor the AP at various epochs, and the model performing best on the validation set was selected. All models were evaluated on the same test set of 591,647 frames, comprising all frames (with and without boxes) from the 12 test videos.
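
For readers less familiar with these metrics, the Python sketch below shows the IoU computation, the ten IoU thresholds over which AP is averaged, and the frame-level FPR and TPR as defined in this section. The box format (x_min, y_min, x_max, y_max) and the per-frame count inputs are assumptions made for illustration; the sketch also assumes that predictions have already been filtered by a confidence threshold, and it is not the evaluation code used for Table 3.

```python
from __future__ import annotations


def iou(box_a: tuple, box_b: tuple) -> float:
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = min(box_a[2], box_b[2]) - max(box_a[0], box_b[0])
    inter_h = min(box_a[3], box_b[3]) - max(box_a[1], box_b[1])
    inter = max(0.0, inter_w) * max(0.0, inter_h)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten IoU thresholds over which AP is averaged: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def frame_level_rates(gt_counts: list[int], pred_counts: list[int]) -> tuple:
    """Return (FPR, TPR) in percent for one video.

    gt_counts[i] and pred_counts[i] are the numbers of ground-truth and
    predicted boxes in frame i. As in the text, a positive frame counts as
    detected when at least one box is predicted in it.
    """
    neg = [p for g, p in zip(gt_counts, pred_counts) if g == 0]
    pos = [p for g, p in zip(gt_counts, pred_counts) if g > 0]
    fpr = 100 * sum(p > 0 for p in neg) / len(neg) if neg else float("nan")
    tpr = 100 * sum(p > 0 for p in pos) / len(pos) if pos else float("nan")
    return fpr, tpr


print(iou((0, 0, 10, 10), (5, 0, 15, 10)))
print(frame_level_rates([0, 0, 0, 0, 1, 1], [0, 1, 0, 0, 1, 0]))
```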

The initial analysis presented in Table 3 highlights that incorporating a greater number of positive samples, despite them representing identical polyps, significantly boosts the model's performance. Subsequent rows detail the effects of varying negative sample proportions in the training set, indicating optimal SSD model performance with the full inclusion of available negative images alongside all positive frames. Although the current strategy prioritizes this comprehensive approach, future advancements might benefit from a predefined negative-to-positive frame ratio per training epoch to refine the training process further. The FPR and TPR results also underscore accuracy improvements by fully incorporating negative frames during training. The FPR is a critical metric because false alerts can lead to operator fatigue and distractions. Minimizing FPR throughout the entire procedure, while maintaining a high TPR, is essential to develop robust CADe systems. The REAL-Colon dataset enables such evaluations, facilitating the optimization of these key performance indicators.

In Table 4, we present the performance of the best model from Table 3 (last row), detailing AP (Average Precision) and Average Recall (AR) across a spectrum of IoU thresholds from 0.5 to 0.95. This analysis encompasses different subsets of positive polyp frames within the test set, specifically distinguishing between frames containing diminutive or non-diminutive polyps, adenomatous polyps (including adenoma and traditional serrated adenoma (TSA) polyps) versus non-adenomatous polyps (including polyps with sessile serrated lesion (SSL) and hyperplastic (HP) histology), and excluding polyps not falling into these two sub-categories. Additionally, by integrating polyp anatomical location information with histology data, we were able to compute performance metrics for hyperplastic polyps in the sigmoid-rectum compared to all other locations. This distinction is crucial because hyperplastic polyps in the sigmoid-rectum exhibit different biological behaviors and cancer risk profiles compared to those in other parts of the colon, representing an example of the importance of location-specific performance evaluation for more precise and clinically relevant AI model assessments. Ideally, a robust model should demonstrate uniformly high performance across these varied classifications. Future efforts should concentrate on exploring augmentation techniques, algorithmic modifications, and training strategies to ensure such robust performance across all categories.

In Table 5, we present the performance of the top-performing model from Table 3 on frames from the first second and the first three seconds of polyp appearance, rather than on all positive frames. This early detection window is critical, and the REAL-Colon dataset facilitates such analysis due to its frame-by-frame annotation at full temporal resolution. The results underscore the difficulty of accurately identifying polyps during these initial moments. Achieving high early detection accuracy while keeping the false positive rate low throughout the video, despite rapid movements, washing, cleaning, and the appearance of polyp-like structures, presents a significant challenge. The seemingly low AP results reported in Table 3 were anticipated, as the REAL-Colon dataset mirrors complex real-world conditions. We advocate for dedicated research efforts to enhance model performance on this real-world benchmark, starting with the comparison of more advanced object detection models against the simple SSD baseline results documented in this work.
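The early detection window can be extracted from frame-level annotations as in the minimal sketch below. It assumes that a polyp's appearance is defined by its first annotated frame and that the video frame rate is known. The function name `early_window_frames` and the frame rate of 30 used in the usage note are hypothetical and not taken from the dataset documentation.

```python
def early_window_frames(annotated_frames, fps, window_seconds):
    """Return the annotated frames within `window_seconds` of a polyp's first appearance.

    `annotated_frames` is an iterable of frame indices in which one polyp is
    annotated; `fps` is the (assumed known) frame rate of the video.
    """
    frames = sorted(annotated_frames)
    if not frames:
        return []

    first_frame = frames[0]
    # Exclusive upper bound of the window, expressed in frame indices.
    window_end = first_frame + int(round(fps * window_seconds))
    return [frame for frame in frames if frame < window_end]
```

For a polyp annotated in frames 100, 101, 130, 131, and 250 of a video at an assumed 30 frames per second, the one-second window keeps frames 100 and 101, and the three-second window also keeps frames 130 and 131.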

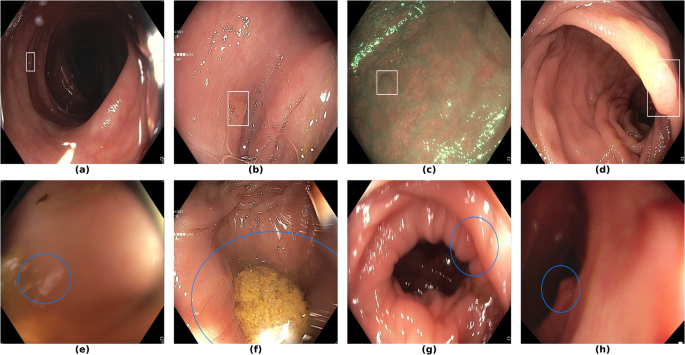

Finally, in Fig. 7, we display examples of false negatives and false positives from the test set, generated by the best-performing model. To visually assess the performance on a whole video, we have uploaded a 60-minute colonoscopy video featuring 6 polyps, the longest in our test set, at https://doi.org/10.6084/m9.figshare.25315261 (with predictions marked in cyan and ground truth boxes in white). The image examples illustrate how the model struggles with small, occluded, or poorly imaged polyps, and generates false positives in areas that visually resemble polyps, often due to motion or suboptimal imaging.

Sample images from the testing dataset, with results from the best-performing model. White boxes are the ground truth annotations, blue ellipses are the model predictions. In the first row, examples of false negative polyps are shown: (a) a small and distant polyp, (b) a polyp partially covered by water/bubbles, (c) a polyp framed in blue light, (d) a large polyp near the image boundary and overexposed. In the second row, examples of false positive detections are shown: (e) the model activates on an artifact due to stain and motion blur, (f) the model activates on a solid residue, (g) the model activates on an area of the colonic mucosa that is not well inflated, (h) the model activates on a dark and distant area of the colonic mucosa whose shape is similar to a polyp.

Code availability

To facilitate downloading and exploring the dataset, we have made a set of Python scripts available in our GitHub repository at https://github.com/cosmoimd/real-colon-dataset. These scripts provide easy access to the data and assist in its analysis, enabling users to reproduce all the plots presented in this paper. Code for training and testing the polyp detection models can be found separately at https://github.com/cosmoimd/DeepLearningExamples.

References

Sung, H. et al. Global cancer statistics 2020: Globocan estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 71, 209–249 (2021).

Morgan, E. et al. Global burden of colorectal cancer in 2020 and 2040: incidence and mortality estimates from globocan. Gut 72, 338–344 (2023).

Bretthauer, M. et al. Effect of colonoscopy screening on risks of colorectal cancer and related death. N. Engl. J. Med. 387, 1547–1556 (2022).

Zorzi, M. Adenoma detection rate and colorectal cancer risk in fecal immunochemical test screening programs: An observational cohort study. Ann. Intern. Med. 176, 303–310 (2023).

Dekker, E. & Rex, D. K. Advances in crc prevention: Screening and surveillance. Gastroenterology 154, 1970–1984 (2018).

Kaminski, M. F., Robertson, D. J., Senore, C. & Rex, D. K. Optimizing the quality of colorectal cancer screening worldwide. Gastroenterology 158, 404–417 (2020).

Cherubini, A. & East, J. E. Gorilla in the room: Even experts can miss polyps at colonoscopy and how ai helps complex visual perception tasks. Dig. Liver Dis. 55, 151–153 (2023).

Ahmad, O. F. et al. Artificial intelligence and computer-aided diagnosis in colonoscopy: current evidence and future directions. Lancet Gastroenterol. Hepatol. 4, 71–80 (2019).

Berzin, T. M. Position statement on priorities for artificial intelligence in gi endoscopy: a report by the asge task force. Gastrointest. Endosc. 92, 951–959 (2020).

Repici, A. Efficacy of real-time computer-aided detection of colorectal neoplasia in a randomized trial. Gastroenterology 159, 512–520.e7 (2020).

Wallace, M. B. Impact of artificial intelligence on miss rate of colorectal neoplasia. Gastroenterology (2022).

Spadaccini, M. Computer-aided detection versus advanced imaging for detection of colorectal neoplasia: a systematic review and network meta-analysis. Lancet Gastroenterol. Hepatol. 6, 793–802 (2021).

Biffi, C. A novel ai device for real-time optical characterization of colorectal polyps. NPJ Digit. Med. 5, 84 (2022).

Bernal, J., Sánchez, J. & Vilariño, F. Towards automatic polyp detection with a polyp appearance model. Pattern Recognit. 45, 3166–3182 (2012).

Silva, J., Histace, A., Romain, O., Dray, X. & Granado, B. Toward embedded detection of polyps in wce images for early diagnosis of colorectal cancer. Int. J. Comput. Assist. Radiol. Surg. 9, 283–293 (2014).

Bernal, J. Wm-dova maps for accurate polyp highlighting in colonoscopy: Validation vs. saliency maps from physicians. Comput. Med. Imaging Graph. 43, 99–111 (2015).

Tajbakhsh, N., Gurudu, S. R. & Liang, J. Automated polyp detection in colonoscopy videos using shape and context information. IEEE Trans. Med. Imaging 35, 630–644 (2015).

Angermann, Q. et al. Towards real-time polyp detection in colonoscopy videos: Adapting still frame-based methodologies for video sequences analysis. In Proc. 4th Int. Workshop CARE and 6th Int. Workshop CLIP, MICCAI 2017, 29–41 (Springer, 2017).

Mesejo, P. et al. Computer-aided classification of gastrointestinal lesions in regular colonoscopy. IEEE Trans. Med. Imaging 35, 2051–2063 (2016).

Jha, D. et al. Kvasir-seg: A segmented polyp dataset. In Proc. 26th Int. Conf. MultiMedia Modeling, MMM 2020, 451–462 (2020).

Sánchez-Peralta, L. F. et al. Piccolo white-light and narrow-band imaging colonoscopic dataset: a performance comparative of models and datasets. Appl. Sci. 10, 8501 (2020).

Li, K. et al. Colonoscopy polyp detection and classification: Dataset creation and comparative evaluations. PLoS ONE 16, e0255809 (2021).

Misawa, M. et al. Development of a computer-aided detection system for colonoscopy and a publicly accessible large colonoscopy video database (with video). Gastrointest. Endosc. 93, 960–967 (2021).

Ma, Y., Chen, X., Cheng, K., Li, Y. & Sun, B. Ldpolypvideo benchmark: a large-scale colonoscopy video dataset of diverse polyps. In Proc. 24th Int. Conf. Med. Image Comput. Comput. Assist. Intervent., MICCAI 2021, 387–396 (2021).

Ali, S. et al. A multi-centre polyp detection and segmentation dataset for generalisability assessment. Sci. Data 10, 75 (2023).

Nogueira-Rodríguez, A., Glez-Peña, D., Reboiro-Jato, M. & López-Fernández, H. Negative samples for improving object detection: a case study in ai-assisted colonoscopy for polyp detection. Diagnostics 13, 966 (2023).

Reverberi, C. et al. Experimental evidence of effective human-ai collaboration in medical decision-making. Sci. Rep. 12, 14952 (2022).

Ali, S. et al. Assessing generalisability of deep learning-based polyp detection and segmentation methods through a computer vision challenge. Sci. Rep. 14, 2032 (2024).

Bernal, J. et al. Comparative validation of polyp detection methods in video colonoscopy: results from the miccai 2015 endoscopic vision challenge. IEEE Trans. Med. Imaging 36, 1231–1249 (2017).

Jha, D. et al. Medico multimedia task at mediaeval 2020: Automatic polyp segmentation. arXiv preprint arXiv:2012.15244 (2020).

Hicks, S. et al. Medico multimedia task at mediaeval 2021: Transparency in medical image segmentation. In Proc. MediaEval 2021 CEUR Workshop (2021).

Hicks, S. et al. Medai: Transparency in medical image segmentation. Nordic Machine Intelligence 1, 1–4 (2021).

Hassan, C., Balsamo, G., Lorenzetti, R., Zullo, A. & Antonelli, G. Artificial intelligence allows leaving-in-situ colorectal polyps. Clin. Gastroenterol. Hepatol. 20, 2505–2513.e4 (2022).

Participants in the Paris Workshop. The Paris endoscopic classification of superficial neoplastic lesions: esophagus, stomach, and colon. Gastrointestinal Endoscopy 58, S3–S43 (2003).

Schlemper, R. J. et al. The Vienna classification of gastrointestinal epithelial neoplasia. Gut 47, 251–255 (2000).

Biffi, C. et al. Real-colon dataset. Figshare https://doi.org/10.25452/figshare.plus.22202866 (2024).

Biffi, C. et al. Supplementary materials for real-colon: A dataset for developing real-world ai applications in colonoscopy. Figshare https://doi.org/10.6084/m9.figshare.25315261 (2024).

Lin, T.-Y. et al. Microsoft coco: Common objects in context. In Proc. 13th Eur. Conf. Comput. Vision, ECCV 2014, 740–755 (2014).

Liu, W. et al. Ssd: Single shot multibox detector. In Proc. 14th Eur. Conf. Comput. Vision, ECCV 2016, 21–37 (2016).

Acknowledgements

We wish to express our gratitude to Antonella Melina and Michela Ruperti for their assistance in coordinating the data acquisition and labeling process across the four data cohorts, to the Data Annotation Team at Cosmo Intelligent Medical Devices for their diligent efforts in annotating the dataset, to Erica Vagnoni and Stefano Bianchi for the development of the annotation software tool, and to Gabriel Marchese Aizenman for testing the GitHub repositories released with this work.

Author information

Contributions

C.B., P.S., and A.C. conceived the dataset. C.B. and P.S. curated the dataset and conducted the experiments. G.A., S.B., C.H., D.H., M.I., and A.M. collected the data. All authors reviewed the manuscript.

Ethics declarations

Competing interests

C.B., P.S., and A.C. are affiliated with Cosmo Intelligent Medical Devices, the developer of the GI Genius medical device. C.H. is a consultant for Medtronic and Fujifilm.

Additional information

Publisher's note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Biffi, C., Antonelli, G., Bernhofer, S. et al. REAL-Colon: A dataset for developing real-world AI applications in colonoscopy. Sci Data 11, 539 (2024). https://doi.org/10.1038/s41597-024-03359-0

DOI: https://doi.org/10.1038/s41597-024-03359-0