
Uploaded by Robin Michael

HAND GESTURE BASED 3D GAME

TABLE OF CONTENTS

CHAPTER 1: INTRODUCTION

1.1 Project overview

1.2 Organisation profile

1.3 Objectives of the Project

CHAPTER 2: REQUIREMENTS AND ANALYSIS

2.1 Preliminary Investigation

2.2 Existing System

2.3 Proposed System

2.4 Feasibility Analysis

2.4.1 Economic Feasibility

2.4.2 Technical Feasibility

2.4.3 Behavioural Feasibility

2.4.4 Ethical Feasibility

2.5 Planning and scheduling

CHAPTER 3: SYSTEM CONFIGURATION

3.1 Software / Hardware Specification

3.2 Functional Specification and User Characteristics

3.2.1 Use Case Diagram

3.3 Tools and Platform Used

CHAPTER 4: SYSTEM DESIGN

4.1 Basic Modules/Module Description

4.2 Procedural Design

SAINTGITS COLLEGE OF ENGINEERING 1


4.2.1 Use Case Diagram

4.2.2 System Flow chart

4.2.3 System Data Flow Diagram

4.3 User Interface Design

4.3.1 Input Design

4.3.2 Output Design

4.4 Algorithms used

4.4.1 Mediapipe

4.4.2 Single Shot Detector

CHAPTER 5: AGILE DOCUMENTATION

5.1 Agile Roadmap/Schedule

5.2 Agile Project Plan

5.3 Agile User Story

5.4 Agile Sprint Backlog

5.5 Agile Test Plan

CHAPTER 6: IMPLEMENTATION AND TESTING

6.1 Implementation Approach

6.1.1 Implementation methods

6.1.2 Implementation plan

6.2 Testing Method

CHAPTER 7: CONCLUSION & FUTURE SCOPE

7.1 Introduction

7.2 Limitations of the System

7.3 Future Scope


CHAPTER 8: APPENDICES

8.1 Source code

8.2 Screenshots

CHAPTER 9: REFERENCES

9.1 References


LIST OF FIGURES

Fig 2.5 Gantt Chart

Fig 3.1 Use Case Symbols

Fig 3.2 Use Case Diagram

Fig 3.3 SSD Architecture

Fig 4.4.2 SSD Architecture

Fig 5.2 Agile Project Plan

Fig 8.2.1 Mediapipe hand recognition

Fig 8.2.2 Unity 3D enemy bot

Fig 8.2.3 Game pause

Fig 4.4.1 Mediapipe hands


CHAPTER 1

INTRODUCTION


1.1 Project Overview

This system aims to develop an application for hand gesture recognition. The camera or webcam connected to the system is used to recognize human hand gestures. Based on the application's analysis of the recognized gestures, the corresponding operations are performed in the game through the game's default controls. The application contains a set of instructions for recognizing human hand gestures, which are made with the palms of the hands. The system comprises three modules: user interface, gesture recognition and analysis. The user interface module provides the graphical user interface the user needs to register the arm positions that will be used to perform gestures. The gesture recognition module recognizes the gestures. Finally, the analysis module analyzes the recognized hand gesture and triggers game controls based on the result. The system is also useful for players who may have injuries or fractures. Based on the analysis, the user can perform multiple functions with the same gesture. This provides better interaction and perception for players and makes games more interesting to play. A completely robust hand gesture recognition system is still under active research and development; this work can serve as a small step towards an extensible foundation for future work.
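To make the split between the recognition and analysis modules concrete, here is a minimal Python sketch of the analysis step only. It assumes the recognition module already outputs one gesture label per frame. The gesture names, game states and key bindings in `CONTROL_MAP`, and the `analyse` function itself, are hypothetical and were chosen for illustration; they are not taken from the project's source code. The sketch also shows one way a single gesture can perform several functions: the control it triggers depends on the current game state as well as on the gesture.

```python
from typing import Dict, Optional, Tuple

# Hypothetical mapping for illustration only: (game state, gesture) -> key.
# The same gesture triggers different controls depending on the game state.
CONTROL_MAP: Dict[Tuple[str, str], str] = {
    ("playing", "open_palm"): "w",      # move forward
    ("playing", "fist"): "space",       # fire / jump
    ("playing", "two_fingers"): "esc",  # pause the game
    ("paused", "open_palm"): "esc",     # resume
    ("paused", "fist"): "enter",        # confirm the selected menu item
}


def analyse(gesture: Optional[str], state: str) -> Optional[str]:
    """Analysis module: turn a recognised gesture into a game control key."""
    if gesture is None:  # the recognition module found no hand in this frame
        return None
    return CONTROL_MAP.get((state, gesture))  # None for unmapped gestures


print(analyse("open_palm", "playing"))  # w
print(analyse("open_palm", "paused"))   # esc
print(analyse(None, "playing"))         # None
```

Sending the returned key to the game as a simulated key press is left out. That part depends on the operating system and the input library chosen.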

1.2 Organization Profile


Saintgits College of Engineering is a self-financing technical institution located in Kottayam district, Kerala. The college was established in 2002. Saintgits is approved by the All India Council for Technical Education (AICTE) and affiliated to APJ Abdul Kalam Technological University, Kerala. The institute was accredited by the NBA in 2016 for three years for five UG programmes, and in 2017 for three years for the Master of Computer Applications programme. The college was founded by a group of well-known academicians, pioneering educators with unmatched experience in the field of education and a belief that the continuous search for knowledge is the sole path to success. The primary focus of the institution is to expose young minds to the world of technology, instilling in them the confidence and fortitude to face new challenges and enabling them to excel in their chosen fields. The college fosters the development of all facets of the mind, culminating in an intellectual and balanced personality. A team of dedicated and caring faculty strives to widen students' horizons of learning, thereby achieving excellent results for every student. With a scientifically planned methodology, a team of handpicked faculty drawn from the best in the teaching profession, and state-of-the-art infrastructure, the quality of engineering education at Saintgits is unparalleled in the region, and the institute has become a benchmark for others to emulate. With 100% of seats filled from the year of inception, the college is confident that it can serve even better with every passing year. Right from its inception, Saintgits College of Engineering has maintained high standards in both academic and extra-curricular activities. Saintgits offers a B.Tech degree in 9 engineering disciplines, and Master's degree courses in Engineering, Computer Applications and Business Administration.

1.3 Objectives of the Project

The primary objective of this project is to show how a computer game can be played using human hand gestures. The secondary objective is to create a system that lets a player play a game without a physical controller. The system aims to develop an application for hand gesture recognition. The camera or webcam connected to the system is used to recognize human hand gestures. Based on the application's analysis of the recognized gestures, the corresponding operations are performed in the game through the game's default controls. The application contains a set of instructions for recognizing human hand gestures, which are made with the palms of the hands. The system comprises three modules: user interface, gesture recognition and analysis. The user interface module provides the graphical user interface the user needs to register the arm positions that will be used to perform gestures. The gesture recognition module recognizes the gestures.

CHAPTER 2

REQUIREMENTS AND ANALYSIS


2.1 Preliminary Investigation

Preliminary investigation is a problem-solving activity that requires intensive communication between the system users and the system developers. It involves various feasibility studies, from which a rough picture of the system activities can be obtained and decisions can be taken about the strategies to follow for an effective system study and analysis. During the preliminary investigation, an initial picture of how the system works was obtained from the information gathered, and the data collection methods were identified. Right from this investigation, many drawbacks of the existing system could be identified, which helped considerably in the later stages of more rigorous study and analysis of the manual system. The most critical phase of managing system projects is planning. To launch a system investigation, we need a master plan detailing the steps to be taken, the people to be questioned and the expected outcome.

2.2 Existing System

Video games are among the most popular forms of entertainment in the modern world. However, many gamers with physical disabilities are impeded by traditional controllers. While accessories such as motion controllers, VR and MR headsets and enlarged buttons exist, many accessible gaming setups can end up costing hundreds of dollars. A simple controller typically costs between Rs. 4,000 and Rs. 6,000 in the market. Most of these controllers are limited to specific consoles: motion controllers are exclusive to the PlayStation series, adaptive controllers to the Xbox series, and standard keyboards and mice are used for PC systems. The maintenance cost of these controllers is also high. Wireless controllers last only 4 to 6 hours on a single charge, and the average lifespan of a controller is about 2 years under heavy gaming. Playing games with these controllers for long durations can also cause physical problems for the player, most commonly arthritis and tendonitis pain, carpal tunnel syndrome and tennis elbow, while expensive immersive accessories such as MR or VR headsets can cause eye strain.

2.3 Proposed System

The proposed system addresses all the major drawbacks of the existing system. It provides an enhanced gaming experience with zero additional hardware cost. Navigation in the system is based entirely on the 21 landmark positions of the player's palm. Most laptops and many desktops come equipped with a webcam, which is the natural starting point; as a result, the initial and maintenance costs of the system are very low. Playing the proposed game also acts as light exercise: instead of keeping the hands stationary on a controller, the player moves them continuously, which keeps the blood circulating. The system is also supported on a wide variety of platforms and consoles. Because the player navigates with the hands, the system provides a more immersive gaming experience and makes the game more engaging. The system responds to gesture movements accurately and in real time, because the underlying back-end pipeline uses multiple models for effective analysis.
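The 21 landmarks above follow the MediaPipe Hands layout. Index 0 is the wrist, 4 is the thumb tip, and 8, 12, 16 and 20 are the index, middle, ring and pinky fingertips. The function below is a hedged sketch of how a gesture can be derived from these points: it counts raised fingers using a common heuristic that compares each fingertip with the finger's middle (PIP) joint. It makes three assumptions. Coordinates are normalised (x, y) values with y growing downwards. The hand is upright and facing the camera. The thumb is ignored for simplicity. `count_raised_fingers` is a hypothetical helper, not the project's actual algorithm.

```python
from typing import List, Tuple

# (x, y) in normalised image coordinates: x grows to the right, y grows downwards.
Landmark = Tuple[float, float]

# (tip, PIP joint) index pairs for the index, middle, ring and pinky fingers in
# the 21-point MediaPipe Hands layout. The thumb (landmarks 1-4) is ignored here.
FINGER_TIP_PIP = [(8, 6), (12, 10), (16, 14), (20, 18)]


def count_raised_fingers(landmarks: List[Landmark]) -> int:
    """Count raised non-thumb fingers for an upright hand facing the camera.

    A finger counts as raised when its tip is above (smaller y than) its PIP joint.
    """
    if len(landmarks) != 21:
        raise ValueError(f"expected 21 landmarks, got {len(landmarks)}")
    return sum(1 for tip, pip in FINGER_TIP_PIP if landmarks[tip][1] < landmarks[pip][1])


# Synthetic open hand: every fingertip sits above its PIP joint.
open_hand: List[Landmark] = [(0.5, 0.8) for _ in range(21)]
for tip, pip in FINGER_TIP_PIP:
    open_hand[tip] = (0.5, 0.2)
    open_hand[pip] = (0.5, 0.5)

# Synthetic fist: tips level with their joints, so no finger counts as raised.
fist: List[Landmark] = [(0.5, 0.5) for _ in range(21)]

print(count_raised_fingers(open_hand))  # 4
print(count_raised_fingers(fist))       # 0
```

The analysis module could then map a count like this, or a richer label built from several such tests, to a game control.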

2.4 Feasibility Analysis

Feasibility analysis evaluates a system proposal according to its workability, impact on the organization, ability to meet client and user needs, and efficient use of resources. The key considerations involved in the feasibility analysis are:

• Economic feasibility

• Technical feasibility

• Behavioural feasibility

• Ethical feasibility

2.4.1 ECONOMIC FEASIBILITY

Economic analysis is the most frequently used method for evaluating the effectiveness of a candidate system. More commonly known as cost/benefit analysis, the procedure is to determine the benefits and savings expected from a candidate system and compare them with its costs. If the benefits outweigh the costs, the system can go ahead; otherwise, further alterations will have to be made if it is to have a chance of being approved. The proposed system is cost-effective because it needs only a standard webcam and offers a user-friendly interface. The administrator can directly view and change the records.

2.4.2 TECHNICAL FEASIBILITY

Technical feasibility examines whether the project can be done with the correct equipment, existing software technology and available personnel. An important advantage of the system is that it is platform independent.

2.4.3 BEHAVIOURAL FEASIBILITY

The proposed project would be beneficial because it satisfies its objectives when developed and installed. All behavioural aspects have been considered carefully; thus the project is behaviourally feasible, can be implemented easily and is very user-friendly. People are inherently resistant to change, and computers have been known to facilitate change, so an estimate should be made of how strongly users are likely to react to the development of a computerized system. There are various levels of users in order to ensure proper authentication, authorization and security of the organization's sensitive data.

2.4.4 ETHICAL FEASIBILITY

This aspect of the study checks the level of acceptance of the system by the user. It includes the process of training the user to use the system efficiently. The user must not feel threatened by the system; instead, he or she must accept it as a necessity. The level of acceptance by the user depends on the methods employed to educate users about the system and make them familiar with it.

2.5 PLANNING AND SCHEDULING


The purpose of the project plan is to define all the techniques, procedures and methodologies that will be used in the project to ensure timely delivery of software that meets the specified requirements within the project's resources. This involves reviewing and auditing the software products and activities to verify that they comply with the applicable procedures and standards, and providing the software project and other appropriate managers with the results of these reviews and audits.

Gantt chart

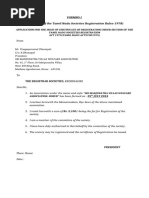

A Gantt chart is a horizontal bar chart developed as a production control tool in 1917 by

Henry L. Gantt, an American engineer and social scientist. Frequently used in project

management, a Gantt chart provides a graphical illustration of a schedule that helps to plan,

coordinate and track specific tasks in a project. A Gantt chart, or harmonogram, is a type

of bar chart that illustrates a project schedule.

Fig 2.5: Gantt Chart


CHAPTER 3


SYSTEM CONFIGURATION

3.1 Software / Hardware Specification

Hardware

The selection of hardware is very important for the existence and proper working of any software. When selecting hardware, the size and capacity requirements are also important. The hardware required by the system is listed below:

Processor: Intel Core i3, 2.0 GHz or faster

RAM: 4 GB DDR4

Hard disk space: 2 GB free

Input device: Webcam of at least 5 megapixels

Output device: Monitor with 1920 x 1080 resolution

Software


Several software components are required for the application to work efficiently, and it is important to select appropriate software so that the system works properly. The software required to build the new system is listed below:

Operating system: Windows 10 / 8.1

Drivers/packages: Python-OpenCV

3.2 Functional Specification and User Characteristics

UML Diagram

UML is a way of visualizing a software program using a collection of diagrams. The notation evolved from the work of Grady Booch, James Rumbaugh, Ivar Jacobson and the Rational Software Corporation for use in object-oriented design, but it has since been extended to cover a wider variety of software engineering projects. UML stands for Unified Modeling Language; today it is accepted by the Object Management Group (OMG) as the standard for modeling software development. UML 2.0 extended the original UML specification to cover a wider portion of software development efforts, including agile practices. It improved integration between structural models such as class diagrams and behaviour models such as activity diagrams, and added the ability to define a hierarchy and decompose a software system into components and sub-components. The original UML specified nine diagrams; UML 2.x brings that number up to 13.

The four new diagrams are the communication diagram, composite structure diagram, interaction overview diagram and timing diagram. UML 2.x also renamed statechart diagrams to state machine diagrams, also known as state diagrams.

Types of UML Diagrams

The current UML standards call for 13 different types of diagrams: class, activity, object,

use case, sequence, package, state, component, communication, composite structure,

interaction overview, timing, and deployment. These diagrams are organized into two

distinct groups: structural diagrams and behavioural or interaction diagrams.

Structural UML diagrams

Class diagram

Package diagram

Object diagram

Component diagram

Composite structure diagram

Deployment diagram

Behavioural UML diagrams

Activity diagram

Sequence diagram

Use case diagram

State diagram

Communication diagram

Interaction overview diagram

Timing diagram

3.2.1 Use Case Diagram

To model a system, the most important aspect is to capture its dynamic behaviour, that is, the behaviour of the system when it is running. Static behaviour alone is not sufficient to model a system; dynamic behaviour is more important. In UML, five diagrams are available to model dynamic behaviour, and the use case diagram is one of them. Because the use case diagram is dynamic in nature, there must be some internal or external agents that drive the interaction; these agents are known as actors. Use case diagrams consist of actors, use cases and their relationships. The diagram is used to model the system or a subsystem of an application. A single use case diagram captures a particular functionality of a system, so a number of use case diagrams are used to model the entire system. The purpose of a use case diagram is to capture the dynamic aspect of a system. Use case diagrams are used to gather the requirements of a system, including internal and external influences; these requirements are mostly design requirements. Hence, when a system is analysed to gather its functionalities, use cases are prepared and actors are identified. When this initial task is complete, use case diagrams are modelled to present the outside view.

Actor


An actor in a use case diagram is an entity that performs a role in a given system. This could be a person, an organization or an external system, and it is usually drawn as a stick figure (see Fig 3.1).

Use case

A use case represents a function or an action within the system. It is drawn as an oval and labelled with the function's name.

System

The system defines the scope of the use case and is drawn as a rectangle. It is an optional element, but it is useful when visualizing large systems.

Relationship

Illustrate relationships between an actor and a use case with a simple line. For

relationships among use cases, use arrows labelled either "uses" or "extends." A

"uses" relationship indicates that one use case is needed by another in order to

perform a task. An "extends" relationship indicates alternative options under a

certain use case.

A use case diagram is a graphic depiction of the interactions among the elements of a system. In software and systems engineering, a use case is a list of actions or event steps, typically defining the interactions between a role and a system, to achieve a goal. The actor can be a human or another external system. In this system, the admin is the actor, represented as follows.


Fig 3.1 Use Case Symbols

Fig 3.2 Use Case Diagram

3.3 Tools and Platform Used

Unity 3D

A game engine provides the main framework and common functions for developing games and is the core that controls a game. Since the advent of the Doom game engine in 1993, game engine technology has undergone nearly 20 years of evolution. Game engines initially supported only 2D; they now fully support 3D, lifelike images, massively multiplayer online games, artificial intelligence and mobile platforms. Some representative game engines are Quake, Unreal Tournament, Source, BigWorld and CryENGINE. The internal implementation techniques of game engines differ, but their ultimate goal is the same: to improve the efficiency of game development. To comprehensively grasp the general development ideas of a game, it is useful to choose a typical game engine and study it in depth. Unity3D is a popular 3D game engine of recent years that is particularly suitable for independent game developers and small teams. It mainly comprises eight products, such as Unity, Unity Pro, Asset Server, iOS, iOS Pro, Android and Android Pro [6]. Without writing complex code, programmers can quickly develop a scene using the visual integrated development environment of Unity3D. Games developed with Unity3D make up a large proportion of the popular iPhone game list, for example Plants vs. Zombies and Ravensword: The Fallen King. In particular, Unity3D also provides the Union and Asset Store selling platforms for game developers.

Unity3D has special advantages for easily programming a game. For example, platform-related operations are encapsulated internally, complex game object relations are managed through different visual views, and the JavaScript, C# or Boo scripting languages are used to program a game. A script program is automatically compiled into a .NET DLL file, so the three scripting languages essentially have the same performance, and their execution speed is 20 times faster than traditional JavaScript. These scripting languages also have good cross-platform ability, which means developers can deploy games on different platforms such as Windows, Mac, Xbox 360, PlayStation 3, Wii, iPad, iPhone and Android. In addition, games can run on the Web by installing a plug-in. Another feature of Unity3D is that game resources and objects can be imported or exported in the form of a package, which makes it easy for different game projects to share development work. Therefore, using packages can greatly improve efficiency in game development. In addition to resource material files, specific functions such as AI, network operations and character control can be packaged.

C#

For the past two decades, C and C++ have been the most widely used languages for developing commercial and business software. While both languages provide programmers with a tremendous amount of fine-grained control, this flexibility comes at a cost to productivity. C# is a programming language developed by Microsoft Corporation, USA. Like C++ and Java, C# is a fully object-oriented language. It is simple and efficient, and it is derived from the popular C and C++ languages. Compared with languages such as Microsoft Visual Basic, equivalent C and C++ applications often take longer to develop. Due to the complexity and long cycle times associated with these languages, many C and C++ programmers have been searching for a language offering a better balance between power and productivity. There are languages today that raise productivity by sacrificing the flexibility that C and C++ programmers often require. Such solutions constrain the developer too much (for example, by omitting a mechanism for low-level code control) and provide least-common-denominator capabilities. They do not easily interoperate with pre-existing systems, and they do not always mesh with current Web programming practices. The ideal solution for C and C++ programmers would be rapid development combined with the power to access all the functionality of the underlying platform. They want an environment that is completely in sync with emerging Web standards and one that provides easy integration with existing applications. Additionally, C and C++ developers would like the ability to code at a low level when and if the need arises. Microsoft's solution to this problem is a language called C# (pronounced "C sharp"). C# is a modern, object-oriented language that enables programmers to quickly build a wide range of applications for the Microsoft .NET platform, which provides tools and services that fully exploit both computing and communications. Because of its elegant object-oriented design, C# is a great choice for architecting a wide range of components, from high-level business objects to system-level applications. Using simple C# language constructs, these components can be converted into XML Web services, allowing them to be invoked across the Internet from any language running on any operating system. More than anything else, C# is designed to bring rapid development to the C++ programmer without sacrificing the power and control that have been a hallmark of C and C++. Because of this heritage, C# has a high degree of fidelity with C and C++, and developers familiar with these languages can quickly become productive in C#. A large number of computer languages, from FORTRAN, developed in 1957, to the object-oriented language Java, introduced in 1995, are being used for various applications. The choice of a language depends upon many factors, such as the hardware environment, business environment and user requirements. The primary motivation in developing each of these languages has been the concern that it be able to handle the increasing complexity of programs while keeping them robust, durable and maintainable.

Python

Python is an interpreted, object-oriented, high-level programming language with dynamic semantics. Its high-level built-in data structures, combined with dynamic typing and dynamic binding, make it very attractive for Rapid Application Development, as well as for use as a scripting or glue language to connect existing components together. Python's simple, easy-to-learn syntax emphasizes readability and therefore reduces the cost of program maintenance. Python supports modules and packages, which encourages program modularity and code reuse. The Python interpreter and the extensive standard library are available in source or binary form without charge for all major platforms, and can be freely distributed. Often, programmers fall in love with Python because of the increased productivity it provides. Since there is no compilation step, the edit-test-debug cycle is incredibly fast. Debugging Python programs is easy: a bug or bad input will never cause a segmentation fault. Instead, when the interpreter discovers an error, it raises an exception. When the program doesn't catch the exception, the interpreter prints a stack trace. A source-level debugger allows inspection of local and global variables, evaluation of arbitrary expressions, setting breakpoints, stepping through the code a line at a time, and so on. The debugger is written in Python itself, testifying to Python's introspective power. On the other hand, often the quickest way to debug a program is to add a few print statements to the source: the fast edit-test-debug cycle makes this simple approach very effective.
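The sketch below illustrates the exception behaviour described above by parsing a webcam index typed by the user. Bad input raises a `ValueError`, with the message `invalid literal for int() with base 10: 'webcam'`, instead of crashing the process. The program then prints the stack trace and falls back to a default. The function name `parse_camera_index` and the fallback to camera 0 are assumptions made for illustration.

```python
import traceback


def parse_camera_index(text: str) -> int:
    """Convert user input such as "0" or " 1 " into a webcam index."""
    return int(text.strip())  # int() raises ValueError on non-numeric input


try:
    camera_index = parse_camera_index("webcam")
except ValueError:
    # The error surfaces as an exception, not a segmentation fault:
    # print the stack trace and carry on with the default camera.
    traceback.print_exc()
    camera_index = 0

print(camera_index)  # 0
```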

OpenCV

OpenCV (Open Source Computer Vision Library) is an open-source computer vision and machine learning software library. OpenCV was built to provide a common infrastructure for computer vision applications and to accelerate the use of machine perception in commercial products. Being a BSD-licensed product, OpenCV makes it easy for businesses to use and modify the code. The library has more than 2500 optimized algorithms, which include a comprehensive set of both classic and state-of-the-art computer vision and machine learning algorithms. These algorithms can be used to detect and recognize faces, identify objects, classify human actions in videos, track camera movements, track moving objects, extract 3D models of objects, produce 3D point clouds from stereo cameras, stitch images together to produce a high-resolution image of an entire scene, find similar images in an image database, remove red eyes from images taken using flash, follow eye movements, recognize scenery and establish markers to overlay it with augmented reality, etc.

It has C++, Python, Java and MATLAB interfaces and supports Windows, Linux, Android and Mac OS. OpenCV leans mostly towards real-time vision applications and takes advantage of MMX and SSE instructions when available. Full-featured CUDA and OpenCL interfaces are being actively developed. There are over 500 algorithms and about 10 times as many functions that compose or support those algorithms. OpenCV is written natively in C++ and has a templated interface that works seamlessly with STL containers.

OpenCV has a user community of more than 47 thousand people, and its estimated number of downloads exceeds 18 million. The library is used extensively in companies, research groups and governmental bodies. Along with well-established companies like Google, Yahoo, Microsoft, Intel, IBM, Sony, Honda and Toyota that employ the library, many start-ups such as Applied Minds, VideoSurf and Zeitera make extensive use of OpenCV. OpenCV's deployed uses span the range from stitching street view images together, detecting intrusions in surveillance video in Israel, monitoring mine equipment in China, helping robots navigate and pick up objects at Willow Garage, detecting swimming pool drowning accidents in Europe, running interactive art in Spain and New York, and checking runways for debris in Turkey, to inspecting labels on products in factories around the world and rapid face detection in Japan.

NumPy

The Python programming language was not originally designed for numerical computing, but it attracted the attention of the scientific and engineering community early on, so a special interest group called matrix-sig was founded in 1995 with the aim of defining an array computing package. Among its members was Python designer and maintainer Guido van Rossum, who added extensions to Python's syntax (in particular the indexing syntax) to make array computing easier. An implementation of a matrix package was completed by Jim Fulton, then generalized by Jim Hugunin to become Numeric,[5] also variously called the Numerical Python extensions or NumPy.[6][7] Hugunin, a graduate student at the Massachusetts Institute of Technology, joined the Corporation for National Research Initiatives (CNRI) in 1997 to work on JPython, leaving Paul Dubois of Lawrence Livermore National Laboratory (LLNL) to take over as maintainer. Other early contributors include David Ascher, Konrad Hinsen and Travis Oliphant. Another package called Numarray was written as a more flexible replacement for Numeric.[8] Like Numeric, it is now deprecated. Numarray had faster operations for large arrays but was slower than Numeric on small ones, so for a time both packages were used for different use cases. The last version of Numeric, v24.2, was released on 11 November 2005, and the last version of numarray, v1.5.2, was released on 24 August 2006.

Single Shot Detector (SSD)

SSD is a single-shot detector. It has no separate region proposal network and predicts the bounding boxes and the classes directly from feature maps in one single pass. To enhance accuracy, SSD introduces small convolutional filters to predict object classes and offsets to default bounding boxes. SSD is designed for real-time object detection. Faster R-CNN uses a region proposal network to create bounding boxes and uses those boxes to classify objects. While it is considered the state of the art in accuracy, the whole process runs at 7 frames per second, far below what real-time operation needs. SSD speeds up the process by eliminating the need for the region proposal network. To recover the drop in accuracy, SSD applies a few improvements, including multi-scale features and default boxes. These improvements allow SSD to match Faster R-CNN's accuracy using lower-resolution images, which pushes the speed even higher. According to the comparison published with SSD, it achieves real-time operation speed and even beats the accuracy of Faster R-CNN. (Accuracy is measured as the mean average precision, mAP, of the predictions.)
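The default boxes mentioned above are laid out on a grid over each feature map. The sketch below follows the rule given in the original SSD paper: scales spaced linearly between s_min = 0.2 and s_max = 0.9, box width s·√a and height s/√a for aspect ratio a, plus one extra square box of scale √(s_k·s_{k+1}). The function names, the reduced aspect-ratio set and the feature-map size in the usage line are illustrative assumptions, not this project's code.

```python
import math
from typing import List, Sequence, Tuple

# (cx, cy, w, h), all expressed as fractions of the input image size.
Box = Tuple[float, float, float, float]


def layer_scales(m: int, s_min: float = 0.2, s_max: float = 0.9) -> List[float]:
    """Linearly spaced default-box scales s_1..s_m, one per feature map."""
    if m == 1:
        return [s_min]
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]


def default_boxes(fmap_size: int, s_k: float, s_next: float,
                  aspect_ratios: Sequence[float] = (1.0, 2.0, 0.5)) -> List[Box]:
    """Default boxes for one square feature map of fmap_size x fmap_size cells."""
    boxes: List[Box] = []
    extra = math.sqrt(s_k * s_next)      # extra square box for aspect ratio 1
    for i in range(fmap_size):           # row index -> y
        for j in range(fmap_size):       # column index -> x
            cx = (j + 0.5) / fmap_size   # box centred in its cell
            cy = (i + 0.5) / fmap_size
            for ar in aspect_ratios:
                boxes.append((cx, cy, s_k * math.sqrt(ar), s_k / math.sqrt(ar)))
            boxes.append((cx, cy, extra, extra))
    return boxes


if __name__ == "__main__":
    scales = layer_scales(6)
    # 4 boxes per cell on a hypothetical 3x3 feature map
    print(len(default_boxes(3, scales[0], scales[1])))
```

For the last feature map the formula needs a scale beyond s_max; how that value is chosen varies between implementations, so the sketch takes `s_next` as an explicit argument.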

In deep learning, a convolutional neural network (CNN/ConvNet) is a class of deep neural networks most commonly applied to analysing visual imagery. When we think of a neural network we usually think of matrix multiplications, but a ConvNet relies on a special technique called convolution. In mathematics, convolution is an operation on two functions that produces a third function expressing how the shape of one is modified by the other. When a CNN is used for detection in this way, we follow these steps: first, we take an input such as a picture, a video, or a live CCTV camera feed. Then we divide the image into various regions and consider each region as a separate image. We pass all of these regions (images) to the CNN and classify them into various classes. Once each region has been assigned to its corresponding class, we combine these regions to get the original image with the detected objects. The problem with this approach is that the objects within the image can have different aspect ratios and spatial locations. For example, in some cases the object could be covering most of the image, while in others it might only cover a small percentage of the image. The shapes of the objects may also vary (this happens a lot in real-life use cases).
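The convolution step described above can be illustrated with a short sketch. CNN layers actually compute a cross-correlation (the kernel is not flipped, unlike the mathematical definition of convolution); the sketch does the same, uses stride 1 and no padding ("valid" mode), and its function name and example kernel are illustrative only.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (stride 1, no padding) and sum the products.

    This is the cross-correlation that CNN layers compute; a textbook
    convolution would flip the kernel first.
    """
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    if kh > ih or kw > iw:
        raise ValueError("kernel larger than image")
    out: Matrix = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


if __name__ == "__main__":
    # Dark left half, bright right half: a vertical edge.
    img = [[0, 0, 1, 1],
           [0, 0, 1, 1],
           [0, 0, 1, 1]]
    edge_kernel = [[-1, 1]]  # responds where intensity rises left-to-right
    for row in conv2d_valid(img, edge_kernel):
        print(row)  # each row is [0.0, 1.0, 0.0]
```

The output is large only at the column where the edge sits, which is the kind of local pattern response a convolutional filter learns.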

Fig 3.3 SSD Architecture


CHAPTER 4

SYSTEM DESIGN

4.1 Basic Modules/Module Description

There is only one user in the system: the player.

PLAY

Start

The player starts the game by showing either their left or right hand. Whenever the hand is shown to the webcam, the underlying algorithm detects the palm, and a small message is passed to the Unity game server over the UDP protocol to instantiate the game.


Play

The user controls the player by moving their hands. This is achieved by the underlying algorithm, which detects the hand with one model and then extracts the palm landmarks with another model that takes the first model's output as its input. The extracted landmarks are then passed to a function that calculates their position with respect to screen space.
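A minimal sketch of such a position function follows. It assumes MediaPipe-style normalized landmarks (x and y scaled to [0, 1] by the image width and height, with y growing downwards), takes the mean of the wrist (index 0) and the four finger knuckles (indices 5, 9, 13, 17) as the palm position, mirrors x so the on-screen motion follows the user like a mirror, and flips y because Unity's screen space has its origin at the bottom-left. The helper names, the choice of palm points and the mirroring are assumptions for illustration, not the project's actual function.

```python
from typing import Sequence, Tuple

# Wrist + index/middle/ring/pinky MCP joints in MediaPipe's 21-point hand model.
# Using their mean as the palm position is an assumption of this sketch.
PALM_INDICES = (0, 5, 9, 13, 17)


def palm_centre(landmarks: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Mean (x, y) of the palm landmarks, in normalized image coordinates."""
    xs = [landmarks[i][0] for i in PALM_INDICES]
    ys = [landmarks[i][1] for i in PALM_INDICES]
    return sum(xs) / len(xs), sum(ys) / len(ys)


def to_screen(x_norm: float, y_norm: float, width: int, height: int,
              mirror: bool = True) -> Tuple[int, int]:
    """Map a normalized image point to integer screen pixels.

    Image y grows downwards; the returned y grows upwards (bottom-left origin).
    """
    x = min(max(x_norm, 0.0), 1.0)  # clamp points that leave the frame
    y = min(max(y_norm, 0.0), 1.0)
    if mirror:
        x = 1.0 - x                 # mirror so moving right moves right on screen
    return round(x * (width - 1)), round((1.0 - y) * (height - 1))


if __name__ == "__main__":
    hand = [(0.5, 0.5)] * 21        # hypothetical landmarks, all at the centre
    cx, cy = palm_centre(hand)
    print(to_screen(cx, cy, 1920, 1080))
```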

These calculated results are then bundled together and sent to the Unity game engine over UDP. Once this data is received, the game engine scripts extract the values and process them to give the user an immersive control experience.
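The hand-off to Unity can be sketched with Python's standard `socket` module. The comma-separated text payload, the helper names and the address 127.0.0.1:5052 are assumptions for illustration (the report does not specify the message format or port). UDP fits this use because a lost datagram is simply superseded by the next frame. `unpack_landmarks` stands in for what the Unity-side script would do.

```python
import socket
from typing import List, Sequence, Tuple

# Hypothetical address of the Unity listener; not taken from the report.
UNITY_ADDR = ("127.0.0.1", 5052)


def pack_landmarks(points: Sequence[Sequence[float]]) -> bytes:
    """Flatten [(x, y, z), ...] into one comma-separated UTF-8 datagram."""
    return ",".join(f"{v:.4f}" for p in points for v in p).encode("utf-8")


def unpack_landmarks(payload: bytes, dims: int = 3) -> List[Tuple[float, ...]]:
    """Inverse of pack_landmarks (what the receiving game script would do)."""
    values = [float(v) for v in payload.decode("utf-8").split(",")]
    return [tuple(values[i:i + dims]) for i in range(0, len(values), dims)]


def send_frame(sock: socket.socket, points: Sequence[Sequence[float]],
               addr=UNITY_ADDR) -> int:
    """Send one frame of landmarks; returns the number of bytes sent."""
    return sock.sendto(pack_landmarks(points), addr)


if __name__ == "__main__":
    # One datagram per processed webcam frame; no connection setup is needed.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        send_frame(sock, [(0.51, 0.42, -0.03)] * 21)
```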

Pause

During gameplay the user may get tired of holding their hand in a particular position. In this scenario the user can take their hands away: whenever no hand is shown for 5 seconds, the system pauses the game, and the user can resume it by showing their hands again.
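The pause rule can be expressed as a small state machine. In this sketch the caller passes in the current time (for example from `time.monotonic()`) and whether a hand was detected in the current frame; the class name and the choice not to pause before any hand has been seen are assumptions for illustration.

```python
from typing import Optional


class PauseController:
    """Pauses the game after `timeout` seconds without a detected hand."""

    def __init__(self, timeout: float = 5.0) -> None:
        self.timeout = timeout
        self.last_seen: Optional[float] = None  # time the hand was last detected
        self.paused = False

    def update(self, hand_visible: bool, now: float) -> bool:
        """Call once per processed frame; returns True while the game is paused."""
        if hand_visible:
            self.last_seen = now
            self.paused = False           # showing a hand resumes the game
        elif self.last_seen is not None and now - self.last_seen >= self.timeout:
            self.paused = True
        return self.paused


if __name__ == "__main__":
    import time

    ctrl = PauseController()
    print(ctrl.update(True, time.monotonic()))  # hand visible: game keeps running
```

Injecting the time rather than reading the clock inside the class keeps the rule easy to test and independent of the frame rate.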

Score

The user plays against an opponent who tries to outsmart the player by hitting the tennis ball so that it hits the wall behind the player. The player should prevent this and hit the ball so that it hits the opponent's wall. Whenever the user hits the wall behind the opponent, the user gets 1 point; likewise, whenever the opponent hits the wall behind the user, the opponent gets 1 point.

Finish

The first player to reach a score of 10 wins the game.
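The scoring and finishing rules above amount to a small scoreboard. The sketch is written in Python for illustration (in the game itself this logic would live in the Unity scripts); the class, the side names "player" and "opponent", and the decision to ignore hits after the match is won are assumptions.

```python
from typing import Dict, Optional

SIDES = ("player", "opponent")


class ScoreBoard:
    """Point and win tracking for the rules described above."""

    def __init__(self, target: int = 10) -> None:
        self.target = target
        self.scores: Dict[str, int] = {side: 0 for side in SIDES}
        self.winner: Optional[str] = None

    def wall_hit(self, wall_owner: str) -> None:
        """Ball hit `wall_owner`'s back wall: the other side scores one point."""
        if wall_owner not in SIDES:
            raise ValueError(f"unknown side: {wall_owner!r}")
        if self.winner is not None:
            return                      # match already decided; ignore late hits
        scorer = "opponent" if wall_owner == "player" else "player"
        self.scores[scorer] += 1
        if self.scores[scorer] >= self.target:
            self.winner = scorer


if __name__ == "__main__":
    board = ScoreBoard()
    for _ in range(10):
        board.wall_hit("opponent")      # player keeps hitting the opponent's wall
    print(board.scores, board.winner)
```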

4.2 Procedural Design

A design methodology combines a systematic set of rules for creating a program design with the diagramming tools needed to represent it. Procedural design is best used to model programs that have an obvious flow of data from input to output. It represents the architecture of a program as a set of interacting processes that pass data from one to another. The two major diagramming tools used in procedural design are data flow diagrams and structure charts.

4.2.1 Flow Charts

The flowchart is a graphic technique developed specifically for representing data flow. It is a pictorial representation that uses predefined symbols to describe the data flow and logic of a system. Flowcharts were first used in the early 20th century to describe engineering and manufacturing systems. With the rise of computer programming, the system flowchart has become a valuable tool for depicting the flow of control through a computer system and where decisions are made that affect the flow. Computer programming requires careful planning and logical thinking, and programmers need to thoroughly understand a task before beginning to code. System flowcharts were heavily used in the early days of programming to help system designers visualize all the decisions that needed to be addressed. Other tools that may be more appropriate for describing complex systems have since been introduced. One of these is pseudocode, which uses a combination of programming-language syntax and English-like natural language to describe how a task will be completed. Many system designers find pseudocode easier to produce and modify than a complicated flowchart. However, flowcharts are still used for many business applications.

Basic Symbols


4.2.2 System Flow chart


4.2.3 System Data Flow Diagram

The data flow diagram (DFD) is one of the most important tools used by system analysts. A DFD, also known as a "bubble chart", has the purpose of clarifying system requirements and identifying the major transformations that will become programs in the system design phase. It is therefore the starting point of the design phase, functionally decomposing the requirement specifications down to the lowest level of detail. Data flow diagrams are made up of a number of symbols, which represent system components. Most data flow modelling methods use four kinds of symbols to represent four kinds of system components: processes, data stores, data flows and external entities. Circles represent processes, a thin line represents a data flow, each data store has a unique name, and a square or rectangle represents an external entity.

Constructing a DFD

Several rules of thumb are used in drawing a DFD. Processes should be named and numbered for easy reference, and each name should be representative of the process. The direction of flow is from top to bottom and left to right. When a process is exploded into lower-level details, the sub-processes are numbered. The names of data stores, sources and destinations are written in capital letters; process and data flow names have the first letter of each word capitalized. To construct a DFD, we use:

• Arrow

• Circles

• Open-Ended Boxes

• Squares

An arrow identifies the data flow in motion. It is a pipeline through which information flows, like the flow lines in a flowchart. A circle stands for a process that converts data into information. An open-ended box represents a data store: data at rest or a temporary repository of data. A square defines a source or destination of system data.

Five rules for constructing a DFD:

• Arrows should not cross each other.

• Squares, circles and files must bear names.

• Decomposed data flow squares and circles can have the same names.

• Choose meaningful names for data flows.

• Draw all data flows around the outside of the diagram.


Context Level

Level 1


4.3 User Interface Design

System design is the most creative and challenging phase of the life cycle. It is an approach to the creation of the proposed system, which helps in system coding. It is vital for efficient database management. It provides the understanding of procedural details necessary for implementing the system. A number of subsystems that constitute the whole system have to be identified. From the project management point of view, software design is conducted in two steps. Preliminary design is concerned with the transformation of requirements into data and software architecture. Design starts with the system requirements specification and converts it into physical reality during development. Important design factors such as reliability, response time and throughput of the system should be taken into account. Database tables are designed using all necessary fields in a compact and correct manner, and care should be taken to avoid redundant data fields. Design is the only way in which requirements are actually translated into a finished software product or system.

4.3.1 Input Design

Input design is one of the most expensive phases of the operation of a computerized system and is often its major problem. A large number of problems with a system can usually be traced back to faulty input design and methods. Needless to say, therefore, input data is a building block of the system and has to be analysed and designed with careful consideration. Input design is the process of converting the user-oriented description of the inputs to a computer-based business information system into a programmer-oriented specification. The objective of input design is to create an input layout that is easy to follow and prevents operator errors. It covers all phases of input, from the creation of initial data to the actual entry of the data into the system for processing. The input design is the link that ties the system to the world of its users.

The user interface design is very important for any application. The interface design defines how the software communicates within itself, with systems that interoperate with it, and with the humans who use it. The goal of designing input data is to make the automation as easy and free from errors as possible. To provide a good input design for the application, easy data input and selection features are adopted. Input design requirements such as user friendliness and giving the user the right help at the right time are also considered in the development of the project.

Requirements of Form Design:

Identification and wording

Maximum readability and use

Physical factors

Order of data items

Ease of data entry

Size and arrangement

Use of instructions

4.3.2 Output Design

A quality output is one which meets the requirements of the end user and presents the information clearly. In any system, the results of processing are communicated to the user and to other systems through outputs. Output design determines how the information is to be displayed for immediate need, as well as the hard-copy output. Output is the most important and direct source of information to the user; thus, output design generally refers to the results and information that are generated by the system. For many end users, output is the main reason for developing the system and the basis on which they evaluate the usefulness of the application. The objective of a system finds its shape in terms of its output, and analysing the objectives of the system leads to the determination of outputs.

Outputs of a system can take various forms. The most common are reports, screens, printed forms and animations. The outputs also vary in terms of their content, frequency, timing and format. The users of the output, its purpose and the sequence of details to be presented are all considered. The output of a system is the justification for its existence: if the outputs are inadequate in any way, the system itself is inadequate. The basic requirements of output are that it should be accurate, timely and appropriate in terms of content, medium and layout for its intended purpose. Hence it is necessary to design output so that the objectives of the system are met in the best possible manner. The outputs are in the form of reports.

When designing output, the system analyst must determine what information to present, decide whether to display or print the information, and select the output medium to distribute the output to the intended recipients. Since output is the most direct source of information to the user, it should be provided in the most efficiently formatted way. An efficient and intelligent output improves the relationship between the user and the system and helps in decision making.

4.4 Algorithms used

4.4.1 Mediapipe

The ability to perceive the shape and motion of hands can be a vital component in improving the user experience across a variety of technological domains and platforms. For example, it can form the basis for sign language understanding and hand gesture control, and can also enable the overlay of digital content and information on top of the physical world in augmented reality. While coming naturally to people, robust real-time hand perception is a decidedly challenging computer vision task, as hands often occlude themselves or each other (e.g. finger/palm occlusions and handshakes) and lack high-contrast patterns. MediaPipe Hands is a high-fidelity hand and finger tracking solution. It employs machine learning (ML) to infer 21 3D landmarks of a hand from just a single frame. Whereas other state-of-the-art approaches rely primarily on powerful desktop environments for inference, MediaPipe Hands achieves real-time performance on a mobile phone, and even scales to multiple hands. Its authors hope that providing this hand perception functionality to the wider research and development community will result in the emergence of creative use cases, stimulating new applications and new research avenues.

MediaPipe Hands utilizes an ML pipeline consisting of multiple models working together: a palm detection model that operates on the full image and returns an oriented hand bounding box, and a hand landmark model that operates on the cropped image region defined by the palm detector and returns high-fidelity 3D hand keypoints. This strategy is similar to that employed in the MediaPipe Face Mesh solution, which uses a face detector together with a face landmark model. Providing the accurately cropped hand image to the hand landmark model drastically reduces the need for data augmentation (e.g. rotations, translation and scale) and instead allows the network to dedicate most of its capacity to coordinate prediction accuracy. In addition, the crops can also be generated from the hand landmarks identified in the previous frame, and palm detection is invoked to relocalize the hand only when the landmark model can no longer identify hand presence.

The pipeline is implemented as a MediaPipe graph that uses a hand landmark tracking subgraph from the hand landmark module, and renders using a dedicated hand renderer subgraph. The hand landmark tracking subgraph internally uses a hand landmark subgraph from the same module and a palm detection subgraph from the palm detection module.

To detect initial hand locations, the MediaPipe team designed a single-shot detector model optimized for real-time mobile use, in a manner similar to the face detection model in MediaPipe Face Mesh. Detecting hands is a decidedly complex task: the lite and full models have to work across a variety of hand sizes with a large scale span (~20x) relative to the image frame, and be able to detect occluded and self-occluded hands. Whereas faces have high-contrast patterns, e.g. in the eye and mouth region, the lack of such features in hands makes it comparatively difficult to detect them reliably from their visual features alone. Instead, providing additional context, like arm, body, or person features, aids accurate hand localization.

The method addresses these challenges using different strategies. First, a palm detector is trained instead of a hand detector, since estimating bounding boxes of rigid objects like palms and fists is significantly simpler than detecting hands with articulated fingers. In addition, as palms are smaller objects, the non-maximum suppression algorithm works well even for two-hand self-occlusion cases, like handshakes. Moreover, palms can be modelled using square bounding boxes (anchors in ML terminology), ignoring other aspect ratios and therefore reducing the number of anchors by a factor of 3-5. Second, an encoder-decoder feature extractor is used for bigger scene-context awareness even for small objects (similar to the RetinaNet approach). Lastly, the focal loss is minimized during training to support the large number of anchors resulting from the high scale variance. With these techniques, the palm detector achieves an average precision of 95.7%; using a regular cross-entropy loss and no decoder gives a baseline of just 86.22%.

After palm detection over the whole image, the subsequent hand landmark model performs precise keypoint localization of 21 3D hand-knuckle coordinates inside the detected hand regions via regression, that is, direct coordinate prediction. The model learns a consistent internal hand pose representation and is robust even to partially visible hands and self-occlusions. To obtain ground truth data, the authors manually annotated ~30K real-world images with 21 3D coordinates, as shown in Fig 4.4.1 (taking the Z-value from the image depth map, if it exists for the corresponding coordinate). To better cover the possible hand poses and provide additional supervision on the nature of hand geometry, they also rendered a high-quality synthetic hand model over various backgrounds and mapped it to the corresponding 3D coordinates.
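The tracking strategy described above, where palm detection runs only when there is no usable crop from the previous frame, can be sketched as a simple control loop. The palm detector and landmark model are passed in as plain functions (stand-ins for MediaPipe's models, not its API), the 10% crop margin is an arbitrary assumption, and this simplified version re-runs detection on the frame after the hand is lost rather than on the same frame.

```python
from typing import Callable, Iterable, List, Optional, Tuple

Box = Tuple[float, float, float, float]        # x_min, y_min, x_max, y_max (normalized)
Landmarks = List[Tuple[float, float, float]]   # 21 (x, y, z) points in the real model

PalmDetector = Callable[[object], Optional[Box]]
LandmarkModel = Callable[[object, Box], Optional[Landmarks]]


def box_from_landmarks(lm: Landmarks, margin: float = 0.1) -> Box:
    """Crop for the next frame: landmark bounding box enlarged by `margin`."""
    xs = [p[0] for p in lm]
    ys = [p[1] for p in lm]
    dx = (max(xs) - min(xs)) * margin
    dy = (max(ys) - min(ys)) * margin
    return (min(xs) - dx, min(ys) - dy, max(xs) + dx, max(ys) + dy)


def track_hand(frames: Iterable[object], detect_palm: PalmDetector,
               landmark_model: LandmarkModel) -> List[Optional[Landmarks]]:
    """Run palm detection only when there is no crop from the previous frame."""
    roi: Optional[Box] = None
    results: List[Optional[Landmarks]] = []
    for frame in frames:
        if roi is None:
            roi = detect_palm(frame)             # expensive full-image search
        lm = landmark_model(frame, roi) if roi is not None else None
        # Hand lost -> forget the crop so the next frame triggers detection;
        # otherwise derive the next crop from this frame's landmarks.
        roi = box_from_landmarks(lm) if lm else None
        results.append(lm)
    return results
```

With a stub detector that always finds a palm and a landmark model that fails on one frame, detection runs on the first frame and then only once more, on the frame after the hand was lost; every other frame reuses the crop from its predecessor.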

Fig 4.4.1 Mediapipe hands

4.4.2 Single Shot Detector

The Single Shot MultiBox Detector (SSD) is one of the fastest algorithms in the current object detection field. It achieves good results, but it has problems such as poor feature extraction in shallow layers and loss of features in deep layers. One published extension, Single Shot Object Detection with Feature Enhancement and Fusion (FFESSD), aims to enhance and exploit the shallow and deep features in the feature pyramid structure of the SSD algorithm. To achieve this, its authors introduced a Feature Fusion Module and two Feature Enhancement Modules and integrated them into the conventional SSD structure.

Experimental results on the PASCAL VOC 2007 dataset showed that FFESSD achieved 79.1% mean average precision (mAP) at 54.3 frames per second (FPS) with a 300 × 300 input, while FFESSD with a 512 × 512 input achieved 81.8% mAP at 30.2 FPS. The reported mAP is higher than that of the conventional SSD, the Deconvolutional Single Shot Detector (DSSD), Feature-Fusion SSD (FSSD), and other advanced detectors. In an extended experiment, FFESSD also performed better than the conventional SSD at detecting blurred (fuzzy) targets.

In recent years, many object detection algorithms based on convolutional neural networks (CNNs) have been proposed to overcome the poor accuracy and real-time performance of traditional detection algorithms. CNN-based detectors can be divided into two categories according to the number of feature scales they use. The first is the single-scale detector type, such as Region-based CNN (R-CNN), the Fast Region-based Convolutional Network method (Fast R-CNN) [6], Faster R-CNN, Spatial Pyramid Pooling Networks (SPP-Net) and You Only Look Once (YOLO); the second is the multi-scale detector type, such as the Single Shot MultiBox Detector (SSD), the Deconvolutional Single Shot Detector (DSSD), Feature Pyramid Networks (FPN) and Feature-Fusion SSD (FSSD). The former type detects targets of different sizes from a single-scale feature map, which limits the detection of targets that are too large or too small; the latter extracts features from layers at different scales for classification and localization, which improves detection.

Among various detection methods, SSD is relatively fast and accurate because it uses multiple convolution layers of different scales for detection. SSD takes the Visual Geometry Group network (VGG16) as its base network and adopts a pyramid of feature layers (multi-scale feature layers) for classification and localization. It uses features extracted from shallow layers to detect smaller targets, while larger targets are detected using features from deeper layers. However, SSD does not consider the relationships between the different layers, so the semantic information in different layers is not fully exploited. This can cause the problem known as "Box-in-Box", in which a single target is detected by two overlapping boxes. In addition, shallow layers extract less semantic information and may not have enough capability to detect small targets.
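Non-maximum suppression (NMS), mentioned in the MediaPipe section for the palm detector, is the usual post-processing step for removing duplicate SSD detections. A minimal greedy NMS based on intersection over union (IoU) is sketched below; the 0.5 threshold and the function names are assumptions. The usage lines also show why the "Box-in-Box" case is awkward: a small box nested inside a larger one can have a low IoU with it and therefore survive suppression.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection area divided by union area of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: keep boxes in descending score order unless they overlap
    an already kept box by more than `iou_threshold`. Returns kept indices."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    outer, inner = (0, 0, 10, 10), (2, 2, 8, 8)
    print(iou(outer, inner))                 # 0.36 (36 / 100)
    print(nms([outer, inner], [0.9, 0.6]))   # [0, 1]: both kept at threshold 0.5
```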


Fig 4.4.2 SSD Architecture

CHAPTER 5

AGILE DOCUMENTATION


5.1 Agile Roadmap/Schedule

The product roadmap provides a strategy and plan for product development. It’s driven by

short and long-term company goals and communicates how and when the product will help

achieve those goals. When done effectively, the product roadmap reduces uncertainty

about the future and keeps product teams focused on the highest priority product

initiatives. There are always a million ideas and opportunities that product teams could be

pursuing. The product roadmap shows everyone which of them to focus on. In addition, the

roadmap helps product leaders communicate the product vision and strategy to senior

executives, sales and marketing teams, and customers, and manage expectations about

when significant product milestones will be completed. When stakeholders don’t feel heard

or are uncertain about where the product is going, they may begin to doubt the strategy,

which can lead to a toxic work environment. The product roadmap aligns the key

stakeholders on product goals, strategy, and development timelines. The product roadmap

typically illustrates the following key elements:

Product strategy and goals

What products and features will be built

When those product features will be built

Who is responsible for building those products and features

Introduction to Scrum

Scrum is an agile process for managing complex projects, especially software development with dynamic and highly emergent requirements. Scrum software development proceeds to completion through a series of iterations called Sprints. Small teams of 6-10 people (this may vary) divide their work into "mini projects" (iterations) lasting about one to four weeks, during which a limited number of detailed user stories are completed.

Scrum Role

There are three roles in the scrum framework:

1. Product owner

The product owner represents the product's stakeholders and the role of the customer. There is one product owner, who conveys the overall mission, manages the product backlog and accepts completed increments of work. The product owner clearly expresses the product backlog items to achieve goals and missions, ensuring that the product backlog is visible, transparent, and clear to all.

2. Scrum Master

The Scrum Master is a servant leader to the product owner, the development team and the organization, and protects the team by doing everything possible to help it perform at the highest level. The Scrum Master is responsible for making sure a Scrum team lives by the values and practices of Scrum, and for removing any impediments to the progress of the team. As such, the Scrum Master should shield the team from external interference and ensure that the Scrum process is followed, including issuing invitations to the daily Scrum meetings.

3. Development Team

The development team is responsible for delivering potentially shippable product increments every sprint. The team has 3-9 members, who together build the product increments. The Scrum team consists of the group of people developing the software product. There is no individual responsibility in Scrum; the whole team fails or succeeds as a single entity.

Daily Scrum Meeting


The daily Scrum meeting is a short everyday meeting in which each team member explains their work. During this meeting, each team member should briefly answer the following three questions:

What has he or she accomplished since the last daily Scrum meeting?

What is he or she going to accomplish before the next Scrum meeting?

What impediments prevent him or her from accomplishing his or her tasks?

All team members should attend, and they should stand during the meeting. The daily Scrum meeting should ideally not last more than 15 minutes. On the other hand, no issues or concerns raised during the meeting may be ignored due to lack of time. Issues or concerns should be recorded by the Scrum Master and handled specifically after the meeting.

5.2 Agile Project Plan

The project will go through the following stages of development in its software development life cycle:

Fig 5.2 Agile Project Plan

5.3 Agile User Story


In software development and product management, a user story is an informal, natural

language description of one or more features of a software system. A user story is a tool

used in Agile software development to capture a description of a software feature from an

end-user perspective. A user story describes the type of user, what they want and why. A

user story helps to create a simplified description of a requirement. User stories are often

recorded on index cards, on Post-it notes, or in project management software. Depending

on the project, user stories may be written by various stakeholders such as clients, users,

managers or development team members.

5.4 Agile Sprint Backlog

In product development, a sprint is a set period of time during which specific work has to be completed and made ready for review. Each sprint begins with a planning meeting, during which the product owner (the person requesting the work) and the development team agree upon exactly what work will be accomplished during the sprint. The development team has the final say when it comes to determining how much work can realistically be accomplished during the sprint, and the product owner has the final say on what criteria need to be met for the work to be approved and accepted. The duration of a sprint is determined by the scrum master, the team’s facilitator. Once the team reaches a consensus on how many days a sprint should last, all future sprints should be the same length. Our project contains six sprints, each lasting two weeks.

Product Backlog

The agile product backlog in Scrum is a prioritized features list, containing short

descriptions of all functionality desired in the product. When applying Scrum, it’s not

necessary to start a project with a lengthy, upfront effort to document all requirements. In

the simplest definition, the Scrum Product Backlog is simply a list of all things that need to

be done within the project. It replaces the traditional requirements specification artifacts.

Sprint Planner

Any Sprint starts with planning. In the sprint planning meeting, a small chunk from the top of the product backlog is pulled and the team decides how to implement those pieces. The pieces, generally called user stories, are taken into the sprint and assigned points according to their complexity, and a specific number of points is committed to for completion within the given duration. After the user stories are taken into the sprint, they are broken down into sub-tasks, which are assigned to the team in the “To Do” state. Sometimes not all user stories are pulled during the sprint planning meeting; some can be pulled in the middle of the sprint when a user story is completed ahead of time. Sprint Planning is time-boxed to a maximum of eight hours for a one-month Sprint; for shorter Sprints, such as the two-week Sprints used in this project, the event is usually shorter. The Scrum Master ensures that the event takes place and that attendees understand its purpose, and teaches the Scrum Team to keep it within the time-box.

Sprint Review Meeting

The Sprint Review Meeting is held at the end of each Sprint and is used as an overview. During the meeting, the team evaluates the results of the work, usually in the form of a demo of newly implemented features. The Sprint Review Meeting shouldn’t be treated as a formal meeting with detailed reports; it is simply the logical conclusion of the Sprint. One shouldn’t spend more than 2 hours preparing for the meeting.

Burndown Chart

A burndown chart is a graphical representation of work left to do versus time. The outstanding work is usually shown on the vertical axis, with time along the horizontal; that is, it is a run chart of outstanding work. It is useful for predicting when all of the work will be completed. It is often used in agile software development methodologies such as Scrum, but burndown charts can be applied to any project with measurable progress over time. The chart shows the team's effort and progress graphically, indicating where the team stands in the sprint. The X-axis represents the project/iteration timeline and the Y-axis represents the time estimates for the work remaining. The ideal line is a straight line that connects the start point to the end point: at the start point, it shows the sum of the estimates for all the tasks (work) that need to be completed, and at the end point it reaches zero.
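The ideal line is simple to compute. The Python sketch below assumes that remaining work is tracked as a single number of estimated hours per day (an assumption; the document does not state the unit the team used) and that the ideal line falls linearly to zero on the last day of the sprint. `ideal_line` and `days_behind` are illustrative names, not part of any tool.

```python
from __future__ import annotations


def ideal_line(total: float, days: int) -> list[float]:
    """Remaining work on the ideal line for day 0..days: a straight line from
    the sum of all task estimates on day 0 down to zero on the final day."""
    if days <= 0:
        raise ValueError("a sprint must last at least one day")
    return [total - total * day / days for day in range(days + 1)]


def days_behind(ideal: list[float], actual: list[float]) -> list[int]:
    """Days on which the actual remaining work lies above the ideal line,
    i.e. the team is behind schedule. `actual` may cover only the days so far."""
    return [day for day, (i, a) in enumerate(zip(ideal, actual)) if a > i]


# Made-up figures: 40 estimated hours over a 10-day sprint, 4 days recorded.
ideal = ideal_line(40, 10)
actual = [40, 38, 30, 29]
print(ideal[:4])                   # [40.0, 36.0, 32.0, 28.0]
print(days_behind(ideal, actual))  # [1, 3]
```

Plotting `ideal` and `actual` against the day index gives the two lines of the chart; points above the ideal line show where the sprint is slipping.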

5.5 Agile Test Plan

A test plan is a work agreement between QA, the developer, and the product manager. A single page of succinct information allows team members to review it fully and provide the necessary input for QA testing. The single-page test plan includes specific details on who, what, where, when, and how the code is tested. It must be in a readable format and include only the most necessary information on what you're testing, where, when, and how. The purpose of the test plan is to provide developer input to QA and historical documentation for reference as needed.

CHAPTER 6

IMPLEMENTATION AND TESTING

6.1 Implementation Approaches

Implementation is one phase of software development. It is the stage of the project in which the theoretical design is turned into a working system, and it involves placing the complete, tested software system into the actual work environment. Implementation is concerned with translating the design specification into source code. Its primary goal is to write source code that conforms to the specification, which is achieved by keeping the code as clear and straightforward as possible. More broadly, implementation is the process of converting a new or revised system design into an operational one. It is the final stage and an important phase: it involves the individual programming, system testing, user training and the operational running of the developed system that constitutes the application subsystems. One major task in preparing for implementation is educating the users, which should ideally have taken place much earlier in the project, when they were involved in the investigation and design work. During the implementation phase, the system actually takes physical shape. Depending on the size of the organization and its requirements, implementation is divided into three approaches:

Stage Implementation

Here the system is implemented in stages rather than all at once. Once the user has started working with the system and is familiar with it, the next stage is introduced and implemented. The system is usually updated regularly until the final system is sealed.

Direct Implementation

The proposed new system is implemented directly, and the user starts working on the new system immediately. Any shortcomings encountered are rectified later.

Parallel Implementation

This system was implemented using a prototype-model approach, with its functionality increased day by day, as the client was given full liberty in choosing their requirements so as to get the maximum benefit out of the system developed. Implementation is the process in which the theoretical design is put to a real test: all the theoretical and practical work is now realised as a working system. This is the most crucial stage in the life cycle of a project, since the project may be accepted or rejected depending on how much confidence it gains among the users. The implementation stage involves the tasks described in the following subsections.

6.1.1 Implementation methods

Implementation of software refers to the final installation of the package in its real environment, to the satisfaction of the intended users, and the successful operation of the system. It is the stage of the project where the theoretical design is turned into a working system, and it includes all the activities that take place to convert from the old system to the new one. Proper implementation is essential to provide a reliable system that meets the organizational requirements.

6.1.2 Implementation plan

The Implementation Plan describes how the information system will be deployed, installed and transitioned into an operational system. The plan contains an overview of the system, a brief description of the major tasks involved in the implementation, the overall resources needed to support the implementation effort, and any site-specific implementation requirements. The plan is developed during the Design Phase and updated during the Development Phase; the final version is provided in the Integration and Test Phase and is used for guidance during the Implementation Phase.

6.2 Testing Methods

Testing is the process of examining the software to compare its actual behaviour with the expected behaviour. The major goal of software testing is to uncover faults, so the tester executes the program with the intent of finding errors. Testing cannot prove the absence of errors; however, when well-designed tests fail to reveal any, the software is considered reasonably free of them. System testing is the first stage of implementation and is aimed at ensuring that the system works accurately and efficiently before live operation commences. Testing is vital to the success of the system. System testing makes the logical assumption that if all the parts of the system are correct, the goal will be successfully achieved. A series of tests is performed on the proposed system before it is ready for user acceptance testing.

Software testing is an integral part of ensuring software quality, yet some software organizations are reluctant to include testing in their development cycle because they fear its high cost. Several factors contribute to the cost of software testing; for example, creating and maintaining a large number of test cases is a time-consuming process.

Once the source code has been generated, the program should be executed before the customer receives it, with the specific intent of finding and removing all errors. Tests must therefore be designed using disciplined techniques. Testing techniques provide systematic guidance for designing tests that uncover errors in the program's behaviour, function and performance by carrying out the following steps:

• Exercise the internal logic of the software components

• Exercise the input and output domains of the program to uncover errors
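The two steps correspond to white-box testing (exercising every branch of a component's internal logic) and black-box testing of the input domain (probing values at and around its boundaries). The Python sketch below applies both to a hypothetical control rule, `steer_from_x`, which is not taken from the project: it assumes the hand's horizontal position arrives as a number normalised to [0, 1] and that the thresholds 0.4 and 0.6 split the frame into left, centre and right zones.

```python
def steer_from_x(x: float) -> str:
    """Hypothetical control rule: map the normalised horizontal hand position
    (0.0 = left edge of the frame, 1.0 = right edge) to a steering command."""
    if not 0.0 <= x <= 1.0:
        raise ValueError(f"x must lie in [0, 1], got {x}")
    if x < 0.4:
        return "left"
    if x > 0.6:
        return "right"
    return "centre"


# White-box: one case for each branch of the internal logic.
branch_cases = {0.2: "left", 0.5: "centre", 0.8: "right"}

# Black-box: values on and next to the edges of the valid input domain
# and of each output zone (boundary-value analysis).
boundary_cases = {0.0: "left", 0.39: "left", 0.4: "centre",
                  0.6: "centre", 0.61: "right", 1.0: "right"}

for x, expected in {**branch_cases, **boundary_cases}.items():
    assert steer_from_x(x) == expected, f"steer_from_x({x}) != {expected!r}"

# Inputs outside the domain must be rejected (this also covers the guard branch).
for bad in (-0.01, 1.01):
    try:
        steer_from_x(bad)
    except ValueError:
        continue
    raise AssertionError(f"steer_from_x({bad}) should raise ValueError")

print("all steering cases passed")
```

Boundary values are chosen because off-by-one mistakes, such as writing `<=` instead of `<`, tend to show up exactly at the thresholds.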

Software reliability is defined as the probability that the software will not fail for a specified time under specified conditions. Failure is the inability of a system or component to perform a required function according to its specification. Different levels of testing were employed to make the software as free of errors and faults, and as reliable, as possible. Basically, four levels of testing are adopted in software testing:

Levels of testing

• Unit Testing

• Integration Testing

• Validation Testing

• System Testing

Unit testing

Here each module is tested individually before it is integrated into the final system. Unit testing focuses verification on the smallest unit of software design, the module, so it is also known as “module testing”: each module is checked to see whether it produces the desired output and whether any error occurs. Unit testing is commonly automated but may still be performed manually. Its objective is to isolate a unit and validate its correctness. A manual approach may employ a step-by-step instructional document; automation, however, is more efficient and makes the tests easy to repeat. Conversely, if not planned carefully, a manual unit test case may end up executing as an integration test case involving many software components, and thus defeat most, if not all, of the goals of unit testing.

The modules of this project were tested separately. This testing was carried out during programming itself, with each module checked to work satisfactorily with regard to its expected output, and there are some validation checks for the fields. Unit testing stresses the modules of the project independently of one another to find errors, and different modules are tested against the specifications produced during their design. Unit testing is done to test the working of individual modules with test servers. A program unit is usually small enough that the programmer who developed it can test it in great detail. Unit testing first focuses on the modules to locate errors; these errors are then verified and corrected so that the unit fits correctly into the project.
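As an illustration of an automated unit test, the Python sketch below isolates one small, hypothetical unit, `count_raised_fingers`, and checks it with the standard-library `unittest` module using synthetic input, so no camera or detection model is needed. The function is not the project's code. It assumes the 21-point hand-landmark layout used by MediaPipe Hands (fingertips at indices 8, 12, 16 and 20, with the PIP joints at 6, 10, 14 and 18), an upright hand, and image coordinates in which y grows downwards; the thumb is ignored for simplicity.

```python
import unittest

# MediaPipe Hands indices for the index, middle, ring and little fingers:
# fingertip landmarks and the PIP joint below each tip.
FINGER_TIPS = (8, 12, 16, 20)
FINGER_PIPS = (6, 10, 14, 18)


def count_raised_fingers(landmarks):
    """Hypothetical unit under test: count raised fingers (thumb ignored).
    `landmarks` is a sequence of 21 (x, y) pairs; a raised fingertip lies
    above its PIP joint, i.e. has a smaller y value."""
    if len(landmarks) != 21:
        raise ValueError("expected 21 hand landmarks")
    return sum(1 for tip, pip in zip(FINGER_TIPS, FINGER_PIPS)
               if landmarks[tip][1] < landmarks[pip][1])


def make_hand(raised):
    """Synthetic hand: all landmarks at y=0.5, with the tips of the fingers in
    `raised` (0=index .. 3=little) moved up to y=0.2 and the rest down to y=0.8."""
    points = [(0.5, 0.5)] * 21
    for finger, tip in enumerate(FINGER_TIPS):
        points[tip] = (0.5, 0.2 if finger in raised else 0.8)
    return points


class CountRaisedFingersTest(unittest.TestCase):
    def test_open_palm(self):
        self.assertEqual(count_raised_fingers(make_hand({0, 1, 2, 3})), 4)

    def test_fist(self):
        self.assertEqual(count_raised_fingers(make_hand(set())), 0)

    def test_two_fingers(self):
        self.assertEqual(count_raised_fingers(make_hand({0, 1})), 2)

    def test_rejects_wrong_landmark_count(self):
        with self.assertRaises(ValueError):
            count_raised_fingers([(0.5, 0.5)] * 20)
```

Saved as, for example, `test_fingers.py`, the tests can be run with `python -m unittest test_fingers`. Building the landmarks by hand keeps the test isolated: a failure points at the counting logic rather than at the camera or the detector.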

Integration testing

Integration testing (sometimes called integration and testing, abbreviated I&T) is the phase of software testing in which individual software modules are combined and tested as a group. It occurs after unit testing and before validation testing. Integration testing takes as its input modules that have been unit tested, groups them into larger aggregates, applies tests defined in an integration test plan to those aggregates, and delivers as its output the integrated system ready for system testing. The purpose of integration testing is to verify the functional, performance, and reliability requirements placed on major design items.
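A minimal integration test, again in Python with `unittest`, might combine two separately unit-tested modules and check only what happens at their interface. Both modules here are hypothetical stand-ins, not the project's code: `steer_from_x` turns a normalised hand position into a command, and `Player` moves between three lanes (an assumed game mechanic) in response. The test drives a sequence of per-frame hand positions through both.

```python
from __future__ import annotations

import unittest
from dataclasses import dataclass

LANES = 3  # assumed game layout: lanes 0 (left), 1 (middle), 2 (right)


def steer_from_x(x: float) -> str:
    """Module A (hypothetical): normalised hand position -> command."""
    if x < 0.4:
        return "left"
    if x > 0.6:
        return "right"
    return "centre"


@dataclass
class Player:
    """Module B (hypothetical): game state that reacts to commands."""
    lane: int = 1

    def apply(self, command: str) -> None:
        if command == "left":
            self.lane = max(0, self.lane - 1)
        elif command == "right":
            self.lane = min(LANES - 1, self.lane + 1)
        # "centre" leaves the lane unchanged


def run_frames(xs: list[float], player: Player) -> Player:
    """Integration point: each frame's hand position flows through A into B."""
    for x in xs:
        player.apply(steer_from_x(x))
    return player


class SteeringIntegrationTest(unittest.TestCase):
    def test_moves_left_and_stops_at_edge(self):
        self.assertEqual(run_frames([0.1, 0.1, 0.1], Player()).lane, 0)

    def test_moves_right_and_stops_at_edge(self):
        self.assertEqual(run_frames([0.9, 0.5, 0.9, 0.9], Player()).lane, 2)

    def test_centre_keeps_lane(self):
        self.assertEqual(run_frames([0.5] * 5, Player()).lane, 1)

    def test_left_then_right_returns_to_middle(self):
        self.assertEqual(run_frames([0.1, 0.9], Player()).lane, 1)
```

Each module would already have its own unit tests; these cases focus on the hand-off, such as clamping at the edge lanes when several commands arrive in a row. Because a command is applied on every frame here, a real game would probably need some debouncing, which is left out to keep the sketch focused on the interface between the modules.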

System testing

System testing of software or hardware is testing conducted on a complete, integrated

system to evaluate the system’s compliance with its specified requirements. System testing

falls within the scope of black-box testing, and as such, should require no knowledge of the

inner design of the code or logic. As a rule, system testing takes, as its input, all of the

“integrated” software components that have passed integration testing and also the