ELEC3028 (EL334) Digital Transmission: Building 53

ELEC3028 Digital Transmission Overview & Information Theory

S Chen

ELEC3028 (EL334) Digital Transmission

Half of the unit: Information Theory and MODEM (modulator and demodulator)

Professor Sheng Chen: Building 53, Room 4005

E-mail: sqc@ecs.soton.ac.uk

Lecture notes from: Course Office (ECS Student Services), or download from:

http://www.ecs.soton.ac.uk/sqc/EL334N/

http://www.ecs.soton.ac.uk/sqc/EL334/

Other half: CODEC (source coding, channel coding) by Prof. Lajos Hanzo

What's New (Should be Old) in Communications

Imagine a few scenarios:

- On holiday, you use your fancy mobile phone to take a picture and send it to a friend
- At the airport, waiting to board, you switch on your laptop and go to your favourite website
- Or you watch the World Cup on your mobile phone

Do you know these words?

- CDMA, multicarrier, OFDM, space-time processing, MIMO, turbo coding
- Broadband, WiFi, WiMAX, intelligent network, smart antenna, IP telephone

In this introductory course, we will go through some of the A B C ... of Digital Communication

Wireless and Mobile Networks

Current/future: 2G GSM, 3G UMTS (Universal Mobile Telecommunications System), and MBS (mobile broadband system), which is being developed for B3G (beyond 3G) or 4G mobile systems

[Figure: user mobility (fixed, movable, slow mobile, fast mobile) plotted against service data rate in bps (axis ticks at 9.6 k, 64 k, 144 k, 384 k, 2 M, 20 M and 155 M), showing the regions covered by GSM, GSM/HSCSD, GSM/GPRS, GSM/EDGE, UMTS, MBS, HIPERLAN, ISDN and BISDN]

Some improved 2G systems: HSCSD (high-speed circuit switched data), GPRS (general packet radio service) and EDGE (enhanced data rates for GSM evolution). Also, HIPERLAN: high performance radio local area network

General Comments on Communications

Aim of telecommunications: to communicate information between geographically separated locations via a communications channel of adequate quality (at a certain rate, reliably)

input → channel → output

The transmission will be based on digital data, which is obtained from (generally) analogue quantities by

1. sampling (Nyquist: sampling at a rate of at least twice the maximum signal frequency), and
2. quantisation (introduction of quantisation noise through rounding off)

Transmitting at a certain rate requires a certain spectral bandwidth

Here channel means the whole system, which has a certain capacity: the maximum rate at which information can be transmitted through the system reliably
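The two steps of obtaining digital data, sampling and quantisation, can be illustrated with a minimal Python sketch. The signal frequency, sampling rate, number of bits and the helper names `sample_sine` and `quantise` are hypothetical choices made for illustration, and the mid-rise uniform quantiser is only one of several possible designs.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, num_samples):
    """Sample a unit-amplitude sine wave at the given sampling rate."""
    return [math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
            for n in range(num_samples)]

def quantise(x, num_bits, x_max=1.0):
    """Uniform quantiser: map x in [-x_max, x_max] to one of 2**num_bits levels."""
    levels = 2 ** num_bits
    step = 2 * x_max / levels
    # Index of the quantisation cell containing x, clipped to the valid range
    index = min(levels - 1, max(0, int(math.floor((x + x_max) / step))))
    # Reconstruct at the centre of that cell
    return -x_max + (index + 0.5) * step

f_max = 1000.0     # hypothetical maximum signal frequency in Hz
fs = 8 * f_max     # sampling rate, above the Nyquist rate of 2 * f_max
samples = sample_sine(f_max, fs, 64)
quantised = [quantise(s, num_bits=4) for s in samples]

# Quantisation noise: the rounding error never exceeds half a step
max_error = max(abs(s - q) for s, q in zip(samples, quantised))
print(f"half step = {1.0 / 2 ** 4:.4f}, largest rounding error = {max_error:.4f}")
```

Increasing `num_bits` shrinks the step size and hence the quantisation noise, at the cost of more bits per sample to transmit.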

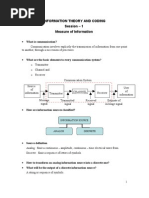

General Transmission Scheme

A digital transmission scheme generally involves:

input → source encoding → channel encoding → modulation → channel → demodulation → channel decoding → source decoding → output

- Input/output are considered digital (analogue signals are sampled/quantised)
- Building blocks: CODEC, MODEM, channel (transmission medium)
- Your 3G mobile phone, for example, contains a transmitter and receiver pair (together called a transceiver), consisting of a CODEC and a MODEM

What is Information

Generic question: what is information? How to measure it (unit)?

Generic digital source is characterised by:

- Source alphabet (message or symbol set): $m_1, m_2, \ldots, m_q$
- Probability of occurrence (symbol probabilities): $p_1, p_2, \ldots, p_q$, e.g. binary equiprobable source $m_1 = 0$ and $m_2 = 1$ with $p_1 = 0.5$ and $p_2 = 0.5$
- Symbol rate (symbols/s or Hz)
- Probabilistic interdependence of symbols (correlation of symbols, e.g. does $m_i$ tell us nothing about $m_j$, or something?)

At a specific symbol interval, symbol $m_i$ is transmitted correctly to the receiver. What is the amount of information conveyed from transmitter to receiver? The answer:

$$I(m_i) = \log_2 \frac{1}{p_i} = -\log_2 p_i \quad \text{(bits)}$$

Concept of Information

Forecast: tomorrow, rain in three different places:

1. Rainy season in a tropical forest
2. Somewhere in England
3. A desert where it rarely rains

Information content of an event is connected with uncertainty, or the inverse of probability. The more unexpected (smaller probability) the event is, the more information it contains

Information theory (largely due to Shannon):

- Measure of information
- Information capacity of channel
- Coding as a means of utilising channel capacity

Shannon Limit

We know that different communication system designs achieve different performance levels, and we also know that system performance is always limited by the available signal power, the inevitable noise and the need to limit bandwidth

What is the ultimate performance limit of communication systems, determined only by their fundamental physical nature? Shannon's information theory addresses this question

Shannon's theorem: If the rate of information from a source does not exceed the capacity of a communication channel, then there exists a coding technique such that the information can be transmitted over the channel with arbitrarily small probability of error, despite the presence of noise

In 1992, two French electronics professors developed practical turbo coding, which approaches the Shannon limit (transmitting information at the capacity rate)

Information Content

Source with independent symbols: $m_1, m_2, \ldots, m_q$, and probability of occurrence: $p_1, p_2, \ldots, p_q$

Definition of information: amount of information in the $i$th symbol $m_i$ is defined by

$$I(m_i) = \log_2 \frac{1}{p_i} = -\log_2 p_i \quad \text{(bits)}$$

Note the unit of information: bits!

Properties of information:

- Since probability $0 \le p_i \le 1$, $I(m_i) \ge 0$: information is nonnegative
- If $p_i > p_j$, $I(m_i) < I(m_j)$: the lower the probability of a source symbol, the higher the information conveyed by it
- $I(m_i) \to 0$ as $p_i \to 1$: a symbol with probability one carries no information
- $I(m_i) \to \infty$ as $p_i \to 0$: a symbol with probability zero carries an infinite amount of information (but it never occurs)
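The definition and its properties can be checked numerically with a short Python sketch. The function name `information_bits` and the probabilities in the loop are illustrative assumptions, not part of the notes.

```python
import math

def information_bits(p):
    """Self-information I = log2(1/p), in bits, of a symbol with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must satisfy 0 < p <= 1")
    return math.log2(1.0 / p)

# Hypothetical probabilities: the rarer the symbol, the more bits it conveys
for p in (1.0, 0.5, 0.25, 0.01):
    print(f"p = {p:<5} I = {information_bits(p):.3f} bits")
```

A probability of exactly zero is rejected here because the information grows without bound as the probability tends to zero.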

Physical Interpretation

Information content of a symbol or message is equal to the minimum number of binary digits required to encode it and, hence, has a unit of bits

- Binary equiprobable symbols: $m_1, m_2 \to 0, 1$; a minimum of one binary digit (one bit) is required to represent each symbol. This equals the information content of each symbol: $I(m_1) = I(m_2) = \log_2 2 = 1$ bit
- Four equiprobable symbols: $m_1, m_2, m_3, m_4 \to 00, 01, 10, 11$; a minimum of two bits is required to represent each symbol. This equals the information content of each symbol: $I(m_1) = I(m_2) = I(m_3) = I(m_4) = \log_2 4 = 2$ bits
- In general, for $q$ equiprobable symbols $m_i$, $1 \le i \le q$, the minimum number of bits to represent each symbol is $\log_2 q$. This equals the information content of each symbol: $I(m_i) = \log_2 q$ bits

Using $\log_2 q$ bits for each symbol is called Binary Coded Decimal (BCD)

- Equiprobable case: $m_i$, $1 \le i \le q$, are equiprobable → BCD is good
- Non-equiprobable case → ?
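The fixed-length assignment of binary words to equiprobable symbols can be sketched in Python as follows. The function name `fixed_length_code` is hypothetical, and rounding the word length up to a whole number of bits when $q$ is not a power of two is an assumption added here; the notes only state the $\log_2 q$ figure.

```python
def fixed_length_code(q):
    """Give each of q symbols a binary codeword of the same length."""
    if q < 1:
        raise ValueError("q must be at least 1")
    # Smallest whole number of bits that can label q symbols
    # (equal to log2(q) when q is a power of two); at least one bit
    width = max(1, (q - 1).bit_length())
    return [format(i, f"0{width}b") for i in range(q)]

print(fixed_length_code(2))   # one bit per symbol
print(fixed_length_code(4))   # two bits per symbol
print(fixed_length_code(5))   # q is not a power of two, so three bits are used
```

For five symbols, three bits per symbol exceed $\log_2 5 \approx 2.32$ bits, which hints at why a fixed-length code is not always the most economical choice.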

Information of Memoryless Source

Source emitting a symbol sequence of length $N$. A memoryless source implies that each message emitted is independent of the previous messages

Assume that $N$ is large, so symbol $m_i$ appears $p_i \cdot N$ times in the sequence

Information contribution from the $i$th symbol $m_i$:

$$I_i = (p_i \cdot N) \log_2 \frac{1}{p_i}$$

Total information of the symbol sequence of length $N$:

$$I_{\text{total}} = \sum_{i=1}^{q} I_i = \sum_{i=1}^{q} p_i \cdot N \log_2 \frac{1}{p_i}$$

Average information per symbol (entropy) is

$$\frac{I_{\text{total}}}{N} = \sum_{i=1}^{q} p_i \log_2 \frac{1}{p_i} \quad \text{(bits/symbol)}$$
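The large-$N$ argument can be checked empirically with a small simulation. This is a sketch only: the four-symbol alphabet, its probabilities, the sequence length and the fixed random seed are hypothetical values chosen for illustration.

```python
import math
import random

def total_information(sequence, probs):
    """Sum of log2(1/p_i) over every symbol emitted in the sequence."""
    return sum(math.log2(1.0 / probs[s]) for s in sequence)

# Hypothetical memoryless source with four symbols
probs = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}

rng = random.Random(0)   # fixed seed so that the run is repeatable
N = 100_000
# Each symbol is drawn independently of the previous ones (memoryless)
sequence = rng.choices(list(probs), weights=list(probs.values()), k=N)

average = total_information(sequence, probs) / N
predicted = sum(p * math.log2(1.0 / p) for p in probs.values())
print(f"measured average = {average:.4f} bits/symbol")
print(f"predicted        = {predicted:.4f} bits/symbol")
```

As $N$ grows, the measured average per symbol settles towards the predicted sum, which is the step the derivation relies on when it replaces symbol counts by $p_i \cdot N$.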

Entropy

Memoryless source entropy is defined as the average information per symbol:

$$H = \sum_{i=1}^{q} p_i \log_2 \frac{1}{p_i} = -\sum_{i=1}^{q} p_i \log_2 p_i \quad \text{(bits/symbol)}$$

Source emitting at a rate of $R_s$ symbols/sec has information rate:

$$R = R_s \cdot H \quad \text{(bits/sec)}$$
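A minimal Python sketch of the entropy and information rate formulas is given below. The symbol probabilities and the symbol rate are hypothetical, and the function names `entropy` and `information_rate` are illustrative. The last lines compare the information rate with the bit rate of a fixed-length encoding at $\log_2 q$ bits per symbol.

```python
import math

def entropy(probs):
    """H = sum of p_i * log2(1/p_i), in bits/symbol.

    Symbols with zero probability never occur and contribute nothing.
    """
    return sum(p * math.log2(1.0 / p) for p in probs if p > 0)

def information_rate(symbol_rate, probs):
    """R = Rs * H, in bits/sec."""
    return symbol_rate * entropy(probs)

probs = [0.5, 0.25, 0.125, 0.125]   # hypothetical symbol probabilities
Rs = 1000.0                         # hypothetical symbol rate in symbols/sec

R = information_rate(Rs, probs)
fixed_rate = Rs * math.log2(len(probs))   # log2(q) bits for every symbol
print(f"H = {entropy(probs):.3f} bits/symbol")
print(f"R = {R:.1f} bits/sec, fixed-length bit rate = {fixed_rate:.1f} bits/sec")
```

For this non-equiprobable source the information rate falls below the fixed-length bit rate; the two coincide only when all symbols are equally likely.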

If each symbol is encoded by $\log_2 q$ bits, i.e. binary coded decimal, the average output bit rate is $R_s \cdot \log_2 q$. Note that the information rate $R$ is always smaller than or equal to the average output bit rate of the source! (Hint: $H \le \log_2 q$)

source (alphabet of $q$ symbols) → symbol rate $R_s$ → $\log_2 q$ bits (BCD) → actual bit rate: $R_s \cdot \log_2 q \ge R$
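One way to verify the hint is sketched below. It assumes every $p_i > 0$, uses $\sum_{i=1}^{q} p_i = 1$, and relies on the standard inequality $\ln x \le x - 1$ (with equality only at $x = 1$); the notes themselves do not spell out a proof, so this is offered as one possible argument.

```latex
\begin{align*}
H - \log_2 q
  &= \sum_{i=1}^{q} p_i \log_2 \frac{1}{p_i} - \sum_{i=1}^{q} p_i \log_2 q
   = \sum_{i=1}^{q} p_i \log_2 \frac{1}{q\,p_i} \\
  &\le \frac{1}{\ln 2} \sum_{i=1}^{q} p_i \left( \frac{1}{q\,p_i} - 1 \right)
   = \frac{1}{\ln 2} \left( \sum_{i=1}^{q} \frac{1}{q} - \sum_{i=1}^{q} p_i \right)
   = \frac{1}{\ln 2}\,(1 - 1) = 0
\end{align*}
```

Hence $H \le \log_2 q$, with equality only when $q\,p_i = 1$ for every $i$, i.e. when the symbols are equiprobable. Multiplying both sides by $R_s$ gives $R \le R_s \cdot \log_2 q$.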

Summary

- Overview of a digital communication system: system building blocks
- Appreciation of information theory
- Information content of a symbol, properties of information
- Memoryless source with independent symbols: entropy and source information rate
