Soft Computing Book

Uploaded by ATISH KUMAR


SOFT Computing Notes

Mtech Computer Science (JC Bose University of Science and Technology, YMCA)


Downloaded by Preeti (mduofficial.com@gmail.com)


Soft Computing (CS361)

SOFT COMPUTING


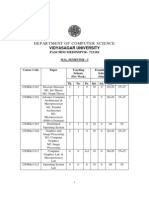

Soft Computing (CS361) Syllabus

Syllabus

Department of CSE, ICET 2


| Course No. | Course Name | L-T-P | Credits | Year of Introduction |
|---|---|---|---|---|
| CS361 | SOFT COMPUTING | 3-0-0 | 3 | 2015 |

Course Objectives

To introduce the concepts in Soft Computing such as Artificial Neural Networks, fuzzy logic based systems, genetic algorithm based systems and their hybrids.

Syllabus

Introduction to Soft Computing, Artificial Neural Networks, Fuzzy Logic and Fuzzy systems, Genetic Algorithms, hybrid systems.

Expected Outcome

Student is able to

1. Learn about soft computing techniques and their applications.

2. Analyze various neural network architectures.

3. Define the fuzzy systems.

4. Understand the genetic algorithm concepts and their applications.

5. Identify and select a suitable Soft Computing technology to solve the problem; construct a solution and implement a Soft Computing solution.

Text Books

1. S. N. Sivanandam and S. N. Deepa, Principles of Soft Computing, Wiley India.

2. Timothy J. Ross, Fuzzy Logic with Engineering Applications, Wiley India.

References

1. N. K. Sinha and M. M. Gupta, Soft Computing & Intelligent Systems: Theory & Applications, Academic Press/Elsevier, 2009.

2. Simon Haykin, Neural Networks: A Comprehensive Foundation, Prentice Hall International, Inc.

3. R. Eberhart and Y. Shi, Computational Intelligence: Concepts to Implementation, Morgan Kaufmann/Elsevier, 2007.

4. T. J. Ross, Fuzzy Logic with Engineering Applications, McGraw Hill.

5. D. Driankov, H. Hellendoorn and M. Reinfrank, An Introduction to Fuzzy Control, Narosa Publishing House.

6. Bart Kosko, Neural Networks and Fuzzy Systems, Prentice Hall, Inc., Englewood Cliffs.

7. D. E. Goldberg, Genetic Algorithms in Search, Optimization, and Machine Learning, Addison-Wesley.


Course Plan

| Module | Contents | Hours | Sem. Exam Marks (%) |
|---|---|---|---|
| I | Introduction to Soft Computing. Artificial neural networks: biological neurons, basic models of artificial neural networks (connections, learning, activation functions), McCulloch and Pitts neuron, Hebb network. | 08 | 15% |
| II | Perceptron networks: learning rule, training and testing algorithm. Adaptive Linear Neuron. Back propagation network: architecture, training algorithm. | 08 | 15% |
| | FIRST INTERNAL EXAM | | |
| III | Fuzzy logic: fuzzy sets, properties, operations on fuzzy sets; fuzzy relations, operations on fuzzy relations. | 07 | 15% |
| IV | Fuzzy membership functions, fuzzification, methods of membership value assignments (intuition, inference, rank ordering), lambda-cuts for fuzzy sets, defuzzification methods. | 07 | 15% |
| | SECOND INTERNAL EXAM | | |
| V | Truth values and tables in fuzzy logic, fuzzy propositions, formation of fuzzy rules, decomposition of rules, aggregation of rules, fuzzy inference systems (Mamdani and Sugeno types), neuro-fuzzy hybrid systems: characteristics, classification. | 08 | 20% |
| VI | Introduction to genetic algorithm, operators in genetic algorithm (coding, selection, crossover, mutation), stopping condition for genetic algorithm flow, genetic neuro hybrid systems, genetic-fuzzy rule based system. | 08 | 20% |
| | END SEMESTER EXAMINATION | | |


Question Paper Pattern

1. There will be five parts in the question paper: A, B, C, D, E.

2. Part A

a. Total marks: 12

b. Four questions each having 3 marks, uniformly covering modules I and II; all four questions have to be answered.

3. Part B

a. Total marks: 18

b. Three questions each having 9 marks, uniformly covering modules I and II; two questions have to be answered. Each question can have a maximum of three subparts.

4. Part C

a. Total marks: 12

b. Four questions each having 3 marks, uniformly covering modules III and IV; all four questions have to be answered.

5. Part D

a. Total marks: 18

b. Three questions each having 9 marks, uniformly covering modules III and IV; two questions have to be answered. Each question can have a maximum of three subparts.

6. Part E

a. Total marks: 40

b. Six questions each carrying 10 marks, uniformly covering modules V and VI; four questions have to be answered.

c. A question can have a maximum of three subparts.

7. There should be at least 60% analytical/numerical/design questions.


Module – 1

• Introduction to Soft Computing

• Artificial neural networks

• Biological neurons

• Basic models of artificial neural networks

o Connections

o Learning

o Activation Functions

• McCulloch and Pitts Neuron

• Hebb network.


1.1 Introduction to Soft Computing

Two major problem solving techniques are:

• Hard computing

It deals with precise models in which accurate solutions are achieved.

Figure 1.1: Hard Computing

• Soft computing

It deals with approximate models to give solutions for complex problems. The term "soft computing" was introduced by Professor Lotfi Zadeh with the objective of exploiting the tolerance for imprecision, uncertainty and partial truth to achieve tractability, robustness, low solution cost and better rapport with reality. The ultimate goal is to be able to emulate the human mind as closely as possible. Soft computing is a combination of genetic algorithms, neural networks and fuzzy logic.

Figure 1.2: Soft Computing


1.2 Biological Neurons

Figure 1.3: Schematic diagram of a biological neuron

The biological neuron consists of three main parts:

• Soma or cell body: where the cell nucleus is located

• Dendrites: where the nerve is connected to the cell body

• Axon: which carries the impulses of the neuron

Dendrites are tree-like networks made of nerve fiber connected to the cell body. An axon is a single, long connection extending from the cell body and carrying signals from the neuron. The end of the axon splits into fine strands, and each strand terminates in a small bulb-like organ called a synapse. It is through the synapses that the neuron introduces its signals to other nearby neurons. The receiving ends of these synapses on the nearby neurons can be found both on the dendrites and on the cell body. There are approximately $10^4$ synapses per neuron in the human brain.

Electric impulses are passed between the synapse and the dendrites. This transmission is a chemical process which results in an increase or decrease in the electric potential inside the body of the receiving cell. If the electric potential reaches a threshold value, the receiving cell fires, and a pulse (action potential) of fixed strength and duration is sent through the axon to the synaptic junctions with other cells. After firing, the cell has to wait for a period called the refractory period before it can fire again.


Figure 1.4: Mathematical model of artificial neuron

| Biological neuron | Artificial neuron |
|---|---|
| Cell | Neuron |
| Dendrites | Weights or interconnections |
| Soma | Net input |
| Axon | Output |

Table 1.1: Terminology relationships between biological and artificial neurons

In this model the net input is calculated as

$$y_{in} = x_1 w_1 + x_2 w_2 + \cdots + x_n w_n = \sum_{i=1}^{n} x_i w_i$$

where $i$ denotes the $i$-th processing element. The activation function is applied over the net input to calculate the output. The weight represents the strength of the synapse connecting the input and the output.
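The net input and output computation above can be sketched in Python. This is a minimal illustration, assuming plain Python lists for the inputs and weights; the function names `net_input` and `binary_step` and all numeric values are hypothetical, chosen only for the example, and the binary step function (defined in Section 1.6.3) stands in for whatever activation function a given network uses.

```python
def net_input(x, w):
    """Net input of one neuron: y_in = x1*w1 + x2*w2 + ... + xn*wn."""
    if len(x) != len(w):
        raise ValueError("each input needs exactly one weight")
    return sum(xi * wi for xi, wi in zip(x, w))


def binary_step(y_in, theta=0.0):
    """Example activation: output 1 if y_in >= theta, otherwise 0."""
    return 1 if y_in >= theta else 0


# Illustrative values only (not taken from the notes).
x = [1.0, 0.5, -1.0]   # input signals x_i
w = [0.2, 0.4, 0.1]    # weights w_i (synaptic strengths)

y_in = net_input(x, w)             # 0.2 + 0.2 - 0.1, i.e. about 0.3
y = binary_step(y_in, theta=0.25)  # about 0.3 >= 0.25, so the neuron outputs 1
print(y_in, y)
```

Swapping `binary_step` for another activation function changes only the last step; the weighted sum that forms the net input stays the same.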

1.3 Artificial neural networks

An artificial neural network (ANN) is an efficient information processing system which resembles the characteristics of a biological neural network. ANNs contain a large number of highly interconnected processing elements called nodes, neurons or units. Each neuron is connected to others by connection links, and each connection link is associated with a weight which contains information about the input signal. This information is used by the neural net to solve a particular problem. ANNs have the ability to learn, recall and generalize from training patterns or data, similar to the human brain. The processing elements of an ANN are called neurons or artificial neurons.

Figure 1.5: Architecture of a simple artificial neuron net

Each neuron has an internal state of its own, called the activation or activity level of the neuron, which is a function of the inputs the neuron receives. The activation signal of a neuron is transmitted to other neurons. A neuron can send only one signal at a time, which can be transmitted to several neurons.

Consider Figure 1.5: here X1 and X2 are input neurons, Y is the output neuron, and W1 and W2 are the weights. The net input is calculated as

$$y_{in} = x_1 w_1 + x_2 w_2$$

where $x_1$ and $x_2$ are the activations of the input neurons X1 and X2, i.e., the outputs of the input signals. The output y of the output neuron Y is obtained by applying an activation function over the net input:

$$y = f(y_{in})$$

Output = Function (net input calculated)

The function applied over the net input is called the activation function. The net input calculation is similar to calculating the output of a pure linear straight-line equation y = mx.

Figure 1.6: Neural net of pure linear equation


Figure 1.7: Graph for y = mx

The weight involved in the ANN is equivalent to the slope m of the straight line.

1.4 Comparison between Biological neuron and Artificial neuron

| Term | Brain | Computer |
|---|---|---|
| Speed | Execution time is a few milliseconds. | Execution time is a few nanoseconds. |
| Processing | Performs massive parallel operations simultaneously. | Performs several parallel operations simultaneously. It is faster than the biological neuron. |
| Size and complexity | Number of neurons is $10^{11}$ and number of interconnections is $10^{15}$, so the complexity of the brain is higher than that of a computer. | Depends on the chosen application and the network designer. |
| Storage capacity | Information is stored in the interconnections, i.e., in synapse strength. New information is stored without destroying old information. Sometimes fails to recollect information. | Information is stored in continuous memory locations. Overloading may destroy older locations. Information can be easily retrieved. |
| Tolerance | Fault tolerant: stores and retrieves information even if interconnections fail. Accepts redundancies. | No fault tolerance: information is corrupted if the network connections are disconnected. No redundancies. |
| Control mechanism | Depends on active chemicals and on whether neuron connections are strong or weak. | CPU; the control mechanism is very simple. |

Table 1.2: Comparison between Biological neuron and Artificial neuron

Characteristics of ANN:

• It is a neurally implemented mathematical model.

• A large number of processing elements, called neurons, exist in it.

• Interconnections with weighted linkage hold informative knowledge.

• Input signals arrive at the processing elements through connections and connecting weights.

• Processing elements can learn, recall and generalize from the given data.

• Computational power is determined by the collective behavior of neurons.

• ANNs are also called connectionist models, parallel distributed processing models, self-organizing systems, neuro-computing systems and neuromorphic systems.

1.5 Evolution of neural networks

| Year | Neural network | Designer | Description |
|---|---|---|---|
| 1943 | McCulloch and Pitts neuron | McCulloch and Pitts | Arrangement of neurons is a combination of logic gates. Unique feature is the threshold. |
| 1949 | Hebb network | Hebb | If two neurons are active, then their connection strength should be increased. |
| 1958, 1959, 1962, 1988 | Perceptron | Frank Rosenblatt, Block, Minsky and Papert | Here the weights on the connection path can be adjusted. |
| 1960 | Adaline | Widrow and Hoff | Here the weights are adjusted to reduce the difference between the net input to the output unit and the desired output. |
| 1972 | Kohonen self-organizing feature map | Kohonen | Inputs are clustered to obtain a fired output neuron. |
| 1982, 1984, 1985, 1986, 1987 | Hopfield network | John Hopfield and Tank | Based on fixed weights. Can act as associative memory nets. |
| 1986 | Back propagation network | Rumelhart, Hinton and Williams | Multilayered. Error propagated backward from the output to the hidden units. |
| 1988 | Counter propagation network | Grossberg | Similar to Kohonen network. |
| 1987-1990 | Adaptive resonance theory (ART) | Carpenter and Grossberg | Designed for binary and analog inputs. |
| 1988 | Radial basis function network | Broomhead and Lowe | Resembles back propagation network, but the activation function used is a Gaussian function. |
| 1988 | Neocognitron | Fukushima | For character recognition. |

Table 1.3: Evolution of neural networks

1.6 Basic models of artificial neural networks

The models of ANN are specified by three basic entities:

• The model's synaptic interconnections.

• The training or learning rules adopted for updating and adjusting the connection weights.

• Their activation functions.


1.6.1 Connections

The arrangement of neurons to form layers and the connection pattern formed within and between layers is called the network architecture. There exist five basic types of connection architecture.

They are:

1. Single layer feed forward network

2. Multilayer feed-forward network

3. Single node with its own feedback

4. Single-layer recurrent network

5. Multilayer recurrent network

Feed-forward network: no neuron in the output layer is an input to a node in the same layer or in a preceding layer.

Feedback network: outputs are directed back as inputs to the processing elements in the same layer or in a preceding layer.

Lateral feedback: the output is directed back to the input of the same layer.

Recurrent networks: feedback networks with a closed loop.

1. Single layer feed forward network

A layer is formed by taking processing elements and combining them with other processing elements. The inputs are linked to the outputs through a single layer of weights: inputs are connected to the processing nodes with various weights, resulting in a series of outputs, one per node.

Figure 1.8: Single-layer feed-forward network

When a layer of processing nodes is formed and the inputs are connected to these nodes with various weights, resulting in a series of outputs, one per node, the network is called a single-layer feed-forward network.

2. Multilayer feed-forward network

This network is formed by the interconnection of several layers. The input layer receives the input and buffers the input signal. The output layer generates the output. A layer between the input and output layers is called a hidden layer; it is internal to the network. A network may have zero to several hidden layers. The more hidden layers there are, the more complex the network becomes, but it can produce a more efficient output.

Figure 1.9: Multilayer feed-forward network


3. Single node with its own feedback

It is a simple recurrent neural network having a single neuron with feedback to itself.

Figure 1.10: Single node with own feedback

4. Single layer recurrent network

A single-layer network in which the output of a processing element can be fed back to the processing element itself, to other processing elements, or to both.

Figure 1.11: Single-layer recurrent network

5. Multilayer recurrent network

Processing element output can be directed back to the nodes in the preceding layer,

forming a multilayer recurrent network.


Figure 1.12: Multilayer recurrent network
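The connection patterns described above differ only in where each layer's outputs are sent. The sketch below, assuming plain Python lists with `W[i][j]` as the weight from input i to node j, shows a single-layer feed-forward layer (architecture 1), a feed-forward pass through one hidden layer (architecture 2), and a single-layer recurrent net whose outputs are fed back to its own nodes (architecture 4). All helper names (`layer_output`, `run_recurrent`) and weight values are hypothetical, chosen only for illustration.

```python
def layer_output(x, W, f):
    """Outputs of one layer of processing nodes.

    W[i][j] is the weight from input i to node j, so node j computes
    f(sum_i x[i] * W[i][j]); the layer returns one output per node.
    """
    n_in, n_out = len(W), len(W[0])
    if len(x) != n_in:
        raise ValueError("need one input value per row of W")
    return [f(sum(x[i] * W[i][j] for i in range(n_in))) for j in range(n_out)]


def identity(v):
    return v


def binary_step(v, theta=0.0):
    return 1 if v >= theta else 0


# 1. Single-layer feed-forward: 2 inputs -> 3 output nodes (made-up weights).
W = [[1.0, -1.0, 0.5],
     [2.0, 1.0, 0.0]]
single = layer_output([1.0, 1.0], W, identity)

# 2. Multilayer feed-forward: inputs -> hidden layer -> output layer.
W_hidden = [[1.0, -1.0],
            [1.0, -1.0]]   # 2 inputs -> 2 hidden nodes
W_out = [[1.0],
         [1.0]]            # 2 hidden nodes -> 1 output node
hidden = layer_output([1.0, 0.0], W_hidden, binary_step)
multi = layer_output(hidden, W_out, identity)


# 4. Single-layer recurrent: each node's previous output is fed back to the
#    nodes of the same layer (W_fb[k][j] links node k's output to node j).
def run_recurrent(x, W_in, W_fb, f, steps):
    y = [0.0] * len(W_in[0])
    for _ in range(steps):
        external = layer_output(x, W_in, identity)
        feedback = layer_output(y, W_fb, identity)
        y = [f(e + fb) for e, fb in zip(external, feedback)]
    return y


print(single, hidden, multi)
```

With one node and a self-feedback weight, `run_recurrent` reduces to the single node with its own feedback (architecture 3): for example, `run_recurrent([1.0], [[1.0]], [[0.5]], identity, 3)` produces the outputs 1.0, 1.5 and 1.75 over three steps and returns `[1.75]`.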

Maxnet: competitive interconnections having fixed weights.

Figure 1.13: Competitive nets
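The notes only state that Maxnet uses competitive interconnections with fixed weights. A common formulation gives each node a self-connection of weight 1 and a mutual inhibition of weight -ε between every pair of nodes, with 0 < ε < 1/m for m nodes, and clips negative activations to 0; the nodes are updated repeatedly until only one remains active. The sketch below assumes that formulation; the function name `maxnet`, the iteration cap `max_iters` and the starting activations are hypothetical.

```python
def maxnet(activations, epsilon, max_iters=1000):
    """Winner-take-all Maxnet iteration (sketch of one common formulation).

    Each node keeps its own activation (self weight 1) and inhibits every
    other node with the fixed weight -epsilon; results below 0 are set to 0.
    """
    m = len(activations)
    if not 0 < epsilon < 1 / m:
        raise ValueError("epsilon is assumed to satisfy 0 < epsilon < 1/m")
    a = list(activations)
    for _ in range(max_iters):
        if sum(1 for v in a if v > 0) <= 1:  # stop when at most one node is active
            break
        total = sum(a)
        # a_j(new) = f(a_j(old) - epsilon * sum of the other activations),
        # with f(x) = x for x > 0 and 0 otherwise.
        a = [max(0.0, aj - epsilon * (total - aj)) for aj in a]
    return a


result = maxnet([0.2, 0.4, 0.6, 0.8], epsilon=0.2)
winner = max(range(len(result)), key=lambda j: result[j])
print(result, winner)
```

With the starting activations [0.2, 0.4, 0.6, 0.8] and ε = 0.2, the weaker nodes are driven to 0 one after another and only the last node (index 3) stays positive. If two nodes start with exactly equal largest activations they remain tied, which is why the loop is capped by `max_iters`.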

On-center-off-surround (lateral inhibition) structure: each processing neuron receives two different classes of inputs, "excitatory" inputs from nearby processing elements and "inhibitory" inputs from more distantly located processing elements. This type of interconnection is shown in Figure 1.14.

Figure 1.14: Lateral inhibition structure


1.6.2 Learning

Learning or training is the process by means of which a neural network adapts itself to a stimulus by making proper parameter adjustments, resulting in the production of the desired response.

The two broad kinds of learning in ANNs are:

i) Parameter learning: updates the connecting weights in a neural net.

ii) Structure learning: focuses on changes in the network structure, such as the number of processing elements and their connection types.

Apart from these, learning in ANNs is classified into three categories:

i) Supervised learning

ii) Unsupervised learning

iii) Reinforcement learning

i) Supervised learning

Here learning is performed with the help of a teacher. Example: consider the learning process of a small child. The child does not know how to read or write, and each of his or her actions is supervised by a teacher; the child works on the basis of the output that he or she has to produce. In an ANN, each input vector requires a corresponding target vector, which represents the desired output. The input vector together with its target vector is called a training pair. The input vector produces an actual output vector, which is compared with the desired output vector. If there is a difference, an error signal is generated by the network. This error signal is used to adjust the weights until the actual output matches the desired output.

Figure 1.15: Supervised learning


ii) Unsupervised learning

Learning is performed without the help of a teacher. Example: a tadpole learns to swim by itself. In an ANN, during the training process, the network receives input patterns and organizes them to form clusters.

Figure 1.16: Unsupervised learning

From Figure 1.16 it is observed that no feedback is applied from the environment to inform what the output should be or whether the outputs are correct. The network itself discovers patterns, regularities, features or categories in the input data, and relations of the input data to the output. Exact clusters are formed by discovering similarities and dissimilarities, so this is called self-organizing.

iii) Reinforcement learning

It is similar to supervised learning. Learning based on critic information is called reinforcement learning, and the feedback sent is called the reinforcement signal. The network receives some feedback from the environment, but the feedback is only evaluative: it indicates how good the output was, not what the correct output should be.

Figure 1.17: Reinforcement learning

The external reinforcement signals are processed in the critic signal generator, and the obtained critic signals are sent to the ANN so that it can adjust its weights properly to get better critic feedback in the future.


1.6.3 Activation Functions

To make work more efficient and to obtain an exact output, some force or activation is applied. Similarly, an activation function is applied over the net input to calculate the output of an ANN.

The information processing of a processing element has two major parts: input and output. An integration function (f) is associated with the input of a processing element; it combines the activations arriving from external sources or other processing elements into the net input of that element.

Several activation functions are used:

1. Identity function: It is a linear function which is defined as

$$f(x) = x \quad \text{for all } x$$

The output is the same as the input.

2. Binary step function: This function can be defined as

$$f(x) = \begin{cases} 1 & \text{if } x \ge \theta \\ 0 & \text{if } x < \theta \end{cases}$$

where θ represents the threshold value. It is used in single-layer nets to convert the net input to an output that is binary (0 or 1).

3. Bipolar step function: This function can be defined as

$$f(x) = \begin{cases} 1 & \text{if } x \ge \theta \\ -1 & \text{if } x < \theta \end{cases}$$

where θ represents the threshold value. It is used in single-layer nets to convert the net input to an output that is bipolar (+1 or -1).

4. Sigmoid function: It is used in Back propagation nets.

Two types:

a) Binary sigmoid function: It is also termed the logistic sigmoid function or unipolar sigmoid function. It is defined as

$$f(x) = \frac{1}{1 + e^{-\lambda x}}$$

where λ represents the steepness parameter. The derivative of this function is

$$f'(x) = \lambda f(x)\,[1 - f(x)]$$

The range of the binary sigmoid function is 0 to 1.

b) Bipolar sigmoid function: This function is defined as

$$f(x) = \frac{2}{1 + e^{-\lambda x}} - 1 = \frac{1 - e^{-\lambda x}}{1 + e^{-\lambda x}}$$

where λ represents the steepness parameter and the range of the function is between -1 and +1. The derivative of this function is

$$f'(x) = \frac{\lambda}{2}\,[1 + f(x)]\,[1 - f(x)]$$

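It is closely related to the hyperbolic tangent function, which is written as

$$h(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}} = \frac{1 - e^{-2x}}{1 + e^{-2x}}$$

Comparing this with the bipolar sigmoid shows that, for λ = 1, f(x) = h(x/2). The derivative of the hyperbolic tangent function is

$$h'(x) = [1 + h(x)]\,[1 - h(x)]$$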

5. Ramp function: The ramp function is defined as

$$f(x) = \begin{cases} 1 & \text{if } x > 1 \\ x & \text{if } 0 \le x \le 1 \\ 0 & \text{if } x < 0 \end{cases}$$

The graphical representation of all these functions is given in Figure 1.18.


Figure 1.18: Depiction of activation functions: (A) identity function; (B) binary step function; (C) bipolar step

function; (D) binary sigmoidal function; (E) bipolar sigmoidal function; (F) ramp function.
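The activation functions of this section can be written directly in Python. The sketch below is a minimal illustration under stated assumptions: it uses `math.exp` and `math.tanh` from the standard library, the parameter name `lam` stands for the steepness parameter λ, the step functions default to θ = 0, and the derivative helpers implement the closed forms given above. It does not guard against overflow of `math.exp` for very large |λx|.

```python
import math


def identity(x):
    """f(x) = x for all x."""
    return x


def binary_step(x, theta=0.0):
    """1 if x >= theta, else 0."""
    return 1 if x >= theta else 0


def bipolar_step(x, theta=0.0):
    """1 if x >= theta, else -1."""
    return 1 if x >= theta else -1


def binary_sigmoid(x, lam=1.0):
    """Logistic sigmoid 1 / (1 + e^(-lam*x)); range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-lam * x))


def binary_sigmoid_deriv(x, lam=1.0):
    """f'(x) = lam * f(x) * (1 - f(x))."""
    fx = binary_sigmoid(x, lam)
    return lam * fx * (1.0 - fx)


def bipolar_sigmoid(x, lam=1.0):
    """2 / (1 + e^(-lam*x)) - 1; range (-1, 1)."""
    return 2.0 / (1.0 + math.exp(-lam * x)) - 1.0


def bipolar_sigmoid_deriv(x, lam=1.0):
    """f'(x) = (lam / 2) * (1 + f(x)) * (1 - f(x))."""
    fx = bipolar_sigmoid(x, lam)
    return (lam / 2.0) * (1.0 + fx) * (1.0 - fx)


def ramp(x):
    """1 if x > 1, x if 0 <= x <= 1, 0 if x < 0."""
    if x > 1:
        return 1.0
    if x < 0:
        return 0.0
    return x


# Consistency checks against the formulas in the text.
dx = 1e-6
x0, lam0 = 0.3, 2.0
numeric = (binary_sigmoid(x0 + dx, lam0) - binary_sigmoid(x0 - dx, lam0)) / (2 * dx)
print(numeric, binary_sigmoid_deriv(x0, lam0))  # central difference vs closed form
print(bipolar_sigmoid(0.7), math.tanh(0.35))    # f(x) = h(x/2) when lambda = 1
```

The two printed pairs should agree closely, which is a quick way to confirm the derivative formula for the binary sigmoid and the relation between the bipolar sigmoid and the hyperbolic tangent.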

1.7 McCulloch and Pitts Neuron

The McCulloch-Pitts neuron was introduced in 1943 and is usually called the M-P neuron. M-P neurons are connected by directed weighted paths. The activation of an M-P neuron is binary, i.e., at any time step the neuron either fires or does not fire. The weights associated with the communication links may be excitatory (weights are positive) or inhibitory (weights are negative). The threshold plays a major role here: there is a fixed threshold for each neuron, and if the net input to the neuron is greater than or equal to the threshold, the neuron fires. M-P neurons are widely used to implement logic functions. A simple M-P neuron is shown in Figure 1.19. A connection is excitatory with weight w (w > 0) or inhibitory with weight -p (p > 0). In the figure, inputs $x_1$ to $x_n$ possess excitatory weighted connections and inputs $x_{n+1}$ to $x_{n+m}$ possess inhibitory weighted interconnections.

Figure 1.19: McCulloch-Pitts neuron model

Since the firing of the neuron is based on the threshold, the activation function is defined as

$$f(y_{in}) = \begin{cases} 1 & \text{if } y_{in} \ge \theta \\ 0 & \text{if } y_{in} < \theta \end{cases}$$

For inhibition to be absolute, the threshold of the activation function should satisfy the following condition:

$$\theta > nw - p$$

The output will fire if it receives k or more excitatory inputs but no inhibitory inputs, where

$$kw \ge \theta > (k-1)w$$

The M-P neuron has no particular training algorithm. An analysis is performed to determine the weights and the threshold. It is used as a building block where any function or phenomenon is modeled based on a logic function.
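As an illustration of determining the weights and threshold by analysis, the sketch below builds two logic functions from a single M-P neuron. The weights and thresholds are choices made for this example, not values given in the notes: AND uses two excitatory weights of 1 with θ = 2 (so k = 2 satisfies kw ≥ θ > (k-1)w), and x1 AND NOT x2 uses an excitatory weight 1 and an inhibitory weight -1 with θ = 1 (so θ > nw - p = 1·1 - 1 = 0, making the inhibition absolute). The function names are hypothetical.

```python
def mp_neuron(inputs, weights, theta):
    """McCulloch-Pitts neuron: binary inputs, fixed weights, fires if y_in >= theta."""
    y_in = sum(x * w for x, w in zip(inputs, weights))
    return 1 if y_in >= theta else 0


def mp_and(x1, x2):
    # Both inputs excitatory (w = 1); both must be on to reach theta = 2.
    return mp_neuron([x1, x2], [1, 1], theta=2)


def mp_andnot(x1, x2):
    # x1 excitatory (w = 1), x2 inhibitory (-p = -1); x2 = 1 always blocks firing.
    return mp_neuron([x1, x2], [1, -1], theta=1)


for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, mp_and(x1, x2), mp_andnot(x1, x2))
```

No training takes place: the weights and threshold are fixed in advance, which matches the statement that the M-P neuron has no particular training algorithm.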

1.8 Hebb network

Donald Hebb stated in 1949 that in the brain, learning is performed by changes in the synaptic gap: "When an axon of cell A is near enough to excite cell B, and repeatedly or persistently takes part in firing it, some growth process or metabolic change takes place in one or both the cells such that A's efficiency, as one of the cells firing B, is increased."

According to the Hebb rule, the weight vector is found to increase proportionately to the product of the input and the learning signal, where the learning signal is equal to the neuron's output. In Hebb learning, if two interconnected neurons are 'on' simultaneously, the weight between them is increased. The weight update in the Hebb rule is given by

$$w_i(\text{new}) = w_i(\text{old}) + x_i y$$

The Hebb network is better suited to bipolar data than to binary data. If binary data are used, the weight update formula cannot distinguish between the following two conditions, because in both of them the product $x_i y$ is 0 and the weight is left unchanged:

1. A training pair in which an input unit is "on" and the target value is "off".

2. A training pair in which both the input unit and the target value are "off".
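The problem with binary data can be checked numerically. The sketch below computes the Hebb weight change Δw = x y for the two conditions listed above, first with binary (0/1) values and then with the corresponding bipolar (+1/-1) values; `hebb_delta` is a hypothetical helper name used only for this illustration.

```python
def hebb_delta(x, y):
    """Hebb weight change for one connection: delta_w = x * y."""
    return x * y


# Binary coding: "on" = 1, "off" = 0.
binary_case1 = hebb_delta(1, 0)    # input on, target off  -> 0 (no change)
binary_case2 = hebb_delta(0, 0)    # input off, target off -> 0 (no change)

# Bipolar coding: "on" = +1, "off" = -1.
bipolar_case1 = hebb_delta(1, -1)   # input on, target off  -> -1 (weight decreases)
bipolar_case2 = hebb_delta(-1, -1)  # input off, target off -> +1 (weight increases)

print(binary_case1, binary_case2, bipolar_case1, bipolar_case2)
```

With binary coding both conditions leave the weight untouched, while bipolar coding gives them opposite weight changes, which is why the Hebb network is preferred with bipolar data.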

Training algorithm

The training algorithm is used for the calculation and adjustment of weights. The training algorithm of the Hebb network is given below; its flowchart is shown in Figure 1.20.

Step 0: First initialize the weights. Basically, in this network they may be set to zero, i.e., $w_i = 0$ for i = 1 to n, where n is the total number of input neurons.

Step 1: Steps 2-4 have to be performed for each input training vector and target output pair, s:t.

Step 2: Input unit activations are set. Generally, the activation function of the input layer is the identity function: $x_i = s_i$ for i = 1 to n.

Step 3: The output unit activation is set: y = t.

Step 4: Weight adjustments and bias adjustments are performed:

$$w_i(\text{new}) = w_i(\text{old}) + x_i y$$

$$b(\text{new}) = b(\text{old}) + y$$

In Step 4, the weight update formula can be written in vector form as

$$w(\text{new}) = w(\text{old}) + x y$$

Hence, the change in weight is expressed as

$$\Delta w = x y$$

As a result,

$$w(\text{new}) = w(\text{old}) + \Delta w$$

The Hebb rule is used for pattern association, pattern categorization, pattern classification and over a range of other areas.

Flowchart of Training algorithm

Figure 1.20: Flowchart of Hebb training algorithm
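Steps 0-4 of the Hebb training algorithm can be sketched in Python as a single pass over the training pairs. The example trains the net on the AND function with bipolar inputs and targets, a standard exercise for this rule that is not worked in the notes; the function names `train_hebb` and `predict`, and the bipolar step with threshold 0 used to test the trained net, are assumptions for this illustration.

```python
def train_hebb(samples, n_inputs):
    """One pass of the Hebb training algorithm (Steps 0-4).

    samples: list of (s, t) pairs, s a tuple of bipolar inputs, t a bipolar target.
    """
    w = [0] * n_inputs  # Step 0: weights initialised to zero
    b = 0               # bias initialised to zero
    for s, t in samples:          # Step 1: for each training pair s:t
        x = list(s)               # Step 2: identity activation, x_i = s_i
        y = t                     # Step 3: output activation set to the target
        w = [wi + xi * y for wi, xi in zip(w, x)]  # Step 4: w_i(new) = w_i(old) + x_i y
        b = b + y                                  #         b(new) = b(old) + y
    return w, b


def predict(x, w, b):
    """Bipolar step on the net input (threshold 0), used only to test the net."""
    y_in = b + sum(xi * wi for xi, wi in zip(x, w))
    return 1 if y_in >= 0 else -1


AND_BIPOLAR = [((1, 1), 1), ((1, -1), -1), ((-1, 1), -1), ((-1, -1), -1)]
w, b = train_hebb(AND_BIPOLAR, n_inputs=2)
print(w, b)
```

After one pass the weights are [2, 2] and the bias is -2, and `predict` reproduces all four AND targets. Training stops after a single pass because, unlike the perceptron, the Hebb algorithm has no error-driven stopping condition.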


Module – 2

• Perceptron networks

o Learning rule

o Training and testing algorithm

• Adaptive Linear Neuron

• Back propagation Network

o Architecture

o Training algorithm


2.1 Perceptron networks

2.1.1 Theory

Perceptron networks come under single-layer feed-forward networks and are also called simple perceptrons. Various types of perceptrons were designed by Rosenblatt (1962) and Minsky-Papert (1969, 1988).

The key points to be noted in a perceptron network are:

1. The perceptron network consists of three units, namely, the sensory unit (input unit), the associator unit (hidden unit) and the response unit (output unit).

2. The sensory units are connected to the associator units with fixed weights having values 1, 0 or -1, which are assigned at random.

3. The binary activation function is used in the sensory unit and the associator unit.

4. The response unit has an activation of 1, 0 or -1. The binary step with fixed threshold θ is used as the activation for the associator. The output signals that are sent from the associator unit to the response unit are only binary.

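5. The output of the perceptron network is given by

$$y = f(y_{in})$$

where $f(y_{in})$ is the activation function, defined as

$$f(y_{in}) = \begin{cases} 1 & \text{if } y_{in} > \theta \\ 0 & \text{if } -\theta \le y_{in} \le \theta \\ -1 & \text{if } y_{in} < -\theta \end{cases}$$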

6. The perceptron learning rule is used in the weight update between the associator unit and the response unit. For each training input, the net calculates the response and determines whether or not an error has occurred.

7. The error calculation is based on the comparison of the values of the targets with those of the calculated outputs.

8. The weights on the connections from the units that send a nonzero signal get adjusted suitably.

9. The weights are adjusted on the basis of the learning rule if an error has occurred for a particular training pattern, i.e.,

$$w_i(\text{new}) = w_i(\text{old}) + \alpha t x_i$$

$$b(\text{new}) = b(\text{old}) + \alpha t$$

If no error occurs, there is no weight update and hence the training process may be stopped. In the above equations, the target value t is +1 or -1 and α is the learning rate. In general, these learning rules begin with an initial guess at the weight values, and then successive adjustments are made on the basis of the evaluation of an objective function. Eventually, the learning rules reach a near-optimal or optimal solution in a finite number of steps.

Figure 2.1: Original perceptron network

A perceptron network with its three units is shown in Figure 2.1. The sensory unit can be a two-dimensional matrix of 400 photodetectors upon which a lighted picture with a geometric black-and-white pattern impinges. These detectors provide a binary (0 or 1) electrical signal if the input signal is found to exceed a certain threshold value. These detectors are connected randomly with the associator unit. The associator unit consists of a set of subcircuits called feature predicates. The feature predicates are hardwired to detect specific features of a pattern and are equivalent to feature detectors. For a particular feature, each predicate is examined with a few or all of the responses of the sensory unit. The results from the predicate units are also binary (0 or 1). The last unit, i.e., the response unit, contains the pattern recognizers or perceptrons. The weights present in the input layers are all fixed, while the weights on the response unit are trainable.


2.1.2 Perceptron Learning Rule

The learning signal is the difference between the desired and actual response of a neuron. The perceptron learning rule is explained as follows.

Consider a finite number N of input training vectors x(n) with their associated target (desired) values t(n), where n ranges from 1 to N. The target is either +1 or -1. The output y is obtained on the basis of the net input calculated and the activation function applied over the net input:

$$y = f(y_{in}) = \begin{cases} 1 & \text{if } y_{in} > \theta \\ 0 & \text{if } -\theta \le y_{in} \le \theta \\ -1 & \text{if } y_{in} < -\theta \end{cases}$$

The weight update in the case of perceptron learning is as shown below.

If y ≠ t, then

$$w(\text{new}) = w(\text{old}) + \alpha t x \qquad (\alpha = \text{learning rate})$$

else,

$$w(\text{new}) = w(\text{old})$$

The weights can be initialized at any values in this method. The perceptron rule convergence theorem states that "if there is a weight vector W such that f(x(n)W) = t(n) for all n, then for any starting vector $w_1$, the perceptron learning rule will converge to a weight vector that gives the correct response for all training patterns, and this learning takes place within a finite number of steps provided that the solution exists".

2.1.3 Architecture

Figure 2.2: Single classification perceptron network


Here only the weights between the associator unit and the output unit can be adjusted, and

the weights between the sensory and associator units are fixed.

2.1.4 Flowchart for Training Process

Figure 2.3: Flowchart for perceptron network with single output


2.1.5 Perceptron Training Algorithm for Single Output Classes

Step 0: Initialize the weights and the bias (for easy calculation they can be set to zero). Also

initialize the learning rate α (0< α ≤ 1). For simplicity α is set to 1.

Step 1: Perform Steps 2-6 while the stopping condition is false.

Step 2: Perform Steps 3-5 for each training pair indicated by s:t.

Step 3: Apply the identity activation function to the input units:

𝑥𝑖 = 𝑠𝑖

Step 4: Calculate the output of the network. To do so, first obtain the net input:

$$y_{in} = b + \sum_{i=1}^{n} x_i w_i$$

where n is the number of input neurons in the input layer. Then apply the activation function to the net input to obtain the output:

$$y = f(y_{in}) = \begin{cases} 1 & \text{if } y_{in} > \theta \\ 0 & \text{if } -\theta \le y_{in} \le \theta \\ -1 & \text{if } y_{in} < -\theta \end{cases}$$

Step 5: Weight and bias adjustment: compare the actual (calculated) output with the desired (target) output. If y ≠ t, then

$$w_i(\text{new}) = w_i(\text{old}) + \alpha\, t\, x_i$$

$$b(\text{new}) = b(\text{old}) + \alpha\, t$$

otherwise,

$$w_i(\text{new}) = w_i(\text{old})$$

$$b(\text{new}) = b(\text{old})$$

Step 6: Train the network until there is no weight change. This is the stopping condition for

the network. If this condition is not met, then start again from Step 2.
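The training steps above can be sketched in Python as follows. This is a minimal illustration, not a definitive implementation: the helper names `step` and `train_perceptron`, the bipolar AND training set, θ = 0.2 and the `max_epochs` guard are assumptions chosen for the example; the weights start at zero and α = 1, as Step 0 suggests.

```python
def step(y_in, theta):
    """Three-level activation: +1 above theta, -1 below -theta, 0 in between."""
    if y_in > theta:
        return 1
    if y_in < -theta:
        return -1
    return 0


def train_perceptron(samples, alpha=1.0, theta=0.2, max_epochs=100):
    """Perceptron training for one output unit (Steps 0-6).

    samples: list of (s, t) pairs, s a list of inputs and t in {+1, -1}.
    Returns (weights, bias, epochs_used).
    """
    w = [0.0] * len(samples[0][0])   # Step 0: zero weights ...
    b = 0.0                          # ... and zero bias
    for epoch in range(1, max_epochs + 1):  # Step 1
        changed = False
        for s, t in samples:                # Step 2
            x = list(s)                     # Step 3: identity activation
            y_in = b + sum(xi * wi for xi, wi in zip(x, w))  # Step 4
            y = step(y_in, theta)
            if y != t:                      # Step 5: update only on error
                w = [wi + alpha * t * xi for wi, xi in zip(w, x)]
                b += alpha * t
                changed = True
        if not changed:                     # Step 6: no weight change
            return w, b, epoch
    return w, b, max_epochs  # guard: data may not be linearly separable


AND = [([1, 1], 1), ([1, -1], -1), ([-1, 1], -1), ([-1, -1], -1)]
w, b, epochs = train_perceptron(AND)
print(w, b, epochs)  # [1.0, 1.0] -1.0 2
```

With these settings all weight changes happen in the first epoch; the second epoch changes nothing, which triggers the Step 6 stopping condition.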


2.1.6 Perceptron Network Testing Algorithm

Step 0: The initial weights used here are the final weights obtained from the training algorithm.

Step 1: For each input vector X to be classified, perform Steps 2-3.

Step 2: Set activations of the input unit.

Step 3: Obtain the response of output unit.

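$$y_{in} = b + \sum_{i=1}^{n} x_i w_i$$

$$y = f(y_{in}) = \begin{cases} 1 & \text{if } y_{in} > \theta \\ 0 & \text{if } -\theta \le y_{in} \le \theta \\ -1 & \text{if } y_{in} < -\theta \end{cases}$$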

Thus, the testing algorithm tests the performance of the network. For a perceptron network, it can be used to check linear separability. Here the separating lines are based on the value of the threshold θ used in the activation function, which must be non-negative.

The condition for a response in the positive region (separating it from the zero region) is

$$w_1 x_1 + w_2 x_2 + b > \theta$$

The condition for a response in the negative region (separating it from the zero region) is

$$w_1 x_1 + w_2 x_2 + b < -\theta$$

These conditions are stated for a single-layer perceptron network with two input neurons, one output neuron and one bias.
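A short sketch of the testing algorithm and the two separating lines, assuming the trained weights w1 = w2 = 1, b = -1 and θ = 0.2 (these are the values the perceptron rule reaches for bipolar AND from zero initial weights with α = 1; the function name `perceptron_response` is illustrative). Points where w1x1 + w2x2 + b lies between -θ and θ fall in the undecided band and receive output 0.

```python
def perceptron_response(x, w, b, theta):
    """Testing algorithm (Steps 2-3): net input with bias, then the 3-level step."""
    y_in = b + sum(xi * wi for xi, wi in zip(x, w))
    if y_in > theta:      # positive region: w1*x1 + w2*x2 + b > theta
        return 1
    if y_in < -theta:     # negative region: w1*x1 + w2*x2 + b < -theta
        return -1
    return 0              # band between the two separating lines


# Assumed trained weights for bipolar AND
w, b, theta = [1.0, 1.0], -1.0, 0.2
for x in ([1, 1], [1, -1], [-1, 1], [-1, -1], [0.5, 0.5]):
    print(x, perceptron_response(x, w, b, theta))
# [1, 1] 1
# [1, -1] -1
# [-1, 1] -1
# [-1, -1] -1
# [0.5, 0.5] 0
```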

2.2 Adaptive Linear Neuron

2.2.1 Theory

The units with a linear activation function are called linear units. A network with a single linear unit is called an Adaline (adaptive linear neuron); that is, in an Adaline the input-output relationship is linear. Adaline uses bipolar activation for its input signals and its target output. The weights between the input and the output are adjustable. The bias in Adaline acts like an adjustable weight whose connection comes from a unit whose activation is always 1. Adaline is a net with only one output unit. The Adaline network may be trained using the delta rule, which is also called the least mean square (LMS) rule or the Widrow-Hoff rule. This learning rule minimizes the mean squared error between the activation and the target value.

2.2.2 Delta Rule for Single Output Unit

The perceptron learning rule originates from the Hebbian assumption, while the delta rule is derived from the gradient-descent method (it can be generalized to more than one layer). Also, the perceptron learning rule stops after a finite number of learning steps, whereas the gradient-descent approach continues forever, converging only asymptotically to the solution. The delta rule updates the connection weights so as to minimize the difference between the net input to the output unit and the target value. The aim is to minimize the error over all training patterns, which is done by reducing the error for each pattern, one at a time.

The delta rule for adjusting the weight from the i-th input unit (i = 1 to n) is

$$\Delta w_i = \alpha\,(t - y_{in})\,x_i$$

where Δw_i is the weight change, α the learning rate, x the vector of activations of the input units, y_in the net input to the output unit, i.e., $y_{in} = \sum_{i=1}^{n} x_i w_i$, and t the target output. In the case of several output units, the delta rule for adjusting the weight from the i-th input unit to the j-th output unit (for each pattern) is

$$\Delta w_{ij} = \alpha\,(t_j - y_{in_j})\,x_i$$
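A minimal sketch of one delta-rule step for several output units, assuming the weights are stored as a nested list `w[i][j]` (input i to output j) and that the bias is handled separately as a weight from a unit with constant activation 1; the name `delta_rule_update` is hypothetical. Note that the error term uses the net input y_in, not a thresholded output.

```python
def delta_rule_update(w, x, t, alpha):
    """One delta-rule (LMS / Widrow-Hoff) step for a single-layer linear net.

    w[i][j]: weight from input unit i to output unit j
    x: input activations; t: target for each output unit.
    Returns a new weight matrix; the input matrix is not modified.
    """
    n, m = len(w), len(w[0])
    # Net input to each output unit: y_in_j = sum_i x_i * w_ij
    y_in = [sum(x[i] * w[i][j] for i in range(n)) for j in range(m)]
    # Delta w_ij = alpha * (t_j - y_in_j) * x_i
    return [[w[i][j] + alpha * (t[j] - y_in[j]) * x[i] for j in range(m)]
            for i in range(n)]


# Single output unit (m = 1): x = (1, -1), t = 1, alpha = 0.5
w_new = delta_rule_update([[0.2], [0.3]], [1, -1], [1], 0.5)
print(w_new)
```

Here y_in = 0.2 - 0.3 = -0.1, so both weights move by 0.5 × 1.1 = 0.55 in the direction of their input, giving approximately 0.75 and -0.25.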

2.2.3 Architecture

Adaline is a single-unit neuron that receives input from several units and also from one unit called the bias. The basic Adaline model consists of trainable weights. Inputs take one of two values (+1 or -1), and the weights may be positive or negative. Initially, random weights are assigned. The calculated net input is applied to a quantizer transfer function (an activation function) that restores the output to +1 or -1. During training, the Adaline model compares the target output with the net input and adjusts the weights according to the training algorithm.

Figure 2.4: Adaline model

2.2.4 Flowchart for Training Process


Figure 2.5: Flowchart for Adaline training process

2.2.5 Training Algorithm

Step 0: Weights and bias are set to some random values but not zero. Set the learning rate

parameter α.

Step 1: Perform Steps 2-6 while the stopping condition is false.

Step 2: Perform Steps 3-5 for each bipolar training pair s:t.


Step 3: Set activations for input units i = 1 to n.

𝑥𝑖 = 𝑠𝑖

Step 4: Calculate the net input to the output unit:

$$y_{in} = b + \sum_{i=1}^{n} x_i w_i$$

Step 5: Update the weights and bias for i= 1 to n

𝑤𝑖 (𝑛𝑒𝑤) = 𝑤𝑖 (𝑜𝑙𝑑 ) + 𝛼(𝑡 − 𝑦𝑖𝑛 )𝑥𝑖

𝑏(𝑛𝑒𝑤) = 𝑏(𝑜𝑙𝑑) + 𝛼(𝑡 − 𝑦𝑖𝑛 )

Step 6: If the largest weight change that occurred during training is smaller than a specified tolerance, stop the training process; otherwise, continue. This is the test for the stopping condition of the network.
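The Adaline training steps can be sketched as below. Assumptions (not from the text): the bipolar AND training set, fixed initial weights and bias of 0.1 in place of random ones so the run is repeatable, α = 0.1, a tolerance of 0.001, and a `max_epochs` guard. The guard matters: because no linear unit reproduces the ±1 AND targets exactly, the per-pattern changes stay near α times a nonzero error and may never fall below the tolerance.

```python
def train_adaline(samples, w, b, alpha=0.1, tol=1e-3, max_epochs=200):
    """Adaline training with the delta rule (Steps 1-6).

    samples: list of (s, t) bipolar training pairs.
    w, b: non-zero initial weights and bias (Step 0).
    Returns (weights, bias, epochs_run).
    """
    w = list(w)
    epoch = 0
    for epoch in range(1, max_epochs + 1):          # Step 1
        largest_change = 0.0
        for s, t in samples:                        # Step 2
            x = s                                   # Step 3: x_i = s_i
            y_in = b + sum(xi * wi for xi, wi in zip(x, w))  # Step 4
            err = t - y_in                          # error on the net input
            for i, xi in enumerate(x):              # Step 5: delta rule
                dw = alpha * err * xi
                w[i] += dw
                largest_change = max(largest_change, abs(dw))
            b += alpha * err
            largest_change = max(largest_change, abs(alpha * err))
        if largest_change < tol:                    # Step 6: tolerance test
            break
    return w, b, epoch


AND = [([1, 1], 1), ([1, -1], -1), ([-1, 1], -1), ([-1, -1], -1)]
w, b, epochs = train_adaline(AND, [0.1, 0.1], 0.1)
# Apply the bipolar step of the testing algorithm to check the result
preds = [1 if b + sum(xi * wi for xi, wi in zip(s, w)) >= 0 else -1 for s, _ in AND]
print(w, b, epochs, preds)
```

The weights drift towards the least-squares solution for this data (w1 = w2 = 0.5, b = -0.5) and oscillate slightly around it, which is still enough for the thresholded outputs to match the targets.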

2.2.6 Testing Algorithm

Step 0: Initialize the weights. (The weights are obtained from the training algorithm.)

Step 1: Perform Steps 2-4 for each bipolar input vector x.

Step 2: Set the activations of the input units to x.

Step 3: Calculate the net input to the output unit:

𝑦𝑖𝑛 = 𝑏 + ∑ 𝑥𝑖 𝑤𝑖

Step 4: Apply the activation function to the net input calculated:

$$y = \begin{cases} 1 & \text{if } y_{in} \ge 0 \\ -1 & \text{if } y_{in} < 0 \end{cases}$$
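A sketch of the Adaline testing step. The weights w1 = w2 = 0.5, b = -0.5 used here are an assumption for illustration: they are the least-squares solution for bipolar AND, the point that delta-rule training approaches. The function name `adaline_output` is hypothetical.

```python
def adaline_output(x, w, b):
    """Testing Steps 3-4: net input, then bipolar step (y = 1 when y_in = 0)."""
    y_in = b + sum(xi * wi for xi, wi in zip(x, w))
    return 1 if y_in >= 0 else -1


w, b = [0.5, 0.5], -0.5   # assumed trained weights
print([adaline_output(x, w, b) for x in ([1, 1], [1, -1], [-1, 1], [-1, -1])])
# [1, -1, -1, -1]
```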

2.3 Back propagation Network

2.3.1 Theory

The back-propagation learning algorithm is one of the most important developments in neural networks (Bryson and Ho, 1969; Werbos, 1974; LeCun, 1985; Parker, 1985; Rumelhart, 1986). It is applied to multilayer feed-forward networks consisting of processing elements with continuous, differentiable activation functions. Networks trained with the back-propagation learning algorithm are also called back-propagation networks (BPNs). For a given set of training input-output pairs, this algorithm provides a procedure for changing the weights in a BPN so that it classifies the given input patterns correctly. The weight update is based on the gradient-descent method, with the error at the output propagated back to the hidden units. Strictly speaking, back-propagation is the training algorithm, and a BPN is a network trained with it.

The training of a BPN is done in three stages: the feed-forward of the input training pattern, the calculation and back-propagation of the error, and the update of the weights. Testing a BPN involves only the feed-forward phase. There can be more than one hidden layer, which may be beneficial for some problems, but one hidden layer is often sufficient. Even though training is very slow, once the network is trained it can produce its outputs very rapidly.

2.3.2 Architecture

Figure 2.6: Architecture of a back propagation network

A back-propagation neural network is a multilayer feed-forward neural network consisting of an input layer, a hidden layer and an output layer. The neurons in the hidden and output layers have biases, which are connections from units whose activation is always 1; the bias terms also act as weights. During the back-propagation phase of learning, signals are sent in the reverse direction. The inputs sent to the BPN and the outputs obtained from it can be either binary (0, 1) or bipolar (-1, +1). The activation function can be any function that is monotonically increasing and differentiable.

2.3.3 Flowchart

The terminologies used in the flowchart and in the training algorithm are as follows:

x = input training vector (x1,….,xi,….xn)

t = target output vector (t1,….,tk,….tm)

α= learning rate parameter

xi = input unit i. (Since the input layer uses the identity activation function, the input and output signals here are the same.)

v0j = bias on jth hidden unit

w0k = bias on kth output unit

zj = hidden unit j. The net input to zj is

$$z_{in_j} = v_{0j} + \sum_{i=1}^{n} x_i v_{ij}$$

and the output is

$$z_j = f(z_{in_j})$$

yk = output unit k. The net input to yk is

$$y_{in_k} = w_{0k} + \sum_{j=1}^{p} z_j w_{jk}$$

and the output is

$$y_k = f(y_{in_k})$$

δk = error correction weight adjustment for wjk that is due to an error in unit yk, which is back-propagated to the hidden units that feed into unit yk

δj = error correction weight adjustment for vij that is due to the back-propagation of error to the hidden unit zj


The commonly used activation functions are the binary sigmoidal and bipolar sigmoidal functions. These functions are used in the BPN because of the following characteristics: (i) continuity, (ii) differentiability, (iii) monotonically non-decreasing behaviour.
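The binary and bipolar sigmoids and their derivatives, which Steps 6 and 7 of the training algorithm rely on, can be sketched as follows. The steepness parameter λ (`lam`, default 1) is an assumption of this sketch; the derivative identities f′ = λf(1 − f) for the binary sigmoid and f′ = (λ/2)(1 + f)(1 − f) for the bipolar sigmoid are standard results that let the derivative be computed from the function value alone.

```python
import math


def binary_sigmoid(x, lam=1.0):
    """f(x) = 1 / (1 + exp(-lam * x)), range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-lam * x))


def binary_sigmoid_deriv(x, lam=1.0):
    """f'(x) = lam * f(x) * (1 - f(x))."""
    f = binary_sigmoid(x, lam)
    return lam * f * (1.0 - f)


def bipolar_sigmoid(x, lam=1.0):
    """f(x) = 2 / (1 + exp(-lam * x)) - 1, range (-1, 1)."""
    return 2.0 / (1.0 + math.exp(-lam * x)) - 1.0


def bipolar_sigmoid_deriv(x, lam=1.0):
    """f'(x) = (lam / 2) * (1 + f(x)) * (1 - f(x))."""
    f = bipolar_sigmoid(x, lam)
    return 0.5 * lam * (1.0 + f) * (1.0 - f)


# Compare each analytic derivative with a central finite difference at x = 0.3
h = 1e-6
for f, df in ((binary_sigmoid, binary_sigmoid_deriv),
              (bipolar_sigmoid, bipolar_sigmoid_deriv)):
    numeric = (f(0.3 + h) - f(0.3 - h)) / (2 * h)
    print(abs(numeric - df(0.3)) < 1e-6)   # True
```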


Figure 2.7: Flowchart for back - propagation network training

2.3.4 Training Algorithm

Step 0: Initialize weights and learning rate (take some small random values).


Step 1: Perform Steps 2-9 while the stopping condition is false.

Step 2: Perform Steps 3-8 for each training pair.

Feed-forward (Phase I)

Step 3: Each input unit receives the input signal xi and sends it to the hidden units (i = 1 to n).

Step 4: Each hidden unit zj (j = 1 to p) sums its weighted input signals to calculate the net input:

$$z_{in_j} = v_{0j} + \sum_{i=1}^{n} x_i v_{ij}$$

Calculate the output of the hidden unit by applying its activation function (binary or bipolar sigmoidal) to $z_{in_j}$:

$$z_j = f(z_{in_j})$$

and send the output signal from the hidden unit to the inputs of the output-layer units.

Step 5: For each output unit yk (k = 1 to m), calculate the net input:

$$y_{in_k} = w_{0k} + \sum_{j=1}^{p} z_j w_{jk}$$

and apply the activation function to compute the output signal:

$$y_k = f(y_{in_k})$$

Back-propagation of error (Phase II)

Step 6: Each output unit 𝑦𝑘 (k=1 to m) receives a target pattern corresponding to the input

training pattern and computes the error correction term:

𝛿𝑘 = (𝑡𝑘 − 𝑦𝑘 )𝑓 ′ (𝑦𝑖𝑛𝑘 )

The derivative f′(y_ink) can be calculated as described in the section on activation functions. On the basis of the calculated error correction term, compute the change in weights and bias:

∆𝑤𝑗𝑘 = 𝛼𝛿𝑘 𝑧𝑗 ; ∆𝑤0𝑘 = 𝛼𝛿𝑘

Also, send 𝛿𝑘 to the hidden layer backwards.


Step 7: Each hidden unit (zj, j = 1 to p) sums its delta inputs from the output units:

$$\delta_{in_j} = \sum_{k=1}^{m} \delta_k w_{jk}$$

The term δ_inj is multiplied by the derivative of f(z_inj) to calculate the error term:

$$\delta_j = \delta_{in_j}\, f'(z_{in_j})$$

The derivative f′(z_inj) can be calculated as described in the section on activation functions, depending on whether the binary or the bipolar sigmoidal function is used. On the basis of the calculated δj, compute the change in weights and bias:

$$\Delta v_{ij} = \alpha\, \delta_j\, x_i; \quad \Delta v_{0j} = \alpha\, \delta_j$$

Weight and bias update (Phase III):

Step 8: Each output unit (yk, k = 1 to m) updates the bias and weights:

𝑤𝑗𝑘 (𝑛𝑒𝑤) = 𝑤𝑗𝑘 (𝑜𝑙𝑑) + 𝛥𝑤𝑗𝑘

𝑤0𝑘 (𝑛𝑒𝑤) = 𝑤0𝑘 (𝑜𝑙𝑑) + 𝛥𝑤0𝑘

Each hidden unit (zj; j = 1 to p) updates its bias and weights:

𝑣𝑖𝑗 (𝑛𝑒𝑤) = 𝑣𝑖𝑗 (𝑜𝑙𝑑) + 𝛥𝑣𝑖𝑗

𝑣0𝑗 (𝑛𝑒𝑤) = 𝑣0𝑗 (𝑜𝑙𝑑) + 𝛥𝑣0𝑗

Step 9: Check the stopping condition. The stopping condition may be that a certain number of epochs has been reached or that the actual output equals the target output.
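One pass of Steps 3-8 for a single training pair can be sketched as below, assuming the binary sigmoid in both layers and weights stored as nested lists `v[i][j]` (input i to hidden j) and `w[j][k]` (hidden j to output k). The function names `forward` and `bpn_step` and the 2-2-1 example values are illustrative assumptions. Step 7 back-propagates through the old weights w_jk, before Step 8 changes them.

```python
import math


def f(x):
    """Binary sigmoid activation (assumed for both layers)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, v, v0, w, w0):
    """Phase I (Steps 3-5): returns hidden outputs z and network outputs y."""
    z = [f(v0[j] + sum(x[i] * v[i][j] for i in range(len(x))))
         for j in range(len(v0))]
    y = [f(w0[k] + sum(z[j] * w[j][k] for j in range(len(z))))
         for k in range(len(w0))]
    return z, y


def bpn_step(x, t, v, v0, w, w0, alpha):
    """Steps 3-8 for one training pair; returns new (v, v0, w, w0)."""
    n, p, m = len(x), len(v0), len(w0)
    z, y = forward(x, v, v0, w, w0)
    # Phase II, Step 6: delta_k = (t_k - y_k) f'(y_in_k), f' = y (1 - y)
    dk = [(t[k] - y[k]) * y[k] * (1.0 - y[k]) for k in range(m)]
    # Step 7: delta_in_j = sum_k delta_k w_jk (old w), times f'(z_in_j)
    dj = [sum(dk[k] * w[j][k] for k in range(m)) * z[j] * (1.0 - z[j])
          for j in range(p)]
    # Phase III, Step 8: add alpha * delta * (incoming signal) to each weight
    w_new = [[w[j][k] + alpha * dk[k] * z[j] for k in range(m)] for j in range(p)]
    w0_new = [w0[k] + alpha * dk[k] for k in range(m)]
    v_new = [[v[i][j] + alpha * dj[j] * x[i] for j in range(p)] for i in range(n)]
    v0_new = [v0[j] + alpha * dj[j] for j in range(p)]
    return v_new, v0_new, w_new, w0_new


# Hypothetical 2-2-1 network and one training pair
x, t = [0.0, 1.0], [1.0]
v, v0 = [[0.6, -0.1], [-0.3, 0.4]], [0.3, 0.5]
w, w0 = [[0.4], [0.1]], [-0.2]
v, v0, w, w0 = bpn_step(x, t, v, v0, w, w0, alpha=0.25)
print(forward(x, v, v0, w, w0)[1])
```

Because Δw_jk = αδ_k z_j and Δv_ij = αδ_j x_i equal α times the negative gradient of E = ½Σ(t_k − y_k)², a finite-difference check on E is a convenient way to test such a sketch.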

2.4 Madaline


2.5 Radial Basis Function Network


2.6 Time Delay Neural Network

2.7 Functional Link Networks


2.8 Tree Neural Networks

2.9 Wavelet Neural Networks


2.10 Advantages of Neural Networks

1. Mimics human control logic.

2. Uses imprecise language.

3. Inherently robust.

4. Fails safely.

5. Modified and tweaked easily.

2.11 Disadvantages of Neural Networks

1. Operator's experience required.

2. System complexity.

2.12 Applications of Neural Networks

1. Automobile and other vehicle subsystems, such as automatic transmissions, ABS and cruise

control (e.g. Tokyo monorail).

2. Air conditioners.

3. Auto focus on cameras.

4. Digital image processing, such as edge detection.

5. Rice cookers.

6. Dishwashers.

7. Elevators.


Module – 3

• Fuzzy logic

• Fuzzy sets

o Properties

o Operations on fuzzy sets

• Fuzzy relations

o Operations on fuzzy relations


2.13 Fuzzy logic

Figure 3.1: A fuzzy logic system accepting imprecise data and providing a decision (imprecise and vague data → fuzzy logic system → decisions)

In 1965, Lotfi Zadeh published his famous paper “Fuzzy sets”. This new logic for representing and manipulating fuzzy terms was called fuzzy logic, and Zadeh is regarded as the father of fuzzy logic.

Fuzzy logic is the logic underlying approximate, rather than exact, modes of reasoning. It operates on the concept of membership, which is extended to allow various "degrees of membership" on the real continuous interval [0, 1].

In fuzzy systems, values are indicated by a number (called a truth value) ranging from 0 to 1, where 0.0 represents absolute falseness and 1.0 represents absolute truth.

Figure 3.2: (a) Boolean Logic (b) Multi-valued Logic

2.14 Classical sets (Crisp sets)

A classical set is a collection of objects with certain characteristics. For example, the user may

define a classical set of negative integers, a set of persons with height less than 6 feet, and a set

of students with passing grades. Each individual entity in a set is called a member or an element

of the set.

There are several ways for defining a set. A set may be defined using one of the following:

1. The list of all the members of a set may be given.

Example A = {2, 4, 6, 8, 10} (Roster form)

2. The properties of the set elements may be specified.

Example A = {x | x is a prime number < 20} (Set-builder form)

3. The formula for the definition of a set may be mentioned. Example


$$A = \left\{ x_i = \frac{x_i + 1}{5},\ i = 1 \text{ to } 10,\ \text{where } x_i = 1 \right\}$$

4. The set may be defined on the basis of the results of a logical operation.

Example A = {x|x is an element belonging to P AND Q}

5. There exists a membership function, which may also be used to define a set. The membership is denoted by the letter χ, and the membership function for a set A is given by (for all values of x)

$$\chi_A(x) = \begin{cases} 1, & \text{if } x \in A \\ 0, & \text{if } x \notin A \end{cases}$$

The set with no elements is defined as an empty set or null set. It is denoted by symbol Ø.

The set consisting of all possible subsets of a given set A is called the power set of A:

$$P(A) = \{x \mid x \subseteq A\}$$

2.14.1 Properties

1. Commutativity

𝐴 ∪ 𝐵 = 𝐵 ∪ 𝐴; 𝐴 ∩ 𝐵 = 𝐵 ∩ 𝐴

2. Associativity

𝐴 ∪ (𝐵 ∪ 𝐶) = (𝐴 ∪ 𝐵) ∪ 𝐶; 𝐴 ∩ (𝐵 ∩ 𝐶) = (𝐴 ∩ 𝐵) ∩ 𝐶

3. Distributivity

𝐴 ∪ (𝐵 ∩ 𝐶) = (𝐴 ∪ 𝐵) ∩ (𝐴 ∪ 𝐶)

𝐴 ∩ (𝐵 ∪ 𝐶) = (𝐴 ∩ 𝐵) ∪ (𝐴 ∩ 𝐶)

4. Idempotency

𝐴 ∪ 𝐴 = 𝐴; 𝐴 ∩ 𝐴 = 𝐴

5. Transitivity

𝐼𝑓 𝐴 ⊆ 𝐵 ⊆ 𝐶, 𝑡ℎ𝑒𝑛 𝐴 ⊆ 𝐶

6. Identity

𝐴 ∪ ∅ = 𝐴, 𝐴 ∩ ∅ = ∅

𝐴 ∪ 𝑋 = 𝑋, 𝐴 ∩ 𝑋 = 𝐴

7. Involution (double negation)

𝐴̿ = 𝐴

8. Law of excluded middle

𝐴 ∪ 𝐴̅ = 𝑋


9. Law of contradiction

𝐴 ∩ 𝐴̅ = ∅

10. De Morgan's laws

$$\overline{A \cap B} = \bar{A} \cup \bar{B}; \quad \overline{A \cup B} = \bar{A} \cap \bar{B}$$

2.14.2 Operations on Classical sets

1. Union

The union between two sets gives all those elements in the universe that belong to either

set A or set B or both sets A and B. The union operation can be termed as a logical OR

operation. The union of two sets A and B is given as

$$A \cup B = \{x \mid x \in A \text{ or } x \in B\}$$

The union of sets A and B is illustrated by the Venn diagram shown below

Figure 3.3: Union of two sets

2. Intersection

The intersection between two sets represents all those elements in the universe that

simultaneously belong to both the sets. The intersection operation can be termed as a

logical AND operation. The intersection of sets A and B is given by

$$A \cap B = \{x \mid x \in A \text{ and } x \in B\}$$

The intersection of sets A and B is illustrated by the Venn diagram shown below

Figure 3.4: Intersection of two sets


3. Complement

The complement of set A is defined as the collection of all elements in universe X that do not reside in set A, i.e., the entities that do not belong to A. It is denoted by Ā and is defined as

$$\bar{A} = \{x \mid x \notin A,\ x \in X\}$$

where X is the universal set and A is a given set formed from universe X. The complement operation of set A is shown below.

Figure 3.5: Complement of set A

4. Difference (Subtraction)

The difference of set A with respect to set B is the collection of all elements in the universe that belong to A but do not belong to B. It is denoted by A|B or A − B and is given by

$$A|B \text{ or } (A - B) = \{x \mid x \in A \text{ and } x \notin B\} = A - (A \cap B)$$

Conversely, the difference of set B with respect to set A is

$$B|A \text{ or } (B - A) = B - (B \cap A) = \{x \mid x \in B \text{ and } x \notin A\}$$

The above operations are shown below

(A) (B)

Figure 3.6: (A) Difference A|B or (A-B); (B) Difference B|A or (B-A)
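The operations above map directly onto Python's built-in `set` type. The universe X = {1, ..., 10} and the set B (the primes in X) are assumptions chosen for illustration; `sorted` is used only to make the printed order deterministic.

```python
X = set(range(1, 11))                       # assumed universe: 1..10
A = {2, 4, 6, 8, 10}                        # roster form
B = {x for x in X if x > 1 and all(x % d for d in range(2, x))}  # primes in X

print(sorted(A | B))         # union           -> [2, 3, 4, 5, 6, 7, 8, 10]
print(sorted(A & B))         # intersection    -> [2]
print(sorted(X - A))         # complement of A -> [1, 3, 5, 7, 9]
print(sorted(A - B))         # difference A|B  -> [4, 6, 8, 10]
print(sorted(B - A))         # difference B|A  -> [3, 5, 7]
print(A - B == A - (A & B))  # A - B = A - (A ∩ B) -> True
```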


2.14.3 Function Mapping of Classical Sets

Mapping is a rule of correspondence between set-theoretic forms and function theoretic

forms. A classical set is represented by its characteristic function 𝜒𝐴 (𝑥) where x is the

element in the universe.

Now consider X and Y as two different universes of discourse. If an element x contained in X corresponds to an element y in Y, it is called a mapping from X to Y, i.e., f: X → Y. On the basis of this mapping, the characteristic function is defined as

$$\chi_A(x) = \begin{cases} 1, & x \in A \\ 0, & x \notin A \end{cases}$$

where 𝜒𝐴 is the membership in set A for element x in the universe. The membership concept

represents mapping from an element x in universe X to one of the two elements in universe

Y (either to element 0 or 1).

Let A and B be two sets in universe X. The function-theoretic forms of operations performed

between these two sets are given as follows:

1. Union

𝜒𝐴∪𝐵 (𝑥) = 𝜒𝐴 (𝑥) ∨ 𝜒𝐵 (𝑥) = 𝑚𝑎𝑥[𝜒𝐴 (𝑥), 𝜒𝐵 (𝑥)]

where ∨ indicates max operator.

2. Intersection

𝜒𝐴∩𝐵 (𝑥) = 𝜒𝐴 (𝑥) ∧ 𝜒𝐵 (𝑥) = 𝑚𝑖𝑛[𝜒𝐴 (𝑥), 𝜒𝐵 (𝑥)]

where ∧ indicates min operator.

3. Complement

𝜒𝐴̅ (𝑥) = 1 − 𝜒𝐴 (𝑥)
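A small sketch of these function-theoretic forms, storing each characteristic function as a dictionary over a finite universe. The universe and the sets A = {1, 2, 3} and B = {3, 4} are assumed for illustration.

```python
X = [1, 2, 3, 4, 5]                                  # assumed finite universe
chi_A = {x: 1 if x in {1, 2, 3} else 0 for x in X}   # A = {1, 2, 3}
chi_B = {x: 1 if x in {3, 4} else 0 for x in X}      # B = {3, 4}

union = {x: max(chi_A[x], chi_B[x]) for x in X}         # chi_A ∨ chi_B
intersection = {x: min(chi_A[x], chi_B[x]) for x in X}  # chi_A ∧ chi_B
complement_A = {x: 1 - chi_A[x] for x in X}             # 1 - chi_A

print([union[x] for x in X])         # [1, 1, 1, 1, 0]
print([intersection[x] for x in X])  # [0, 0, 1, 0, 0]
print([complement_A[x] for x in X])  # [0, 0, 0, 1, 1]
```

Replacing the 0/1 values by membership values in [0, 1] turns the same three formulas into the fuzzy union, intersection and complement.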

2.15 Fuzzy sets

A fuzzy set $\tilde{A}$ in the universe of discourse U can be defined as

$$\tilde{A} = \{(x, \mu_{\tilde{A}}(x)) \mid x \in U\}$$

where $\mu_{\tilde{A}}(x)$ is the degree of membership of x in $\tilde{A}$ and indicates the degree to which x belongs to $\tilde{A}$. In fuzzy theory, a fuzzy set $\tilde{A}$ of universe U is defined by a function $\mu_{\tilde{A}}(x)$ called the membership function of $\tilde{A}$:

$$\mu_{\tilde{A}}(x): U \to [0, 1]$$

where $\mu_{\tilde{A}}(x) = 1$ if x is totally in $\tilde{A}$; $\mu_{\tilde{A}}(x) = 0$ if x is not in $\tilde{A}$; and $0 < \mu_{\tilde{A}}(x) < 1$ if x is partly in $\tilde{A}$.

This set allows a continuum of possible choices. For any element x of the universe, the membership function $\mu_{\tilde{A}}(x)$ equals the degree to which x is an element of set $\tilde{A}$. This degree, a value between 0 and 1, represents the degree of membership, also called the membership value, of element x in set $\tilde{A}$.

Figure 3.7: Boundary region of a fuzzy set

From Figure 3.7 it can be noted that "a" is clearly a member of fuzzy set P, "c" is clearly not a member of fuzzy set P, and the membership of "b" is vague. Hence "a" can take membership value 1, "c" can take membership value 0, and "b" can take a membership value between 0 and 1, say 0.4 or 0.7. This is called partial membership in fuzzy set P.

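There are other ways of representing fuzzy sets; all representations allow partial membership to be expressed. When the universe of discourse U is discrete and finite, fuzzy set $\tilde{A}$ is given as follows:

$$\tilde{A} = \left\{ \frac{\mu_{\tilde{A}}(x_1)}{x_1} + \frac{\mu_{\tilde{A}}(x_2)}{x_2} + \frac{\mu_{\tilde{A}}(x_3)}{x_3} + \cdots \right\} = \left\{ \sum_{i=1}^{n} \frac{\mu_{\tilde{A}}(x_i)}{x_i} \right\}$$

In this notation the horizontal bar is not a division: it pairs each element x_i with its membership value, and the + and Σ signs denote the collection (union) of these pairs, not arithmetic addition.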


2.15.1 Properties

Fuzzy sets follow the same properties as crisp sets except for the law of excluded middle and the law of contradiction. That is, for a fuzzy set $\tilde{A}$,

$$\tilde{A} \cup \bar{\tilde{A}} \neq U; \quad \tilde{A} \cap \bar{\tilde{A}} \neq \emptyset$$

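1. Commutativity

$$\tilde{A} \cup \tilde{B} = \tilde{B} \cup \tilde{A}; \quad \tilde{A} \cap \tilde{B} = \tilde{B} \cap \tilde{A}$$

2. Associativity

$$\tilde{A} \cup (\tilde{B} \cup \tilde{C}) = (\tilde{A} \cup \tilde{B}) \cup \tilde{C}$$

$$\tilde{A} \cap (\tilde{B} \cap \tilde{C}) = (\tilde{A} \cap \tilde{B}) \cap \tilde{C}$$

3. Distributivity

$$\tilde{A} \cup (\tilde{B} \cap \tilde{C}) = (\tilde{A} \cup \tilde{B}) \cap (\tilde{A} \cup \tilde{C})$$

$$\tilde{A} \cap (\tilde{B} \cup \tilde{C}) = (\tilde{A} \cap \tilde{B}) \cup (\tilde{A} \cap \tilde{C})$$

4. Idempotency

$$\tilde{A} \cup \tilde{A} = \tilde{A}; \quad \tilde{A} \cap \tilde{A} = \tilde{A}$$

5. Transitivity

$$\text{If } \tilde{A} \subseteq \tilde{B} \subseteq \tilde{C}, \text{ then } \tilde{A} \subseteq \tilde{C}$$

6. Identity

$$\tilde{A} \cup \emptyset = \tilde{A} \text{ and } \tilde{A} \cup U = U$$

$$\tilde{A} \cap \emptyset = \emptyset \text{ and } \tilde{A} \cap U = \tilde{A}$$

7. Involution (double negation)

$$\bar{\bar{\tilde{A}}} = \tilde{A}$$

8. De Morgan's laws

$$\overline{\tilde{A} \cap \tilde{B}} = \bar{\tilde{A}} \cup \bar{\tilde{B}}; \quad \overline{\tilde{A} \cup \tilde{B}} = \bar{\tilde{A}} \cap \bar{\tilde{B}}$$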
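A quick numerical check of why the law of excluded middle and the law of contradiction fail for fuzzy sets, using max, min and 1 − μ for union, intersection and complement (the standard operations defined in the next subsection). A discrete fuzzy set is stored as a dictionary mapping each element to its membership value; the values are assumed for illustration and chosen as exact binary fractions so the printed results carry no rounding noise.

```python
A = {"x1": 0.25, "x2": 0.5, "x3": 1.0, "x4": 0.0}   # hypothetical fuzzy set

not_A = {x: 1 - mu for x, mu in A.items()}          # complement
A_or_notA = {x: max(A[x], not_A[x]) for x in A}     # A ∪ Ā
A_and_notA = {x: min(A[x], not_A[x]) for x in A}    # A ∩ Ā

print(A_or_notA)   # {'x1': 0.75, 'x2': 0.5, 'x3': 1.0, 'x4': 1.0}
print(A_and_notA)  # {'x1': 0.25, 'x2': 0.5, 'x3': 0.0, 'x4': 0.0}
```

Only the elements with crisp membership (0 or 1) behave as in classical sets; any partial membership keeps A ∪ Ā below full membership and A ∩ Ā above zero.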


2.15.2 Operations on fuzzy sets

1. Union

The union of fuzzy sets $\tilde{A}$ and $\tilde{B}$, denoted by $\tilde{A} \cup \tilde{B}$, is defined as

$$\mu_{\tilde{A} \cup \tilde{B}}(x) = \max[\mu_{\tilde{A}}(x), \mu_{\tilde{B}}(x)] = \mu_{\tilde{A}}(x) \vee \mu_{\tilde{B}}(x) \quad \text{for all } x \in U$$

where ∨ indicates the max operator. The Venn diagram for the union of fuzzy sets $\tilde{A}$ and $\tilde{B}$ is shown in the figure below.

Figure 3.8: Union of fuzzy sets $\tilde{A}$ and $\tilde{B}$

2. Intersection

The intersection of fuzzy sets $\tilde{A}$ and $\tilde{B}$, denoted by $\tilde{A} \cap \tilde{B}$, is defined as

$$\mu_{\tilde{A} \cap \tilde{B}}(x) = \min[\mu_{\tilde{A}}(x), \mu_{\tilde{B}}(x)] = \mu_{\tilde{A}}(x) \wedge \mu_{\tilde{B}}(x) \quad \text{for all } x \in U$$

where ∧ indicates the min operator. The Venn diagram for the intersection of fuzzy sets $\tilde{A}$ and $\tilde{B}$ is shown in the figure below.

Figure 3.9: Intersection of fuzzy sets $\tilde{A}$ and $\tilde{B}$


3. Complement

When $\mu_{\tilde{A}}(x) \in [0, 1]$, the complement of $\tilde{A}$, denoted as $\bar{\tilde{A}}$, is defined by

$$\mu_{\bar{\tilde{A}}}(x) = 1 - \mu_{\tilde{A}}(x) \quad \text{for all } x \in U$$

The Venn diagram for the complement of fuzzy set $\tilde{A}$ is shown in the figure below.

Figure 3.10: Complement of fuzzy set $\tilde{A}$

4. More Operations on Fuzzy Sets

a. Algebraic sum

The algebraic sum $(\tilde{A} + \tilde{B})$ of fuzzy sets $\tilde{A}$ and $\tilde{B}$ is defined as

$$\mu_{\tilde{A} + \tilde{B}}(x) = \mu_{\tilde{A}}(x) + \mu_{\tilde{B}}(x) - \mu_{\tilde{A}}(x) \cdot \mu_{\tilde{B}}(x)$$

b. Algebraic product

The algebraic product $(\tilde{A} \cdot \tilde{B})$ of fuzzy sets $\tilde{A}$ and $\tilde{B}$ is defined as

$$\mu_{\tilde{A} \cdot \tilde{B}}(x) = \mu_{\tilde{A}}(x) \cdot \mu_{\tilde{B}}(x)$$

c. Bounded sum

The bounded sum $(\tilde{A} \oplus \tilde{B})$ of fuzzy sets $\tilde{A}$ and $\tilde{B}$ is defined as

$$\mu_{\tilde{A} \oplus \tilde{B}}(x) = \min\{1, \mu_{\tilde{A}}(x) + \mu_{\tilde{B}}(x)\}$$

d. Bounded difference

The bounded difference $(\tilde{A} \odot \tilde{B})$ of fuzzy sets $\tilde{A}$ and $\tilde{B}$ is defined as

$$\mu_{\tilde{A} \odot \tilde{B}}(x) = \max\{0, \mu_{\tilde{A}}(x) - \mu_{\tilde{B}}(x)\}$$
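All of the operations in this subsection are pointwise, so each can be sketched with one helper (the hypothetical `pointwise`) that applies an operator element by element over a common universe. The fuzzy sets A and B are hypothetical, with membership values chosen as exact binary fractions so the printed results carry no rounding noise.

```python
A = {"x1": 0.5, "x2": 0.25, "x3": 1.0}   # hypothetical fuzzy sets
B = {"x1": 0.25, "x2": 0.75, "x3": 0.5}


def pointwise(op, *sets):
    """Apply op to the membership values of each element of the universe."""
    return {x: op(*(s[x] for s in sets)) for x in sets[0]}


union = pointwise(max, A, B)                                  # max
intersection = pointwise(min, A, B)                           # min
complement_A = pointwise(lambda a: 1.0 - a, A)                # 1 - mu
alg_sum = pointwise(lambda a, b: a + b - a * b, A, B)         # algebraic sum
alg_product = pointwise(lambda a, b: a * b, A, B)             # algebraic product
bounded_sum = pointwise(lambda a, b: min(1.0, a + b), A, B)   # bounded sum
bounded_diff = pointwise(lambda a, b: max(0.0, a - b), A, B)  # bounded difference

print(union)         # {'x1': 0.5, 'x2': 0.75, 'x3': 1.0}
print(intersection)  # {'x1': 0.25, 'x2': 0.25, 'x3': 0.5}
print(alg_sum)       # {'x1': 0.625, 'x2': 0.8125, 'x3': 1.0}
print(bounded_diff)  # {'x1': 0.25, 'x2': 0.0, 'x3': 0.5}
```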


2.16 Classical relations

A classical binary relation represents the presence or absence of a connection or interaction

between the elements of two sets.

• Cartesian Product of Relation

An ordered r-tuple is an ordered sequence of r elements expressed in the form (a1, a2, a3, ..., ar). An unordered tuple is a collection of r elements without any restriction on order. For r = 2, the r-tuple is called an ordered pair. For crisp sets A1, A2, ..., Ar, the set of all r-tuples (a1, a2, a3, ..., ar), where a1 ∈ A1, a2 ∈ A2, ..., ar ∈ Ar, is called the Cartesian product of A1, A2, ..., Ar and is denoted by A1 × A2 × ... × Ar.

Consider two universes X and Y; their Cartesian product X x Y is given by

𝑋 × 𝑌 = {(𝑥, 𝑦)| 𝑥 ∈ 𝑋, 𝑦 ∈ 𝑌}

Here the Cartesian product forms an ordered pair of every 𝑥 ∈ 𝑋 with every 𝑦 ∈ 𝑌. Every

element in X is completely related to every element in Y. The characteristic function,

denoted by χ, gives the strength of the relationship between ordered pair of elements in each

universe. If it takes unity as its value, then complete relationship is found; if the value is

zero, then there is no relationship, i.e.,

$$\chi_{X \times Y}(x, y) = \begin{cases} 1, & (x, y) \in X \times Y \\ 0, & (x, y) \notin X \times Y \end{cases}$$

When the universes or sets are finite, then the relation is represented by a matrix called

relation matrix. An r-dimensional relation matrix represents an r-ary relation. Thus, binary

relations are represented by two-dimensional matrices.

Consider the elements defined in the universes X and Y as follows:

X = {2, 4, 6}, Y = {p, q, r}

The Cartesian product of these two sets is

X × Y = {(2, p), (2, q), (2, r), (4, p), (4, q), (4, r), (6, p), (6, q), (6, r)}

From this set one may select a subset such as

R = {(2, p), (4, q), (4, r), (6, r)}


Subset R can be represented using a coordinate diagram, as shown in the figure below.

Figure 3.11: Coordinate diagram of a relation

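The relation could equivalently be represented using a matrix as follows (rows indexed by X, columns by Y):

$$R = \begin{array}{c|ccc} & p & q & r \\ \hline 2 & 1 & 0 & 0 \\ 4 & 0 & 1 & 1 \\ 6 & 0 & 0 & 1 \end{array}$$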
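The Cartesian product and the relation matrix of this example can be built as follows, writing each pair as (x, y) with x ∈ X first. The variable names are illustrative.

```python
X = [2, 4, 6]
Y = ["p", "q", "r"]

XxY = [(x, y) for x in X for y in Y]          # Cartesian product: 9 ordered pairs
R = {(2, "p"), (4, "q"), (4, "r"), (6, "r")}  # the chosen subset of X × Y

# Relation matrix: rows indexed by X, columns by Y, entry = chi_R(x, y)
M = [[1 if (x, y) in R else 0 for y in Y] for x in X]
for x, row in zip(X, M):
    print(x, row)
# 2 [1, 0, 0]
# 4 [0, 1, 1]
# 6 [0, 0, 1]
```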

The relation between sets X and Y may also be expressed by a mapping representation, as shown in the figure below.

Figure 3.12: Mapping representation of a relation

A binary relation in which no element of set X is mapped to more than one element of the second set Y is called a function and is expressed as

$$R: X \to Y$$

The characteristic function is used to assign values of relationship in the mapping of the

Cartesian space X × Y to the binary values (0, 1) and is given by


$$\chi_R(x, y) = \begin{cases} 1, & (x, y) \in R \\ 0, & (x, y) \notin R \end{cases}$$

Figure 3.13 (A) and (B) illustrate R: X → Y.

Figure 3.13: (A), (B) Illustration of R: X → Y

The constrained Cartesian product for sets when r = 2 (i.e., A × A = A²) is called the identity relation, and the unconstrained Cartesian product for sets when r = 2 is called the universal relation.

Consider set A = {2, 4, 6}. Then the universal relation (UA) and the identity relation (IA) are given as follows:

UA = {(2,2), (2,4), (2,6), (4,2), (4,4), (4,6), (6,2), (6,4), (6,6)}

IA = {(2,2), (4,4), (6,6)}

• Cardinality of Classical Relation

Consider n elements of universe X being related to m elements of universe Y. When the cardinality of X is n_X and the cardinality of Y is n_Y, the cardinality of the Cartesian product X × Y, i.e., of the complete relation between the two universes, is

$$n_{X \times Y} = n_X \times n_Y$$

The cardinality of the power set P(X × Y), i.e., the number of possible relations between X and Y, is given by

$$n_{P(X \times Y)} = 2^{(n_X n_Y)}$$
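For the earlier example X = {2, 4, 6}, Y = {p, q, r}, the two formulas give 3 × 3 = 9 ordered pairs and 2⁹ = 512 possible relations. The sketch below checks the second count by enumerating every subset of X × Y with `itertools.combinations`.

```python
from itertools import combinations

X = [2, 4, 6]
Y = ["p", "q", "r"]
pairs = [(x, y) for x in X for y in Y]

n_xy = len(X) * len(Y)     # n_{X×Y} = n_X * n_Y
n_power = 2 ** n_xy        # n_{P(X×Y)} = 2^(n_X n_Y)

# Brute-force check: count every subset of X × Y, i.e. every possible relation
brute = sum(1 for r in range(len(pairs) + 1) for _ in combinations(pairs, r))
print(n_xy, n_power, brute)   # 9 512 512
```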


2.16.1 Operations on classical relations

Let R and S be two separate relations on the Cartesian universe X × Y. The null relation and the complete relation are defined by the relation matrices ∅R and ER. An example of the 3 × 3 form of the ∅R and ER matrices is given below:

$$\emptyset_R = \begin{bmatrix} 0 & 0 & 0 \\ 0 & 0 & 0 \\ 0 & 0 & 0 \end{bmatrix} \quad \text{and} \quad E_R = \begin{bmatrix} 1 & 1 & 1 \\ 1 & 1 & 1 \\ 1 & 1 & 1 \end{bmatrix}$$

1. Union

R ∪ S → χR∪S (x, y): χR∪S (x, y) = max[χR (x, y), χS (x, y)]

2. Intersection

R ∩ S → χR∩S (x, y): χR∩S (x, y) = min[χR (x, y), χS (x, y)]

3. Complement

R̄ → χR̄ (x, y): χR̄ (x, y) = 1 − χR (x, y)

4. Containment

R ⊂ S → χR (x, y): χR (x, y) ≤ χS (x, y)

5. Identity

∅ → ∅R and X → ER
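These relation operations reduce to element-wise operations on 0/1 relation matrices. A sketch, with two hypothetical 3 × 3 relations R and S; the helper names `elementwise` and `contained` are illustrative.

```python
R = [[1, 0, 0], [0, 1, 1], [0, 0, 1]]   # hypothetical relations on X × Y
S = [[1, 1, 0], [0, 1, 0], [0, 0, 1]]


def elementwise(op, *mats):
    """Combine matrices entry by entry with op."""
    return [[op(*vals) for vals in zip(*rows)] for rows in zip(*mats)]


def contained(P, Q):
    """P ⊂ Q in the sense chi_P(x, y) <= chi_Q(x, y) for every pair."""
    return all(p <= q for rp, rq in zip(P, Q) for p, q in zip(rp, rq))


union = elementwise(max, R, S)                # [[1, 1, 0], [0, 1, 1], [0, 0, 1]]
intersection = elementwise(min, R, S)         # [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
complement = elementwise(lambda a: 1 - a, R)  # [[0, 1, 1], [1, 0, 0], [1, 1, 0]]
print(contained(R, S), contained(intersection, R))   # False True
```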

• Composition of Classical Relations

Let R be a relation that maps elements from universe X to universe Y, and let S be a relation that maps elements from universe Y to universe Z:

𝑅 ⊆𝑋×𝑌 𝑎𝑛𝑑 𝑆⊆𝑌×𝑍

The composition operations are of two types:

1. Max-min composition

The max-min composition is defined by the function theoretic expression as

$$T = R \circ S$$

$$\chi_T(x, z) = \bigvee_{y \in Y} [\chi_R(x, y) \wedge \chi_S(y, z)]$$

2. Max-product composition

The max-product composition is defined by the function theoretic expression as


$$T = R \circ S$$

$$\chi_T(x, z) = \bigvee_{y \in Y} [\chi_R(x, y) \cdot \chi_S(y, z)]$$
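Both compositions can share one helper that differs only in how χR(x, y) and χS(y, z) are combined (min or product) before taking the maximum over y. The matrices are hypothetical. For 0/1 matrices min and product coincide, so the two compositions always agree; the second pair uses membership values in [0, 1] to show where they differ.

```python
def compose(R, S, combine):
    """T = R ∘ S with chi_T(x, z) = max over y of combine(R[x][y], S[y][z])."""
    return [[max(combine(R[x][y], S[y][z]) for y in range(len(S)))
             for z in range(len(S[0]))]
            for x in range(len(R))]


def max_min(R, S):
    return compose(R, S, min)


def max_product(R, S):
    return compose(R, S, lambda a, b: a * b)


R = [[1, 0, 1], [0, 1, 0]]     # X -> Y (2 × 3)
S = [[0, 1], [1, 0], [0, 0]]   # Y -> Z (3 × 2)
print(max_min(R, S))       # [[0, 1], [1, 0]]
print(max_product(R, S))   # [[0, 1], [1, 0]]

Rf = [[0.5, 0.25]]
Sf = [[0.5], [1.0]]
print(max_min(Rf, Sf))      # [[0.5]]
print(max_product(Rf, Sf))  # [[0.25]]
```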

2.17 Fuzzy relations

A fuzzy relation is a fuzzy set defined on the Cartesian product of classical sets {X1, X2, ..., Xn}, where the tuples (x1, x2, ..., xn) may have varying degrees of membership μR(x1, x2, ..., xn) within the relation:

$$R(X_1, X_2, \ldots, X_n) = \int_{X_1, X_2, \ldots, X_n} \mu_R(x_1, x_2, \ldots, x_n) \,\big|\, (x_1, x_2, \ldots, x_n), \quad x_i \in X_i$$

A fuzzy relation between two sets X and Y is called a binary fuzzy relation and is denoted by R(X, Y). A binary relation R(X, Y) is referred to as a bipartite graph when X ≠ Y. A binary relation on a single set X is called a directed graph, or digraph; this case occurs when X = Y, and the relation is denoted R(X, X) or R(X²).

Let

$$ X = \{x_1, x_2, \dots, x_n\} \quad \text{and} \quad Y = \{y_1, y_2, \dots, y_m\} $$

The fuzzy relation R(X, Y) can be expressed by an n × m matrix as follows:

$$ R(X, Y) = \begin{bmatrix} \mu_R(x_1, y_1) & \mu_R(x_1, y_2) & \cdots & \mu_R(x_1, y_m) \\ \mu_R(x_2, y_1) & \mu_R(x_2, y_2) & \cdots & \mu_R(x_2, y_m) \\ \vdots & \vdots & \ddots & \vdots \\ \mu_R(x_n, y_1) & \mu_R(x_n, y_2) & \cdots & \mu_R(x_n, y_m) \end{bmatrix} $$
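In code, the n × m matrix is simply a table of membership values, one row per $x_i$ and one column per $y_j$. The sketch below assumes the membership function is available as a Python callable; the example relation "x is close to y", with $\mu_R(x, y) = 1 / (1 + |x - y|)$, is a hypothetical choice for illustration only.

```python
def relation_matrix(X, Y, mu):
    """Build the n x m matrix [mu(x_i, y_j)] for universes X (rows) and Y (columns)."""
    return [[mu(x, y) for y in Y] for x in X]


def close_to(x, y):
    """Hypothetical membership for 'x is close to y'; equals 1 when x == y."""
    return 1 / (1 + abs(x - y))


X = [1, 2]        # n = 2
Y = [1, 2, 3]     # m = 3
R = relation_matrix(X, Y, close_to)
for row in R:
    print([round(v, 3) for v in row])
# [1.0, 0.5, 0.333]
# [0.5, 1.0, 0.5]
```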

2.17.1 Operations on fuzzy relations

1. Union

$$ \mu_{R \cup S}(x, y) = \max[\mu_R(x, y), \mu_S(x, y)] $$

2. Intersection

$$ \mu_{R \cap S}(x, y) = \min[\mu_R(x, y), \mu_S(x, y)] $$


3. Complement

$$ \mu_{\bar{R}}(x, y) = 1 - \mu_R(x, y) $$

4. Containment

$$ R \subset S \rightarrow \mu_R(x, y) \le \mu_S(x, y) $$

5. Inverse

The inverse of a fuzzy relation R on X × Y is denoted by $R^{-1}$. It is a relation on Y × X defined by $R^{-1}(y, x) = R(x, y)$ for all pairs $(y, x) \in Y \times X$.

6. Projection

For a fuzzy relation R(X, Y), let [R ↓ Y] denote the projection of R onto Y. Then [R ↓ Y] is a fuzzy set (a unary fuzzy relation) on Y whose membership function is defined by

$$ \mu_{[R \downarrow Y]}(y) = \max_{x \in X} \mu_R(x, y) $$
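Union, intersection, complement and containment act element by element, while the inverse is a transpose and the projection onto Y is a column-wise maximum. A minimal sketch, assuming relations are stored as n × m lists of membership values; the example values are hypothetical and chosen as multiples of 0.25 so that 1 − µ is exact in floating point.

```python
def f_union(R, S):
    # mu_{R u S}(x, y) = max(mu_R(x, y), mu_S(x, y))
    return [[max(r, s) for r, s in zip(rr, sr)] for rr, sr in zip(R, S)]


def f_intersection(R, S):
    # mu_{R n S}(x, y) = min(mu_R(x, y), mu_S(x, y))
    return [[min(r, s) for r, s in zip(rr, sr)] for rr, sr in zip(R, S)]


def f_complement(R):
    # mu_{not R}(x, y) = 1 - mu_R(x, y)
    return [[1 - r for r in row] for row in R]


def f_contained_in(R, S):
    # R is contained in S when mu_R(x, y) <= mu_S(x, y) everywhere
    return all(r <= s for rr, sr in zip(R, S) for r, s in zip(rr, sr))


def inverse(R):
    """R^-1(y, x) = R(x, y): the transpose, a relation on Y x X."""
    return [list(col) for col in zip(*R)]


def project_onto_Y(R):
    """mu_[R down Y](y) = max over x of mu_R(x, y): the maximum of each column."""
    return [max(col) for col in zip(*R)]


R = [[0.5, 1.0, 0.25],
     [0.75, 0.0, 0.5]]
S = [[0.25, 0.5, 0.5],
     [1.0, 0.25, 0.0]]

print(f_union(R, S))         # [[0.5, 1.0, 0.5], [1.0, 0.25, 0.5]]
print(f_intersection(R, S))  # [[0.25, 0.5, 0.25], [0.75, 0.0, 0.0]]
print(f_complement(R))       # [[0.5, 0.0, 0.75], [0.25, 1.0, 0.5]]
print(inverse(R))            # [[0.5, 0.75], [1.0, 0.0], [0.25, 0.5]]
print(project_onto_Y(R))     # [0.75, 1.0, 0.5]
```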

• Fuzzy Composition

Let A be a fuzzy set on universe X and B be a fuzzy set on universe Y. The Cartesian product of A and B results in a fuzzy relation R that is contained within the entire (complete) Cartesian space, i.e.,

$$ A \times B = R, \quad \text{where} \quad R \subset X \times Y $$

The membership function of this fuzzy relation is given by

$$ \mu_R(x, y) = \mu_{A \times B}(x, y) = \min[\mu_A(x), \mu_B(y)] $$
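With discrete universes the min rule gives the relation matrix directly: row i holds $\min[\mu_A(x_i), \mu_B(y_j)]$ for each column j. A short sketch, assuming A and B are given as lists of membership values over X and Y (the values are hypothetical):

```python
def cartesian_product(mu_A, mu_B):
    """Fuzzy relation R = A x B with mu_R(x, y) = min(mu_A(x), mu_B(y))."""
    return [[min(a, b) for b in mu_B] for a in mu_A]


mu_A = [0.25, 1.0]          # memberships of x1, x2 in A (hypothetical)
mu_B = [0.5, 0.75, 1.0]     # memberships of y1, y2, y3 in B (hypothetical)
R = cartesian_product(mu_A, mu_B)
print(R)  # [[0.25, 0.25, 0.25], [0.5, 0.75, 1.0]]
```

Each row is capped by the membership of its $x_i$, which is why the first row is constant.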

There are two types of fuzzy composition techniques:

1. Fuzzy max-min composition

A fuzzy min-max composition method also exists, but the most commonly used technique is fuzzy max-min composition. Let R be a fuzzy relation on the Cartesian space X × Y, and S be a fuzzy relation on the Cartesian space Y × Z.


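The max-min composition of R(X, Y) and S(Y, Z), denoted by R(X, Y) ∘ S(Y, Z), is defined by T(X, Z) as

$$ \mu_T(x, z) = \mu_{R \circ S}(x, z) = \max_{y \in Y} \{ \min[\mu_R(x, y), \mu_S(y, z)] \} = \bigvee_{y \in Y} [\mu_R(x, y) \wedge \mu_S(y, z)] \quad \forall x \in X, z \in Z $$

The min-max composition of R(X, Y) and S(Y, Z), also denoted by R(X, Y) ∘ S(Y, Z), is defined by T(X, Z) as

$$ \mu_T(x, z) = \mu_{R \circ S}(x, z) = \min_{y \in Y} \{ \max[\mu_R(x, y), \mu_S(y, z)] \} = \bigwedge_{y \in Y} [\mu_R(x, y) \vee \mu_S(y, z)] \quad \forall x \in X, z \in Z $$

From the above definitions it can be noted that the complement of the min-max composition equals the max-min composition of the complements, since $1 - \min_y \max(a_y, b_y) = \max_y \min(1 - a_y, 1 - b_y)$:

$$ \overline{R(X, Y) \circ_{\min\text{-}\max} S(Y, Z)} = \bar{R}(X, Y) \circ_{\max\text{-}\min} \bar{S}(Y, Z) $$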

2. Fuzzy max-product composition

The max-product composition of R(X, Y) and S(Y, Z), denoted by R(X, Y) ∘ S(Y, Z), is defined by T(X, Z) as

$$ \mu_T(x, z) = \mu_{R \cdot S}(x, z) = \max_{y \in Y} [\mu_R(x, y) \cdot \mu_S(y, z)] = \bigvee_{y \in Y} [\mu_R(x, y) \cdot \mu_S(y, z)] $$
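The three compositions differ only in the pair of operators applied over the shared universe Y. The sketch below assumes R is an |X| × |Y| list of membership values and S is |Y| × |Z| (hypothetical values in multiples of 0.25), and it also checks numerically the complement relation between min-max and max-min composition noted above.

```python
def compose(R, S, inner, outer):
    """Generic composition: T[x][z] = outer over y of inner(R[x][y], S[y][z])."""
    n_y, n_z = len(S), len(S[0])
    return [[outer(inner(R[i][k], S[k][j]) for k in range(n_y)) for j in range(n_z)]
            for i in range(len(R))]


def max_min(R, S):
    return compose(R, S, inner=min, outer=max)


def min_max(R, S):
    return compose(R, S, inner=max, outer=min)


def max_product(R, S):
    return compose(R, S, inner=lambda a, b: a * b, outer=max)


def complement(R):
    return [[1 - r for r in row] for row in R]


R = [[0.5, 1.0],
     [0.25, 0.75]]            # R on X x Y
S = [[1.0, 0.5, 0.0],
     [0.25, 0.75, 0.5]]       # S on Y x Z

print(max_min(R, S))      # [[0.5, 0.75, 0.5], [0.25, 0.75, 0.5]]
print(min_max(R, S))      # [[1.0, 0.5, 0.5], [0.75, 0.5, 0.25]]
print(max_product(R, S))  # [[0.5, 0.75, 0.5], [0.25, 0.5625, 0.375]]

# Complement of the min-max composition equals max-min of the complements
print(complement(min_max(R, S)) == max_min(complement(R), complement(S)))  # True
```

Passing the operators in as arguments keeps the three definitions side by side; a NumPy version would be faster for large universes but is not needed to illustrate the formulas.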

The properties of fuzzy composition can be given as follows:

$$ R \circ S \neq S \circ R \quad \text{(in general)} $$

$$ (R \circ S)^{-1} = S^{-1} \circ R^{-1} $$

$$ (R \circ S) \circ M = R \circ (S \circ M) $$
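These properties can be spot-checked numerically for max-min composition. The following sketch assumes three hypothetical 2 × 2 fuzzy relations R, S and M on a single universe, so that both R ∘ S and S ∘ R are defined; a passing check on one example illustrates the identities but is not a proof.

```python
def max_min(R, S):
    """Max-min composition of an a x b relation R with a b x c relation S."""
    return [[max(min(R[i][k], S[k][j]) for k in range(len(S))) for j in range(len(S[0]))]
            for i in range(len(R))]


def inverse(R):
    """Transpose: R^-1(y, x) = R(x, y)."""
    return [list(col) for col in zip(*R)]


R = [[0.5, 1.0], [0.25, 0.75]]
S = [[1.0, 0.25], [0.0, 0.5]]
M = [[0.75, 0.0], [0.5, 1.0]]

print(max_min(R, S))  # [[0.5, 0.5], [0.25, 0.5]]
print(max_min(S, R))  # [[0.5, 1.0], [0.25, 0.5]]  (not commutative)
print(inverse(max_min(R, S)) == max_min(inverse(S), inverse(R)))  # True
print(max_min(max_min(R, S), M) == max_min(R, max_min(S, M)))     # True
```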

2.18 Advantages of Fuzzy logic

1. Mimics human control logic.

2. Uses imprecise language.

3. Inherently robust.

4. Fails safely.

5. Can be modified and tweaked easily.

2.19 Disadvantages of Fuzzy logic

1. Operator experience is required.

2. System complexity.

2.20 Applications of Fuzzy logic

1. Automobile and other vehicle subsystems, such as automatic transmissions, ABS and cruise control (e.g. Tokyo monorail).

2. Air conditioners.

3. Auto focus on cameras.

4. Digital image processing, such as edge detection.

5. Rice cookers.

6. Dishwashers.

7. Elevators.

8. Washing machines and other home appliances.

9. Video game artificial intelligence.

10. Language filters on message boards and chat rooms for filtering out offensive text.

11. Pattern recognition in remote sensing.

12. Fuzzy logic has also been incorporated into some microcontrollers and microprocessors.

13. Bus timetables.

14. Predicting genetic traits (genetic traits are a fuzzy situation for more than one reason).

15. Temperature control (heating/cooling).

16. Medical diagnoses.

17. Predicting travel time.

18. Antilock braking systems.


Soft Computing (CS361) Module 4

Module – 4

• Fuzzy membership functions

• Fuzzification

• Methods of membership value assignments

o Intuition

o Inference

o Rank ordering

• Lambda-cuts for fuzzy sets

• Defuzzification methods


4.1 Fuzzy membership functions

A membership function defines the fuzziness in a fuzzy set, irrespective of whether the elements in the set are discrete or continuous. A fuzzy set A in the universe of discourse X can be defined as a set of ordered pairs:

$$ A = \{(x, \mu_A(x)) \mid x \in X\} $$

where $\mu_A(\cdot)$ is called the membership function of A. The membership function $\mu_A(\cdot)$ maps X to the membership space M, i.e., $\mu_A : X \rightarrow M$. The membership value ranges in the interval [0, 1], i.e., the range of the membership function is a subset of the non-negative real numbers whose supremum is finite.
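As an illustration of a membership function mapping X into [0, 1], the sketch below uses a triangular shape. The triangular form and its parameters a < b < c are an assumption made for the example (the text above does not fix any particular shape); the fuzzy set is then built as the set of ordered pairs (x, µA(x)) over a hypothetical discrete universe X = {0, 1, ..., 10}.

```python
def triangular(x, a, b, c):
    """Triangular membership function (assumes a < b < c).

    Returns 0 outside (a, c), rises linearly to 1 at x = b, then falls linearly.
    """
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


X = range(0, 11)                                  # hypothetical discrete universe
A = [(x, triangular(x, 0, 5, 10)) for x in X]     # A = {(x, mu_A(x)) | x in X}
print(A[:6])  # [(0, 0.0), (1, 0.2), (2, 0.4), (3, 0.6), (4, 0.8), (5, 1.0)]
```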

The three basic features that characterize a membership function are the following.

1. Core

The core of a membership function for some fuzzy set A is defined as the region of the universe that is characterized by complete membership in the set A. The core consists of the elements x of the universe such that

$$ \mu_A(x) = 1 $$

The core of a fuzzy set may be an empty set.

2. Support

The support of a membership function for a fuzzy set A is defined as the region of the universe that is characterized by nonzero membership in the set A, i.e., the elements x such that

$$ \mu_A(x) > 0 $$

A fuzzy set whose support is a single element in X with $\mu_A(x) = 1$ is referred to as a fuzzy singleton.


3. Boundary

The boundary of a membership function is defined as the region of the universe containing elements that have nonzero but not complete membership. The boundary comprises those elements x of the universe such that

$$ 0 < \mu_A(x) < 1 $$

The boundary elements are those which possess partial membership in the fuzzy set A.

Figure 4.1: Features of membership functions
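For a discrete fuzzy set, the three features reduce to simple filters on the membership values. A minimal sketch, assuming the fuzzy set is stored as a Python dict from elements to membership grades (the set A below is hypothetical):

```python
def core(A):
    """Elements with complete membership: mu_A(x) = 1."""
    return {x for x, mu in A.items() if mu == 1}


def support(A):
    """Elements with nonzero membership: mu_A(x) > 0."""
    return {x for x, mu in A.items() if mu > 0}


def boundary(A):
    """Elements with partial membership: 0 < mu_A(x) < 1."""
    return {x for x, mu in A.items() if 0 < mu < 1}


def is_fuzzy_singleton(A):
    """True when the support is a single element whose membership is 1."""
    s = support(A)
    return len(s) == 1 and all(A[x] == 1 for x in s)


A = {1: 0.0, 2: 0.5, 3: 1.0, 4: 1.0, 5: 0.25, 6: 0.0}
print(core(A))      # {3, 4}
print(support(A))   # {2, 3, 4, 5}
print(boundary(A))  # {2, 5}
print(is_fuzzy_singleton({1: 0.0, 2: 1.0, 3: 0.0}))  # True
```

Note that the boundary is always the support minus the core.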

A fuzzy set whose membership function has at least one element x in the universe with a membership value of unity is called a normal fuzzy set. An element whose membership is equal to 1 is called a prototypical element. A fuzzy set in which no element has a membership value equal to 1 is called a subnormal fuzzy set.

Figure 4.2: (A) Normal fuzzy set and (B) subnormal fuzzy set
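Normality can be tested by checking whether any element reaches membership 1; the elements that do are the prototypical elements. A small sketch, again assuming a dict-based discrete fuzzy set with hypothetical values:

```python
def prototypical_elements(A):
    """Elements whose membership value equals 1."""
    return [x for x, mu in A.items() if mu == 1]


def is_normal(A):
    """A fuzzy set is normal if at least one element has membership 1."""
    return len(prototypical_elements(A)) > 0


def is_subnormal(A):
    """A fuzzy set is subnormal if no element has membership 1."""
    return not is_normal(A)


A = {"a": 0.25, "b": 1.0, "c": 0.5}   # hypothetical normal fuzzy set
B = {"a": 0.25, "b": 0.75, "c": 0.5}  # hypothetical subnormal fuzzy set
print(is_normal(A), prototypical_elements(A))  # True ['b']
print(is_subnormal(B))                         # True
```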

A convex fuzzy set has a membership function whose membership values are strictly

monotonically increasing or strictly monotonically decreasing or strictly monotonically
