Flood Prediction Using Supervised Machine Learning Algorithms

5th International Conference on Smart Electronics and Communication (ICOSEC 2024)

IEEE Xplore Part Number: CFP24V90-ART; ISBN: 979-8-3315-0440-3

2024 5th International Conference on Smart Electronics and Communication (ICOSEC) | 979-8-3315-0440-3/24/$31.00 ©2024 IEEE | DOI: 10.1109/ICOSEC61587.2024.10722348

S. Ramasamy, Assistant Professor, Department of ECE, P.S.R Engineering College, Sivakasi - 626 140, sramasamyece90@gamil.com

M. Vimala, Assistant Professor, Department of ECE, P.S.R Engineering College, Sivakasi - 626 140, vimala@psr.edu.in

P. Ranjith Kumar, Professor, Department of ECE, P.S.R Engineering College, Sivakasi - 626 140, ranjithkumar@psr.edu.in

N. Najmul Sahi, UG Student, Department of ECE, P.S.R Engineering College, Sivakasi - 626 140, najmulsahins@gmail.com

A. Rajesh, UG Student, Department of ECE, P.S.R Engineering College, Sivakasi - 626 140, rajesha28122002@gmail.com

S. Siddharth, UG Student, Department of ECE, P.S.R Engineering College, Sivakasi - 626 140, siddhuss102@gmail.com

Abstract—The most frequent type of calamity is flooding, which happens when water overflows and submerges normally dry terrain. Flood prediction models, which are typically based on historic data and specified thresholds, are intended to forecast when the water level will exceed a predetermined threshold. To avoid the complex mathematical expressions of actual flood processes, Machine Learning (ML) methods generate predictions about future events that are far more accurate than predictions made by humans. In this paper, various supervised machine learning algorithms are implemented. Among the many ML techniques, classification is widely used. This paper uses various supervised learning algorithms, such as Logistic Regression, Random Forest, XGB classifier, ExtraTree classifier, LGBM classifier, and CatBoost classifier. Based on their performance, the supervised learning algorithms for flood prediction are analyzed and the most appropriate model is identified. This model can be used by both the government and the general public to predict floods in advance.

Keywords—Flood Prediction, Machine Learning Algorithms

I. INTRODUCTION

Flood is the most common disaster, causing loss of human life and damage to infrastructure, property, agriculture, and livestock. The process of forecasting and estimating the occurrence, intensity, and potential impact of flooding events in a particular area is known as flood forecasting. The aim of flood prediction is to provide advance information to communities, authorities, and individuals, allowing them to take preventive measures and mitigate the potential damage caused by floods. To predict floods, a machine learning model is needed to analyze complex datasets, identify patterns, and improve prediction accuracy. Machine learning offers a wide range of approaches for prediction. Machine learning methods predict floods by finding patterns in a set of data [1]. Machine learning approaches fall into three categories: supervised, unsupervised, and reinforcement learning [2]. In a supervised learning algorithm, a dataset is given as input to the algorithm, which is then processed and optimized to meet a set of specific outputs [3]. Unsupervised learning algorithms analyze and cluster unlabeled datasets, and reinforcement learning algorithms are self-trained through reward and punishment mechanisms. Supervised learning algorithms include Logistic Regression, Decision Tree, Random Forest, Support Vector Machine, Naive Bayes, and so on [4]. On the basis of the above study, it is observed that supervised learning methods show the best results for prediction, which motivates their choice for this article.

In [5], based on past precipitation data, the better of two methodologies is selected for prediction. A dependable deep learning and ML model for a real-time flood detection system is presented in [6]. It uses convolutional neural networks, random forests, and naive Bayes to identify water levels and evaluate floods that may have humanitarian implications before they happen. The many advantages of machine learning techniques for flood forecasting are described in [7]. That work then develops an intuitive web interface system using an Intelligent Hydro Informatics Integration Platform to enhance online forecasting and flood risk management. This is achieved by utilizing machine learning, visualization, and system development methodologies. The most promising short- and long-term flood prediction methods are presented in [8], which also investigates the significant advancements in improving the quality of flood prediction models. The researchers found that the best strategies for enhancing ML techniques were data decomposition, hybridization, ensemble modeling, and optimization. In [9], a useful flood modeling framework for simulations was implemented. Using a hybrid of a hydraulic model and learning algorithms, the framework offers a novel, quick, effective, and expandable method for determining flood levels; two machine learning models were used. [10] presented Random Forest (RF) as a competitive substitute for Support Vector Machine (SVM) that often beats SVM in flood prediction models. [11] developed an expert prediction model using the self-adaptive evolutionary extreme learning machine (SaE-ELM), a non-tuned machine learning method. Water level prediction is the main use of the SaE-ELM. Creating such models for water level prediction and monitoring is a crucial optimization issue in water resources management and flood prediction. [12] examined the effectiveness of random forest (RF), artificial neural network (ANN), and support vector machine (SVM) in general applications to floods and found that RF provided the best results. In [13], the support vector machine was used to forecast floods. The authors pointed out, however, that although their


objective model performs better than the benchmark models in the combining numerous weak learners, where each subsequent learner

absence of cutting-edge flood monitoring technology, it still requires corrects the errors made by its predecessor.

a lot of work. [14] A prediction model was developed using rainfall Gradient Descent: Instead of training new models randomly,

data to forecast the frequency of floods brought on by precipitation. XGBoost builds trees sequentially by minimizing a predefined loss

Based on the range of rainfall in specific locations, the model function. It uses gradient descent optimization techniques to

predicts if a "flood" will happen. Using information on rainfall from minimize the loss and improve the overall model performance.

districts in India, the forecast model will run. The dataset was trained

using a variety of methods, including Multilayer perceptron, support Regularization: Overfitting is avoided by XGBoost using

vector machine, K-nearest neighbor, and linear regression. The MLP regularization techniques. The objective function of the XGBoost

algorithm performed with a precision of 97.30%. Based mostly on classifier is L1 (Lasso) and L2 (Ridge) regularization factors, which

upstream stage observation, ML models may forecast flood stages penalize complex models and promote simplicity.

at a major gauge station [15]. The case study for this analysis is the Handling Missing Values: Preprocessing is not always necessary

lower Parma River in Italy, and a 9-hour forecast horizon was used. because XGBoost has built-in skills to manage missing values by

Three machine learning algorithms were compared and processing learning how to treat them during training.

speed: support vector regression (SVR), multi-layer perceptron

(MLP). D. LGBM Classifier:

To improve performance of flood prediction using

supervised learning models. The article's contribution includes (i)

The LGBM (Light Gradient Boosting Machine) Classifier is a

supervised learning algorithm famed for its speed, efficiency, and

implementing various supervised machine learning models (ii)

high performance, especially with large datasets. It belongs to the

comparing the performance of logic regression with Random Forest,

family of gradient-boosting algorithms, like XGBoost, but with

ExtraTree classifier, LGBM classifier, and CatBoost classifier.

specific optimizations that enhance its speed and reduce memory

II. METHODOLOGY usage.

A. Logistic regression (LR) E. CatBoost Classifier:

The CatBoost Classifier is designed for classification

Logistic regression predicts the probability of a binary outcome. tasks in supervised machine learning algorithms and is particularly

In contrast with linear regression, which predicts continuous values, known for its robustness, efficiency, and capability to handle

logistic regression is designed for classification problems where the categorical features without extensive preprocessing. Developed by

dependent variable has two possible outcomes (e.g., yes/no, 0/1, Yandex, CatBoost stands for "categorical boosting" and is based on

true/false). gradient boosting techniques like XGBoost and LightGBM.

Binary Classification: The link between one or more independent

variables is modeled by logistic regression. III. PERFORMANCE METRICS

Performance metrics used to evaluate the experiment results are

Sigmoid Function: It employs the sigmoid or logistic function to

accuracy, precision, recall, and F1 score.

squash the output between 0 and 1. This function ensures that

predictions fall within the valid probability range.

Accuracy is the model's ability to predict correctly by the total

Decision Boundary: The model uses a decision boundary to classify number of classes

data points. For example, in a two-dimensional space, the decision 𝐶𝑜𝑟𝑟𝑒𝑐𝑡 𝑃𝑟𝑒𝑑𝑖𝑐𝑡𝑖𝑜𝑛

boundary could be a line separating the two classes, maintaining the Accuracy = ∗ 100%

𝑇𝑜𝑡𝑎𝑙 𝐶𝑙𝑎𝑠𝑠𝑒𝑠

integrity of the specifications.

Precision refers to the proximity of the two measured values

B. Random Forests (RF):

𝑁𝑢𝑚𝑏𝑒𝑟 𝑜𝑓 𝑇𝑟𝑢𝑒 𝑃𝑜𝑠𝑖𝑡𝑖𝑣𝑒𝑠

Random Forest (RF) algorithm is an ensemble method, that Precision =

𝑁𝑢𝑚𝑏𝑒𝑟 𝑜𝑓 𝑇𝑟𝑢𝑒 𝑃𝑜𝑠𝑖𝑡𝑖𝑣𝑒𝑠 + 𝑁𝑢𝑚𝑏𝑒𝑟 𝑜𝑓 𝐹𝑎𝑙𝑠𝑒 𝑃𝑜𝑠𝑖𝑡𝑖𝑣𝑒𝑠

integrates numerous models to produce high resilience and

prediction.

Ensemble of Decision Trees: Random Forest is the multiples of Recall measures a model’s ability to detect positives

decision trees. All the trees are trained with a random subset of data 𝑁𝑢𝑚𝑏𝑒𝑟 𝑜𝑓 𝑇𝑟𝑢𝑒 𝑃𝑜𝑠𝑖𝑡𝑖𝑣𝑒

Recall =

and attributes, hence the name "random" forest. 𝑁𝑢𝑚𝑏𝑒𝑟 𝑜𝑓 𝑇𝑟𝑢𝑒 𝑃𝑜𝑠𝑖𝑡𝑣𝑒𝑠 + 𝑁𝑢𝑚𝑏𝑒𝑟 𝑜𝑓 𝐹𝑎𝑙𝑠𝑒 𝑃𝑜𝑠𝑖𝑡𝑖𝑣𝑒𝑠

Bootstrapping (bagging): It uses bootstrapping, a technique that can

F1 Score refers to quantifying the accuracy

be replaced with multiple subsets of the original dataset.

2 ∗ (𝑃𝑟𝑒𝑐𝑖𝑠𝑖𝑜𝑛 ∗ 𝑆𝑝𝑒𝑐𝑖𝑓𝑖𝑐𝑖𝑡𝑦 )

Random Feature Selection: At each decision tree node, only a subset F1 Score =

(𝑃𝑟𝑒𝑐𝑖𝑠𝑖𝑜𝑛 ∗ 𝑆𝑝𝑒𝑐𝑖𝑓𝑖𝑐𝑖𝑡𝑦)

of the attributes is considered for splitting. This random selection of

features helps reduce correlation among trees and improve diversity IV. EXPERIMENTAL RESULTS

in the forest.

A. Dataset

Averaging: In classification tasks, every tree in the forest casts a vote The purpose of this article is to predict the flood using a supervised

to determine the prediction. For regression tasks, the average of the learning algorithm. Here, a flood prediction dataset is used, which is

predictions from each individual tree is utilized to determine the available in the Kaggle dataset and contains monsoon intensity,

final prediction. topography drainage, river management, deforestation,

urbanization, climate change, dam quality, siltation, agricultural

C. XGB Classifier: practices, encroachments, ineffective disaster preparedness,

The XGBoost Classifier is a supervised machine learning model drainage systems, coastal vulnerability, landslides, watersheds,

that offers excellent performance and regularization techniques, deteriorating infrastructure, population score, wetland loss,

making it suitable for a wide range of applications. inadequate planning, and political factors. By providing this input to

different models of supervised machine learning algorithms, The

Gradient Boosting: The XGBoost classifier is an ensemble learning

models undergo preprocessing in order to extract patterns with the

technique-based boosting concept. Boosting involves successively

979-8-3315-0440-3/24/$31.00 ©2024 IEEE 1094

Authorized licensed use limited to: VIT University- Chennai Campus. Downloaded on December 26,2024 at 13:43:15 UTC from IEEE Xplore. Restrictions apply.

5th International Conference on Smart Electronics and Communication (ICOSEC 2024)

IEEE Xplore Part Number: CFP24V90-ART; ISBN: 979-8-3315-0440-3

highest levels of accuracy, precision, recall, and F1-score. The

dataset is split in a ratio between training and testing. Then the

supervised learning algorithm is compared with all the results by

considering the confusion matrix, and the performance metrics

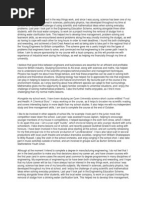

values are determined as shown in Table 1. Figs. 1 and 2 display the

datasets' shape values and quantile-quantile (QQ) plots.
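As a rough illustration of how a confusion matrix leads to the performance metrics compared here, the following Python sketch counts true and false positives and negatives for a binary flood / no-flood label and derives accuracy, precision, recall, and F1 score from the standard definitions. The function names (`confusion_counts`, `classification_metrics`) and the toy labels are assumptions made for this example; they are not taken from the paper's implementation or dataset.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN, TN for a binary problem (hypothetical helper)."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive and actual != positive:
            fp += 1
        elif predicted != positive and actual == positive:
            fn += 1
        else:
            tn += 1
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn}


def classification_metrics(counts):
    """Derive accuracy, precision, recall and F1 from confusion counts."""
    tp, fp, fn, tn = counts["tp"], counts["fp"], counts["fn"], counts["tn"]
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy labels (made up for illustration): 1 = flood, 0 = no flood.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]

counts = confusion_counts(y_true, y_pred)
metrics = classification_metrics(counts)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In practice the same values could be obtained from a library such as scikit-learn; the hand-written version is shown only to make the arithmetic explicit.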

Fig. 1. Quantile-quantile (QQ) plots

Fig. 2. SHAP values

Fig. 3. Correlation matrix

B. Comparison table for the various machine learning models

The table shows the experimental results of various machine learning models.


Table 1. Comparison of various models

| Models | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Logistic Regression | 0.9987 | 0.95 | 0.98 | 0.96 |
| Random Forest Classifier | 0.8937 | 0.93 | 0.88 | 0.91 |
| ExtraTree classifier | 0.9115 | 0.9 | 0.81 | 0.85 |
| XGB Classifier | 0.9287 | 0.92 | 0.95 | 0.93 |
| LGBM Classifier | 0.9167 | 0.89 | 0.91 | 0.92 |
| CatBoost Classifier | 0.9748 | 0.91 | 0.94 | 0.92 |

Table 1 displays the models' performance in terms of accuracy, precision, recall, and F1 score. Logistic Regression has an accuracy of 0.9987, which is 0.0239 higher than the CatBoost classifier, 0.082 higher than the LGBM classifier, 0.07 higher than the XGB classifier, 0.0872 higher than the ExtraTree classifier, and 0.105 higher than the Random Forest classifier. The precision of Logistic Regression is 0.95, which is 0.02 higher than Random Forest; its recall is 0.98, which is 0.03 higher than the XGB classifier; and its F1-score is 0.96, which is 0.03 higher than the XGB classifier. Fig. 3 shows a graphical comparison of the various supervised learning algorithms.

[Fig. 3 chart: Accuracy, Precision, Recall, and F1-Score of each model; x-axis: Models, y-axis: Performance (0.8 to 1)]

Fig. 3 clearly shows that Logistic Regression outperforms the Random Forest, ExtraTree, XGB, LGBM, and CatBoost classifiers.

V. CONCLUSION

Python is used to implement this model, and the scikit-learn ("sklearn") module is used along with many other utilities that provide flexible tools for model fitting, preprocessing, model selection, and model assessment. After feature selection and scaling, the training dataset is fed to the Logistic Regression, Random Forest, ExtraTree, XGB, LGBM, and CatBoost classifiers, and the performance of each classifier is evaluated. From these observations, it is noted that logistic regression outperforms the other algorithms.

REFERENCES

[1] I. H. Sarker, M. H. Furhad, and R. Nowrozy, "AI-Driven cybersecurity: an overview, security intelligence modeling and research directions," SN Comput. Sci., vol. 2, no. 173, 2021. https://doi.org/10.1007/s42979-021-00557-0

[2] Mohssen Mohammed, Muhammad Badruddin Khan, Eihab Bashier, and Mohammed Bashier, "Machine learning: algorithms and applications," CRC Press, 2016. https://doi.org/10.1201/9781315371658

[3] M. Botvinick, S. Ritter, J. X. Wang, Z. Kurth-Nelson, C. Blundell, and D. Hassabis, "Reinforcement Learning, Fast and Slow," Trends Cogn. Sci., vol. 23, no. 5, pp. 408–422, 2019.

[4] O. A. Taiwo, "Types of Machine Learning Algorithms, New Advances in Machine Learning," Yagang Zhang (Ed.), ISBN: 978-953-307-034-6, InTech, University of Portsmouth, United Kingdom, pp. 3–31, 2010.

[5] K. Vamshi, S. K. S, B. R. Muralidhar, N. Manjunath, and P. Savitha, "A Review on Rainfall Prediction using Machine Learning and Neural Network," pp. 2763–2769, 2021.

[6] A. O. Hashi, A. A. Abdirahman, M. A. Elmi, and S. Z. Mohd, "A Real-Time Flood Detection System Based on Machine Learning Algorithms with Emphasis on Deep Learning," vol. 69, no. 5, pp. 249–256, 2021, doi: 10.14445/22315381/IJETT-V69I5P232

[7] Chang, K. Hsu, and L. Chang, "Flood Forecasting Using Machine Learning Methods," February 2019, ISBN 978-3-03897-548-9.

[8] Mosavi, P. Ozturk, and K. W. Chau, "Flood prediction using machine learning models: Literature review," Water (Switzerland), vol. 10, no. 11, pp. 1–40, 2018, doi: 10.3390/w10111536.

[9] Hosseiny, F. Nazari, V. Smith, and C. Nataraj, "A Framework for Modeling Flood Depth Using a Hybrid of Hydraulics and Machine Learning," Sci. Rep., pp. 1–14, 2020, doi: 10.1038/s41598-020-65232-5

[10] S. Tehrany, B. Pradhan, and M. N. Jebur, "Flood susceptibility mapping using a novel ensemble weights-of-evidence and support vector machine models in GIS," J. Hydrol., vol. 512, pp. 332–343, May 2014, doi: 10.1016/j.jhydrol.2014.03.008

[11] M. Yaseen and I. Ebtehaj, "Hybrid Data Intelligent Models and Applications for Water Level Prediction," no. 1, pp. 121–139, doi: 10.4018/978-1-5225-4766-2.ch006.

[12] T. Bui, T. A. Tuan, H. Klempe, B. Pradhan, and I. Revhaug, "Spatial prediction models for shallow landslide hazards: a comparative assessment of the efficacy of support vector machines, artificial neural networks, kernel logistic regression, and logistic model tree," Landslides, vol. 13, pp. 361–378, 2015

[13] Han, L. Chan, and N. Zhu, "Flood forecasting using support vector machines," J. Hydroinformatics, vol. 9, no. 4, pp. 267–276, Oct. 2007, doi: 10.2166/hydro.2007.027

[14] A. Vinothini, L. Kruthiga, and U. Monisha, "Prediction of Flash Flood using Rainfall by MLP Classifier," no. 1, pp. 425–429, 2020, doi: 10.35940/ijrte.F9880.059120.

[15] Dazzi, R. Vacondio, and P. Mignosa, "Flood Stage Forecasting Using Machine-Learning Methods: A Case Study on the Parma River (Italy)," 2021.

[16] A. Demaris, "A Tutorial in Logistic Regression," published by National Council on Family Relations, J. Marriage Fam., vol. 57, pp. 956–968, 1995.
