DataScienceLab2017_Lightning talk

- 1. Sample-based generative models for speech synthesis, Дмитро Бєлєвцов @ IBDI

- 6. Frame-based business
     1. Split the waveform into overlapping frames
     2. Extract spectral features from each frame
     3. Model the distribution of these parameters
     4. Generate parameters
     5. Convert parameters back to the waveform
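
Steps 1 and 2 of the frame-based pipeline can be sketched in a few lines of NumPy. This is a minimal illustration, not the speaker's code: the 16 kHz sample rate, the 400-sample frame, the 160-sample hop, the Hann window and the log-magnitude feature are all assumptions chosen for the example, and the function names are hypothetical.

```python
import numpy as np

def split_into_frames(waveform, frame_len=400, hop=160):
    """Step 1: cut a 1-D signal into overlapping frames.

    frame_len and hop are assumed values (25 ms / 10 ms at 16 kHz).
    """
    n_frames = 1 + (len(waveform) - frame_len) // hop
    # Row i holds samples [i * hop, i * hop + frame_len).
    idx = hop * np.arange(n_frames)[:, None] + np.arange(frame_len)[None, :]
    return waveform[idx]

def log_magnitude_spectrum(frames):
    """Step 2: one simple spectral feature per frame.

    Taking the magnitude discards the phase of each frame.
    """
    window = np.hanning(frames.shape[1])
    spectrum = np.fft.rfft(frames * window, axis=1)
    return np.log(np.abs(spectrum) + 1e-8)

sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
waveform = np.sin(2 * np.pi * 440.0 * t)  # one second of a 440 Hz tone

frames = split_into_frames(waveform)
features = log_magnitude_spectrum(frames)
print(frames.shape, features.shape)  # (98, 400) (98, 201)
```

With these assumed settings, 16000 samples per second turn into 98 feature vectors per second, which is where the much lower time resolution of frame-based models comes from.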

- 8. Frame-based business
     - Pros:
       - 100x lower time resolution
       - Phase-invariant
       - Naturally motivated
       - Highly compressed
       - Separated from pitch
     - Cons:
       - Highly compressed
       - Synthesis introduces unnaturalness

- 9. WaveNet

- 17. WaveNet
      - Deep
      - Residual
      - Convolutional
      - Sample-based
      - Probabilistic
      - Conditional
      - Generative
      - Auto-regressive
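
The slides do not spell out what "sample-based" and "probabilistic" mean in practice. In the WaveNet paper, each audio sample is mu-law companded and quantized to 256 classes, and the network outputs a softmax over those classes. The sketch below illustrates that idea with NumPy only; the function names are hypothetical and the logits in `sample_next` stand in for a network output that is not modelled here.

```python
import numpy as np

MU = 255  # 256 quantization levels, the value used in the WaveNet paper

def mu_law_encode(x, mu=MU):
    """Map floats in [-1, 1] to integer classes 0..mu."""
    compressed = np.sign(x) * np.log1p(mu * np.abs(x)) / np.log1p(mu)
    return np.floor((compressed + 1) / 2 * mu + 0.5).astype(np.int64)

def mu_law_decode(q, mu=MU):
    """Approximate inverse of mu_law_encode."""
    compressed = 2 * q.astype(np.float64) / mu - 1
    return np.sign(compressed) * np.expm1(np.abs(compressed) * np.log1p(mu)) / mu

def sample_next(logits, rng):
    """Draw one amplitude class from a softmax over the quantized levels."""
    p = np.exp(logits - logits.max())
    p /= p.sum()
    return rng.choice(len(p), p=p)

x = np.linspace(-1.0, 1.0, 1001)
codes = mu_law_encode(x)
max_error = np.max(np.abs(mu_law_decode(codes) - x))
print(codes.min(), codes.max(), max_error)
```

Treating the next sample as a class to be sampled, rather than a value to be regressed, is what makes the model both probabilistic and generative.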

- 19. How does it work? Dilated causal convolutions
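
A dilated causal convolution can be illustrated without any deep learning framework. The sketch below is a toy, single-channel version under stated assumptions: a kernel of size 2 and dilations doubling as 1, 2, 4, 8 (the pattern described in the WaveNet paper, not taken from the slides), with hypothetical function names.

```python
import numpy as np

def causal_dilated_conv(x, weights, dilation):
    """y[t] = sum_k weights[k] * x[t - k * dilation], with zeros before t = 0.

    The output at time t never looks at inputs later than t (causality).
    """
    y = np.zeros(len(x), dtype=float)
    for k, w in enumerate(weights):
        shift = k * dilation
        if shift < len(x):
            y[shift:] += w * x[:len(x) - shift]
    return y

def receptive_field(kernel_size, dilations):
    """Number of past-and-present samples that one output of the stack can see."""
    return 1 + (kernel_size - 1) * sum(dilations)

dilations = [1, 2, 4, 8]  # assumed doubling pattern
impulse = np.zeros(32)
impulse[0] = 1.0

# Push an impulse through the stack to see how far its influence spreads.
h = impulse
for d in dilations:
    h = causal_dilated_conv(h, [1.0, 1.0], d)

print(receptive_field(2, dilations))  # 16
print(np.count_nonzero(h))            # 16
```

Four layers with two weights each cover 16 samples, so the receptive field grows exponentially with depth while the parameter count grows only linearly.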

- 20. How does it work?

- 21. Trained like a CNN

- 22. Generates like an RNN (with limited memory)
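
The contrast between the two modes can be sketched with a toy model. Here a linear function of the last few samples is a hypothetical stand-in for the network, and `RECEPTIVE_FIELD = 4` is an arbitrary assumption. The sketch is deterministic for simplicity, whereas WaveNet samples each value from a predicted distribution.

```python
import numpy as np

RECEPTIVE_FIELD = 4  # hypothetical size of the "limited memory"

def predict_next(context, weights):
    """Toy stand-in for the network: linear in the last RECEPTIVE_FIELD samples."""
    return float(np.dot(weights, context))

def training_predictions(signal, weights):
    """CNN-like: the whole signal is known, so all positions are predicted in one pass."""
    n = len(signal)
    padded = np.concatenate([np.zeros(RECEPTIVE_FIELD), signal])
    # Row i holds the RECEPTIVE_FIELD samples that precede signal[i].
    idx = np.arange(n)[:, None] + np.arange(RECEPTIVE_FIELD)[None, :]
    return padded[idx] @ weights

def generate(n_samples, weights, seed_sample=1.0):
    """RNN-like: each new sample depends on earlier outputs, so the loop is sequential."""
    out = [0.0] * (RECEPTIVE_FIELD - 1) + [seed_sample]
    for _ in range(n_samples):
        context = np.array(out[-RECEPTIVE_FIELD:])  # nothing older is visible
        out.append(predict_next(context, weights))
    return np.array(out[RECEPTIVE_FIELD:])

weights = np.array([0.1, -0.2, 0.3, 0.5])
generated = generate(10, weights)

# Feeding the finished sequence back through the parallel pass
# reproduces the values that were generated one at a time.
full = np.concatenate([[1.0], generated])
parallel = training_predictions(full, weights)
print(np.allclose(parallel[1:], generated))  # True
```

Both functions compute the same predictions. Training can evaluate all time steps at once because the targets are known, while generation has to wait for each sample before computing the next.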

- 24. So how is it?
      - Pros:
        - Direct waveform generation
        - State-of-the-art timbre quality
        - CNN-like training
      - Cons:
        - Slow generation (40x slower than realtime on commodity CPU) *
        - Sensitive to local conditioning
        - Large memory footprint
        - Hard to interpret
        - Missing details

- 26. SampleRNN

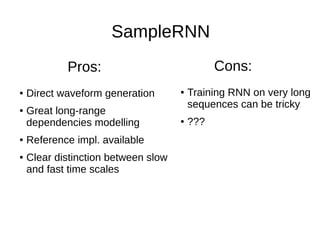

- 29. SampleRNN
      - Pros:
        - Direct waveform generation
        - Great long-range dependency modelling
        - Reference implementation available
        - Clear distinction between slow and fast time scales
      - Cons:
        - Training an RNN on very long sequences can be tricky
        - ???

- 30. Papers to check out
      - WaveNet: A Generative Model for Raw Audio (Oord et al. 2016)
      - Fast Wavenet Generation Algorithm (Paine et al. 2016)
      - Deep Voice: Real-time Neural Text-to-Speech (Arik et al. 2017)
      - A Neural Parametric Singing Synthesizer (Blaauw et al. 2017)
      - SampleRNN: An Unconditional End-To-End Neural Audio Generation Model (Mehri et al. 2017)
      - Char2wav: End-To-End Speech Synthesis (Sotelo et al. 2017)

- 31. Thanks!