Credit Card Fraud Detection - Anomaly Detection

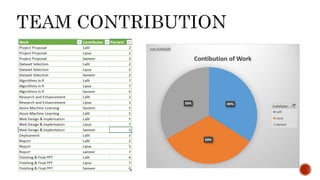

- 1. Team 7 Lalit Jain Lipsa Panda Sameer Goel

- 2. Data Points that deviates from what is standard ,normal or expected or do not conform an expected pattern.This seems easy, why even worry about it?

- 3. The answer is yes if the following three conditions are met. 1. You have labeled training data 2. Anomalous and normal classes are balanced ( say at least 1:5) 3. Data is not auto correlated. ( That one data point does not depend on earlier data points. This often breaks in time series data).

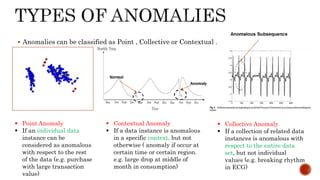

- 4. Anomalies can be classified as Point , Collective or Contextual . Point Anomaly If an individual data instance can be considered as anomalous with respect to the rest of the data (e.g. purchase with large transaction value) Collective Anomaly If a collection of related data instances is anomalous with respect to the entire data set, but not individual values (e.g. breaking rhythm in ECG) Contextual Anomaly If a data instance is anomalous in a specific context, but not otherwise ( anomaly if occur at certain time or certain region. e.g. large drop at middle of month in consumption)

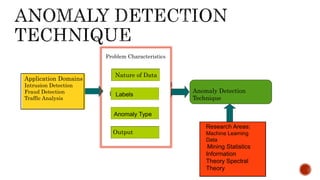

- 5. Application Domains Intrusion Detection Fraud Detection Traffic Analysis Labels Anomaly Type Nature of Data Output Problem Characteristics Anomaly Detection Technique Research Areas: Machine Learning Data Mining Statistics Information Theory Spectral Theory

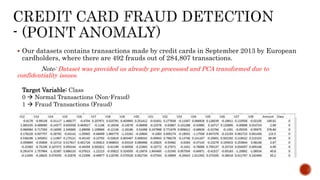

- 6. Our datasets contains transactions made by credit cards in September 2013 by European cardholders, where there are 492 frauds out of 284,807 transactions. Note: Dataset was provided us already pre processed and PCA transformed due to confidentiality issues. Target Variable: Class 0 Normal Transactions (Non-Fraud) 1 Fraud Transactions (Fraud)

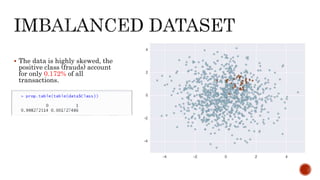

- 7. The data is highly skewed, the positive class (frauds) account for only 0.172% of all transactions.

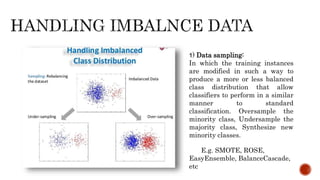

- 8. 1) Data sampling: In which the training instances are modified in such a way to produce a more or less balanced class distribution that allow classifiers to perform in a similar manner to standard classification. Oversample the minority class, Undersample the majority class, Synthesize new minority classes. E.g. SMOTE, ROSE, EasyEnsemble, BalanceCascade, etc

- 9. 2) Algorithmic modification: This procedure is oriented towards the adaptation of base learning methods to be more attuned to class imbalance issues 3) Cost-sensitive learning: This type of solutions incorporate approaches at the data level, at the algorithmic level, or at both levels combined, considering higher costs for the misclassification of examples of the positive class with respect to the negative class, and therefore, trying to minimize higher cost errors E.g. CostSensitiveClassifier.

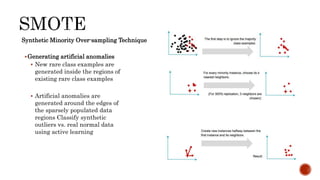

- 10. Generating artificial anomalies New rare class examples are generated inside the regions of existing rare class examples Artificial anomalies are generated around the edges of the sparsely populated data regions Classify synthetic outliers vs. real normal data using active learning Synthetic Minority Over-sampling Technique

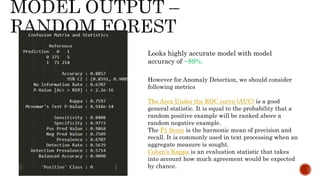

- 12. Looks highly accurate model with model accuracy of ~89%. However for Anomaly Detection, we should consider following metrics The Area Under the ROC curve (AUC) is a good general statistic. It is equal to the probability that a random positive example will be ranked above a random negative example. The F1 Score is the harmonic mean of precision and recall. It is commonly used in text processing when an aggregate measure is sought. Cohen’s Kappa is an evaluation statistic that takes into account how much agreement would be expected by chance.

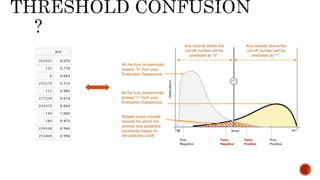

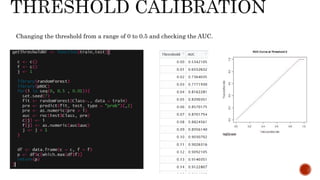

- 14. Changing the threshold from a range of 0 to 0.5 and checking the AUC.

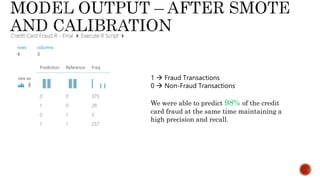

- 15. 1 Fraud Transactions 0 Non-Fraud Transactions We were able to predict 98% of the credit card fraud at the same time maintaining a high precision and recall.

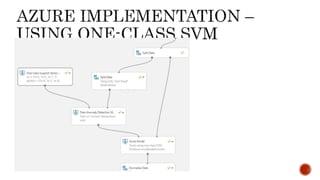

- 17. One Class SVM is an Azure implementation for Anomaly detection This module is used when it is easy to obtain training data from one class, such as acceptable transactions, but difficult to obtain sufficient samples of the targeted anomalies. For example, if you need to detect fraudulent transaction, you might not have enough examples of fraud to train the model, but have many examples of good transactions. Source: https://msdn.microsoft.com/en-us/library/azure/dn913103.aspx

- 19. Demo 1. Live Credit Card Fraud Detection – (RF-SMOTE) 2. Single Transaction – (One Class SVM) 3. Batch Execution

- 20. 1. (G.E.A.P.A. Batista, R.C. Prati, M.C. Monard, A study of the behaviour of several methods for balancing machine learning training data, SIGKDD Explorations 6 (1) (2004) 20–29. doi: 10.1145/1007730.1007735, N.V. Chawla, K.W. Bowyer, L.O. Hall, W.P. Kegelmeyer, SMOTE: synthetic minority over-sampling technique, Journal of Artificial Intelligent Research 16 (2002) 321–357. doi: 10.1613/jair.953). 2. (B. Zadrozny, C. Elkan, Learning and making decisions when costs and probabilities are both unknown, in: Proceedings of the 7th International Conference on Knowledge Discovery and Data Mining (KDD’01), 2001, pp. 204–213.). 3. (P. Domingos, Metacost: a general method for making classifiers cost–sensitive, in: Proceedings of the 5th International Conference on Knowledge Discovery and Data Mining (KDD’99), 1999, pp. 155–164., B. Zadrozny, J. Langford, N. Abe, Cost–sensitive learning by cost–proportionate example weighting, in: Proceedings of the 3rd IEEE International Conference on Data Mining (ICDM’03), 2003, pp. 435–442.) 4. https://www.analyticsvidhya.com/blog/2016/03/practical-guide-deal-imbalanced- classification-problems/