Glint with Apache Spark

- 1. June 13

- 4. Big Data -- Digital Data growth…

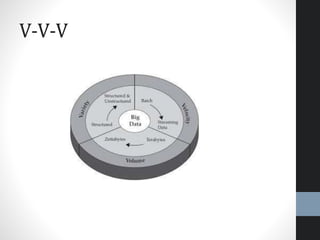

- 5. V-V-V

- 6. Use Cases

- 9. Legacy Architecture Pain Points • Report arrival latency quite high - Hours to perform joins, aggregate data • Existing frameworks cannot do both • Either, stream processing of 100s of MB/s with low latency • Or, batch processing of TBs of data with high latency • Expressibility of business logic in Hadoop MR is challenging • Lack of interactive SQL

- 10. SPARK OVERVIEW

- 11. Why Spark Separate, fast, Map-Reduce-like engine In-memory data storage for very fast iterative queries Better Fault Tolerance Combine SQL, Streaming and complex analytics Runs on Hadoop, Mesos, standalone, or in the cloud Data sources -> HDFS, Cassandra, HBase and S3

- 12. Consumed Apps…

- 13. In Memory - Spark vs Hadoop Improve efficiency over MapReduce 100x in memory , 2-10x in disk Up to 40x faster than Hadoop

- 14. Spark Stack

- 15. Spark Eco System RDBMS Streaming SQL GraphX BlinkDB Hadoop Input Format Apps Distributions: - CDH - HDP - MapR - DSE Tachyon MLlib

- 16. Benchmarking

- 17. RESILIENT DISTRIBUTED DATA (RDD)

- 18. Resilient Distributed Data (RDD) Immutable + Distributed+ Catchable+ Lazy evaluated Distributed collections of objects Can be cached in memory across cluster nodes Manipulated through various parallel operations

- 19. QUICK DEMO

- 20. RDD Types RDD

- 21. RDD Operation

- 24. Task Scheduler , DAG • Pipelines functions within a stage • Cache-aware data reuse & locality • Partitioning-aware to avoid shuffles rdd1.map(splitlines).filter("ERROR") rdd2.map(splitlines).groupBy(key) rdd2.join(rdd1, key).take(10)

- 25. Fault Recovery & Checkpoints • Efficient fault recovery using Lineage • log one operation to apply to many elements (lineage) • Recomputed lost partitions on failure • Checkpoint RDDs to prevent long lineage chains during fault recovery

- 26. SPARK CLUSTER

- 27. Cluster Support • Standalone – a simple cluster manager included with Spark that makes it easy to set up a cluster • Apache Mesos – a general cluster manager that can also run Hadoop MapReduce and service applications • Hadoop YARN – the resource manager in Hadoop 2

- 28. Spark Cluster Overview o Application o Driver program o Cluster manage o Worker node o Executor o Task o Job o Stage

- 29. Spark On Mesos

- 30. Spark on YARN

- 31. Job Flow

- 33. Spark SQL • Seamlessly mix SQL queries with Spark programs • Load and query data from a variety of sources • Standard Connectivity through (J)ODBC • Hive Compatibility

- 34. Streaming • Scalable high-throughput streaming process of live data • Integrate with many sources • Fault-tolerant- Stateful exactly-once semantics out of box • Combine streaming with batch and interactive queries

- 35. MLib • Scalable Machine learning library • Iterative computing -> High Quality algorithm 100x faster than hadoop • Algorithms (Mlib 1.3): • linear SVM and logistic regression • classification and regression tree • random forest and gradient-boosted trees • recommendation via alternating least squares • clustering via k-means, Gaussian mixtures, • power iteration clustering • topic modeling via latent Dirichlet allocation • singular value decomposition • linear regression with L1- and L2-regularization • isotonic regression • multinomial naive Bayes • frequent itemset mining via FP-growth • basic statistics • feature transformations

- 36. GraphX- Unifying Graphs and Tables • Spark’s API For Graph and Graph-parallel computation • Graph abstraction: a directed multigraph with properties attached to each vertex and edge • Seamlessly works with both graph and collections

- 37. SPARK STREAMING

- 38. Spark Streaming

- 39. Batches… • Chop up the live stream into batches of X seconds • Spark treats each batch of data as RDDs and processes them using RDD operations • Finally, the processed results of the RDD operations are returned in batches

- 40. Dstream (Discretized Streams) DStream is represented by a continuous series of RDDs

- 41. Micro Batch (Near Real Time) Micro Batch

- 42. Window Operation & Checkpoint

- 44. Spark Streaming + SQL Streaming SQL

- 45. Quick Run Spark UI

- 46. Spark with Storm

- 47. Quick Recap • Why Spark? Spark Features? • What is RDD? • Fault – tolerance model • Spark Extensions/Stack? • Micro- Batch?

- 48. Clients…

- 49. Thank You….

- 51. BDAS - Berkeley Data Analytics Stack https://amplab.cs.berkeley.edu/software/ BDAS, the Berkeley Data Analytics Stack, is an open source software stack that integrates software components being built by the AMPLab to make sense of Big Data.

- 52. Optimization • groupBy is costlier – use mapr() or reduceByKey() • RDD storage level MEMOR_ONLY is better

- 54. RDDs vs Distributed Shared Mem

- 55. SQL Optimization

Editor's Notes

- http://www.meetup.com/devops-bangalore/events/222155834/ http://www.meetup.com/lspe-in/events/212250542/

- http://www.business-software.com/wp-content/uploads/2014/09/Spark-Storm.jpg

- https://spark-summit.org/2013/wp-content/uploads/2013/10/Tully-SparkSummit4.pdf

- Spark Summit2015-sample sldies

- http://opensource.com/business/15/1/apache-spark-new-world-record

- Transformations (eg: map, filter, group by) : Create a new dataset from an existing one Actions ( eg: count, collect, save) : Return a value to the driver program after running a computation on the dataset

- Chop up the live stream into batches of X seconds. Spark treats each batch of data as RDDs and processes them using RDD operations. Finally, the processed results of the RDD operations are returned in batches Spark Streaming brings Spark's language-integrated API to stream processing, letting you write streaming applications the same way you write batch jobs. It supports both Java and Scala. Spark Streaming lets you reuse the same code for batch processing, join streams against historical data, or run ad-hoc queries on stream state Spark Streaming can read data from HDFS, Flume, Kafka, Twitter and ZeroMQ. Since Spark Streaming is built on top of Spark, users can apply Spark's in-built machine learning algorithms (MLlib), and graph processing algorithms (GraphX) on data streams

- Chop up the live stream into batches of X seconds. Spark treats each batch of data as RDDs and processes them using RDD operations. Finally, the processed results of the RDD operations are returned in batches

- https://spark.apache.org/docs/latest/streaming-programming-guide.html

- https://www.sigmoid.com/fault-tolerant-streaming-workflows/

- http://www.aerospike.com/blog/what-the-spark-introduction/