AlexNet and so on...

- 2. AlexNet Contribution • ReLU Function • Momentum • Local Response Normalization • Data Augmentation • Dropout • Overlapping Pooling • Training on GPU. Source: https://galoismilk.org/2017/12/31/cnn-%EC%95%84%ED%82%A4%ED%85%8D%EC%B3%90-%EB%A6%AC%EB%B7%B0-alexnet/

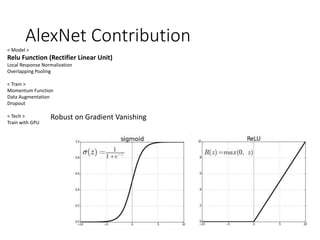

- 3. AlexNet Contribution. < Model > ReLU Function (Rectified Linear Unit), Local Response Normalization, Overlapping Pooling. < Train > Momentum, Data Augmentation, Dropout. < Tech > Training on GPU. ReLU is robust against vanishing gradients.

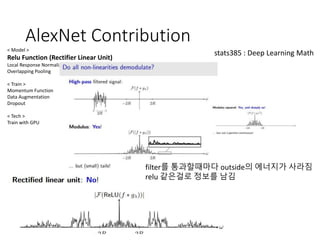
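To make the vanishing-gradient point concrete, here is a minimal sketch in plain Python. It multiplies the local derivatives along a chain of layers and ignores the weights entirely; the depth of 10 and the pre-activation value 0.5 are assumptions chosen only for illustration.

```python
import math

def sigmoid_grad(x):
    """Derivative of the logistic sigmoid at x (never larger than 0.25)."""
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)

def relu_grad(x):
    """Derivative of ReLU at x (taken as 0 at x == 0)."""
    return 1.0 if x > 0 else 0.0

def chained_grad(grad_fn, pre_activations):
    """Product of the local derivatives along a chain of layers (weights ignored)."""
    g = 1.0
    for x in pre_activations:
        g *= grad_fn(x)
    return g

# Hypothetical pre-activation values for a 10-layer chain.
pre_acts = [0.5] * 10
sigmoid_chain = chained_grad(sigmoid_grad, pre_acts)  # shrinks geometrically
relu_chain = chained_grad(relu_grad, pre_acts)        # stays at 1 on active units
print(sigmoid_chain, relu_chain)
```

The sigmoid factor is at most 0.25 per layer, so the product collapses with depth, while ReLU passes the gradient through unchanged wherever the unit is active.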

- 4. AlexNet Contribution: ReLU Function (Rectified Linear Unit). Each time the signal passes through a filter, the energy outside it is lost; a nonlinearity such as ReLU keeps that information. Source: stats385: Deep Learning Math

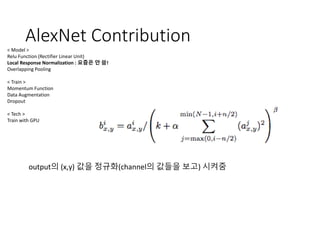

- 5. AlexNet Contribution: Local Response Normalization (rarely used these days!). It normalizes the output value at each position (x, y) by looking at the values across channels at that position.

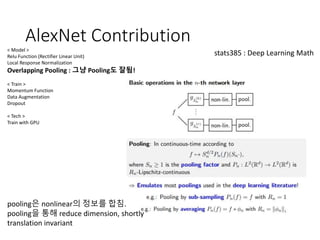
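A minimal sketch of local response normalization in plain Python, written for readability rather than speed. The defaults n = 5, k = 2, alpha = 1e-4, beta = 0.75 are the values reported in the AlexNet paper; the nested-list layout `a[channel][y][x]` and the tiny input are assumptions for illustration.

```python
def local_response_norm(a, n=5, k=2.0, alpha=1e-4, beta=0.75):
    """Divide a[c][y][x] by (k + alpha * sum of squares over nearby channels) ** beta."""
    num_channels = len(a)
    height, width = len(a[0]), len(a[0][0])
    half = n // 2
    out = [[[0.0] * width for _ in range(height)] for _ in range(num_channels)]
    for c in range(num_channels):
        # Neighbouring channels, clipped at the first and last channel.
        lo = max(0, c - half)
        hi = min(num_channels - 1, c + half)
        for y in range(height):
            for x in range(width):
                sq_sum = sum(a[j][y][x] ** 2 for j in range(lo, hi + 1))
                out[c][y][x] = a[c][y][x] / (k + alpha * sq_sum) ** beta
    return out

# Hypothetical feature map: 3 channels, height 1, width 2.
acts = [[[1.0, 0.0]], [[2.0, 0.0]], [[3.0, 0.0]]]
normed = local_response_norm(acts)
```

Only the channel axis is summed over; the spatial position (x, y) stays fixed, which matches the description on the slide.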

- 6. AlexNet Contribution: Overlapping Pooling (plain pooling works well too!). Comparison: Pooling vs. Overlapping Pooling.

- 7. AlexNet Contribution: Overlapping Pooling (plain pooling works well too!). Pooling aggregates the outputs of the nonlinearity. It reduces dimensionality and gives invariance to small translations. Source: stats385: Deep Learning Math

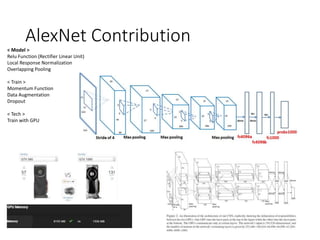
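The difference between plain and overlapping pooling comes down to window size versus stride. The sketch below is a plain-Python max pool without padding; the 5x5 input is hypothetical, and the overlapping setting (size 3, stride 2) is the one reported in the AlexNet paper.

```python
def max_pool_2d(img, size, stride):
    """Max pooling over a 2D list with a square window and no padding."""
    height, width = len(img), len(img[0])
    out = []
    for top in range(0, height - size + 1, stride):
        row = []
        for left in range(0, width - size + 1, stride):
            window = [img[top + dy][left + dx]
                      for dy in range(size) for dx in range(size)]
            row.append(max(window))
        out.append(row)
    return out

# Hypothetical 5x5 feature map holding the values 0..24.
fmap = [[r * 5 + c for c in range(5)] for r in range(5)]
plain = max_pool_2d(fmap, size=2, stride=2)        # size == stride: no overlap
overlapping = max_pool_2d(fmap, size=3, stride=2)  # size > stride: windows overlap
```

Both calls shrink the 5x5 map to 2x2, so the dimensionality reduction is the same; only the amount of input each output sees differs.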

- 8. AlexNet Contribution. < Model > ReLU Function (Rectified Linear Unit), Local Response Normalization, Overlapping Pooling. < Train > Momentum, Data Augmentation, Dropout. < Tech > Training on GPU.

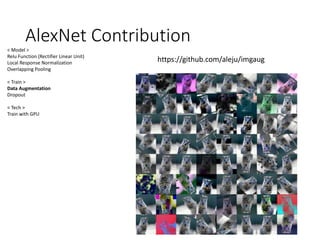
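Momentum is listed in the outline under < Train >. As a rough sketch, the update below follows the rule given in the AlexNet paper (momentum 0.9, weight decay 0.0005, initial learning rate 0.01), applied to a single scalar weight. The quadratic objective and the 200 iterations are assumptions for illustration only.

```python
def momentum_step(w, v, grad, lr=0.01, momentum=0.9, weight_decay=0.0005):
    """One SGD-with-momentum update for a single scalar weight.

    v_next = momentum * v - weight_decay * lr * w - lr * grad
    w_next = w + v_next
    """
    v_next = momentum * v - weight_decay * lr * w - lr * grad
    return w + v_next, v_next

# Hypothetical objective f(w) = w**2 with gradient 2*w, starting from w = 1.
w, v = 1.0, 0.0
for _ in range(200):
    w, v = momentum_step(w, v, grad=2.0 * w)
```

The velocity `v` accumulates past gradients, so consistent directions are reinforced while oscillating ones partly cancel.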

- 10. AlexNet Contribution: Data Augmentation. Example library: https://github.com/aleju/imgaug

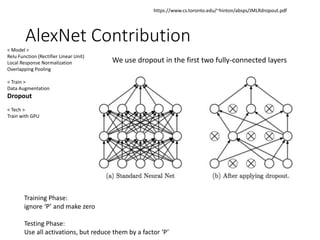

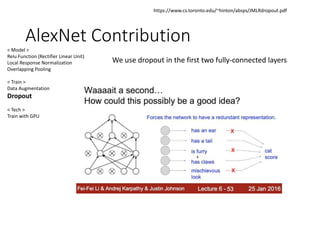
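A minimal sketch of two common augmentations, random cropping and horizontal flipping, using only the Python standard library rather than any particular augmentation package. The AlexNet paper describes taking 224x224 patches, and their horizontal reflections, from 256x256 images; the 6x6 single-channel input and the helper name `random_crop_and_flip` here are hypothetical.

```python
import random

def random_crop_and_flip(img, crop, rng):
    """Take a random crop x crop patch from a 2D image; flip it left-right half the time."""
    height, width = len(img), len(img[0])
    top = rng.randint(0, height - crop)   # randint is inclusive on both ends
    left = rng.randint(0, width - crop)
    patch = [row[left:left + crop] for row in img[top:top + crop]]
    if rng.random() < 0.5:
        patch = [row[::-1] for row in patch]
    return patch

# Hypothetical 6x6 single-channel "image" holding the values 0..35.
image = [[r * 6 + c for c in range(6)] for r in range(6)]
rng = random.Random(0)  # seeded so the sketch is repeatable
patch = random_crop_and_flip(image, crop=4, rng=rng)
```

Calling the function again with the same `rng` yields a different patch, which is how one image turns into many slightly different training examples.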

- 11. AlexNet Contribution: Dropout. "We use dropout in the first two fully-connected layers." https://www.cs.toronto.edu/~hinton/absps/JMLRdropout.pdf Training phase: set each activation to zero with probability p. Testing phase: use all activations, but scale them by 1 - p (AlexNet uses p = 0.5, so outputs are multiplied by 0.5).
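The two dropout phases can be sketched in a few lines of plain Python. Here `p_drop` is the probability of zeroing a unit; with the value 0.5 used in AlexNet, the test-time scale 1 - p_drop is also 0.5. The function names and the four sample activations are hypothetical.

```python
import random

def dropout_train(activations, p_drop, rng):
    """Training: zero each activation independently with probability p_drop."""
    return [0.0 if rng.random() < p_drop else a for a in activations]

def dropout_test(activations, p_drop):
    """Testing: keep every activation, scaled by the keep probability 1 - p_drop."""
    return [a * (1.0 - p_drop) for a in activations]

acts = [1.0, 2.0, 3.0, 4.0]  # hypothetical layer outputs
rng = random.Random(0)       # seeded so the sketch is repeatable
train_out = dropout_train(acts, p_drop=0.5, rng=rng)
test_out = dropout_test(acts, p_drop=0.5)
```

Scaling at test time keeps the expected activation the same as during training, where each unit is present only a fraction 1 - p_drop of the time.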

- 12. AlexNet Contribution: Dropout. "We use dropout in the first two fully-connected layers." https://www.cs.toronto.edu/~hinton/absps/JMLRdropout.pdf

- 13. AlexNet Result