Algorithmic Music Recommendations at Spotify

- 1. Algorithmic Music Discovery at Spotify Chris Johnson @MrChrisJohnson January 13, 2014 Monday, January 13, 14

- 2. Who am I?
  - Chris Johnson
    - Machine learning guy from NYC
    - Focused on music recommendations
    - Formerly a graduate student at UT Austin

- 3. What is Spotify?
  - On-demand music streaming service
  - “iTunes in the cloud”

- 5. Data at Spotify
  - 20 million songs
  - 24 million active users
  - 6 million paying users
  - 8 million daily active users
  - 1 TB of compressed data generated from users per day
  - 700-node Hadoop cluster
  - 1 million years' worth of music streamed
  - 1 billion user-generated playlists

- 6. Challenge: 20 million songs... how do we recommend music to users?

- 7. Recommendation Features
  - Discover (personalized recommendations)
  - Radio
  - Related Artists
  - Now Playing

- 8. How can we find good recommendations?
  - Manual curation
  - Manually tag attributes
  - Audio content, metadata, text analysis
  - Collaborative filtering

- 9. Collaborative Filtering: “The Netflix Prize”

- 10. Collaborative Filtering
  - “Hey, I like tracks P, Q, R, S!”
  - “Well, I like tracks Q, R, S, T!”
  - “Then you should check out track P!”
  - “Nice! Btw try track T!”
  - Image via Erik Bernhardsson
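The exchange on this slide can be sketched in a few lines of Python. This is a toy illustration only, not Spotify's method: the user names `alice` and `bob` and the overlap-count scoring are assumptions made for the example.

```python
def recommend(likes, user):
    """Suggest tracks liked by other users, weighted by how many
    tracks each of those users shares with `user`."""
    mine = likes[user]
    scores = {}
    for other, theirs in likes.items():
        if other == user:
            continue
        overlap = len(mine & theirs)          # shared taste with this user
        for track in theirs - mine:           # tracks `user` has not liked yet
            scores[track] = scores.get(track, 0) + overlap
    # Highest score first; ties broken alphabetically for determinism.
    return sorted(scores, key=lambda t: (-scores[t], t))


# Hypothetical listeners matching the dialogue on the slide.
likes = {
    "alice": {"P", "Q", "R", "S"},
    "bob": {"Q", "R", "S", "T"},
}
print(recommend(likes, "bob"))    # ['P']
print(recommend(likes, "alice"))  # ['T']
```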

- 12. Difference between movie and music recs
  - Scale of catalog: 60,000 movies vs. 20,000,000 songs

- 13. Difference between movie and music recs
  - Repeated consumption

- 14. Difference between movie and music recs
  - Music is more niche

- 15. “The Netflix Problem” vs. “The Spotify Problem”
  - Netflix: users explicitly “rate” movies
  - Spotify: feedback is implicit through streaming behavior

- 17. Explicit Matrix Factorization
  - Users explicitly rate a subset of the movie catalog
  - Goal: predict how users will rate new movies
  - Figure: a users-by-movies ratings matrix, with Chris as an example user and Inception as an example movie

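- 18. Explicit Matrix Factorization
  - Approximate the ratings matrix by the product of low-dimensional user and movie matrices, $X$ and $Y$
  - Minimize RMSE (root mean squared error)
  - Figure: a sparse users-by-movies ratings matrix (ratings 1 to 5, unknown entries shown as ?; example user Chris, example movie Inception) approximated by the product of $X$ and $Y$

  $$\min_{x, y} \sum_{u, i} \left( r_{ui} - x_u^T y_i - \beta_u - \beta_i \right)^2 + \lambda \left( \sum_u \lVert x_u \rVert^2 + \sum_i \lVert y_i \rVert^2 \right)$$

  - $r_{ui}$ = user $u$'s rating for movie $i$ (the sum runs over observed ratings)
  - $x_u$ = user $u$'s latent factor vector
  - $y_i$ = item $i$'s latent factor vector
  - $\beta_u$ = bias for user $u$
  - $\beta_i$ = bias for item $i$
  - $\lambda$ = regularization parameter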
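As a rough illustration of the explicit objective this slide describes (squared error on observed ratings, with user and item biases and L2 regularization), here is a minimal NumPy sketch. The function name `explicit_mf_loss` and the `(user, item, rating)` triple format are assumptions for the example, not taken from the talk.

```python
import numpy as np


def explicit_mf_loss(ratings, X, Y, bu, bi, lam):
    """Regularized squared error over observed ratings only.

    ratings: iterable of (user, item, rating) triples (observed entries)
    X, Y:    user and item latent factor matrices, one row per user/item
    bu, bi:  user and item bias vectors
    lam:     regularization parameter
    """
    err = 0.0
    for u, i, r in ratings:
        pred = X[u] @ Y[i] + bu[u] + bi[i]   # predicted rating
        err += (r - pred) ** 2
    reg = lam * ((X ** 2).sum() + (Y ** 2).sum())
    return float(err + reg)


# Tiny made-up example: 2 users, 2 movies, 2 latent factors.
X = np.zeros((2, 2))
Y = np.zeros((2, 2))
bu = np.zeros(2)
bi = np.zeros(2)
print(explicit_mf_loss([(0, 0, 3.0), (1, 1, 4.0)], X, Y, bu, bi, lam=0.1))
```

Unobserved entries never enter the sum, which is the key difference from the implicit formulation on the next slide.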

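- 19. Implicit Matrix Factorization
  - Replace stream counts with binary labels
    - 1 = streamed, 0 = never streamed
  - Minimize weighted RMSE (root mean squared error) using a function of stream counts as weights
  - Figure: a binary users-by-tracks matrix (rows such as 10001001, 00100100, ...) approximated by the product of $X$ and $Y$

  $$\min_{x, y} \sum_{u, i} c_{ui} \left( p_{ui} - x_u^T y_i - \beta_u - \beta_i \right)^2 + \lambda \left( \sum_u \lVert x_u \rVert^2 + \sum_i \lVert y_i \rVert^2 \right)$$

  - $p_{ui}$ = 1 if user $u$ streamed track $i$, else 0
  - $c_{ui}$ = weight for the pair, a function of user $u$'s stream count for track $i$
  - $x_u$ = user $u$'s latent factor vector
  - $y_i$ = item $i$'s latent factor vector
  - $\beta_u$ = bias for user $u$
  - $\beta_i$ = bias for item $i$
  - $\lambda$ = regularization parameter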
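A minimal NumPy sketch of the implicit setup on this slide: stream counts become binary labels, and a function of the counts weights each squared error. The slide does not say which weighting function is used; the form `1 + alpha * count` below is an assumption borrowed from the common implicit-feedback formulation, and the function names are hypothetical.

```python
import numpy as np


def implicit_targets(counts, alpha=40.0):
    """Turn a dense users-by-tracks stream-count matrix into
    binary labels P and weights C (assumed form: 1 + alpha * count)."""
    P = (counts > 0).astype(float)   # 1 = streamed, 0 = never streamed
    C = 1.0 + alpha * counts         # more streams -> more weight
    return P, C


def implicit_mf_loss(counts, X, Y, bu, bi, lam, alpha=40.0):
    """Weighted squared error over ALL user-track pairs plus L2 penalty."""
    P, C = implicit_targets(counts, alpha)
    pred = X @ Y.T + bu[:, None] + bi[None, :]
    reg = lam * ((X ** 2).sum() + (Y ** 2).sum())
    return float((C * (P - pred) ** 2).sum() + reg)


counts = np.array([[2.0, 0.0],
                   [0.0, 1.0]])
X = np.zeros((2, 2))
Y = np.zeros((2, 2))
print(implicit_mf_loss(counts, X, Y, np.zeros(2), np.zeros(2), lam=0.0, alpha=1.0))
```

Unlike the explicit case, every user-track pair contributes to the loss, so a dense implementation like this one only works for toy data.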

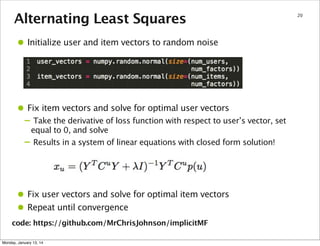

- 20. Alternating Least Squares
  - Initialize user and item vectors to random noise
  - Fix item vectors and solve for optimal user vectors
    - Take the derivative of the loss function with respect to the user's vector, set it equal to 0, and solve
    - Results in a system of linear equations with a closed-form solution!
  - Fix user vectors and solve for optimal item vectors
  - Repeat until convergence
  - code: https://github.com/MrChrisJohnson/implicitMF
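The "take the derivative, set it to 0, and solve" step can be written out as follows. This is a sketch under simplifying assumptions: bias terms are dropped, $Y$ denotes the matrix whose rows are the item vectors $y_i$, $C^u$ is the diagonal matrix of user $u$'s weights $c_{ui}$, and $p(u)$ is the vector of that user's binary labels $p_{ui}$. The notation is chosen here for illustration.

$$
\begin{aligned}
L(x_u) &= \sum_i c_{ui} \left( p_{ui} - x_u^T y_i \right)^2 + \lambda \lVert x_u \rVert^2 \\
\frac{\partial L}{\partial x_u} &= -2 \sum_i c_{ui} \left( p_{ui} - x_u^T y_i \right) y_i + 2 \lambda x_u = 0 \\
\left( Y^T C^u Y + \lambda I \right) x_u &= Y^T C^u p(u) \\
x_u &= \left( Y^T C^u Y + \lambda I \right)^{-1} Y^T C^u p(u)
\end{aligned}
$$

The item update has the same form with the roles of users and items swapped.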

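- 21. Alternating Least Squares
  - Note that: $Y^T C^u Y = Y^T Y + Y^T (C^u - I) Y$, where $Y$ stacks the item vectors and $C^u$ is the diagonal matrix of user $u$'s weights
  - Then, we can pre-compute $Y^T Y$ once per iteration
    - $C^u - I$ and $p(u)$ only contain non-zero elements for tracks that the user streamed
    - Using sparse matrix operations we can then compute each user's vector efficiently in $O(f^2 n_u + f^3)$ time, where $n_u$ is the number of tracks the user streamed and $f$ is the number of latent factors
  - code: https://github.com/MrChrisJohnson/implicitMF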

- 22. Alternating Least Squares
  - code: https://github.com/MrChrisJohnson/implicitMF
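The alternating updates described on the preceding slides can be sketched with NumPy as below. This is not the code from the linked repository; it is a simplified illustration that omits bias terms, assumes weights of the form `1 + alpha * count`, uses a dense count matrix, and runs a fixed number of iterations instead of testing for convergence. The names `als_step` and `implicit_als` are hypothetical.

```python
import numpy as np


def als_step(counts, fixed, lam, alpha):
    """Solve for one side's vectors while the other side is held fixed.

    counts: (n_rows, n_cols) stream counts
    fixed:  (n_cols, f) vectors held constant during this step
    """
    f = fixed.shape[1]
    YtY = fixed.T @ fixed                      # pre-computed once per step
    out = np.zeros((counts.shape[0], f))
    for u in range(counts.shape[0]):
        idx = np.nonzero(counts[u])[0]         # entries this row streamed
        Yu = fixed[idx]                        # (n_u, f)
        extra = alpha * counts[u, idx]         # diagonal of (C^u - I)
        # Y^T C^u Y + lam*I, touching only the n_u streamed entries
        A = YtY + (Yu.T * extra) @ Yu + lam * np.eye(f)
        b = Yu.T @ (1.0 + extra)               # Y^T C^u p(u)
        out[u] = np.linalg.solve(A, b)
    return out


def implicit_als(counts, f=2, lam=0.1, alpha=40.0, iters=10, seed=0):
    rng = np.random.default_rng(seed)
    X = rng.normal(scale=0.1, size=(counts.shape[0], f))  # random init
    Y = rng.normal(scale=0.1, size=(counts.shape[1], f))
    for _ in range(iters):
        X = als_step(counts, Y, lam, alpha)      # fix items, solve users
        Y = als_step(counts.T, X, lam, alpha)    # fix users, solve items
    return X, Y


counts = np.array([[5.0, 3.0, 0.0, 0.0],
                   [4.0, 0.0, 0.0, 0.0],
                   [0.0, 0.0, 2.0, 6.0]])
X, Y = implicit_als(counts)
print(X.shape, Y.shape)
```

Each row's linear system is `f` by `f`, so the per-user cost depends on the number of streamed tracks and the number of factors, not on the catalog size.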

- 23. How do we use the learned vectors?
  - User-item score is the dot product
  - Item-item similarity is the cosine similarity
  - Both operations are cheap: their cost grows linearly with the number of latent factors
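Both uses of the learned vectors fit in a couple of NumPy one-liners. The function names and example vectors below are made up for illustration.

```python
import numpy as np


def user_item_score(x_u, y_i):
    """Predicted affinity of a user for an item: the dot product."""
    return float(x_u @ y_i)


def item_item_similarity(y_i, y_j):
    """Cosine similarity between two item vectors."""
    return float(y_i @ y_j / (np.linalg.norm(y_i) * np.linalg.norm(y_j)))


user = np.array([1.0, 2.0])
track_a = np.array([3.0, 4.0])
track_b = np.array([6.0, 8.0])   # same direction as track_a
print(user_item_score(user, track_a))          # 11.0
print(item_item_similarity(track_a, track_b))
```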

- 24. Latent Factor Vectors in 2 dimensions

- 26. Scaling up Implicit Matrix Factorization with Hadoop

- 27. Hadoop at Spotify 2009

- 28. Hadoop at Spotify 2014
  - 700 nodes in our London data center

- 29. Implicit Matrix Factorization with Hadoop
  - Figure: all log entries are split into a K × L grid of blocks, where block (x, y) holds the entries with u % K = x and i % L = y. The map step processes each block alongside the matching user vectors (u % K = x) and item vectors (i % L = y); a reduce step follows.
  - Figure via Erik Bernhardsson

- 30. Implicit Matrix Factorization with Hadoop: one map task
  - Map input: tuples (u, i, count) where u % K = x and i % L = y
  - Distributed cache: all user vectors where u % K = x
  - Distributed cache: all item vectors where i % L = y
  - Mapper: emit contributions
  - Reducer: new vector!
  - Figure via Erik Bernhardsson
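To make the block layout concrete, here is an in-memory Python simulation of one user-vector update. It is a sketch, not Spotify's Hadoop job: the slides do not spell out what a "contribution" is, so the code assumes each log entry contributes its term to the per-user linear system from the ALS slides (weights `1 + alpha * count`, no biases), and that the item Gram matrix is computed in a separate pass. All function names are hypothetical, and a plain dict stands in for the shuffle and the distributed cache.

```python
from collections import defaultdict

import numpy as np


def partition(log, K, L):
    """Group (u, i, count) tuples into blocks keyed by (u % K, i % L)."""
    blocks = defaultdict(list)
    for u, i, count in log:
        blocks[(u % K, i % L)].append((u, i, count))
    return blocks


def map_block(entries, item_vecs, alpha):
    """Mapper: emit each entry's contribution to its user's system."""
    for u, i, count in entries:
        y = item_vecs[i]
        yield u, (alpha * count * np.outer(y, y), (1.0 + alpha * count) * y)


def reduce_user(contribs, YtY, lam):
    """Reducer: sum contributions and solve for the new user vector."""
    f = YtY.shape[0]
    A = YtY + lam * np.eye(f)
    b = np.zeros(f)
    for dA, db in contribs:
        A = A + dA
        b = b + db
    return np.linalg.solve(A, b)


def update_users(log, item_vecs, K, L, lam=0.1, alpha=40.0):
    Y = np.array(list(item_vecs.values()))
    YtY = Y.T @ Y                         # assumed to come from a separate pass
    grouped = defaultdict(list)           # stands in for the shuffle phase
    for entries in partition(log, K, L).values():
        for u, contrib in map_block(entries, item_vecs, alpha):
            grouped[u].append(contrib)
    return {u: reduce_user(c, YtY, lam) for u, c in grouped.items()}


item_vecs = {0: np.array([1.0, 0.0]),
             1: np.array([0.0, 1.0]),
             2: np.array([1.0, 1.0])}
log = [(0, 0, 2.0), (0, 2, 1.0), (1, 1, 3.0)]
new_users = update_users(log, item_vecs, K=2, L=2, lam=0.5, alpha=1.0)
print(sorted(new_users))   # [0, 1]
```

In a real job each mapper would only hold the user and item vectors for its own block; the simulation passes the full dictionary for brevity.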

- 31. Implicit Matrix Factorization with Spark
  - Spark vs. Hadoop: http://www.slideshare.net/Hadoop_Summit/spark-and-shark

- 33. Approximate Nearest Neighbors
  - code: https://github.com/Spotify/annoy
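To give a feel for approximate nearest-neighbor search over latent vectors, here is a toy single-tree sketch based on random hyperplane splits. It does not use or reproduce the linked library's API or internals; the function names, the leaf size, and the Euclidean distance in the leaf scan are all assumptions for illustration.

```python
import numpy as np


def build_tree(vectors, ids, rng, leaf_size=8):
    """Recursively split points by the hyperplane halfway between
    two randomly chosen points, until leaves are small."""
    if len(ids) <= leaf_size:
        return ("leaf", ids)
    a, b = vectors[rng.choice(ids, size=2, replace=False)]
    normal = a - b
    offset = normal @ (a + b) / 2.0
    side = vectors[ids] @ normal > offset
    left, right = ids[side], ids[~side]
    if len(left) == 0 or len(right) == 0:      # degenerate split: stop here
        return ("leaf", ids)
    return ("split", normal, offset,
            build_tree(vectors, left, rng, leaf_size),
            build_tree(vectors, right, rng, leaf_size))


def query(tree, vectors, q, n=3):
    """Descend to one leaf, then rank only that leaf's points by distance."""
    while tree[0] == "split":
        _, normal, offset, left, right = tree
        tree = left if q @ normal > offset else right
    ids = tree[1]
    dists = np.linalg.norm(vectors[ids] - q, axis=1)
    return ids[np.argsort(dists)[:n]]


rng = np.random.default_rng(0)
vectors = rng.normal(size=(200, 10))           # stand-in for item vectors
tree = build_tree(vectors, np.arange(200), rng)
print(query(tree, vectors, vectors[17]))
```

Only one leaf is scanned per query, so results are approximate: a true neighbor that fell on the other side of a split is missed. Searching several independently built trees and merging their candidates is the usual way to trade more work for better recall.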

- 34. Ensemble of Latent Factor Models
  - Figure via Erik Bernhardsson

- 35. A/B Testing Recommendations

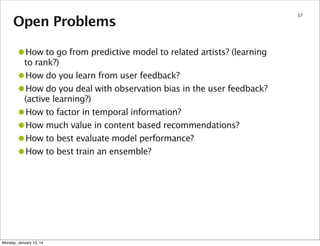

- 36. Open Problems
  - How to go from predictive model to related artists? (learning to rank?)
  - How do you learn from user feedback?
  - How do you deal with observation bias in the user feedback? (active learning?)
  - How to factor in temporal information?
  - How much value is there in content-based recommendations?
  - How to best evaluate model performance?
  - How to best train an ensemble?

- 37. Thank You!
