Apache hive

- 1. Apache Hive

- 2. Origin • Hive was Initially developed by Facebook. • Data was stored in Oracle database every night • ETL(Extract,Transform,Load) was performed on Data • The Data growth was exponential – By 2006 1TB /Day – By 2010 10 TB /Day – By 2013 about 5000,000,000 per day..etc And there was a need to find some way to manage the data “effectively”.

- 3. What is Hive • Hive is a Data warehouse infrastructure built on top of Hadoop that can compile SQL Quires as Map Reduce jobs and run the jobs in the cluster. • Suitable for semi and structured databases. • Capable to deal with different storage and file formats. • Provides HQL(SQL like Query Language) What Hive is not • Does not use complex indexes so do not response in seconds • But it scales very well , it works with data of peta byte order • It is not independent and its performance is tied hadoop

- 4. Hive RDBMS SQL Interface SQL Interface Focus on analytics May focus on online or analytics. No transactions. Transactions usually supported. Partition adds, no random INSERTs. In‐Place updates not natively supporte d (but are possible). Random INSERT and UPDATE supp orted. Distributed processing via map/reduce. Distributed processing varies by v endor (if available). Scales to hundreds of nodes . Seldom scale beyond 20 nodes. Built for commodity hardware. Often built on proprietary hardwa re (especially when scaling out). Low cost per peta byte. What’s a peta byte?

- 5. – A Data Warehouse is a database specific for analysis and reporting purpose. • OLAP vs OLTP – DW is needed in OLAP. – We want report and Summary not live data of transactions for continuing the operate. – We need reports to make operations better not to conduct and operations. – We use ETL to populate data in DW. Brief about Data Warehouse

- 6. How Hive Works? • Hive Built on top of Hadoop – Think HDFS and Map Reduce • Hive stored data in the HDFS • Hive compile SQL Quires into Map Reduce jobs and run the jobs in the Hadoop cluster

- 11. Internal Components • Compiler and Planner – It compiles and checks the input query and create an execution plan. • Optimizer – It optimizes the execution plan before it runs • Execution Engine – Runs the Execution plan . It is guaranteed that execution plan is DAG.

- 12. • Hive Queries are implicitly converted to map- reduce code by hive Engine • Compiler translates all the quires into a directed acyclic graph of map-reduce jobs • These map-reduce jobs are send to hadoop for execution.

- 13. • External Interface – Hive Client – Web UI – API • JDBC and ODBC • Thrift Server – Client API to execute HiveQl Statemnts • Metastore – System Catalog • All Components of hive Interact with Meta store

- 14. • Hive Data Model • Hive Database – Data Model • Hive Structure data into a well defined database concept i.e. tables ,columns and rows ,Partitions ,buckets etc...

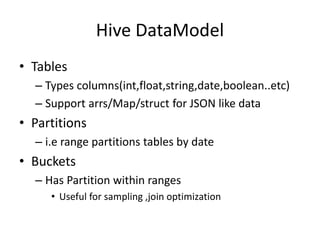

- 16. Hive DataModel • Tables – Types columns(int,float,string,date,boolean..etc) – Support arrs/Map/struct for JSON like data • Partitions – i.e range partitions tables by date • Buckets – Has Partition within ranges • Useful for sampling ,join optimization

- 17. metastore • Database – Namespace containing a set of tables • Table – Contains list of columns and their types and serDe info • Partition – Each partition can have its own columns , SerDe and storage info – Mapping to HDF Directories • Statistics – Info about the databse

- 18. Hive Physical Layout • Warehouse Directory in HDFS – /user/hive/warehouse • Tables row data is stored in warehouse subdirectories • Partition creates subdirectories within table directories • Actual data is stored in flat files – Control char-delimited text – Or Sequence Files – With Custom Serializer /Deserializer (SerDe), files can use arbitrary format

- 19. • Normal Tables are created under warehouse directory. (source Data migrates to warehouse) • Normal Tables are directly visible through hdfs directory browsing. • On Dropping a normal table, the source data and table meta data both are deleted. • External Tables read directly from hdfs files. • External tables not visible in warehouse directory. • On Dropping an external table, only the meta data is deleted but not the source data.

- 20. • Hive QL supports Joins on only equality expressions. Complex boolean expressions, inequality conditions are not supported. • More than 2 tables can be joined. • Number of map-reduce jobs generated for a join depend on the columns being used. • If same col is used for all the tables, then n=1 • Otherwise n>1 • HiveQL Doesn’t follow SQL-92 standard • Lack support No Materialized views • No Transaction level support • Limited Sub-query support

- 21. Quick Refresher on Joins First Last Id Ram C 11341 Sita B 11342 Lak D 11343 Man K 10045 cid price Quantity 1041 200.40 3 11341 4534.34 4 11345 2345.45 3 11341 2346.45 6 customer customer SELECT * FROM customer join order ON customer.id = order.cid; Joins match values from one table against values in another table.

- 22. Hive Join Strategies Type Approach Pros Cons Shuffle Join . Join keys are shuffled using map/reduce and joins perf ormed join side Works regardless of data size or layout. Most resource‐ intensive and slo west join type. Broadcast Join Small tables are loaded into memory in all nodes, ma pper scans through the larg e table and joins. Very fast, single s can through large st table. All but one table must be small e nough to fit in R AM. Sort-‐ Merge-‐ Bucket Join Mappers take advantage of co‐loca1on of keys to do efficient joins. Very fast for tabl es of any size. Data must be sor ted and bucketed ahead of time.

- 23. Shuffle Joins in Map Reduce First Last Id Ram C 11341 Sita B 11342 Lak D 11343 Man K 10045 cid price Quantity 1041 200.40 3 11341 4534.34 4 11341 2346.45 6 11345 2345.45 3 customer customer Iden1cal keys shuffled to the same reducer. Join done reduce‐side. Expensive from a network u1liza1on standpoint.

- 24. • Star schemas use dimension tables small enough to fit in RAM. • Small tables held in memory by all nodes. • Single pass through the large table. • Used for star-schema type joins common in DW.

- 28. Controlling Data Locality with Hive • Bucketing: • Hash partition values into a configurable number of buckets. • Usually coupled with sorting. • Skews: • Split values out into separate files. • Used when certain values are frequently seen. Replication Factor: • Increase replication factor to accelerate reads. • Controlled at the HDFS layer. • Sorting: • Sort the values within given columns. • Greatly accelerates query when used with ORCFile filter pushdown.

- 30. Hive Persistence Formats • Built-in Formats: – ORCFile – RCFile – Avro – Delimited Text – Regular Expression – S3 Logfile – Typed Bytes • 3rd-Party Addons: – JSON – XML

- 31. Loading Data in Hive • Sqoop – Data transfer from external RDBMS to Hive. – Sqoop can load data directly to/from HCatalog. • Hive LOAD – Load files from HDFS or local file system. – Format must agree with table format. • Insert from query – CREATE TABLE AS SELECT or INSERT INTO. • WebHDFS + WebHCat – Load data via REST APIs.

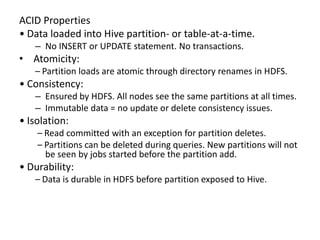

- 32. ACID Properties • Data loaded into Hive partition- or table-at-a-time. – No INSERT or UPDATE statement. No transactions. • Atomicity: – Partition loads are atomic through directory renames in HDFS. • Consistency: – Ensured by HDFS. All nodes see the same partitions at all times. – Immutable data = no update or delete consistency issues. • Isolation: – Read committed with an exception for partition deletes. – Partitions can be deleted during queries. New partitions will not be seen by jobs started before the partition add. • Durability: – Data is durable in HDFS before partition exposed to Hive.

- 33. Handling Semi-Structured Data • Hive supports arrays, maps, structs and unions. • SerDes map JSON, XML and other formats natively into Hive.

- 34. Join Optimizations • Performance Improvements in Hive 0.11: • New Join Types added or improved in Hive 0.11: – In-memory Hash Join: Fast for fact-to-dimension joins. – Sort-Merge-Bucket Join: Scalable for large-table to large-table joins. • More Efficient Query Plan Generation – Joins done in-memory when possible, saving map- reduce steps. – Combine map/reduce jobs when GROUP BY and ORDER BY use the same key. • More Than 30x Performance Improvement for Star Schema Join

- 36. Fundamental Questions • What is your primary use case? – What kind of queries and filters? • How do you need to access the data? – What information do you need together? • How much data do you have? – What is your year to year growth? • How do you get the data?

- 37. HDFS Characteristics • Provides Distributed File System – Very high aggregate bandwidth – Extreme scalability (up to 100 PB) – Self-healing storage – Relatively simple to administer • Limitations – Can’t modify existing files – Single writer for each file – Heavy bias for large files ( > 100 MB)

- 38. Choices for Layout • Partitions – Top level mechanism for pruning – Primary unit for updating tables (& schema) – Directory per value of specified column • Bucketing – Hashed into a file, good for sampling – Controls write parallelism • Sort order – The order the data is written within file

- 39. Example Hive Layout • Directory Structure warehouse/$database/$table • Partitioning /part1=$partValue/part2=$partValue • Bucketing /$bucket_$attempt (eg. 000000_0) • Sort – Each file is sorted within the file

- 40. Layout Guidelines • Limit the number of partitions – 1,000 partitions is much faster than 10,000 – Nested partitions are almost always wrong • Gauge the number of buckets – Calculate file size and keep big (200-500MB) – Don’t forget number of files (Buckets * Parts) • Layout related tables the same way – Partition – Bucket and sort order

- 41. Normalization • Most databases suggest normalization – Keep information about each thing together – Customer, Sales, Returns, Inventory tables • Has lots of good properties, but… – Is typically slow to query • Often best to denormalize during load – Write once, read many times – Additionally provides snapshots in time.

- 42. Choice of Format • Serde – How each record is encoded? • Input/Output (aka File) Format – How are the files stored? • Primary Choices – Text – Sequence File – RCFile – ORC

- 43. Text Format • Critical to pick a Serde – Default - ^A’s between fields – JSON – top level JSON record – CSV – commas between fields (on github) • Slow to read and write • Can’t split compressed files – Leads to huge maps • Need to read/decompress all fields

- 44. Sequence File • Traditional Map Reduce binary file format – Stores keys and values as classes – Not a good fit for Hive, which has SQL types – Hive always stores entire row as value • Splittable but only by searching file – Default block size is 1 MB • Need to read and decompress all fields

- 45. RC (Row Columnar) File • Columns stored separately – Read and decompress only needed ones – Better compression • Columns stored as binary blobs – Depends on meta store to supply types • Larger blocks – 4 MB by default – Still search file for split boundary

- 46. ORC (Optimized Row Columnar) • Columns stored separately • Knows types – Uses type-specific encoders – Stores statistics (min, max, sum, count) • Has light-weight index – Skip over blocks of rows that don’t matter • Larger blocks – 256 MB by default – Has an index for block boundaries

- 47. Compression • Need to pick level of compression – None – LZO or Snappy – fast but sloppy – Best for temporary tables – ZLIB – slow and complete – Best for long term storage

- 48. Default Assumption • Hive assumes users are either: – Noobies – Hive developers • Default behavior is always finish – Little Engine that Could! • Experts could override default behaviors – Get better performance, but riskier • We’re working on improving heuristics

- 49. Shuffle Join • Default choice – Always works (I’ve sorted a petabyte!) – Worst case scenario • Each process – Reads from part of one of the tables – Buckets and sorts on join key – Sends one bucket to each reduce • Works everytime!

- 50. Map Join • One table is small (eg. dimension table) – Fits in memory • Each process – Reads small table into memory hash table – Streams through part of the big file – Joining each record from hash table • Very fast, but limited

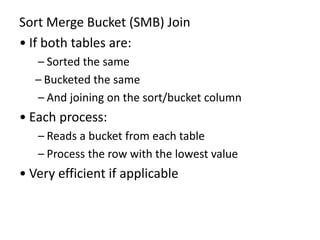

- 51. Sort Merge Bucket (SMB) Join • If both tables are: – Sorted the same – Bucketed the same – And joining on the sort/bucket column • Each process: – Reads a bucket from each table – Process the row with the lowest value • Very efficient if applicable

- 52. Performance Question • Which of the following is faster? – select count(distinct(Col)) from Tbl – select count(*) from (select distict(Col) from Tbl)

- 54. Answer • Surprisingly the second is usually faster In the first case: – Maps send each value to the reduce – Single reduce counts them all In the second case: – Maps split up the values to many reduces – Each reduce generates its list – Final job counts the size of each list – Singleton reduces are almost always BAD

- 55. Communication is Good! • Hive doesn’t tell you what is wrong. – Expects you to know! – “Lucy, you have some ‘splaining to do!” • Explain tool provides query plan – Filters on input – Numbers of jobs – Numbers of maps and reduces – What the jobs are sorting by – What directories are they reading or writing

- 56. The explanation tool is confusing. – It takes practice to understand. – It doesn’t include some critical details like partition pruning. • Running the query makes things clearer! – Pay attention to the details – Look at JobConf and job history files

- 57. Skew • Skew is typical in real datasets. • A user complained that his job was slow – He had 100 reduces – 98 of them finished fast – 2 ran really slow • The key was a boolean…

- 59. • SerDeSerDe is short for serialization/deserialization. • It controls the format of a row. • Serialized format: – Delimited format (tab, comma, ctrl-a …) – Thrift Protocols – ProtocolBuffer* • Deserialized (in-memory) format: – Java Integer/String/ArrayList/HashMap – Hadoop Writable classes – User-defined Java Classes (Thrift, ProtocolBuffer*)

Editor's Notes

- https://cwiki.apache.org/confluence/display/Hive/Design

- http://www.slideshare.net/AdamKawa/a-perfect-hive-query-for-a-perfect-meeting-hadoop-summit-2014?related=5