Apache storm vs. Spark Streaming

- 1. Apache Storm and Spark Streaming Compared P. Taylor Goetz, Hortonworks @ptgoetz

- 2. Honestly... • I know a lot more about Apache Storm than I do Apache Spark Streaming. • I've been involved with Apache Storm, in one way or another, since it was open-sourced. • I'm admittedly biased.

- 3. But... • A number of articles/papers comparing Apache Storm and Spark Streaming are inaccurate in terms of Storm’s features and performance characteristics. • Code and configuration for those studies is not available, so independent verification is impossible. • Claims don't match real-world observations.

- 4. But... • There is an inherent “Home Team Advantage” in any benchmark comparison. • Without open source code, any benchmark claims are essentially marketing fluff, and should be taken with a grain or two of NaCl. • Any benchmark claim should be independently verifiable.

- 5. Spark Streaming Paper • Compares Spark Streaming (Micro-Batch) to Core Storm (One-at-a-Time) • A more appropriate comparison would have been with Storm’s Trident (Micro-Batch) API • Trident mentioned only in passing (on pages 3 and 12) http://www.eecs.berkeley.edu/Pubs/TechRpts/2012/EECS-2012-259.pdf

- 6. Spark Streaming Paper • Benchmark code/configuration not publicly available • Performance claims not independently verifiable http://www.eecs.berkeley.edu/Pubs/TechRpts/2012/EECS-2012-259.pdf

- 7. Spark Streaming Paper • Granted, the Spark Streaming paper is almost 2 years old and written at a time when Trident was relatively new. • However, that paper is often cited when comparing Apache Storm and Spark Streaming, particularly in terms of performance. • A lot can change in 2 years. http://www.eecs.berkeley.edu/Pubs/TechRpts/2012/EECS-2012-259.pdf

- 8. Streaming and batch processing are fundamentally different.

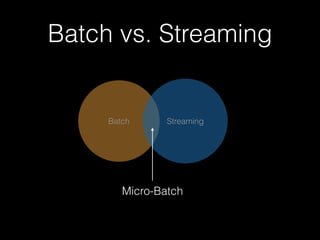

- 9. Batch vs. Streaming • Storm is a stream processing framework that also does micro-batching (Trident). • Spark is a batch processing framework that also does micro-batching (Spark Streaming).

- 10. Batch vs. Streaming Batch Streaming

- 11. Batch vs. Streaming Batch Streaming Micro-Batch

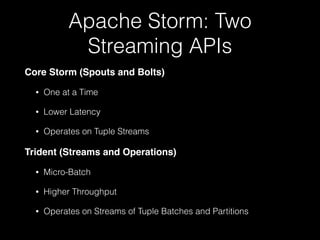

- 12. Apache Storm: Two Streaming APIs Core Storm (Spouts and Bolts)! • One at a Time • Lower Latency • Operates on Tuple Streams Trident (Streams and Operations)! • Micro-Batch • Higher Throughput • Operates on Streams of Tuple Batches and Partitions

- 13. Language Options Core Storm Storm Trident Spark Streaming • Java • Clojure • Scala • Python • Ruby • others* • Java • Clojure • Scala • Java • Scala • Python *Storm’s Multi-Lang feature allows the use of virtually any programming language.

- 14. Reliability Models Core Storm Storm Trident Spark Streaming At Most Once Yes Yes No At Least Once Yes Yes No* Exactly Once No Yes Yes* *In some node failure scenarios, Spark Streaming falls back to at-least-once processing or data loss.

- 15. Programing Model Core Storm Storm Trident Spark Streaming Stream Primitive Tuple Tuple, Tuple Batch, Partition DStream Stream Source Spouts Spouts, Trident Spouts HDFS, Network Computation/ Transformation Bolts Filters, Functions, Aggregations, Joins Transformation, Window Operations Stateful Operations No (roll your own) Yes Yes Output/ Persistence Bolts State, MapState foreachRDD

- 16. Production Deployments Apache Storm Spark Streaming • Too many to list http:// storm.incubator.apache.org/ documentation/Powered- By.html • Sharethrough http:// engineering.sharethrough.com/blog/ 2014/06/27/sharethrough-at-spark- summit-2014-spark-streaming-for- realtime-auctions/

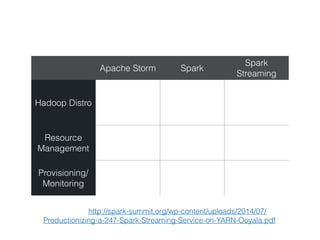

- 17. Support Apache Storm Spark Spark Streaming Hadoop Distro Hortonworks, MapR Cloudera, MapR, Hortonworks (preview) Hortonworks, Cloudera, MapR Resource Management YARN, Mesos YARN, Mesos YARN*, Mesos Provisioning/ Monitoring Apache Ambari Cloudera Manager ? *With issues: http://spark-summit.org/wp-content/uploads/2014/07/ Productionizing-a-247-Spark-Streaming-Service-on-YARN-Ooyala.pdf

- 19. Worker Failure: Spark Streaming "So if a worker node fails, then the system can recompute the lost from the the left over copy of the input data. However, if the worker node where a network receiver was running fails, then a tiny bit of data may be lost, that is, the data received by the system but not yet replicated to other node(s)." Only HDFS-backed data sources are fully fault tolerant. https://spark.apache.org/docs/latest/streaming-programming- guide.html#fault-tolerance-properties

- 20. Worker Failure: Spark Streaming https://databricks.com/blog/2015/01/15/improved-driver-fault-tolerance-and- zero-data-loss-in-spark-streaming.html Solution?: Write Ahead Logs (SPARK-3129) • Enabling WAL requires DFS (HDFS, S3) — no such requirement with Storm • Incurs a performance penalty that adds to overall latency • Full fault tolerance still requires a data source that can replay data (e.g. Kafka)! • Architectural band aid?

- 21. Worker Failure: Apache Storm • If a supervisor node fails, Nimbus will reassign that node's tasks to other nodes in the cluster. • Any tuples sent to a failed node will time out and be replayed (In Trident, any batches will be replayed). • Delivery guarantees dependent on a reliable data source.

- 22. Data Source Reliability • A data source is considered unreliable if there is no means to replay a previously-received message. • A data source is considered reliable if it can somehow replay a message if processing fails at any point. • A data source is considered durable if it can replay any message or set of messages given the necessary selection criteria. ! (These are my terms.)

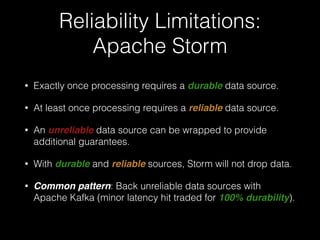

- 23. Reliability Limitations: Apache Storm • Exactly once processing requires a durable data source. • At least once processing requires a reliable data source. • An unreliable data source can be wrapped to provide additional guarantees. • With durable and reliable sources, Storm will not drop data. • Common pattern: Back unreliable data sources with Apache Kafka (minor latency hit traded for 100% durability).

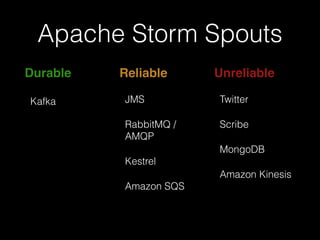

- 24. Apache Storm Spouts Durable! Kafka Reliable! JMS RabbitMQ / AMQP Kestrel Amazon SQS Amazon Kinesis Unreliable! Twitter Scribe MongoDB

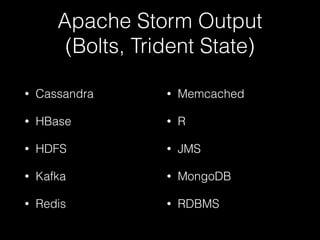

- 25. Apache Storm Output (Bolts, Trident State) • Cassandra • HBase • HDFS • Kafka • Redis • Memcached • R • JMS • MongoDB • RDBMS

- 26. Apache Storm + Kafka Apache Kafka is an ideal source for Storm topologies. It provides everything necessary for: • At most once processing • At least once processing • Exactly once processing Apache Storm includes Kafka spout implementations for all levels of reliability. Kafka Supports a wide variety of languages and integration points for both producers and consumers.

- 27. Reliability Limitations: Spark Streaming • Fault tolerance and reliability guarantees require HDFS-backed data source. • Moving data to HDFS prior to stream processing introduces additional latency. • Network data sources (Kafka, etc.) are vulnerable to data loss in the event of a worker node failure. https://spark.apache.org/docs/latest/streaming-programming- guide.html#fault-tolerance-properties

- 28. Performance “The main reason cited by Tathagata for Spark's performance gain over Storm is the aggregation of small records that occurs through the mechanics of RDDs.” http://www.cs.duke.edu/~kmoses/cps516/dstream.html In other words: Micro-Batching

- 29. Performance http://www.cs.duke.edu/~kmoses/cps516/dstream.html Storm capped at 10k msgs/sec/node? Spark Streaming 40x faster than Storm? Others may disagree…

- 31. Netty Transport • Introduced in Apache Storm 0.9.0 • Faster, pure Java alternative for 0MQ • Yahoo! Engineering announcement: http://yahooeng.tumblr.com/post/ 64758709722/making-storm-fly- with-netty • Performance Test Code: https://github.com/yahoo/storm- perf-test Netty 0mq

- 32. STORM-297 • Introduced in Apache Storm 0.9.2-incubating • Big performance boost, especially for small messages • JIRA Discussion: https://issues.apache.org/jira/ browse/STORM-297 • Performance Test Code: https://github.com/yahoo/storm- perf-test

- 33. Benchmarking Storm • 5 nodes on AWS (m1.large - not very powerful) • 1 ZooKeeper, 1 Nimbus, 3 Supervisors • Storm Core API and Trident API benchmarks • Is Trident API slower than Core API? https://github.com/ptgoetz/storm-benchmark

- 34. Is Trident API slower than Core API? • On low-power hardware with 3 supervisor nodes… • Core API: ~150k msg./sec. with ~80 ms. latency • Trident API: ~300k msg./sec. with ~250 ms. latency • Higher throughput possible with increased latency • Better performance with bigger hardware

- 35. Is Spark + Spark Streaming a "Lambda Architecture in a Box?" • No! • Lambda is a lot more than batch + streaming. • Lambda is powerful when applied correctly, but is not right for every use case. • Spark and Spark Streaming have overlapping programming models for batch and micro-batch. • The rest is up to you (as it is with Storm).

- 36. Final Thoughts In general (not specific to Spark Streaming):! • Beware any claim that A is X times faster than B. • Performance is a matter of proper tuning for the use case at hand. • Any system can be hobbled to look bad in a benchmark.

- 37. Recommendation • It is up to you, and your specific use case. • Consider fault tolerance. Is data loss acceptable? • Consider all facets and make informed decisions. • Rely on your own benchmarks

- 38. Questions?

- 39. Thank you!