Architecture 2020 - eComputing 2019-07-01

- 1. INFINITE POSSIBILITIES ARCHITECTURE 2020 JORNADAS ECOMPUTING’19 JORGE HIDALGO JULY 2019

- 2. Copyright © 2019 Accenture. All rights reserved. MODERN ENGINEERING: AGILE, DEVOPS, CLOUD-NATIVE. VALUE PRIORITISED · DELIVER–REFLECT–IMPROVE · DECOUPLED & DISTRIBUTED · RESILIENT BY DESIGN · AUTOMATED PIPELINES · CROSS-FUNCTIONAL TEAMS · PRODUCT AND CUSTOMER CENTRIC · BUILT-IN QUALITY · DECENTRALISED EXECUTION · ELASTIC SUPPLY OF RESOURCES

- 3. WHAT IS CLOUD-NATIVE?

  CLOUD-NATIVE is a new approach to the design, construction and operation of modern applications that need to adapt to a rapidly changing technology landscape and increasingly challenging business conditions.

  Cloud-native applications are those that:
  - Run on multi-tenant cloud-native application platforms
  - Broker the consumption of cloud-native middleware services, managed by the service providers
  - Are developed using cloud-native software delivery processes

  In addition, cloud-native applications are solidly founded on Agile and DevOps practices, and adhere to modern architecture design patterns like the TWELVE-FACTOR APP (*), MICROSERVICES, CONTAINERS or SERVERLESS (more about them later).

  (*) The Twelve-Factor App: https://12factor.net

- 4. CLOUD-NATIVE VALUE PROPOSITION

  CLOUD-NATIVE (and cloud computing in general) enables cost savings, business agility, speed to market and elasticity of application runtimes, in a technology landscape where many, if not all, platform innovations are happening in the cloud, or are cloud-only.

  Benefits listed under COST SAVINGS, SPEED TO MARKET, BUSINESS AGILITY and ELASTICITY:
  - Lower capital and operational expenses
  - Pay-per-use
  - Economies of scale
  - API ecosystem
  - Productivity and speed
  - Deploy faster, iterate faster, experiment without regret
  - React faster to a changing business landscape
  - Reduction of time from idea to business value
  - Broad geographic availability
  - Faster availability to customers
  - Capacity only when needed
  - Ability to handle sudden load changes
  - Survive infrastructure failures
  - “Infinite” computing capacity

- 5. CLOUD-NATIVE TURNING POINT

  Taken together, CLOUD-NATIVE shakes the ground of known enterprise IT, shifting priorities and, therefore, the requirements and expectations for modern platforms.

  | TRADITIONAL PLATFORM FOCUS | CLOUD-NATIVE PLATFORM FOCUS |
  | --- | --- |
  | CLIENT-SERVER APPLICATIONS | OMNI-CHANNEL |
  | POINT-TO-POINT CALLS | SERVICE MESH |
  | MESSAGING AND BUSES | API AND EVENT STREAMS |
  | DISTRIBUTED TRANSACTIONS | BLOCKCHAIN |
  | B2B AND B2C | ECOSYSTEMS AND MARKETPLACES |
  | DATA CENTER | CLOUD AND SERVERLESS |
  | CENTRAL, RIGID IT | DEMOCRATIZED, SELF-SERVICE IT |
  | ORGANIZATION BY PROJECT | ORGANIZATION BY PRODUCT |
  | SILOED BIZ, DEV, QA AND OPS | AGILE + DEVOPS |
  | SECURITY AS SEPARATE FUNCTION | DEVSECOPS |
  | STABILITY | AGILITY |
  | ENTERPRISE SCALE | GLOBAL SCALE |

- 6. CLOUD-NATIVE OR CLOUD-MIGRANT?

  Although many companies have embraced and adopted cloud computing, not all of them are cloud-native. Cloud-natives, as opposed to Cloud-migrants (*), view the cloud in a very different way, and only Cloud-natives can unleash its full potential.

  CLOUD-NATIVES leverage cloud computing for cloud-optimised workloads and delivery processes:
  - Realisation that cloud is a fundamentally new platform that changes how software is designed and delivered
  - New application architectures that maximise the use of cloud for larger optimisation of cost, speed and availability
  - Integration of cloud computing into the entire software delivery life-cycle
  - All managed services are leveraged as a way to reduce development and operations effort/cost
  - CLOUD-NATIVES ARE INNOVATORS

  CLOUD-MIGRANTS leverage the cloud for workloads and delivery processes designed in the pre-cloud era:
  - Application design and architecture are mostly unchanged
  - Software delivery processes are mostly unchanged
  - Only partial use is made of cloud computing capabilities (typically only infrastructure)
  - Infrastructure topology is mostly unchanged
  - Infrastructure managed services are used as if provisioned resources were in someone else’s data centre
  - CLOUD-MIGRANTS REPURPOSE EXISTING SOLUTIONS

  (*) Cloud-migrant as a concept is also commonly referred to as “lift & shift”

- 9. CLOUD-NATIVE ARCHITECTURES

  Cloud-native architectures are founded on new architectural paradigms and principles that embody the revolutionary shift cloud-native represents.

  Topics: Virtual Machines & Containers · Infrastructure as Code · Advanced Deployment Models · Languages and Frameworks · Reactive Microservices · Serverless · Service Mesh · Blockchain · Cloud Computing · Microservices · BREAKING THE MONOLITH

- 10. CLOUD COMPUTING

- 11. CLOUD COMPUTING

  CLOUD COMPUTING (*) is a model for enabling ubiquitous, convenient, on-demand access to a shared pool of configurable computing resources that can be rapidly provisioned and released with minimal management effort or service provider interaction.

  These are the essential characteristics of cloud computing:
  - RESOURCE POOLING: ability to share computing resources across tenants, reducing idle time and TCO
  - BROAD NETWORK ACCESS: ability to publish services anywhere, anytime, with maximum control and observability
  - MEASURED SERVICE: pay only for the services in use, in the exact amount in which they are used
  - RAPID ELASTICITY: ability to rapidly scale up/down, or in/out, computing resources
  - ON-DEMAND / SELF-SERVICE: ability to provision computing resources when needed, with minimal waiting time

  (*) Cloud computing definition and characteristics by NIST: USA National Institute of Standards and Technology

- 12. CLOUD COMPUTING

  Cloud computing service models are named according to the abstraction levels that are available to consumers:
  - SOFTWARE AS A SERVICE (SaaS): applies to Applications, e.g. email, agenda, online shop, bank app, CRM,…
  - PLATFORM AS A SERVICE (PaaS): applies to Application Infrastructure, e.g. service runtimes, DB, messaging, APIs,…
  - INFRASTRUCTURE AS A SERVICE (IaaS): applies to System Infrastructure, e.g. compute, storage, network,…

- 13. CLOUD COMPUTING

  This diagram compares a classic, on-premises deployment with the service models in cloud computing, and the extent to which management is transferred to the service provider.

  [Diagram: four columns (ON PREMISES, for comparison; INFRASTRUCTURE AS A SERVICE; PLATFORM AS A SERVICE; SOFTWARE AS A SERVICE), each showing the same stack: Network, Storage, Servers, Virtualisation, Operating System, Database, System Software, Application Architecture, Application. Each layer is marked as Provider Managed or Consumer Managed. The models are labelled SYSTEM INFRASTRUCTURE abstraction, APPLICATION INFRASTRUCTURE abstraction and APPLICATION abstraction.]

- 14. VIRTUAL MACHINES & CONTAINERS

- 15. VIRTUAL MACHINES & CONTAINERS

  Virtual machines and containers are technology options for running multiple, distributed workloads in isolation, facilitating the optimised usage of computing resources.

  [Diagram: two stacks side by side. Virtual Machines: HARDWARE, HOST OS, HYPERVISOR, then many VMs, each with its own GUEST OS, DEPS and App. Containers: HARDWARE, HOST OS, HYPERVISOR, then a VM with a SHARED GUEST OS and a CONTAINER CONTROLLER/SCHEDULER running many containers, each with DEPS and App. Annotations: fast stand up, faster stand up, create/destroy on demand, increased isolation, increased density.]

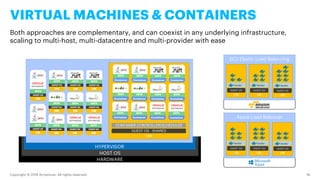

- 16. VIRTUAL MACHINES & CONTAINERS

  Both approaches are complementary, and can coexist in any underlying infrastructure, scaling to multi-host, multi-datacentre and multi-provider with ease.

  [Diagram: the virtual machine and container stacks combined on the same HARDWARE, HOST OS and HYPERVISOR, alongside groups of VMs behind EC2 Elastic Load Balancing and behind an Azure Load Balancer.]

- 18. INFRASTRUCTURE AS CODE

  Infrastructure as code is pivotal to optimised IT operations in the cloud, maximising the utilisation of computing resources while reducing operational expenses.

  Key aspects of the relevance of infrastructure as code today:
  - The number of computing nodes is growing exponentially with each computing generation
  - It is no longer viable to manage resources on a 1:1 basis ► Cattle vs. Pets
  - Resources are transient, disposable, elastically provisioned when required, discarded when no longer needed
  - Resources are centrally managed, simplifying OS patch, software distribution and audit activities
  - Hardware and software inventories are accurate and always up-to-date

  [Timeline: 1980 Mainframe, 1990 Client/Server, 2000 Datacentre, 2010+ Cloud]

- 19. INFRASTRUCTURE AS CODE

  Infrastructure as code relies on workflows based on recipes and images, which are under configuration management, curated and catalogued, ready to use, and fast to instantiate.

  This approach leads to production-like environments available for the entire SDLC.

  [Diagram: workflow in four steps. model: Infrastructure Recipes (Dockerfile, Cookbook) describe operating system, hardware, network, disk, application software and data. build & validate: Base Images (Docker image, Amazon AMI). store: Image Repository (Docker Registry, Amazon Marketplace, Nexus Repository). download & use: VMs with a guest OS running containers, each with DEPS and App.]

- 21. ADVANCED DEPLOYMENT MODELS

  Advanced deployment models allow for maximised resilience and application uptime, distributing computing resources for redundancy and scaling them out elastically based on actual or expected needs:
  - Use AWS ELB and Route 53 to balance incoming traffic across regions and across instances within every region
  - Use AWS ELB and Auto Scaling to modify the number of running instances depending on runtime conditions
  - Use container orchestration (e.g. Docker + Kubernetes) to keep the number of instances per service at the desired state, even when a host or even a whole region goes down

  [Diagram: Route 53 distributes traffic to Elastic Load Balancing in Zone 1, Zone 2, Zone 3 and Zone 4; each zone contains several VMs and a MASTER.]
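Keeping the number of instances per service "at the desired state" can be pictured as a reconciliation loop. The following is a minimal Python sketch of that idea only; it is not the Kubernetes or AWS Auto Scaling API, and `reconcile` and the instance naming scheme are hypothetical.

```python
from typing import List


def reconcile(running: List[str], desired: int, service: str) -> List[str]:
    """Return the instance list after one reconciliation pass.

    Hypothetical helper for illustration; real orchestrators implement
    this with their own controllers and schedulers.
    """
    instances = list(running)
    counter = 0
    # Scale out: start replacements until the desired count is reached.
    while len(instances) < desired:
        name = f"{service}-new-{counter}"
        counter += 1
        if name not in instances:
            instances.append(name)
    # Scale in: keep the first `desired` instances and stop the surplus.
    return instances[:desired]


if __name__ == "__main__":
    # A host failure took out two of three instances of a service.
    survivors = ["orders-0"]
    print(reconcile(survivors, desired=3, service="orders"))
```

Running the pass repeatedly is harmless: once the running count matches the desired count, the function returns the list unchanged.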

- 22. ADVANCED DEPLOYMENT MODELS

  Another significant strength is that this approach simplifies the process of applying updates without any application downtime, for example BLUE/GREEN DEPLOYMENT.

  The new release (green) is deployed while the previous one (blue) is still in service. Once green is available, traffic is switched from blue to green and blue is removed. In some cases blue is left in standby for some time as a failover mechanism.

  [Diagram: before the update, three VMs run Service A v1.1.0 and receive the incoming traffic. In steps 1 to 3, Service A v1.2.0 is deployed next to v1.1.0 on one VM at a time. In step 4, all three VMs run both versions. In the final state, all three VMs run only Service A v1.2.0.]
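The blue/green cut-over can be sketched as a router that sends all traffic to exactly one environment and keeps the previous one as standby. This is an illustrative Python model under that assumption; `BlueGreenRouter` and its methods are hypothetical names, not part of any load balancer API.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class BlueGreenRouter:
    """Toy router: all traffic goes to exactly one environment at a time."""
    environments: Dict[str, str] = field(default_factory=dict)  # name -> version
    live: Optional[str] = None
    standby: Optional[str] = None

    def deploy(self, name: str, version: str) -> None:
        # Deploy alongside the live environment; no traffic is moved yet.
        self.environments[name] = version
        if self.live is None:
            self.live = name

    def switch_to(self, name: str) -> None:
        if name not in self.environments:
            raise ValueError(f"{name} is not deployed")
        # Cut over in one step; the old environment stays as standby.
        self.standby, self.live = self.live, name

    def rollback(self) -> None:
        if self.standby is None:
            raise RuntimeError("no standby environment to fall back to")
        self.live, self.standby = self.standby, self.live

    def route(self) -> str:
        """Version that serves the next request."""
        if self.live is None:
            raise RuntimeError("nothing deployed")
        return self.environments[self.live]
```

Keeping the standby pointer is what makes the failover mentioned on the slide cheap: rolling back is a pointer swap, not a redeployment.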

- 23. ADVANCED DEPLOYMENT MODELS

  Another significant strength is that this approach simplifies the process of applying updates without any application downtime, for example ROLLING UPDATES.

  The new release (R+1) is deployed while the previous one (R) is still in service, and traffic is balanced across all available instances regardless of the version. Therefore, for some time, some users get responses from R+1 while others get them from R.

  [Diagram: before the update, three VMs run Service A v1.1.0 and receive 100% of the incoming traffic. In steps 1 to 4, instances are taken out and replaced with Service A v1.2.0 one VM at a time, with traffic split between the versions (50% / 50% at one point). In the final state, three VMs run Service A v1.2.0 and receive 100% of the traffic.]
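The mixed-version window of a rolling update is easy to see in a small simulation. This Python sketch assumes evenly balanced traffic across instances; `rolling_update` and `traffic_share` are hypothetical helper names used only for illustration.

```python
from typing import Iterator, List


def rolling_update(instances: List[str], new_version: str) -> Iterator[List[str]]:
    """Yield the fleet state after each instance is replaced, one at a time."""
    fleet = list(instances)
    for i in range(len(fleet)):
        fleet[i] = new_version  # drain, replace and re-register instance i
        yield list(fleet)


def traffic_share(fleet: List[str], version: str) -> float:
    """Fraction of evenly balanced traffic served by `version`."""
    return fleet.count(version) / len(fleet)


if __name__ == "__main__":
    for step, fleet in enumerate(rolling_update(["1.1.0"] * 4, "1.2.0"), start=1):
        print(step, fleet, f"{traffic_share(fleet, '1.2.0'):.0%} on 1.2.0")
```

Because both versions serve requests during the update, R and R+1 need to be compatible with each other for as long as the window lasts.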

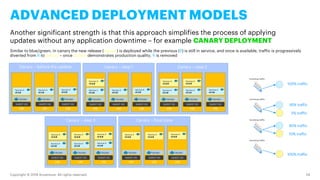

- 24. ADVANCED DEPLOYMENT MODELS

  Another significant strength is that this approach simplifies the process of applying updates without any application downtime, for example CANARY DEPLOYMENT.

  Similar to blue/green, in canary the new release (canary) is deployed while the previous one (R) is still in service. Once the canary is available, traffic is progressively diverted from R to the canary, and once the canary demonstrates production quality, R is removed.

  [Diagram: before the update, three VMs run Service A v1.1.0 with 100% of the incoming traffic. In steps 1 to 3, Service A v1.2.0 is deployed next to v1.1.0 on one VM at a time, and the traffic split moves from 95% / 5% to 90% / 10%. In the final state, three VMs run only Service A v1.2.0 with 100% of the traffic.]
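Progressive traffic diversion amounts to weighted routing between the two releases. The sketch below simulates that in Python with the standard `random.choices`; the function names and the seed are assumptions for illustration, and a real platform would apply the weights at the load balancer or proxy.

```python
import random
from typing import Dict


def pick_version(weights: Dict[str, float], rng: random.Random) -> str:
    """Choose the version that serves one request, proportionally to its weight."""
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


def simulate(weights: Dict[str, float], requests: int = 10_000, seed: int = 42) -> Dict[str, int]:
    """Count how many simulated requests each version receives."""
    rng = random.Random(seed)
    hits = {version: 0 for version in weights}
    for _ in range(requests):
        hits[pick_version(weights, rng)] += 1
    return hits


if __name__ == "__main__":
    # The 95% / 5% split shown on the slide.
    print(simulate({"v1.1.0": 95, "v1.2.0": 5}))
```

Raising the canary weight step by step (5, 10, ... 100) while watching error rates is what distinguishes canary from the all-at-once switch of blue/green.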

- 26. LANGUAGES AND FRAMEWORKS

  The cloud-native technology landscape, although immensely rich and diverse, offers some exemplary and popular choices, widely adopted by the industry and communities.

  [Technology map, logos not preserved. Categories: BUILD & DEPENDENCY MNG, CONTAINERS & ORCHESTRATION, STANDARDS & SPECIFICATIONS, DATA PERSISTENCE, FRAMEWORKS & LIBRARIES, LANGUAGES & RUNTIMES, APPLICATION SERVERS, DATA & MESSAGING, QUALITY & TESTING, CLOUD PLATFORMS. Surviving labels: Spring Boot, Spring Cloud, Express, Spring Data.]

  This map is not exhaustive of every available option, but representative of common stacks used by projects.

- 27. LANGUAGES AND FRAMEWORKS

  All these technology options are usually assembled in stacks, which tend to be, once proven successful, repeatedly and consistently applied in different solutions.

  [Diagram: example stacks drawn as layers FE, API, BE, Data and Lifecycle, logos not preserved. Surviving labels: Express, Containers.]

  This map is not exhaustive of every available option, but representative of common stacks used by projects.

- 29. BREAKING THE MONOLITH

  To achieve the desirable state of being a cloud-native application, software architecture design must also change to adapt to the new paradigm.

  The service layer is where the core computational business logic of a website, mobile application, software or information system resides.

  Features developed in the backend are exposed as services that are indirectly accessed by users, typically through a frontend application, an API, or through new and innovative user interaction channels such as chatbots or extended reality applications.

  [Diagram: Frontend App, Chatbot and XR call an API Gateway, which calls Backend Services backed by several Data Storage instances.]

- 30. BREAKING THE MONOLITH

  In traditional IT, MONOLITHS have been the way in which we have developed, packaged and deployed the software bits for the service layers: big chunks of functionality with multiple responsibilities and usually a single point of failure in a system.

  As IT has evolved, new, innovative approaches for building systems have emerged.

  MONOLITHS → MINILITHS → MICROSERVICES → SERVERLESS

- 31. MICROSERVICES

- 32. MICROSERVICES

  MICROSERVICES (*) is an architectural approach that fosters rapid development, continuous delivery and robust and resilient software.

  These are the basic principles underpinning every microservice-based architecture:
  - Capable of self-monitoring and self-healing
  - Automated and independent lifecycle
  - Fine grained, with clear and distinct responsibilities
  - Encapsulated business logic and data
  - Loosely coupled to other microservices in the system
  - Can scale out independently of other microservices in the system
  - Can be distributed and re-located trivially and independently

  (*) Microservices defined: https://martinfowler.com/articles/microservices.html

- 33. MICROSERVICES

  MICROSERVICE BOUNDARIES are defined around the perimeter of the business logic and data managed inside each of them. Therefore, there is absolutely no direct interaction with the business logic or data from other microservices.

  [Diagram: a Monolith drawn as one layered stack (Data Storage, Data Repository, Business Logic, API Interface, Presentation Layer) next to Order Service (*), Shipping Service (*), Payment Service (*) and Catalogue Service (*), each with its own Data Storage, Data Repository, Business Logic and API Interface.]

  (*) Purely mentioned as examples of possible names of microservices

- 34. MICROSERVICES

  To ensure that boundaries are correctly defined, microservices are usually built by following DOMAIN-DRIVEN DESIGN principles:
  - Functions are mapped around business capabilities and interactions (Conway’s Law)
  - Bounded contexts are defined around them
  - Context boundaries define the contracts ruling service interactions
  - Context boundaries determine the transactional (ACID) boundaries
  - Typically a data model per microservice
  - Typically interactions driven by lightweight protocols (MQ, REST)
  - Multiple versions may coexist at a given point in time (evolutionary API design)

  [Diagram: bounded contexts Order, Shipping, Payment, Wishlist, Catalogue, … (*)]

  (*) Purely mentioned as examples of possible names of microservices

- 35. MICROSERVICES & OUTER ARCHITECTURE

  With many pieces in a system, changing in number or physical location, concerns that were previously not relevant are now primary concerns that must be addressed with great care.

  These concerns, which define the platform capabilities, are the OUTER ARCHITECTURE (*):
  - Centralized Configuration: the ability to manage and distribute changes to configuration settings safely across service instances
  - Service Registry and Service Discovery: service instances register themselves so they are discoverable by other services which depend on them
  - Load Balancing: incoming traffic is distributed across a pool of (registered) service instances to enable horizontal scaling
  - Service Resiliency and Fault Tolerance: the system is capable of isolating failures before they cascade, causing major downtime or misbehaviour
  - Distributed Access Control: single sign-on, federation and other concerns related to encryption, authentication and authorization
  - Distributed Logs: the ability to collect and visualize logs pertaining to every service in the system regardless of space and time
  - Distributed Tracing: tracing interactions from the perspective of the user or business transaction, spanning across services
  - Distributed Metrics: the ability to collect and visualize performance and business metrics, set alarms and respond to incidents

  (*) This is not an exhaustive list of Outer Architecture capabilities
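One common way to isolate failures before they cascade is a circuit breaker, a technique the slide does not name explicitly. The Python sketch below is a simplified illustration under that assumption; the class, its thresholds and the injectable `clock` are hypothetical and not taken from any specific resilience library.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected without reaching the remote service."""


class CircuitBreaker:
    def __init__(self, max_failures: int = 3, reset_after: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after = reset_after  # cool-down in seconds
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open, failing fast")
            # Cool-down elapsed: let one trial call through (half-open).
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()  # trip the breaker
            raise
        self.failures = 0  # any success closes the circuit fully
        return result
```

Failing fast while the circuit is open protects the caller's threads and connections, which is what stops one unhealthy dependency from dragging down the services that depend on it.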

- 36. MICROSERVICES & CONTAINERS

  Although not strictly a requirement, a microservice-based architecture works much better when used along with a container-based platform at runtime.

  Physical deployment in a container platform (highlights the main application infrastructure elements).

  [Diagram: an Internet Gateway leads to a Public Subnet (DMZ) with a NAT / API Gateway and a Bastion host (remote administrative access), and to an Application Subnet with VMs acting as MASTER, NODE, PERSISTENT STORAGE and IMAGE REGISTRY.]

- 37. MICROSERVICES & CONTAINERS

  Although not strictly a requirement, a microservice-based architecture works much better when used along with a container-based platform at runtime.

  Logical deployment in a container platform (everything is a container).

  [Diagram: an Internet Gateway in front of Service A, Service B, Service C and Service D, each made of a Balancer and several Containers.]

- 39. REACTIVE MICROSERVICES

  Reactive microservices are a specific kind of microservices which follow the principles of the REACTIVE MANIFESTO (*).

  As with any other microservice, they are designed to be responsive, resilient and elastic. However, they have some fundamental, differentiating characteristics:
  - In larger numbers, they are easier to choreograph than point-to-point, REST-based microservices, resulting in easier to observe flows and interaction diagrams
  - They are event-based, with foundations based on data streaming and distributed messaging, resulting in higher responsiveness to user demands
  - They are message-driven, relying on asynchronous message-passing, non-blocking communications, and back-pressure, to handle load and flow control
  - They are even more loosely coupled, without any insight into which service produces the needed data, or consumes the produced data
  - They can scale out massively, breaking the limits of point-to-point, REST-based microservices (e.g. network overhead due to a high number of HTTP connections)

  (*) The Reactive Manifesto: https://www.reactivemanifesto.org/
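Asynchronous message-passing with back-pressure can be illustrated with a bounded queue: when the consumer falls behind, the producer is suspended instead of flooding it. This is a minimal single-process Python sketch using the standard `asyncio.Queue`; in a real system the stream would be a distributed messaging platform, and the function names here are hypothetical.

```python
import asyncio
from typing import List, Tuple


async def producer(queue: asyncio.Queue, n: int) -> None:
    for i in range(n):
        # put() suspends while the queue is full: that is the back-pressure.
        await queue.put(i)
    await queue.put(None)  # sentinel marking the end of the stream


async def consumer(queue: asyncio.Queue, seen: List[int], max_depth: List[int]) -> None:
    while True:
        max_depth[0] = max(max_depth[0], queue.qsize())
        item = await queue.get()
        if item is None:
            return
        await asyncio.sleep(0)  # stand-in for non-blocking work
        seen.append(item)


async def run(n: int = 20, capacity: int = 4) -> Tuple[List[int], int]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=capacity)
    seen: List[int] = []
    max_depth = [0]
    await asyncio.gather(producer(queue, n), consumer(queue, seen, max_depth))
    return seen, max_depth[0]


if __name__ == "__main__":
    items, depth = asyncio.run(run())
    print(len(items), "items processed, deepest queue observed:", depth)
```

The producer never needs to know who consumes its messages, which mirrors the loose coupling described above; the queue capacity is the only shared contract.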

- 40. REACTIVE MICROSERVICES

  Organizationally, reactive microservice landscapes tend to be less chaotic, ordered around the data streams.

  [Diagram: two panels. Classic, point-to-point, REST-based microservices: SRV 1 to SRV 7 calling each other directly. Reactive microservices: SRV 1, SRV 2, SRV 3 and SRV 5 each produce a data stream, and services observe data from the streams of Service 1, Service 2 and Service 3.]

- 41. SERVERLESS

- 42. SERVERLESS

  SERVERLESS (*) is the ultimate evolution of cloud-native architecture styles, in which all components in the system run on resources which are completely abstracted, fully managed by the cloud provider, and seamlessly unified in a new platform.

  There are servers, of course, as well as other computing resources, but they are nowhere to be seen, simplifying architecture definition, provisioning, deployment and operations:
  - Highly cost effective, as billing depends solely on time in use
  - No need to provision any computing resource, all processes are automated by default
  - No servers “in sight” means no resources to set up, tune, patch, scale or distribute
  - Since most (if not all) technical concerns are abstracted, code focuses on business logic
  - Fully-managed resources do not expose any access to the underlying platform

  (*) Serverless: https://en.wikipedia.org/wiki/Serverless_computing

- 43. SERVERLESS

  Components in a Serverless architecture differ from those in any other microservice-based architecture:
  - Functions (as a Service, FaaS): ephemeral microservices which spawn solely to process an event. As events come in, they scale out based on volume, and when there is no usage, they cease to exist
  - Event Stream: provides a constant stream of events to which functions subscribe and act upon. Events might be produced from external REST API calls, data changes, or any other source
  - Storage: any data storage provided and managed by the cloud platform. It might be simple disk storage, highly scalable K/V data stores, or any other kind
  - API Gateway: provided and managed by the cloud platform, used to relay external events to the functions in the system. Events might be REST or streaming

  [Diagram annotations: API calls from the external world (with built-in authentication and authorization) · direct data transformation from incoming API call · API calls transformed into an event stream · data changes transformed into an event stream · functions executed responding to events in any stream · functions change data but are themselves stateless]
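The relationship between functions, event streams and storage can be sketched in a few lines of Python. This is an in-process illustration only: the event shape, `handle_order_event` and `dispatch` are hypothetical, and a plain dictionary stands in for a managed key/value store.

```python
from typing import Any, Callable, Dict, Iterable, List

Event = Dict[str, Any]


def handle_order_event(event: Event, store: Dict[str, int]) -> Event:
    """Stateless function: everything it needs arrives in the event or the store."""
    sku = event["sku"]
    store[sku] = store.get(sku, 0) + event["quantity"]
    # The returned record could itself be published as a data-change event.
    return {"type": "stock_changed", "sku": sku, "total": store[sku]}


def dispatch(events: Iterable[Event],
             handler: Callable[[Event, Dict[str, int]], Event],
             store: Dict[str, int]) -> List[Event]:
    """Toy event stream: invoke the handler once per incoming event."""
    return [handler(event, store) for event in events]


if __name__ == "__main__":
    storage: Dict[str, int] = {}  # stand-in for platform-managed storage
    incoming = [{"sku": "book", "quantity": 2}, {"sku": "book", "quantity": 3}]
    print(dispatch(incoming, handle_order_event, storage))
```

Because the handler holds no state between invocations, the platform is free to run zero, one or many copies of it, which is what lets functions scale with event volume and disappear when idle.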

- 44. SERVICE MESH

- 45. SERVICE MESH

  A SERVICE MESH (*) is a way to control how different elements of a software system communicate and share data with one another. It has been rising in popularity as microservice-based systems have grown in size and complexity, since previous approaches to implementing outer architecture concerns are either limited or harder to get right.

  - Lower-level INFRASTRUCTURE layer to handle inter-service communications, monitoring, security and the rest of the outer architecture concerns
  - Cleaner SEPARATION OF CONCERNS between each service's logic and the infrastructure logic related to outer architecture concerns
  - PROXY instances, attached to every service instance, provide service mesh capabilities in a language- and framework-independent way
  - As a result, microservice-based systems are easier to configure, easier to observe and easier to manage through the so-called platform CONTROL PLANE

  (*) Service Mesh: https://www.redhat.com/en/topics/microservices/what-is-a-service-mesh

- 46. SERVICE MESH

  These are the main highlights of Service Mesh (*) capabilities around outer architecture concerns and platform orchestration:
  - Service Registry: proxies handle service registry and keep-alive signals, instead of polluting service code with registration logic
  - Traffic Routing: instead of multiple (hundreds of) load balancers, all traffic routing is handled centrally through the proxies
  - Load Balancing: sophisticated layer 7 load balancing which makes advanced deployment techniques trivial and finer grained
  - Service Resiliency and Fault Tolerance: proxies handle inter-service call failures and timeouts through a set of rules, not explicitly in service code
  - Distributed Access Control: applied not only at the perimeter of the network, but also with RBAC and encryption for inter-service calls
  - Observability: all logs, traces and metrics are automatically collected by proxies and published to the control plane

  (*) Service Mesh: https://www.redhat.com/en/topics/microservices/what-is-a-service-mesh

- 47. SERVICE MESH

  This diagram depicts how a service mesh like ISTIO (*) works, on top of the container platform and close to each service, handling all network interactions and observability.

  ISTIO IN A NUTSHELL:
  - ENVOY: proxy, deployed as a sidecar container inside each pod, which handles network traffic and embeds these features: service discovery, load balancing, network protocol proxy, TLS encryption, fault tolerance, fault injection, health checks, staged rollouts and telemetry broadcast
  - MIXER: component that enforces access control and usage policies, and collects telemetry data, which can be extended through adapters which interface with multiple environment backends
  - PILOT: high-level service discovery, traffic routing and resiliency management, abstracting the complexity of low-level and environment-specific APIs
  - GALLEY: configuration ingestion, validation, processing and distribution component, again abstracting environment-specific mechanisms
  - CITADEL: handles strong inter-service and end-user authentication, with built-in identity and credential management, upgrading unencrypted traffic inside the service mesh

  [Diagram: in the data plane, Service A and Service B communicate over HTTP, gRPC or TCP (optionally with mutual TLS) through their Envoy proxies. In the control plane, Pilot sends configuration data to proxies, Citadel sends TLS certificates to proxies, Mixer (with its Adapters) handles policy checks and telemetry, and Galley handles configuration data.]

  (*) Adapted from “What is Istio”: https://istio.io/docs/concepts/what-is-istio/

- 48. BLOCKCHAIN

- 49. BLOCKCHAIN

  BLOCKCHAIN is a collection of several technologies already existing but put together in a new fundamental way, enabling new solution approaches:
  - Distributed transactions
  - Consensus management, including the ability of parties to veto transactions
  - Journaling data stores (append-only)
  - Cryptography, at the lowest level, ensuring no tampering of information

  A key characteristic of Blockchain systems is that there are NO CENTRAL AUTHORITIES, although different parties may have different roles in the system, with varying abilities to run commands and to read information depending on the corresponding levels of clearance.

- 50. BLOCKCHAIN

  In BLOCKCHAIN there are three basic concepts required to understand how it works:
  - TRANSACTION: information stored in the system, typically an instalment of an agreement between two participating parties
  - BLOCK: a set of transactions grouped with a cryptographic unique identifier (hash) and the link to the previous block in the chain
  - NODE: computing resources where information is stored and distributed to other nodes (peers) in the network

  As there is no central authority in a Blockchain system, every single node contains a complete copy of all the transactions, building TRUST AND TRANSPARENCY across the peers.

  Being distributed, DATA CONSISTENCY IS EVENTUAL, and solutions have to be designed with that in mind, e.g. veto ability, offline nodes, integration of late transactions, etc.
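The BLOCK concept (transactions grouped under a hash, plus a link to the previous block) can be sketched in Python with the standard `hashlib` and `json` modules. This is a single-node illustration of the append-only, tamper-evident structure only; it leaves out consensus, peers and signatures, and the block layout and function names are assumptions.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(transactions: List[Dict[str, Any]], previous_hash: str) -> str:
    """SHA-256 over the block's transactions and the link to its predecessor."""
    payload = json.dumps({"tx": transactions, "prev": previous_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: List[Dict[str, Any]], transactions: List[Dict[str, Any]]) -> None:
    previous_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    chain.append({
        "tx": transactions,
        "prev": previous_hash,
        "hash": block_hash(transactions, previous_hash),
    })


def is_valid(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every hash and check every link, as any peer could do."""
    expected_prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["tx"], block["prev"]):
            return False
        expected_prev = block["hash"]
    return True
```

Since each hash covers the previous block's hash, changing an old transaction invalidates that block and every block after it, which is why a node holding a full copy can detect tampering on its own.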

- 51. ANY QUESTIONS?