Using Azure DevOps to Develop Microservice Applications

- 2. 2 Azure DevOps for Microservices App – 6 Months of Project Evolution
  - Igor Sychev, Solution Architect in MSTD BY
  - https://github.com/SychevIgor/pronet_docker

- 3. 3 Introduction to our project
  - ASP.NET Core based backend microservices
  - React.js based frontend app
  - The app should be hosted in Azure (AKS, managed Kubernetes)
  - Azure DevOps was “sold” as part of a fully Azure-native app development process
  - Azure Monitor (Application Insights and Log Analytics) was “sold” as the Azure-native monitoring tooling
  - No clear scope -> Time and Material type of project

- 4. 4 eShopOnContainers as a reference architecture
  - Used as a starting point, but at the time it did not cover CI/CD/DevOps/ALM questions: https://github.com/dotnet-architecture/eShopOnContainers/issues/949
  - Uses a mono repository (developed mainly by 1-2 devs at a time)

- 6. 6 Initial “trade-off” idea – Mono repository
  - Situation:
    - Domain boundaries were not identified, because the scope was not well defined
    - Team had no experience with Docker and microservices
    - Team (20+ devs/QA) distributed across 4 locations
  - Risk:
    - Too high a level of skills required to begin
    - High risk of a “slow start”
  - Risk mitigation:
    - Start from a mono repository and sacrifice CI/CD for 1-2 sprints
    - After 1-2 sprints, replace the mono repository with a set of repositories, one per microservice
  - The initial mono repo was converted to a meta repository with git submodules

- 7. 7 MonoRepo -> MonoBuild
  - The problem was not in the mono repository, but in the builds
    - Each commit/push generated a new build
    - Each build took 20 minutes
    - Include/exclude filters for folders did not help, because “docker-compose build” built and pushed all images to the registry at once
  - Can’t release microservices independently
  - Hard to test microservices at different versions with a MonoRepo

- 8. 8 Mono Build -> Mono Release problem
  - Can’t deploy microservices independently
  - Advice based on “git diffs” is too sophisticated and gives no 100% guarantee

- 10. 10 General Idea

- 11. 11 After 2 sprints: Meta Repository + Set of Micro Repositories

- 12. 12 Micro Repositories -> Microservices Build

- 13. 13 Build Monitoring Board
  - It’s hard to track CI/builds for each microservice independently
  - Dashboards solved this issue
  - P.S. Don’t stop a build; delete it. Otherwise it will be marked red in the report.

- 14. 14 Microservices Build -> Independent Releases

- 15. 15 Release Monitoring Board
  - It’s hard to track CD/releases for each microservice independently
  - Dashboards solved this issue

- 16. 16 Challenge of managing CI/CD
  - For 8 microservices: 8 CI pipelines for docker build, and 8 to create Helm charts
  - For 8 microservices: 8 CD pipelines, with 5 environments each
  - Q: What was the challenge?
  - A: Make all CI/CD pipelines identical, so they are easy and fast to modify and improve
  - Q: How?
  - A:
    - Standardize names across different “layers”: names of repositories should match names of build artifacts and of k8s Deployments/Services/Pods
    - Standardize repository structure (where the code lives, where the Helm charts live)
    - Clone CI/CD from one microservice, then replace the variables and the git repository
    - Use YAML based builds
    - Use Task Groups
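
The naming rule above can be illustrated with a small sketch. It is written in Python for brevity and is not taken from the project: the variable names (`serviceName`, `imageRepository`, `helmChartPath`), the `charts/` folder, and the registry host are all assumptions made for illustration. The point is only that every layer's name is derived from one service name, so a cloned pipeline differs in a single input.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ServiceNames:
    """Names used by the different layers for one microservice."""
    repository: str
    build_artifact: str
    k8s_deployment: str
    helm_chart_path: str


def names_for(service: str) -> ServiceNames:
    # Hypothetical convention: every layer reuses the lower-cased service name.
    key = service.strip().lower()
    return ServiceNames(
        repository=key,
        build_artifact=key,
        k8s_deployment=key,
        helm_chart_path=f"charts/{key}",  # assumed repository layout
    )


def pipeline_variables(service: str, registry: str) -> Dict[str, str]:
    # The only per-service input is `service`; everything else is derived.
    names = names_for(service)
    return {
        "serviceName": names.repository,
        "imageRepository": f"{registry}/{names.build_artifact}",
        "helmChartPath": names.helm_chart_path,
    }
```

Calling `pipeline_variables("Catalog", "example.azurecr.io")` would give the variable set for one cloned pipeline; the other seven would differ only in the service name passed in.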

- 17. 17 Small Tricks – variables from Libraries in Build

- 18. 18 Small Tricks – variables from Libraries in Release

- 19. 19 Small Tricks – Task Groups in Builds/Release

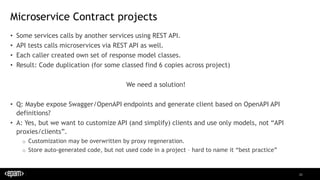

- 20. 20 Microservice Contract projects
  - Some services are called by other services via REST API
  - API tests call microservices via REST API as well
  - Each caller created its own set of response model classes
  - Result: code duplication (for some classes we found 6 copies across the project). We need a solution!
  - Q: Maybe expose Swagger/OpenAPI endpoints and generate clients from the OpenAPI definitions?
  - A: Yes, but we want to customize (and simplify) the API clients and use only the models, not the “API proxies/clients”
    - Customization may be overwritten when the proxy is regenerated
    - Storing auto-generated code that is not used in the project can hardly be called a “best practice”
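
A minimal sketch of the contract idea, in Python rather than the project's C#, and with a made-up `OrderResponse` model and JSON field names: the response model is defined once in a shared package, and both the calling service and the API tests import it instead of declaring their own copy.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class OrderResponse:
    """Hypothetical response model published once in a shared contract package."""
    order_id: str
    status: str

    @classmethod
    def from_json(cls, payload: str) -> "OrderResponse":
        # Map the wire format (camelCase keys, assumed) onto the shared model.
        data = json.loads(payload)
        return cls(order_id=data["orderId"], status=data["status"])


def caller_service_handles(payload: str) -> str:
    # A calling microservice reuses the shared model...
    return OrderResponse.from_json(payload).status


def api_test_checks(payload: str) -> bool:
    # ...and so does the API test, so there is one definition instead of six.
    return OrderResponse.from_json(payload).order_id != ""
```

When the owning service changes a field, only the contract package changes, and every consumer picks it up through a package version bump.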

- 21. 21 Nuget packages for Contract projects

- 22. 22 Nuget as simple as

- 23. 23 Nuget Package Build/Publish monitoring

- 24. 24 Tests/Code Coverage Board
  - It’s hard to track test pass rate and code coverage for each microservice independently
  - A dashboard solved this

- 27. 29 Designer based Builds (and Releases)
  - Initially we used Designer based builds, because “Click -> Click” is faster to start with
  - Designer based builds don’t require committing/pushing changes to a git repo
  - YAML based builds were introduced relatively recently, and we were not confident that they would not become yet another Silverlight or Windows Phone
  - YAML based releases were not available -> no consistency between CI and CD

- 28. 30 YAML based CI
  - At Build 2019 Microsoft showed “unified pipelines”, which prompted us to create YAML based builds
    - Migrating 5 builds took 2 hours (with no prior YAML build skills)
    - YAML builds give us 99.9% compatibility between microservices (1 variable is different)
    - We store YAML build definitions under source control -> CI as Code (Infrastructure as Code)

- 30. 32 Build in Container
  - Why, if it’s slower?!
    - Have you ever seen errors/bugs because the software on one machine was older/newer than on others?
    - Have you ever asked: what is this .dll doing here?
    - Do all your developers get 100% identical (cloned) machines?
    - “I can build my app in CI, but not locally!”
    - Concurrency tests may work locally but fail in Docker

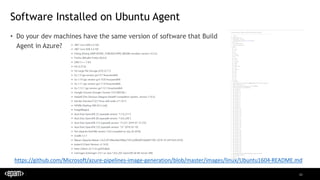

- 31. 33 Software Installed on Ubuntu Agent
  - Do your dev machines have the same software versions as the build agent in Azure? https://github.com/Microsoft/azure-pipelines-image-generation/blob/master/images/linux/Ubuntu1604-README.md

- 32. 34 Tests in Container
  - Why, if it’s slower?
    - Yes, but the apps will be executed in a container, not on your machine!

- 33. 35 Build/Test in Docker as simple as

- 35. 37 Run Microservices App Locally from Meta Repository
  - Multiple docker-compose files in the meta repository and in each microservice repository
  - Meta repo:
    - Run all
    - Run back
    - Run front
    - Run all tests
    - Run back tests
    - Run front tests
  - For each microservice:
    - Run microservice
    - Run microservice tests
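
One way to picture these "run" targets is as different combinations of compose files passed to a single command. The sketch below builds such command lines in Python; the file names and target names are hypothetical, and only the `docker-compose -f <file> ... <action>` form is standard CLI usage.

```python
from typing import Dict, List, Sequence, Tuple


def compose_command(files: Sequence[str], action: Sequence[str]) -> List[str]:
    """Build a docker-compose invocation that merges several compose files."""
    if not files:
        raise ValueError("at least one compose file is required")
    command = ["docker-compose"]
    for path in files:
        command += ["-f", path]  # later files override earlier ones
    return command + list(action)


# Hypothetical targets for a meta repository; file names are assumptions.
TARGETS: Dict[str, Tuple[List[str], List[str]]] = {
    "run-back": (["docker-compose.back.yml"], ["up"]),
    "run-front": (["docker-compose.front.yml"], ["up"]),
    "run-all": (["docker-compose.back.yml", "docker-compose.front.yml"], ["up"]),
}


def command_for(target: str) -> List[str]:
    files, action = TARGETS[target]
    return compose_command(files, action)
```

The resulting list can be handed to `subprocess.run` as-is, which avoids shell quoting problems.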

- 36. 38 Special override file to run docker-compose locally
  - Required to store the local port mapping
  - In the cluster we used ports 80/443, but locally we can’t map more than one microservice to the same host port -> we need a custom mapping from container ports to host ports
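
The port problem can be sketched as follows, assuming every container listens on port 80 and that host ports are handed out sequentially from an arbitrary starting number. The service names, the starting port 5001, and the function name are illustrative assumptions; the returned structure mirrors the `services: <name>: ports:` section of a compose override file.

```python
from typing import Dict, Iterable, List


def local_port_overrides(
    services: Iterable[str],
    first_host_port: int = 5001,  # assumed starting port, pick any free range
    container_port: int = 80,
) -> Dict[str, Dict[str, Dict[str, List[str]]]]:
    """Give each service a unique host port mapped to the same container port."""
    overrides: Dict[str, Dict[str, Dict[str, List[str]]]] = {"services": {}}
    # Sort names so the same service always gets the same host port.
    for offset, name in enumerate(sorted(services)):
        host_port = first_host_port + offset
        overrides["services"][name] = {"ports": [f"{host_port}:{container_port}"]}
    return overrides
```

For two hypothetical services, `local_port_overrides(["orders", "catalog"])` maps `catalog` to `5001:80` and `orders` to `5002:80`; the result can be serialized into the override file used only on developer machines.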

- 37. 39 Integration Tests in container

- 38. 40 Our approach with Docker-Compose
  - Build an image with the tests
  - Pull and run images with the dependencies
  - Pro:
    - Code is executed in a similar containerized environment
  - Con:
    - Execution is slower than without containerization

- 39. 41 Alternative using Docker.DotNet and testenvironment-docker
  - Pro:
    - Test infrastructure as code in C#
  - Con:
    - Requires more lines of code
    - To create containers, the container running the tests requires access to the Docker Engine API
    - To test the backend of a React application, a developer needs C# skills
  - https://github.com/Deffiss/testenvironment-docker

- 40. 42 Node.js unavailable, or why you must be able to build/deploy the app without CI/CD

- 41. 43 nodejs.org unavailable
  - Once, 24h before the release, CI started failing
  - Analysis showed that nodejs.org had stopped responding
  - There is no guarantee that the Node.js team will restore it in time under any SLA, because it is a free service
  - Conclusion: you should always know how to run CI/CD from localhost in such cases

- 42. 44 SonarQube and technical Debt journey

- 43. 45 Considerations
  - We will manage technical debt using SonarQube
  - Scans will happen inside a Docker image, just like builds/tests
  - EPAM’s SonarQube installation will be used
    - This project was assumed to be 100% independent from the EPAM environment, except for the Azure subscription, but the SonarQube installation was also excluded later during development
  - All microservices must be scanned and tracked (tests must also be included and covered)
  - 1 (ONE) SonarQube project will be used to track everything
    - Individual microservices will be tracked additionally as branches

- 44. 46

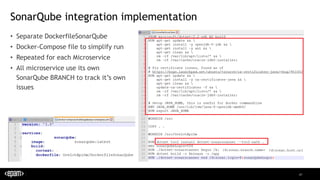

- 45. 47 SonarQube integration implementation
  - Separate DockerfileSonarQube
  - Docker-Compose file to simplify the run
  - Repeated for each microservice
  - Each microservice uses its own SonarQube BRANCH to track its own issues

- 46. 48 As an architect, I want to update issues from all microservices with one command
  - Run each collection sequentially:
  - Run all in one file:

- 47. 75 Infrastructure as Code ->CI/CD

- 48. 76 Infrastructure repository for ARM Templates
  - Azure infrastructure is modified only from Azure DevOps CD (releases)
  - Infrastructure is split into 2 parts:
    - DevOps infrastructure (Azure Container Registry, Azure Key Vault): 2 parameter sets (1 for all EPAM environments and 1 for the customer’s environment)
    - Main infrastructure (AKS, Application Insights, Log Analytics): 4+ parameter sets (dev/qa/uat/prod, etc.)

- 49. 78 ARM Template Validation in CI

- 50. 79 Failed ARM template validation example:
  - The network plugin for AKS can’t be modified
  - Delete and recreate
  - The problem was found in CI, without CD (a real deployment)

- 51. 83 Helm

- 52. 84 Each Microservice released via Helm

- 53. 85 Example of Helm template (used to generate k8s yaml file)

- 54. 86 Helm template validation
  - Neither Helm lint nor dry-run caught a silly mistake with a “ character in environment variables
  - It is unclear how to automatically validate Helm charts before deployment

- 55. 87 Helm Values per environment and Infrastructure as Code
  - Helm values per environment implement Infrastructure as Code
  - The release (CD) process becomes simpler across all microservices

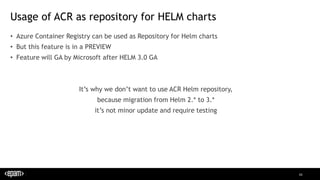

- 56. 88 Usage of ACR as repository for Helm charts
  - Azure Container Registry can be used as a repository for Helm charts
  - But this feature is in PREVIEW
  - Microsoft will make the feature GA after Helm 3.0 is GA
  - That is why we don’t want to use the ACR Helm repository: migration from Helm 2.* to 3.* is not a minor update and requires testing

- 57. 89 Helm: Why is the Tiller version important?!

- 58. 90 One day, our Release Pipelines started crashing https://status.dev.azure.com/_history

- 59. 91 Next day: Azure DevOps reported as healthy • Currently used in CD process: • Last succeeded:

- 60. 92 Why had the version changed? https://github.com/helm/helm/releases

- 61. 93 Tiller version on the cluster higher than Helm on the client
  - Once, we updated Tiller on the clusters
  - But some releases had been installed on Dev before the upgrade and were still awaiting approval and installation to QA

- 62. 94 Summary: Helm/Tiller version is important!
  - Helm higher than Tiller almost always will not work
  - Tiller higher than Helm will not work
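
A release pipeline could guard against this with a version check before deploying. The sketch below is an illustration in Python, not the project's actual pipeline step: it assumes the client and Tiller version strings have already been obtained somehow, and it takes the strictest reading of the summary above by requiring an exact match. The version numbers in the usage note are examples only.

```python
import re
from typing import Tuple


def parse_version(text: str) -> Tuple[int, int, int]:
    """Extract major.minor.patch from strings such as 'v2.14.1' or 'v2.14.1+g5270352'."""
    match = re.search(r"v?(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no semantic version found in {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def versions_compatible(helm_client: str, tiller: str) -> bool:
    # Assumption: treat any difference as incompatible, in either direction.
    return parse_version(helm_client) == parse_version(tiller)


def assert_release_allowed(helm_client: str, tiller: str) -> None:
    """Fail fast, before any chart is installed, if the versions differ."""
    if not versions_compatible(helm_client, tiller):
        raise RuntimeError(
            f"Helm client {helm_client} does not match Tiller {tiller}; "
            "pin the client version in the pipeline or upgrade Tiller"
        )
```

With this guard, `assert_release_allowed("v2.14.0", "v2.14.1")` stops the release with a readable message instead of a failure in the middle of a deployment.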

- 63. 103 Performance troubleshooting or why distributed logging is important

- 64. 104 Once, the team reported a performance issue
  - Application Insights had been added to the application for exactly such moments
  - We found a lot of nested API calls

- 65. 105 What’s inside the HTTP requests?
  - “Count_” should be equal to 1
  - 1 request for data per unique ID
  - How to solve it: cache the HTTP requests
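
The idea of one request per unique ID can be sketched in a language-neutral way. The Python below is an illustration, not the .NET Core code from the talk: `CachedClient` and its `fetch` callback are hypothetical names, and the cache has no expiry or size limit, which a real service would need.

```python
from typing import Callable, Dict


class CachedClient:
    """Wrap a fetch function so that each unique ID is requested only once."""

    def __init__(self, fetch: Callable[[str], dict]) -> None:
        self._fetch = fetch  # stand-in for the real HTTP call
        self._cache: Dict[str, dict] = {}
        self.request_count: Dict[str, int] = {}  # requests actually sent, per ID

    def get(self, item_id: str) -> dict:
        if item_id not in self._cache:
            # Cache miss: this is the only place a real request is made.
            self.request_count[item_id] = self.request_count.get(item_id, 0) + 1
            self._cache[item_id] = self._fetch(item_id)
        return self._cache[item_id]
```

However many times nested calls ask for the same ID, `request_count` stays at 1 for it, which is the count the slide says the telemetry query should show.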

- 66. 106 Simple example of how to do it in .NET Core

- 67. 125 Not clear how to test ModSecurity
  - OK, it’s clear how to test that ModSecurity is running
  - But does ModSecurity actually protect?