A data analyst's view of Bigdata

- 1. Introduction to Bigdata Venkat Reddy

- 2. Contents (Bigdata Analysis Course, Venkat Reddy)
  - What is Bigdata
  - Sources of Bigdata
  - What can be done with Bigdata?
  - Handling Bigdata
  - MapReduce
  - Hadoop
  - Hadoop components
  - Hadoop ecosystem
  - Bigdata example
  - Other bigdata use cases

- 3. How much time did it take?
  - Excel: Have you ever tried a pivot table on a 500 MB file?
  - SAS/R: Have you ever tried a frequency table on a 2 GB file?
  - Access: Have you ever tried running a query on a 10 GB file?
  - SQL: Have you ever tried running a query on a 50 GB file?

- 4. Can you think of…
  - What if we get a new data set like this every day?
  - What if we need to execute complex queries on this data set every day?
  - Does anybody really deal with this type of data set?
  - Is it possible to store and analyze this data?
  - Yes, Google deals with more than 20 PB of data every day.
  - Can you think of running a query on a 20,980,000 GB (about 20 PB) file?

- 5. Yes… it's true
  - Google processes 20 PB a day (2008)
  - The Wayback Machine has 3 PB + 100 TB/month (3/2009)
  - Facebook has 2.5 PB of user data + 15 TB/day (4/2009)
  - eBay has 6.5 PB of user data + 50 TB/day (5/2009)
  - CERN's Large Hadron Collider (LHC) generates 15 PB a year
  - That's right!

- 6. In fact, in a minute…
  - Email users send more than 204 million messages
  - Mobile Web receives 217 new users
  - Google receives over 2 million search queries
  - YouTube users upload 48 hours of new video
  - Facebook users share 684,000 bits of content
  - Twitter users send more than 100,000 tweets
  - Consumers spend $272,000 on Web shopping
  - Apple receives around 47,000 application downloads
  - Brands receive more than 34,000 Facebook 'likes'
  - Tumblr blog owners publish 27,000 new posts
  - Instagram users share 3,600 new photos
  - Flickr users, on the other hand, add 3,125 new photos
  - Foursquare users perform 2,000 check-ins
  - WordPress users publish close to 350 new blog posts
  - And these figures are from a year ago… Damn!!

- 7. What is a large file?
  - Traditionally, many operating systems and their underlying file system implementations used 32-bit integers to represent file sizes and positions. Consequently, no file could be larger than $2^{32}-1$ bytes (4 GB).
  - In many implementations the problem was exacerbated by treating the sizes as signed numbers, which further lowered the limit to $2^{31}-1$ bytes (2 GB).
  - Files larger than this, too large for 32-bit operating systems to handle, came to be known as large files.
  - If you are using a 32-bit OS, then 4 GB is a large file.
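The limits above follow directly from the width of the size field. A small Python check of the arithmetic, assuming sizes are counted in bytes and reported in binary gigabytes (GiB, $2^{30}$ bytes); `max_file_size` is a hypothetical helper, not part of any OS API:

```python
def max_file_size(bits: int, signed: bool) -> int:
    """Largest byte count an integer field of `bits` bits can represent."""
    # A signed integer spends one bit on the sign, which halves the range.
    usable_bits = bits - 1 if signed else bits
    return 2 ** usable_bits - 1


GIB = 2 ** 30  # bytes in one binary gigabyte (GiB)

for signed in (False, True):
    limit = max_file_size(32, signed)
    kind = "signed" if signed else "unsigned"
    print(f"{kind} 32-bit: {limit:,} bytes (~{limit / GIB:.2f} GiB)")
```

This prints 4,294,967,295 bytes (~4.00 GiB) for the unsigned case and 2,147,483,647 bytes (~2.00 GiB) for the signed one; a 64-bit field raises the unsigned limit to about 16 EiB.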

- 8. Definition of Bigdata
  - Sorry… there is no single standard definition…

- 9. Bigdata is any data that is difficult to:
  - Capture
  - Curate
  - Store
  - Search
  - Share
  - Transfer
  - Analyze
  - Visualize

- 10. Bigdata means
  - A collection of data sets so large and complex that it becomes difficult to process using on-hand database management tools or traditional data processing applications
  - "Big Data" is data whose scale, diversity, and complexity require new architecture, techniques, algorithms, and analytics to manage it and extract value and hidden knowledge from it…
  - BTW, is it Bigdata/big data/Big data/bigdata/BigData/Big Data?

- 11. Bigdata is not just about size
  - Volume
    - Data volumes are becoming unmanageable
  - Variety
    - Data complexity is growing; more types of data are captured than previously
  - Velocity
    - Some data is arriving so rapidly that it must either be processed instantly or lost. This is a whole subfield called "stream processing"

- 12. Types of data
  - Relational data (tables/transactions/legacy data)
  - Text data (Web)
  - Semi-structured data (XML)
  - Graph data
    - Social network, Semantic Web (RDF), …
  - Streaming data
    - You can only scan the data once
  - Text, numerical, images, audio, video, sequences, time series, social media data, multi-dimensional arrays, etc.
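Because streaming data can only be scanned once, any statistic has to be updated record by record without keeping the data around. A minimal Python sketch, assuming numeric records arrive from any iterable; it uses Welford's online algorithm, a standard technique the slides do not name, and the class name `RunningStats` is hypothetical:

```python
from typing import Iterable


class RunningStats:
    """Single-pass count, mean and variance (Welford's online algorithm)."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        # Each record is seen exactly once and then discarded.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        # Population variance; 0.0 until at least one value has been seen.
        return self._m2 / self.count if self.count else 0.0


def summarize(stream: Iterable[float]) -> RunningStats:
    stats = RunningStats()
    for value in stream:
        stats.update(value)
    return stats


# A generator stands in for a live stream: it can only be consumed once.
stats = summarize(x for x in [2, 4, 4, 4, 5, 5, 7, 9])
print(stats.count, stats.mean, stats.variance)
```

Memory use stays constant no matter how long the stream is, which is the property that matters when the data cannot be stored first.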

- 13. What can be done with Bigdata?
  - Social media brand value analytics
  - Product sentiment analysis
  - Customer buying preference predictions
  - Video analytics
  - Fraud detection
  - Aggregation and statistics
    - Data warehouse and OLAP
  - Indexing, searching, and querying
    - Keyword-based search
    - Pattern matching (XML/RDF)
  - Knowledge discovery
    - Data mining
    - Statistical modeling

- 14. Ok… analysis on this bigdata can give us awesome insights
  - But datasets are huge, complex and difficult to process
  - What is the solution?

- 15. Handling bigdata: Parallel computing
  - Imagine a 1 GB text file containing all the status updates on Facebook in a day
  - Now suppose that simply counting the number of rows takes 10 minutes
    - `Select count(*) from fb_status`
  - What do you do if you have 6 months of data, a file of about 200 GB, and you still want the result in 10 minutes?
  - Parallel computing?
    - Put multiple CPUs in a machine (100?)
    - Write code that calculates 200 counts in parallel and finally sums them up
    - But you need a supercomputer
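The "200 parallel counts, then sum" idea can be sketched in Python. This is a single-machine illustration under stated assumptions: the file is modeled as an in-memory list of lines, and `split_into_chunks`, `count_rows` and `parallel_count` are hypothetical names. Threads keep the example self-contained; in CPython, CPU-bound counting would need separate processes (or separate machines) to get a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence


def split_into_chunks(lines: Sequence[str], n_chunks: int) -> List[Sequence[str]]:
    """Cut the data into at most n_chunks contiguous pieces of similar size."""
    size = max(1, -(-len(lines) // n_chunks))  # ceiling division, at least 1
    return [lines[i:i + size] for i in range(0, len(lines), size)]


def count_rows(chunk: Sequence[str]) -> int:
    # The per-chunk job: the local equivalent of count(*) on one piece.
    return sum(1 for _ in chunk)


def parallel_count(lines: Sequence[str], n_workers: int) -> int:
    chunks = split_into_chunks(lines, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        partial_counts = list(pool.map(count_rows, chunks))
    return sum(partial_counts)  # final step: add up the partial counts


status_updates = [f"status {i}" for i in range(1000)]
print(parallel_count(status_updates, n_workers=8))  # 1000
```

Here the 1,000 lines are cut into eight chunks of 125, each chunk is counted independently, and the eight partial counts are summed.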

- 16. Handling bigdata: Is there a better way?
  - Until about 1985, computers were large and expensive, and there was no practical way to connect them, so they operated independently. All systems were centralized systems.
    - So multi-core systems or supercomputers were the only options for bigdata problems
  - After 1985, powerful microprocessors and high-speed computer networks (LANs, WANs) arrived, which led to distributed systems
  - Now that we have distributed systems, in which a collection of independent computers appears to its users as a single coherent system, can we use some cheap computers and process our bigdata quickly?

- 17. Distributed computing
  - We want to cut the data into small pieces and place them on different machines
  - Divide the overall problem into small tasks and run these small tasks locally
  - Finally, collate the results from the local machines
  - So we want to process our bigdata with a parallel programming model and an associated implementation
  - This is known as MapReduce

- 18. MapReduce: Programming model
  - Data is processed using special map() and reduce() functions
  - The map() function is called on every item in the input and emits a series of intermediate key/value pairs (local calculation)
  - All values associated with a given key are grouped together
  - The reduce() function is called on every unique key and its value list, and emits a value that is added to the output (final organization)
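The three steps above (map, group by key, reduce) can be simulated in a single Python process with the classic word-count example. This is a sketch of the programming model only, not Hadoop's Java API; `map_fn`, `shuffle`, `reduce_fn` and `map_reduce` are hypothetical names, and a real framework would run many mappers and reducers on different machines.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_fn(line: str) -> Iterator[Tuple[str, int]]:
    # Local calculation: emit (word, 1) for every word in one input item.
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    # Group all intermediate values that share the same key.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_fn(key: str, values: List[int]) -> Tuple[str, int]:
    # Final organization: one output value per unique key.
    return key, sum(values)


def map_reduce(lines: Iterable[str]) -> Dict[str, int]:
    intermediate = (pair for line in lines for pair in map_fn(line))
    grouped = shuffle(intermediate)
    return dict(reduce_fn(key, values) for key, values in grouped.items())


docs = ["big data is big", "data is everywhere"]
print(map_reduce(docs))  # {'big': 2, 'data': 2, 'is': 2, 'everywhere': 1}
```

Because map_fn looks at one line at a time and reduce_fn looks at one key at a time, both steps can be spread across machines without changing their code.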

- 19. Mummy's MapReduce

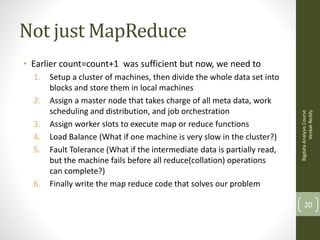

- 20. Not just MapReduce
  - Earlier, `count = count + 1` was sufficient, but now we need to:
    1. Set up a cluster of machines, then divide the whole data set into blocks and store them on the local machines
    2. Assign a master node that takes charge of all metadata, work scheduling and distribution, and job orchestration
    3. Assign worker slots to execute map or reduce functions
    4. Load balance (what if one machine in the cluster is very slow?)
    5. Fault tolerance (what if the intermediate data is partially read, but the machine fails before all reduce (collation) operations can complete?)
    6. Finally, write the MapReduce code that solves our problem
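Load balancing and fault tolerance (items 4 and 5) are what a framework handles for you. The toy Python simulation below is not Hadoop's actual scheduler; it only assumes that a master hands tasks out round-robin and re-executes a task on another worker when one fails. `run_with_retries`, `WorkerFailure`, `healthy` and `crashed` are hypothetical names.

```python
from typing import Callable, Dict, List


class WorkerFailure(Exception):
    """Raised by a simulated worker whose machine goes down mid-task."""


def run_with_retries(tasks: Dict[str, int],
                     workers: List[Callable[[int], int]],
                     max_attempts: int = 3) -> Dict[str, int]:
    """Toy master node: assign tasks round-robin and re-run failed ones."""
    results: Dict[str, int] = {}
    next_worker = 0
    for task_id, payload in tasks.items():
        for _ in range(max_attempts):
            worker = workers[next_worker % len(workers)]
            next_worker += 1  # round-robin spreads the load across workers
            try:
                results[task_id] = worker(payload)
                break
            except WorkerFailure:
                continue  # re-execute the same task on the next worker
        else:
            raise RuntimeError(f"task {task_id} failed {max_attempts} times")
    return results


def healthy(x: int) -> int:
    return x * x


def crashed(x: int) -> int:
    raise WorkerFailure("machine went down")


print(run_with_retries({"t1": 2, "t2": 3, "t3": 4}, [healthy, crashed]))
```

Every task still completes even though half of the workers always crash; only when all attempts fail does the job give up.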

- 21. Ok… analysis on bigdata can give us awesome insights
  - But datasets are huge, complex and difficult to process
  - I found a solution: distributed computing, or MapReduce
  - But it looks like this data storage and parallel processing is complicated
  - What is the solution?

- 22. Hadoop
  - Hadoop is a bunch of tools; it has many components. HDFS and MapReduce are the two core components of Hadoop
  - HDFS: Hadoop Distributed File System
    - Makes it easy to store the data on commodity hardware
    - Built to expect hardware failures
    - Intended for large files and batch inserts
  - MapReduce
    - For parallel processing
  - So Hadoop is a software platform that lets one easily write and run applications that process bigdata

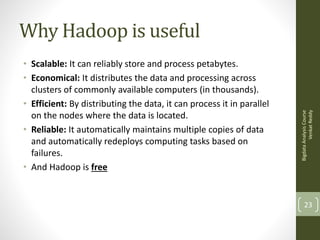
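To illustrate how keeping several copies of each block lets HDFS expect hardware failures, here is a toy Python model. It assumes a replication factor of 3 (the HDFS default) and simple round-robin placement; real HDFS uses a rack-aware placement policy, and `place_replicas` and `readable_after_failure` are hypothetical names.

```python
from typing import Dict, List, Set


def place_replicas(n_blocks: int, nodes: List[str],
                   replication: int = 3) -> Dict[int, List[str]]:
    """Toy placement: each block's replicas go to `replication` distinct nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    return {
        block: [nodes[(block + r) % len(nodes)] for r in range(replication)]
        for block in range(n_blocks)
    }


def readable_after_failure(placement: Dict[int, List[str]],
                           failed: Set[str]) -> bool:
    # A block survives if at least one of its replicas is on a healthy node.
    return all(any(node not in failed for node in replicas)
               for replicas in placement.values())


nodes = ["node1", "node2", "node3", "node4"]
placement = place_replicas(n_blocks=6, nodes=nodes)
print(placement[0])                                  # ['node1', 'node2', 'node3']
print(readable_after_failure(placement, {"node2"}))  # True
```

With three replicas on distinct nodes, any two machines can fail and every block is still readable from the third copy.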

- 23. Why Hadoop is useful
  - Scalable: It can reliably store and process petabytes.
  - Economical: It distributes the data and processing across clusters of commonly available computers (numbering in the thousands).
  - Efficient: By distributing the data, it can process it in parallel on the nodes where the data is located.
  - Reliable: It automatically maintains multiple copies of data and automatically redeploys computing tasks when failures occur.
  - And Hadoop is free

- 24. So what is Hadoop?
  - Hadoop is a platform/framework
    - which allows the user to quickly write and test distributed systems
    - which is efficient at automatically distributing the data and work across machines
  - Hadoop is not Bigdata
  - Hadoop is not a database

- 25. Ok… analysis on bigdata can give us awesome insights
  - But datasets are huge, complex and difficult to process
  - I found a solution: distributed computing, or MapReduce
  - But it looks like this data storage and parallel processing is complicated
  - OK, I can use the Hadoop framework… but I don't know Java; how do I write MapReduce programs?

- 26. MapReduce made easy
  - Hive:
    - Hive is for data analysts with strong SQL skills, providing an SQL-like interface and a relational data model
    - Hive uses a language called HiveQL, which is very similar to SQL
    - Hive translates queries into a series of MapReduce jobs
  - Pig:
    - Pig is a high-level platform for processing big data on Hadoop clusters
    - Pig consists of a data flow language, called Pig Latin, for writing queries on large datasets, and an execution environment for running programs from a console
    - Pig Latin programs consist of a series of dataset transformations that are converted, under the covers, into a series of MapReduce programs
  - Mahout:
    - Mahout is an open-source machine learning library that facilitates building scalable machine learning applications

- 27. Hadoop ecosystem

- 28. Bigdata ecosystem

- 29. Bigdata example
  - The business problem:
    - Analyze this week's Stack Overflow data (http://stackoverflow.com/)
    - What are the most popular topics this week?
  - Approach:
    - Find some simple descriptive statistics for each field
      - Total questions
      - Total unique tags
      - Frequency of each tag, etc.
    - The tag with the maximum frequency is the most popular topic
    - Let's use Hadoop to find these values, since we can't rapidly process this data with the usual tools
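The descriptive statistics listed in the approach can be computed on a small sample in plain Python before scaling them up. This sketch assumes a hypothetical tab-separated record layout (question text, then space-separated tags); the real dump's format is not shown in the slides, and `parse` and `tag_statistics` are hypothetical names. On Hadoop, the tag counting would become a map step emitting `(tag, 1)` and a reduce step summing the ones.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def parse(record: str) -> Tuple[str, List[str]]:
    # Hypothetical layout: "<question text>\t<space-separated tags>".
    question, _, tags = record.partition("\t")
    return question, tags.split()


def tag_statistics(records: Iterable[str]) -> Dict[str, object]:
    total_questions = 0
    tag_counts: Counter = Counter()
    for record in records:
        _, tags = parse(record)
        total_questions += 1
        tag_counts.update(tags)  # on Hadoop: map emits (tag, 1), reduce sums
    top = tag_counts.most_common(1)
    return {
        "total_questions": total_questions,
        "unique_tags": len(tag_counts),
        "tag_frequency": dict(tag_counts),
        "most_popular_tag": top[0][0] if top else None,
    }


sample = [
    "How do I free memory?\tc pointers",
    "What is a closure?\tjavascript",
    "Segfault inside a loop\tc",
]
print(tag_statistics(sample))
```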

- 30. Bigdata example: Dataset
  - A 7 GB text file containing questions and their respective tags

- 31. Move the dataset to HDFS
  - The file size is 6.99 GB; it is automatically cut into several pieces (blocks), each 64 MB in size
  - This can be done with a simple command: `bin/hadoop fs -copyFromLocal /home/final_stack_data stack_data`
  - *The data was later copied into a Hive table

- 32. Data in HDFS: Hadoop Distributed File System
  - Each block is 64 MB and the total file size is 7 GB, so there are 112 blocks in total (7 × 1024 MB / 64 MB = 112)
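The block count is a ceiling division of the file size by the block size. A short Python check, assuming 1 GB = 1024 MB as on the slide; `hdfs_block_count` is a hypothetical helper. The 64 MB figure matches this example (and the Hadoop 1.x default); later Hadoop versions default to 128 MB.

```python
import math

BLOCK_SIZE_MB = 64  # block size used in this example


def hdfs_block_count(file_size_gb: float, block_size_mb: int = BLOCK_SIZE_MB) -> int:
    # Round up: a final partial block still counts as a block,
    # although it only uses the disk space it actually needs.
    return math.ceil(file_size_gb * 1024 / block_size_mb)


print(hdfs_block_count(7))                     # 112
print(hdfs_block_count(6.99))                  # 112
print(hdfs_block_count(7, block_size_mb=128))  # 56
```

Both the rounded 7 GB and the exact 6.99 GB give 112 blocks, because the last, partially filled block is still counted.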

- 33. Processing the data
  - What is the total number of entries in this file?
  - Here is our query
  - MapReduce is about to start

- 34. MapReduce jobs in progress

- 35. The execution time
  - The result and the runtime
  - Note: I ran Hadoop on a very basic machine (1.5 GB RAM, i3 processor, 32-bit virtual machine).
  - This example is just for demo purposes; the same query would take much less time on a multi-node cluster setup.

- 36. Bigdata example: Results
  - The query result shows that there are nearly 6 million Stack Overflow questions and tags
  - Similarly, we can run other MapReduce jobs on the tags to find the most frequent topics
  - 'C' happens to be the most popular tag
  - It took around 15 minutes to get these insights

- 37. Advanced analytics…
  - In the above example, we have the Stack Overflow questions and their corresponding tags
    - Can we use a supervised machine learning technique to predict the tags for new questions?
    - Can you write the MapReduce code for the Naïve Bayes algorithm/random forest?
  - How does Wikipedia highlight some words in your text as hyperlinks?
  - How can YouTube suggest relevant tags after you upload a video?
  - How is Amazon recommending new products to you?
  - How are companies leveraging bigdata analytics?
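As a starting point for the tag-prediction question, here is a minimal single-machine multinomial Naïve Bayes sketch in Python with add-one (Laplace) smoothing. The training examples are hypothetical, and `train`/`predict` are illustrative names, not a library API. Training is pure counting, which is why it maps naturally onto MapReduce: a mapper could emit `((tag, word), 1)` pairs and a reducer could sum them.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple

Model = Tuple[Counter, Dict[str, Counter], Set[str]]


def train(examples: List[Tuple[str, str]]) -> Model:
    """Training is just counting, which maps naturally onto map/reduce."""
    tag_counts: Counter = Counter()                          # questions per tag
    word_counts: Dict[str, Counter] = defaultdict(Counter)   # words per tag
    vocabulary: Set[str] = set()
    for text, tag in examples:
        words = text.lower().split()
        tag_counts[tag] += 1
        word_counts[tag].update(words)
        vocabulary.update(words)
    return tag_counts, word_counts, vocabulary


def predict(model: Model, text: str) -> str:
    tag_counts, word_counts, vocabulary = model
    total = sum(tag_counts.values())
    best_tag, best_score = "", -math.inf
    for tag, n_questions in tag_counts.items():
        # log P(tag) + sum of log P(word | tag), with add-one (Laplace) smoothing
        score = math.log(n_questions / total)
        tag_total = sum(word_counts[tag].values())
        for word in text.lower().split():
            if word not in vocabulary:
                continue  # ignore words never seen during training
            count = word_counts[tag][word]
            score += math.log((count + 1) / (tag_total + len(vocabulary)))
        if score > best_score:
            best_tag, best_score = tag, score
    return best_tag


model = train([
    ("malloc free pointer segfault", "c"),
    ("pointer arithmetic array", "c"),
    ("list comprehension dict", "python"),
    ("pip install module import", "python"),
])
print(predict(model, "segfault when I free a pointer"))  # c
print(predict(model, "cannot import module"))            # python
```

Working in log space avoids multiplying many small probabilities, which would underflow on real question texts.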

- 38. Bigdata use cases
  - Amazon has been collecting customer information for years: not just addresses and payment information, but the identity of everything that a customer has ever bought or even looked at. While dozens of other companies do that too, Amazon is doing something remarkable with its data: using it to build customer relationships.
  - Corporations and investors want to track the consumer market as closely as possible to spot trends that will inform their next product launches. LinkedIn is a bank of data not just about people, but about how people are making their money, what industries they are working in and how they connect to each other.
  - Ford collects and aggregates data from the 4 million vehicles that use in-car sensing and remote app management software. The data allows Ford to glean information on a range of issues, from how drivers are using their vehicles to the driving environment, which could help it improve the quality of its vehicles.

- 39. Bigdata use cases
  - Largest retail company in the world, number 1 on the Fortune 500
    - Largest sales data warehouse: Retail Link, a $4 billion project (1991)
    - One of the largest "civilian" data warehouses in the world: 460 terabytes in 2004, when the Internet was half as large
    - Defines data science: What do hurricanes, strawberry Pop-Tarts, and beer have in common?
  - Includes financial and marketing applications, but with a special focus on industrial uses of big data
    - When will this gas turbine need maintenance?
    - How can we optimize the performance of a locomotive?
    - What is the best way to make decisions about energy finance?
  - AT&T has 300 million customers. A team of researchers is working to turn data collected through the company's cellular network into a trove of information for policymakers, urban planners and traffic engineers. The researchers want to see how the city changes hourly by looking at calls and text messages relayed through cell towers around the region, noting that certain towers see more activity at different times.

- 40. Thank you. -Venkat Reddy