Big data.pptx

- 1. BIG DATA

- 2. WHAT IS BIG DATA? • Big data refers to data sets that are too large or complex to be handled by traditional data-processing application software. • Big data is also defined as data that contains greater variety, arriving in increasing volumes and with more velocity.

- 3. HISTORY OF BIG DATA • 1999: VMware began selling VMware Workstation, allowing users to set up virtual machines. • 2002-2006: Amazon Web Services (AWS) launched as a free service in 2002 and, by 2006, had started offering web-based computing infrastructure services, now known as cloud computing. • 2018: Leading data center operators started the migration to 400G data speeds. • 2021: Data center speeds have exceeded 1,000G.

- 4. HISTORY OF BIG DATA • 1983: IBM released its first commercially available relational database, DB2. • 1989: Implementation of the Python programming language began. • 1998: Carlo Strozzi developed NoSQL, an open-source relational database.

- 5. SMALL DATA VS BIG DATA Small data: • Data size is in the range of tens or hundreds of gigabytes. • Contains less noise, because data is collected in a controlled manner. • Requires batch-oriented processing pipelines. • Database: SQL. • Structured data in tabular format with a fixed schema (relational). • Data can be optimized manually (human-powered). Big data: • Data size runs to terabytes and beyond. • Data quality is not guaranteed. • Uses both batch and stream processing pipelines. • Database: NoSQL. • A wide variety of data, including tabular data, text, audio, images, video, logs, JSON, etc. (non-relational). • Requires machine learning techniques for data optimization.
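
The difference between batch and stream processing mentioned on this slide can be illustrated with a minimal Python sketch. The function names and the sample `readings` are hypothetical and chosen only for illustration; real pipelines would typically rely on a dedicated processing framework rather than plain functions.

```python
from typing import Iterable, Iterator, List


def batch_mean(values: List[float]) -> float:
    """Batch style: the whole data set is available before processing starts."""
    return sum(values) / len(values)


def streaming_mean(stream: Iterable[float]) -> Iterator[float]:
    """Stream style: update the result as each value arrives, keeping constant state."""
    count = 0
    mean = 0.0
    for value in stream:
        count += 1
        mean += (value - mean) / count  # incremental update of the running mean
        yield mean


# Hypothetical sensor readings used only for this example.
readings = [10.0, 20.0, 30.0, 40.0]

batch_result = batch_mean(readings)
stream_results = list(streaming_mean(readings))

print(batch_result)
print(stream_results)
```

The batch version needs all values in memory at once, while the streaming version yields an up-to-date answer after every value, which is why it suits data that never stops arriving.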

- 6. Current Situation Of The Digital World

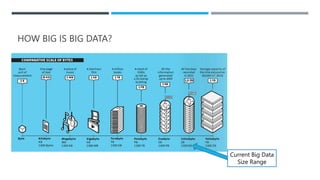

- 7. HOW BIG IS BIG DATA? Current Big Data Size Range

- 8. 3 V’S OF BIG DATA • Volume: The amount of data matters. With big data, you’ll have to process high volumes of low-density, unstructured data. This can be data of unknown value, such as Twitter data feeds, clickstreams on a web page or a mobile app, or sensor-enabled equipment. • Velocity: Velocity is the fast rate at which data is received and (perhaps) acted on. Normally, the highest-velocity data streams directly into memory rather than being written to disk. Some internet-enabled smart products operate in real time or near real time and will require real-time evaluation and action. • Variety: Variety refers to the many types of data that are available. Traditional data types were structured and fit neatly in a relational database. With big data, data also comes in new unstructured data types.

- 9. 5 V’S OF BIG DATA

- 10. DATA ANALYTICS PROCESS • Big data analytics describes the process of uncovering trends, patterns, and correlations in large amounts of raw data to help make data-informed decisions. • Hadoop, Spark, and NoSQL databases were created for the storage and processing of big data. • Steps: Collect Data, Process Data, Clean Data, Analyze Data.
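
The four steps named on this slide can be sketched as a small pipeline, assuming the order collect, process, clean, analyze. This is an illustration using only the Python standard library; the `RAW_LINES` sample data and all function names are hypothetical and do not come from the slides.

```python
import json
from collections import Counter

# Hypothetical raw clickstream lines; one is malformed and one has a missing page.
RAW_LINES = [
    '{"user": "a", "page": "/home"}',
    '{"user": "b", "page": "/pricing"}',
    'not valid json',
    '{"user": "a", "page": null}',
    '{"user": "c", "page": "/home"}',
]


def collect():
    """Collect: gather raw data from a source (here, an in-memory list)."""
    return list(RAW_LINES)


def process(lines):
    """Process: parse raw text into records, skipping lines that are not valid JSON."""
    records = []
    for line in lines:
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            continue
    return records


def clean(records):
    """Clean: drop records whose 'page' field is missing or empty."""
    return [record for record in records if record.get("page")]


def analyze(records):
    """Analyze: count page views to surface the most visited pages."""
    return Counter(record["page"] for record in records)


page_counts = analyze(clean(process(collect())))
print(page_counts.most_common())
```

Keeping each step as its own function makes it easy to swap one stage, for example replacing the in-memory source with a file or a message queue, without touching the others.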

- 11. BIG DATA WORKFLOW • Integrate: Big data brings together data from many disparate sources and applications. Traditional data integration mechanisms (ETL) generally aren’t up to the task. During integration, you need to bring in the data, process it, and make sure it’s formatted and available in a form that your business analysts can get started with. • Manage: Big data requires storage. Your storage solution can be in the cloud, on premises, or both. Many people choose their storage solution according to where their data is currently residing. The cloud is gradually gaining popularity because it supports your current compute requirements and enables you to spin up resources as needed. • Analyze: Your investment in big data pays off when you analyze and act on your data. Get new clarity with a visual analysis of your varied data sets. Build data models with machine learning and artificial intelligence.
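
As a rough illustration of the integrate step, the sketch below brings records from two differently shaped sources into one common format before analysis. The source contents, field names, and helper functions are all hypothetical; a production system would usually use dedicated integration tooling rather than hand-written converters.

```python
import csv
import io
import json

# Two hypothetical sources describing the same kind of event with different schemas.
CSV_SOURCE = "customer_id,amount\n1,19.99\n2,5.00\n"
JSON_SOURCE = '[{"customer": 2, "total": "7.50"}, {"customer": 3, "total": "12.00"}]'


def from_csv(text):
    """Read CSV rows and convert them to the common record format."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        {"customer_id": int(row["customer_id"]), "amount": float(row["amount"])}
        for row in reader
    ]


def from_json(text):
    """Read JSON objects and rename their fields to the common record format."""
    return [
        {"customer_id": int(item["customer"]), "amount": float(item["total"])}
        for item in json.loads(text)
    ]


# Integrate: one list of records with a single, consistent schema.
unified = from_csv(CSV_SOURCE) + from_json(JSON_SOURCE)

# Analyze: total spend per customer across both sources.
totals = {}
for record in unified:
    customer = record["customer_id"]
    totals[customer] = totals.get(customer, 0.0) + record["amount"]

print(totals)
```

Once every source is mapped to the same record shape, the analysis code no longer needs to know where a record came from.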

- 12. IMPACT OF BIG DATA • When processed appropriately, big data provides useful, up-to-date information and insights that help businesses make smarter and faster decisions. • Big data also has a huge impact on today’s workforce. • A whitepaper by IDC, sponsored by Seagate, predicted that the global datasphere will reach 175 ZB by 2025. • Big data is the future of business and also has a positive impact on society, the economy, and the workforce. • The use of big data is leading to more jobs, new standards, and new regulatory structures.

- 13. BIG DATA MARKET FORECAST • The big data market is projected to grow from USD 162.6 billion in 2021 to USD 273.4 billion in 2026, at a compound annual growth rate (CAGR) of 11.0% during the forecast period. • Growth drivers include the rise in data connectivity through cloud computing and the incorporation of digital transformation into top-level strategies. • The massive growth of data and an increase in the number of mobile apps and IoT devices are also contributing to the growth of the big data market.
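
The CAGR quoted on this slide can be checked with the standard formula CAGR = (end / start)^(1 / years) - 1. The short sketch below applies it to the slide's own figures; the `cagr` helper is a hypothetical name introduced only for this example.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the slide: USD 162.6 billion (2021) to USD 273.4 billion (2026).
growth = cagr(162.6, 273.4, 2026 - 2021)

print(f"{growth:.1%}")
```

The result rounds to 11.0%, which agrees with the rate stated in the forecast.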

- 15. BENEFITS OF BIG DATA • Better decision making • Greater innovation • Improvements in the education sector • Product price optimization • Recommendation engines • Life-saving applications in the healthcare industry

- 16. FUTURE OF BIG DATA • Data volumes will continue to increase and migrate to the cloud. • Machine learning will continue to change the landscape. • Data scientists and CDOs (Chief Data Officers) will be in high demand. • Privacy will remain a hot issue. • Fast data and actionable data will come to the forefront.

- 17. THANK YOU