Building an MLOps Platform Around MLflow to Enable Model Productionalization in Just a Few Minutes

- 1. Building an MLOps platform around MLflow to enable model productionalization in just a few minutes. Milan Berka, ML Engineer @ DataSentics

- 2. Agenda
  - I. A few words about DataSentics
  - II. Motivation: common data analytics problems we encounter
  - III. MLOps: how we understand it and what it means for getting a data scientist’s model into production within our platform
  - IV. Demo
  - V. Lessons learned and next steps

- 3. A few words about us
  Agile Data Analytics platform. Make data science and machine learning have a real impact on organizations across the world: demystify the hype and black magic surrounding AI/ML, and bring to life transparent, production-level data science solutions and products that deliver tangible impact and innovation.
  European Data Science Center of Excellence based in Prague:
  - Machine learning and cloud data engineering boutique
  - Helping customers build end-to-end data solutions in the cloud
  - Incubator of ML-based products
  - 80 specialists (data science, data/software engineering)
  - Partner of Databricks & Microsoft

- 4. Problems we commonly encounter, both in our own data-driven products and at our customers:
  - We want a small agile team of data scientists and engineers to be able to prototype new advanced analytics use cases using diverse internal and external data in weeks, and bring them into production in a few months.
  - We need high-quality data for model development, and we need access to that data! We want a feature store!
  - We don’t want to buy magical AI black-box solutions. We want our own open platform.
  - We want to focus on the use cases and logic, not on DevOps and plumbing.
  - We put together a team of data scientists and they create an ML model; everyone praises how well it performs, yet it never gets beyond the experimentation phase and never really affects anything.
  - As data scientists, we feel that software engineers and software architects sometimes do not understand us!
  - Data scientists need real production data for experimentation and model development.
  - Model != code: models take different skills to build and different tests to validate!
  - Sometimes it takes hours to get a reasonable model; sometimes it takes weeks or more!
  - There is a rich offering of cloud-based services that claim to address these problems, but some stitching is always required to make them work.
  To combat these problems, we assembled a dedicated team, TechSentics, whose goal is to systematically scale the technical quality of data-driven products and to deliver ML systems that tackle these problems in the context of enterprise security and other processes.

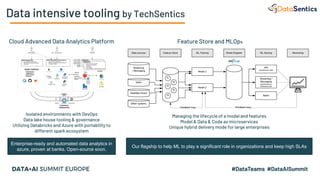

- 5. Data-intensive tooling by TechSentics
  - Cloud Advanced Data Analytics Platform; Feature Store and MLOps; isolated environments with DevOps; data lakehouse tooling & governance
  - Utilizing Databricks and Azure, with portability to other Spark ecosystems
  - Managing the lifecycle of models and features
  - Model, data & code as microservices
  - Unique hybrid delivery mode for large enterprises
  - Enterprise-ready, automated data analytics in Azure, proven at banks. Open-source soon.
  - Our flagship for helping ML play a significant role in organizations while keeping SLAs high

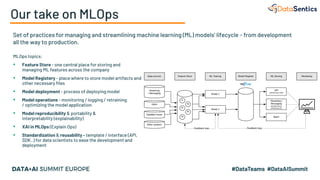

- 6. Our take on MLOps
  MLOps is a set of practices for managing and streamlining the lifecycle of machine learning (ML) models, from development all the way to production. MLOps topics:
  - Feature Store: one central place for storing and managing ML features across the company
  - Model Registry: a place to store model artifacts and other necessary files
  - Model deployment: the process of deploying a model into a target environment
  - Model operations: monitoring, logging, retraining, and optimizing the model application
  - Model reproducibility, portability, and interpretability (explainability)
  - XAI in MLOps (ExplainOps)
  - Standardization & reusability: templates and interfaces (API, SDK, etc.) that ease development and deployment for data scientists
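
To make the Model Registry topic more concrete, here is a minimal in-memory sketch, assuming a registry that versions model artifacts and moves each version through lifecycle stages. The stage names follow the ones MLflow's Model Registry has historically used (None, Staging, Production, Archived), and archiving the previous Production version on promotion is similar in spirit to MLflow's `archive_existing_versions` option. The `ModelRegistry` class, its methods, and the artifact paths are hypothetical and are not MLflow's API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

# Stage names modelled on MLflow Model Registry stages (illustrative assumption).
STAGES = ("None", "Staging", "Production", "Archived")


@dataclass
class ModelVersion:
    name: str
    version: int
    artifact_uri: str  # where the serialized model lives (hypothetical path)
    stage: str = "None"


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry: versions models and tracks their stage."""
    _versions: Dict[str, List[ModelVersion]] = field(default_factory=dict)

    def register(self, name: str, artifact_uri: str) -> ModelVersion:
        versions = self._versions.setdefault(name, [])
        mv = ModelVersion(name=name, version=len(versions) + 1, artifact_uri=artifact_uri)
        versions.append(mv)
        return mv

    def transition(self, name: str, version: int, stage: str) -> ModelVersion:
        if stage not in STAGES:
            raise ValueError(f"unknown stage: {stage}")
        mv = self._versions[name][version - 1]
        if stage == "Production":
            # Keep a single Production version by archiving the current one.
            for other in self._versions[name]:
                if other.stage == "Production":
                    other.stage = "Archived"
        mv.stage = stage
        return mv

    def latest(self, name: str, stage: str) -> Optional[ModelVersion]:
        candidates = [mv for mv in self._versions.get(name, []) if mv.stage == stage]
        return candidates[-1] if candidates else None


# Usage: promote two versions in turn; the older one ends up archived.
registry = ModelRegistry()
v1 = registry.register("churn", "models/churn/1")
v2 = registry.register("churn", "models/churn/2")
registry.transition("churn", 1, "Production")
registry.transition("churn", 2, "Production")
print(registry.latest("churn", "Production").version)  # 2
print(v1.stage)  # Archived
```

A stage change like the one in `transition` is also the natural hook for a "model stage transition"-based pipeline trigger.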

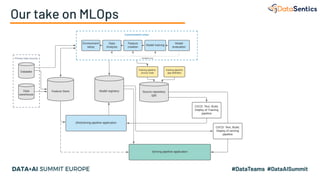

- 7. Our take on MLOps

- 8. Demo

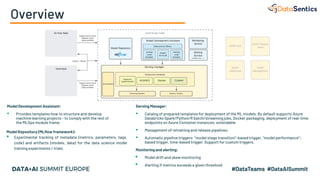

- 9. Overview
  - Model Development Assistant:
    - Provides templates for structuring and developing machine learning projects so that they comply with the rest of the MLOps module framework.
  - Model Repository (MLflow framework):
    - Experiment tracking of metadata (metrics, parameters, tags, code) and artifacts (models, data) for data science model training experiments/trials.
  - Serving Manager:
    - Catalog of prepared templates for deploying ML models. By default it supports Azure Databricks Spark/Python/R batch and streaming jobs, Docker packaging, and deployment of real-time endpoints on Azure Container Instances; it is extensible.
    - Management of retraining and release pipelines.
    - Automatic pipeline triggers: “model stage transition”-based, “model performance”-based, and time-based triggers, plus support for custom triggers.
  - Monitoring and alerting:
    - Model drift and skew monitoring
    - Alerting when metrics exceed a given threshold
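
The Serving Manager's three built-in trigger types and the threshold-based alerting can be sketched as plain functions. This is a minimal sketch under stated assumptions: a pipeline decision is made from a stage-transition event, a monitored performance metric (AUC here), and the time since the last training run. The drift check uses the Population Stability Index (PSI), one common drift metric chosen for illustration; the talk does not say which metric the platform uses. The function names, the `"Staging->Production"` event string, and all thresholds are hypothetical.

```python
import math
from datetime import datetime, timedelta
from typing import List, Optional, Sequence


def psi(expected: Sequence[float], actual: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (bin shares)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


def fired_triggers(
    stage_event: Optional[str],
    current_auc: float,
    last_trained: datetime,
    now: datetime,
    min_auc: float = 0.75,                    # hypothetical performance threshold
    max_age: timedelta = timedelta(days=30),  # hypothetical retraining period
) -> List[str]:
    """Return the triggers that fired; an empty list means no pipeline run."""
    reasons = []
    if stage_event == "Staging->Production":  # "model stage transition"-based
        reasons.append("stage_transition")
    if current_auc < min_auc:                 # "model performance"-based
        reasons.append("performance")
    if now - last_trained > max_age:          # time-based
        reasons.append("schedule")
    return reasons


def drift_alert(baseline: Sequence[float], live: Sequence[float], threshold: float = 0.2) -> bool:
    """Alert when the drift metric exceeds the given (hypothetical) threshold."""
    return psi(baseline, live) > threshold


# Usage
now = datetime(2020, 6, 1)
print(fired_triggers(None, 0.81, datetime(2020, 5, 20), now))  # []
print(fired_triggers("Staging->Production", 0.70, datetime(2020, 4, 1), now))
# ['stage_transition', 'performance', 'schedule']
baseline = [0.25, 0.25, 0.25, 0.25]
print(drift_alert(baseline, [0.25, 0.25, 0.25, 0.25]))  # False
print(drift_alert(baseline, [0.70, 0.10, 0.10, 0.10]))  # True
```

Returning the list of fired triggers, rather than a single boolean, keeps the reason for each run visible, which helps when a custom trigger is added alongside the built-in ones.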

- 10. Lessons learned & next steps
  Lessons learned:
  - MLOps is not easy to adopt, requires a lot of involvement, and is still a rather open topic.
  - MLflow in its open-source form is quite bare, and there is a lot left to develop. Especially surprising is the artifact store “bypass”.
  - Data scientists are not always used to common software practices such as git.
  - Writing ML tests can be tricky.
  - Models can be trained directly in automation tools ☺
  - There is a lot of interest from both internal and external teams, so we shipped the platform to our customers as well.
  Next steps for our platform:
  - One centralized MLOps module interface & front-end instead of a separate interface for each component (replacing the need to interact with the Azure DevOps (or similar provider), MLflow, ACI, and Azure Monitor front-ends for certain parts of the workflow).
  - Introduce an ExplainOps component that provides explanations for the obtained predictions.
  - Richer deployment offering (canary deployments, support for more target environment types).
  - Tighter feature store integration.
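
One reason writing ML tests is tricky is that a model's correctness is statistical rather than exact. A minimal sketch, assuming a toy threshold classifier stands in for a trained model, shows two kinds of tests that differ from ordinary unit tests: a minimum-quality test on a held-out set and an invariance test that checks a prediction does not change under a perturbation that should not matter. The model, the synthetic data, the feature names, and the 0.85 accuracy bar are all hypothetical.

```python
import random
from typing import List, Tuple


def predict(features: dict) -> int:
    """Hypothetical stand-in for a trained model: flags high-spend customers."""
    return int(features["monthly_spend"] > 100.0)


def make_holdout(n: int = 200, seed: int = 0) -> List[Tuple[dict, int]]:
    """Synthetic labelled holdout set with 5% label noise (hypothetical data)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        spend = rng.uniform(0.0, 200.0)
        label = int(spend > 100.0)
        if rng.random() < 0.05:
            label = 1 - label  # inject noise so accuracy is not trivially 1.0
        rows.append(({"monthly_spend": spend, "customer_id": rng.randint(1, 10**6)}, label))
    return rows


def holdout_accuracy() -> float:
    holdout = make_holdout()
    return sum(predict(x) == y for x, y in holdout) / len(holdout)


def test_minimum_accuracy() -> None:
    # Statistical assertion: the model must clear a quality bar,
    # rather than reproduce exact expected outputs as a classic unit test would.
    accuracy = holdout_accuracy()
    assert accuracy >= 0.85, f"accuracy {accuracy:.3f} below 0.85"


def test_invariance_to_customer_id() -> None:
    # Changing an identifier that carries no signal must not change the prediction.
    for x, _ in make_holdout(n=50, seed=1):
        perturbed = dict(x, customer_id=x["customer_id"] + 1)
        assert predict(perturbed) == predict(x)


# Usage: run directly, or let pytest collect the test_* functions.
test_minimum_accuracy()
test_invariance_to_customer_id()
```

Fixing the random seed keeps the quality test deterministic; with real models, the threshold usually needs some slack so that retraining noise does not cause flaky failures.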

- 11. Call to action
  As mentioned, because of the high interest, we are about to… open-source the infrastructural parts of the platform! Let’s push this forward together! We are looking for the first 2-3 companies willing to use it in challenging environments and contribute.

- 12. Platform team: kudos to Jan Prochazka, Tomas Kresal, Anastasia Lebedeva, Jiri Koutny, Michal Halenka, Marek Lipan, Nikola Valesova, Michal Mlaka, Oldrich Vlasic, Ondrej Havlicek, Jan Perina, Samuel Messa

- 13. Thank you
