CC -Unit4.pptx

- 1. AZURE CLOUD AND CORE SERVICES • Azure Synapse Analytics • HDInsight • Azure Databricks • Usage of Internet of Things (IoT) Hub • IoT Central • Azure Sphere • Azure Cloud Shell and Mobile Apps

- 2. Azure Synapse Analytics • Azure Synapse Analytics is a limitless analytics service that brings together data integration, enterprise data warehousing and big data analytics. • It gives you the freedom to query data on your terms, using either serverless or dedicated options, at scale. • Azure Synapse brings these worlds together with a unified experience to ingest, explore, prepare, transform, manage and serve data for immediate BI and machine learning needs.

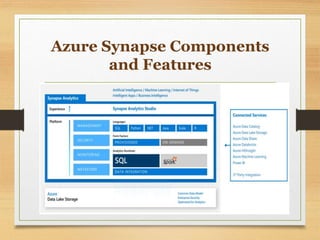


- 5. Azure Synapse Components and Features • Synapse Analytics is an analytics service with virtually unlimited scale for analytics workloads. • Synapse Workspaces (in preview as of Sept 2020) provide an integrated console to administer and operate the components and services of Azure Synapse Analytics. • Synapse Studio is a web-based IDE that enables a code-free or low-code developer experience for working with Synapse Analytics. • Synapse supports languages commonly used by analytic workloads, such as SQL, Python, .NET, Java, Scala, and R. • Synapse supports two analytics runtimes, one SQL-based and one Spark-based (Spark in preview as of Sept 2020), which can process data in batch, streaming, and interactive modes. • Synapse integrates with numerous Azure data services, for example Azure Data Catalog, Azure Data Lake Storage, Azure Databricks, Azure HDInsight, Azure Machine Learning, and Power BI. • Synapse also provides integrated management, security, and monitoring services for the data and services it supports. • Data Lake Storage suits big-data volumes modeled as a data lake. This storage layer acts as the data source layer for Synapse: data is typically loaded into Synapse from Data Lake Storage for various analytical purposes.

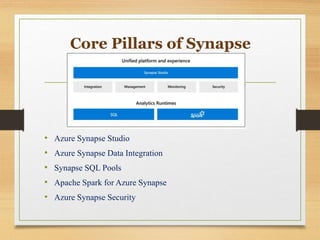

- 6. Core Pillars of Synapse • Azure Synapse Studio • Azure Synapse Data Integration • Synapse SQL Pools • Apache Spark for Azure Synapse • Azure Synapse Security

- 7. Features • Centralized Data Management • Workload Isolation • Machine Learning Integration • Supply chain forecasting • Inventory reporting • Predictive maintenance • Anomaly detection

- 8. Benefits • Accelerate Analytics & Reporting • Better BI & Data Visualization • Increased IT productivity • Limitless Scaling

- 9. Azure HDInsight • What is Azure HDInsight? • Azure HDInsight Features • Azure HDInsight Architecture • Azure HDInsight Metastore Best Practices • Azure HDInsight Migration • Azure HDInsight Security and DevOps • Azure HDInsight Uses • Data Warehousing • Internet of Things (IoT) • Data Science • Hybrid Cloud

- 10. Azure HDInsight • Azure HDInsight is a Microsoft service that lets you use open-source frameworks for big data analytics. • It supports frameworks such as Apache Hadoop, Apache Spark, Apache Hive, LLAP (Interactive Query), Apache Kafka, Apache Storm, and R for processing large volumes of data. • These tools can be used for extract, transform, and load (ETL), data warehousing, machine learning, and IoT workloads.

- 11. Azure HDInsight Features • Cloud and on-premises availability • Scalable and economical • Security • Monitoring and analytics • Global availability • Highly productive

- 12. Azure HDInsight Architecture • It is recommended that you migrate an on-premises Hadoop cluster to Azure HDInsight as multiple workload-specific clusters rather than a single cluster. • Use on-demand transient clusters, which are deleted once their workload completes. • Because HDInsight clusters can use Azure Storage, Azure Data Lake Storage, or both as their storage layer, it is best to keep data storage separate from compute.

- 13. Azure HDInsight Metastore • The Apache Hive Metastore is an important part of the Apache Hadoop architecture because it serves as a central schema repository for other big data engines, including Apache Spark, Interactive Query (LLAP), Presto, and Apache Pig.

- 14. Types of HDInsight Metastore • A default metastore is created free of charge for any cluster type, but it cannot be shared with other clusters and is deleted when its cluster is deleted. • Custom metastores are recommended for production clusters, because clusters can then be created and removed without losing metadata. Use a custom metastore to isolate compute from metadata, and back it up periodically.

- 15. Azure HDInsight Migration • The Hive metastore can be migrated either with scripts or by database replication. • Migration using DB replication • Migration over TLS • Offline migration

- 16. Azure HDInsight Uses • Data Warehousing • Internet of Things (IoT) • Data Science • Hybrid Cloud

- 17. Azure Databricks • Azure Databricks is a data analytics platform optimized for the Microsoft Azure cloud services platform. • Azure Databricks offers three environments: 1. Databricks SQL 2. Databricks Data Science and Engineering 3. Databricks Machine Learning

- 18. Databricks SQL • Databricks SQL provides a user-friendly platform. • It helps analysts who work with SQL queries to run queries on Azure Data Lake, create multiple visualizations, and build and share dashboards.

- 19. Databricks Data Science and Engineering • Databricks Data Science and Engineering provides an interactive working environment for data engineers, data scientists, and machine learning engineers. • The two ways to send data through the big data pipeline are: 1. Ingest into Azure in batches through Azure Data Factory 2. Stream in real time by using Apache Kafka, Event Hubs, or IoT Hub

- 20. Databricks Machine Learning • Databricks Machine Learning is a complete machine learning environment. • It provides managed services for experiment tracking, model training, and feature development and management. • It also provides model serving.

- 21. Pros and Cons of Azure Databricks Pros • It can process large amounts of data, and because it is part of Azure, the data is cloud-native. • Clusters are easy to set up and configure. • It has an Azure Synapse Analytics connector and can also connect to Azure DB. • It is integrated with Azure Active Directory. • It supports multiple languages: Scala is the main language, but it also works well with Python, SQL, and R.

- 22. Pros and Cons of Azure Databricks Cons • Git integration was historically limited; Databricks now offers Git integration through Git folders (formerly Repos). • At the time of writing, it supports only HDInsight, not Azure Batch or AZTK.

- 23. Databricks SQL • Databricks SQL lets you run quick ad hoc SQL queries on Azure Data Lake. Integration with Azure Active Directory lets you build complete Azure-based solutions with Databricks SQL. • Databricks SQL objects can be automated through the REST API. • Its concepts fall into three groups: 1. Data Management 2. Computation Management 3. Authorization

- 24. Data Management It has three parts: • Visualization: A graphical presentation of the result of running a query • Dashboard: A presentation of query visualizations and commentary • Alert: A notification that a field returned by a query has reached a threshold
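The alert idea above (notify when a query field crosses a threshold) can be sketched in a few lines of Python. This is a minimal, hypothetical model, not the Databricks SQL API: the `Alert` class and the `failed_jobs` column are illustrative, and, as a simplifying assumption, the watched value is read from the first row of the query result.

```python
import operator
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Alert:
    """Hypothetical model of a Databricks SQL alert on one column of a query result."""

    column: str                         # column whose value is watched
    threshold: float                    # value to compare against
    op: Callable[[float, float], bool]  # comparison, e.g. operator.gt for ">"

    def evaluate(self, rows: List[Dict[str, float]]) -> bool:
        """Return True (alert triggered) if the first row's value meets the condition."""
        if not rows:
            return False  # in this sketch an empty result never triggers
        return self.op(rows[0][self.column], self.threshold)


# Stand-in for the rows returned by a query such as
# SELECT count(*) AS failed_jobs FROM job_runs WHERE status = 'FAILED'
result = [{"failed_jobs": 7}]
alert = Alert(column="failed_jobs", threshold=5, op=operator.gt)
print(alert.evaluate(result))  # True
```

Keeping the comparison as a function makes it easy to express ">", "<", or "==" conditions without changing the class.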

- 25. Computation Management These terms relate to running SQL queries in Databricks SQL: • Query: A valid SQL statement • SQL endpoint (since renamed SQL warehouse): A compute resource on which SQL queries are executed • Query history: A list of previously executed queries and their characteristics
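SQL endpoints are among the Databricks SQL objects that can be managed through the REST API, authenticated with a personal access token sent as a bearer token. The sketch below only builds the HTTP request with the Python standard library and does not send it. The workspace URL and token are hypothetical placeholders, and the path `/api/2.0/sql/warehouses` (older documentation used `/api/2.0/sql/endpoints`) is an assumption to check against the current REST API reference.

```python
import urllib.request

# Hypothetical placeholders: substitute your own workspace URL and token.
# Never hard-code a real personal access token in source code.
WORKSPACE_URL = "https://adb-1234567890123456.7.azuredatabricks.net"
TOKEN = "dapi-EXAMPLE-TOKEN"


def build_list_warehouses_request(workspace_url: str, token: str) -> urllib.request.Request:
    """Build (but do not send) a GET request that lists SQL endpoints/warehouses."""
    url = workspace_url.rstrip("/") + "/api/2.0/sql/warehouses"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}"},  # personal access token as bearer token
        method="GET",
    )


req = build_list_warehouses_request(WORKSPACE_URL, TOKEN)
print(req.full_url)
print(req.get_method())  # GET
```

Against a real workspace, passing `req` to `urllib.request.urlopen` would return a JSON body describing the endpoints.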

- 26. Authorization • User and group: A user is an individual who has access to the system; a group is a set of users. • Personal access token: An opaque string used to authenticate to the REST API. • Access control list (ACL): A set of permissions attached to a principal that requires access to an object. An ACL specifies the object and the actions allowed on it.
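The user, group, and ACL definitions above combine into a simple rule: a user may perform an action on an object if the user, or any group the user belongs to, has been granted that action. A minimal sketch of that rule follows. The `AccessControlList` class is hypothetical, and the permission strings `CAN_VIEW` and `CAN_EDIT` are illustrative labels rather than a complete list of Databricks permissions.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class AccessControlList:
    """Hypothetical ACL: maps principals (users or groups) to allowed actions on one object."""

    object_name: str
    grants: Dict[str, Set[str]] = field(default_factory=dict)

    def grant(self, principal: str, action: str) -> None:
        """Attach a permission to a principal for this object."""
        self.grants.setdefault(principal, set()).add(action)

    def is_allowed(self, user: str, groups: Dict[str, Set[str]], action: str) -> bool:
        """Allowed if the user, or any group containing the user, holds the action."""
        principals = {user} | {g for g, members in groups.items() if user in members}
        return any(action in self.grants.get(p, set()) for p in principals)


groups = {"analysts": {"alice", "bob"}}
acl = AccessControlList("sales_dashboard")
acl.grant("analysts", "CAN_VIEW")  # every analyst may view
acl.grant("alice", "CAN_EDIT")     # only alice may edit
print(acl.is_allowed("bob", groups, "CAN_VIEW"))  # True
print(acl.is_allowed("bob", groups, "CAN_EDIT"))  # False
```

Granting to groups rather than individuals keeps the list short as users join or leave a team.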

- 27. Databricks Data Science & Engineering • Databricks Data Science & Engineering, sometimes called the Workspace, is an analytics platform based on Apache Spark. • It comprises the complete open-source Apache Spark cluster technologies and capabilities.

- 28. Databricks Data Science & Engineering Spark in Databricks Data Science & Engineering includes the following components: • Spark SQL and DataFrames: The Spark module for working with structured data. A DataFrame is a distributed collection of data organized into named columns, very similar to a table in a relational database or a data frame in R or Python. • Streaming: Real-time data processing and analysis for analytical and interactive applications; it integrates with HDFS, Flume, and Kafka. • MLlib: Short for Machine Learning Library; it consists of common learning algorithms and utilities, including classification, regression, clustering, collaborative filtering, and dimensionality reduction, as well as underlying optimization primitives. • GraphX: Graphs and graph computation for a broad range of use cases, from cognitive analytics to data exploration. • Spark Core API: Supports R, SQL, Python, Scala, and Java.

- 29. Databricks Machine Learning With Databricks machine learning, we can: • Train models either manually or with AutoML • Track training parameters and models by using experiments with MLflow tracking • Create feature tables and access them for model training and inference • Share, manage, and serve models by using Model Registry

- 30. Usage of Internet of Things (IoT) Hub • Azure IoT Hub enables full-featured, scalable IoT solutions. Virtually any device can be connected to Azure IoT Hub, and it can scale to millions of devices. • Events such as device creation, failure, and connection can be tracked and monitored. • Azure IoT Hub is a managed IoT service hosted in the cloud. It enables bidirectional communication between IoT applications and the devices it manages. • This bidirectional connectivity means that you can receive data from your devices and also send commands and policies back to them.

- 31. Usage of Internet of Things (IoT) Hub Azure IoT Hub provides: • Device libraries for the most commonly used platforms and languages, for easy device connectivity. • Secure communications, with multiple options for hyper-scale device-to-cloud and cloud-to-device communication. • Queryable storage of per-device state information and metadata.
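To make the two message directions and the queryable per-device state concrete, here is a toy in-memory model. It is not the Azure IoT device or service SDK; `HubModel` and all of its methods are hypothetical. In the real service, per-device state is kept in device twins and queried with a SQL-like language.

```python
from collections import deque
from typing import Deque, Dict, List, Optional


class HubModel:
    """Toy in-memory model of an IoT hub's messaging directions (not the Azure SDK)."""

    def __init__(self) -> None:
        self.device_to_cloud: Deque[dict] = deque()        # telemetry arriving from devices
        self.cloud_to_device: Dict[str, Deque[dict]] = {}  # one command queue per device
        self.device_state: Dict[str, dict] = {}            # queryable per-device state

    def register(self, device_id: str) -> None:
        self.cloud_to_device[device_id] = deque()
        self.device_state[device_id] = {}

    def send_telemetry(self, device_id: str, payload: dict) -> None:
        """Device-to-cloud: a device publishes a telemetry message."""
        self.device_to_cloud.append({"device_id": device_id, **payload})

    def send_command(self, device_id: str, command: dict) -> None:
        """Cloud-to-device: the back end queues a command for one device."""
        self.cloud_to_device[device_id].append(command)

    def receive_command(self, device_id: str) -> Optional[dict]:
        """The device takes its next command, or None if the queue is empty."""
        queue = self.cloud_to_device[device_id]
        return queue.popleft() if queue else None

    def report_state(self, device_id: str, **props: str) -> None:
        self.device_state[device_id].update(props)

    def query(self, key: str, value: str) -> List[str]:
        """Return the IDs of devices whose reported state has key == value."""
        return sorted(d for d, s in self.device_state.items() if s.get(key) == value)


hub = HubModel()
hub.register("thermostat-1")
hub.register("thermostat-2")
hub.send_telemetry("thermostat-1", {"temp_c": 21.5})
hub.send_command("thermostat-1", {"set_point": 20})
hub.report_state("thermostat-1", firmware="1.2")
hub.report_state("thermostat-2", firmware="1.1")
print(hub.receive_command("thermostat-1"))  # {'set_point': 20}
print(hub.query("firmware", "1.2"))         # ['thermostat-1']
```

Keeping a separate command queue per device mirrors how cloud-to-device messages are addressed to one device, while telemetry from all devices lands in a shared stream.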

- 32. The lifecycle of devices with IoT Hub

- 33. The lifecycle of devices with IoT Hub • Plan: Operators can create a device metadata scheme that lets them easily carry out bulk management operations. • Provision: New devices can be securely provisioned to IoT Hub, and operators can quickly discover device capabilities. The IoT Hub identity registry is used to create device identities and credentials. • Configure: Device management operations, such as configuration changes and firmware updates, can be done in bulk or by direct methods while maintaining system security.

- 34. The lifecycle of devices with IoT Hub • Monitor: Operators can be alerted to issues as they arise while also monitoring the health of the device collection and the status of any ongoing operations. • Retire: Devices eventually need to be replaced, retired, or decommissioned. The IoT Hub identity registry is used to withdraw device identities and credentials.
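The five lifecycle stages map naturally onto operations against an identity registry. The sketch below is a hypothetical in-memory registry, not the IoT Hub service API: tags stand in for the Plan stage's metadata scheme, a random hex string stands in for a device credential, and a `healthy` flag stands in for monitoring data.

```python
import secrets
from typing import Dict, List


class IdentityRegistry:
    """Hypothetical registry illustrating Plan, Provision, Configure, Monitor, and Retire."""

    def __init__(self) -> None:
        self.devices: Dict[str, dict] = {}

    def provision(self, device_id: str, tags: Dict[str, str]) -> str:
        """Provision: create an identity and credential; tags follow the Plan stage's scheme."""
        if device_id in self.devices:
            raise ValueError(f"{device_id} already exists")
        key = secrets.token_hex(16)  # stand-in for a symmetric device key
        self.devices[device_id] = {"key": key, "tags": dict(tags), "config": {}, "healthy": True}
        return key

    def configure(self, tag: str, value: str, config: Dict[str, str]) -> List[str]:
        """Configure: apply a change in bulk to every device whose tag matches."""
        targets = [d for d, info in self.devices.items() if info["tags"].get(tag) == value]
        for d in targets:
            self.devices[d]["config"].update(config)
        return targets

    def unhealthy(self) -> List[str]:
        """Monitor: list devices currently flagged as unhealthy."""
        return [d for d, info in self.devices.items() if not info["healthy"]]

    def retire(self, device_id: str) -> None:
        """Retire: withdraw the device identity and its credential."""
        del self.devices[device_id]


registry = IdentityRegistry()
registry.provision("pump-01", {"site": "plant-a"})
registry.provision("pump-02", {"site": "plant-a"})
registry.provision("pump-03", {"site": "plant-b"})
print(registry.configure("site", "plant-a", {"firmware": "2.0"}))  # ['pump-01', 'pump-02']
registry.devices["pump-02"]["healthy"] = False  # simulate a reported fault
print(registry.unhealthy())                     # ['pump-02']
registry.retire("pump-02")
print(sorted(registry.devices))                 # ['pump-01', 'pump-03']
```

Planning the tag scheme up front is what makes the bulk `configure` step possible: targeting "every device at plant-a" only works if every device was tagged with its site.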

- 35. Device management patterns IoT Hub supports a range of device management patterns, including: • Reboot • Factory reset • Configuration • Firmware update • Reporting progress and status These patterns can be extended to fit your exact situation. Alternatively, new patterns can be designed based on these templates.
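On the device side, patterns such as reboot, factory reset, and firmware update are commonly implemented as direct-method handlers that return a status code and report progress back to the cloud. The sketch below is hypothetical: `DeviceAgent`, its method names (`reboot`, `factoryReset`, `firmwareUpdate`), and the `reported` list are illustrative stand-ins, not the Azure IoT device SDK.

```python
from typing import Callable, Dict, List, Tuple

Response = Tuple[int, str]  # (HTTP-style status code, message), like a direct-method reply


class DeviceAgent:
    """Hypothetical on-device dispatcher for device management patterns."""

    def __init__(self) -> None:
        self.firmware = "1.0"
        self.settings: Dict[str, str] = {"mode": "normal"}
        self.reported: List[str] = []  # progress/status reports sent back to the cloud
        self.handlers: Dict[str, Callable[[dict], Response]] = {
            "reboot": self._reboot,
            "factoryReset": self._factory_reset,
            "firmwareUpdate": self._firmware_update,
        }

    def invoke(self, method: str, payload: dict) -> Response:
        """Dispatch a method call; unknown methods get a 404-style reply."""
        handler = self.handlers.get(method)
        if handler is None:
            return 404, f"unknown method {method}"
        return handler(payload)

    def _reboot(self, payload: dict) -> Response:
        self.reported.append("rebooting")
        return 200, "reboot scheduled"

    def _factory_reset(self, payload: dict) -> Response:
        self.settings = {"mode": "normal"}  # restore default settings
        self.reported.append("factory reset complete")
        return 200, "reset"

    def _firmware_update(self, payload: dict) -> Response:
        for step in ("downloading", "applying"):
            self.reported.append(step)  # report progress at each stage
        self.firmware = payload["version"]
        self.reported.append(f"firmware {self.firmware} installed")
        return 200, "updated"


agent = DeviceAgent()
print(agent.invoke("firmwareUpdate", {"version": "1.1"}))  # (200, 'updated')
print(agent.reported)  # ['downloading', 'applying', 'firmware 1.1 installed']
print(agent.invoke("selfTest", {}))  # (404, 'unknown method selfTest')
```

Because handlers live in a dictionary, extending the set of patterns, as the slide suggests, amounts to registering one more entry.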

- 36. Connecting your devices You can build applications that run on your devices and interact with IoT Hub using the Azure IoT device SDK. Supported platforms include Windows, Linux distributions, and real-time operating systems. Supported languages currently include: • C • C# • Java • Python • Node.js

- 37. Services • Messaging patterns • Device data routing • Message routing and Event Grid • Building end-to-end solutions • Security
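Message routing sends each device message to every endpoint whose route condition matches, and to a fallback endpoint when none match. The sketch below models that behavior in plain Python. `Route`, `route_message`, and the endpoint names are hypothetical, and Python predicates stand in for IoT Hub's SQL-like routing queries; the default fallback name `events` is meant to echo the built-in endpoint but should be checked against the routing documentation.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Route:
    name: str
    condition: Callable[[dict], bool]  # stands in for a SQL-like routing query
    endpoint: str


def route_message(message: dict, routes: List[Route], fallback: str = "events") -> List[str]:
    """Return every endpoint whose route matches, or the fallback endpoint if none do."""
    endpoints: List[str] = []
    for r in routes:
        if r.condition(message) and r.endpoint not in endpoints:
            endpoints.append(r.endpoint)  # deliver at most once per endpoint
    return endpoints or [fallback]


routes = [
    Route("critical-alerts", lambda m: m.get("level") == "critical", "alerts-queue"),
    Route("archive-all", lambda m: True, "cold-storage"),
]
print(route_message({"level": "critical"}, routes))  # ['alerts-queue', 'cold-storage']
print(route_message({"level": "info"}, routes[:1]))  # ['events']
```

A catch-all route such as `archive-all` is a common way to keep a raw copy of every message while selective routes feed alerting or analytics.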