Computer history

- 1. When was the first computer invented? There is no easy answer to this question because computers can be classified in many different ways. The first mechanical computer, created by Charles Babbage in 1822, does not resemble what most people would consider a computer today. This document therefore lists each of the computer firsts, starting with the Difference Engine and leading up to the computers we use today. Note: Early inventions that helped lead up to the computer, such as the abacus, calculator, and tablet machines, are not covered in this document.

- 2. The word "computer" was first used: The word "computer" was first recorded in 1613 and originally described a human who performed calculations or computations. This definition remained the same until the end of the 19th century, when the industrial revolution gave rise to machines whose primary purpose was calculating.

- 3. First mechanical computer or automatic computing engine concept: In 1822, Charles Babbage conceptualized and began developing the Difference Engine, considered to be the first automatic computing machine. The Difference Engine was capable of computing several sets of numbers and making hard copies of the results. Babbage later received help from Ada Lovelace, considered by many to be the first computer programmer for her work and notes on his subsequent Analytical Engine. Unfortunately, because of a lack of funding, Babbage was never able to complete a full-scale functional version of the Difference Engine. In June of 1991, the London Science Museum completed the Difference Engine No 2 for the bicentennial year of Babbage's birth and later completed the printing mechanism in 2000.

- 4. In 1837, Charles Babbage proposed the first general mechanical computer, the Analytical Engine. The Analytical Engine contained an Arithmetic Logic Unit (ALU), basic flow control, and integrated memory, and it is the first general-purpose computer concept. Unfortunately, because of funding issues, this computer was also never built while Charles Babbage was alive. In 1910, Henry Babbage, Charles Babbage's youngest son, completed a portion of this machine, which was able to perform basic calculations.

- 5. First programmable computer: The Z1 was created by German engineer Konrad Zuse in his parents' living room between 1936 and 1938. It is considered to be the first electro-mechanical binary programmable computer and the first truly functional modern computer.

- 6. First concepts of what we consider a modern computer: The Turing machine was first proposed by Alan Turing in 1936 and became the foundation for theories about computing and computers. The machine was a device that printed symbols on paper tape in a manner that emulated a person following a series of logical instructions. Without these fundamentals, we wouldn't have the computers we use today.
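
The idea of a machine that reads and prints symbols on a tape while following a fixed table of instructions can be sketched in a few lines of Python. This is only an illustrative toy, not Turing's original formulation: the function name `run_turing_machine`, the rule table `FLIP_RULES`, and the choice of `_` as the blank symbol are all assumptions made for this example.

```python
from collections import defaultdict

# Hypothetical rule table: (state, symbol read) -> (symbol to write, head move, next state).
# This particular machine flips every bit and halts at the first blank cell.
FLIP_RULES = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape machine and return the non-blank tape contents."""
    cells = defaultdict(lambda: blank, enumerate(tape))  # tape position -> symbol
    head = 0
    steps = 0
    while state != "halt" and steps < max_steps:
        write, move, state = rules[(state, cells[head])]  # look up the instruction
        cells[head] = write                               # print a symbol on the tape
        head += 1 if move == "R" else -1                  # move the head one cell
        steps += 1
    return "".join(cells[i] for i in sorted(cells)).strip(blank)


print(run_turing_machine(FLIP_RULES, "1011"))  # prints 0100
```

Each loop iteration corresponds to one "logical instruction": read the symbol under the head, write a symbol, move, and change state.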

- 7. The first electric programmable computer: The Colossus was the first electric programmable computer, developed by Tommy Flowers and first demonstrated in December 1943. The Colossus was created to help the British code breakers read encrypted German messages.

- 8. The first digital computer: Short for Atanasoff-Berry Computer, the ABC was developed by Professor John Vincent Atanasoff and graduate student Cliff Berry, starting in 1937. Its development continued until 1942 at Iowa State College (now Iowa State University). The ABC was an electrical computer that used vacuum tubes for digital computation, including binary math and Boolean logic, and had no CPU. On October 19, 1973, US Federal Judge Earl R. Larson signed his decision that the ENIAC patent by J. Presper Eckert and John Mauchly was invalid and named Atanasoff the inventor of the electronic digital computer. The ENIAC was invented by J. Presper Eckert and John Mauchly at the University of Pennsylvania; construction began in 1943 and was not completed until 1946. It occupied about 1,800 square feet, used about 18,000 vacuum tubes, and weighed almost 50 tons. Although the judge ruled that the ABC was the first digital computer, many still consider the ENIAC to be the first digital computer because it was fully functional.

- 9. The first transistor computer: The TX-0 (Transistorized Experimental computer) was the first transistorized computer to be demonstrated at the Massachusetts Institute of Technology, in 1956. The first minicomputer: In 1960, Digital Equipment Corporation released its first of many PDP computers, the PDP-1. The first desktop and mass-market computer: In 1964, the first desktop computer, the Programma 101, was unveiled to the public at the New York World's Fair. It was invented by Pier Giorgio Perotto and manufactured by Olivetti. About 44,000 Programma 101 computers were sold, each with a price tag of $3,200. In 1968, Hewlett Packard began marketing the HP 9100A, considered to be the first mass-marketed desktop computer.

- 10. The first workstation: Although it was never sold, the first workstation is considered to be the Xerox Alto, introduced in 1974. The computer was revolutionary for its time and included a fully functional computer, display, and mouse. It operated like many computers today, using windows, menus, and icons as an interface to its operating system. Many of the computer's capabilities were first demonstrated in The Mother of All Demos by Douglas Engelbart on December 9, 1968. The first microprocessor: Intel introduced the first microprocessor, the Intel 4004, on November 15, 1971. The first micro-computer: The Vietnamese-French engineer André Truong Trong Thi, along with Francois Gernelle, developed the Micral computer in 1973. Considered the first "micro-computer", it used the Intel 8008 processor and was the first commercial non-assembly computer. It originally sold for $1,750.

- 11. The first personal computer: In 1975, Ed Roberts coined the term "personal computer" when he introduced the Altair 8800. However, the first personal computer is considered by many to be the KENBAK-1, which was introduced for $750 in 1971. The KENBAK-1 relied on a series of switches for inputting data and output data by turning a series of lights on and off.

- 12. The first laptop or portable computer: The IBM 5100 is the first portable computer, released in September 1975. The computer weighed 55 pounds and had a five-inch CRT display, tape drive, 1.9 MHz PALM processor, and 64 KB of RAM. In the picture is an ad for the IBM 5100 taken from a November 1975 issue of Scientific American. The first truly portable computer or laptop is considered to be the Osborne I, which was released in April 1981 and developed by Adam Osborne. The Osborne I weighed 24.5 pounds, had a 5-inch display, 64 KB of memory, and two 5 1/4" floppy drives, ran the CP/M 2.2 operating system, included a modem, and cost US$1,795. The IBM PC Division (PCD) later released the IBM Portable in 1984, its first portable computer, which weighed in at 30 pounds. Later, in 1986, IBM PCD announced its first laptop computer, the PC Convertible, weighing 12 pounds. Finally, in 1994, IBM introduced the IBM ThinkPad 755CD, the first notebook with an integrated CD-ROM.

- 13. The first Apple computer: The Apple I (Apple 1) was the first Apple computer and originally sold for $666.66. The computer kit was developed by Steve Wozniak in 1976 and contained a 6502 8-bit processor and 4 KB of memory, which was expandable to 8 or 48 KB using expansion cards. Although the Apple I had a fully assembled circuit board, the kit still required a power supply, display, keyboard, and case to be operational. Below is a picture of an Apple I from an advertisement by Apple.

- 14. The first IBM personal computer: IBM introduced its first personal computer, called the IBM PC, in 1981. The computer was code named, and is still sometimes referred to as, the Acorn. It had an 8088 processor and 16 KB of memory, which was expandable to 256 KB, and used MS-DOS. The first PC clone: The Compaq Portable is considered to be the first PC clone and was released in March 1983 by Compaq. The Compaq Portable was 100% compatible with IBM computers and was capable of running any software developed for IBM computers.

- 15. The first multimedia computer: In 1992, Tandy Radio Shack became one of the first companies to release a computer based on the MPC standard with its introduction of the M2500 XL/2 and M4020 SX computers. Other computer company firsts: Below is a listing of some of the major computer companies' first computers. Commodore - In 1977, Commodore introduced its first computer, the "Commodore PET." Compaq - In March 1983, Compaq released its first computer and the first 100% IBM compatible computer, the "Compaq Portable." Dell - In 1985, Dell introduced its first computer, the "Turbo PC." Hewlett Packard - In 1966, Hewlett Packard released its first general computer, the "HP-2115." NEC - In 1958, NEC built its first computer, the "NEAC 1101." Toshiba - In 1954, Toshiba introduced its first computer, the "TAC" digital computer.

- 16. Who invented the Internet? A single person did not create the Internet that we know and use today. Below is a listing of different people who have helped contribute to and develop the Internet. The idea: The initial idea of the Internet is credited to Leonard Kleinrock after he published his first paper, entitled "Information Flow in Large Communication Nets", on May 31, 1961. In 1962, J.C.R. Licklider became the first Director of IPTO and gave his vision of a galactic network. Also, with ideas from Licklider and Kleinrock, Robert Taylor helped create the idea of the network that later became ARPANET. Initial creation: The Internet as we know it today first started being developed in the late 1960s in California in the United States. In the summer of 1968, the Network Working Group (NWG) held its first meeting, chaired by Elmer Shapiro, at the Stanford Research Institute (SRI). Other attendees included Steve Carr, Steve Crocker, Jeff Rulifson, and Ron Stoughton. In the meeting, the group discussed solving issues related to getting hosts to communicate with each other. In December 1968, Elmer Shapiro with SRI released a report, "A Study of Computer Network Design Parameters." Based on this work and earlier work done by Paul Baran, Thomas Marill, and others, Lawrence Roberts and Barry Wessler created the Interface Message Processor (IMP) specifications. Bolt Beranek and Newman, Inc. (BBN) was later awarded the contract to design and build the IMP subnetwork.

- 17. General public learns about Internet: UCLA (University of California, Los Angeles) put out a press release introducing the public to the Internet on July 3, 1969. First network equipment: On August 29, 1969, the first network switch and the first piece of network equipment, called "IMP" (Interface Message Processor), was sent to UCLA. On September 2, 1969, the first data moved from the UCLA host to the switch. The picture to the right is Leonard Kleinrock next to the IMP.

- 18. The first message and network crash: On October 29, 1969, at 10:30 p.m., the first Internet message was sent from computer science Professor Leonard Kleinrock's laboratory at UCLA, after the second piece of network equipment was installed at SRI. The connection not only enabled the first transmission to be made, but is also considered the first Internet backbone. The first message to be distributed was "LO", which was an attempt at "LOGIN" by Charley S. Kline to log into the SRI computer from UCLA. However, the message could not be completed because the SRI system crashed. Shortly after the crash, the issue was resolved, and he was able to log into the computer.

- 19. E-mail is developed: Ray Tomlinson sent the first network e-mail in 1971. It was the first messaging system to send messages across a network to other users. TCP is developed: Vinton Cerf and Robert Kahn designed TCP during 1973 and later published it with the help of Yogen Dalal and Carl Sunshine in December of 1974 in RFC 675. Most people consider Cerf and Kahn the inventors of the Internet. First commercial network: A commercial version of ARPANET, known as Telenet, was introduced in 1974 and is considered to be the first Internet Service Provider (ISP). Ethernet is conceived: Bob Metcalfe developed the idea of Ethernet in 1973. The modem is introduced: Dennis Hayes and Dale Heatherington released the 80-103A Modem in 1977. This modem and their subsequent modems became a popular choice for home users to connect to the Internet and get online.

- 20. TCP/IP is created: In 1978, TCP split into TCP/IP, driven by Danny Cohen, David Reed, and John Shoch to support real-time traffic. The creation of TCP/IP helped create UDP, and TCP/IP was later standardized on ARPANET on January 1, 1983. Today, TCP/IP is still the primary protocol used on the Internet. DNS is introduced: Paul Mockapetris and Jon Postel introduced DNS, the domain name system, in 1984. The first Internet domain name, symbolics.com, was registered on March 15, 1985 by Symbolics, a Massachusetts computer company.

- 21. First commercial dial-up ISP: The first commercial Internet Service Provider (ISP) in the US, known as "The World", was introduced in 1989. The World was the first ISP to be used on what we now consider to be the Internet. HTML: In 1990, Tim Berners-Lee developed HTML, which made a huge contribution to how we navigate and view the Internet today. WWW: Tim Berners-Lee introduced the WWW to the public on August 6, 1991. The World Wide Web (WWW) is what most people today consider the "Internet": a series of sites and pages that are connected with links. The Internet had hundreds of people who helped develop the standards and technologies used today, but without the WWW, the Internet would not be as popular as it is today.

- 22. First graphical Internet browser: Mosaic is the first widely used graphical World Wide Web browser. It was developed and first released on April 22, 1993 by the NCSA with the help of Marc Andreessen and Eric Bina. A big competitor to Mosaic was Netscape, which was released a year later. Most of the Internet browsers we use today, e.g. Internet Explorer, Chrome, and Firefox, got their inspiration from the Mosaic browser. Java and JavaScript: Originally known as Oak, Java is a programming language developed by James Gosling and others at Sun Microsystems. It was first introduced to the public in 1995 and today is widely used to create Internet applications and other software programs. JavaScript was developed by Brendan Eich in 1995 and was originally known as LiveScript. It was first introduced to the public with Netscape Navigator 2.0 and was renamed JavaScript with the release of Netscape Navigator 2.0B3. JavaScript is an interpreted client-side scripting language that allows a web designer to insert code into their web page.

- 23. Server: In a technical sense, a server is an instance of a computer program that accepts and responds to requests made by another program, known as a client. Less formally, any device that runs server software could be considered a server as well. Servers are used to manage network resources. For example, a user may set up a server to control access to a network, send and receive e-mail, manage print jobs, or host a website. Some servers are committed to a specific task and are often referred to as dedicated. As a result, there are a number of dedicated server categories, like print servers, file servers, network servers, and database servers. However, many servers today are shared servers that can take on the responsibility of e-mail, DNS, FTP, and even multiple websites in the case of a web server. Because they are commonly used to deliver services that are required constantly, most servers are never turned off. Consequently, when servers fail, they can cause the network users and company many problems. To alleviate these issues, servers are commonly high-end computers set up to be fault tolerant. Examples of servers: There are many classifications of servers. Below is a list of the most common types of servers. i. Application server ii. Blade server iii. Cloud server iv. Database server v. Dedicated server vi. File server vii. Print server viii. Proxy server ix. Standalone server x. Web server
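
The request and response relationship between a client and a server can be illustrated with Python's standard `socket` and `socketserver` modules. The sketch below is a toy, not a production server: the handler name `UppercaseHandler`, the helper names `ask_server` and `demo`, and the "reply with the request in upper case" behavior are all assumptions chosen for this example.

```python
import socket
import socketserver
import threading


class UppercaseHandler(socketserver.StreamRequestHandler):
    """Server side: accept one line from a client and respond with it in upper case."""

    def handle(self):
        line = self.rfile.readline()    # the client's request
        self.wfile.write(line.upper())  # the server's response


def ask_server(address, text):
    """Client side: send one request line and return the server's reply."""
    with socket.create_connection(address, timeout=5) as conn:
        conn.sendall(text.encode() + b"\n")
        return conn.makefile("rb").readline().decode().strip()


def demo():
    # Port 0 asks the operating system for any free port on this machine.
    with socketserver.TCPServer(("127.0.0.1", 0), UppercaseHandler) as server:
        worker = threading.Thread(target=server.serve_forever, daemon=True)
        worker.start()
        try:
            return ask_server(server.server_address, "hello server")
        finally:
            server.shutdown()  # stop the serve_forever loop


if __name__ == "__main__":
    print(demo())  # prints HELLO SERVER
```

Here both programs run on one machine for convenience; in practice the client and the server usually run on different computers connected by a network.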

- 24. Input device: An input device is any hardware device that sends data to a computer, allowing you to interact with and control the computer. The picture shows a Logitech trackball mouse, an example of an input device. The most commonly used or primary input devices on a computer are the keyboard and mouse. However, there are dozens of other devices that can also be used to input data into the computer. Below is a list of input devices that can be used with a computer or a computing device.

- 25. Types of input devices: Audio conversion device; Barcode reader; Biometrics (e.g. fingerprint scanner); Business card reader; Digital camera and digital camcorder; Electroencephalography (EEG); Finger (with touchscreen or Windows Touch); Gamepad, joystick, paddle, steering wheel, and Microsoft Kinect; Gesture recognition; Graphics tablet; Keyboard; Light gun and light pen scanner; Magnetic ink (like the ink found on checks); Magnetic stripe reader; Medical imaging devices (e.g., X-ray, CAT scan, and ultrasound images); Microphone (using voice speech recognition or biometric verification); MIDI keyboard; MICR; Mouse, touchpad, or other pointing device; Optical Mark Reader (OMR); Paddle; Pen or stylus; Punch card reader; Remote; Scanner; Sensors (e.g. heat and orientation sensors); Sonar imaging devices; Touchscreen; Voice (using voice speech recognition or biometric verification); Video capture device; VR helmet and gloves; Webcam; Yoke.

- 26. Output device: An output device is any peripheral that receives data from a computer, usually for display, projection, or physical reproduction. For example, the image shows an inkjet printer, an output device that can make a hard copy of any information shown on your monitor. Another example of an output device is a computer monitor, which displays an image that is received from the computer. Monitors and printers are two of the most common output devices used with a computer.

- 27. Types of output devices: 3D printer; Braille embosser; Braille reader; Flat panel; GPS; Headphones; Computer Output Microfilm (COM); Monitor; Plotter; Printer (dot matrix printer, inkjet printer, and laser printer); Projector; Sound card; Speakers; Speech-generating device (SGD); TV; Video card.

- 28. GPS: Short for Global Positioning System, GPS is a network of satellites that helps users determine a location on Earth. The idea of GPS was conceived after the launch of Sputnik in 1957. In 1964, the TRANSIT system became operational on U.S. Polaris submarines and allowed for accurate positioning updates. It later became available for commercial use in 1967. The picture shows an example of the GARMIN nuvi 350, a GPS used to find locations while driving. On September 1, 1983, Soviet jets shot down the civilian Korean Air Lines Flight 007, flying from New York to Seoul, and killed all 269 passengers and crew. As a result of this mistake, President Ronald Reagan ordered the U.S. military to make the Global Positioning System (GPS) available for civilian use. Today, with the right equipment or software, anyone can establish a connection to these satellites to establish his or her location within 50 to 100 feet.

- 29. Projector: Often no larger than a toaster and weighing only a few pounds, a projector is an output device that can take images generated by a computer and reproduce them on a large, flat (usually lightly colored) surface. For example, projectors are used in meetings to help ensure that all participants can view the information being presented. The picture is that of a ViewSonic projector.

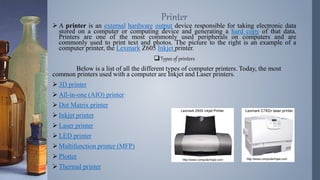

- 30. Printer: A printer is an external hardware output device responsible for taking electronic data stored on a computer or computing device and generating a hard copy of that data. Printers are one of the most commonly used peripherals on computers and are commonly used to print text and photos. The picture to the right is an example of a computer printer, the Lexmark Z605 inkjet printer. Types of printers: Below is a list of all the different types of computer printers. Today, the most common printers used with a computer are inkjet and laser printers. 3D printer; All-in-one (AIO) printer; Dot matrix printer; Inkjet printer; Laser printer; LED printer; Multifunction printer (MFP); Plotter; Thermal printer.

- 31. History of the printer: The first mechanical printer was invented by Charles Babbage for use with the Difference Engine, which Babbage developed in 1822. Babbage's printer used metal rods with printed characters on each rod to print text on rolls of paper that were fed through the device. While inkjet printers started being developed in the late 1950s, it wasn't until the late 1970s that inkjet printers were able to reproduce good quality digital images. These better quality inkjet printers were developed by multiple companies, including Canon, Epson, and Hewlett-Packard. In the early 1970s, Gary Starkweather invented the laser printer while working at Xerox. He modified a Xerox 7000 copier to essentially be a laser printer by having a laser output electronic data onto paper. However, it wasn't until 1984 that laser printers became more widely and economically available, when Hewlett-Packard introduced the first low-cost laser printer, the HP LaserJet. The following year, Apple introduced the Apple LaserWriter laser printer, adding PostScript technology to the printer market.

- 32. There are different interfaces, or ways, a printer can connect to the computer. Today, the most common ways a printer connects to a computer are a USB cable or a Wi-Fi connection. Below is a full list of cables and interfaces used to connect a computer to a printer: Cat5; Firewire; MPP-1150; Parallel port; SCSI; Serial port; USB; Wi-Fi. Pictured: USB cable and port.

- 33. TV: Short for television, a TV or telly is an electronic device that receives a visual and audible signal and plays it to the viewer. Although debated, the invention of the TV is often credited to Vladimir Zworykin, a Russian-born American who worked for Westinghouse, and Philo Taylor Farnsworth, a boy in Beaver City, Utah. Zworykin held the patent for the TV, but it was Farnsworth who was the first person to successfully transmit a TV signal, on September 7, 1927. The picture of the Panasonic TH-58PZ750U is a good example of a television.

- 34. FDD: A floppy disk drive, also called FDD or FD for short, is a computer disk drive that enables a user to save data to removable diskettes. Although 8" disk drives were first made available in 1971, the first disk drives in widespread use were the 5 1/4" floppy disk drives, which were later replaced with the 3 1/2" floppy disk drives. A 5 1/4" floppy disk was capable of storing between 360 KB and 1.2 MB of data, and the 3 1/2" floppy disk was capable of storing between 360 KB and 1.44 MB of data. For both sizes of floppy disk, the amount of data that could be stored depended on whether the disk was single or double sided and whether the disk was regular or high density. Today, due to their extremely limited capacity, computers no longer come equipped with floppy disk drives. This technology has largely been replaced with CD-R, DVD-R, and flash drives.
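
How the number of sides and the density combine into a capacity figure can be shown with a little arithmetic. The sketch below assumes the common IBM PC disk geometries (sides, tracks per side, sectors per track, 512 bytes per sector); those geometry numbers are not stated in the slides above and are included only for illustration, as are the names `floppy_capacity_bytes` and `COMMON_PC_FORMATS`.

```python
def floppy_capacity_bytes(sides, tracks_per_side, sectors_per_track, bytes_per_sector=512):
    """Raw formatted capacity: every sector on every track on every side."""
    return sides * tracks_per_side * sectors_per_track * bytes_per_sector


# Assumed geometries of common PC formats: (sides, tracks per side, sectors per track).
COMMON_PC_FORMATS = {
    '5 1/4" double density (360 KB)': (2, 40, 9),
    '5 1/4" high density (1.2 MB)': (2, 80, 15),
    '3 1/2" double density (720 KB)': (2, 80, 9),
    '3 1/2" high density (1.44 MB)': (2, 80, 18),
}

for label, geometry in COMMON_PC_FORMATS.items():
    size = floppy_capacity_bytes(*geometry)
    print(f"{label}: {size} bytes = {size // 1024} KB")
```

Under these assumptions, doubling the sides or raising the sectors per track (the density) scales the capacity proportionally. The "1.44 MB" label corresponds to 1,440 KB of 1,024 bytes each, which is why the byte count is 1,474,560.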

- 35. Video card: Alternatively known as a display adapter, graphics card, video adapter, video board, or video controller, a video card is an IC or internal board that creates a picture on a display. Without a video card, you would not be able to see this page.

- 36. Video card ports: The picture above is an example of a video card with three connections, or video ports, on the back: a VGA connector, an S-Video connector, and a DVI connector. In the past, VGA or SVGA was the most popular connection used with computer monitors. Today, most flat panel displays use the DVI connector or HDMI connector (not pictured above). Video card expansion slots: In the picture above, the video card is inserted into the AGP expansion slot on the computer motherboard. Over the development of computers, there have been several types of expansion slots used for video cards. Today, the most common expansion slot for video cards is PCIe, which replaced AGP, which replaced PCI, which replaced ISA. Note: Some OEM computers and motherboards may have a video card on-board or integrated into the motherboard.

- 37. SGD: Short for speech-generating device, an SGD is an electronic output device that is used to help individuals with severe speech impairments or other issues that result in difficulty communicating. For example, SGDs can be used by those who have Lou Gehrig's disease (such as astrophysicist Stephen Hawking, shown right) to supplement or replace speech or writing. Speech-generating devices also find use with children who are suspected of having speech deficiencies. How do they work? Although SGDs vary in their design, most consist of several pages of words or symbols on a touch screen that the user may choose. As the individual makes their choices, suggestions are made for the next symbol or word based on what they might want to say. Additionally, the communicator can navigate the various pages manually if the predictive system fails. Most speech-generating devices can produce electronic voice output by using either speech synthesis or digital recordings of someone speaking.

- 38. Speaker: 1. A speaker is a term used to describe the user who is giving vocal commands to a software program. 2. A hardware device connected to a computer's sound card that outputs sound generated by the computer. The picture shows the Altec Lansing VS2221 speakers with subwoofer, which resemble what most computer speakers look like today. When computers were originally released, they had on-board speakers that generated a series of different tones and beeps. As multimedia and games became popular, higher quality computer speakers that required additional power began to be released. Because computer sound cards are not powerful enough to power a nice set of speakers, today's speakers are self-powered, relatively small in size, and contain magnetic shielding. Rating a speaker: Speakers are rated in frequency response, Total Harmonic Distortion, and watts. The frequency response is the measurement of the highs and lows of the sounds the speaker produces. Total Harmonic Distortion (THD) is the amount of distortion created by amplifying the signal. The watts rating is the amount of amplification available for the speakers.

- 39. Sound card: Alternatively referred to as an audio output device, sound board, or audio card, a sound card is an expansion card or IC for producing sound on a computer that can be heard through speakers or headphones. Although a computer does not need a sound device to function, one is included on every machine in one form or another, either in an expansion slot (sound card) or on the motherboard (onboard).

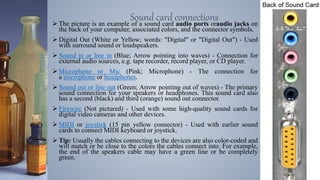

- 40. Sound card connections: The picture is an example of the sound card audio ports, or audio jacks, on the back of your computer, with their associated colors and connector symbols. Digital Out (White or Yellow; words: "Digital" or "Digital Out") - Used with surround sound or loudspeakers. Sound in or line in (Blue; Arrow pointing into waves) - Connection for external audio sources, e.g. tape recorder, record player, or CD player. Microphone or Mic (Pink; Microphone) - The connection for a microphone or headphones. Sound out or line out (Green; Arrow pointing out of waves) - The primary sound connection for your speakers or headphones. This sound card also has a second (black) and third (orange) sound out connector. Firewire (Not pictured) - Used with some high-quality sound cards for digital video cameras and other devices. MIDI or joystick (15 pin yellow connector) - Used with earlier sound cards to connect a MIDI keyboard or joystick. Tip: Usually the cables connecting to the devices are also color-coded and will match or be close to the colors of the ports they connect into. For example, the end of the speaker cable may have a green line or be completely green.

- 41. Uses of a computer sound card: Games; Audio CDs and listening to music; Watching movies; Audio conferencing; Creating and playing MIDI; Educational software; Business presentations; Recording dictations; Voice recognition.

- 42. Plotter: A plotter is a computer hardware device, much like a printer, that is used for printing vector graphics. Instead of toner, plotters use a pen, pencil, marker, or another writing tool to draw multiple, continuous lines onto paper rather than a series of dots like a traditional printer. Though once widely used for computer-aided design, these devices have more or less been phased out by wide-format printers. Plotters are used to produce hard copies of schematics and other similar applications. Advantages of plotters: Plotters can work on very large sheets of paper while maintaining high resolution. They can print on a wide variety of flat materials, including plywood, aluminum, sheet steel, cardboard, and plastic. Plotters allow the same pattern to be drawn thousands of times without any image degradation. Disadvantages: Plotters are quite large when compared to a traditional printer. Plotters are also much more expensive than a traditional printer.

- 43. Headphones: Sometimes referred to as earphones, headphones are a hardware device that plugs into either your computer (line out) or your speakers so that you can listen to audio privately without disturbing anyone else. The picture is an example of a USB headset from Logitech with a microphone, a popular solution for computer gaming.

- 44. Flat-panel display: Sometimes abbreviated as FPD, a flat-panel display is a thin screen display found on all portable computers and is the new standard for desktop computers. Unlike CRT monitors, flat-panel displays use liquid-crystal display (LCD) or light-emitting diode (LED) technology to make them much lighter and thinner compared to a traditional monitor. The picture shows an example of an ASUS flat-panel display.

- 45. Braille reader: A braille reader, also called a braille display, is an electronic device that allows a blind person to read the text displayed on a computer monitor. The computer sends the text to the output device, where it is converted to braille and "displayed" by raising rounded pins through a flat surface on the machine. Braille readers come in various forms, including large units (about the size of a computer keyboard) and smaller units designed to work with laptops and tablet computers. There are also braille reader apps for smartphones and tablets that work in conjunction with a Bluetooth-connected braille output device. Note: Some apps and devices also allow text input via the braille reader.

- 46. Bluetooth: Bluetooth is a computing and telecommunications industry specification that describes how devices such as mobile phones, computers, or personal digital assistants can communicate with each other. Bluetooth is an RF technology that operates at 2.4 GHz, has an effective range of 32 feet (10 meters) (this range can change depending on the power class), and has a transfer rate of 1 Mbps and throughput of 721 Kbps. How is Bluetooth used? A good example of how Bluetooth could be used is the ability to connect a computer to a cell phone without any wires or special connectors. The picture is an example of a USB Bluetooth adapter from SMC. Below are some other examples of how Bluetooth can be used. i. Bluetooth headphones - Headphones that can connect to any Bluetooth device. ii. Bluetooth keyboard and mouse - Wireless keyboards and mice. iii. Bluetooth speaker - Speakers that can connect to any Bluetooth audio device. iv. Bluetooth car - A car with Bluetooth can make hands-free calls in the car. v. Bluetooth watch or health monitor - Bluetooth wrist devices can transmit data over Bluetooth to other devices. vi. Bluetooth lock - A door lock that allows you to remotely lock and unlock a door.
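
To get a feel for what a throughput of 721 Kbps means in practice, the short calculation below estimates how long a file would take to send. It is a rough sketch that assumes 1 Kbps means 1,000 bits per second and ignores connection setup, interference, and retransmissions; the function name `transfer_seconds` and the 1 MB example file are hypothetical.

```python
def transfer_seconds(size_bytes, throughput_kbps=721):
    """Estimated time to move size_bytes at the given throughput (1 Kbps = 1,000 bits/s)."""
    bits_to_send = size_bytes * 8
    return bits_to_send / (throughput_kbps * 1000)


# A hypothetical 1,000,000-byte (1 MB) photo at the 721 Kbps figure quoted above.
print(f"{transfer_seconds(1_000_000):.1f} seconds")  # prints 11.1 seconds
```

The same file at the nominal 1 Mbps transfer rate would take 8 seconds, so the gap between transfer rate and throughput is noticeable for anything larger than a small file.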

- 47. Created by Charles Hull in 1984, the 3D printer is a printing device that creates a physical object from a digital model using materials such as metal alloys, polymers, or plastics. An object's design typically begins in a computer-aided design (CAD) software system, where its blueprint is created. The blueprint is then sent from the CAD system to the printer in a file format known as Stereolithography (STL), which is commonly used to describe 3D objects. The printer then reads the blueprint in cross-sections and begins the process of recreating the object just as it appears in the computer-aided design. In the picture below is an example of a 3D printer called the FlashForge. 3D Printer
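To make the STL hand-off more concrete, here is a minimal sketch of what a text (ASCII) STL file looks like when it describes a single triangle. The helper name `ascii_stl` and the model name `tri` are made up for this illustration; real CAD systems export far larger files and often use the binary variant of STL instead.

```python
def ascii_stl(name, triangles):
    """Return ASCII STL text for a list of (normal, [v1, v2, v3]) triangles.

    Hypothetical helper for illustration only; each normal and vertex
    is an (x, y, z) tuple of numbers.
    """
    lines = [f"solid {name}"]
    for normal, vertices in triangles:
        lines.append("  facet normal {:g} {:g} {:g}".format(*normal))
        lines.append("    outer loop")
        for vertex in vertices:
            lines.append("      vertex {:g} {:g} {:g}".format(*vertex))
        lines.append("    endloop")
        lines.append("  endfacet")
    lines.append(f"endsolid {name}")
    return "\n".join(lines) + "\n"


# One flat triangle lying in the z = 0 plane, facing up.
triangle = ((0, 0, 1), [(0, 0, 0), (1, 0, 0), (0, 1, 0)])
stl_text = ascii_stl("tri", [triangle])
print(stl_text)
```

A printable object is simply many such triangles forming a closed surface, which the printer software later slices into cross-sections.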

- 48. 3D printers are used in many disciplines--aerospace engineering, dentistry, archaeology, biotechnology, and information systems are a few examples of industries that use them. As an example, a 3D printer might be used in the field of archaeology to physically reconstruct ancient artifacts that have been damaged over time, thus eliminating the need for a mold. Application of 3D printers

- 49. A yoke is a hardware input device used with computer games, such as flight simulation games, that allows a player to fly up or down, or back or forth, by pulling or pushing the device. The picture shows a flight yoke by Saitek and is a good example of a typical yoke that connects to your computer. Yoke

- 50. A webcam is a hardware camera connected to a computer that allows anyone connected to the Internet to view either still pictures or motion video of a user or other object. The picture of the Logitech Webcam C270 is a good example of what a webcam may look like. Today, most webcams are either embedded into the display of laptop computers or connected to the USB or FireWire port on the computer. Webcam

- 51. The first webcam is considered to be the XCoffee, also known as the Trojan Room coffee pot. The camera started in 1991 with the help of Quentin Stafford-Fraser and Paul Jardetzky and connected to the Internet in November of 1993 with the help of Daniel Gordon and Martyn Johnson. The camera monitored a coffee pot outside the Trojan Room in the University of Cambridge, so people didn't have to make trips to the coffee pot when it didn't have any coffee. After being mentioned in the press, the website had over 150,000 people online watching the coffee pot. The webcam went offline August 22, 2001. The picture to the left is an example of how the XCoffee appeared online. Note: Unlike a digital camera and digital camcorder, a webcam does not have any built-in storage. Instead, it is always connected to a computer and uses the computer hard drive as its storage.

- 52. Short for Virtual Reality, VR is a computer-generated artificial environment that a user can view, explore, and manipulate. The term was popularized by Jaron Lanier. The environment is explored and manipulated using various input devices such as goggles, headphones, gloves, or a computer. Using these devices, a user can browse throughout a virtual world or pick up and manipulate virtual objects. The picture shows a NASA employee using a VR system. VR

- 53. Internal or external device that connects from the computer or device to a video camera or similar device capable of capturing a video signal. The video capture device is then capable of taking that video signal and converting it to a stored video format, allowing you to store, modify, and show video on a computer. The picture shows an example of an external video capture device from Geniatech that connects your digital camcorder to your computer using USB. There are also internal video capture devices that can be installed into your computer to capture video. Video capture

- 54. 1. In chat-based communication such as IRC, voice is a feature used in moderated chat that allows users with a microphone to talk to one another and those without to spectate. This form of chat is used to cut back on typed questions or comments. 2. Voice chat is a real-time online discussion using a microphone instead of typing. 3. Your voice can also be an input device, with voice recognition used to control your computer. Voice

- 55. Developed in August 1988 by Jarkko Oikarinen, IRC is short for Internet Relay Chat and is a popular chat service still in use today. IRC enables users to connect to a server using a software program or web service and communicate with each other live. For example, the Computer Hope chat room uses an IRC server to allow its users to talk and ask computer questions live. To connect and chat with other IRC users, you must have either an IRC client or a web interface that connects you to IRC servers. There are numerous software IRC clients that enable users to connect to IRC servers and communicate with other users. We suggest the HydraIRC program. Below is a listing of some of the IRC commands that can be used while connected to an IRC server. Although most of these commands will work with most IRC clients and IRC servers, some commands may be invalid. IRC
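As a rough illustration of what an IRC client sends to a server, the sketch below builds a few protocol-level messages (NICK, USER, JOIN, PRIVMSG) as plain text lines. It does not open a network connection. The helper `irc_line`, the nickname `guest42`, and the channel `#example` are made up for this example, and the commands typed inside a given client (often prefixed with a slash) may differ from these raw messages.

```python
def irc_line(command, *params, trailing=None):
    """Format one IRC protocol message, terminated by CRLF.

    Hypothetical helper: `trailing` is the final parameter, which may
    contain spaces and is therefore prefixed with a colon.
    """
    parts = [command, *params]
    if trailing is not None:
        parts.append(":" + trailing)
    return " ".join(parts) + "\r\n"


# A typical sequence after connecting: register, join a channel, speak.
session = [
    irc_line("NICK", "guest42"),
    irc_line("USER", "guest42", "0", "*", trailing="Guest User"),
    irc_line("JOIN", "#example"),
    irc_line("PRIVMSG", "#example", trailing="hello there"),
]
for line in session:
    print(repr(line))
```

A real client would write these lines to a TCP socket and also answer the server's PING messages, which is left out here to keep the sketch short.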

- 56. A touchscreen is a monitor or other flat surface with a sensitive panel directly on the screen that registers the touch of a finger as an input. Instead of being touch-sensitive, some touchscreens use beams across the screen to create a grid that senses the presence of a finger. A touchscreen allows the user to interact with a device without a mouse or keyboard and is used with smartphones, tablets, computer screens, and information kiosks. Touchscreen history The idea of a touchscreen was first described and published by E.A. Johnson in 1965. It wasn't until the early 1970s that the first touchscreen was developed by Frank Beck and Bent Stumpe, engineers at CERN. Together they developed a transparent touchscreen that was first used in 1973. The first resistive touchscreen was developed by George Samuel Hurst in 1975 but wasn't produced and used until 1982. Touchscreen

- 57. Note: Not all touchscreens act the same, and any of the below actions may react differently on your device. Tap - A gentle touch or tap of the screen with a finger once to open an app or select an object. Compared with a traditional computer, a tap is the same action as clicking the mouse. Double-tap - Depending on where you are, a double-tap can have different functions. For example, in a browser, double-tapping the screen zooms into where you double-tapped. Double-tapping text in a text editor selects a word or section of words. Touch and hold - Gently touching and holding your finger over an object selects or highlights the object. For example, you could touch and hold an icon and then drag it somewhere else on the screen or highlight text. Drag - After you have touched and held anything on the screen, keep your finger on the screen and drag that object or highlight in any direction, then lift your finger off the screen to drop it in place or stop highlighting. Swipe - Swiping your finger across the screen scrolls the screen in that direction. For example, pressing the finger at the bottom of the screen and quickly moving it up (swiping) scrolls the screen down. Pinch - Placing two fingers on the screen and pinching them together zooms out; placing two fingers together and then moving them apart zooms in on where you started. How do you use the touchscreen?

- 58. 1. With hardware, a scanner or optical scanner is a hardware input device for a computer. Scanners allow a user to take a printed picture, drawing, or document and convert it into a digital file (either an image or a text file) so that it can be stored, viewed, and edited on a computer. The picture shows an example of a flatbed photo scanner, the Epson V300. How is a scanner connected? A scanner can be connected to a computer using many different interfaces, although today it is most commonly connected using USB. Interfaces: FireWire, Parallel, SCSI, USB. Scanner

- 59. There are also other types of scanners that can be used with a computer. Sheetfed scanner - scanner that scans paper by feeding paper into the scanner. Handheld scanner - scanner that scans information by holding the scanner and dragging it over a page you want to scan. Card scanner - scanner designed to scan business cards. Note: A handheld scanner should not be confused with a barcode reader. 2. With software, a scanner may refer to any program that scans computer files for errors or other problems. A good example is an anti-virus program, which scans the files on the computer for any computer viruses or other malware. Other types of computer scanners

- 60. 1. When referring to a connection, a remote connection or to connect remotely is another way of saying remote access. 2. A hardware device that allows a user to control a device or object in another location. For example, the TV remote allows a person to change the channel, adjust the volume, or turn the TV on and off without having to get up and press the buttons on the front of the TV. Dr. Robert Adler of Zenith invented the first cordless TV remote control in 1956. Before that, TV remotes had wires attached from the TV to the remote. The picture is an example of a remote, the Harmony One Universal Remote from Logitech. Remote

- 61. An early method of data storage used with early computers. Punch cards, also known as Hollerith cards and IBM cards, are paper cards containing several holes that were punched by hand or machine to represent data. These cards allowed companies to store and access information by entering the card into the computer. The picture is an example of a woman using a punch card machine to create a punch card. Punch card

- 62. Using a punch card machine like that shown in the picture above, data can be entered into the card by punching holes in each column to represent one character. Below is an example of a punch card. How did punch cards work?

- 63. Once a card has been completed or the return key has been pressed, the card technically "stores" that information. Because each card could only hold so much data, if you wrote a program using punch cards (one card for each line of code), you would have a stack of punch cards that needed to remain in order. To load the program or read punch card data, each card is inserted into a punch card reader that inputs the data from the card into a computer. As the card is inserted, the punch card reader starts at the top-left side of the card and reads vertically, starting at the top and moving down. After the card reader has read a column, it moves to the next column. How can a human read a punch card? Most of the later punch cards printed at the top of the card what each card contained. So, for these cards, you could examine the top of the card to see what was stored on the card. If an error was noticed on the card, it would be re-printed. If no data was printed at the top of the card, the human would need to know what each number represented and then manually translate each column. If you are familiar with modern computers, this would be similar to knowing that binary "01101000" and "01101001" are equal to "104" and "105", which in ASCII put together spell "hi".
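The binary-to-text comparison above can be checked with a few lines of Python. This is only a sketch of the modern analogy: the function name `decode_binary_ascii` is made up for the example, and it does not model how a real punch card encodes its columns.

```python
def decode_binary_ascii(bit_groups):
    """Turn 8-bit binary strings into text, one ASCII character per group."""
    return "".join(chr(int(bits, 2)) for bits in bit_groups)


groups = ["01101000", "01101001"]

# Step 1: binary to decimal character codes.
codes = [int(bits, 2) for bits in groups]
print(codes)  # [104, 105]

# Step 2: character codes to ASCII text.
print(decode_binary_ascii(groups))  # hi
```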

- 64. How were punch cards used? Punch cards are known to have been used as early as 1725 for controlling textile looms. The cards were later used to store and search for information in 1832 by Semen Korsakov. Later, in 1890, Herman Hollerith developed a method for machines to record and store information on punch cards to be used for the US census. He later formed the company we know as IBM. Why were punch cards used? Early computers could not store files like today's computers. So, if you wanted to create a data file or a program, the only way to use that data with other computers was to use a punch card. After magnetic media was created and became cheaper, punch cards stopped being used. Are punch cards still used? Punch cards were the primary method of storing and retrieving data in the early 1900s. They began to be replaced by other storage devices in the 1960s and today are rarely used or found.

- 65. Short for Optical Mark Reading or Optical Mark Recognition, OMR is the process of gathering information from human beings by recognizing marks on a document. OMR is accomplished by using a hardware device (scanner) that detects a reflection or limited light transmittance on or through a piece of paper. OMR allows for the processing of hundreds or thousands of physical documents per hour. For example, students may recall taking tests or surveys where they filled in bubbles on paper (shown right) with pencil. Once the form had been completed, a teacher or teacher's assistant would feed the forms into a system that grades or gathers information from them. OMR
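The bubble-sheet example can be sketched in code. The snippet below assumes the scanner has already measured how much light each bubble reflects, as a number from 0.0 (dark pencil mark) to 1.0 (blank paper). The function names, the 0.5 threshold, and the sample readings are all assumptions made for illustration, not how any particular OMR product works.

```python
def read_answers(rows, choices="ABCD", dark_threshold=0.5):
    """Pick the marked choice in each row of bubble reflectance readings.

    A bubble counts as filled when its reflectance is below the threshold.
    Rows with no mark or more than one mark are returned as None.
    """
    answers = []
    for reflectance in rows:
        marked = [c for c, r in zip(choices, reflectance) if r < dark_threshold]
        answers.append(marked[0] if len(marked) == 1 else None)
    return answers


def grade(answers, key):
    """Count the answers that match the answer key."""
    return sum(a == k for a, k in zip(answers, key))


# Hypothetical readings for four questions with bubbles A to D.
sheet = [
    [0.9, 0.2, 0.9, 0.8],  # B filled in
    [0.1, 0.9, 0.9, 0.9],  # A filled in
    [0.9, 0.9, 0.9, 0.9],  # left blank
    [0.2, 0.1, 0.9, 0.9],  # two bubbles filled in, so invalid
]
answers = read_answers(sheet)
print(answers)                 # ['B', 'A', None, None]
print(grade(answers, "BACD"))  # 2
```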

- 66. Short for Musical Instrument Digital Interface, MIDI is a standard for digitally representing and transmitting sounds that was first developed in the 1980s. The MIDI sound is played back through the hardware device or computer either through a synthesized audio sound or a waveform stored on the hardware device or computer. The quality of how MIDI sounds when played back by the hardware device or computer depends upon that device's capability. Many older computer sound cards have a MIDI port, as shown in the top right picture. This port allows musical instrument devices to be connected to the computer, such as a MIDI keyboard or a synthesizer. Before connecting any MIDI devices to the computer, you need to purchase a cable that converts the MIDI/Game port connection to the standard 5-pin DIN MIDI connector, or a USB to MIDI converter. If you do not have a MIDI port, the most common way today to connect a MIDI device to a computer is to use a USB to MIDI cable. Tip: The file extension .mid is used to save a MIDI file. MIDI
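To show what "digitally representing" a sound means in MIDI, the sketch below builds the three bytes of a note-on and a note-off message: a status byte (message type plus channel), a note number, and a velocity. The function names are made up for this example, and no MIDI hardware or library is involved. It only constructs the bytes a device would transmit.

```python
def note_on(channel, note, velocity):
    """Build a 3-byte MIDI note-on message (status 0x90 plus channel)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x90 | channel, note, velocity])


def note_off(channel, note, velocity=0):
    """Build a 3-byte MIDI note-off message (status 0x80 plus channel)."""
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    return bytes([0x80 | channel, note, velocity])


# Middle C (note number 60) on the first channel, moderately loud.
print(note_on(0, 60, 100).hex())  # 903c64
print(note_off(0, 60).hex())      # 803c00
```

Because the message only says which note to play and how hard, the actual sound depends on the device that receives it, which is why playback quality varies from one device to another.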

- 67. Sometimes abbreviated as mic, a microphone is a hardware peripheral originally invented by Emile Berliner in 1877 that allows computer users to input audio into their computers. The picture shows the Blue Microphones Yeti USB Microphone - Silver Edition, an example of a high-quality computer microphone. Most microphones connect to the computer using the "mic" port on the computer sound card. See our sound card definition for further information about these ports and an example of what they look like on your computer. Higher quality microphones or microphones with additional features, such as the one shown on this page, connect to the USB port. What is a microphone used for on a computer? Below is a short list of uses for a microphone on a computer: voice recorder; VoIP; voice recognition; computer gaming; online chatting; recording voice for dictation, singing, and podcasts; recording musical instruments. How does a microphone input data into a computer? Because the microphone sends information to the computer, it is considered an input device. For example, when a microphone is used to record a voice or music, the information it records can be stored on the computer and played back later. Another great example of how a microphone is an input device is voice recognition, which uses your voice to tell the computer what task to perform. Microphone

- 68. A magnetic card reader is a device that can retrieve stored information from a magnetic card either by holding the card next to the device or swiping the card through a slot in the device. The picture is an example of a magnetic card reader and a good example of the type of card reader you would see at most retail stores. Magnetic card reader

- 69. When referring to computers and security, biometrics is the identification of a person by the measurement of their biological features. For example, a user identifying themselves to a computer or building by their fingerprint or voice is considered a biometric identification. When compared to a password, this type of system is much more difficult to fake since it is unique to the person. Other common methods of a biometric scan are a person's face, hand, iris, and retina. Below is a listing of common biometric devices. Types of biometric devices Face scanner - Biometric face scanners identify a person by taking measurements of a person's face, for example, the distance between the person's chin, eyes, nose, and mouth. These types of scanners can be very secure, assuming they are smart enough to distinguish between a picture of a person and a real person. Hand scanner - Like your fingerprint, the palm of your hand is also unique to you. A biometric hand scanner identifies the person by the palm of their hand. Finger scanner - Like the picture shown on this page, a biometric finger scanner identifies the person by their fingerprint. These can be a secure method of identifying a person; however, cheap and less sophisticated fingerprint scanners can be duped in a number of ways. For example, on the show MythBusters, they were able to fake a fingerprint using a Gummy Bear candy treat. Retina or iris scanner - A biometric retina or iris scanner identifies a person by scanning the iris or retina of their eyes. These scanners are more secure biometric authentication schemes when compared to the other devices because there is no known way to duplicate the retina or iris. Voice scanner - Finally, a voice analysis scanner mathematically breaks down a person's voice to identify them. These scanners can help improve security, but some less sophisticated scanners can be bypassed using a tape recording. Biometrics