Deep Learning with TensorFlow

- 1. Hands-on Deep Learning with TensorFlow GDG Ahmedabad Women Techmakers

- 2. Interest: Google Ngram & Google Trends. Trend of “Deep Learning” in Google Web Searches

- 3. Hype or Reality? Quotes • “I have worked all my life in Machine Learning, and I have never seen one algorithm knock over benchmarks like Deep Learning” – Andrew Ng (Stanford & Baidu) • “Deep Learning is an algorithm which has no theoretical limitations of what it can learn; the more data you give and the more computational time you provide, the better it is” – Geoffrey Hinton (Google) • “Human-level artificial intelligence has the potential to help humanity thrive more than any invention that has come before it” – Dileep George (Co-Founder, Vicarious) • “For a very long time it will be a complementary tool that human scientists and human experts can use to help them with the things that humans are not naturally good at” – Demis Hassabis (Co-Founder, DeepMind)

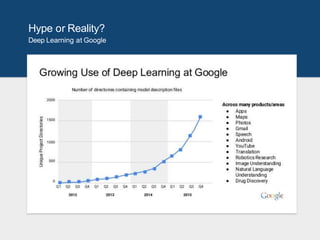

- 4. Hype or Reality? Deep Learning at Google

- 5. Hype or Reality? Deep Learning at Google

- 7. What is Learning? A closer look at how Humans learn

- 8. Basic Terminologies • Features • Labels • Examples • Labelled example • Unlabelled example • Models (Train and Test) • Classification model • Regression model

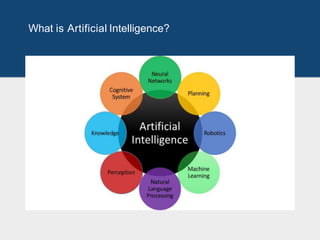

- 9. What is Artificial Intelligence?

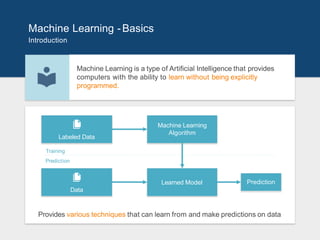

- 10. Machine Learning - Basics: Introduction. Machine Learning is a type of Artificial Intelligence that provides computers with the ability to learn without being explicitly programmed. It provides various techniques that can learn from and make predictions on data. [Diagram: Training: Labeled Data → Machine Learning Algorithm → Learned Model; Prediction: Data → Learned Model → Prediction]

- 11. Machine Learning - Basics: Learning Approaches • Supervised Learning: learning with a labeled training set. Example: an email spam detector trained on already-labeled emails • Unsupervised Learning: discovering patterns in unlabeled data. Example: clustering similar documents based on their text content • Reinforcement Learning: learning based on feedback or reward. Example: learning to play chess by winning or losing

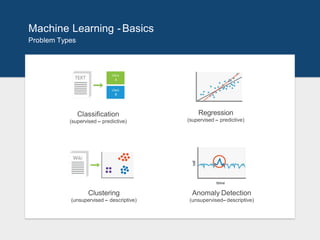

- 12. Machine Learning - Basics: Problem Types • Regression (supervised – predictive) • Classification (supervised – predictive) • Anomaly Detection (unsupervised – descriptive) • Clustering (unsupervised – descriptive)

- 13. What is Deep Learning? Part of the machine learning field of learning representations of data. Exceptionally effective at learning patterns. Utilizes learning algorithms that derive meaning out of data by using a hierarchy of multiple layers loosely inspired by the neural networks of our brain. If you provide the system with tons of information, it begins to understand it and respond in useful ways.

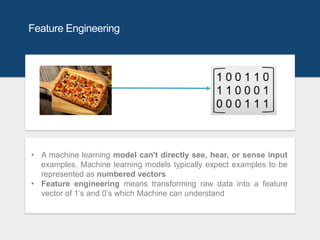

- 14. Feature Engineering • A machine learning model can't directly see, hear, or sense input examples. Machine learning models typically expect examples to be represented as numeric vectors • Feature engineering means transforming raw data into a numeric feature vector that the machine can understand (for example, encoding a categorical value as a set of 1s and 0s)

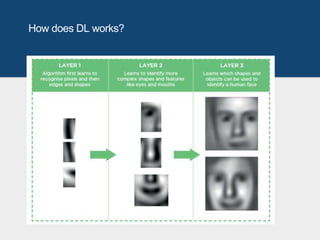
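Here is a minimal sketch of one common feature-engineering step, written in plain Python rather than TensorFlow. A numeric feature is kept as a real number, and a categorical feature is one-hot encoded into 1s and 0s. The `size_cm`/`color` fields, the `colors` vocabulary, and the helper names are hypothetical and chosen only for illustration.

```python
def one_hot(value, vocabulary):
    """Return a list with 1.0 at the position of `value` in `vocabulary`, 0.0 elsewhere."""
    if value not in vocabulary:
        raise ValueError(f"unknown category: {value!r}")
    return [1.0 if item == value else 0.0 for item in vocabulary]


def to_feature_vector(example, vocabulary):
    """Combine a numeric feature with a one-hot encoded categorical feature."""
    # Numeric features stay as real numbers; categorical ones become 0/1 columns.
    return [float(example["size_cm"])] + one_hot(example["color"], vocabulary)


colors = ["red", "green", "blue"]  # hypothetical vocabulary
print(to_feature_vector({"size_cm": 7.5, "color": "green"}, colors))
# [7.5, 0.0, 1.0, 0.0]
```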

- 16. How does DL work?

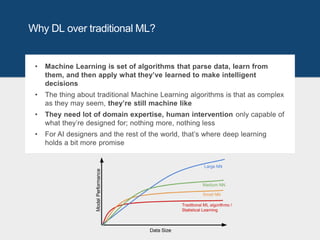

- 17. Why DL over traditional ML? • Machine Learning is a set of algorithms that parse data, learn from it, and then apply what they’ve learned to make intelligent decisions • The thing about traditional Machine Learning algorithms is that, as complex as they may seem, they’re still machine-like • They need a lot of domain expertise and human intervention, and are only capable of what they’re designed for; nothing more, nothing less • For AI designers and the rest of the world, that’s where deep learning holds a bit more promise

- 18. Why DL over traditional ML? • Deep Learning requires high-end machines, unlike traditional Machine Learning algorithms • Thanks to GPUs and TPUs! • Much less manual Feature Engineering!! • ML: most of the applied features need to be identified by a domain expert in order to reduce the complexity of the data and make patterns more visible for learning algorithms to work • DL: the algorithms try to learn high-level features from data in an incremental manner

- 19. Why DL over traditional ML? • The problem-solving approach: • Deep Learning techniques tend to solve the problem end to end • Machine Learning techniques need the problem statement to be broken down into different parts, which are solved first, and then their results are combined at the final stage • For example, for a multiple-object detection problem, Deep Learning techniques like YOLO take the image as input and provide the location and name of the objects at the output • A typical Machine Learning pipeline instead first uses a bounding-box object detection algorithm to find candidate regions, then extracts HOG features and feeds them as input to a learning algorithm such as an SVM in order to recognize the relevant objects

- 20. What changed? Old wine in new bottles • Big Data (Digitalization) • Computation (Moore’s Law, GPUs) • Algorithmic Progress

- 21. When to use DL over others, and when not? 1. Deep Learning outperforms other techniques if the data size is large; with small data sizes, traditional Machine Learning algorithms are preferable 2. Finding a large amount of “good” data is always a painful task, but hopefully less so from now on, thanks to the new Google Dataset Search 3. Deep Learning techniques need high-end infrastructure to train in a reasonable time 4. When there is a lack of domain understanding for feature introspection, Deep Learning techniques outshine others, as you have to worry less about feature engineering 5. Model training time: a Deep Learning algorithm may take weeks or months, whereas traditional Machine Learning algorithms take a few seconds to a few hours 6. Model testing time: DL takes much less time compared to ML 7. DL generally does not reveal the “how and why” behind its output: it’s a black box 8. Deep Learning really shines when it comes to complex problems such as image classification, natural language processing, and speech recognition 9. It excels in tasks where the basic unit (pixel, word) has very little meaning in itself, but the combination of such units has a useful meaning

- 22. Got interested?? Applications of Deep Learning

- 23. Try it yourself: https://www.clarifai.com/demo

- 25. Try it yourself: https://www.paralleldots.com/sentiment-analysis

- 29. The Big Players Indian Startups

- 32. TensorFlow ● TensorFlow is an open-source library for Machine Intelligence ● It was developed by the Google Brain team and released in 2015 ● It provides high-level APIs to help implement many machine learning algorithms and develop complex models in a simpler manner ● What is a tensor? ● A mathematical object, analogous to but more general than a vector, represented by an array of components that are functions of the coordinates of a space ● TensorFlow computations are expressed as stateful dataflow graphs ● The name TensorFlow derives from the operations that such neural networks perform on multidimensional data arrays known as ‘tensors’
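This small sketch makes “multidimensional data arrays” concrete. It uses plain nested Python lists, not TensorFlow's own tensor type, to show the rank and shape of a few tensors. The `shape` helper is hypothetical and assumes that every nested list at the same depth has the same length. In TensorFlow itself, `tf.constant(matrix).shape` would report the same (2, 2) shape for the matrix.

```python
def shape(tensor):
    """Return the shape of a regular nested list, e.g. [[1, 2, 3], [4, 5, 6]] -> (2, 3)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0] if tensor else None  # descend one level
    return tuple(dims)


scalar = 5.0                                              # rank 0
vector = [1.0, 2.0, 3.0]                                  # rank 1
matrix = [[1.0, 2.0], [3.0, 4.0]]                         # rank 2
cube = [[[0.0] * 4 for _ in range(3)] for _ in range(2)]  # rank 3

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix), ("cube", cube)]:
    print(name, "rank", len(shape(t)), "shape", shape(t))
```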

- 33. Why TensorFlow? This is a dialogue between two people on “Why TensorFlow?” • Person 1: Well, it’s an ML framework!! • Person 2: But isn’t it a complex one? I know a few which are very simple and easy to use, like scikit-learn, PyTorch, Keras, etc. Why use TensorFlow? • Person 1: OK, can you implement your own model in scikit-learn and scale it if you want? • Person 2: No. OK, but then for Deep Learning, why not use Keras or PyTorch? They have so many models already available. • Person 1: TensorFlow is not limited to implementing your own models; it also has many models available. Apart from that, you can do large-scale distributed model training without writing complex infrastructure around your code, or develop models that need to be deployed on mobile platforms. • Person 2: OK. Now I understand “Why TensorFlow?”

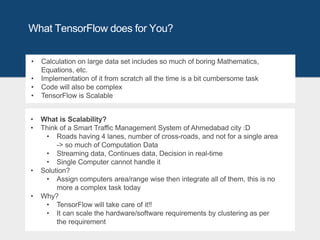

- 34. What does TensorFlow do for you? • What is Scalability? • Think of a Smart Traffic Management System for Ahmedabad city :D • Roads with 4 lanes, many cross-roads, and not just a single area -> a huge amount of data to compute on • Streaming, continuous data; decisions in real time • A single computer cannot handle it • Solution? • Assign computers area-wise or range-wise, then integrate all of them; this is no longer a complex task today • Why? • TensorFlow will take care of it!! • It can scale to the hardware/software requirements by distributing the work across a cluster as needed • Calculations on large data sets involve a lot of boring mathematics, equations, etc. • Implementing them from scratch every time is cumbersome • The code would also be complex • TensorFlow is Scalable

- 35. What does TensorFlow do for you? • Creates its own environment and takes care of everything you will need! • Manages memory allocations • Lets you create variables, scale them, and make them global • Both statistical and Deep Learning methods can be implemented • Computation on multidimensional arrays (tensors) is fast because of a very powerful and optimised graph data structure • Good for research and testing • Useful for production-level coding

- 37. How do you Classify these Points?

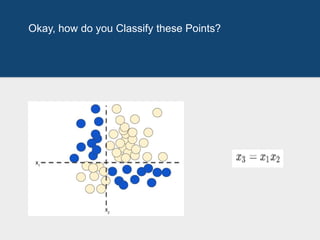

- 38. Okay, how do you Classify these Points?

- 39. Okay, okay, but now? Non-linearities are tough to model. In complex datasets, the task becomes very cumbersome. What is the solution?

- 40. Inspired by the human Brain. An artificial neuron contains a nonlinear activation function and has several incoming and outgoing weighted connections. Neurons are trained to filter and detect specific features or patterns (e.g. edge, nose) by receiving weighted input, transforming it with the activation function, and passing it to the outgoing connections.
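The neuron described above can be sketched in a few lines of plain Python. It takes a weighted sum of the inputs plus a bias and passes the result through a nonlinear activation. The sigmoid is used here as one common choice. The weights and bias are hypothetical values picked so the arithmetic is easy to follow.

```python
import math


def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=sigmoid):
    """One artificial neuron: weighted sum of inputs plus bias, passed through an activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias  # weighted input
    return activation(z)                                    # nonlinear transform


# Hypothetical weights, chosen only for illustration: z = 0.5 + 0.0 + 0.5 - 1.0 = 0.0
print(neuron([1.0, 0.0, 2.0], [0.5, -1.0, 0.25], bias=-1.0))  # sigmoid(0.0) = 0.5
```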

- 41. Modelling a Linear Equation

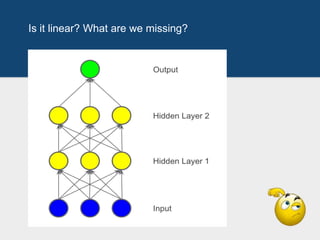

- 42. How to deal with Non-linear Problems? We added a hidden layer of intermediary values. Each yellow node in the hidden layer is a weighted sum of the blue input node values. The output is a weighted sum of the yellow nodes.

- 43. Is it linear? What are we missing?
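One way to answer the question: without an activation function, the hidden layer adds no expressive power, because a weighted sum of weighted sums is still a weighted sum. The sketch below uses plain Python and hypothetical weights. It computes the two-layer network and then the equivalent single layer W = W2·W1, b = W2·b1 + b2. Both give the same output.

```python
def linear_layer(x, W, b):
    """Compute W @ x + b for a weight matrix W (list of rows) and bias vector b."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


# Hypothetical weights for a 2-input -> 2-hidden -> 1-output network with NO activation.
W1, b1 = [[1.0, 2.0], [-1.0, 0.5]], [0.5, -1.0]
W2, b2 = [[3.0, -2.0]], [1.0]


def two_layer(x):
    hidden = linear_layer(x, W1, b1)     # hidden nodes: weighted sums of the inputs
    return linear_layer(hidden, W2, b2)  # output: weighted sum of the hidden nodes


# The same mapping written as ONE linear layer: W = W2 @ W1, b = W2 @ b1 + b2.
W = [[sum(W2[0][k] * W1[k][j] for k in range(2)) for j in range(2)]]
b = [sum(W2[0][k] * b1[k] for k in range(2)) + b2[0]]

x = [2.0, -1.0]
print(two_layer(x), linear_layer(x, W, b))  # [9.5] [9.5]
```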

- 44. Activation Functions Non-linearity is needed to learn complex (non-linear) representations of data, otherwise the NN would be just a linear function.
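This sketch implements three widely used activation functions in plain Python: ReLU, sigmoid, and tanh. The slide's own figure may show a different selection. The last line hints at why these functions help. A linear function f would satisfy f(a + b) = f(a) + f(b), and ReLU does not.

```python
import math


def relu(z):
    """Rectified linear unit: 0 for negative inputs, identity otherwise."""
    return max(0.0, z)


def sigmoid(z):
    """Squashes z into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def tanh(z):
    """Squashes z into (-1, 1)."""
    return math.tanh(z)


for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}")

# A linear f would give f(-1) + f(1) == f(0); ReLU does not.
print(relu(-1.0) + relu(1.0), relu(-1.0 + 1.0))  # 1.0 vs 0.0
```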

- 47. Gradient Descent Gradient Descent finds the (local) minimum of the cost function (used to calculate the output error) and is used to adjust the weights

- 48. Gradient Descent • Convex problems have only one minimum; that is, only one place where the slope is exactly 0. That minimum is where gradient descent converges • The gradient descent algorithm starts from an initial (often random) value of the weight and calculates the gradient of the loss curve at that starting point. In brief, a gradient is a vector of partial derivatives • A gradient is a vector and hence has both magnitude and direction • The gradient points in the direction of steepest increase of the loss, so the gradient descent algorithm takes a step in the direction of the negative gradient in order to reduce loss as quickly as possible

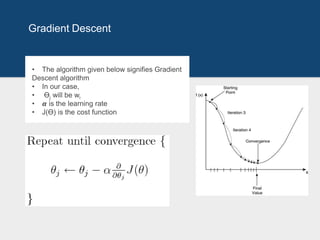

- 49. Gradient Descent • The algorithm given below is the Gradient Descent update rule • In our case, • θj will be wi • α is the learning rate • J(θ) is the cost function

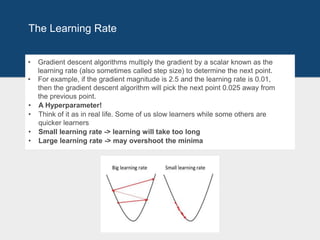
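The usual form of this update rule is θj := θj − α · ∂J(θ)/∂θj, repeated until convergence. Below is a minimal plain-Python sketch of that rule for a one-weight model ŷ = w·x with a mean-squared-error cost. This cost is convex, so it has a single minimum. The data (generated by y = 3x), the learning rate `alpha = 0.05`, and the step count are hypothetical.

```python
def mse_and_grad(w, xs, ys):
    """Cost J(w) = mean((w*x - y)^2) for a one-weight model y_hat = w*x, and dJ/dw."""
    n = len(xs)
    errors = [w * x - y for x, y in zip(xs, ys)]
    cost = sum(e * e for e in errors) / n
    grad = sum(2 * e * x for e, x in zip(errors, xs)) / n
    return cost, grad


def gradient_descent(xs, ys, alpha=0.05, steps=200, w=0.0):
    """Repeat w := w - alpha * dJ/dw (theta_j = w in the rule above)."""
    for _ in range(steps):
        _, grad = mse_and_grad(w, xs, ys)
        w -= alpha * grad  # step in the direction of the NEGATIVE gradient
    return w


xs = [1.0, 2.0, 3.0, 4.0]      # hypothetical data generated by y = 3x
ys = [3.0 * x for x in xs]
w = gradient_descent(xs, ys)
print(round(w, 4))
```

With this data the gradient works out to 15·(w − 3). Each step therefore shrinks the distance to w = 3 by a factor of four, and `w` ends up very close to 3.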

- 51. The Learning Rate • Gradient descent algorithms multiply the gradient by a scalar known as the learning rate (also sometimes called step size) to determine the next point • For example, if the gradient magnitude is 2.5 and the learning rate is 0.01, then the gradient descent algorithm will pick the next point 0.025 away from the previous point • A Hyperparameter! • Think of it as in real life: some of us are slow learners while others are quicker learners • Small learning rate -> learning will take too long • Large learning rate -> may overshoot the minimum
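This minimal sketch shows the trade-off using the toy loss L(w) = w², which has gradient 2w and its minimum at w = 0, with three hypothetical learning rates. Each step computes w := w − α·2w = (1 − 2α)·w. A very small α barely moves. A moderate α converges quickly. An α above 1 overshoots the minimum by more at each step and diverges.

```python
def descend(alpha, w=1.0, steps=20):
    """Run gradient descent on the toy loss L(w) = w**2 (gradient 2w, minimum at w = 0)."""
    for _ in range(steps):
        w -= alpha * (2 * w)  # next point = current point - learning_rate * gradient
    return w


for alpha in (0.01, 0.4, 1.1):  # hypothetical: too small, reasonable, too large
    print(f"alpha={alpha}: w after 20 steps = {descend(alpha):.4g}")
```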

- 52. But how will the model LEARN? BACKPROPAGATION

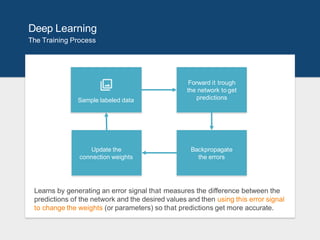

- 53. Deep Learning: The Training Process 1. Sample labeled data 2. Forward it through the network to get predictions 3. Backpropagate the errors 4. Update the connection weights. The network learns by generating an error signal that measures the difference between its predictions and the desired values, and then using this error signal to change the weights (or parameters) so that predictions get more accurate.
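This minimal plain-Python sketch walks through the four training steps above for a tiny 2-2-1 network with sigmoid units and a squared-error loss, trained with full-batch gradient descent. The XOR data set, the network size, the learning rate, and the epoch count are all hypothetical choices for illustration. A real TensorFlow model would compute these gradients automatically rather than by hand.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def init_params(seed=0):
    """Small random weights for a 2-input, 2-hidden-unit, 1-output network."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)],  # W1[j][i]: input i -> hidden j
        "b1": [0.0, 0.0],
        "W2": [rng.uniform(-1, 1) for _ in range(2)],                      # W2[j]: hidden j -> output
        "b2": 0.0,
    }


def forward(p, x):
    """Forward pass: returns the hidden activations and the network's prediction."""
    h = [sigmoid(p["W1"][j][0] * x[0] + p["W1"][j][1] * x[1] + p["b1"][j]) for j in range(2)]
    y = sigmoid(p["W2"][0] * h[0] + p["W2"][1] * h[1] + p["b2"])
    return h, y


def loss(p, data):
    """Mean of 0.5 * (prediction - target)^2 over the data set."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)


def gradients(p, data):
    """Backpropagation: apply the chain rule from the output error back to every weight."""
    g = {"W1": [[0.0, 0.0], [0.0, 0.0]], "b1": [0.0, 0.0], "W2": [0.0, 0.0], "b2": 0.0}
    n = len(data)
    for x, t in data:
        h, y = forward(p, x)
        d_out = (y - t) * y * (1 - y)  # error signal at the output unit
        g["b2"] += d_out / n
        for j in range(2):
            g["W2"][j] += d_out * h[j] / n
            d_hid = d_out * p["W2"][j] * h[j] * (1 - h[j])  # error signal at hidden unit j
            g["b1"][j] += d_hid / n
            for i in range(2):
                g["W1"][j][i] += d_hid * x[i] / n
    return g


def train(data, lr=0.5, epochs=5000, seed=0):
    p = init_params(seed)
    for _ in range(epochs):
        g = gradients(p, data)   # forward pass + backpropagate the errors
        p["b2"] -= lr * g["b2"]  # update the connection weights
        for j in range(2):
            p["W2"][j] -= lr * g["W2"][j]
            p["b1"][j] -= lr * g["b1"][j]
            for i in range(2):
                p["W1"][j][i] -= lr * g["W1"][j][i]
    return p


XOR = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 0)]  # sample labeled data
trained = train(XOR)
print(f"loss before: {loss(init_params(), XOR):.4f}  after: {loss(trained, XOR):.4f}")
```

Whether this particular network ends up solving XOR depends on the random initialization, but the loss should go down. When you write gradients by hand, comparing them against a finite-difference estimate, (L(w + ε) − L(w − ε)) / 2ε, is a cheap sanity check.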

- 54. Still not so Perfect! Backprop can go wrong • Vanishing Gradients: • The gradients for the lower layers (closer to the input) can become very small. In deep networks, computing these gradients can involve taking the product of many small terms • Exploding Gradients: • If the weights in a network are very large, then the gradients for the lower layers involve products of many large terms. In this case you can have exploding gradients: gradients that get too large to converge

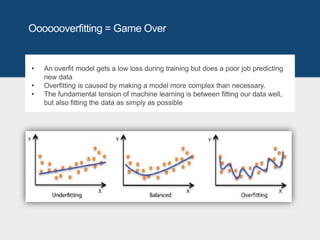
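This toy illustration assumes a chain of one-unit layers that all share the same hypothetical weight. Backpropagation multiplies one local derivative per layer, so the input gradient is a product of `depth` terms. With sigmoid units and weight 1, every term is at most 0.25, so the product vanishes. With linear units and weight 2, every term is 2, so the product explodes. Real networks multiply matrices rather than scalars, but the effect is similar in spirit.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def chain_gradient(weight, depth, activation="sigmoid", x=0.0):
    """d(output)/d(input) for a toy chain of `depth` one-unit layers sharing `weight`.

    Backprop multiplies one local derivative per layer, so the result is a product
    of `depth` terms: that product is what vanishes or explodes.
    """
    grad, a = 1.0, x
    for _ in range(depth):
        z = weight * a
        if activation == "sigmoid":
            a = sigmoid(z)
            local = weight * a * (1 - a)  # sigmoid'(z) <= 0.25, so |local| <= 0.25 * |weight|
        elif activation == "linear":
            a = z
            local = weight
        else:
            raise ValueError(f"unknown activation: {activation}")
        grad *= local
    return grad


for depth in (2, 10, 30):
    print(depth,
          chain_gradient(1.0, depth),                       # small terms -> vanishing
          chain_gradient(2.0, depth, activation="linear"))  # large terms -> exploding
```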

- 55. Ooooooverfitting = Game Over • An overfit model gets a low loss during training but does a poor job predicting new data • Overfitting is caused by making a model more complex than necessary. • The fundamental tension of machine learning is between fitting our data well, but also fitting the data as simply as possible

- 56. Solution: Dropout Regularization. It works by randomly "dropping out" unit activations in a network for a single gradient step. The more you drop out, the stronger the regularization: • 0.0 -> No dropout regularization • 1.0 -> Drop out everything; the model learns nothing • Values between 0.0 and 1.0 -> More useful

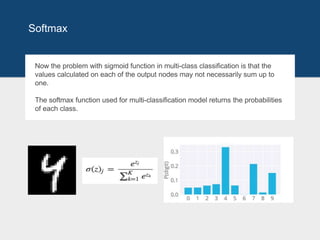
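Here is a minimal sketch of dropout in plain Python. It implements “inverted” dropout: during training, the surviving activations are scaled by 1 / (1 − rate), so nothing needs rescaling at inference time. This is also the scaling documented for Keras's `tf.keras.layers.Dropout`. The function name and its arguments are hypothetical, and `rate` plays the role of the 0.0 to 1.0 value on the slide.

```python
import random


def dropout(activations, rate, training=True, rng=None):
    """Inverted dropout: zero each activation with probability `rate` and scale the
    survivors by 1 / (1 - rate) so the expected activation is unchanged.
    Does nothing at inference time (training=False)."""
    if not 0.0 <= rate <= 1.0:
        raise ValueError("rate must be in [0, 1]")
    if not training or rate == 0.0:
        return list(activations)         # 0.0 -> no dropout regularization
    if rate == 1.0:
        return [0.0] * len(activations)  # 1.0 -> everything dropped, nothing to learn from
    rng = rng or random.Random()
    keep = 1.0 - rate
    return [a / keep if rng.random() >= rate else 0.0 for a in activations]


acts = [1.0] * 10
print(dropout(acts, 0.5, rng=random.Random(0)))  # each entry is 0.0 or 2.0
```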

- 57. Softmax. The problem with the sigmoid function in multi-class classification is that the values calculated on the output nodes do not necessarily sum to one. The softmax function, used in multi-class classification models, returns a probability for each class, and these probabilities sum to one.
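This plain-Python sketch shows the difference using hypothetical output-layer scores for three classes. Applying the sigmoid to each score independently gives values that do not sum to one. Softmax exponentiates the scores and divides by their total, so the results are positive and sum to one. Subtracting the largest score first is a common trick that avoids overflow in `math.exp` without changing the result.

```python
import math


def softmax(logits):
    """Turn arbitrary real scores into probabilities that are positive and sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


scores = [2.0, 1.0, 0.1]                # hypothetical output-layer scores for 3 classes
print(sum(sigmoid(z) for z in scores))  # independent sigmoids: the sum is not 1
print(sum(softmax(scores)))             # softmax: sums to 1 (up to rounding)
```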

- 58. Game Time!! Visit kahoot.it Game PIN: 508274

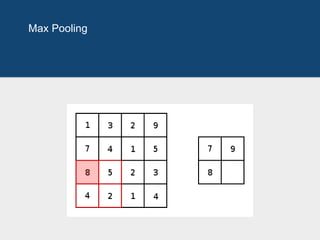

- 59. Convolutional Neural Nets (CNN). A convolution layer is a feature detector that automagically learns to filter out unneeded information from an input by using a convolution kernel. Pooling layers compute the max or average value of a particular feature over a region of the input data (downsizing the input images). Pooling also helps detect objects even when they appear in slightly different places, and it reduces memory size.

- 60. Convolution…! ;)

- 61. Convolution
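Here is a minimal sketch of a 2-D convolution in plain Python, with stride 1 and no padding. Like most deep learning libraries, including TensorFlow's `tf.nn.conv2d`, it slides the kernel over the image without flipping it, which mathematicians would call cross-correlation. The image and the vertical-edge kernel are hypothetical. In a CNN, the kernel values are learned during training rather than hand-picked.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and take the weighted sum at
    each position. Like most DL libraries, the kernel is not flipped (cross-correlation)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


image = [[10, 10, 10, 0, 0, 0] for _ in range(6)]  # bright left half, dark right half
vertical_edge = [[1, 0, -1] for _ in range(3)]     # hypothetical hand-picked edge detector
for row in conv2d_valid(image, vertical_edge):
    print(row)  # [0, 30, 30, 0]: strong response only where brightness changes
```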

- 62. Max Pooling
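Max pooling can be sketched in a few lines: keep only the largest value in each window. This plain-Python version assumes a 2×2 window with stride 2 and no padding. That is a common default, not necessarily the setting on the slide. It uses a hypothetical 4×4 feature map.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Keep only the largest value in each size x size window (no padding)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r + i][c + j] for i in range(size) for j in range(size))
            for c in range(0, cols - size + 1, stride)
        ]
        for r in range(0, rows - size + 1, stride)
    ]


fmap = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [7, 2, 9, 8],
    [1, 0, 3, 4],
]
print(max_pool2d(fmap))  # [[6, 5], [7, 9]]: a 4x4 map downsized to 2x2
```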

- 63. Let’s build our first CNN. Visit: https://colab.research.google.com/drive/1arAJnnTn0wI3KoSSJHg_Hjw40VPPMtP0

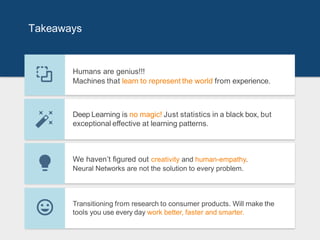

- 64. Takeaways: Humans are geniuses!!! Machines that learn to represent the world from experience. Deep Learning is no magic! Just statistics in a black box, but exceptionally effective at learning patterns. We haven’t figured out creativity and human empathy. Neural Networks are not the solution to every problem. Transitioning from research to consumer products. Will make the tools you use every day work better, faster and smarter.

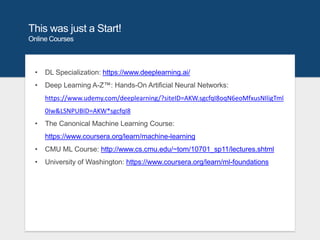

- 65. This was just a Start! Online Courses • DL Specialization: https://www.deeplearning.ai/ • Deep Learning A-Z™: Hands-On Artificial Neural Networks: https://www.udemy.com/deeplearning/ • The Canonical Machine Learning Course: https://www.coursera.org/learn/machine-learning • CMU ML Course: http://www.cs.cmu.edu/~tom/10701_sp11/lectures.shtml • University of Washington: https://www.coursera.org/learn/ml-foundations

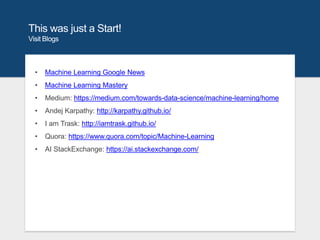

- 66. This was just a Start! Visit Blogs • Machine Learning Google News • Machine Learning Mastery • Medium: https://medium.com/towards-data-science/machine-learning/home • Andrej Karpathy: http://karpathy.github.io/ • I am Trask: http://iamtrask.github.io/ • Quora: https://www.quora.com/topic/Machine-Learning • AI StackExchange: https://ai.stackexchange.com/

- 67. This was just a Start! Useful Links (Extremely!) • Over 200 of the Best Machine Learning, NLP, and Python Tutorials: https://medium.com/machine-learning-in-practice/over-200-of-the-best-machine-learning-nlp-and-python-tutorials-2018-edition-dd8cf53cb7dc • Awesome Deep Learning: https://github.com/ChristosChristofidis/awesome-deep-learning • Machine Learning Glossary: https://developers.google.com/machine-learning/glossary/

- 68. References • https://towardsdatascience.com/why-deep-learning-is-needed-over-traditional-machine-learning-1b6a99177063 • https://iamtrask.github.io/2015/07/12/basic-python-network/ • https://www.youtube.com/watch?v=BmkA1ZsG2P4 • https://www.slideshare.net/LuMa921/deep-learning-a-visual-introduction • https://developers.google.com/machine-learning/crash-course/

- 70. Happy Learning! Charmi Chokshi, AI and Data Enthusiast, Final-year ICT Engineering Student at Ahmedabad University. Let’s Connect! • LinkedIn • GitHub. GDG Ahmedabad Women Techmakers

Editor's Notes

- It takes 20 years to make an overnight success. In the case of the Google Books Ngram Viewer, the text to be analyzed comes from the vast number of books Google has scanned in from public libraries to populate its Google Books search engine. The y-axis indicates the relative frequency, in percentage terms, of the text being searched.

- Study about them

- Study where exactly it is used

- Artificial Intelligence is all the rage! All of a sudden everyone, whether they understand it or not, is talking about it. Understanding the latest advancements in artificial intelligence can seem overwhelming, but it really boils down to two very popular concepts: Machine Learning and Deep Learning. Lately, Deep Learning has been gaining popularity due to its superior accuracy when trained with huge amounts of data.

- Mimics human behaviour and intelligence using logic and rules

- Humans learn from experience; machines follow instructions. When a machine tries to learn from experience (data, in its case), that is ML

- Supervised: learning with the help of a teacher. Reinforcement: like when we get stars, gifts, or trophies for doing good work, to encourage us to do it again

- Can you guess the features of an elephant? The more relevant features you have, the better

- A deep neural network consists of a hierarchy of layers, whereby each layer transforms the input data into more abstract representations (e.g. edge -> nose -> face). The output layer combines those features to make predictions. In the example of image recognition it means identifying light/dark areas before categorizing lines and then shapes to allow face recognition.

- The “Big Data Era” of technology will provide huge amounts of opportunities for new innovations in deep learning. ISRO Example

- GPUs have become an integral part of executing any Deep Learning algorithm. The TPU is an ASIC built by Google specifically for neural network workloads. Deep Learning largely eliminates the need for domain expertise and hard-core feature extraction

- Harder problems such as video understanding and natural language processing will be successfully tackled by deep learning algorithms

- ML: test time increases as the size of the data increases

- Image search systems, automatic tagging (e.g. Facebook). Allows us to search images via a standard query

- Google Photos finds objects inside images using CNNs. “I am not a robot” checks: why do they always ask us to find roads? They are using us!!

- LSTM Movie reviews/ratings, new product interests

- MRI, CT scans, fMRI… (my senior)

- Buy, sell, and make predictions based on market data streams, portfolio allocation, and risk profiles. Useful in both short-term trading and long-term investing

- In malls: image processing, person identification, activity recognition… which direction people go, which shop they visit, past purchase history, recommendation systems. Find the best selling strategy

- The first class of Launchpad Accelerator India: Google took 10 Indian startups under its wing to mentor them in the use of artificial intelligence and machine learning through its India-focused program, Launchpad Accelerator; the event was on August 31st. The key selection criteria required India-only startups solving for India’s needs with advanced technologies such as AI/ML, in areas such as healthcare, agriculture, and fintech: Uncanny Vision (vehicle analytics, people analytics, and object analytics), MultiBhashi (NLP), OliveWear (health), Vassar Labs (agriculture using satellite data), Wysa (AI-based chat therapy for mental health), CareNx (smartphone-enabled fetal heart monitor for early detection of fetal asphyxia in babies), Signzy (a solution ensuring digital trust, using AI & blockchain to provide smart e-verification and risk prediction).

- Do you have any problem statement or you are working currently on that which can be solved using DL?

- There are a number of different open-source tools for DL, such as TensorFlow, Theano, Caffe, Keras, neon, and Brainstorm

- A feature cross is a synthetic feature that encodes nonlinearity in the feature space by multiplying two or more input features together. (The term cross comes from cross product.)
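Here is a small sketch of the idea with four hypothetical points whose label is 1 in the top-right and bottom-left quadrants. No single straight line in the (x1, x2) plane separates these four points. After adding the crossed feature x3 = x1 · x2, the simple linear rule “predict 1 when x3 > 0” classifies all of them correctly. The weight `w3 = 1.0` and bias `b = 0.0` are hand-picked, not learned.

```python
# Hypothetical toy data: label is 1 in the top-right and bottom-left quadrants, 0 otherwise.
points = [((1.0, 2.0), 1), ((-1.5, -0.5), 1), ((-2.0, 1.0), 0), ((0.5, -3.0), 0)]


def feature_cross(x1, x2):
    """Synthetic feature x3 = x1 * x2: positive exactly in the top-right / bottom-left quadrants."""
    return x1 * x2


def predict(x1, x2, w3=1.0, b=0.0):
    """A *linear* rule in the crossed feature: predict 1 when w3 * x3 + b > 0."""
    return 1 if w3 * feature_cross(x1, x2) + b > 0 else 0


for (x1, x2), label in points:
    print((x1, x2), "label", label, "prediction", predict(x1, x2))
```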

- The first hierarchy of neurons that receives information in the visual cortex are sensitive to specific edges while brain regions further down the visual pipeline are sensitive to more complex structures such as faces.

- Is the model linear?

- We use gradient descent to update the parameters of our model. For linear regression with squared loss, the resulting plot of loss vs. w1 is always convex; in other words, it is always bowl-shaped.

- Softmax "squashes" a K-dimensional vector of arbitrary real values to a K-dimensional vector of real values in the range (0, 1) that add up to 1.