ESXpert strategies VMware vSphere

- 1. ESXpert Strategies in Constructing & Administering VMware vSphere Greg Shields Partner & Principal Technologist Concentrated Technology www.ConcentratedTech.com

- 2. This slide deck was used in one of our many conference presentations. We hope you enjoy it, and invite you to use it within your own organization however you like. For more information on our company, including information on private classes and upcoming conference appearances, please visit our Web site, www.ConcentratedTech.com . For links to newly-posted decks, follow us on Twitter: @concentrateddon or @concentratdgreg This work is copyright ©Concentrated Technology, LLC

- 3. 44% of Virtualization Deployments Fail, according to a CA announcement from 2007. Causes: inability to quantify ROI; insufficient administrator training; expectations not aligned with results. Success = measuring performance, diligent inventory, load distribution, and thorough investigation of technology.

- 4. 55% Experience More Problems than Benefits with Virtualization, according to an Interop survey in May 2009. Causes: lack of visibility; lack of tools to troubleshoot performance problems; insufficient education on virtual infrastructure software. Statistics: 27% could not visualize or manage performance; 25% cite training shortfalls; 21% were unable to secure the infrastructure; 50% say that implementation costs are too high.

- 6. Conclusion: Virtualization is Harder than it Looks! Solution: Elevate your Experience. Become an ESXpert !

- 7. WARNING: Prepare Yourself! Conversation Ahead! Everyone’s requirements are different. Everyone’s environment is different. We need to hear about it. We all learn (even me). This is a strategies session: the other four ESXpert sessions are demo-heavy. Here, very little demo; there, very few slides. Today’s answers to leave with: How do you best construct the environment? What are the common mistakes? How should you connect the pieces?

- 8. Class Discussion So, what’s the biggest mistake you’ve made so far with your virtual infrastructure?

- 9. Six Steps in a Typical Virtualization Implementation Step 0: Environment Assessment Step 1: Constructing Virtualization Step 2: Backups Expansion Step 3: Virtualization to Private Cloud Step 4: Virtualization at the Desktop Step 5: DR Implementation

- 10. Step 0 Environment Assessment

- 11. This Part of My Presentation Used to be Super Tech Heavy, Until… One day it finally dawned on me: virtualization isn’t all about the infrastructure. Virtualization is the infrastructure. “Virtualization” today is equivalent to “a server” or “a couple of servers” just a few years ago. You think of your virtual environment the way you thought of your servers just a few years ago. Thus, architecting your virtual environment requires a look at its business drivers, i.e., how it enables your business.

- 12. Questions to Ask Yourself What are your reasons for virtualizing? How many physical servers will you virtualize? What are your expectations for VM consolidation ratios? 5:1, 10:1, 15:1, greater?

- 13. Class Discussion At what consolidation ratio does virtualization start paying for itself? 5:1? 10:1? 20:1? More?

- 14. The Classic Cost Savings: reduced purchasing rate for new servers; reduced electricity consumption, both for servers and for cooling; reduced hardware maintenance and management costs; termination of hardware leases; reduced cost of downtime; reduction in count of OS licenses; reduction in space/power/cooling costs.

- 15. Potentially Unexpected Costs: a geometrically increasing rate of new server creation (VM sprawl); new license costs; new hardware costs; scaling the environment. Complexity: internal IT process complexity; monitoring complexity; problem resolution complexity; why-does-processor-overuse-cause-a-network-issue complexity?

- 16. Class Discussion How has virtualization changed your IT processes?

- 17. Quantifiable Success Measurements. Maximize hardware utilization: recognize an X:1 consolidation ratio atop virtual hosts; recognize an X% resource utilization atop virtual hosts. Reduce server sprawl: reduce servers under management by X%; reduce new server purchase rate by X%. Consolidate administrative touch points: reduce administration time per server by X%; reduce number of administrators by X%. Minimize downtime: reduce workload downtime to X%.
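
The consolidation-ratio and percentage-reduction targets above are simple arithmetic. As a minimal sketch, the snippet below computes two of them from before/after inventory counts; the function names and the numbers (120 physical servers, 100 of them virtualized onto 10 hosts) are hypothetical illustrations, not measurements from any real environment.

```python
def consolidation_ratio(vms: int, hosts: int) -> float:
    """Return X in an X:1 ratio: virtual machines per virtual host."""
    return vms / hosts


def percent_reduction(before: float, after: float) -> float:
    """Return the percentage drop from a baseline value."""
    return 100.0 * (before - after) / before


# Hypothetical environment: 120 physical servers before virtualization.
# Afterwards, 100 of them run as VMs on 10 hosts and 20 stay physical.
servers_before = 120
vms, hosts, still_physical = 100, 10, 20

ratio = consolidation_ratio(vms, hosts)
# Physical boxes still under management = unvirtualized servers + the hosts.
servers_after = still_physical + hosts
reduction = percent_reduction(servers_before, servers_after)

print(f"Consolidation ratio: {ratio:.0f}:1")                      # Consolidation ratio: 10:1
print(f"Servers under management reduced by {reduction:.0f}%")   # ... reduced by 75%
```

Note that the hosts themselves count as servers under management, which is why the reduction here is 75% rather than the 83% you would get by counting only the 20 remaining physical workloads.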

- 18. Step 1 Constructing Virtualization

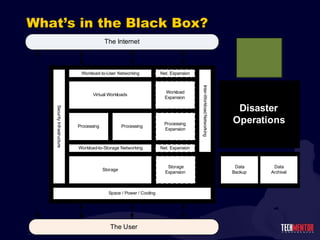

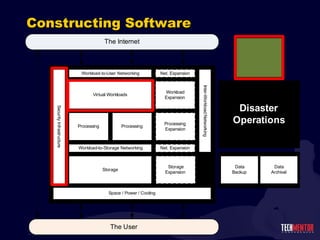

- 19. Virtualization and IT Services Users connect into an IT Services Delivery Infrastructure: the Private Cloud. They also connect to the Internet for IT services: the Cloud and its Cloud Services. FAR MORE INTERESTING: you’re responsible for what’s in the “black box”.

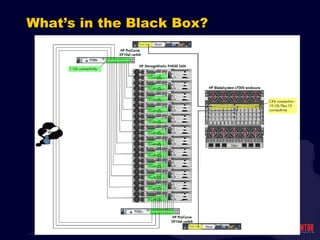

- 21. What’s in the Black Box?

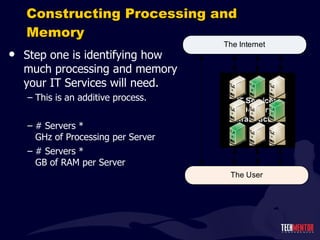

- 22. Constructing Processing and Memory Step one is identifying how much processing and memory your IT services will need. This is an additive process: total processing = # servers * GHz of processing per server; total memory = # servers * GB of RAM per server.

- 23. Constructing Processing and Memory Step 1½ is identifying growth capacity, burst capacity, and cluster reserve capacity. VMware recommendations suggest 75% of capacity as the maximum utilization during steady state.
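
Steps 1 and 1½ can be combined into one calculation: sum the demand across servers, inflate it for growth, divide by the 75% steady-state ceiling, then round up to whole hosts and add spare hosts for cluster reserve. This is a minimal sketch; the workload profiles, the 25% growth factor, the 20 GHz per-host figure, and the one-spare-host reserve are all hypothetical assumptions, and a single growth multiplier is a simplification of separate growth and burst allowances.

```python
import math
from dataclasses import dataclass

STEADY_STATE_CEILING = 0.75  # 75% maximum steady-state utilization, per the slide


@dataclass
class WorkloadProfile:
    """A group of servers sharing the same (hypothetical) resource profile."""
    name: str
    count: int
    ghz_per_server: float
    ram_gb_per_server: float


def total_demand(profiles):
    """Additive step: sum processing and memory across all servers."""
    ghz = sum(p.count * p.ghz_per_server for p in profiles)
    ram = sum(p.count * p.ram_gb_per_server for p in profiles)
    return ghz, ram


def required_capacity(demand, growth=0.0, ceiling=STEADY_STATE_CEILING):
    """Capacity needed so that demand plus growth stays at or under the ceiling."""
    return demand * (1.0 + growth) / ceiling


def hosts_needed(required, per_host, reserve_hosts=1):
    """Round up to whole hosts, then add spare hosts as cluster reserve."""
    return math.ceil(required / per_host) + reserve_hosts


profiles = [
    WorkloadProfile("web", count=20, ghz_per_server=1.0, ram_gb_per_server=2),
    WorkloadProfile("sql", count=4, ghz_per_server=4.0, ram_gb_per_server=16),
]
ghz, ram = total_demand(profiles)                  # 36.0 GHz, 104 GB
cpu_needed = required_capacity(ghz, growth=0.25)   # 60.0 GHz
print(ghz, ram, cpu_needed, hosts_needed(cpu_needed, per_host=20.0))  # 36.0 104 60.0 4
```

The same `required_capacity` call applies to the RAM total; size the cluster on whichever resource demands more hosts.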

- 24. Constructing Processing and Memory Step 2 is converting those numbers into specifications for servers or blades. Example: HP ProLiant BL460c G6 blade with 2x Intel Xeon 2.53GHz processors, up to 192GB (!) RAM, a Brocade 8Gb FC HBA, dual-port 10Gig-E, and 2x 146GB RAID 0/1 drives. (Just as an aside, isn’t it crazy that you can now buy a server with more RAM than disk???)

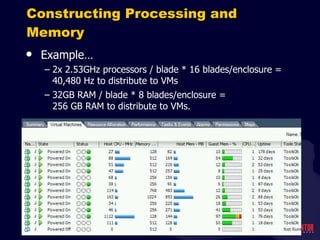

- 25. Constructing Processing and Memory Example: 2x 2.53GHz processors/blade * 8 blades/enclosure = 40,480 MHz to distribute to VMs; 32GB RAM/blade * 8 blades/enclosure = 256 GB RAM to distribute to VMs.
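
The enclosure arithmetic above can be checked with a few lines of code. This sketch mirrors the slide's method (clock speed times processors times blades) and, like the slide, does not count cores per processor; working in integer MHz avoids floating-point rounding. The function name is hypothetical.

```python
from typing import Tuple


def enclosure_supply(sockets_per_blade: int, mhz_per_socket: int,
                     ram_gb_per_blade: int, blades: int) -> Tuple[int, int]:
    """Return (MHz, GB RAM) that an enclosure contributes to the VM pool.

    Follows the slide's method: clock speed x processors x blades.
    Cores per processor are deliberately not counted.
    """
    mhz = sockets_per_blade * mhz_per_socket * blades
    ram_gb = ram_gb_per_blade * blades
    return mhz, ram_gb


mhz, ram_gb = enclosure_supply(sockets_per_blade=2, mhz_per_socket=2530,
                               ram_gb_per_blade=32, blades=8)
print(f"{mhz:,} MHz and {ram_gb} GB RAM")  # 40,480 MHz and 256 GB RAM
```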

- 26. Class Discussion These last few slides have shown us how to measure capacity. But how can we really measure demand?

- 27. Constructing Expandability Your environment will almost always grow: more IT services, more virtual machines, more business activities. Virtualization quantifies decision points for expansion. We’re nearing 75%? Expand! We can’t maintain cluster reserve? Expand! Later sessions discuss gotchas you’ll encounter as you expand (processing, network, storage, etc.).
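
The two expansion triggers on this slide can be expressed as a small check. This is a minimal sketch under stated assumptions: "cluster reserve" is modeled as surviving the loss of the single largest host (N+1), only processing is checked, and the function name and cluster sizes are hypothetical.

```python
def expansion_reasons(used_mhz, host_capacities_mhz, ceiling=0.75):
    """Return the reasons (if any) that the cluster should be expanded."""
    reasons = []
    total = sum(host_capacities_mhz)
    if used_mhz / total > ceiling:
        reasons.append(f"utilization above {ceiling:.0%} ceiling")
    # Cluster reserve (assumed N+1): if the largest host fails,
    # can the remaining hosts still carry the current load?
    if used_mhz > total - max(host_capacities_mhz):
        reasons.append("cannot maintain cluster reserve")
    return reasons


cluster = [40480] * 4                            # four hypothetical hosts
print(expansion_reasons(110_000, cluster))       # []
print(expansion_reasons(125_000, cluster))       # both reasons
print(expansion_reasons(120, [100, 100]))        # ['cannot maintain cluster reserve']
```

The last call shows why the reserve check matters on its own: a two-host cluster at 60% utilization is under the ceiling, yet it cannot survive the loss of either host.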

- 29. Constructing Networking Six types of virtual networking: workload-to-user networking; workload-to-storage networking; inter-workload networking; virtual environment-to-backups networking; backups-to-archival networking; virtual environment-to-DR networking. Combinations of 1Gig-E and 10Gig-E are now becoming the norm: more throughput for higher demands, and cost right-sized to needs.

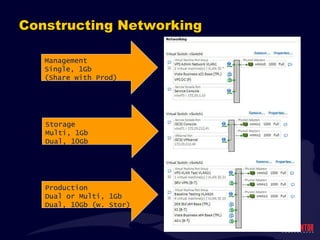

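- 30. Constructing Networking

| Traffic | 1Gig-E design | 10Gig-E design |
|---|---|---|
| Storage | Multiple 1Gb NICs | Dual 10Gb NICs |
| Production | Dual or multiple 1Gb NICs | Dual 10Gb NICs (shared with storage) |
| Management | Single 1Gb NIC | Shared with production |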

- 31. Networking Gotchas ESX networking is per-host: ensure that every host is configured correctly, because changing the configuration on one will not affect another. ESX network monitoring is per-host: network conditions on one host do not impact others (except when VMs are interrelated, although this is outside the ESX layer). Balance network segregation with consolidation: segregate traffic by type, and consolidate traffic within a type. VLANs are your friend. CRITICAL: Networking is dynamic.
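
Because ESX networking is configured per host, a periodic comparison against a reference definition catches hosts that have drifted. This is a minimal sketch: the inventory is a hard-coded, hypothetical mapping of host name to {port group name: VLAN ID}; in practice you would export it from vCenter with whatever tooling you already use.

```python
def find_drift(reference, hosts):
    """Compare each host's port groups (name -> VLAN ID) to a reference set."""
    drift = {}
    for host, portgroups in sorted(hosts.items()):
        # Port groups that are absent, or present with the wrong VLAN ID.
        wrong = {name: vlan for name, vlan in reference.items()
                 if portgroups.get(name) != vlan}
        # Port groups that exist on this host but not in the reference.
        unexpected = sorted(set(portgroups) - set(reference))
        if wrong or unexpected:
            drift[host] = {"missing_or_wrong": wrong, "unexpected": unexpected}
    return drift


reference = {"Production": 10, "vMotion": 20, "Management": 30}
hosts = {
    "esx01": {"Production": 10, "vMotion": 20, "Management": 30},
    "esx02": {"Production": 10, "Management": 30},        # vMotion never created
    "esx03": {"Production": 11, "vMotion": 20, "Management": 30, "Test": 99},
}
for host, issues in find_drift(reference, hosts).items():
    print(host, issues)
```

Comparing against an explicit reference, rather than against the other hosts, keeps one misconfigured host from making every correctly configured host look wrong.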

- 32. Management Connection(s) An ESXi server requires at minimum a single management connection. It is used for connecting ESXi to vSphere and for SSH connections for remote support. It can also be used for vMotion (though generally as a last resort).

- 34. Class Discussion Why is it a good idea to use a separate management connection? It separates vCenter management traffic from production networking traffic. It places lower-trust traffic into a lower-trust network. It prevents regular users from accessing the management functions of vCenter. LIVE DRAW: Separating out management connections.

- 37. vSphere Networking ESX vSwitches… are typically associated with one or more physical NICs, though they don’t necessarily need to be; are Layer 2 devices; and can support NIC teaming, in either failover or load-balancing mode. No outside connection? Why would you want to do this? Why is this fundamentally important?

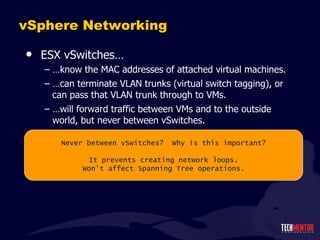

- 39. vSphere Networking ESX vSwitches… know the MAC addresses of attached virtual machines; can terminate VLAN trunks (virtual switch tagging) or pass the VLAN trunk through to VMs; and will forward traffic between VMs and to the outside world, but never between vSwitches. Never between vSwitches? Why is this important? It prevents network loops, so it won’t affect Spanning Tree operations.
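
The forwarding rules above can be illustrated with a deliberately simplified toy model. It is not VMware's implementation: it ignores broadcast and multicast, VLANs, teaming, and security policies, and the class and MAC addresses are hypothetical. What it does show is the loop-prevention property: there is no code path that hands a frame to another vSwitch, and a frame arriving from the physical network is only ever delivered to a local VM.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

UPLINK = "uplink"


@dataclass
class VSwitch:
    """Toy model of a standard vSwitch's unicast forwarding decision."""
    name: str
    # The vSwitch already knows each attached VM's MAC (MAC -> VM name).
    vm_ports: Dict[str, str] = field(default_factory=dict)
    has_uplink: bool = True

    def forward(self, dst_mac: str, from_uplink: bool) -> Optional[str]:
        """Return a VM name, UPLINK, or None (frame dropped)."""
        if dst_mac in self.vm_ports:
            return self.vm_ports[dst_mac]      # local delivery, VM to VM
        if from_uplink:
            return None                        # never sent back out to the physical network
        # Outbound traffic for a non-local MAC leaves via the uplink, if one exists.
        return UPLINK if self.has_uplink else None


sw = VSwitch("vSwitch1", {"00:50:56:00:00:01": "web01"})
print(sw.forward("00:50:56:00:00:01", from_uplink=True))    # web01
print(sw.forward("00:1b:21:aa:bb:cc", from_uplink=False))   # uplink
print(sw.forward("00:1b:21:aa:bb:cc", from_uplink=True))    # None

# A vSwitch with no physical NIC: VMs on it can only talk to each other.
isolated = VSwitch("isolated", {"00:50:56:00:00:02": "lab01"}, has_uplink=False)
print(isolated.forward("00:1b:21:aa:bb:cc", from_uplink=False))  # None
```

The `isolated` instance is one answer to the "No outside connection?" question: an internal-only vSwitch gives lab or test VMs a private segment that cannot leak onto the production network.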

- 40. vSphere Networking Two types of port groups: VMkernel port groups and virtual machine port groups. Five uses: VM production networking, vMotion, iSCSI, NFS, and host management.

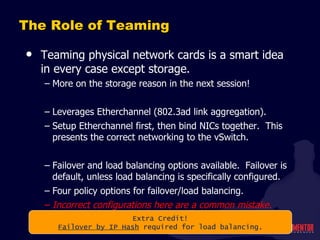

- 42. The Role of Teaming Teaming physical network cards is a smart idea in every case except storage (more on the storage reason in the next session!). It leverages EtherChannel (802.3ad link aggregation): set up EtherChannel on the physical switch first, then bind the NICs together. This presents the correct networking to the vSwitch. Failover and load-balancing options are available; failover is the default unless load balancing is specifically configured. There are four policy options for failover/load balancing, and incorrect configurations here are a common mistake. Extra Credit! The “Route based on IP hash” policy is required for EtherChannel load balancing.
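
To see why IP-hash load balancing needs EtherChannel on the physical side, consider how an uplink gets picked per source/destination pair. This sketch assumes a simplified hash (XOR of the two IPv4 addresses, modulo the number of uplinks); VMware's exact formula may differ, so treat it as an illustration of the idea rather than a reproduction of ESX behavior.

```python
import ipaddress


def ip_hash_uplink(src_ip: str, dst_ip: str, uplink_count: int) -> int:
    """Simplified IP hash: XOR the two IPv4 addresses, then take modulo N."""
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return (src ^ dst) % uplink_count


# One VM (10.0.0.5) talking to two different destinations over a 2-NIC team.
print(ip_hash_uplink("10.0.0.5", "10.0.0.9", 2))   # 0  (5 XOR 9 = 12)
print(ip_hash_uplink("10.0.0.5", "10.0.0.10", 2))  # 1  (5 XOR 10 = 15)
```

Because one VM's traffic can leave on either physical NIC, the same MAC address appears on two switch ports at once. Unless those ports are bundled into one EtherChannel, the physical switch sees the MAC flapping between ports, which is one of the common misconfigurations the slide warns about.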

- 45. Class Discussion What about NIC consolidation? When is it appropriate to use VLANs? PRO: Reduces NIC count requirements. PRO: Lowers hardware costs. PRO: Reduces network complexity at the ESX layer. PRO: Plays perfectly with 10Gig-E. PRO: Trivial to configure. CON: Increases network complexity at the Cisco layer. CON: Greater potential for network saturation; excessive traffic on one VLAN causes problems for all others. CON: Security concerns; some (obscure) VLAN exploits are believed to exist in the wild. CON: Trivial to misconfigure.

- 46. VST vs. EST vs. VGT Three types of VLAN tagging in ESX: Virtual Switch Tagging, External Switch Tagging, and Virtual Guest Tagging.

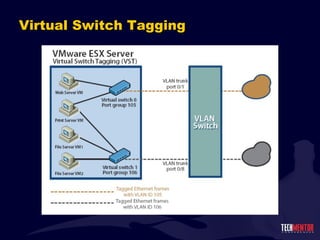

- 47. VST vs. EST vs. VGT Virtual Switch Tagging: the physical switch treats the vSwitch like any other switch, tagging traffic as it moves into the vSwitch. The ESX server uses VLAN tags to direct traffic to the right port group. Physical switch ports are configured as VLAN trunks. Each ESX server must have a port group defined for each VLAN ID.
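
In VST, the vSwitch reads the 802.1Q tag on each frame arriving from the trunk, picks the port group with that VLAN ID, and strips the tag before the VM sees the frame. The sketch below shows that logic on raw bytes. The 802.1Q layout (TPID 0x8100 followed by a 16-bit TCI whose low 12 bits are the VLAN ID) is standard; the function names and the port group table are hypothetical, and the frames omit the FCS.

```python
import struct
from typing import Dict, Optional, Tuple

TPID_8021Q = 0x8100  # EtherType value that marks an 802.1Q tag


def tag_frame(dst: bytes, src: bytes, vlan_id: int, ethertype: int,
              payload: bytes) -> bytes:
    """Build an 802.1Q-tagged Ethernet frame (priority/DEI bits 0, no FCS)."""
    tci = vlan_id & 0x0FFF
    return dst + src + struct.pack("!HHH", TPID_8021Q, tci, ethertype) + payload


def vst_receive(frame: bytes,
                portgroups: Dict[int, str]) -> Tuple[Optional[str], bytes]:
    """Map a tagged frame to a port group and strip the tag.

    Returns (port group name or None, frame). None means the frame was
    untagged or no port group exists for its VLAN on this host, so it
    is not delivered.
    """
    tpid, tci = struct.unpack("!HH", frame[12:16])
    if tpid != TPID_8021Q:
        return None, frame
    vlan_id = tci & 0x0FFF
    untagged = frame[:12] + frame[16:]   # drop the 4-byte tag before delivery
    return portgroups.get(vlan_id), untagged


dst = bytes.fromhex("005056000001")
src = bytes.fromhex("001b21aabbcc")
frame = tag_frame(dst, src, 20, 0x0800, b"payload")
portgroups = {10: "Production", 20: "vMotion"}
pg, delivered = vst_receive(frame, portgroups)
print(pg, len(frame) - len(delivered))  # vMotion 4
```

A frame tagged for VLAN 30 would return `None` here, which is the practical consequence of the last bullet: a host with no port group for a VLAN simply cannot deliver that VLAN's traffic.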

- 49. VST vs. EST vs. VGT External Switch Tagging: the physical switch passes untagged traffic for a single VLAN through each port. In Cisco terms, this means physical switch ports are configured as access ports assigned to a particular VLAN; most physical switch ports are configured this way. In this mode, ESX has a different vSwitch for each VLAN, and each vSwitch has its own individual uplink to a physical NIC. Port groups can be used to control traffic shaping and security policies, but they don’t affect VLAN operations.

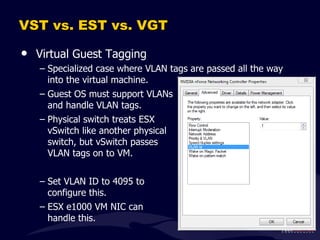

- 51. VST vs. EST vs. VGT Virtual Guest Tagging: a specialized case where VLAN tags are passed all the way into the virtual machine. The guest OS must support VLANs and handle VLAN tags. The physical switch treats the ESX vSwitch like another physical switch, but the vSwitch passes VLAN tags on to the VM. Set the port group’s VLAN ID to 4095 to configure this. The ESX e1000 virtual NIC can handle this.
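
Which of the three modes a port group uses comes down to its VLAN ID setting. This sketch assumes the standard vSwitch convention: 0 (no VLAN ID) for EST, 1 through 4094 for VST, and 4095 for VGT. The function name is hypothetical.

```python
def tagging_mode(portgroup_vlan_id: int) -> str:
    """Classify an ESX port group by its VLAN ID setting."""
    if portgroup_vlan_id == 0:
        return "EST"   # no VLAN ID: the physical switch's access port handles VLANs
    if 1 <= portgroup_vlan_id <= 4094:
        return "VST"   # the vSwitch adds and strips tags for this one VLAN
    if portgroup_vlan_id == 4095:
        return "VGT"   # tags are passed through to the guest OS
    raise ValueError(f"invalid VLAN ID setting: {portgroup_vlan_id}")


for vid in (0, 105, 4095):
    print(vid, tagging_mode(vid))
# 0 EST
# 105 VST
# 4095 VGT
```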

- 52. Why? Where? When? VST: if you plan to consolidate NICs via VLAN aggregation (this is growing more common). EST: if your network core/distribution layer cannot handle multiple, simultaneous VLANs (this is growing less common). VGT: if a VM needs to be on multiple VLANs simultaneously but you don’t want multiple NICs (this is rare). VGT and VST can be used simultaneously.

- 54. Class Discussion What’s the best SAN for virtualization?

- 55. Constructing Storage The selected SAN medium does not appear to be based on virtual platform construction. Result: you’re probably stuck with what you’ve got. Source: http://www.emc.com/collateral/analyst-reports/2009-forrester-storage-choices-virtual-server.pdf

- 56. Five Capabilities to Look for in a SAN 1. Disk redundancy, a.k.a. RAID. 2. Processing redundancy. 3. Networking redundancy. 4. Cross-node disk redundancy.

- 57. Five Capabilities to Look for in a SAN 5. Site-to-Site Replication

- 58. Nice Features Storage-level thin provisioning: this is different from (and augments) ESX-level thin provisioning. Storage-level snapshots: can be useful for data backup and replication. Storage-level volume replication & cloning: ensure that storage-level management activities are completed on storage processors, which eliminates impact on ESX processors. Trivial scalability: you want to “snap-and-go” additional storage as needed. Yesteryear’s big-iron storage is waning in popularity everywhere except where organizations are already invested.

- 59. But Regardless, a SAN’s a SAN. ESX treats most SAN connections pretty much the same: SCSI, block SCSI, iSCSI.

- 60. SAN Connections, However, Are a Completely Different Story Your goal: 100% SAN uptime. That means redundancy in the SAN itself, redundant connections to storage, redundant paths to storage, and verification that paths exist on all ESX servers. LIVE DRAW: Sketching out a SAN design. Who would like to offer theirs up as an example?
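
"Verification that paths exist on all ESX servers" is easy to automate once you have a path inventory. This is a minimal sketch: the inventory is a hypothetical mapping of host to {LUN ID: active path count}, and the two-path minimum is an assumed target for redundancy, not a vendor requirement.

```python
def path_problems(paths, min_paths=2):
    """paths: host -> {LUN ID: active path count}. Flag hosts below target."""
    all_luns = set()
    for luns in paths.values():
        all_luns |= set(luns)
    problems = {}
    for host in sorted(paths):
        issues = []
        for lun in sorted(all_luns):
            count = paths[host].get(lun, 0)
            if count == 0:
                issues.append(f"{lun}: not visible")
            elif count < min_paths:
                issues.append(f"{lun}: only {count} path(s)")
        if issues:
            problems[host] = issues
    return problems


inventory = {
    "esx01": {"LUN10": 2, "LUN11": 2},
    "esx02": {"LUN10": 1, "LUN11": 2},   # one HBA or fabric path is down
    "esx03": {"LUN10": 2},               # LUN11 never masked/zoned to this host
}
print(path_problems(inventory))
```

A LUN that is visible on some hosts but not others is as dangerous as a single path: VMs on that datastore cannot migrate to, or be restarted on, the host that cannot see it.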

- 61. Four Steps in Storage Provisioning 1. The LUN is provisioned at the SAN. 2. The LUN is masked/unmasked/routed to ESX hosts. 3. The VMFS (or NFS) datastore is provisioned across ESX hosts. 4. Virtual disks are provisioned for virtual machines.

- 63. Class Discussion How do you resolve these common bottlenecks? Network contention; type and rotation speed of drives; connection redundancy and aggregation; spindle contention; connection medium; administrative complexity.

- 64. Thick versus Thin vSphere 4.0 (finally) brought thin provisioning to ESX servers: a virtual disk uses only as much storage as it needs. Thin provisioning adds a tiny I/O penalty, but only during the first write to an unwritten file block, which essentially happens only when your server needs more disk. Some applications are not supported on thin-provisioned disks; Microsoft Exchange is one. Thin provisioning eliminates wasted storage, but requires monitoring to use: if your volume runs out of space, your VMs will fail. VMware suggests that today thick and thin provisioning have almost equal performance.
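
The monitoring that thin provisioning requires boils down to two numbers per datastore: how far it is overcommitted (provisioned versus capacity) and how full it actually is (used versus capacity). This sketch computes both for one datastore; the disk sizes and the 80% warning threshold are hypothetical, and it ignores snapshots, swap files, and other non-VMDK files that also consume space.

```python
def thin_datastore_report(capacity_gb, disks, warn_at=0.80):
    """disks: list of (provisioned_gb, used_gb) for thin VMDKs on one datastore."""
    provisioned = sum(p for p, _ in disks)
    used = sum(u for _, u in disks)
    return {
        "overcommit_ratio": provisioned / capacity_gb,  # > 1.0 means promised > physical
        "used_fraction": used / capacity_gb,
        "free_gb": capacity_gb - used,
        "warn": used / capacity_gb >= warn_at,          # time to add space or move VMs
    }


report = thin_datastore_report(1000, [(400, 150), (400, 300), (600, 380)])
print(report)
```

Here 1,400 GB has been promised on a 1,000 GB datastore. That is fine while only 830 GB is written, but the warning flag is already set: the remaining 170 GB is all that stands between these VMs and the failure described above.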

- 65. Disk Defragmentation Windows’ native disk defragger is disabled by default on all Windows Servers. This, in and of itself, will create a performance bottleneck if it is not enabled on those servers. Yet enabling in-VM disk defragmentation will significantly reduce overall performance, the same as in-VM anti-virus, in-VM backup, and other in-VM scanning and heavy-I/O services. For VDI environments, the defragger is enabled by default on Windows desktops. Consider virtualization-aware solutions for VMDK defragmentation.

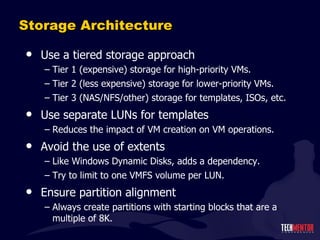

- 66. Storage Architecture Use a tiered storage approach: Tier 1 (expensive) storage for high-priority VMs; Tier 2 (less expensive) storage for lower-priority VMs; Tier 3 (NAS/NFS/other) storage for templates, ISOs, etc. Use separate LUNs for templates; this reduces the impact of VM creation on VM operations. Avoid the use of extents: like Windows dynamic disks, they add a dependency. Try to limit to one VMFS volume per LUN. Ensure partition alignment: always create partitions with starting offsets that are a multiple of 8K.
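
Partition alignment is a divisibility check on the partition's starting byte offset. This sketch assumes 512-byte sectors and uses the slide's 8K boundary as the default; many arrays recommend a larger boundary such as 64 KB, which you can pass in instead. The 63-sector start shown is the classic misaligned default of older Windows versions such as Windows Server 2003, and 2,048 sectors (1 MB) is the Windows Server 2008 default.

```python
SECTOR_BYTES = 512  # assumes 512-byte sectors


def is_aligned(start_sector: int, boundary_bytes: int = 8 * 1024) -> bool:
    """True if the partition's starting byte offset is a multiple of boundary_bytes."""
    return (start_sector * SECTOR_BYTES) % boundary_bytes == 0


for start in (63, 128, 2048):
    print(start, start * SECTOR_BYTES, is_aligned(start))
# 63 32256 False
# 128 65536 True
# 2048 1048576 True
```

A misaligned partition makes some guest I/Os straddle two underlying storage blocks, so the array does extra work for the same request. Because the penalty is repeated across every VM cloned from a misaligned template, it is worth checking templates first.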

- 67. Class Discussion What are the big storage gotchas for virtual environments?

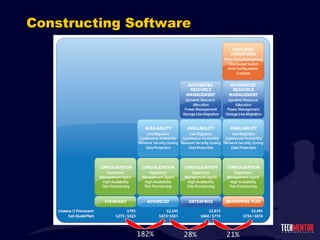

- 69. Constructing Software

- 70. Class Discussion … and yet the hypervisor itself is only the start! What other classes of software are needed in order to fully manage a virtual environment?

- 71. Step 2 Backups Expansion

- 73. Virtualization’s Backups Problem A TechTarget reader survey in September 2009 asked the question: In a VMware ESX environment, which system management functions do you find most challenging? 30%: backing up virtual machine data; 25%: server availability monitoring and metrics; 28%: storage management; 21%: change and configuration management; 36%: I/O bottlenecks; 21%: network capacity and utilization monitoring; 26%: capacity planning and monitoring.

- 74. Virtual Backups and “Perspective” Virtualization introduces the concept of “perspective” with backups: there is now more than one location from which backups can be sourced, and different locations enable the gathering of different kinds of data. Some perspectives don’t work well for some data. Some perspectives gather data that isn’t easily restorable. Some perspectives introduce performance problems. Some perspectives don’t scale well. Some perspectives can/will corrupt data as it is backed up.

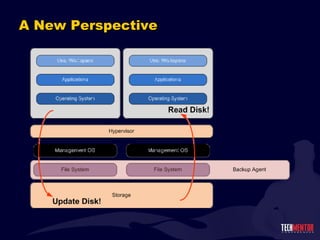

- 75. Backup’s Many Perspectives

- 81. Agent-in-the-VM Pros: no change in practice from all-physical environments; essentially all existing technology will continue to function; easy ability to restore individual files and folders or application objects. Cons: problems with scalability; problems with performance; no change in ability to restore the whole machine.

- 82. Agent-on-the-Host Pros: capable of point-in-time, entire-machine backup as a single file; less impact on performance; greater scalability. Cons: extra steps required to restore individual files or application objects; agent support required to successfully back up application objects; backed-up files are exceptionally large.

- 83. Agent-in-the-Storage Pros: complete offloading of backup processing to the storage device; a single location for all backups, with fewer management touch points. Cons: extra steps required to restore individual files or application objects; agent support required to successfully back up application objects; comparatively limited agent and software support; backed-up files are exceptionally large.

- 86. Agent-in-the-File System Pros: every transaction can be captured, whether from an application, a file or folder, or an application object; a single location for all backups of all types; Continuous Data Protection for all data types; item-level compression and de-duplication of data; restoring an individual email or file is no different from restoring an entire VM. Cons: this is an entirely new mechanism for doing backups; it relies first on disk-based storage, and second on tape-based storage.

- 87. The “Fourth Perspective” This “fourth perspective” in fact eliminates perspective entirely by unifying all backups under a single solution. It works well for all types of data, from files and folders, to application objects, to entire virtual machines. It gathers data in a way that makes it easily and quickly restorable to virtually any point in the past, eliminating the “daily” in daily backups. It backs up with very little impact on host or VM performance. It scales with the number of VMs per host, and to many hosts. It ensures that application objects remain uncorrupted through its position within the file system driver.

- 88. Class Discussion Which perspective(s) do you use? Why? (Hint: The correct answer doesn’t need to be a single perspective!)

- 89. Step 3 Virtualization to Private Cloud

- 91. What Makes a Private Cloud? WARNING: A bit of marketecture ahead. But that’s OK… A Private Cloud enables… availability for individual IT services; flexibility in managing services, as well as deploying new services; scalability when physical resources run out; hardware resource optimization, to ensure that you’re getting the most out of your investment; resiliency to protect against large-scale incidents; and globalization capacity, enabling the IT infrastructure to be distributed wherever it is needed.

- 93. Thanks, but No, Really... What Really Makes a Private Cloud? A Private Cloud at its core is little more than… a virtualization technology… some really good management tools… and their integration with business processes. “While VMs are the mechanism in which IT services are provided, the Private Cloud infrastructure is the platform that enables those VMs to be created and managed based on business drivers.” Source: My new (and free!) book, Private Clouds: Selecting the Right Hardware for a Scalable Virtual Infrastructure, which you can download from http://www.realtimepublishers.com

- 94. A Private Cloud is Essentially a Resource Pool

- 96. A Private Cloud is a Further Abstraction from Simple Virtualization Rather than focusing on virtual machines and virtualizing, a Private Cloud focuses on the resources.

- 98. Private Cloud: The User’s Perspective A Private Cloud is perhaps easiest explained from the user’s perspective. Users connect into a local IT Services Delivery Infrastructure: the Private Cloud. They also connect to the Internet for IT services: the Cloud and its Cloud Services.

- 100. Why is this Fundamentally Important? Because, at the end of the day, your users shouldn’t have to care how their IT services are delivered. They can be delivered locally or remotely. As long as those services are delivered securely and in an always-on fashion, users are enabled to accomplish the tasks and activities of business. It’s our job to manage what’s in the black box: availability, flexibility, scalability, resource optimization, resource quantification, and globalization & failover… and, arguably, what’s in “The Cloud” as well. But that’s a topic for another day.

- 101. Private Cloud: Availability Live Migration means VMs can run anywhere, so IT can no longer think of service availability by individual server. Users need not worry where services are hosted, only that they’re available. The Private Cloud is constructed with the necessary resources to maintain service availability.

- 102. Private Cloud: Flexibility “Just a few virtual hosts” quickly becomes a Private Cloud as the scale of its hardware increases. A Private Cloud is a collection of resources that can be reconfigured at any time, and it is always prepared to incorporate new services immediately. IT’s former technical hurdles are no longer a drag on business agility.

- 103. Private Cloud: Scalability A Private Cloud and its hardware are seamlessly scalable. New hardware should trivially “snap” into the environment: no operations impact, no extra engineering, no delay. Resources are there before you need them. More hardware equals more resources.

- 104. Private Cloud: Resource Optimization A Private Cloud uses its available resources at a maximum level. Hardware utilization is balanced to protect against overuse. Policies ensure resource availability for VM needs. Resource requirements and capacity are plannable.

- 105. Resource Optimization A Private Cloud’s Resource Pools are infinitely malleable. “Project X contributed 30% in $$s to buying the hardware, so we’re going to ensure Project X always has 30%.” “\\ServerA needs more processing power. Let’s supply that power.” “Business Unit Y is about to expand and they anticipate that they’ll need another 20 VMs; we’ll need to expand our environment to suit.” Resource Pools bring rationality to IT’s traditional “guess and check” mentality. Your gut probably doesn’t like this concept. But this is a good thing. Your boss loves it.
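
The Project X example maps onto proportional allocation. VMware resource pools express this with shares (relative entitlement when resources are contended) and reservations (guaranteed minimums); this sketch models only the shares split under full contention. The pool names and share values are hypothetical, and the 40,480 MHz capacity reuses the enclosure figure from earlier in the deck.

```python
def allocate_by_shares(capacity_mhz, shares):
    """Split contended capacity in proportion to each pool's shares."""
    total = sum(shares.values())
    return {pool: capacity_mhz * s / total for pool, s in shares.items()}


print(allocate_by_shares(40480, {"Project X": 30, "Everyone else": 70}))
# {'Project X': 12144.0, 'Everyone else': 28336.0}
```

Shares only matter when pools compete: if "Everyone else" is idle, Project X can use more than its 30%. To guarantee a floor even when other pools are busy, a reservation is the better tool.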

- 106. Private Cloud: Resource Quantification Resources become quantifiable units within the virtual platform. Blade Enclosure 1 supplies 40,480 MHz of processing, 256 GB of RAM. Virtual Machine \\server1 needs 2,048 MHz of processing, 4 GB of RAM Resource assignment evolves from “gut feeling” to numerical supply and demand values.

- 108. Resource Quantification Each hardware component in a Private Cloud contributes a finite level of capacity to the Resource Pool: servers contribute processing and memory, storage contributes disk space, and networking contributes throughput. Virtual machines assert the quantity of resources they need at every point in time, and the Private Cloud supplies these resources. You supply the Private Cloud with hardware; it tells you when you need more. You add more, or you restrict VMs (with notable results).

- 109. Resource Quantification Exceptionally Important: It is the job of the Private Cloud to abstract each of these contributions and assertions into a numerical value. Numerical values represent supply and demand for resources. Hardware adds to resource supply. Virtual machines exert resource demand. “You need a VM? How big?” Quantitatively meeting supply to demand is what Private Cloud computing is all about. This is simple addition and subtraction. This should not be an arcane art.
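
"Simple addition and subtraction" can be taken literally. Below is a minimal supply-and-demand ledger for processing and memory, using the enclosure (40,480 MHz, 256 GB) and the VM size (2,048 MHz, 4 GB) from slide 106. The class is a hypothetical sketch: it ignores the 75% ceiling and cluster reserve, which in practice you would apply by reducing the supply you register.

```python
class ResourcePool:
    """Supply-and-demand ledger for processing (MHz) and memory (GB)."""

    def __init__(self):
        self.supply = {"mhz": 0, "ram_gb": 0}
        self.demand = {"mhz": 0, "ram_gb": 0}

    def add_hardware(self, mhz, ram_gb):
        """Hardware adds to resource supply."""
        self.supply["mhz"] += mhz
        self.supply["ram_gb"] += ram_gb

    def free(self):
        return {k: self.supply[k] - self.demand[k] for k in self.supply}

    def request_vm(self, mhz, ram_gb):
        """'You need a VM? How big?' Admit it only if supply covers the demand."""
        free = self.free()
        if mhz > free["mhz"] or ram_gb > free["ram_gb"]:
            return False            # time to add hardware (or restrict VMs)
        self.demand["mhz"] += mhz
        self.demand["ram_gb"] += ram_gb
        return True


pool = ResourcePool()
pool.add_hardware(mhz=40480, ram_gb=256)       # Blade Enclosure 1
admitted = 0
while pool.request_vm(mhz=2048, ram_gb=4):     # VMs sized like \\server1
    admitted += 1
print(admitted, pool.free())   # 19 {'mhz': 1568, 'ram_gb': 180}
```

The result also shows which resource runs out first. Processing is exhausted after 19 VMs while 180 GB of RAM sits unused, so the next hardware purchase should favor processing over memory.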

- 110. Doing this Successfully Requires The Right Hardware

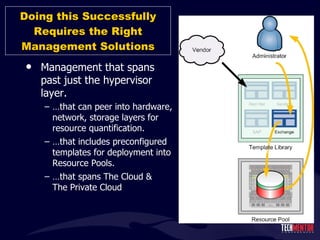

- 111. Doing this Successfully Requires the Right Management Solutions: management that extends beyond just the hypervisor layer… that can peer into the hardware, network, and storage layers for resource quantification… that includes preconfigured templates for deployment into Resource Pools… and that spans The Cloud & The Private Cloud.

- 112. Doing this Successfully Requires the Right Division of Labor IT Architects and External Service Providers define and construct service templates. IT Administrators manage resources. Service Consumers request and deploy templates from the Service Catalog.

- 114. How Do You Get There? Remember: A Private Cloud at its core is little more than… a virtualization technology… some really good management tools… and their integration with business processes. You’ll need those three things. You’ll also need a set of hardware that is designed with virtualization and Private Cloud computing in mind. “You don’t want to be ‘white boxing’ your virtual environment, do you? That was a bad idea the last time!”

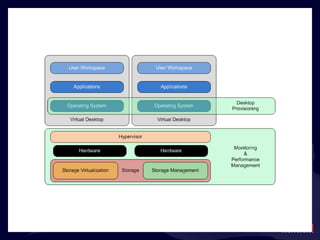

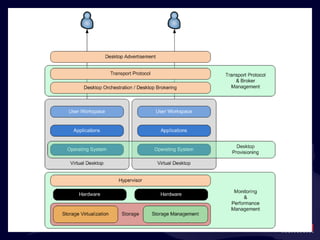

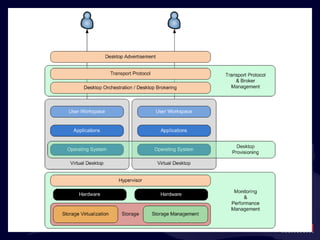

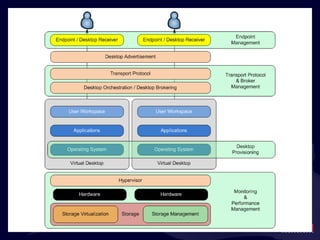

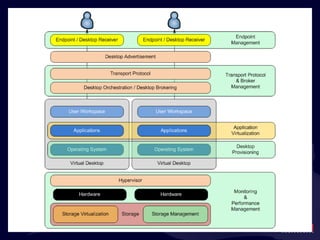

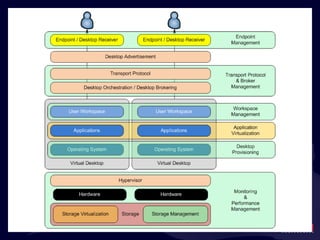

- 115. Step 4 Virtualization at the Desktop

- 119. Products in this Space: Hypervisors VMware vSphere, Citrix XenServer, Microsoft Hyper-V, Sun VirtualBox, Parallels Bare Metal & Containers

- 123. Products in this Space: Storage HP & HP/LeftHand, Dell & Dell EqualLogic, IBM, EMC, NetApp, StarWind Software, et al.

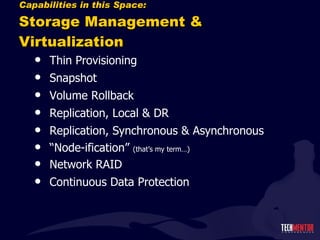

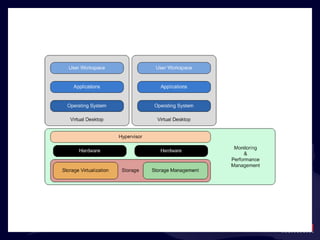

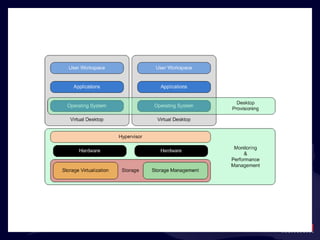

- 124. Capabilities in this Space: Storage Management & Virtualization Thin provisioning; snapshot; volume rollback; replication, local & DR; replication, synchronous & asynchronous; “node-ification” (that’s my term…); network RAID; Continuous Data Protection.

- 127. Products in this Space: Monitoring & Performance Mgmt. First-party solutions, vKernel, eG Innovations, Veeam, Vizioncore, Akorri, CiRBA (this space is swiftly growing).

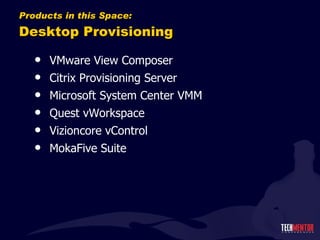

- 130. Products in this Space: Desktop Provisioning VMware View Composer, Citrix Provisioning Server, Microsoft System Center VMM, Quest vWorkspace, Vizioncore vControl, MokaFive Suite

- 133. Products in this Space: Transport Protocols Microsoft RDP; Citrix ICA & HDX; VMware PCoIP, Extended RDP; Quest Experience Optimized Protocol (EOP)

- 136. Products in this Space: Desktop Brokers & Advertisement Microsoft RD Session Broker, RD Web Access; Citrix XenDesktop; VMware View Connection Server; Quest vWorkspace AppPortal; Ericom WebConnect; MokaFive

- 139. Products in this Space: Endpoints / Desktop Receivers Traditional PCs (via client access), Pano Logic, Wyse, NComputing

- 142. Products in this Space: Application Virtualization Microsoft App-V, Citrix XenApp, VMware Thinstall, Symantec Workspace Virtualization

- 145. Products in this Space: Workspace Management Microsoft Roaming Profiles, RES Software PowerFuse, AppSense Management Suite, Tranxition LiveManage, RingCube vDesk

- 147. Step 5 DR Implementation

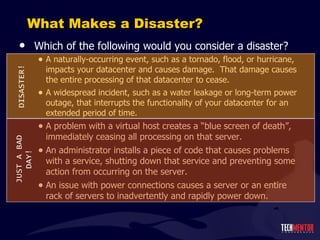

- 150. What Makes a Disaster? Which of the following would you consider a disaster? A naturally occurring event, such as a tornado, flood, or hurricane, damages your datacenter, and that damage halts all processing in the datacenter. A widespread incident, such as a water leak or long-term power outage, interrupts the functionality of your datacenter for an extended period of time. A problem with a virtual host causes a “blue screen of death,” immediately halting all processing on that server. An administrator installs a piece of code that causes problems with a service, shutting down that service and preventing some action from occurring on the server. An issue with power connections causes a server or an entire rack of servers to power down suddenly and unintentionally. The first two: DISASTER! The last three: JUST A BAD DAY!

- 151. What Makes a Disaster? Your business's decision to “declare a disaster” and move to “disaster operations” is a major one. The technologies used for disaster protection are different from those used for HA: they are more complex and more expensive, and their failover and failback processes involve more thought.

- 152. Class Discussion: Are you here yet? Are you planning a DR implementation? Do you already have one? Do you care?

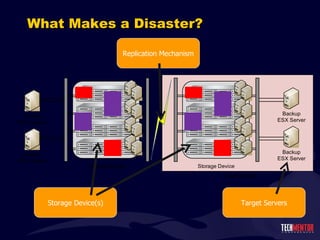

- 153. What Makes a Disaster? At a very high level, disaster recovery for virtual environments comes down to three things: a storage mechanism; a replication mechanism; and a set of target servers to receive virtual machines and their data.

- 154. What Makes a Disaster? (Diagram: storage device(s), replication mechanism, target servers)

- 155. Thing 1: A Storage Mechanism. Typically, two SANs in two different locations (Fibre Channel, iSCSI, or FCoE), usually of a similar model or from the same manufacturer. This is often necessary (although not required) for the replication mechanism to function properly. The backup SAN doesn't necessarily need to be the same size as the primary SAN: replicated data isn't always the full set of data, because you may not need disaster recovery for everything.
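The sizing point above can be sketched as a quick calculation. This is a minimal sketch, assuming a hypothetical VM inventory with a per-VM `needs_dr` flag and an assumed 25% growth headroom; real sizing should follow your SAN vendor's own guidance.

```python
from dataclasses import dataclass


@dataclass
class VirtualMachine:
    name: str
    provisioned_gb: int
    needs_dr: bool  # hypothetical flag: is this VM in the DR scope?


def backup_san_capacity_gb(vms: list[VirtualMachine], headroom: float = 0.25) -> float:
    """Capacity for the backup SAN: only DR-scoped VMs, plus an assumed growth headroom."""
    protected_gb = sum(vm.provisioned_gb for vm in vms if vm.needs_dr)
    return protected_gb * (1 + headroom)


# Hypothetical inventory for illustration only.
inventory = [
    VirtualMachine("sql01", 500, True),
    VirtualMachine("exch01", 800, True),
    VirtualMachine("test-web", 400, False),
    VirtualMachine("print01", 100, False),
]

primary_gb = sum(vm.provisioned_gb for vm in inventory)
backup_gb = backup_san_capacity_gb(inventory)
print(f"primary: {primary_gb} GB, backup: {backup_gb:.0f} GB")  # primary: 1800 GB, backup: 1625 GB
```

Because the test and print VMs are left out of DR scope, the backup SAN can be smaller than the primary even after adding headroom.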

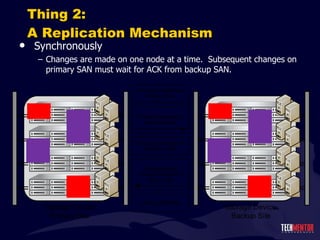

- 156. Thing 2: A Replication Mechanism. Replication between SANs must occur. There are two commonly accepted ways to accomplish this. Synchronously: each change is written to one node and then the other before the next proceeds, so subsequent changes on the primary SAN must wait for an ACK from the backup SAN. Asynchronously: changes are queued at the primary SAN and transferred at intervals, so they will eventually be written to the backup SAN.
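The difference between the two modes can be sketched as a toy in-memory model. This is a minimal sketch, not how any particular SAN implements replication: the `Site`, `SyncReplicator`, and `AsyncReplicator` classes are hypothetical, a dictionary stands in for each SAN's blocks, and a direct assignment stands in for waiting on the backup SAN's ACK.

```python
from collections import deque


class Site:
    """Hypothetical stand-in for one SAN: block id -> block contents."""
    def __init__(self):
        self.blocks = {}


class SyncReplicator:
    """Each write reaches the backup before it is acknowledged to the caller."""
    def __init__(self, primary, backup):
        self.primary, self.backup = primary, backup

    def write(self, block, data):
        self.primary.blocks[block] = data
        self.backup.blocks[block] = data  # stands in for "wait for ACK from backup SAN"
        return "ack"


class AsyncReplicator:
    """Writes are acknowledged immediately and queued for transfer at intervals."""
    def __init__(self, primary, backup):
        self.primary, self.backup = primary, backup
        self.queue = deque()

    def write(self, block, data):
        self.primary.blocks[block] = data
        self.queue.append((block, data))
        return "ack"

    def flush(self):
        """Called once per replication interval: drain the queue to the backup."""
        while self.queue:
            block, data = self.queue.popleft()
            self.backup.blocks[block] = data


def lost_on_failure(primary, backup):
    """Blocks whose latest version never reached the backup site."""
    return sorted(b for b, d in primary.blocks.items() if backup.blocks.get(b) != d)


# Synchronous: the backup always matches the primary.
p, b = Site(), Site()
sync = SyncReplicator(p, b)
for i in range(5):
    sync.write(i, f"v{i}")
print(lost_on_failure(p, b))  # []

# Asynchronous: writes made after the last flush are lost if the primary fails now.
p2, b2 = Site(), Site()
async_rep = AsyncReplicator(p2, b2)
for i in range(3):
    async_rep.write(i, f"v{i}")
async_rep.flush()
async_rep.write(3, "v3")
async_rep.write(4, "v4")
print(lost_on_failure(p2, b2))  # [3, 4]
```

The asynchronous case shows why the replication interval bounds how much data a failure can cost.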

- 159. Class Discussion: Which Would You Choose…? Synchronous: assures no loss of data; requires a high-bandwidth, low-latency connection; write and acknowledgement latencies impact performance; requires shorter distances between storage devices. Asynchronous: potential for data loss during a failure; works over lower-bandwidth connections and is more tolerant of latency; little or no performance impact on the primary; can stretch across longer distances. Your Recovery Point Objective makes this decision…
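The distance and latency trade-off can be made concrete with back-of-the-envelope arithmetic. This is a sketch under stated assumptions: roughly 200 km of fiber per millisecond, a simplified serial write path, and hypothetical write times and latency budget. Real links add switching and protocol overhead, so treat the results as lower bounds.

```python
# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km: float) -> float:
    """Round-trip propagation delay between sites, ignoring switching overhead."""
    return 2 * distance_km / FIBER_KM_PER_MS


def sync_write_latency_ms(local_write_ms: float, remote_write_ms: float, distance_km: float) -> float:
    """Simplified serial model: local write, round trip to the backup, remote write, then ACK."""
    return local_write_ms + propagation_rtt_ms(distance_km) + remote_write_ms


def max_sync_distance_km(latency_budget_ms: float, local_write_ms: float, remote_write_ms: float) -> float:
    """Farthest backup site that keeps synchronous writes within the latency budget."""
    spare_ms = latency_budget_ms - local_write_ms - remote_write_ms
    return max(spare_ms, 0.0) * FIBER_KM_PER_MS / 2


def choose_mode(rpo_seconds: float) -> str:
    """An RPO of zero requires synchronous replication; a nonzero RPO can be met
    asynchronously if the replication interval fits within it (assumption)."""
    return "synchronous" if rpo_seconds == 0 else "asynchronous"


for km in (10, 100, 1000):
    print(km, "km:", round(sync_write_latency_ms(0.5, 0.5, km), 2), "ms per write")
print(max_sync_distance_km(5.0, 0.5, 0.5), "km")
print(choose_mode(0), choose_mode(900))
```

Every kilometer between sites adds to every synchronous write, which is why synchronous replication favors shorter distances.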

- 160. Thing 2: A Replication Mechanism. Replication processing can occur at the… Storage layer: replication processing is handled by the SAN itself, and agents are often installed on virtual hosts or machines to ensure crash consistency. Easier to set up, with fewer moving parts. More scalable. Concerns about crash consistency. OS / application layer: replication processing is handled by software in the VM's OS, which also operates as the agent. More challenging to set up, with more moving parts. More installations to manage and monitor. Cost and management effort grow linearly with each protected VM. Fewer concerns about crash consistency.

- 162. Thing 3: Target Servers. Finally, you need a set of target servers in the backup site. You also need a management mechanism to fail over servers when disaster strikes. VMware's solution here is Site Recovery Manager (SRM). However, SRM is only one of many (sometimes overlapping) solutions that can be implemented.

- 163. Multi-Site Tactics. Ensure networking remains available as VMs migrate from the primary to the backup site. Crossing subnets also means changing the IP address, subnet mask, gateway, etc., at the new site. This can be done automatically with DHCP and dynamic DNS; otherwise it must be updated manually. DNS replication is also a problem: clients need time to update their local cache, so consider reducing the DNS TTL or clearing the client cache. Ensure that enough throughput is available for replication requirements. Use vendor-supplied throughput calculators to estimate this value, and be prepared to add capacity down the road.
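A rough first estimate, before reaching for a vendor calculator, is the day's changed data divided by the time available to ship it. This is a minimal sketch: `required_replication_mbps` is a hypothetical helper, the compression ratio and overhead factor are assumptions for illustration, and it yields an average rate only. Real change is bursty, which is one reason to be prepared to add bandwidth later.

```python
def required_replication_mbps(
    changed_gb_per_day: float,
    window_hours: float = 24.0,
    compression_ratio: float = 1.0,
    overhead: float = 0.0,
) -> float:
    """Average link throughput needed to ship one day's changed data within the window.

    changed_gb_per_day: data changed per day on replicated volumes (decimal GB).
    compression_ratio: assumed reduction from WAN compression (2.0 means 2:1).
    overhead: assumed protocol overhead as a fraction (0.2 means +20%).
    """
    megabits = changed_gb_per_day * 8000  # 1 GB = 8,000 megabits (decimal units)
    seconds = window_hours * 3600
    return megabits / seconds / compression_ratio * (1 + overhead)


# Hypothetical figures: 54 GB of daily change, 2:1 compression, 20% overhead.
print(round(required_replication_mbps(54, compression_ratio=2.0, overhead=0.2), 2))
# The same change squeezed into an 8-hour overnight window needs three times the rate.
print(round(required_replication_mbps(54, window_hours=8, compression_ratio=2.0, overhead=0.2), 2))
```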

- 164. Six Steps in a Typical Virtualization Implementation Step 0: Environment Assessment Step 1: Constructing Virtualization Step 2: Backups Expansion Step 3: Virtualization to Private Cloud Step 4: Virtualization at the Desktop Step 5: DR Implementation

- 165. ESXpert Strategies in Constructing & Administering VMware vSphere Greg Shields Partner & Principal Technologist Concentrated Technology www.ConcentratedTech.com Please fill out evaluations, or they won't let me go!

Editor's Notes

- #4: Source: http://www.virtualization.info/2007/03/44-of-companies-unable-to-declare-their.html

- #5: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #8: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #9: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #12: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #13: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #14: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #15: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #16: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #17: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #18: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #30: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #32: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20

- #59: http://www.infoworld.com/d/virtualization/virtualization-cost-savings-hard-come-interop-survey-finds-196?source=IFWNLE_nlt_wrapup_2009-05-20
