Etl2

- 1. ETL There’s a New Sheriff in Town: Oracle OR… Not Just another Pretty Face Presented by: Bonnie O’Neil

- 2. Introduction ETL (Extract, Transformation and Loading) is having a paradigm shift. Previously, ETL was done with tools outside the database. Ab Initio, Data Stage and Informatica were kings of the ETL world. However, there were major disadvantages to this paradigm. Third party ETL tools required a separate box because they had no controls on the consumption of CPU resources. Third party ETL tools were not integrated into the database. This meant that ETL developers became experts in running the tool, but often they had no knowledge on how the tool related to the database. These tools often did not keep up with the new releases of Oracle and would not take advantage of Oracle’s new features. In order to create fast and efficient ETL processes, you needed to know both.

- 3. Introduction Oracle 9i is changing the ETL paradigm. Oracle 9i supports such important features as: • External tables • MERGE statements (INSERT/UPDATE) • Conditional multi-table inserts • Pipelined Table functions • Native Compilation of PL/SQL programs

- 4. Introduction With External Tables, you can now read flat files in parallel into a virtual table. This virtual table can be accessed just as any other table. Flat Files Virtual Table With the MERGE statement, you can replace a complex “update the row if it exists otherwise insert it” logic with one easy to understand statement. With conditional multi-table inserts, I can read a row from an external table and insert it into many tables based upon conditions. I can replace most ETL processes with a relatively simple INSERT statement. Pipelined table functions allow Oracle to pass a set of rows to a function and send the results out after each row is finished. With Native Compilation of PL/SQL programs, I can now take a slow PL/SQL program and compile it into C code and then link it to the database.

- 5. External Tables The first component of the new Oracle ETL package is External Tables. External Tables are flat files that Oracle can read and treat as regular tables. • No indexes can be on External tables. • External Tables are read only ( No DML allowed ) In the past, you had to load the data to temporary tables utilizing SQLLOADER, do some transformations, and then load the data into tables. Now, you can just read the data straight from flat files. This eliminates much overhead with rollback segments, writing out data to the temporary table and Redo Log activity. If you have enough memory, you can cache the table in memory. Additionally, you can specify a degree of parallelism for accessing the external flat files.

- 6. External Tables Steps to using external tables. • Create a directory for accessing the flat files. This is required by the access driver. CREATE DIRECTORY extdir AS ‘/u01/app/oracle/extfiles’; • Grant access to the directory GRANT read,write ON DIRECTORY extdir TO scott; This Directory object is used in the External Table clause: • Using the LOCATION clause for input files • To specify the location for output files.

- 7. External Tables External tables are based upon a SQLLOADER format. In the future, a format of EXPORT / IMPORT will also be supported. KEYWORD: CREATE TABLE …. ORGANIZATION EXTERNAL TYPE: type oracle_loader create table tag_ext( log_type varchar2(60), user_id varchar2(128), trans_id varchar2(64), trans_name varchar2(128), dttm timestamp(4)) organization external ( type oracle_loader default directory extdir access parameters … DEFAULT DIRECTORY specifies the directory to use for input/output files if no location is specified.

- 8. External Tables Access Parameters - Contains two sections • Record Format Contains information about the record such as the format of the records, names of output files, and what rules are used to exclude data from being loaded. … access parameters ( records delimited by newline load when log_type=‘T1’ badfile extdir:’t1.bad’ nodiscardfile logfile extdir:’t1.log’ skip 1 fields terminated by '|' … Use SKIP statement if your flat file contains a header row. Output files can contain %p (process number) or %a (agent number) as part of the filename to create unique output files.

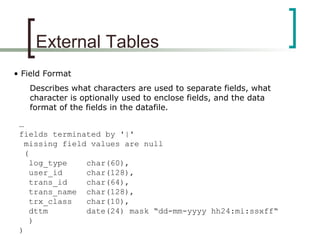

- 9. External Tables • Field Format Describes what characters are used to separate fields, what character is optionally used to enclose fields, and the data format of the fields in the datafile. … fields terminated by '|' missing field values are null ( log_type char(60), user_id char(128), trans_id char(64), trans_name char(128), trx_class char(10), dttm date(24) mask “dd-mm-yyyy hh24:mi:ssxff“ ) )

- 10. External Tables Use the LOCATION clause to specify the filenames of the input files. REJECT LIMIT specifies the maximum number of rejected records that are allowed. This number applies to each parallel slave used to query the data. PARALLEL specifies the number of access drivers that are started to process the datafiles. … location(‘t1.dat’,‘t2.dat’) ) reject limit unlimited parallel 2 /

- 11. External Tables Data Dictionary Views: • DBA_EXTERNAL_TABLES • DBA_EXTERNAL_LOCATIONS Some DDL statements are allowed on external tables: REJECT LIMIT, PARALLEL, DEFAULT LOCATION, ACCESS PARAMETERS, LOCATION, ADD/MODIFY/DROP COLUMN, RENAME TO Performance Issues: Fixed width is faster than delimited fields Not writing log, bad and discard files No conditions clauses

- 12. Merge Statement Before Oracle 9i, a common load, which entailed updating a row if it existed and if it does not insert the row, required two statements or writing PL/SQL code. This data load now can be done in a single statement. This statement is sometimes called an UPSERT – a cross between an update and an insert statement. • The merge fires any Insert or Update Triggers. • Cannot update the same row of the target table multiple times in the same MERGE statement. ORA-30926: unable to get a stable set of rows in the source tables

- 13. Merge Statement MERGE INTO tag t USING tag_ext x ON (t.user_id=x.user_id AND t.trans_id=x.trans_id) WHEN NOT MATCHED THEN INSERT(log_type,user_id,trans_id,trans_name,dttm) VALUES(x.log_type,x.user_id,x.trans_id,x.trans_name,x.dttm) WHEN MATCHED THEN UPDATE SET log_type=x.log_type, trans_name=x.trans_name, dttm=x.dttm Update cannot update columns Used in the ON condition Source can be a table or the results of a query

- 14. Merge Statement Common Errors: • Running merge statement and get ORA-00904: <column_name>: invalid identifier CAUSE: The reason for this is because you specified the column name in ON clause and the UPDATE clause. Columns used in the ON clause for the join cannot be updated.

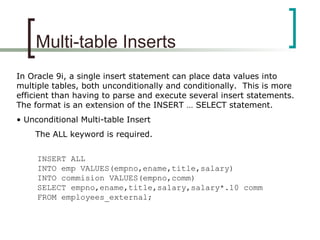

- 15. Multi-table Inserts In Oracle 9i, a single insert statement can place data values into multiple tables, both unconditionally and conditionally. This is more efficient than having to parse and execute several insert statements. The format is an extension of the INSERT … SELECT statement. • Unconditional Multi-table Insert The ALL keyword is required. INSERT ALL INTO emp VALUES(empno,ename,title,salary) INTO commision VALUES(empno,comm) SELECT empno,ename,title,salary,salary*.10 comm FROM employees_external;

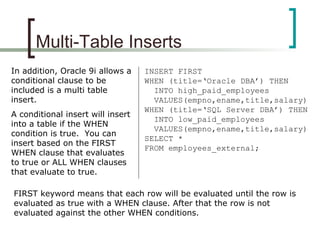

- 16. Multi-Table Inserts In addition, Oracle 9i allows a conditional clause to be included is a multi table insert. A conditional insert will insert into a table if the WHEN condition is true. You can insert based on the FIRST WHEN clause that evaluates to true or ALL WHEN clauses that evaluate to true. INSERT FIRST WHEN (title=‘Oracle DBA’) THEN INTO high_paid_employees VALUES(empno,ename,title,salary) WHEN (title=‘SQL Server DBA’) THEN INTO low_paid_employees VALUES(empno,ename,title,salary) SELECT * FROM employees_external; FIRST keyword means that each row will be evaluated until the row is evaluated as true with a WHEN clause. After that the row is not evaluated against the other WHEN conditions.

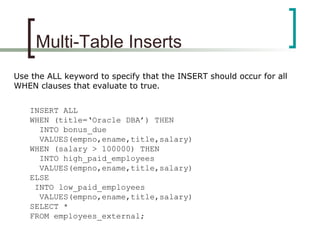

- 17. Multi-Table Inserts Use the ALL keyword to specify that the INSERT should occur for all WHEN clauses that evaluate to true. INSERT ALL WHEN (title=‘Oracle DBA’) THEN INTO bonus_due VALUES(empno,ename,title,salary) WHEN (salary > 100000) THEN INTO high_paid_employees VALUES(empno,ename,title,salary) ELSE INTO low_paid_employees VALUES(empno,ename,title,salary) SELECT * FROM employees_external;

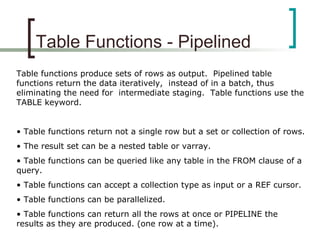

- 18. Table Functions - Pipelined Table functions produce sets of rows as output. Pipelined table functions return the data iteratively, instead of in a batch, thus eliminating the need for intermediate staging. Table functions use the TABLE keyword. • Table functions return not a single row but a set or collection of rows. • The result set can be a nested table or varray. • Table functions can be queried like any table in the FROM clause of a query. • Table functions can accept a collection type as input or a REF cursor. • Table functions can be parallelized. • Table functions can return all the rows at once or PIPELINE the results as they are produced. (one row at a time).

- 19. Table Functions - Pipelined KEYWORD: PIPELINED PIPELINED functions use less memory because the object cache doesn’t have to materialize the entire result set. PIPELINED functions can accept a REF Cursor as an input parameter. CREATE FUNCTION managerlist( cur cursor_emp_pkg.emp_cur ) RETURN emp_type_table PIPELINED IS … The keyword pipe row returns the row immediately rather than waiting for all rows to be processed. Table functions can perform the complex transformations in an efficient manner.

- 20. Table Functions - Pipelined -- Create a Type to define the result type collection CREATE OR REPLACE TYPE emp_type AS OBJECT( empno number(4), ename varchar2(10), job varchar2(9), Sal number(7,2)) / -- Create a collection used as the return type Create type emp_type_table as table of emp_type / -- Create a REF CURSOR as a package variable CREATE OR REPLACE PACKAGE cursor_emp_pkg AS type emp_rec is record ( empno number(4), ename varchar2(10), job varchar2(9), sal number(7,2)); type strong_emp_cur is ref cursor return emp_rec; End; /

- 21. Table Functions - Pipelined -- Create Function CREATE FUNCTION DBA_LIST(cur cursor_emp_pkg.strong_emp_cur) RETURN emp_type_table PIPELINED IS out_rec emp_type := emp_type(NULL,NULL,NULL,NULL); in_rec cur%ROWTYPE; BEGIN LOOP FETCH cur INTO in_rec; EXIT WHEN cur%NOTFOUND; IF in_rec.job = 'DBA' THEN out_rec.empno := in_rec.empno; out_rec.ename := in_rec.ename; out_rec.job := in_rec.job; out_rec.sal := in_rec.sal; PIPE ROW(out_rec); out_rec.sal := in_rec.sal *.10; PIPE ROW(out_rec); END IF; END LOOP; CLOSE cur; RETURN; END; /

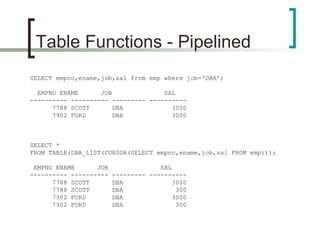

- 22. Table Functions - Pipelined SELECT empno,ename,job,sal from emp where job=‘DBA’; EMPNO ENAME JOB SAL ---------- ---------- --------- ---------- 7788 SCOTT DBA 3000 7902 FORD DBA 3000 SELECT * FROM TABLE(DBA_LIST(CURSOR(SELECT empno,ename,job,sal FROM emp))); EMPNO ENAME JOB SAL ---------- ---------- --------- ---------- 7788 SCOTT DBA 3000 7788 SCOTT DBA 300 7902 FORD DBA 3000 7902 FORD DBA 300

- 23. Table Functions - Pipelined OBSERVATIONS: Explain plan output for Pipelined Table Functions Rows Execution Plan ------- --------------------------------------------------- 0 SELECT STATEMENT GOAL: CHOOSE 4 VIEW 4 COLLECTION ITERATOR (PICKLER FETCH) OF 'DBA_LIST‘ Although the number of records returned is twice as many, the SQL*Net roundtrips to/from client remained the same. Many more recursive calls for the pipelined function.

- 24. Native Compilation Before Oracle 9i, PL/SQL programs were compiled to P-code and interpreted at runtime. Interpreted languages are much slower than compiled languages. This interpreted feature allows PL/SQL to be portable. Oracle 9i offers an option to turn the PL/SQL code into C code automatically. You write the same PL/SQL code and Oracle will covert it, compile it and execute it when the PL/SQL code is called. SQL statements do not run much faster. NO CODE CHANGES IN THE PL/SQL CODE!

- 25. Native Compilation To utilize Native Compilation, you must set several INIT.ORA parameters. plsql_compiler_flags=‘NATIVE’ • Default is INTERPRETED (can be changed at session level) plsql_native_make_utility=‘/usr/ccs/bin/make’ plsql_native_make_file_name= ‘/u01/app/oracle/product/9.2.0/plsql/spnc_makefile.mk’ plsql_native_library_dir=‘/u01/app/oracle/lib’ • Location where shared libraries are created plsql_native_c_compiler=‘/opt/SUNWspro/bin/cc’ plsql_native_linker=‘/usr/sbin/link’

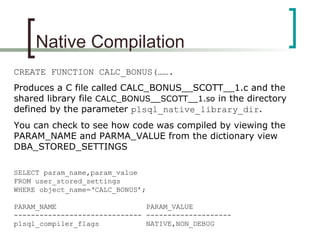

- 26. Native Compilation CREATE FUNCTION CALC_BONUS(……. Produces a C file called CALC_BONUS__SCOTT__1.c and the shared library file CALC_BONUS__SCOTT__1.so in the directory defined by the parameter plsql_native_library_dir. You can check to see how code was compiled by viewing the PARAM_NAME and PARMA_VALUE from the dictionary view DBA_STORED_SETTINGS SELECT param_name,param_value FROM user_stored_settings WHERE object_name=‘CALC_BONUS’; PARAM_NAME PARAM_VALUE ------------------------------ -------------------- plsql_compiler_flags NATIVE,NON_DEBUG

- 27. Native Compilation Check metalink for latest patches and documents regarding NATIVE compilation. There are known bugs (unexpected features) with compiling on a client versus the server. • Error compiling from client. PLS-00923: Native compilation falied: make:spdtexmk:? Also, issues with 32 bit versus 64 bit O/S’s. On Sun 2.8, I ran into a problem that had to be fixed by setting an environment variable and bouncing the listener.

- 28. Conclusion The ETL paradigm shift is under way. We will start seeing more ETL be done inside the database as opposed to outside the database. With the ETL processing done at the database level, Oracle 9i can take advantage of resource allocations using resource groups. These changes will eventually result in a simpler and more efficient ETL process at a lower cost of ownership. Oracle 9i offers vast improvements in ETL functionality. • Economics • Efficiency • Performance

- 29. Thank You Suzanne Riddell President Apex Solutions, Inc. 303 216 9491...office 303 809 4914...cell suzanne@apexsolutions.com www.apexsolutions.com "The Business Intelligence Source"