From dev to prod: Kubernetes on AWS (short ver.)

- 1. From dev to prod: Kubernetes on AWS (short ver.) Yusuke KUOKA from Udon Prefecture (Kagawa, Japan), ChatWork (http://www.chatwork.com/) @mumoshu

- 2. Our goals, tooling and automation

- 3. Goals

- 7. Tooling

- 8. My recommendation
  * kube-aws from coreos/coreos-kubernetes: for bootstrapping production k8s clusters
  * kubernetes/minikube: for running a local k8s cluster
  * fabric8io/docker-iptables-redirector, jtblin/aws-mock-metadata and docker-compose: for emulating the AWS environment (to make 169.254.169.254 accessible from the fluentd and dd-agent containers)
  * nginx-ingress-controller (kubernetes/contrib): to replace our in-house ingress implementation w/ static service discovery
  * DaemonSets for Fluentd and Datadog Agent: for a unified logging & monitoring experience from dev to prod
  * Concourse CI on AWS: for CI & CD

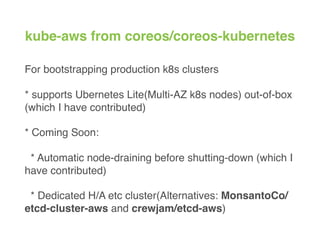

- 10. kube-aws from coreos/coreos-kubernetes: for bootstrapping production k8s clusters
  * Supports Ubernetes Lite (multi-AZ k8s nodes) out of the box (which I contributed)
  * Coming soon:
    * Automatic node draining before shutdown (which I contributed)
    * Dedicated H/A etcd cluster (alternatives: MonsantoCo/etcd-cluster-aws and crewjam/etcd-aws)

- 11. kube-aws Caveats
  * kube-aws doesn’t support updating an existing cluster
  * We recreate the k8s cluster each time we want to make a change other than scaling out the k8s nodes

- 12. Kubernetes? Caveats
  * IMHO there is no way to achieve H/A w/ a single Etcd cluster in the Tokyo region
  * You need at least 3 Etcd nodes in 3 AZs to deal with AZ failures (and prevent split-brain scenarios)
  * Typically only 2 of the 3 AZs in the Tokyo region are visible / available to you
  * With 2 nodes in 2 AZs, you’ll eventually end up with a split-brain
  * With 1 node: do you want to get called at midnight?
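The 3-nodes-in-3-AZs rule above follows from majority arithmetic. The sketch below is only an illustration of that arithmetic, not anything from kube-aws or etcd itself; the function names are made up for this example. It assumes a majority-based cluster, which is how etcd's Raft consensus works: once a majority of members is unreachable, the remaining members cannot safely accept writes.

```python
from typing import Sequence


def quorum(members: int) -> int:
    """Smallest majority of a cluster with `members` voting nodes."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can be lost while a majority still remains."""
    return members - quorum(members)


def survives_az_loss(nodes_per_az: Sequence[int]) -> bool:
    """True if losing any single AZ still leaves a majority of all members."""
    total = sum(nodes_per_az)
    return all(total - lost >= quorum(total) for lost in nodes_per_az)


if __name__ == "__main__":
    # Each layout lists how many etcd members sit in each AZ.
    for layout in ([1], [1, 1], [2, 1], [1, 1, 1]):
        print(layout, "survives one AZ failure:", survives_az_loss(layout))
```

With only two AZs available, no layout passes the check: whichever AZ holds at least half of the members takes the majority down with it.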

- 13. H/A Etcd Cluster
  * Work-around: 2 Etcd clusters + 2 Kubernetes clusters. See: https://github.com/coreos/coreos-kubernetes/pull/525#issuecomment-225089742
  * I’m jealous of you in us-east-1 (5 AZs!)
  * GCP is coming to Tokyo in 2016 (How many zones?)

- 15. kubernetes/minikube: for running full-featured local k8s clusters on developers’ laptops
  * Supports the `ServiceAccount` admission control out of the box, which is required to make the Ingress Controller work
  * Built on top of an improved version of redspread/localkube
  * Very active development: the DNS issue I reported was fixed in a day or two

- 16. Emulating EC2 metadata service
  * `docker-compose up -d` in the minikube VM runs fabric8io/docker-iptables-redirector and jtblin/aws-mock-metadata
  * Together they emulate the AWS EC2 metadata service, w/ iptables magic making 169.254.169.254 accessible from the fluentd and dd-agent containers in your local development env
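To make the idea concrete, here is a minimal stand-in for a mock metadata endpoint. It is a sketch under stated assumptions, not how jtblin/aws-mock-metadata is implemented: the canned values are hypothetical, it listens on 127.0.0.1 with an ephemeral port, and the iptables redirection of 169.254.169.254 is left out entirely. The two paths are standard EC2 instance metadata paths.

```python
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical canned answers; a real instance returns its own values.
FAKE_METADATA = {
    "/latest/meta-data/instance-id": "i-0123456789abcdef0",
    "/latest/meta-data/placement/availability-zone": "ap-northeast-1a",
}


class MetadataHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = FAKE_METADATA.get(self.path)
        if body is None:
            self.send_error(404)
            return
        payload = body.encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def log_message(self, fmt, *args):
        pass  # keep the example quiet


def start_mock(port: int = 0) -> HTTPServer:
    """Start the mock in a background thread; port 0 picks a free port."""
    server = HTTPServer(("127.0.0.1", port), MetadataHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


def fetch(server: HTTPServer, path: str) -> str:
    """Read one metadata path the way an agent container would."""
    host, port = server.server_address[:2]
    with urllib.request.urlopen(f"http://{host}:{port}{path}") as resp:
        return resp.read().decode()


if __name__ == "__main__":
    srv = start_mock()
    print(fetch(srv, "/latest/meta-data/instance-id"))
    srv.shutdown()
```

In the setup described above, the redirector is what lets unmodified agents keep using the real 169.254.169.254 address while being answered by the mock.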

- 17. Bash & Makefile scripting…
  * `kube-chawork start` automatically:
    * installs missing binaries (minikube, kubectl, gcloud-sdk) on your MacBook
    * starts the minikube VM
    * starts the metadata service by running docker-compose against the minikube VM
    * finally runs `kubectl create -f <all the deployments and daemonsets>.yaml`
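The start sequence above could be sketched roughly as follows. The original tooling is Bash and Makefiles and is not shown in the slides, so this Python version is an assumption-laden illustration: the function names, the binary list and the `manifests/` directory are all hypothetical, and the plan is only printed, never executed.

```python
import shutil
from typing import Callable, List, Optional, Sequence

# Executables the workflow expects on PATH (the gcloud SDK ships `gcloud`).
REQUIRED_BINARIES = ("minikube", "kubectl", "gcloud", "docker-compose")


def missing_binaries(
    names: Sequence[str] = REQUIRED_BINARIES,
    which: Callable[[str], Optional[str]] = shutil.which,
) -> List[str]:
    """Return the required executables that are not found on PATH."""
    return [name for name in names if which(name) is None]


def start_plan(manifest_dir: str) -> List[List[str]]:
    """Commands to run in order once all binaries are installed."""
    return [
        ["minikube", "start"],
        # Assumption: the shell is already pointed at the Docker daemon in
        # the minikube VM (for example via `minikube docker-env`).
        ["docker-compose", "up", "-d"],
        ["kubectl", "create", "-f", manifest_dir],
    ]


if __name__ == "__main__":
    missing = missing_binaries()
    if missing:
        print("would install:", ", ".join(missing))
    for command in start_plan("manifests/"):
        print("would run:", " ".join(command))
```

Separating the check and the plan from execution keeps the script easy to dry-run on a fresh laptop.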

- 19. nginx-ingress-controller … from kubernetes/contrib, to replace our in-house ingress implementation w/ STATIC service discovery

- 20. Logging & Monitoring
  * DaemonSet for fluentd + GCP Stackdriver Logging
  * Personal Datadog account + DaemonSet for Datadog Agent (datadog/dd-agent:kubernetes)
  * For a unified logging & monitoring experience from dev to prod
  * Every developer can experiment with logging & monitoring in their local environment

- 21. Concourse CI
  * CI with `pipelines` as first-class citizens
  * To run E2E tests whenever the application code or Docker base image of one of our microservices is updated
  * Alternatives: GoCD, Wercker, Jenkins v2

- 22. Concourse Caveats
  * Usually requires Cloud Foundry’s BOSH for deployment
  * We have no time to learn BOSH
  * We have developed and open-sourced concourse-aws to deploy it with Terraform: https://github.com/mumoshu/concourse-aws/

- 23. TODOs / WISHes

- 24. Multiple containers in a Pod
  * Our `app` pod has 1 image containing processes for: http server, php, smtp relay with buffering, etc.
  * The SMTP relay is embedded to prevent web/non-web transactions from failing when our mail server is temporarily down
  * A pod shares its network: the containers in a pod can reach each other through `localhost`
  * Extracting the `smtp relay` into its own image makes sense
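A split into two containers might look like the sketch below, which builds a Pod manifest as a Python dict and prints it as JSON (kubectl accepts JSON manifests as well as YAML). The container names, image names, ports and environment variables are hypothetical, chosen only to show the web container reaching the relay via `localhost`.

```python
import json
from typing import Any, Dict


def app_pod(app_image: str, relay_image: str) -> Dict[str, Any]:
    """Pod with the web app and the SMTP relay as separate containers."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "app", "labels": {"app": "app"}},
        "spec": {
            "containers": [
                {
                    "name": "web",
                    "image": app_image,
                    "ports": [{"containerPort": 80}],
                    # Containers in a pod share a network namespace, so the
                    # relay is reachable on localhost.
                    "env": [
                        {"name": "SMTP_HOST", "value": "localhost"},
                        {"name": "SMTP_PORT", "value": "25"},
                    ],
                },
                {
                    "name": "smtp-relay",
                    "image": relay_image,
                    "ports": [{"containerPort": 25}],
                },
            ]
        },
    }


if __name__ == "__main__":
    # Hypothetical image names for illustration only.
    pod = app_pod("example.com/app:latest", "example.com/smtp-relay:latest")
    print(json.dumps(pod, indent=2))
```

Keeping the relay in the same pod preserves the buffering behaviour while letting the two images be built and updated independently.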

- 25. SSO * Single-sign-on to private Docker registries * Single-sign-on to Kubernetes cluster * Google’s IdP? Auth0? Dex?

- 26. VPN connection * VPN to connect to Kubernetes’ private network for debugging (like Kontena’s VPN)

- 27. Less painful H/A Etcd/Kubernetes
  * Typically we have only 2 AZs available in AWS’s Tokyo region (ap-northeast-1)
  * In short, there is no way to achieve H/A with a single Kubernetes cluster in Tokyo (we run 1 k8s cluster per AZ for now)
  * Ubernetes to the rescue? Does it allow us to manage multiple k8s clusters from one place, i.e. a single API endpoint?

- 28. Thanks! Yusuke KUOKA / @mumoshu An Infrastructure Engineer @ ChatWork We’re hiring!