IoT Meetup Oslo - AI on Edge Devices

- 1. AI on Edge Devices: Experiences Building a Smart Security Camera @markawest

- 5. Who Am I? • IoT Hobbyist. • Data Scientist Manager. • Leader of javaBin (Norwegian Java User Group). @markawest

- 9. Requirements @markawest Functional: • Monitor activity in the garden. • Send a warning when activity is detected. • Live video stream. Non-functional: • In place as soon as possible. • Low cost. • Portable.

- 10. Pi Zero Camera @markawest (Agenda: Motivation, Pi Zero Camera, Adding AWS, The Movidius NCS, Conclusion)

- 12. Software : Motion @markawest • Open source motion detection software. • Excellent performance on the Raspberry Pi Zero. • Built-in Web Server for streaming video. • Detected activity or ‘motion’ triggers events.

- 13. How the Motion Software Works @markawest (diagram: How Motion Works)

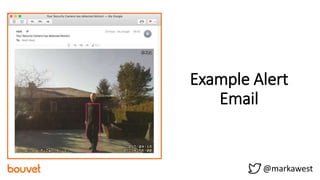

- 14. How the Motion Software Works: Example Alert Email @markawest

- 15. Example False Alarms from Motion @markawest (images labelled: cat, cloud)

- 16. Adding AWS to the Camera @markawest (Agenda: Motivation, Pi Zero Camera, Adding AWS, The Movidius NCS, Conclusion)

- 17. Adding a Smart Filter @markawest (images labelled: cat, person, cat)

- 18. AWS Rekognition @markawest • Image Analysis as a Service, offering a range of APIs. • Built upon Deep Neural Networks. • Many alternatives: Google Vision, Microsoft Computer Vision, Clarifai.

- 20. Adding AWS Rekognition to the Camera @markawest 1. Camera pushes snapshot to AWS. 2. Snapshot analysed via AWS Rekognition. 3. Trigger warning email (if snapshot contains a person). 4. Email alert sent (with snapshot).
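Step 3 hinges on a single decision: does the snapshot contain a person? A minimal sketch of that check, assuming the response has the shape returned by Rekognition's `DetectLabels` operation (a `Labels` list whose entries carry `Name` and `Confidence` in percent). The function name `contains_person` and the 80% cut-off are illustrative choices, not taken from the original project.

```python
def contains_person(detect_labels_response, min_confidence=80.0):
    """Return True if a DetectLabels-style response reports a person.

    Assumes a dict with a "Labels" list of {"Name": str, "Confidence": float}
    entries. min_confidence is an illustrative threshold (percent).
    """
    for label in detect_labels_response.get("Labels", []):
        if label.get("Name") == "Person" and label.get("Confidence", 0.0) >= min_confidence:
            return True
    return False


# A cat wanders past: Motion fires, but no email should be sent.
cat_only = {"Labels": [{"Name": "Cat", "Confidence": 97.1},
                       {"Name": "Grass", "Confidence": 88.0}]}
visitor = {"Labels": [{"Name": "Person", "Confidence": 99.2}]}
print(contains_person(cat_only))  # False
print(contains_person(visitor))   # True
```

In a Lambda Function the response would typically come from `boto3.client("rekognition").detect_labels(Image={"S3Object": {"Bucket": ..., "Name": ...}})`; keeping the decision in a pure function makes the filter easy to test without calling AWS.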

- 21. Smart Camera AWS Pipeline @markawest (diagram): the Smart Camera uploads a snapshot to AWS S3 (storage); the upload trigger starts an AWS Step Function (workflow), which calls AWS Rekognition and AWS Simple Email Service; AWS IAM governs access between the components.

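- 22. Smart Camera AWS Pipeline, with AWS Lambda Functions @markawest (diagram): the upload to AWS S3 (storage) triggers an AWS Step Function (workflow), whose AWS Lambda Functions call AWS Rekognition and AWS Simple Email Service; AWS IAM governs access between the components.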

- 23. Smart Camera with AWS: Demo @markawest (images labelled: cat, person, cat)

- 24. Smart Camera with AWS Evaluation Positives • Reduced False Positive emails. • AWS pipeline is «on demand», scalable and flexible. • Low cost of project startup and experimentation. • Satisfies project requirements. Negatives • Result is only as good as the snapshots generated by Motion. • Monthly cost grows after first year. • AWS Rekognition doesn’t cope well with «noisy» environments. • AWS Rekognition is a «Black Box». @markawest

- 25. The Movidius NCS @markawest (Agenda: Motivation, Pi Zero Camera, Adding AWS, The Movidius NCS, Conclusion)

- 26. Introducing the Intel Movidius NCS • USB stick for speeding up Deep Learning inference on constrained devices. • Contains a low-power, high-performance VPU (Vision Processing Unit). • Supports Caffe and TensorFlow models. @markawest

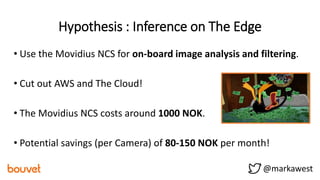

- 27. Hypothesis: Inference on The Edge • Use the Movidius NCS for on-board image analysis and filtering. • Cut out AWS and The Cloud! • The Movidius NCS costs around 1000 NOK. • Potential savings (per camera) of 80-150 NOK per month! @markawest
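Taking the slide's figures at face value, the hypothesis reduces to a simple payback calculation: the one-off NCS cost divided by the monthly cloud cost it replaces. This sketch ignores electricity, the Pi itself and any change in AWS pricing.

```latex
t_{\text{payback}} = \frac{C_{\text{NCS}}}{S_{\text{month}}}
\quad\Rightarrow\quad
\frac{1000\ \text{NOK}}{150\ \text{NOK/month}} \approx 6.7\ \text{months}
\;\le\; t_{\text{payback}} \;\le\;
\frac{1000\ \text{NOK}}{80\ \text{NOK/month}} = 12.5\ \text{months}
```

Under these assumptions the stick pays for itself within roughly half a year to a year per camera.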

- 30. Movidius NCS Work Flow @markawest • Step One: secure a pre-trained Deep Learning model (Mobilenet-SSD, 20 categories). • Step Two: compile the model to a Graph file for use with the NCS (NCS Tools SDK). • Step Three: deploy the Graph file to the NCS and start inferring (NCS API SDK).

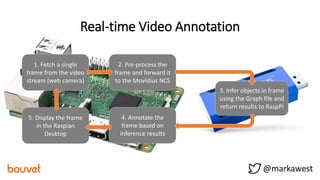
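Once the Graph file is deployed (Step Three), each inference returns a flat list of numbers that still has to be turned into labelled boxes. A minimal sketch, assuming the output layout used by Intel's ncappzoo MobileNet-SSD sample (a detection count, six unused values, then seven values per detection) and the usual 20 PASCAL VOC categories plus "background". `parse_ssd_output` and the 0.6 score cut-off are hypothetical, not from the original project.

```python
import math

# "background" + the 20 PASCAL VOC categories, in the order commonly used
# by the Caffe MobileNet-SSD model (assumed here).
LABELS = ("background", "aeroplane", "bicycle", "bird", "boat", "bottle",
          "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse",
          "motorbike", "person", "pottedplant", "sheep", "sofa", "train",
          "tvmonitor")


def parse_ssd_output(output, min_score=0.6):
    """Turn a flat SSD output into (label, score, (x1, y1, x2, y2)) tuples.

    Assumed layout: output[0] = number of detections, output[1:7] unused,
    then 7 values per detection: image_id, class_id, score, x1, y1, x2, y2,
    with box coordinates normalised to the range 0.0-1.0.
    """
    detections = []
    for i in range(int(output[0])):
        base = 7 + i * 7
        _, class_id, score, x1, y1, x2, y2 = output[base:base + 7]
        # Skip malformed entries (non-finite values) and unknown classes.
        if not all(math.isfinite(v) for v in (class_id, score, x1, y1, x2, y2)):
            continue
        if not 0 <= int(class_id) < len(LABELS):
            continue
        if score < min_score:
            continue
        detections.append((LABELS[int(class_id)], score, (x1, y1, x2, y2)))
    return detections
```

For the smart camera, a snapshot would only count as interesting if one of the returned labels is `"person"`.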

- 31. Real-time Video Annotation @markawest (NCS API SDK) 1. Fetch a single frame from the video stream (web camera). 2. Pre-process the frame and forward it to the Movidius NCS. 3. Infer objects in the frame using the Graph file and return the results to the Raspberry Pi. 4. Annotate the frame based on the inference results. 5. Display the frame on the Raspbian desktop.
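The five steps form a simple per-frame loop. A minimal sketch in which the NCS call, the annotation and the display are injected as callables (`infer`, `annotate` and `display` are hypothetical stand-ins for the NCS API, drawing code and desktop window). The pre-processing constants (centre on 127.5, scale by 0.007843) are the ones commonly used with the Caffe MobileNet-SSD model and are an assumption here; resizing to the model's assumed 300x300 input is omitted.

```python
# Assumed MobileNet-SSD normalisation: maps 0-255 pixels to roughly [-1, 1].
MEAN = 127.5
SCALE = 0.007843


def preprocess(pixels):
    """Step 2: normalise raw 0-255 pixel values (resizing omitted)."""
    return [(p - MEAN) * SCALE for p in pixels]


def annotate_stream(frames, infer, annotate, display):
    """Run steps 1-5 for every frame; the device-specific parts are injected."""
    for frame in frames:                      # 1. fetch a single frame
        tensor = preprocess(frame)            # 2. pre-process it
        detections = infer(tensor)            # 3. infer on the NCS
        display(annotate(frame, detections))  # 4. annotate, 5. display
```

Injecting the device-specific parts keeps the loop testable on a laptop without an NCS attached.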

- 34. Movidius NCS Performance Analysis @markawest

| Board | Pi Camera (threaded*) | USB Camera |
|---|---|---|
| Pi 3 B+ | 4.2 FPS | 4.48 FPS |
| Pi Zero | 1.5 FPS | 1 FPS |
| Pi Zero (headless**) | 2.5 FPS | 1.5 FPS |

\* Image I/O moved into a separate thread from the main processing.
\*\* Booting directly to the command line (no Raspbian desktop).
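The "threaded" column moves image I/O off the main loop. One common way to do that, sketched here under the assumption that the camera is exposed as a blocking `read_frame()` callable returning `None` when the source closes (a hypothetical interface), is a background thread that keeps only the newest frame, so inference never waits on the camera.

```python
import threading


class LatestFrameReader:
    """Reads frames on a background thread and keeps only the newest one."""

    def __init__(self, read_frame):
        # read_frame: hypothetical blocking callable; returns None at end of stream.
        self._read_frame = read_frame
        self._lock = threading.Lock()
        self._frame = None
        self._stopped = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def _run(self):
        while not self._stopped.is_set():
            frame = self._read_frame()
            if frame is None:          # camera closed or source exhausted
                self._stopped.set()
                break
            with self._lock:
                self._frame = frame    # overwrite: stale frames are dropped, not queued

    def latest(self):
        """Return the most recent frame (None if nothing has been read yet)."""
        with self._lock:
            return self._frame

    def stop(self):
        self._stopped.set()
        if self._thread.is_alive():
            self._thread.join()
```

The trade-off is that frames arriving while the NCS is busy are dropped rather than queued, which suits a live view; if inference outpaces the camera, `latest()` may return the same frame twice.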

- 35. Movidius NCS Evaluation Positives: • Every single frame is processed. • No ongoing costs. • Bring your own model. • Faster than Raspberry Pi + OpenCV alone. Negatives: • Slow performance on Pi Zero. • No pipeline, less scalable. • Potentially higher hardware cost. • Future for the Movidius NCS? @markawest

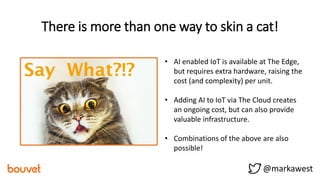

- 37. @markawest There is more than one way to skin a cat! • AI-enabled IoT is available at The Edge, but requires extra hardware, raising the cost (and complexity) per unit. • Adding AI to IoT via The Cloud creates an ongoing cost, but can also provide valuable infrastructure. • Combinations of the above are also possible!

- 38. Google Edge TPU Accelerator @markawest “Player Two has entered the game!!” • Debian Linux, TensorFlow Lite. • 65 mm x 30 mm. • Compatible with Raspberry Pi. • Coming soon (autumn 2018)! • https://aiyprojects.withgoogle.com/edge-tpu

- 40. Further Reading 1. Pi Zero Camera with Motion 2. Adding AWS to the Pi Zero Camera Part 1 3. Adding AWS to the Pi Zero Camera Part 2 4. Movidius NCS Quick Start 5. Using the Movidius NCS with the Pi Camera 6. Using the Movidius NCS with the Pi Zero @markawest

Editor's Notes

- But first, who the devil am I? As you can see from my Twitter handle, my name is Mark West, and I’m an Englishman living here in Oslo, Norway.

- For me, speaking is a hobby that I do to learn and share my own knowledge and experiences. In the past couple of years I have spoken at a range of conferences across Europe and the US. The good news is that this is the first time I have spoken at NDC. This is also the first time I have given this specific talk, so I am excited to hear your feedback. So let's get started!

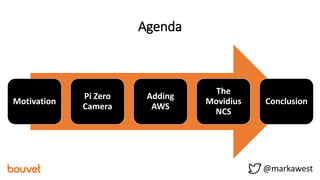

- Here is the Agenda for my talk. As you can see it is split into four sections.

- So how does Motion work? Well, it basically monitors the video stream from the camera. Each frame is compared to the previous one to find out how many pixels (if any) differ. If the total number of changed pixels is greater than a given threshold, a motion alarm is triggered.
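As a rough illustration of that frame-differencing idea (Motion itself is written in C and layers extras such as noise tuning and mask files on top), here is a minimal Python sketch. Frames are assumed to be equally sized 2D lists of 0-255 grayscale values; `changed_pixels` and `detect_motion` are hypothetical names, and the defaults of 32 and 1500 are only assumed to be in the spirit of Motion's `noise_level` and `threshold` settings.

```python
def changed_pixels(prev, curr, noise_level=32):
    """Count pixels whose brightness differs by more than noise_level.

    prev and curr are equally sized 2D lists of 0-255 grayscale values.
    """
    return sum(
        1
        for prev_row, curr_row in zip(prev, curr)
        for a, b in zip(prev_row, curr_row)
        if abs(a - b) > noise_level
    )


def detect_motion(frames, threshold=1500, noise_level=32):
    """Yield the index of every frame that differs from the previous frame
    in more than `threshold` pixels, i.e. where a motion alarm would fire."""
    prev = None
    for i, frame in enumerate(frames):
        if prev is not None and changed_pixels(prev, frame, noise_level) > threshold:
            yield i
        prev = frame
```

The per-pixel `noise_level` keeps sensor noise from counting as change, while the frame-level `threshold` decides how much change is needed before an alarm fires. A passing cloud can still change enough pixels, which is exactly the false-alarm problem the deck goes on to address.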

- OK, so how did the AWS processing work? Here’s a simplified run-through. First, an image is pushed from the Pi Zero Camera to Amazon’s S3 storage. The upload triggers a small unit of code, a Lambda Function, which in turn starts a Step Function. The Step Function orchestrates further Lambda Functions into a workflow. The first Lambda Function calls Rekognition to evaluate the picture. The second Lambda Function uses the Simple Email Service to send the alert email. Finally, all components in the workflow use Identity and Access Management (IAM) to make sure that they have access to the components they need to use. For example, the Lambda Function that sends the email needs access to both the Simple Email Service and S3, in order to attach the image file to the email.
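The first hop of that workflow, a Lambda Function reacting to the S3 upload and starting the Step Function, might look roughly like this. It assumes the standard S3 event notification shape and a Step Functions client with boto3's `start_execution(stateMachineArn=..., input=...)` signature; the function names, the injected client and the `{"bucket", "key"}` input document are illustrative, not the original project's code.

```python
import json
from urllib.parse import unquote_plus


def extract_uploads(event):
    """Return (bucket, key) pairs from an S3 ObjectCreated notification event."""
    uploads = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        # Object keys arrive URL-encoded (e.g. spaces become '+').
        uploads.append((s3["bucket"]["name"], unquote_plus(s3["object"]["key"])))
    return uploads


def start_workflows(event, sfn_client, state_machine_arn):
    """Start one Step Functions execution per uploaded snapshot.

    sfn_client is expected to behave like boto3.client("stepfunctions").
    """
    for bucket, key in extract_uploads(event):
        sfn_client.start_execution(
            stateMachineArn=state_machine_arn,
            input=json.dumps({"bucket": bucket, "key": key}),
        )
```

A real handler would wrap this as `handler(event, context)`, create the client with `boto3.client("stepfunctions")` and read the state machine ARN from configuration; injecting the client keeps the routing logic testable with a fake.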

- The SDK Tools allow you to convert your trained Deep Learning model into a "graph" that the NCS can understand; this is done as part of the development process. The SDK API lets you work with your graph at run time: loading it onto the NCS and then performing inference on data (i.e. real-time image analysis).

- < 1 FPS with Raspi 3 B+.

- Connector: USB Type-C* (data/power). Dimensions: 65 mm x 30 mm. Supported operating systems: Debian Linux. Supported frameworks: TensorFlow Lite. *Compatible with Raspberry Pi boards at USB 2.0 speeds only.
