Long Short-Term Memory

- 1. LSTM (Long Short-Term Memory) • Cheng Zhan, Data Scientist

- 2. Human Learning • “We are the sum total of our experiences. None of us are the same as we were yesterday, nor will be tomorrow.” (B.J. Neblett) • Memorization: every time we gain new information, we store it for future reference. • Combination: not all tasks are the same, so we couple our analytical skills with a combination of our memorized previous experiences to reason about the world.

- 3. Outline • Review of RNN • SimpleRNN • LSTM (forget, input and output gates) • GRU (reset and update gates) • LSTM • Motivation • Introduction • Code example

- 4. Review of RNN • Jack Ma is a Chinese business magnate, and his native language is ____

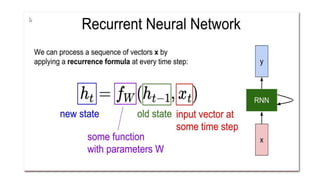

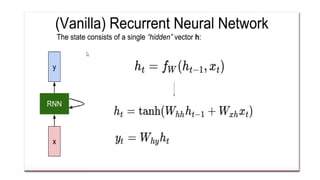

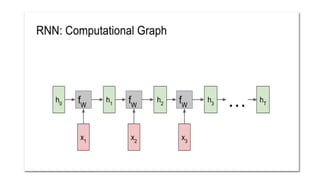

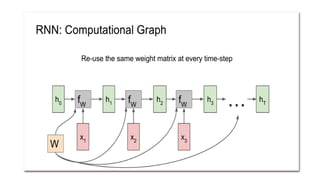

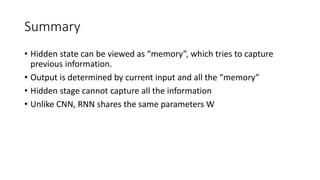

- 13. Summary • The hidden state can be viewed as “memory” that tries to capture previous information. • The output is determined by the current input and all of the “memory”. • The hidden state cannot capture all the information. • Unlike a CNN, whose layers each have their own weights, an RNN shares the same parameters W across all time steps.
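
The summary points can be illustrated with a minimal sketch of a SimpleRNN forward pass in plain Python. The update rule h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h) is the standard formulation and is assumed here, since the slide figures are not reproduced. The names `rnn_step`, `rnn_forward`, `W_xh`, `W_hh`, `b_h` and all toy values are illustrative, not taken from the slides.

```python
import math

def rnn_step(x_t, h_prev, W_xh, W_hh, b_h):
    """One step: h_t = tanh(W_xh x_t + W_hh h_prev + b_h) (assumed standard form)."""
    h_t = []
    for i in range(len(b_h)):
        pre = b_h[i]
        pre += sum(W_xh[i][j] * x_t[j] for j in range(len(x_t)))
        pre += sum(W_hh[i][k] * h_prev[k] for k in range(len(h_prev)))
        h_t.append(math.tanh(pre))
    return h_t

def rnn_forward(xs, h0, W_xh, W_hh, b_h):
    """Apply the same weights at every time step and collect the hidden states."""
    h, states = h0, []
    for x_t in xs:
        h = rnn_step(x_t, h, W_xh, W_hh, b_h)  # h carries the "memory" forward
        states.append(h)
    return states

# Toy sizes (hypothetical): 2 input features, 3 hidden units, 4 time steps.
W_xh = [[0.5, -0.3], [0.1, 0.8], [-0.6, 0.2]]
W_hh = [[0.1, 0.0, -0.2], [0.3, 0.4, 0.0], [0.0, -0.5, 0.2]]
b_h = [0.0, 0.1, -0.1]
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]

states = rnn_forward(xs, [0.0, 0.0, 0.0], W_xh, W_hh, b_h)
print(len(states), len(states[0]))  # one hidden state of size 3 per time step
```

Note that the whole history has to be squeezed into a fixed-size hidden vector, which is why the hidden state cannot capture all the information.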

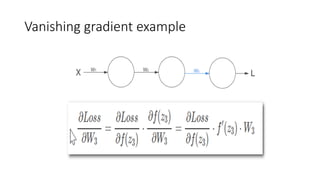

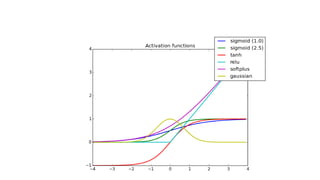

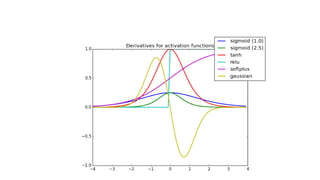

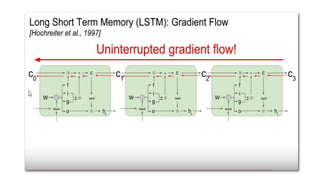

- 17. Solutions • Adding Skip Connections through Time • Removing Connections • Changing Activation Functions • LSTMs

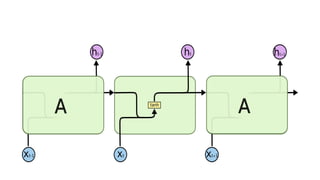

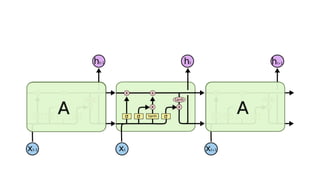

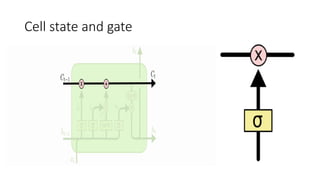

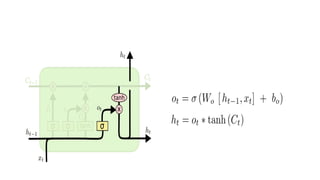

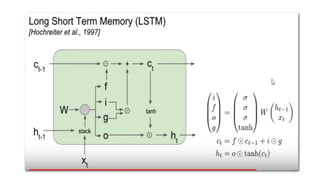

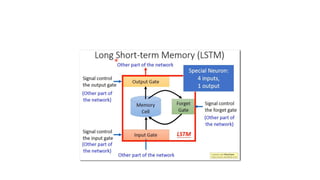

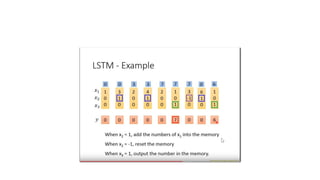

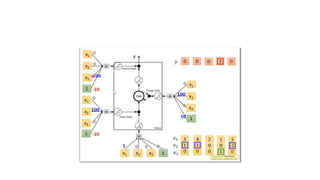

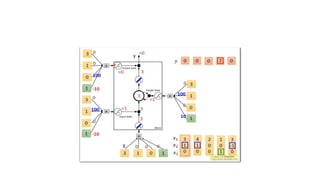

- 23. Cell state and gate

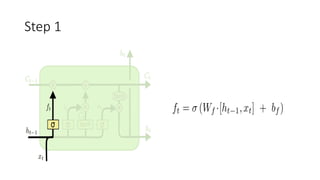

- 24. Step 1

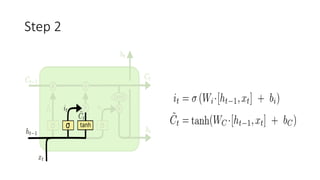

- 25. Step 2

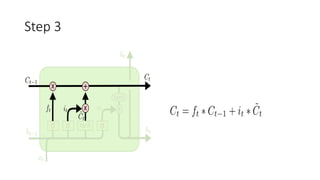

- 26. Step 3

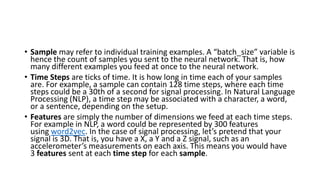
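
The step figures are not reproduced in this transcript, so the following is only a sketch of one LSTM cell update under the commonly used formulation with forget, input and output gates (the gates named in the outline). Whether the slides' Step 1 to Step 3 follow exactly this ordering is an assumption. The function `lstm_step`, the helper names, and the parameter keys (`W_f`, `b_f`, and so on) are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def affine(W, b, v):
    """Compute W v + b for a list-of-lists matrix W."""
    return [b[i] + sum(W[i][j] * v[j] for j in range(len(v))) for i in range(len(b))]

def lstm_step(x_t, h_prev, c_prev, p):
    v = h_prev + x_t  # list concatenation, i.e. [h_{t-1}, x_t]
    f = [sigmoid(z) for z in affine(p["W_f"], p["b_f"], v)]    # forget gate
    i = [sigmoid(z) for z in affine(p["W_i"], p["b_i"], v)]    # input gate
    g = [math.tanh(z) for z in affine(p["W_c"], p["b_c"], v)]  # candidate values
    o = [sigmoid(z) for z in affine(p["W_o"], p["b_o"], v)]    # output gate
    # New cell state: keep part of the old state, add part of the candidate.
    c_t = [f[k] * c_prev[k] + i[k] * g[k] for k in range(len(c_prev))]
    # New hidden state: a gated, squashed view of the cell state.
    h_t = [o[k] * math.tanh(c_t[k]) for k in range(len(c_t))]
    return h_t, c_t

# Toy setup (hypothetical): 2 hidden units, 1 input feature, all weights zero.
hidden, inputs = 2, 1
zeros = lambda rows, cols: [[0.0] * cols for _ in range(rows)]
params = {name: zeros(hidden, hidden + inputs) for name in ("W_f", "W_i", "W_c", "W_o")}
# A large forget bias and a very negative input bias make the cell "remember".
params.update(b_f=[10.0] * hidden, b_i=[-10.0] * hidden,
              b_c=[0.0] * hidden, b_o=[0.0] * hidden)

h_t, c_t = lstm_step([1.0], [0.0, 0.0], [2.0, -4.0], params)
print(c_t)  # stays very close to the previous cell state [2.0, -4.0]
```

With the forget gate near 1 and the input gate near 0, the cell state passes through almost unchanged, which is the mechanism that lets an LSTM carry information across many time steps.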

- 45. • Samples are the individual training examples. The “batch_size” variable is therefore the number of samples you send to the neural network at once, that is, how many different examples you feed it together. • Time steps are ticks of time: they measure how long each sample is. For example, a sample can contain 128 time steps, where each time step could be a 30th of a second in signal processing. In Natural Language Processing (NLP), a time step may correspond to a character, a word, or a sentence, depending on the setup. • Features are the number of dimensions fed in at each time step. For example, in NLP a word could be represented by 300 features using word2vec. In signal processing, suppose your signal is 3D: you have an X, a Y and a Z signal, such as an accelerometer’s measurements on each axis. You would then send 3 features at each time step for each sample.
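
As a small illustration of the (samples, time steps, features) layout, the sketch below slices a made-up 3-axis accelerometer recording into fixed-length samples. The sliding-window approach, the helper `make_windows`, and the readings themselves are assumptions for illustration and do not come from the slides.

```python
def make_windows(series, time_steps):
    """Slice a series of per-tick feature lists into overlapping samples."""
    return [series[s:s + time_steps] for s in range(len(series) - time_steps + 1)]

# Hypothetical recording: 6 ticks, 3 features (X, Y, Z) per tick.
signal = [
    [0.0, 0.1, 9.8],
    [0.1, 0.1, 9.7],
    [0.2, 0.0, 9.8],
    [0.1, -0.1, 9.9],
    [0.0, 0.0, 9.8],
    [-0.1, 0.1, 9.8],
]

samples = make_windows(signal, time_steps=4)
shape = (len(samples), len(samples[0]), len(samples[0][0]))
print(shape)  # (samples, time_steps, features) -> (3, 4, 3)

# A batch is just the subset of samples fed to the network at once.
batch_size = 2
batch = samples[:batch_size]
```

Recurrent layers in common deep learning libraries generally expect input in this three-dimensional arrangement, although the exact axis order can vary by library and configuration.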

- 46. • http://harinisuresh.com/2016/10/09/lstms/ • http://colah.github.io/posts/2015-08-Understanding-LSTMs/ • http://cs231n.stanford.edu/slides/2018/cs231n_2018_lecture10.pdf • https://machinelearningmastery.com/time-series-prediction-lstm-recurrent-neural-networks-python-keras/ • https://www.youtube.com/watch?v=xCGidAeyS4M • https://www.youtube.com/watch?v=rTqmWlnwz_0