Machine Learning

- 2. What is it?
  - Machine learning
  - Making a program curious!
  - Teaching it to decide on its own
  - Giving it some intelligence

  Example task: make a program that labels documents according to their contents: Sports, Technology, History, Geography, Politics, etc.

- 3. What is it? Step 1: download a lot of documents from the web. Step 2: label them! Labeling is quite a painful task, yet our program should somehow learn to distinguish between the various categories. Teach the program using examples (the training set) and make sure it makes intelligent decisions in real-world situations. Question: how many examples, and which ones?

- 4. Example: all kinds of “unlabeled” data.

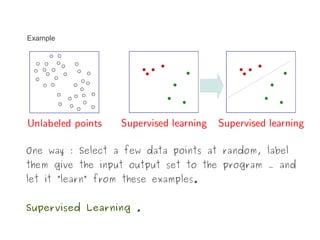

- 5. Example: One way is to select a few data points at random, label them, give the input/output pairs to the program … and let it “learn” from these examples. This is supervised learning.
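
A minimal sketch of this random-sampling baseline, assuming 2-D toy points and a nearest-centroid classifier (both chosen only for illustration). `oracle` is a hypothetical stand-in for the human who labels the data:

```python
import random

def oracle(x):
    # Hypothetical labeling rule standing in for a human annotator.
    return 1 if x[0] + x[1] > 1.0 else 0

def train_nearest_centroid(labeled):
    """Fit one centroid per class from (point, label) pairs."""
    sums, counts = {}, {}
    for (x0, x1), y in labeled:
        s = sums.setdefault(y, [0.0, 0.0])
        s[0] += x0
        s[1] += x1
        counts[y] = counts.get(y, 0) + 1
    return {y: (s[0] / counts[y], s[1] / counts[y]) for y, s in sums.items()}

def predict(centroids, x):
    # Assign x to the class whose centroid is closest (squared Euclidean distance).
    return min(centroids, key=lambda y: (x[0] - centroids[y][0]) ** 2 + (x[1] - centroids[y][1]) ** 2)

rng = random.Random(0)
pool = [(rng.random(), rng.random()) for _ in range(500)]  # unlabeled data
sample = rng.sample(pool, 20)                                # pick 20 points at random
labeled = [(x, oracle(x)) for x in sample]                   # label them
model = train_nearest_centroid(labeled)
# Accuracy measured against the oracle on the whole pool.
accuracy = sum(predict(model, x) == oracle(x) for x in pool) / len(pool)
print(f"accuracy on the pool: {accuracy:.2f}")
```

Every labeled point here was chosen blindly, which is exactly what active learning tries to improve on.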

- 6. Example: Again, select a few data points at random, label them, give the input/output pairs to the program … and let it “learn” from these examples. BUT keep in mind (memory) the locations of the other, unlabeled points. This is semi-supervised and active learning!

- 7. Example: We got better generalization this time, didn't we?

- 8. Active Learning: make the set of training examples smaller and the results more accurate.

- 9. So how do we make the training set smaller and smarter? Select the training examples that are most uncertain, instead of choosing them at random. The program asks queries of the “Oracle” (for example, a human annotator) in the form of unlabeled instances to be labeled. In this way, the active learner aims to achieve high accuracy using as few labeled instances as possible, thereby minimizing the cost of obtaining labeled data. For example, query the unlabeled point that is closest to the decision boundary, OR most uncertain, OR most likely to decrease the overall uncertainty, etc.
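
One of these strategies, “closest to the boundary”, can be sketched for a linear classifier as follows. The weights `w`, bias `b`, and candidate points are hypothetical; a real learner would take them from its current trained model:

```python
import math

def distance_to_boundary(w, b, x):
    """Unsigned distance from point x to the hyperplane w·x + b = 0."""
    score = sum(wi * xi for wi, xi in zip(w, x)) + b
    return abs(score) / math.sqrt(sum(wi * wi for wi in w))

def closest_to_boundary(w, b, unlabeled):
    # The point nearest the current linear boundary is the one the model is least sure about.
    return min(unlabeled, key=lambda x: distance_to_boundary(w, b, x))

# Hypothetical current model: boundary x0 + x1 = 1
w, b = [1.0, 1.0], -1.0
unlabeled = [(0.1, 0.2), (0.45, 0.5), (0.9, 0.8)]
print(closest_to_boundary(w, b, unlabeled))  # (0.45, 0.5)
```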

- 10. How does the learner ask queries? There are several problem scenarios in which the learner may be able to ask queries. For example:

- 11. Membership Query Synthesis: The learner may request labels for any unlabeled instance in the input space, including (and typically assuming) queries that the learner generates de novo, rather than those sampled from some underlying natural distribution. BUT sometimes the synthesized queries are quite awkward for a human to label! (*De novo means “anew”: generated freshly by the learner itself.)

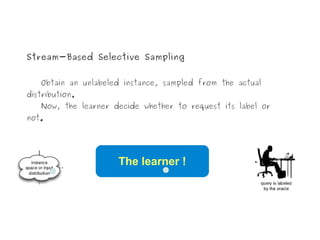

- 12. Stream-Based Selective Sampling: Obtain an unlabeled instance, sampled from the actual distribution. The learner then decides whether to request its label or discard it.

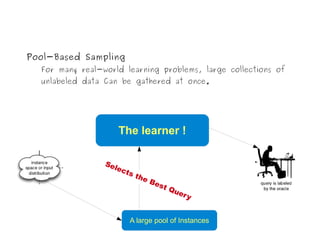
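
A sketch of the stream-based decision, assuming a fixed, hypothetical logistic model (`w`, `b`) and a hypothetical confidence `threshold`. For brevity the model is never retrained here; a real learner would update it whenever a label comes back:

```python
import math
import random

def predict_proba(w, b, x):
    """P(y = 1 | x) under a hypothetical fixed logistic model."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def selective_sampling(stream, w, b, threshold=0.7):
    """Decide, one instance at a time, whether to ask the oracle for a label."""
    queried = []
    for x in stream:
        p1 = predict_proba(w, b, x)
        confidence = max(p1, 1.0 - p1)  # probability of the most likely label
        if confidence < threshold:      # uncertain: request the label
            queried.append(x)
        # otherwise the instance is discarded without asking
    return queried

rng = random.Random(1)
stream = ((rng.random(), rng.random()) for _ in range(1000))
queried = selective_sampling(stream, w=[6.0, 6.0], b=-6.0)
print(len(queried), "of 1000 instances sent to the oracle")
```

Raising `threshold` makes the learner ask more often; lowering it saves labels but risks skipping useful instances.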

- 13. Pool-Based Sampling: For many real-world learning problems, large collections of unlabeled data can be gathered at once. (Diagram: the learner selects the best query from a large pool of instances.)

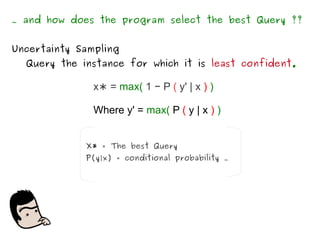
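
In pool-based sampling the learner scores every instance in the pool before choosing. This sketch uses the margin (the gap between the two most probable labels) as the informativeness score, which is one common choice among several; the probabilities are hypothetical outputs of some trained model:

```python
def margin(probs):
    """Gap between the two most probable labels; a small gap means an ambiguous instance."""
    top2 = sorted(probs, reverse=True)[:2]
    return top2[0] - top2[1]

def select_from_pool(pool_probs):
    """Score every instance in the pool and return the index of the best query."""
    return min(range(len(pool_probs)), key=lambda i: margin(pool_probs[i]))

# Hypothetical model outputs P(y | x) over three classes for a pool of four documents
pool_probs = [
    [0.90, 0.05, 0.05],
    [0.40, 0.35, 0.25],
    [0.60, 0.30, 0.10],
    [0.34, 0.33, 0.33],
]
print(select_from_pool(pool_probs))  # 3
```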

- 14. … and how does the program select the best query? Uncertainty sampling: query the instance about which the model is least confident.

  $$x^* = \arg\max_{x} \bigl(1 - P(y' \mid x)\bigr), \qquad y' = \arg\max_{y} P(y \mid x)$$

  Here $x^*$ is the best query and $P(y \mid x)$ is the conditional probability of label $y$ given instance $x$.

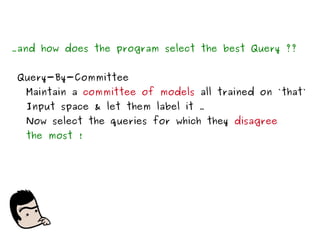
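
The least-confidence rule translates almost directly into code. The probability lists are hypothetical model outputs P(y | x) for three candidate instances:

```python
def least_confident(pool_probs):
    """Return the index x* maximizing 1 - P(y' | x), where y' is the most probable label."""
    def uncertainty(probs):
        return 1.0 - max(probs)  # 1 - P(y' | x)
    return max(range(len(pool_probs)), key=lambda i: uncertainty(pool_probs[i]))

pool_probs = [
    [0.90, 0.10],
    [0.55, 0.45],
    [0.70, 0.30],
]
print(least_confident(pool_probs))  # 1
```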

- 15. … and how does the program select the best query? Query-By-Committee: maintain a committee of models, all trained on the current labeled set but representing competing hypotheses, and let each of them label the unlabeled instances. Then select the queries on which they disagree the most!

- 16. For measuring the level of disagreement: $y_i$ ranges over all possible labelings; $V(y_i)$ is the number of “votes” that a label receives from among the committee members’ predictions; $C$ is the committee size. … and then there are a lot of other algorithms too!
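
Assuming the intended measure is vote entropy, the standard disagreement measure built from exactly these symbols, the best query is:

$$x^*_{VE} = \arg\max_{x} \; -\sum_{i} \frac{V(y_i)}{C} \log \frac{V(y_i)}{C}$$

A small sketch with a hypothetical committee of four classifiers voting on three documents (labels with zero votes contribute nothing, since 0 log 0 is taken as 0):

```python
import math
from collections import Counter

def vote_entropy(votes):
    """-sum_i V(y_i)/C * log(V(y_i)/C) over the labels that received votes."""
    C = len(votes)  # committee size
    return -sum((v / C) * math.log(v / C) for v in Counter(votes).values())

def most_disputed(committee_votes):
    """Index of the instance whose committee votes have the highest vote entropy."""
    return max(range(len(committee_votes)), key=lambda i: vote_entropy(committee_votes[i]))

# Hypothetical labels predicted by a committee of 4 models for 3 unlabeled documents
committee_votes = [
    ["Sports", "Sports", "Sports", "Sports"],      # full agreement: entropy 0
    ["Sports", "Politics", "Sports", "Politics"],  # 2-2 split
    ["Sports", "Politics", "History", "Sports"],   # 2-1-1 split
]
print(most_disputed(committee_votes))  # 2
```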

- 17. The Algorithm…
  1. Start with a large pool of unlabeled data.
  2. Select the single most informative instance to be labeled by the oracle.
  3. Add the labeled query to the training set.
  4. Re-train using this newly acquired knowledge.
  5. Go to step 2.

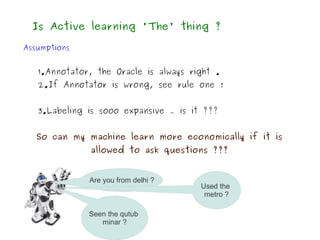
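
A toy, runnable version of this loop, assuming a 1-D pool of numbers, a hypothetical `oracle` whose true decision threshold is 0.62, and a simple threshold classifier. “Most informative” is taken here to mean closest to the current threshold:

```python
import random

def oracle(x):
    # Hypothetical annotator: the true boundary (unknown to the learner) is 0.62.
    return int(x > 0.62)

def train(labeled):
    """Fit a 1-D threshold halfway between the largest negative and smallest positive example."""
    neg = [x for x, y in labeled if y == 0]
    pos = [x for x, y in labeled if y == 1]
    lo = max(neg) if neg else 0.0
    hi = min(pos) if pos else 1.0
    return (lo + hi) / 2

def active_learning(pool, n_queries):
    pool = list(pool)  # copy, so the caller's pool is untouched
    labeled = []
    threshold = train(labeled)
    for _ in range(n_queries):
        # Select the single most informative instance: the one closest to the current threshold.
        x = min(pool, key=lambda p: abs(p - threshold))
        pool.remove(x)
        # Ask the oracle for its label and add it to the training set.
        labeled.append((x, oracle(x)))
        # Re-train with the new label, then loop back to the selection step.
        threshold = train(labeled)
    return threshold, labeled

rng = random.Random(42)
pool = [rng.random() for _ in range(1000)]
threshold, labeled = active_learning(pool, n_queries=10)
print(f"learned threshold {threshold:.3f} from {len(labeled)} labels")
```

Because each query lands near the current boundary, the learner homes in on the true threshold much like a binary search; picking points at random typically needs many more labels for the same precision.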

- 18. Is active learning 'the' thing? Assumptions: 1. The annotator (the Oracle) is always right. 2. If the annotator is wrong, see rule 1! 3. Labeling is sooo expensive … is it??? So can my machine learn more economically if it is allowed to ask questions??? Are you from Delhi? Have you used the Metro? Seen the Qutub Minar?

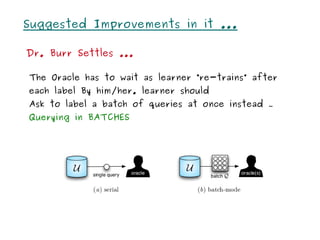

- 19. Suggested Improvements (Dr. Burr Settles): The Oracle has to wait while the learner re-trains after each label he or she provides. Instead, the learner should ask for a batch of queries to be labeled at once: querying in BATCHES.
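
A minimal sketch of batch selection, assuming hypothetical predicted probabilities and least-confidence scoring: take the k highest-scoring instances in one go, so the Oracle labels all of them before the next retraining:

```python
import heapq

def select_batch(pool_probs, k):
    """Indices of the k instances with the highest least-confidence score, 1 - max_y P(y | x)."""
    scores = [1.0 - max(probs) for probs in pool_probs]
    return heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)

pool_probs = [[0.9, 0.1], [0.55, 0.45], [0.7, 0.3], [0.51, 0.49], [0.8, 0.2]]
print(select_batch(pool_probs, k=2))  # [3, 1]
```

Picking the top k independently can return near-duplicate instances that carry the same information; batch-mode methods usually also try to keep the batch diverse.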

- 20. Suggested Improvements (Dr. Burr Settles): Oracles are not always right … they can be fatigued, their instruments can be faulty, etc. Crowdsourcing on the web: you just played a fun game (“Tag as many rock stars in the picture as you can in one minute! Challenge your friends! Like it on Facebook!”) … meanwhile, the learner was learning from your labels. Thank you, Oracle!

- 21. Suggested Improvements (Dr. Burr Settles): Goal: minimize the overall cost of training an accurate model. Simply reducing the number of labeled instances may not help, because some instances cost more to label than others. Cost-sensitive active learning approaches explicitly account for varying labeling costs while selecting queries. E.g., Kapoor et al. proposed a decision-theoretic approach that takes into account both labeling and misclassification costs, assuming that the labeling cost is proportional to the length of the instance.
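
The sketch below is not Kapoor et al.'s decision-theoretic method, only a simpler heuristic in the same spirit: divide each candidate's uncertainty by its estimated labeling cost, with cost assumed proportional to document length in words. The documents, uncertainty scores, and `cost_per_word` are hypothetical:

```python
def cost_sensitive_query(docs, uncertainty, cost_per_word=1.0):
    """Pick the document with the highest uncertainty per unit of (assumed) labeling cost."""
    def benefit_per_cost(i):
        # Assumption: labeling cost is proportional to length; max(1, ...) avoids division by zero.
        cost = cost_per_word * max(1, len(docs[i].split()))
        return uncertainty[i] / cost
    return max(range(len(docs)), key=benefit_per_cost)

docs = [
    "short ambiguous note",
    "a very long report " * 50,
    "medium length article about sports and politics",
]
uncertainty = [0.40, 0.49, 0.45]
print(cost_sensitive_query(docs, uncertainty))  # 0
```

Plain uncertainty sampling would pick the long report (index 1); once the assumed cost is accounted for, the short note scores higher per unit of annotator effort.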

- 22. Suggested Improvements (Dr. Burr Settles): If the labeling cost is not known, try to predict the real, unknown annotation cost from a few simple “meta-features” of the instances. Research has shown that such learned cost models are significantly better than simpler cost heuristics (e.g., a linear function of length).
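
A sketch of a learned cost model, assuming two hypothetical meta-features per document (word count and number of named entities) and annotation times recorded while earlier documents were labeled. It fits ordinary least squares in pure Python; the “observed” times are synthetic, generated from a known formula so the fit can be checked:

```python
def fit_linear(features, targets):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y, with an intercept."""
    X = [[1.0] + list(f) for f in features]
    n = len(X[0])
    # Augmented matrix [X^T X | X^T y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(n)]
         + [sum(row[i] * y for row, y in zip(X, targets))] for i in range(n)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(n):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * c for a, c in zip(A[r], A[col])]
    return [A[i][n] / A[i][i] for i in range(n)]

def predict_cost(beta, f):
    return beta[0] + sum(b * x for b, x in zip(beta[1:], f))

# Hypothetical meta-features per annotated document: (word count, named entities)
features = [(120, 3), (40, 1), (300, 10), (80, 0), (200, 6), (60, 4)]
# Synthetic "observed" annotation times in seconds, from a known formula
times = [2 + 0.05 * w + 3 * e for w, e in features]
beta = fit_linear(features, times)
print([round(b, 3) for b in beta])             # [2.0, 0.05, 3.0]
print(round(predict_cost(beta, (150, 2)), 2))  # 15.5
```

Unlike a fixed linear function of length, the fitted model weighs every meta-feature by how much it actually contributed to the recorded annotation time.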

- 23. Active Learning :: Practical Examples. Drug design. Unlabeled points :: a large (really large) pool of chemical compounds. Label :: active (binds to a target) or not. Getting a label :: the experiment.

- 24. Active Learning :: Practical Examples. Pedestrian detection.

- 25. Conclusion: Machines should be able to do all the things we hate … and machine learning will play a big role in achieving this goal. To make machine learning faster and cheaper, active learning is the key! Machine/active learning is a very good area for research! (And then machines will become intelligent and wage war against humanity!)

- 26. Thank you :) Do check out http://en.akinator.com