Managing Storage On-Premises and in the Cloud

- 1. Managing Storage Across Public and Private Resources • Howard Marks, Chief Scientist, DeepStorage, LLC

- 2. Your Not-So-Humble Speaker • 30 years of consulting and writing for the trade press • Now at NetworkComputing.com • Chief Scientist, DeepStorage, LLC • Independent test lab and analysts • Co-host of the Greybeards on Storage podcast • @DeepStorageNet on Twitter • Hmarks@DeepStorage.Net

- 3. Our Workshop in Three Acts • The evolution of on-site storage management • Storage in the public cloud • Managing the potential dumpster fire of hybrid cloud

- 4. Act One: The evolution of on-site storage management

- 5. The Storage Silo is Broken • Expensive • Inflexible • All servers on VMAX • Unresponsive • Weeks to provision a LUN

- 6. The Storage Administrator's Creed 1. Do not lose data 2. Do not run out of space 3. Do not lose data 4. Provide reasonable performance 5. Do not lose data 6. There is no rule number 6 7. Do not lose data

- 8. Storage Administratus Neanderthalus • Key skills: • Deriving performance from multiple spindles • Fibre Channel • Arcane to all • Just bizarre to a network engineer • Obscure, modal CLI(s)

- 9. Why Are Storage Admins Paranoid? • Storage is persistent • Therefore storage screw-ups are persistent • We also clean up after users • Most restores are about user error

- 10. Old Storage Skills Erode in Value • Flash-based arrays are smarter than any admin • IP storage replacing Fibre Channel • HCI threatens the array itself • GUIs and APIs replace CLI(s) • Platform APIs • VMware VASA • OpenStack Cinder • PowerShell • RESTful APIs for general integration

- 11. A World of Silos • Hardware doesn’t define silos • People and process do • DevOps ≈ Wheaton’s Law • Don’t be a Nixon

- 12. Application Silos • The application owner’s solution: eliminate the storage team • Oracle Exadata • VDI, HCI

- 13. The 21st Century Storage Admin • Ensures users have capacity • Large LUN/Datastore • Delegated pools • Matching performance requirements to platforms • Data protection • Every organization needs a designated paranoid

- 14. Storage Policy Based Management and VVOLs • Storage array publishes capabilities • Keys/values on storage container • Senior admin defines storage policies • Range of acceptable values for capabilities • Sets default policy for new VVOLs • VM admin chooses policy at VM creation • VVOL provisioned by VASA Provider • From resources meeting policy (diagram: VASA 2.0 Provider, vCenter Server)
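The matching step behind SPBM can be sketched in a few lines: containers publish key/value capabilities, a policy holds an acceptable range per capability, and only containers satisfying every range are eligible. This is a minimal illustration under assumptions, not VMware's implementation; the capability names `max_iops` and `replicas` and the container names are hypothetical (real VASA capabilities are defined by each array vendor).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

@dataclass
class StorageContainer:
    name: str
    capabilities: Dict[str, float]  # published by the array (hypothetical keys)

@dataclass
class StoragePolicy:
    name: str
    # capability name -> (minimum, maximum) acceptable value
    ranges: Dict[str, Tuple[float, float]] = field(default_factory=dict)

    def admits(self, container: StorageContainer) -> bool:
        """True if the container advertises every capability within range."""
        for key, (low, high) in self.ranges.items():
            value = container.capabilities.get(key)
            if value is None or not (low <= value <= high):
                return False
        return True

def compatible_containers(policy: StoragePolicy,
                          containers: List[StorageContainer]) -> List[str]:
    """Containers a VVOL created under this policy could be placed on."""
    return [c.name for c in containers if policy.admits(c)]

containers = [
    StorageContainer("flash-array", {"max_iops": 50_000, "replicas": 2}),
    StorageContainer("hybrid-array", {"max_iops": 8_000, "replicas": 1}),
]
gold = StoragePolicy("gold", {"max_iops": (20_000, 1_000_000), "replicas": (2, 3)})
print(compatible_containers(gold, containers))  # ['flash-array']
```

Expressing policies as ranges rather than exact values is what lets the senior admin define a handful of policies once while the VM admin simply picks one at creation time.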

- 15. Act Two: Managing storage in the public cloud

- 16. Cloud Storage Options • Object stores • Ephemeral storage with compute instances • Block storage for instances • Data services • SaaS storage

- 17. Object Storage: Scaling Out for Capacity • Greater scale than file systems • Shared nothing across many servers/nodes • n-way replication or erasure codes • Billions of objects • HTTP GET/PUT semantics • Objects generally immutable • Overwrite creates new version • APIs consolidating around S3 and/or Swift
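The GET/PUT and "overwrite creates a new version" semantics can be shown with a toy in-memory store. This is a hedged sketch for illustration only; the class and method names are hypothetical and it does not reproduce the S3 or Swift APIs.

```python
import hashlib
from typing import Dict, List

class VersionedObjectStore:
    """Toy in-memory object store with put/get semantics.

    Stored bytes are never modified in place: a put to an existing key
    appends a new version, and older versions stay readable.
    """

    def __init__(self) -> None:
        self._versions: Dict[str, List[bytes]] = {}

    def put(self, key: str, data: bytes) -> str:
        self._versions.setdefault(key, []).append(bytes(data))
        # MD5 content hash, similar to an S3 ETag for simple uploads
        return hashlib.md5(data).hexdigest()

    def get(self, key: str, version: int = -1) -> bytes:
        # Default to the newest version
        return self._versions[key][version]

    def version_count(self, key: str) -> int:
        return len(self._versions.get(key, []))

store = VersionedObjectStore()
store.put("reports/q1.csv", b"draft")
store.put("reports/q1.csv", b"final")  # overwrite creates a new version
print(store.get("reports/q1.csv"))     # b'final'
print(store.get("reports/q1.csv", 0))  # b'draft'
```

Because objects are whole units replaced only by new versions, there is no partial-overwrite path to coordinate across nodes, which is part of why object stores scale further than file systems.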

- 18. Cold Storage • Reduced-cost object storage for infrequently accessed data • 0.5-1¢/GB/mo typical vs 1-4¢/GB/mo for standard • Extended retrieval times and charges • AWS Glacier 0.4¢/GB/mo, 3-month minimum • Standard retrieval: 3-5 hr, 1¢/GB • Expedited retrieval: 1-5 min, 3¢/GB • Azure cool tier 1¢/GB/mo, 1¢/GB to retrieve • Google • Nearline 1¢/GB/mo, 1¢/GB to retrieve • Coldline 0.7¢/GB/mo, 5¢/GB to retrieve
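Whether a cold tier is actually cheap depends on how much you pull back out. A rough monthly comparison using the per-GB prices on this slide (circa-2017 list prices; check current pricing) might look like this. It assumes a hypothetical 10,000 GB archive with 500 GB retrieved per month and ignores minimum-duration charges (such as Glacier's 3-month minimum), request fees, and egress.

```python
# (storage ¢/GB/month, retrieval ¢/GB), from the slide
TIERS = {
    "AWS Glacier (standard retrieval)": (0.4, 1.0),
    "AWS Glacier (expedited retrieval)": (0.4, 3.0),
    "Azure cool": (1.0, 1.0),
    "Google Nearline": (1.0, 1.0),
    "Google Coldline": (0.7, 5.0),
}

def monthly_cost_dollars(stored_gb: float, retrieved_gb: float,
                         store_cents: float, retrieve_cents: float) -> float:
    """Storage plus retrieval charges for one month, in dollars."""
    cents = stored_gb * store_cents + retrieved_gb * retrieve_cents
    return cents / 100

for name, (store_c, retrieve_c) in TIERS.items():
    cost = monthly_cost_dollars(10_000, 500, store_c, retrieve_c)
    print(f"{name:35s} ${cost:,.2f}")
```

With these assumptions Coldline ($95) beats Nearline ($105) only while retrievals stay small; raise `retrieved_gb` and its 5¢/GB retrieval charge quickly erases the storage savings.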

- 19. Azure Storage Options • Blob – Object Storage • Append blobs extensible by append (logs) • Page blobs support random I/O (1 TB max size) • Block – Managed volumes leverage page blobs • Table – Key-value NoSQL • File – SMB to Azure VMs • REST API for remote access

- 20. Azure Durability • Local – 3-way replicated in a single data center • Zone – 3 copies in 2 or 3 data centers • Same region or cross-region • Block blobs only • Geo – 3 copies in local plus 3 in 2nd region • RPO ~15 min, eventually consistent, no SLA • Faster read-only access to 2nd region (extra charge)

- 21. AWS Storage Options • S3 object storage • Glacier cold storage • Elastic block storage • Many variations • Elastic File System • Linux/NFS only

- 22. Google Cloud Platform • Cloud Storage – Object • Persistent Disk – Block for VM (Multi-reader)

- 23. Comparing Block Options

| Service | IOPS/disk | Disk size | Throughput | Notes |
|---|---|---|---|---|
| Azure Premium | 500-5,000 | 128-1,024 GB | 1-200 MBps | Managed, thick provisioned |
| Azure Standard | 3-500 | 32-1,024 GB | 100 MBps | Plus transaction costs |
| Google Persistent Disk | Up to 3,000 | 1 GB-64 TB | 120-180 MBps | Perf/GB |
| Google SSD | 15,000-40,000 | Up to 64 TB | 240-800 MBps | |
| AWS EBS (GP) | Up to 3,000 | 1 GB-16 TB | 160 MBps | Perf/GB, thin provisioned |
| AWS EBS Provisioned IOPS | Up to 20,000 | 1 GB-16 TB | 320 MBps | |
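Matching performance requirements to platforms starts with filtering out options whose single-volume maximums are too low. The sketch below encodes the upper bounds from slide 23 (1 TB taken as 1,024 GB) and returns the options that could meet a requirement. It is a simplification: on "Perf/GB" services IOPS scale with disk size, so hitting the maximum may require a larger disk than you need, and prices are not considered.

```python
from typing import List, NamedTuple

class BlockOption(NamedTuple):
    name: str
    max_iops: int
    max_size_gb: int
    max_mbps: int

# Upper bounds from the block-option comparison (slide 23)
OPTIONS = [
    BlockOption("Azure Premium", 5_000, 1_024, 200),
    BlockOption("Azure Standard", 500, 1_024, 100),
    BlockOption("Google Persistent Disk", 3_000, 64 * 1_024, 180),
    BlockOption("Google SSD", 40_000, 64 * 1_024, 800),
    BlockOption("AWS EBS (GP)", 3_000, 16 * 1_024, 160),
    BlockOption("AWS EBS Provisioned IOPS", 20_000, 16 * 1_024, 320),
]

def candidates(iops: int, size_gb: int, mbps: int) -> List[str]:
    """Options whose published single-volume maximums meet every requirement."""
    return [o.name for o in OPTIONS
            if o.max_iops >= iops and o.max_size_gb >= size_gb and o.max_mbps >= mbps]

print(candidates(iops=10_000, size_gb=2_000, mbps=250))
# ['Google SSD', 'AWS EBS Provisioned IOPS']
```

When nothing qualifies, the remaining choice is to aggregate several volumes, which is where the storage-virtualization ideas that follow come in.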

- 25. Public Cloud Storage Limitations • Volume capacity and IOPS limited • Performance inconsistent • Volumes generally single mount to compute instance • Resiliency limited to region/zone • Limited snapshot capability

- 26. Is Public Cloud Where Storage Virtualization Actually Succeeds? • Use object for block/file • Aggregate simple volumes • Greater performance/capacity • Multiple VM access • Tiering • Cross region replication • Data reduction
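Aggregating simple volumes usually means striping: consecutive chunks of a logical volume rotate across several cloud volumes, so capacity and IOPS add up (Linux md RAID 0 and LVM striping work this way). A minimal sketch of the address mapping, assuming fixed-size stripes and RAID 0 style round-robin placement; the function and parameter names are illustrative.

```python
def stripe_map(logical_block: int, volumes: int, stripe_blocks: int):
    """Map a logical block to (volume index, block offset on that volume).

    Consecutive stripes of `stripe_blocks` blocks are placed round-robin
    across `volumes` underlying volumes (RAID 0 style, no redundancy).
    """
    stripe = logical_block // stripe_blocks   # which stripe the block is in
    within = logical_block % stripe_blocks    # position inside that stripe
    volume = stripe % volumes                 # round-robin volume choice
    offset = (stripe // volumes) * stripe_blocks + within
    return volume, offset

print(stripe_map(70, volumes=4, stripe_blocks=16))  # (0, 22)
```

Striping multiplies performance but also failure exposure: losing any one volume loses the aggregate, so it relies on the cloud provider's per-volume replication for resiliency.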

- 27. SSD Caching • The simplest case • Compute instances have SSD • Frequently included at no extra cost • But ephemeral • Use SSD as read cache • Linux bcache or dm-cache • Commercial products • WD FlashSoft
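Using ephemeral SSD only as a *read* cache is what makes it safe: every write lands on persistent storage first, so losing the cache costs performance, not data. The toy LRU cache below illustrates that idea under stated assumptions; it is not how bcache or dm-cache work internally, and the `backing` dict simply stands in for a persistent block volume.

```python
from collections import OrderedDict

class ReadCache:
    """Read-only LRU block cache in front of slower persistent storage.

    Writes bypass the cache and invalidate any cached copy (write-around),
    so the cache never holds the only copy of any data.
    """

    def __init__(self, backing: dict, capacity: int) -> None:
        self.backing = backing          # stands in for the persistent volume
        self.capacity = capacity        # blocks that fit on the local SSD
        self.cache = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block: int) -> bytes:
        if block in self.cache:
            self.cache.move_to_end(block)       # mark most recently used
            self.hits += 1
            return self.cache[block]
        self.misses += 1
        data = self.backing[block]
        self.cache[block] = data
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)      # evict least recently used
        return data

    def write(self, block: int, data: bytes) -> None:
        self.backing[block] = data              # persistent copy first
        self.cache.pop(block, None)             # drop any stale cached copy
```

If the instance restarts and the SSD comes back empty, the cache simply refills from the backing volume on subsequent misses.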

- 28. SoftNAS • Cached iSCSI/SMB/NFS from block and/or object • AWS, Azure marketplaces • On-site as cloud gateway • Replicates between zones/regions w/HA • Data reduction (ZFS) • Multitenant, secure • 2FA, encryption at rest, AD integration, etc.

- 29. Weka.io MatrixFS • High performance distributed file system • Makes ephemeral storage resilient • Replicates data • Spans availability zones

- 30. SaaS Storage • Most organizations’ first foothold in the cloud • Storage just part of the service • Google G Suite • Office 365 • Salesforce • Vertical apps

- 31. What Makes Sync 'n' Share Enterprise-Grade • Centralized administration • Authentication integration • 2-step or 2-factor authentication for mobile • Unique accounts for partners • Active Directory or other LDAP • Frequently via SAML connector • Reporting • Auditing • Collaboration • Manual file locking • Notes, comments

- 32. Sharing Security • Password-protected links • Prevents forwarding email w/link • Access control lists • For internal and external users • Link expiration • Remote wipe • Generally for iOS/Android • MDM/app wrappers • Watermarks, file expiration, copy prevention
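Link expiration is commonly implemented by signing the expiry time into the URL, so a recipient cannot extend it by editing the link. The sketch below shows one such scheme with an HMAC over the path and expiry; it is a hypothetical illustration, not how any product named in this deck does it, and the secret, path, and query-parameter names are made up. Password protection and ACL checks would be separate steps.

```python
import hashlib
import hmac
import time
from typing import Optional

SECRET = b"server-side-secret"  # hypothetical; real keys belong in a key vault

def _sign(path: str, expires_at: int, secret: bytes) -> str:
    message = f"{path}|{expires_at}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()

def make_link(path: str, expires_at: int, secret: bytes = SECRET) -> str:
    """Build a share link whose expiry time is covered by an HMAC signature."""
    return f"{path}?expires={expires_at}&sig={_sign(path, expires_at, secret)}"

def check_link(link: str, now: Optional[float] = None,
               secret: bytes = SECRET) -> bool:
    """Reject links that were tampered with or have expired."""
    path, _, query = link.partition("?")
    params = dict(p.split("=", 1) for p in query.split("&"))
    expires_at = int(params["expires"])
    # Constant-time comparison avoids leaking signature bytes via timing
    if not hmac.compare_digest(_sign(path, expires_at, secret), params["sig"]):
        return False
    current = time.time() if now is None else now
    return current < expires_at
```

Because the expiry is inside the signature, changing `expires=` in the URL invalidates the link rather than extending it.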

- 33. Integration With Existing Infrastructure • Application integration • Outlook • O365 • Provide access to data sources • SharePoint • NAS data • Content management • Gateway vs copied

- 34. Many Players from Different Angles • Cloud platform players • Microsoft OneDrive/Office 365, Google Drive • Mass-market Sync ‘n’ Share • Dropbox, Box • Enterprise infrastructure • Citrix ShareFile, Syncplicity • Mobile device management/security • VMware AirWatch • Open source • ownCloud

- 35. Public Cloud (SaaS) Model • Data and authentication in service • Service provider holds encryption keys • Creates an independent repository • Limited access for eDiscovery, other search

- 36. Hybrid Deployment • Shifts some functions on-premises • Authentication alone • Data repositories • Provides flexibility, greater control

- 37. On-Premises • Tightest control • Simple integration with infrastructure • Data available for eDiscovery, etc. • Requires most IT time/management

- 38. Sync 'n' Share vs File Services • File services won’t go away • Filer provides an authoritative location • File and record locking manage access • Desktop grows from 128GB to ? • VDI user profiles require them

- 39. Truly Unified Storage • Sync ‘n’ share as another protocol for NAS • One authoritative repository • Local users access via SMB/NFS • Remote via Sync ’n’ Share, WebDAV • Leverage SMB/NFS NAS infrastructure • Anti-virus, archiving, etc. • Multi-site sync

- 40. SaaS Backup • SaaS providers and enterprises both back up • Providers back up to protect data against system failure • Enterprises also back up • To satisfy retention mandates • The archive • To protect against user error • Explicit • Ransomware

- 41. Select SaaS Backup Solutions • Datto Backupify • Office 365, Google Apps, Salesforce • EMC Spanning • Veeam Backup for Office 365 • Backs up to Veeam repository (VM) • Exchange only, no OneDrive, SharePoint

- 42. Act Three: Connecting on-site storage to the cloud

- 43. Cloudbursting • Cloud=elastic compute • We need 4X web servers in Dec. • So spin up in the cloud • Web servers send SQL query • On-Site database • Latency, slow, Fail

- 44. Data Has Gravity • Applications are small, data is large • As data accumulates it attracts applications • Latency determines application performance • But applications share data • Bursting to the cloud is therefore problematic
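Why bursting web servers to the cloud against an on-site database fails comes down to simple arithmetic: each page waits on its SQL round trips, so per-query latency multiplies. The figures below are hypothetical (50 sequential queries per page, 1 ms of database work each, 0.2 ms round trip inside the data center vs 30 ms to a cloud region), chosen only to show the shape of the problem.

```python
def page_time_ms(queries: int, rtt_ms: float, db_time_ms: float) -> float:
    """Time one page spends waiting on sequential SQL round trips."""
    return queries * (rtt_ms + db_time_ms)

# Hypothetical workload: 50 sequential queries, 1 ms of DB work per query
on_site = page_time_ms(50, rtt_ms=0.2, db_time_ms=1.0)   # web tier next to the DB
burst = page_time_ms(50, rtt_ms=30.0, db_time_ms=1.0)    # web tier in a cloud region
print(f"on-site {on_site:.0f} ms, burst to cloud {burst:.0f} ms")
# on-site 60 ms, burst to cloud 1550 ms
```

Adding more cloud web servers does nothing for this number; only moving the data closer (or making it appear closer) does, which is the argument for data gravity.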

- 45. Cloud Storage Gateways • Provide iSCSI/File interface to object • Cache for performance • 1st Gen primarily about backup • StorSimple (Microsoft) • TwinStrata (EMC) • NetApp AltaVault
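A gateway that exposes an iSCSI volume on top of an object store has to translate block I/O into objects. A common approach is to cut the volume into fixed-size chunks, one object per chunk; the sketch below assumes a hypothetical 4 MiB chunk and a made-up `volume/chunk-NNNNNNNN` key scheme, and only computes which objects (and byte ranges) an I/O touches.

```python
from typing import List, Tuple

CHUNK = 4 * 1024 * 1024  # hypothetical 4 MiB object size

def chunks_for_io(volume: str, offset: int, length: int,
                  chunk: int = CHUNK) -> List[Tuple[str, int, int]]:
    """(object key, start within object, byte count) for one block I/O."""
    pieces = []
    end = offset + length
    while offset < end:
        index = offset // chunk              # which chunk/object
        start_in_chunk = offset % chunk      # where the I/O starts inside it
        take = min(chunk - start_in_chunk, end - offset)
        pieces.append((f"{volume}/chunk-{index:08d}", start_in_chunk, take))
        offset += take
    return pieces
```

Since objects are generally immutable, a small write into an existing chunk means rewriting the whole object, which is one reason gateways put a local cache in front and coalesce writes before uploading.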

- 46. Storage Vendors Say Cloud • VSA in cloud • Can replicate/dedupe • Array adjacent to cloud • NetApp Private Storage • Tier to object • Isilon CloudPools • EMC Cloud Tiering Appliance • Many others • Sync to object • NetApp CloudSync
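Tier-to-object features such as those listed above start by choosing which files are cold enough to move. A minimal sketch of that selection step, assuming a simple "not accessed in N days" rule; it is hypothetical and does not reflect the policy engine of Isilon CloudPools or any other product, and it leaves out the actual move.

```python
import os
import time
from typing import List, Optional

SECONDS_PER_DAY = 86_400

def tiering_candidates(root: str, min_idle_days: float,
                       now: Optional[float] = None) -> List[str]:
    """Files under `root` not accessed in `min_idle_days` days."""
    current = time.time() if now is None else now
    cutoff = current - min_idle_days * SECONDS_PER_DAY
    stale = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # st_atime can be coarse on relatime/noatime mounts; some
            # policies use modification time (st_mtime) instead
            if os.stat(path).st_atime < cutoff:
                stale.append(path)
    return sorted(stale)
```

Keying on last access rather than creation date keeps frequently read but old files on the NAS, which is usually what users expect.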

- 47. Marketecture

- 48. Cloud File Systems • SMB/NFS gateways • Multiple locations • Cloud instances • Data in object store • Users see common file system • “Unlimited” scale • Snapshots replace backup*

- 49. Cloud File System Providers • Nasuni • SaaS – gateway and storage $/GB • Mobile apps • Panzura • Real time file/record locking • Avere • High performance

- 50. We Need Data AntiGravity • Data acting like it’s in two places at once • Relies on: • Caching • Ideally with pre-fetch/push • Shared snapshots • High-bandwidth network • May avoid transfer/egress costs

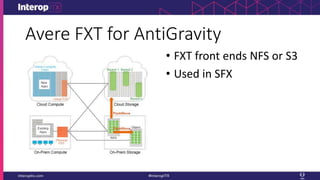

- 51. Avere FXT for AntiGravity • FXT front ends NFS or S3 • Used in SFX

- 52. ClearSky Data CloudEdge • The love child of primary storage and the cloud • On-Site writeback caching appliance, iSCSI • 10Gbps MetroEthernet to PoP • PoP 2nd layer write through cache • Public backing store (S3)

- 53. The ClearSky architecture (diagram: SQL, Splunk, and VM workloads and end users in the customer data center reach a CloudEdge appliance over iSCSI/FC on the customer SAN; 2x EPL links connect it to primary and secondary metro PoPs, backed by the public cloud)

- 54. ClearSky CloudEdge Benefits • RPO zero: all data is in the PoP before ACK • Unlimited free snapshots and clones • Multiple locations in a metro act like one array • Including colo or virtual CloudEdge • Very high bandwidth/low latency • Clone an on-premises DB, instantly available in the cloud
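"RPO zero" follows from when the write is acknowledged: the on-site appliance caches the write, but only ACKs the host once the metro PoP holds a copy, so losing the appliance loses no acknowledged data. The sketch below models just that rule under assumptions; the class names are hypothetical, plain dicts stand in for the cache and PoP, and the PoP-to-public-cloud path is omitted.

```python
class UnreachablePoP(dict):
    """Stand-in for a PoP whose metro link is down."""
    def __setitem__(self, key, value):
        raise ConnectionError("metro link down")

class EdgeAppliance:
    def __init__(self, pop: dict) -> None:
        self.cache = {}   # on-site cache
        self.pop = pop    # metro PoP copy

    def write(self, block: int, data: bytes) -> bool:
        """Return True (ACK) only once the PoP holds a copy of the data."""
        self.cache[block] = data
        try:
            self.pop[block] = data   # synchronous hop over the metro link
        except ConnectionError:
            return False             # no ACK; the host must retry
        return True

    def read(self, block: int) -> bytes:
        # Serve from the local cache; fall back to the PoP on a miss
        return self.cache[block] if block in self.cache else self.pop[block]

pop = {}
edge = EdgeAppliance(pop)
print(edge.write(7, b"row"))                           # True
print(EdgeAppliance(pop).read(7))                      # b'row' via a fresh appliance
print(EdgeAppliance(UnreachablePoP()).write(1, b"x"))  # False
```

The cost of this design is that every write pays the metro round trip, which is why it depends on the very high bandwidth, low latency links the slide describes.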

- 55. Summing Up • Storage management is becoming data management • Hardware runs itself • Someone must still be the guardian • Cloud storage provides basics • Storage virtualization the solution? • Connections getting easier • Latency remains an issue

- 56. Tips • Start with low hanging fruit • Backup to cloud • Archive • DRaaS • Auto-tier from NAS • Caching can provide anti-gravity

Editor's Notes

- Glacier does WORM, but not SEC 17a-4; plus transfer-out costs of 5-9¢/GB if data leaves AWS

- Snapshots in minutes not hours

- Azure P30 $135/mo; S30 $20.48/mo; standard unmanaged: 5¢ LRS, 6-12¢ GRS and RA-GRS; transactions 3.6¢ per million. Google has a local NVMe SSD option: 680K read / 360K write IOPS; 1TB SSD $170/mo

- Note: Box for networks only provides R/O access to external users who are not Box subscribers

- Spanning is now owned by Insight Partners (private equity)


- First described by Dave McCrory in 2010