MySQL Replication Performance Tuning for Fun and Profit!

- 1. MySQL Replication Performance Tuning for Fun and Profit! 26th September 2017. Vítor Oliveira (vitor.s.p.oliveira@oracle.com), Senior Performance Engineer. Copyright © 2017, Oracle and/or its affiliates. All rights reserved.

- 2. Safe Harbor Statement: The following is intended to outline our general product direction. It is intended for information purposes only, and may not be incorporated into any contract. It is not a commitment to deliver any material, code, or functionality, and should not be relied upon in making purchasing decisions. The development, release, and timing of any features or functionality described for Oracle’s products remains at the sole discretion of Oracle. Dublin, 26th September 2017.

- 3. Contents
  - 1 Replication Performance
  - 2 Asynchronous Replication
  - 3 Group Replication
  - 4 Impact of the latest improvements
  - 5 Conclusion
  - This session is about Asynchronous and Group Replication performance and the options that may help support the most demanding workloads. Some of the latest development efforts to improve the performance of MySQL replication will also be presented.

- 4. 1 Replication performance
  - MySQL Replication overview
  - Replication performance
  - Replication delay

- 5. 1.1 MySQL Replication Overview (1/3)
  - Replication: MySQL Replication transfers database changes from one server to its replicas.
  - Starts when transactions are prepared: replication preserves the data changes in a binary log file on the master just before the transactions are ready to commit.
  - Transfers database changes in a binary log:
    - the binary log file contains an engine-agnostic representation of the data changes;
    - it is sent to replicas whenever they request it, or can be used for backup, point-in-time recovery and integration purposes.

- 6. 1.1 MySQL Replication Overview (2/3). [Diagram: a user application sends "INSERT..." to Server A, which applies it to its DB and records it in its binary log; the change travels over the network to the relay log on Server B, which applies it to its DB and records it in its own binary log.]

- 7. 1.1 MySQL Replication Overview (3/3)
  - Statement- vs row-based binary logs: the binlog can either carry the statements issued by the user or the deterministic row changes resulting from those statements.
  - Are asynchronously applied:
    - the slaves stream the binary log from the master to an intermediate file on the slaves, the relay log, which is discarded once it is no longer needed;
    - workloads are applied asynchronously using a best-effort approach; the applier competes for resources with other workloads on the slaves.
  - Using single or multi-threaded schedulers: the slaves scan the relay log and apply the data changes to the database using one of the available appliers: single-threaded, DATABASE or LOGICAL_CLOCK.

- 8. 1.2 Replication performance (1/3)
  - Performance: performance can be many things; let's focus on concrete metrics like transaction throughput and latency (efficiently commit as many transactions per second/hour as possible, with the smallest delay between the moment a transaction starts and the moment all its effects are seen).
  - Two metrics for replication throughput:
    - sustained throughput: the rate the system as a whole can withstand without having some servers lagging behind others in the long run;
    - peak throughput: the maximum transaction rate that clients can obtain from the client-facing servers.

- 9. 1.2 Replication performance (2/3)
  - Peak vs sustained throughput:
    - peak and sustained capacity are similar when the slaves deliver enough throughput to handle the masters' workload;
    - but some workloads are very demanding from the replication point of view, and may be harder for the slaves to process.
  - The peak-to-sustained throughput gap:
    - is an extra margin that can be used for short periods;
      - someone posts a link to a site on Slashdot, or the site is referenced in the news;
      - for some time that site has more visitors than usual, then returns to normal;
    - when demand regularly exceeds sustained throughput, it's time to resize the underlying system!

- 10. 1.2 Replication performance (3/3)
  - An example: in Sysbench RW the slave delivers the same throughput as the master. But when reads are stripped from those transactions, the extra work the slave must perform becomes visible after a certain level of throughput. (Note that when replaying, GR already has the transactions ordered by the network, which justifies the lower peak throughput.)
  - [Chart: "Peak vs Sustained Throughput: Workload with reads (balanced)"; Sysbench RW transactions/second vs number of client threads (8 to 256); series: Asynchronous Replication PEAK, Group Replication PEAK, Asynchronous Replication SUSTAINED, Group Replication SUSTAINED.]
  - [Chart: "Peak vs Sustained Throughput: Write-only workload"; Sysbench OLTP RW write-only transactions/second vs number of client threads (8 to 256); same four series.]

- 11. 1.3 Replication delay (1/3)
  - A replicated transaction can be divided into several execution times:
    - the time to execute the transaction until it is ready to be replicated (storage engine);
    - the time to extract and transfer the transaction to a remote master (data changes extraction, transfer over the network, store remotely);
    - the time to read and schedule remote execution (read relay log, wait for dependencies, wait for an available worker);
    - the time to execute the transaction remotely (storage engine).
  - [Diagram: asynchronous replication pipeline. Front-end Server A (INSERT..., binlog sync; skip-log-bin, sync_binlog=0 or sync_binlog=1), Dump Thread, IO Thread, relay log on back-end Server B, SQL Thread or MTS workers W1...Wn, binlog sync (sync_binlog=0 or sync_binlog=1); the diagram marks the client-visible latency and the replication latency.]

- 12. 1.3 Replication delay (2/3). [Diagram: the same pipeline with the servers connected over the network by Group Replication ("gr"). Front-end Server A (INSERT..., binlog sync; skip-log-bin, sync_binlog=0 or sync_binlog=1), relay log on back-end Server B, SQL Thread or MTS workers W1...Wn, binlog sync (log-slave-updates=OFF, sync_binlog=0 or sync_binlog=1); the diagram marks the client-visible latency and the replication latency.]

- 13. 1.3 Replication delay (3/3)
  - The replication delay should not grow over time:
    - that holds while demand remains below the sustained capacity;
    - if the demand exceeds the sustained capacity, the replication delay will increase over time;
      - the workload needs to return to manageable levels before exhausting the buffering resources;
      - if peaks are not interleaved with low-demand periods, the slave may be unable to catch up with the backlog.
  - The minimum replication delay is the time it takes to repeat the transaction on the slave: transactions don't start to replicate until they are almost ready to commit (prepared) on the master.
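
The relationship between demand, sustained capacity and backlog on this slide can be illustrated with a little arithmetic. The following is a minimal sketch, not a model of MySQL itself: the function name `backlog_over_time` and the demand figures are illustrative assumptions, and the sustained capacity is treated as a constant.

```python
def backlog_over_time(demand_per_second, sustained_tps):
    """Return the replica backlog (in transactions) after each second.

    demand_per_second: transactions committed on the master in each second.
    sustained_tps: transactions the replica can apply per second
                   (assumed constant for this sketch).
    """
    backlog = 0
    history = []
    for demand in demand_per_second:
        # The backlog grows by whatever the replica could not apply this
        # second and shrinks, never below zero, when demand is lower.
        backlog = max(0, backlog + demand - sustained_tps)
        history.append(backlog)
    return history


# Made-up numbers: a 3-second peak at 12,000 TPS followed by 3 quieter
# seconds at 8,000 TPS, on a replica that sustains 10,000 TPS.
print(backlog_over_time([12000] * 3 + [8000] * 3, 10000))
```

In this sketch the backlog only drains because the peak is followed by a quieter period; with demand held above the sustained rate it keeps growing, which is the situation the slide warns about.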

- 14. 2 Asynchronous Replication
  - The replication applier
  - Executing transactions in parallel
  - Replication applier options

- 15. 2.1 The replication applier
  - The replication applier, or slave applier, takes the database changes from the master and executes them locally on each slave;
  - It reads the relay log and applies the transaction events as they are read, either directly or using auxiliary worker threads;
  - The applier throughput determines the maximum sustained capacity of a replicated system.

- 16. 2.1 The replication applier
  - Applier schedulers:
    - in MySQL 5.5 and below the applier was single-threaded, which started to show performance limitations on write-intensive workloads when processors started having several execution cores;
      - it is still useful for non-write-intensive workloads;
    - MySQL 5.6 introduced the first multi-threaded applier to parallelize transactions working on different databases;
      - it achieves good scalability on workloads with many schemas.

- 17. 2.1 The replication applier
  - General purpose parallel scheduler:
    - MySQL 5.7 started parallelizing transactions working within the same database with the introduction of the LOGICAL_CLOCK scheduler;
    - the LOGICAL_CLOCK scheduler achieves good scalability by exploiting intra-schema parallelism, using dependency tracking on the master;
    - Group Replication and MySQL 8.0 use the LOGICAL_CLOCK scheduler with transaction writeset dependency tracking.
  - Options: --slave-parallel-type=LOGICAL_CLOCK --slave-parallel-workers=<n threads>

- 18. 2.2 Executing transactions in parallel
  - Tracking independent transactions: with LOGICAL_CLOCK, transactions are allowed to execute in parallel on the slave if the master considered them independent, or non-conflicting, when they were executed there.
  - How it works:
    - the dependency information is transferred from the master to the slaves using a pair (last_committed, sequence_number) for each transaction in the binlog;
    - a transaction is only scheduled to run on the slave if the transaction whose sequence_number equals this transaction's last_committed has already been committed;
    - no subsequent transactions are scheduled to run until that last_committed condition is fulfilled for that particular transaction.
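
The scheduling rule on this slide can be sketched in a few lines of Python. This is only an illustration of the (last_committed, sequence_number) rule, not the server's scheduler: the function name `schedule_waves` is hypothetical, and the sketch assumes that every transaction in a wave commits before the next wave starts.

```python
def schedule_waves(transactions):
    """Group transactions into waves that could run concurrently on the slave.

    transactions: (last_committed, sequence_number) pairs in binlog order.
    Simplifying assumption: all transactions of a wave commit before the
    next wave starts, so a dependency is unmet only while it belongs to
    the wave currently being built.
    """
    waves = []
    current = []
    for last_committed, sequence_number in transactions:
        if last_committed in current:
            # The transaction this one depends on has not committed yet:
            # scheduling stalls until the current wave is done.
            waves.append(current)
            current = []
        current.append(sequence_number)
    if current:
        waves.append(current)
    return waves


# Made-up binlog excerpt: T2, T3 and T4 only depend on T1, and so on.
binlog = [(0, 1), (1, 2), (1, 3), (1, 4), (3, 5), (3, 6), (6, 7)]
print(schedule_waves(binlog))  # [[1], [2, 3, 4], [5, 6], [7]]
```

The stall on an unmet dependency also blocks every later transaction, which mirrors the last bullet of the slide: nothing after the waiting transaction is scheduled until its condition is fulfilled.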

- 19. 2.2.1 Lock-based dependency tracking (1/5)
  - Lock or group commit based tracking:
    - initially, transactions were considered independent in LOGICAL_CLOCK if they were prepared within the same group commit (WL#6314);
    - MySQL 5.7.2 improved that to allow two transactions to execute in parallel if they hold all their locks at the same time (WL#7165);
      - that widened the parallelization window to include transactions beyond the group commit boundaries, and removed the serialization between commit groups for a more stable parallel execution.

- 20. 2.2.1 Lock-based dependency tracking (2/5). [Diagram: transactions T1 to T8 from sessions A, B and C plotted against time (step 1, step n to step n+4) and database address space.]

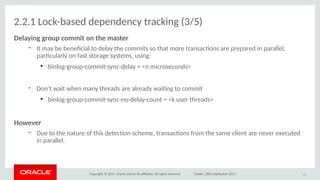

- 21. 2.2.1 Lock-based dependency tracking (3/5)
  - Delaying group commit on the master:
    - it may be beneficial to delay the commits so that more transactions are prepared in parallel, particularly on fast storage systems, using:
      - binlog-group-commit-sync-delay = <n microseconds>
    - don't wait when many threads are already waiting to commit:
      - binlog-group-commit-sync-no-delay-count = <k user threads>
  - However: due to the nature of this detection scheme, transactions from the same client are never executed in parallel.
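
How the two options on this slide interact can be sketched as a simple decision function. This is a hedged illustration, not the server's code: the function `should_sync_now` and its parameters are hypothetical names, and a count of 0 is treated here as "no early exit".

```python
def should_sync_now(elapsed_us, waiting_transactions, sync_delay_us, no_delay_count):
    """Decide whether a delayed group commit should stop waiting and sync.

    sync_delay_us stands for binlog-group-commit-sync-delay and
    no_delay_count for binlog-group-commit-sync-no-delay-count.
    Assumption of this sketch: no_delay_count == 0 disables the early exit.
    """
    if elapsed_us >= sync_delay_us:
        return True  # the full delay has elapsed
    if no_delay_count > 0 and waiting_transactions >= no_delay_count:
        return True  # enough threads are already waiting to commit
    return False


# Made-up settings: wait up to 500 microseconds, or until 16 threads wait.
print(should_sync_now(100, 4, 500, 16))   # False: keep collecting transactions
print(should_sync_now(100, 16, 500, 16))  # True: enough waiters, sync early
print(should_sync_now(500, 4, 500, 16))   # True: delay expired
```

The early exit keeps the added commit latency bounded when the master is already busy, while the delay gives a lightly loaded master time to gather a larger group.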

- 22. 2.2.1 Lock-based dependency tracking (4/5). [Diagram: the same transactions T1 to T8 from sessions A, B and C plotted against time (step 1, step n to step n+3) and database address space, with T7 shown at two positions.]

- 23. 2.2.1 Lock-based dependency tracking (5/5)
  - Drawbacks of lock-based dependency tracking:
    - the commit delays increase transaction latency on the master;
    - they may also decrease the throughput of the master when the number of clients is not sufficient to fully exploit the available capacity;
    - and parallelism is reduced when going through intermediate masters:
      - the number of applier threads on the slave is usually smaller than the number of user threads, and transactions from the same thread are never parallelized;
      - parallelism lost this way is not recovered.

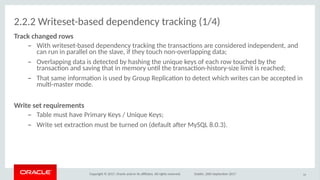

- 24. 2.2.2 Writeset-based dependency tracking (1/4)
  - Track changed rows:
    - with writeset-based dependency tracking, transactions are considered independent, and can run in parallel on the slave, if they touch non-overlapping data;
    - overlapping data is detected by hashing the unique keys of each row touched by the transaction and keeping those hashes in memory until the transaction-history-size limit is reached;
    - that same information is used by Group Replication to detect which writes can be accepted in multi-master mode.
  - Write set requirements:
    - tables must have primary keys / unique keys;
    - write set extraction must be turned on (default after MySQL 8.0.3).
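
The idea of hashing row keys and remembering the last writer of each key can be sketched as follows. This is a simplified illustration under stated assumptions, not the server's implementation: the function name `track_writesets`, the key strings, the use of SHA-256 and the `history_size` default are all choices made for this example.

```python
import hashlib


def track_writesets(transactions, history_size=1000):
    """Assign (last_committed, sequence_number) pairs from row keys.

    transactions: list of sets of row-key strings, in commit order.
    history_size: maximum number of key hashes remembered
                  (arbitrary default for this sketch).
    """
    history = {}        # key hash -> sequence_number of the last writer
    history_start = 0   # transactions up to here count as dependencies
    result = []
    for sequence_number, keys in enumerate(transactions, start=1):
        hashes = {hashlib.sha256(key.encode()).hexdigest() for key in keys}
        # Depend on the most recent earlier transaction that touched any of
        # the same keys, or on the point where the history was last reset.
        last_committed = max(
            [history_start] + [history[h] for h in hashes if h in history]
        )
        result.append((last_committed, sequence_number))
        for h in hashes:
            history[h] = sequence_number
        if len(history) > history_size:
            # History is full: forget it and make later transactions
            # depend on this one.
            history.clear()
            history_start = sequence_number
    return result


# Made-up workload: only the third transaction touches a row written before.
workload = [{"t1.pk=1"}, {"t1.pk=2"}, {"t1.pk=1"}, {"t1.pk=3"}]
print(track_writesets(workload))  # [(0, 1), (0, 2), (1, 3), (0, 4)]
```

In this sketch a small history limits how far back independence can be proven: once the history is reset, every later transaction depends on the reset point even if it touches unrelated rows.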

- 25. 2.2.2 Writeset-based dependency tracking (2/4). [Diagram: transactions T1 to T8 from sessions A, B and C plotted against time (step 1, step n to step n+4) and database address space, with the write set history size marked; binlog-transaction-dependency-tracking=WRITESET.]

- 26. 2.2.2 Writeset-based dependency tracking (3/4)
  - Limitations (falls back to commit order):
    - foreign-key constraints are unsupported;
    - DDL statements do not have a write set.
  - Troubles with slave commit history:
    - applying transactions in parallel may result in a different commit history than that on the originating server;
    - slave_preserve_commit_order may be useful in cases where the commit order is required;
    - WRITESET_SESSION can be used if session order is sufficient.

- 27. 2.2.2 WRITESET_SESSION dependency tracking. [Diagram: transactions T1 to T8 from sessions A, B and C plotted against time (step 1, step n to step n+4) and database address space, with the write set history size marked; binlog-transaction-dependency-tracking=WRITESET_SESSION.]

- 28. 2.2.3 Dependency tracking impact
  - [Chart: "Applier Throughput: Sysbench Update Index"; updates/second applied on the replica vs number of clients on the master (1 to 256); series: COMMIT_ORDER, WRITESET, WRITESET_SESSION.]
  - Summary: WRITESET tracking allows a single-threaded workload to be applied in parallel. It also delivers the best, and almost constant, throughput at any concurrency level. WRITESET_SESSION depends on the number of clients on the master, but it still delivers higher throughput than COMMIT_ORDER at low numbers of clients.

- 29. 2.3 Replication applier options
  - Focusing on LOGICAL_CLOCK, let's talk about the performance implications of some options:
    - number of worker threads
    - preserve commit order on slave
    - durable vs non-durable settings
    - log slave updates (attached)
    - binary log format (attached)

- 30. 2.3.1 Number of worker threads
  - The applier performance is bound to the number of workers:
    - slave-parallel-workers=<number of workers>
  - The parallel applier benefits from two main factors:
    - parallel execution: multiple transactions can execute in parallel on the CPU, which scales between 5x and 10x on a modern machine;
    - IO latency hiding: storage-bound workloads benefit from more workers to hide the storage latency associated with writing and syncing to disk.
  - The effectiveness depends particularly on:
    - the workload characteristics and
    - the dependency tracking on the master (next session).

- 31. 2.3.2 Number of workers and preserve commit-order
  - With the parallel applier, transactions are not guaranteed to commit on the slaves in the same order as on the master;
  - To force that, the option --slave-preserve-commit-order=ON must be used.
  - [Chart: "Slave Applier Throughput: Sysbench RW"; transactions/second applied on slave vs number of applier threads (0 to 64); series: SPCO=OFF, SPCO=ON.]
  - [Chart: "Slave Applier Throughput: Sysbench Update Index"; updates/second applied on slave vs number of applier threads (0 to 64); series: SPCO=OFF, SPCO=ON.]

- 32. 2.3.3 Number of worker threads and durability
  - [Chart: "Slave Applier Throughput Varying Applier Workers: Sysbench RW"; transactions/second applied on slave vs number of applier workers (16, 24, 32, 64) for Sysbench OLTP RW and Sysbench Update Index; series: NON-DURABLE, DURABLE.]
  - Observations: non-durable settings show a mostly constant benefit once enough threads are available (16+). Durable settings can benefit from more threads, as they hide sync latency.

- 33. 2.3.4 Durable vs non-durable settings
  - To handle crashes without data loss:
    - the database and binary log must be made durable using sync-binlog=1 and innodb-flush-log-at-trx-commit=1;
    - that comes at the cost of having to wait for a sync to disk to complete.
  - [Chart: "Durable vs Non-durable Setting: Sysbench RW Sustained Throughput"; transactions/second vs number of client threads (8 to 256); series: Asynch. Replication NON-DURABLE, Group Replication NON-DURABLE, Asynch. Replication DURABLE, Group Replication DURABLE.]
  - [Chart: "Durable vs Non-durable Setting: Sysbench Update Index Sustained Throughput"; updates/second vs number of client threads (8 to 256); same four series.]

- 34. 3 Group Replication
  - Transaction flow
  - Performance enhancing
    - Group communication
    - Certification
    - Multiple group writers
    - Remote applier
  - Flow-control

- 35. Group Replication: “Single primary/multi-master update everywhere replication plugin for MySQL with built-in automatic distributed recovery, conflict detection and group membership.”

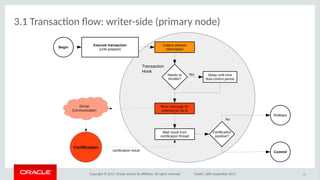

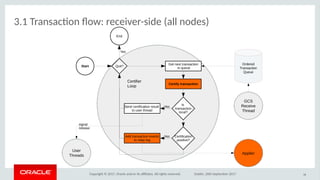

- 36. 3.1 Transaction flow
  - Execute locally: Group Replication starts replicating a transaction when it is ready to commit, just before it is written to the binary log.
  - Send in-order to all members: at that point, transactions are broadcast to the network using a group communication protocol (Paxos, similar to the Mencius variant).
  - Certify independently: all members receive the transactions in order and execute a deterministic certification algorithm to check if the received transaction can be applied safely.
  - Apply asynchronously:
    - on remote members, successfully certified transactions are written to the relay log and asynchronously applied by the members, just as happens for other replication methods;
    - on the local member, the prepared transaction is committed to the storage engine.

- 37. 3.1 Transaction flow: writer-side (primary node). [Flowchart: transaction hook. Begin; execute transaction (until prepare); needs to throttle? (yes: delay until next flow-control period); collect writeset information; send message for ordering by GCS (group communication); wait for the result from the certification thread; certification positive? If yes, commit; if no, rollback.]

- 38. 3.1 Transaction flow: receiver-side (all nodes). [Flowchart: certifier loop. The GCS receive thread feeds the ordered transaction queue. Start; quit? (yes: end); get next transaction in queue; certify transaction; is transaction local? If yes, send the certification result to the user thread (signal release); otherwise, if certification is positive, add the transaction events to the relay log for the applier.]

- 39. 3.2 Performance enhancing
  - Network bandwidth and latency:
    - Group Replication sends messages to all members of the group and waits for the majority to agree on a specific order of transactions;
    - that consumes network bandwidth and adds at least one network RTT to the transaction latency.
  - Certification throughput:
    - transactions are certified in the agreed message delivery order and written to the relay log by a single thread;
    - that can become a bottleneck if the certification rate is very high or if relay log writes are slow.
  - Remote transaction applier throughput:
    - remote transactions can be applied by the single-threaded or the multi-threaded applier, which benefits from WRITESET-based dependency tracking (where it first appeared).

- 40. 3.2.1 Network bandwidth and latency (1/2)
  - Options to reduce bandwidth usage:
    - group_replication_compression_threshold=<size: 100>
    - binlog_row_image=MINIMAL
  - Reduce latency with busy waiting before sleeping:
    - group_replication_poll_spin_loops=<num_spins>

- 41. 3.2.1 Network bandwidth and latency (2/2)
  - Concurrent connections hide network latency increase: the group communication protocol used by Group Replication is very effective on low-latency networks. It also supports high-latency networks, although a higher number of concurrent client threads is required to achieve the same performance.
  - [Chart: "Effects of network round-trip latency: Sysbench RW"; throughput (TPS) vs number of client threads (8 to 1024); series: 3 nodes in 10Gbps LAN; 2 nodes in 10Gbps LAN, 1 node @ 7ms, 200Mbps.]
  - [Chart: "Asynchronous vs Group Replication Sustained Throughput"; Sysbench Update Index updates/second vs number of client threads (8 to 256); series: Asynchronous Replication (WS), Group Replication.]
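
Why more client threads compensate for a longer round trip can be shown with a back-of-the-envelope model. This is a sketch under simplifying assumptions that are not in the slides: each client runs one transaction at a time, each transaction costs its local execution time plus one network round trip, and nothing else limits throughput. The function names and the timings are hypothetical.

```python
def throughput_upper_bound(client_threads, local_time_s, network_rtt_s):
    """Upper bound on transactions/second for a closed-loop workload.

    Assumes each client thread runs one transaction at a time and each
    transaction takes its local execution time plus one network round trip.
    """
    return client_threads / (local_time_s + network_rtt_s)


def clients_needed(target_tps, local_time_s, network_rtt_s):
    """Client threads needed to reach target_tps under the same assumptions."""
    return target_tps * (local_time_s + network_rtt_s)


# Made-up timings: 2 ms of local work, with a 0.1 ms LAN round trip
# versus a 7 ms round trip to a distant member.
print(throughput_upper_bound(64, 0.002, 0.0001))
print(throughput_upper_bound(64, 0.002, 0.007))
print(clients_needed(10000, 0.002, 0.007))
```

Under these assumptions the per-transaction latency grows with the round trip, so the same throughput is only reachable by keeping proportionally more transactions in flight.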

- 42. 3.2.2 Certifier throughput (1/2)
  - Use high-performance storage for the relay log: improve the disk bandwidth, as each event is sent by the certifier thread to the relay log while also being read by the applier.
  - Use a fast temporary directory:
    - transaction writesets are extracted using the IO_CACHE, which may need to spill over to disk on large transactions;
    - if using large transactions, setting a fast tmpdir may improve throughput:
      - tmpdir=/dev/shm/...

- 43. 3.2.2 Certifier throughput (2/2)
  - Reduce certification complexity in multi-master:
    - simplifying GTID handling increases the throughput of the certifier, as the certification information requires less effort;
      - group_replication_gtid_assignment_block_size=<size: 10M>
    - this may need to be changed for very high transaction rates.

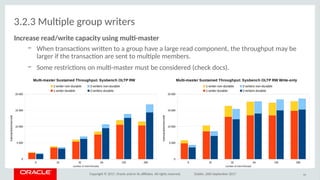

- 44. 3.2.3 Multiple group writers
  - Increase read/write capacity using multi-master:
    - when transactions written to a group have a large read component, the throughput may be larger if the transactions are sent to multiple members;
    - some restrictions on multi-master must be considered (check the docs).
  - [Chart: "Multi-master Sustained Throughput: Sysbench OLTP RW"; transactions/second vs number of client threads (8 to 256); series: 1-writer non-durable, 2-writers non-durable, 1-writer durable, 2-writers durable.]
  - [Chart: "Multi-master Sustained Throughput: Sysbench OLTP RW Write-only"; transactions/second vs number of client threads (8 to 256); same four series.]

- 45. 3.3 Flow-control (1/4)
  - Optimize the system as a whole:
    - sometimes it is beneficial to delay some parts of a distributed system to improve the throughput of the system as a whole;
    - in Group Replication, flow-control is used to:
      - keep writers operating below the sustained capacity of the system;
      - reduce buffering stress on the replication pipeline;
      - protect the correct execution of the system.

- 46. 3.3 Flow-control (2/4)
  - Designed as a safety measure:
    - flow-control should be DISABLED in a properly sized deployment, where system administrators are fully aware of:
      - the implications of high availability and
      - the real capacity of the underlying system to deal with the workload;
    - if flow-control is disabled:
      - members will not be delayed in any way, writers will achieve the maximum network throughput and, if the slaves are operating normally, they should keep up with the writers;
    - even if flow-control is ENABLED, throttling will never be active while the system is operating below its sustained capacity.

- 47. 3.3 Flow-control (3/4)
  - To better support unbalanced systems and unfriendly workloads:
    - reduce the number of transaction aborts in multi-master:
      - rows from transactions in the relay log cannot be used by new transactions;
      - otherwise, the transaction will be forced to fail at certification time;
    - keep members closer for faster failover:
      - failing over to an up-to-date member is faster, as the backlog to apply is smaller;
    - keep members closer for reduced replication lag:
      - applications using one writer and multiple readers will see more up-to-date information when reading from members other than the writer.

- 48. 3.3 Flow-control (4/4)
  - Make sure new members can always join write-intensive groups:
    - nodes entering the group need to catch up on previous work while also storing current work to apply later;
    - this is more demanding than just applying remote transactions;
    - if excess capacity is not available, the cluster will need to run at a lower throughput for new members to catch up.

- 49. 3.3.1 Flow-control flow
  - [Flowchart: flow-control loop. Start; quit? (yes: end); find members with excessive queueing; send stats message to group; are all members ok? If no: quota = min. capacity / number of writers (minus 10%, up to 5% of thresholds). If yes and throttling is active: release throttling gradually (50% increase per step). Wait one second and release transactions. The stats messages receiver provides the member execution stats.]
  - Writer decision: the writers throttle themselves when they see a large queue on other members. Delayed members don't have to spend extra time dealing with that.
  - No micro-management: the flow-control is coarse-grained. The synchrony between nodes is checked and corrective actions are performed at regular intervals.
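
The quota rule from this flowchart can be written down as a small function. This is a sketch of the two steps named on the slide only, under stated assumptions: the function name `next_quota` and its parameters are hypothetical, capacities are expressed as transactions per flow-control period, the "up to 5% of thresholds" qualifier is not modeled, and the point at which throttling ends entirely is left to the caller because the slide does not state it.

```python
def next_quota(current_quota, member_capacities, num_writers, all_members_ok):
    """Return the per-writer commit quota for the next flow-control period.

    current_quota: quota currently in force, or None when not throttling.
    member_capacities: transactions each member handled in the last period.
    num_writers: number of members currently writing to the group.
    all_members_ok: False when some member shows excessive queueing.
    """
    if not all_members_ok:
        # Share the slowest member's capacity among the writers, minus 10%.
        return min(member_capacities) // num_writers * 9 // 10
    if current_quota is None:
        return None  # not throttling, nothing to release
    # Every member is fine again: release gradually, 50% more per step.
    return current_quota * 3 // 2


# Made-up numbers: the slowest member handled 5,000 transactions last period
# and two members are writing.
quota = next_quota(None, [8000, 5000, 9000], 2, all_members_ok=False)
print(quota)                                                   # 2250
print(next_quota(quota, [8000, 8000, 9000], 2, all_members_ok=True))  # 3375
```

Because the decision is taken once per period from aggregate statistics, the writers slow down as a group rather than reacting to individual transactions, which matches the coarse-grained design described on the slide.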

- 50. 3.3.2 Flow-control options (MySQL 5.7)
  - Basic configuration options:
    - group-replication-flow-control-mode = QUOTA | DISABLED
    - group-replication-flow-control-certifier-threshold = 0..n
    - group-replication-flow-control-applier-threshold = 0..n
  - Certifier/applier thresholds:
    - the thresholds are the point at which the flow-control system will delay the writes at the master;
    - the default is set to 25000 and should be kept larger than one second of sustained commit rate;
    - but some members will be up to 25000 transactions delayed if, and only if, they are unable to keep up with the writer members.

- 51. 3.3.3 Flow-control options (MySQL 8.0.3)
  - MySQL 8.0.3 introduces additional options to fine-tune the heuristics:
    - group_replication_flow_control_min_quota = X commits/s
    - group_replication_flow_control_min_recovery_quota = X commits/s
    - group_replication_flow_control_max_commit_quota = X commits/s
    - group_replication_flow_control_member_quota_percent = Y %
    - group_replication_flow_control_period = Z seconds
    - group_replication_flow_control_hold_percent = Y %
    - group_replication_flow_control_release_percent = Y %
  - And when group-replication-flow-control-mode = DISABLED:
    - the member will no longer be considered delayed by the others.

- 52. 4 Impact of the latest improvements
  - Asynchronous replication
  - Group Replication throughput
  - Group Replication recovery

- 53. 4.1 Improved throughput at low concurrency
  - Asynchronous Replication benefits from Group Replication's writeset dependency tracking to eliminate replication lag at low concurrency;
  - The benefit is in parallel execution, as delays at low concurrency were mostly caused by reduced parallelism in the binary log.
  - [Chart: "Asynchronous Replication Sustained Throughput: Sysbench Update Index, durable settings"; updates/second vs number of client threads (8 to 64); series: MySQL 5.7, MySQL 8.0.3.]
  - [Chart: "Asynchronous Replication Sustained Throughput: Sysbench Update Index, non-durable settings"; updates/second vs number of client threads (8 to 64); series: MySQL 5.7, MySQL 8.0.3.]

- 54. 4.2 Better scalability in Group Replication
  - Group Replication achieves higher throughput thanks to scalability optimizations and to dependency tracking that eliminates replication lag at low concurrency;
  - Group Replication is now much closer to the maximum sustained throughput of Asynchronous Replication, even for small transactions.
  - [Chart: "Group Replication Sustained Throughput: Sysbench Update Index, non-durable settings"; updates/second vs number of client threads (8 to 256); series: MySQL 5.7, MySQL 8.0.3.]
  - [Chart: "Group Replication Sustained Throughput: Sysbench Update Index, durable settings"; updates/second vs number of client threads (8 to 256); series: MySQL 5.7, MySQL 8.0.3.]

- 55. 4.3 Reduced recovery time – Group Replication can now recover faster using WRITESET dependency tracking and benefits from the improved applier synchronization; – In these tests the user workload on the writer node is constant (around 1/3 and 2/3 of the server capacity for that workload) and we measure how fast a member can enter the group. [Figure: two charts, "Group Replication Recovery Time" (durable settings, 64 threads, fixed workload), one for Sysbench RW (4K TPS at 33% capacity, 8K TPS at 66% capacity) and one for Sysbench Update Index (9K updates/s at 33% capacity, 18K updates/s at 66% capacity); time to catch up per unit of workload missing (ratio, 0 to 10); MySQL 5.7 compared with MySQL 8.0.3.]
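
To help read the ratio used in these charts, here is a back-of-the-envelope model. It is an idealized sketch, not the measured results: it assumes the joining member applies at a fixed rate while the group keeps committing at a constant rate, and the function and parameter names are hypothetical.

```python
def catch_up_ratio(workload_rate: float, apply_capacity: float) -> float:
    """Seconds of catch-up needed per second of workload missed.

    While the member works through its backlog at `apply_capacity`, new
    transactions keep arriving at `workload_rate`, so the backlog only
    shrinks at (apply_capacity - workload_rate).
    """
    if workload_rate < 0 or apply_capacity <= 0:
        raise ValueError("workload_rate must be >= 0 and apply_capacity > 0")
    if workload_rate >= apply_capacity:
        return float("inf")  # the member can never catch up
    return workload_rate / (apply_capacity - workload_rate)


# Workload at 1/3 and 2/3 of the (assumed) apply capacity.
one_third = catch_up_ratio(workload_rate=1.0, apply_capacity=3.0)
two_thirds = catch_up_ratio(workload_rate=2.0, apply_capacity=3.0)
print(one_third, two_thirds)  # 0.5 2.0
```

Under this model, doubling the load from 1/3 to 2/3 of capacity quadruples the catch-up time per unit of missed workload, which is why any improvement in applier throughput matters most at the higher load.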

- 56. 4.4 Partial JSON • MySQL 8.0.3 allows the changes in JSON fields to be sent without updating the entire field, significantly reducing the data transferred and stored in the binlog; • This is particularly effective if binlog-row-image=MINIMAL, since it also prevents the before image from being fully sent. (The numbers in the chart are from a specially designed benchmark, where the tables have 10 JSON fields and in each transaction only around 10% of the data changes in one of them.) [Figure: "Binary Log Space per Transaction: PARTIAL_JSON"; bytes per transaction (0 to 60 000) for binlog-row-image FULL and MINIMAL, default compared with PARTIAL_JSON.]

- 57. 4.4 Partial JSON • Using binlog-row-value-options=PARTIAL_JSON has implications for throughput – The master does more work to extract the changes, which reduces its throughput; – However, the throughput of the slave increases, amplified by MINIMAL images. [Figure: two charts, "Throughput on the Slave: PARTIAL_JSON" (0 to 10 000 transactions per second) and "Throughput on the Master: PARTIAL_JSON" (0 to 2 500 transactions per second), for 4, 8, 16, 32 and 64 threads; binlog-row-image FULL and MINIMAL, default compared with PARTIAL_JSON.]

- 58. 5 Conclusion

- 59. 5 Conclusion – To optimize replication performance, focus on the system as a whole ● sustained throughput, not just client response to the front-end server. – Replication lag should not grow over time; if it does: ● make sure there are periods of low demand for the replicas to catch up; ● make sure you don't need the data in the replicas to be up-to-date.
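
The point about periods of low demand can be made concrete with a small simulation. This is a toy model under stated assumptions (a fixed replica apply rate per interval, made-up workload numbers, hypothetical function name), not a measurement of any real server.

```python
from typing import List, Sequence


def simulate_backlog(incoming: Sequence[float], apply_rate: float) -> List[float]:
    """Backlog (in transactions) at the end of each interval.

    `incoming[i]` is the number of transactions the source commits in
    interval i; the replica applies at most `apply_rate` per interval.
    """
    backlog = 0.0
    trace: List[float] = []
    for arrived in incoming:
        # The backlog grows when arrivals exceed capacity and never goes below zero.
        backlog = max(0.0, backlog + arrived - apply_rate)
        trace.append(backlog)
    return trace


# Peak demand above the replica's capacity, followed by a quiet period.
with_quiet_period = simulate_backlog([120, 120, 120, 40, 40, 40], apply_rate=100)
# Demand permanently above capacity: the backlog never stops growing.
always_overloaded = simulate_backlog([120] * 6, apply_rate=100)
print(with_quiet_period)   # [20.0, 40.0, 60.0, 0.0, 0.0, 0.0]
print(always_overloaded)   # [20.0, 40.0, 60.0, 80.0, 100.0, 120.0]
```

In the first run the replica falls behind during the peak but recovers as soon as demand drops; in the second the lag grows without bound, which is the situation the slide warns about.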

- 60. 5 Conclusion Group Replication – To optimize Group Replication, focus on the three areas involved: group communication, certifier and applier throughput. – The performance is competitive with Asynchronous Replication ● Scalable to a significant number of members and client threads; ● High throughput and low latency even compared to asynchronous replication; ● Optimized for local networks but can withstand higher-latency networks.

- 61. 5 Conclusion MySQL 8.0 has great improvements in replication performance – Asynchronous replication now has much better sustained throughput ● optimized pipeline and writeset dependency tracking; – Group Replication has ● higher throughput, even for small transactions; ● faster recovery for members joining a group.

- 62. Thank you. Any questions? • Documentation – http://dev.mysql.com/doc/refman/5.7/en/group-replication.html • Performance blog posts related to Group Replication: – http://mysqlhighavailability.com/category/performance/